Deep Learning Based Pavement Inspection Using Self-Reconfigurable Robot

Abstract

1. Introduction

1.1. Literature Review

1.2. Objectives

2. Panthera Robot Architecture

- The self-reconfigurable pavement sweeping robot Panthera can change its shape between a contracted and an extended state, shown in Figure 1a,b, respectively. This feature enables it to travel on pavements of different widths, avoid static obstacles, and respond to pedestrian density.

- With its reconfigurable mechanism, Panthera can access pavements of variable width.

- Panthera has omnidirectional locomotion, which helps it take sharp turns and avoid defects such as potholes.

- Panthera is equipped with vision sensors that detect garbage or litter on the pavement, as well as cracks in it, using images captured in daylight conditions.
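As a rough illustration of how the reconfigurable frame could be sized to the pavement, the sketch below clamps a desired width to the retracted (0.80 m) and extended (1.70 m) limits listed in the specification table. The function name `target_frame_width` and the 0.2 m clearance margin are hypothetical assumptions for illustration; the paper does not state how Panthera selects its width.

```python
# Hypothetical sketch: choose a frame width for a given pavement width.
# The 0.80 m / 1.70 m limits come from the specification table; the clearance
# margin is an illustrative assumption, not a value from the paper.

MIN_WIDTH_M = 0.80  # retracted width
MAX_WIDTH_M = 1.70  # extended width


def target_frame_width(pavement_width_m: float, clearance_m: float = 0.2) -> float:
    """Return a width that leaves `clearance_m` of free pavement,
    clamped to the mechanical range of the reconfigurable frame."""
    if pavement_width_m <= 0:
        raise ValueError("pavement width must be positive")
    desired = pavement_width_m - clearance_m
    return max(MIN_WIDTH_M, min(MAX_WIDTH_M, desired))


if __name__ == "__main__":
    for width in (0.9, 1.5, 3.0):
        print(width, "->", target_frame_width(width))
```

On a narrow path the width saturates at the retracted limit, and on a wide one at the extended limit; the limit switches described under the sensory units enforce the same range mechanically.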

2.1. Mechanical Components

2.1.1. Reconfigurable Mechanism

2.1.2. Locomotion and Steering Units

2.1.3. Sweeping and Vacuum Units

2.2. Electrical and Programming Units

2.2.1. Sensory Units

- Vision system: An Intel RealSense D435 depth camera serves as Panthera's vision sensor, as shown in Figure 3a (i). The camera is fixed at the center of the robot's front panel and offers a wide field of view and a high pixel resolution of 1920 × 1080.

- GNSS receiver: A Global Navigation Satellite System (GNSS) receiver, NovAtel's PwrPak7D, is used to obtain the geographical location of the defect region, as shown in Figure 3a (ii). The device is robust, with an accuracy ranging from 2.5 cm to a few meters, and a 16 GB internal storage device is used to log the robot's locations. The accuracy can be further improved by fusing wheel odometry data with the GNSS readings using filters.

- Mechanical limit switches: Mechanical limit switches, shown in Figure 3a (iii), restrict the reconfiguration of the robot to widths between 0.8 and 1.7 m. A limit switch with a roller-type plunger is attached at the end of the lead screw to safely limit the movement of the reconfiguring frame. When triggered, the limit switch immediately stops the rotation of the lead screw shaft.

- Absolute encoders: Absolute encoders provide feedback on the steering rotation, which is achieved by the differential action of the wheels, as shown in Figure 2b (iii) and Figure 3a (iv). A US Digital A2 optical encoder is mounted on top of each of the four steering units and communicates over an RS-485 serial bus using US Digital's Serial Encoder Interface (SEI).
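The GNSS item notes that localization accuracy can be improved by combining wheel odometry with filters. A minimal sketch of one such filter, assuming a scalar Kalman filter applied independently to each horizontal axis, is shown below: odometry displacements drive the prediction step and GNSS fixes drive the correction step. The class name `Kalman1D`, the noise variances, and the sample readings are illustrative assumptions, not the filter or values used in the paper.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter fusing odometry increments with GNSS fixes along
    one axis (e.g. easting, in metres). Noise variances are assumptions."""
    x: float          # position estimate (m)
    p: float          # estimate variance (m^2)
    q: float = 0.01   # odometry process-noise variance per step (m^2), assumed
    r: float = 0.25   # GNSS measurement-noise variance (m^2), assumed

    def predict(self, odom_delta: float) -> None:
        # Dead-reckoning step: move by the wheel-odometry displacement
        # and grow the uncertainty by the process noise.
        self.x += odom_delta
        self.p += self.q

    def update(self, gnss_pos: float) -> None:
        # Correction step: blend in the GNSS fix, weighted by the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (gnss_pos - self.x)
        self.p *= 1.0 - k


if __name__ == "__main__":
    kf = Kalman1D(x=0.0, p=1.0)
    odometry = [0.5, 0.5, 0.5, 0.5]  # synthetic metres travelled per step
    gnss = [0.6, 0.9, 1.6, 2.0]      # synthetic noisy GNSS positions (m)
    for d, z in zip(odometry, gnss):
        kf.predict(d)
        kf.update(z)
        print(f"x={kf.x:.3f} m, var={kf.p:.4f} m^2")
```

Because the gain `k = p / (p + r)` falls as the estimate variance shrinks, early GNSS fixes pull the estimate strongly and later ones less so. A field system would more likely use a multi-dimensional filter over position and heading, for example an extended Kalman filter.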

2.2.2. Electrical Units

2.2.3. Control Units

3. Defect and Garbage Detection Framework

3.1. Input Layer

3.2. Pavement Segmentation

3.2.1. Pavement Defects and Garbage Detection

3.2.2. Convolutional Layers

3.2.3. Pooling Layer

3.2.4. SoftMax Layer

3.2.5. Activation Function

3.2.6. Bounding Box

3.3. Mobile Mapping System for Defect Localization

4. Experiments and Results

4.1. Data set Preparation and Training the Model

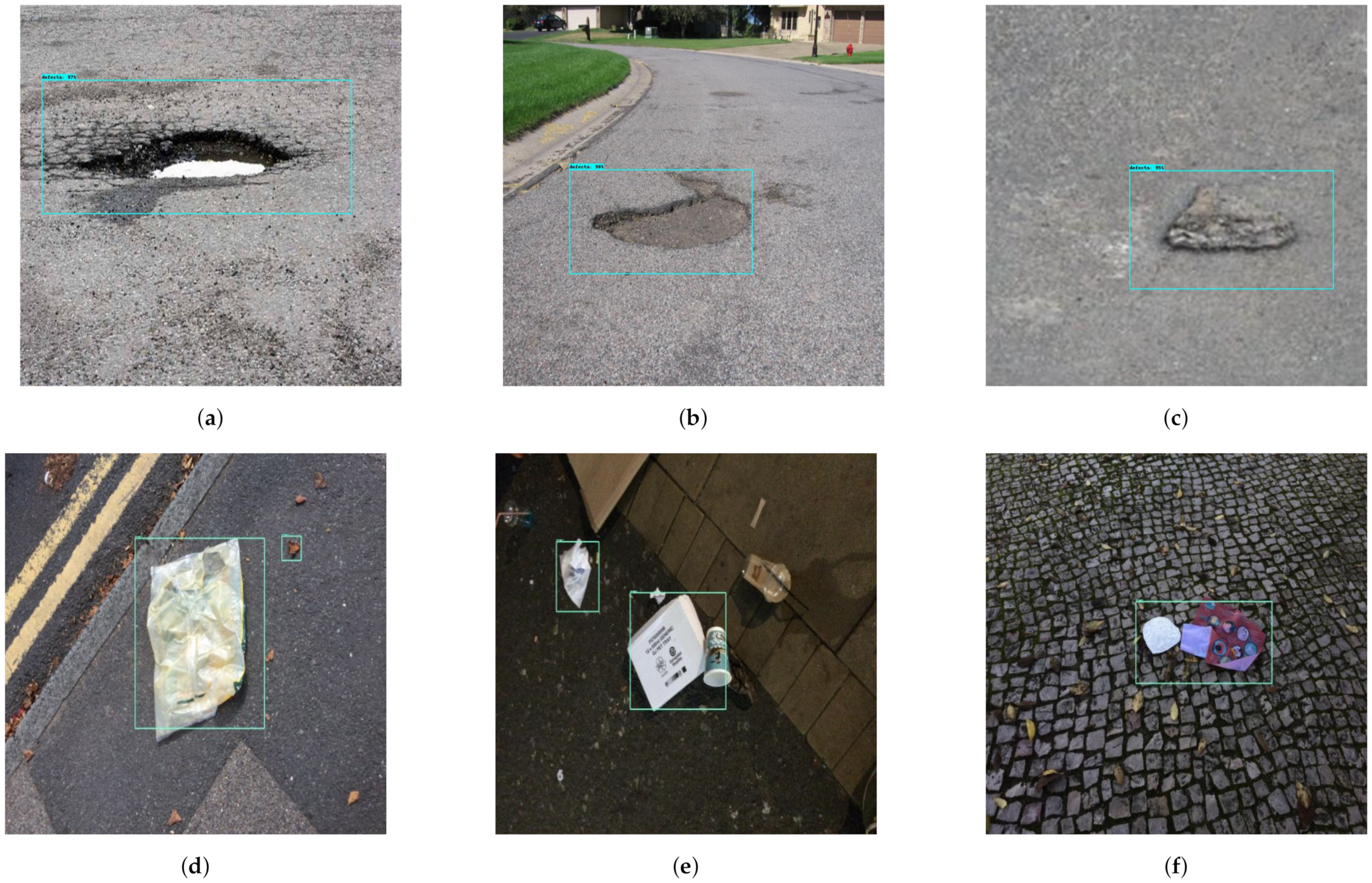

4.2. Validation of Defect and Garbage Detection Framework

4.3. Performance Comparison with Other Semantic and Object Detection Framework

Validation with Other Defect and Garbage Image Databases

4.4. Comparison with Existing Schemes

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Term | Explanation |
|---|---|
| GPU | Graphics Processing Unit |
| CNN | Convolutional Neural Network |
| DCNN | Deep Convolutional Neural Network |
| MMS | Mobile Mapping System |
| PMS | Pavement Management System |
| ML | Machine Learning |
| DL | Deep Learning |
| VGG16 | Visual Geometry Group 16 |
| YOLOv2 | You Only Look Once, version 2 |
| CAR | Classification Accuracy Rate |
| F-RCNN | Fast Region-based Convolutional Neural Network |
| SVM | Support Vector Machine |
| SIFT | Scale Invariant Feature Transform |
| SSD | Single Shot Detector |
| GNSS | Global Navigation Satellite System |
| GB | Gigabyte |
| SEI | Serial Encoder Interface |
| DNNP | Deep Neural Network Processing |
| RAM | Random Access Memory |
| ROS | Robot Operating System |
| PWM | Pulse Width Modulation |
| ReLU | Rectified Linear Unit |
| RoI | Region of Interest |
| IOU | Intersection Over Union |
| 4G | Fourth Generation |
| tp | true positives |
| fp | false positives |
| tn | true negatives |
| fn | false negatives |
| Acc | Accuracy |
| Prec | Precision |
| Rec | Recall |
| F1 | F1 measure |
| fps | frames per second |
| MAP | Mean Average Precision |
| FCN-8 | Fully Convolutional Neural Network |
| SGD | Stochastic Gradient Descent |
| | upper left X coordinate of the bounding box |
| | upper left Y coordinate of the bounding box |
| | lower right X coordinate of the bounding box |
| | lower right Y coordinate of the bounding box |
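The abbreviations tp, fp, tn, fn, Acc, Prec, Rec, and F1 denote the usual confusion-matrix quantities used in the result tables. Assuming the standard definitions (the formulas themselves are not reproduced in this section), they can be computed as in the sketch below; the function name `detection_metrics` is hypothetical.

```python
def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard confusion-matrix metrics; a ratio with a zero denominator is 0.0."""

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)   # Prec = tp / (tp + fp)
    recall = ratio(tp, tp + fn)      # Rec = tp / (tp + fn)
    return {
        "Acc": ratio(tp + tn, tp + fp + tn + fn),
        "Prec": precision,
        "Rec": recall,
        # F1 is the harmonic mean of precision and recall.
        "F1": ratio(2 * precision * recall, precision + recall),
    }


if __name__ == "__main__":
    # Illustrative counts only, not results from the paper.
    print(detection_metrics(tp=90, fp=10, tn=0, fn=5))
```

For object detection, tn is often undefined (there is no natural count of "correctly absent" boxes), so accuracy is usually less informative than precision, recall, and F1 in that setting.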

References

- Saidi, K.S.; Bock, T.; Georgoulas, C. Robotics in construction. In Springer Handbook of Robotics; Springer: New York, NY, USA, 2016; pp. 1493–1520.

- Tan, N.; Mohan, R.E.; Watanabe, A. Toward a framework for robot-inclusive environments. Autom. Constr. 2016, 69, 68–78.

- Jeon, J.; Jung, B.; Koo, J.C.; Choi, H.R.; Moon, H.; Pintado, A.; Oh, P. Autonomous robotic street sweeping: Initial attempt for curbside sweeping. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–11 January 2017; pp. 72–73.

- Djuric, A.; Saidi, R.A.; ElMaraghy, W. Global Kinematic Model generation for n-DOF reconfigurable machinery structure. In Proceedings of the 2010 IEEE International Conference on Automation Science and Engineering, Vancouver, BC, Canada, 22–26 August 2010; pp. 804–809.

- Samarakoon, S.B.P.; Muthugala, M.V.J.; Le, A.V.; Elara, M.R. HTetro-infi: A reconfigurable floor cleaning robot with infinite morphologies. IEEE Access 2020, 8, 69816–69828.

- Hayat, A.A.; Karthikeyan, P.; Vega-Heredia, M.; Elara, M.R. Modeling and Assessing of Self-Reconfigurable Cleaning Robot hTetro Based on Energy Consumption. Energies 2019, 12, 4112.

- Hayat, A.A.; Parween, R.; Elara, M.R.; Parsuraman, K.; Kandasamy, P.S. Panthera: Design of a reconfigurable pavement sweeping robot. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7346–7352.

- Le, A.V.; Hayat, A.A.; Elara, M.R.; Nhan, N.H.K.; Prathap, K. Reconfigurable Pavement Sweeping Robot and Pedestrian Cohabitant Framework by Vision Techniques. IEEE Access 2019, 7, 159402–159414.

- Yi, L.; Le, A.V.; Ramalingam, B.; Hayat, A.A.; Elara, M.R.; Minh, T.H.Q.; Gómez, B.F.; Wen, L.K. Locomotion with Pedestrian Aware from Perception Sensor by Pavement Sweeping Reconfigurable Robot. Sensors 2021, 21, 1745.

- Tan, N.; Hayat, A.A.; Elara, M.R.; Wood, K.L. A Framework for Taxonomy and Evaluation of Self-Reconfigurable Robotic Systems. IEEE Access 2020, 8, 13969–13986.

- Hayat, A.A.; Elangovan, K.; Rajesh Elara, M.; Teja, M.S. Tarantula: Design, modeling, and kinematic identification of a quadruped wheeled robot. Appl. Sci. 2019, 9, 94.

- Chun, C.; Ryu, S.K. Road Surface Damage Detection Using Fully Convolutional Neural Networks and Semi-Supervised Learning. Sensors 2019, 19, 5501.

- Ramalingam, B.; Yin, J.; Rajesh Elara, M.; Tamilselvam, Y.K.; Mohan Rayguru, M.; Muthugala, M.A.V.J.; Félix Gómez, B. A Human Support Robot for the Cleaning and Maintenance of Door Handles Using a Deep-Learning Framework. Sensors 2020, 20, 3543.

- Teng, T.W.; Veerajagadheswar, P.; Ramalingam, B.; Yin, J.; Elara Mohan, R.; Gómez, B.F. Vision Based Wall Following Framework: A Case Study With HSR Robot for Cleaning Application. Sensors 2020, 20, 3298.

- Zhao, L.; Li, F.; Zhang, Y.; Xu, X.; Xiao, H.; Feng, Y. A Deep-Learning-based 3D Defect Quantitative Inspection System in CC Products Surface. Sensors 2020, 20, 980.

- Wu, C.; Wang, Z.; Hu, S.; Lepine, J.; Na, X.; Ainalis, D.; Stettler, M. An Automated Machine-Learning Approach for Road Pothole Detection Using Smartphone Sensor Data. Sensors 2020, 20, 5564.

- Lv, X.; Duan, F.; Jiang, J.J.; Fu, X.; Gan, L. Deep Active Learning for Surface Defect Detection. Sensors 2020, 20, 1650.

- Li, Y.; Li, H.; Wang, H. Pixel-Wise Crack Detection Using Deep Local Pattern Predictor for Robot Application. Sensors 2018, 18, 3042.

- Wang, K.; Yan, F.; Zou, B.; Tang, L.; Yuan, Q.; Lv, C. Occlusion-Free Road Segmentation Leveraging Semantics for Autonomous Vehicles. Sensors 2019, 19, 4711.

- Chun, C.; Lee, T.; Kwon, S.; Ryu, S.K. Classification and Segmentation of Longitudinal Road Marking Using Convolutional Neural Networks for Dynamic Retroreflection Estimation. Sensors 2020, 20, 5560.

- Balado, J.; Martínez-Sánchez, J.; Arias, P.; Novo, A. Road Environment Semantic Segmentation with Deep Learning from MLS Point Cloud Data. Sensors 2019, 19, 3466.

- Aldea, E.; Hégarat-Mascle, S.L. Robust crack detection for unmanned aerial vehicles inspection in an a-contrario decision framework. J. Electron. Imaging 2015, 24, 1–16.

- Protopapadakis, E.; Voulodimos, A.; Doulamis, A.; Doulamis, N.; Stathaki, T. Automatic crack detection for tunnel inspection using deep learning and heuristic image post-processing. Appl. Intell. 2018, 49, 2793–2806.

- Fan, R.; Bocus, M.J.; Zhu, Y.; Jiao, J.; Wang, L.; Ma, F.; Cheng, S.; Liu, M. Road Crack Detection Using Deep Convolutional Neural Network and Adaptive Thresholding. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 474–479.

- Naddaf-Sh, M.M.; Hosseini, S.; Zhang, J.; Brake, N.A.; Zargarzadeh, H. Real-Time Road Crack Mapping Using an Optimized Convolutional Neural Network. Complexity 2019, 2019, 2470735.

- Mei, Q.; Gül, M.; Azim, M.R. Densely connected deep neural network considering connectivity of pixels for automatic crack detection. Autom. Constr. 2019, 110, 103018.

- Yusof, N.A.M.; Ibrahim, A.; Noor, M.H.M.; Tahir, N.M.; Yusof, N.M.; Abidin, N.Z.; Osman, M.K. Deep convolution neural network for crack detection on asphalt pavement. J. Phys. Conf. Ser. 2019, 1349, 012020.

- Chen, T.; Cai, Z.; Zhao, X.; Chen, C.; Liang, X.; Zou, T.; Wang, P. Pavement crack detection and recognition using the architecture of segNet. J. Ind. Inf. Integr. 2020, 18, 100144.

- Mandal, V.; Uong, L.; Adu-Gyamfi, Y. Automated Road Crack Detection Using Deep Convolutional Neural Networks. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5212–5215.

- Nhat-Duc, H.; Nguyen, Q.L.; Tran, V.D. Automatic recognition of asphalt pavement cracks using metaheuristic optimized edge detection algorithms and convolution neural network. Autom. Constr. 2018, 94, 203–213.

- Zhihong, C.; Hebin, Z.; Yanbo, W.; Binyan, L.; Yu, L. A vision-based robotic grasping system using deep learning for garbage sorting. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 11223–11226.

- Mittal, G.; Yagnik, K.B.; Garg, M.; Krishnan, N.C. Spotgarbage: smartphone app to detect garbage using deep learning. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 940–945.

- Thung, G.; Yang, M. Classification of Trash for Recyclability Status; CS229 Project Report; Stanford University: Palo Alto, CA, USA, 2016.

- Tang, P.; Wang, H.; Kwong, S. G-MS2F: GoogLeNet based multi-stage feature fusion of deep CNN for scene recognition. Neurocomputing 2017, 225, 188–197.

- Ramalingam, B.; Lakshmanan, A.K.; Ilyas, M.; Le, A.V.; Elara, M.R. Cascaded Machine-Learning Technique for Debris Classification in Floor-Cleaning Robot Application. Appl. Sci. 2018, 8, 2649.

- Fulton, M.; Hong, J.; Islam, M.J.; Sattar, J. Robotic Detection of Marine Litter Using Deep Visual Detection Models. arXiv 2018, arXiv:1804.01079.

- Panboonyuen, T.; Jitkajornwanich, K.; Lawawirojwong, S.; Srestasathiern, P.; Vateekul, P. Road Segmentation of Remotely-Sensed Images Using Deep Convolutional Neural Networks with Landscape Metrics and Conditional Random Fields. Remote Sens. 2017, 9, 680.

- Mancini, A.; Malinverni, E.S.; Frontoni, E.; Zingaretti, P. Road pavement crack automatic detection by MMS images. In Proceedings of the 21st Mediterranean Conference on Control and Automation, Chania, Crete, 25–28 June 2013; pp. 1589–1596.

- El-Sheimy, N. An Overview of Mobile Mapping Systems. In Proceedings of the FIG Working Week 2005 and 8th International Conference on the Global Spatial Data Infrastructure (GSDI-8): From Pharaohs to Geoinformatics, Cairo, Egypt, 16–21 April 2005.

- Cui, L.; Qi, Z.; Chen, Z.; Meng, F.; Shi, Y. Pavement Distress Detection Using Random Decision Forests. In Proceedings of the International Conference on Data Science, Sydney, Australia, 8–9 August 2015; Springer: New York, NY, USA, 2015; pp. 95–102.

- Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature Pyramid and Hierarchical Boosting Network for Pavement Crack Detection. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1525–1535.

- Maeda, H.; Sekimoto, Y.; Seto, T.; Kashiyama, T.; Omata, H. Road Damage Detection and Classification Using Deep Neural Networks with Smartphone Images. Comput. Aided Civ. Infrastruct. Eng. 2018, 33, 1127–1141.

- Proença, P.F.; Simões, P. TACO: Trash Annotations in Context for Litter Detection. arXiv 2020, arXiv:2003.06975.

- Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic road crack detection using random structured forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445.

- Zhang, L.; Yang, F.; Zhang, Y.D.; Zhu, Y.J. Road crack detection using deep convolutional neural network. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3708–3712.

- Huyan, J.; Li, W.; Tighe, S.; Xu, Z.; Zhai, J. CrackU-net: A novel deep convolutional neural network for pixelwise pavement crack detection. Struct. Control. Health Monit. 2020, 27, e2551.

- Bang, S.; Park, S.; Kim, H.; Kim, H. Encoder–decoder network for pixel-level road crack detection in black-box images. Comput. Aided Civ. Infrastruct. Eng. 2019, 34, 713–727.

- Tong, Z.; Yuan, D.; Gao, J.; Wang, Z. Pavement defect detection with fully convolutional network and an uncertainty framework. Comput. Aided Civ. Infrastruct. Eng. 2020, 35, 832–849.

- Majidifard, H.; Jin, P.; Adu-Gyamfi, Y.; Buttlar, W.G. Pavement Image Datasets: A New Benchmark Dataset to Classify and Densify Pavement Distresses. Transp. Res. Rec. 2020, 2674, 328–339.

- Zhang, A.; Wang, K.C.P.; Li, B.; Yang, E.; Dai, X.; Peng, Y.; Fei, Y.; Liu, Y.; Li, J.Q.; Chen, C. Automated Pixel-Level Pavement Crack Detection on 3D Asphalt Surfaces Using a Deep-Learning Network. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 805–819.

- Bai, J.; Lian, S.; Liu, Z.; Wang, K.; Liu, D. Deep Learning Based Robot for Automatically Picking Up Garbage on the Grass. IEEE Trans. Consum. Electron. 2018, 64, 382–389.

- Valdenegro-Toro, M. Submerged marine debris detection with autonomous underwater vehicles. In Proceedings of the 2016 International Conference on Robotics and Automation for Humanitarian Applications (RAHA), Ettimadai, India, 18–20 December 2016; pp. 1–7.

| Parameter | Value | Unit |
|---|---|---|
| Panthera height | 1.65 | m |
| Panthera width (retracted) | 0.80 | m |
| Panthera width (extended) | 1.70 | m |
| Side brush diameter | 0.27 | m |
| Wheel radius | 0.2 | m |
| Number of wheels | 8 | – |
| Drive | Differential | – |
| Driving power | 700 | W |
| Turning radius | 0 | m |
| Continuous working time | | h |
| Working speed | 3 | km/h |
| Driving speed | 5 | km/h |
| Net weight | 530 | kg |
| Payload | 150–200 | kg |
| Platform locomotion | Omnidirectional | – |
| Power source (DC traction batteries) | 24 | V |
| Sensors | RGB-D camera | – |

| Average IOU Matching | Overall Confidence |
|---|---|
| 0.7044 | 0.589 |
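The average IOU above compares predicted and ground-truth bounding boxes, each described by its upper-left and lower-right corner coordinates, as listed in the abbreviations. The sketch below computes IoU for axis-aligned boxes in image coordinates (y increasing downwards) and averages it over box pairs that are assumed to be already matched; the matching strategy and the names `iou` and `average_iou` are assumptions, not details taken from the paper.

```python
from typing import Sequence, Tuple

# (x1, y1, x2, y2): upper-left corner, then lower-right corner, in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp to zero so that non-overlapping boxes have no intersection area.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_iou(pairs: Sequence[Tuple[Box, Box]]) -> float:
    """Mean IoU over (predicted, ground-truth) pairs that were matched beforehand."""
    if not pairs:
        return 0.0
    return sum(iou(pred, gt) for pred, gt in pairs) / len(pairs)


if __name__ == "__main__":
    pairs = [((0, 0, 10, 10), (0, 0, 10, 10)), ((0, 0, 10, 10), (5, 5, 15, 15))]
    print(average_iou(pairs))
```

In the second pair the boxes overlap in a 5 × 5 region, so IoU = 25 / (100 + 100 − 25) ≈ 0.143; a detection is commonly counted as a true positive only when its IoU exceeds a chosen threshold such as 0.5.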

| Test | Offline Test | Online Test | Other Metrics | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Precision | Recall | Precision | Recall | Overall Accuracy | |||||

| Defect | 93.0 | 91.8 | 92.1 | 89.5 | 87.1 | 86.67 | 4 | 2 | 93.3 |

| Garbage | 96.0 | 94.43 | 93.22 | 91.5 | 87.55 | 87.0 | 7 | 3 | 95.0 |

| Semantic Framework | Pixel Accuracy | IOU | F1 Score |
|---|---|---|---|
| FCN-8 | 85.33 | 86.72 | 86.89 |
| U-Net | 88.12 | 88.56 | 88.18 |
| SegNet | 93.30 | 93.18 | 90.93 |

| Object Detection Framework | Accuracy | Precision | Recall |
|---|---|---|---|
| Faster RCNN ResNet | 97.89 | 96.30 | 96.82 |
| SSD MobileNet | 94.64 | 93.25 | 92.88 |
| Proposed system | 95.00 | 93.11 | 92.66 |

| Database | Precision | Recall | F1 | Average Confidence Level |
|---|---|---|---|---|
| CrackForest (defect) [40,44] | 94.5 | 91.8 | 92.2 | 96.0 |
| Fan Yang (pavement crack) [41,45] | 93.3 | 89.7 | 90.0 | 95.0 |
| Road Damage Dataset [42] | 92.3 | 88.5 | 89.0 | 93.0 |
| TACO [43] | 96.0 | 93.2 | 92.5 | 97.0 |
| TrashNet [33] | 94.0 | 98.5 | 97.6 | 94.0 |

| Case Study | Inspection Type | Algorithm | Detection Accuracy |
|---|---|---|---|
| Ju Huyan et al. [46] | Offline | CrackU-net | 98.56 |
| Bang et al. [47] | Offline | Deep encoder-decoder network | 90.67 |
| Naddaf-Sh et al. [25] | Real time with drone | Multilayer CNN | 96.00 |
| Zheng Tong et al. [48] | Multifunction testing vehicle | FCN with a G-CRF | 82.20 |
| Mandal et al. [29] | Offline | YOLO v2 | 88.00 |
| Majidifard et al. [49] | Offline | YOLO v2 | 93.00 |
| Maeda et al. [42] | Real time with smartphone | SSD MobileNet | 77.00 |
| Zhang et al. [50] | Offline | CrackNet | 90.00 |
| Proposed system | Online and offline | 16-layer CNN | 93.30 |

| Case Study | Algorithm | Detection Accuracy |
|---|---|---|
| Garbage detection on grass [51] | SegNet + ResNet | 96.00 |
| Floor trash detection [35] | MobileNet v2 SSD | 95.00 |
| Marine garbage detection [52] | Faster RCNN Inception v2 | 81.00 |
| Marine garbage detection [52] | MobileNet v2 with SSD | 69.00 |
| Proposed system | 16-layer CNN | 95.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ramalingam, B.; Hayat, A.A.; Elara, M.R.; Félix Gómez, B.; Yi, L.; Pathmakumar, T.; Rayguru, M.M.; Subramanian, S. Deep Learning Based Pavement Inspection Using Self-Reconfigurable Robot. Sensors 2021, 21, 2595. https://doi.org/10.3390/s21082595
