Natural Disasters Intensity Analysis and Classification Based on Multispectral Images Using Multi-Layered Deep Convolutional Neural Network

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Block-I Convolutional Neural Network (B-I CNN)

3.2. Block-II Convolutional Neural Network (B-II CNN)

4. Results and Discussion

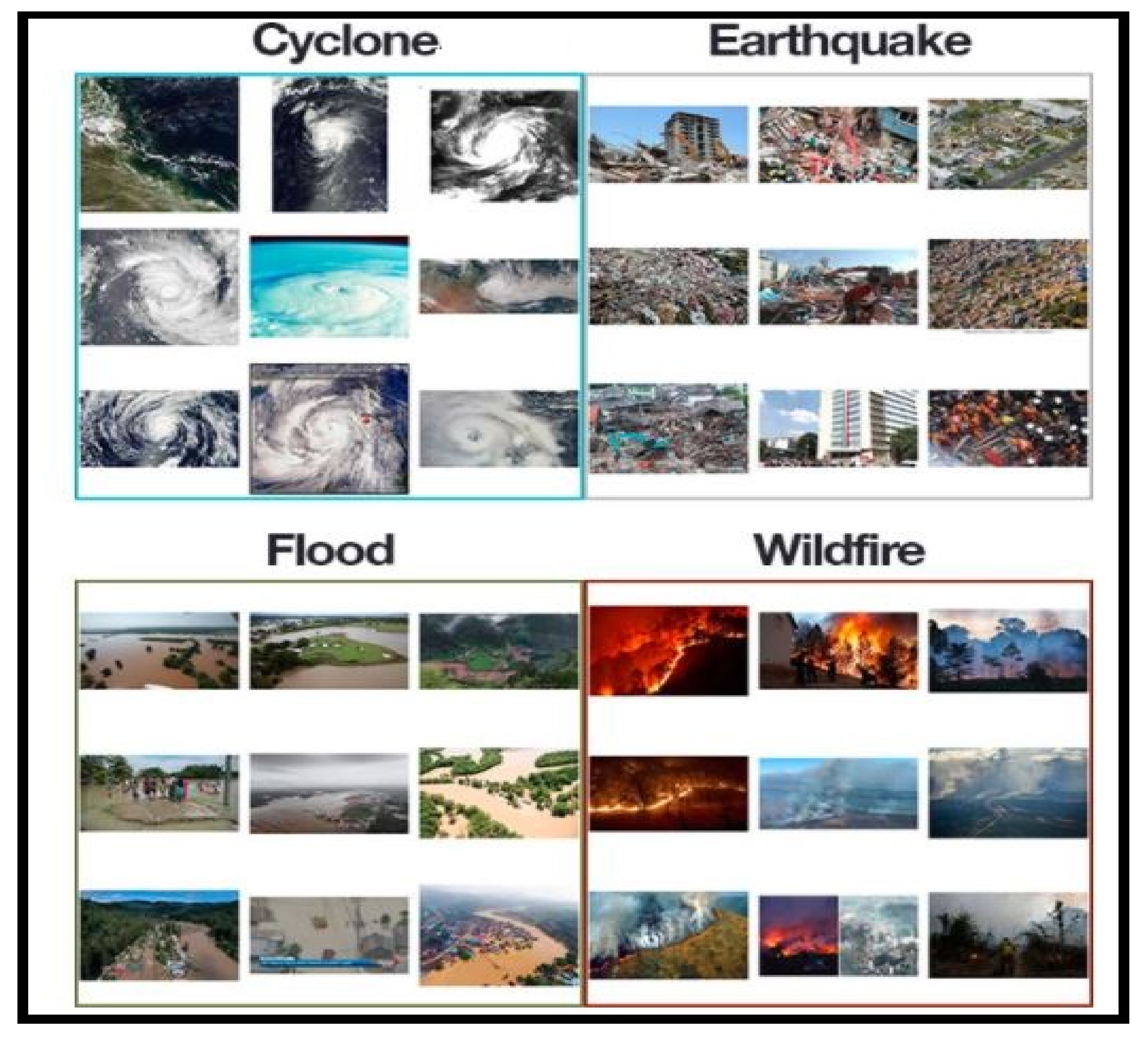

4.1. Dataset and Preprocessing

4.2. Evaluation Criterion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mignan, A.; Broccardo, M. Neural network applications in earthquake prediction (1994–2019): Meta-analytic and statistical insights on their limitations. Seism. Res. Lett. 2020, 91, 2330–2342.

- Tonini, M.; D’Andrea, M.; Biondi, G.; Degli Esposti, S.; Trucchia, A.; Fiorucci, P. A Machine Learning-Based Approach for Wildfire Susceptibility Mapping. The Case Study of the Liguria Region in Italy. Geosciences 2020, 10, 105.

- Islam, A.R.M.T.; Talukdar, S.; Mahato, S.; Kundu, S.; Eibek, K.U.; Pham, Q.B.; Kuriqi, A.; Linh, N.T.T. Flood susceptibility modelling using advanced ensemble machine learning models. Geosci. Front. 2021, 12, 101075.

- Schlemper, J.; Caballero, J.; Hajnal, V.; Price, A.N.; Rueckert, D. A deep cascade of convolutional neural networks for dynamic MR image reconstruction. IEEE Trans. Med. Imaging 2017, 37, 491–503.

- Tang, C.; Zhu, Q.; Wu, W.; Huang, W.; Hong, C.; Niu, X. PLANET: Improved convolutional neural networks with image enhancement for image classification. Math. Probl. Eng. 2020, 2020.

- Ashiquzzaman, A.; Oh, S.M.; Lee, D.; Lee, J.; Kim, J. Context-aware deep convolutional neural network application for fire and smoke detection in virtual environment for surveillance video analysis. In Smart Trends in Computing and Communications, Proceedings of the SmartCom 2020, Paris, France, 29–31 December 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 459–467.

- Li, T.; Zhao, E.; Zhang, J.; Hu, C. Detection of Wildfire Smoke Images Based on a Densely Dilated Convolutional Network. Electronics 2019, 8, 1131.

- Mangalathu, S.; Burton, H.V. Deep learning-based classification of earthquake-impacted buildings using textual damage descriptions. Int. J. Disaster Risk Reduct. 2019, 36, 101111.

- Hartawan, D.R.; Purboyo, T.W.; Setianingsih, C. Disaster Victims Detection System Using Convolutional Neural Network (CNN) Method. In Proceedings of the 2019 IEEE International Conference on Industry 4.0, Artificial Intelligence, and Communications Technology (IAICT), Bali, Indonesia, 1–3 July 2019; pp. 105–111.

- Amit, S.N.K.B.; Aoki, Y. Disaster detection from aerial imagery with convolutional neural network. In Proceedings of the 2017 International Electronics Symposium on Knowledge Creation and Intelligent Computing (IES-KCIC), Surabaya, Indonesia, 26–27 September 2017; pp. 239–245.

- Yang, S.; Hu, J.; Zhang, H.; Liu, G. Simultaneous Earthquake Detection on Multiple Stations via a Convolutional Neural Network. Seism. Res. Lett. 2021, 92, 246–260.

- Madichetty, S.; Sridevi, M. Detecting informative tweets during disaster using deep neural networks. In Proceedings of the 2019 11th International Conference on Communication Systems & Networks (COMSNETS), Bangalore, India, 7–11 January 2019; pp. 709–713.

- Nunavath, V.; Goodwin, M. The role of artificial intelligence in social media big data analytics for disaster management-initial results of a systematic literature review. In Proceedings of the 2018 5th International Conference on Information and Communication Technologies for Disaster Management (ICT-DM), Sendai, Japan, 4– December 2018; pp. 1–4.

- Boonsuk, R.; Sudprasert, C.; Supratid, S. An Investigation on Facial Emotional Expression Recognition Based on Linear-Decision-Boundaries Classifiers Using Convolutional Neural Network for Feature Extraction. In Proceedings of the 2019 11th International Conference on Information Technology and Electrical Engineering (ICITEE), Pattaya, Thailand, 10–11 October 2019; pp. 1–5.

- Zhou, F.; Huang, J.; Sun, B.; Wen, G.; Tian, Y. Intelligent Identification Method for Natural Disasters along Transmission Lines Based on Inter-Frame Difference and Regional Convolution Neural Network. In Proceedings of the 2019 IEEE International Conference on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (ISPA/BDCloud/SocialCom/SustainCom), Xiamen, China, 16–18 December 2019; pp. 218–222.

- Sulistijono, I.A.; Imansyah, T.; Muhajir, M.; Sutoyo, E.; Anwar, M.K.; Satriyanto, E.; Basuki, A.; Risnumawan, A. Implementation of Victims Detection Framework on Post Disaster Scenario. In Proceedings of the 2018 International Electronics Symposium on Engineering Technology and Applications (IES-ETA), Bali, Indonesia, 29–30 October 2018; pp. 253–259.

- Padmawar, P.M.; Shinde, A.S.; Sayyed, T.Z.; Shinde, S.K.; Moholkar, K. Disaster Prediction System using Convolution Neural Network. In Proceedings of the 2019 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 17–19 July 2019; pp. 808–812.

- Chen, Y.; Zhang, Y.; Xin, J.; Wang, G.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. UAV Image-based Forest Fire Detection Approach Using Convolutional Neural Network. In Proceedings of the 2019 14th IEEE Conference on Industrial Electronics and Applications (ICIEA), Xi’an, China, 18–21 June 2019; pp. 2118–2123.

- Gonzalez, A.; Zuniga, M.D.; Nikulin, C.; Carvajal, G.; Cardenas, D.G.; Pedraza, M.A.; Fernández, C.; Munoz, R.; Castro, N.; Rosales, B.; et al. Accurate fire detection through fully convolutional network. In Proceedings of the 7th Latin American Conference on Networked and Electronic Media (LACNEM 2017), Valparaiso, Chile, 6–7 November 2017.

- Samudre, P.; Shende, P.; Jaiswal, V. Optimizing Performance of Convolutional Neural Network Using Computing Technique. In Proceedings of the 2019 IEEE 5th International Conference for Convergence in Technology (I2CT), Pune, India, 29–31 March 2019; pp. 1–4.

- Lee, W.; Kim, S.; Lee, Y.-T.; Lee, H.-W.; Choi, M. Deep neural networks for wild fire detection with unmanned aerial vehicle. In Proceedings of the 2017 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 8–11 January 2017; pp. 252–253.

- Nguyen, D.T.; Ofli, F.; Imran, M.; Mitra, P. Damage assessment from social media imagery data during disasters. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, Sydney, NSW, Australia, 31 July–3 August 2017; pp. 569–576.

- Direkoglu, C. Abnormal Crowd Behavior Detection Using Motion Information Images and Convolutional Neural Networks. IEEE Access 2020, 8, 80408–80416.

- Yuan, F.; Zhang, L.; Xia, X.; Huang, Q.; Li, X. A wave-shaped deep neural network for smoke density estimation. IEEE Trans. Image Process. 2019, 29, 2301–2313.

- Layek, A.K.; Poddar, S.; Mandal, S. Detection of Flood Images Posted on Online Social Media for Disaster Response. In Proceedings of the 2019 Second International Conference on Advanced Computational and Communication Paradigms (ICACCP), Gangtok, Sikkim, India, 28–28 February 2019; pp. 1–6.

- Amezquita-Sanchez, J.; Valtierra-Rodriguez, M.; Adeli, H. Current efforts for prediction and assessment of natural disasters: Earthquakes, tsunamis, volcanic eruptions, hurricanes, tornados, and floods. Sci. Iran. 2017, 24, 2645–2664.

- Zhang, X.Y.; Li, X.; Lin, X. The data mining technology of particle swarm optimization algorithm in earthquake prediction. Adv. Mater. Res. 2014, 989–994, 1570–1573.

- Adeli, H.; Panakkat, A. A probabilistic neural network for earthquake magnitude prediction. Neural Netw. 2009, 22, 1018–1024.

- Kradolfer, U. SalanderMaps: A rapid overview about felt earthquakes through data mining of web-accesses. In Proceedings of the EGU General Assembly Conference, Vienna, Austria, 7–12 April 2013; pp. EGU2013–6400.

- Merz, B.; Kreibich, H.; Lall, U. Multi-variate flood damage assessment: A tree-based data-mining approach. Nat. Hazards Earth Syst. Sci. 2013, 13, 53–64.

- Sahay, R.R.; Srivastava, A. Predicting monsoon floods in rivers embedding wavelet transform, genetic algorithm and neural network. Water Resour. Manag. 2014, 28, 301–317.

- Venkatesan, M.; Thangavelu, A.; Prabhavathy, P. An improved Bayesian classification data mining method for early warning landslide susceptibility model using GIS. In Proceedings of the Seventh International Conference on Bio-Inspired Computing: Theories and Applications (BIC-TA 2012), Springer, Gwalior, India, 12–16 December 2012; pp. 277–288.

- Korup, O.; Stolle, A. Landslide prediction from machine learning. Geol. Today 2014, 30, 26–33.

- Di Salvo, R.; Montalto, P.; Nunnari, G.; Neri, M.; Puglisi, G. Multivariate time series clustering on geophysical data recorded at Mt. Etna from 1996 to 2003. J. Volcanol. Geotherm. Res. 2013, 251, 65–74.

- Das, H.S.; Jung, H. An efficient tool to assess risk of storm surges using data mining. Coast. Hazards 2013, 80–91.

- Chatfield, A.T.; Brajawidagda, U. Twitter early tsunami warning system: A case study in Indonesia’s natural disaster management. In Proceedings of the 2013 46th Hawaii International Conference on System Sciences, Maui, HI, USA, 7–10 January 2013; pp. 2050–2060.

- Khalaf, M.; Alaskar, H.; Hussain, A.J.; Baker, T.; Maamar, Z.; Buyya, R.; Liatsis, P.; Khan, W.; Tawfik, H.; Al-Jumeily, D. IoT-enabled flood severity prediction via ensemble machine learning models. IEEE Access 2020, 8, 70375–70386.

- Zhai, C.; Zhang, S.; Cao, Z.; Wang, X. Learning-based prediction of wildfire spread with real-time rate of spread measurement. Combust. Flame 2020, 215, 333–341.

- Tan, J.; Chen, S.; Wang, J. Western North Pacific tropical cyclone track forecasts by a machine learning model. Stoch. Environ. Res. Risk Assess. 2020, 1–14.

- Liu, Y.Y.; Li, L.; Liu, Y.S.; Chan, P.W.; Zhang, W.H.; Zhang, L. Estimation of precipitation induced by tropical cyclones based on machine-learning-enhanced analogue identification of numerical prediction. Meteorol. Appl. 2021, 28, e1978.

- Meadows, M.; Wilson, M. A Comparison of Machine Learning Approaches to Improve Free Topography Data for Flood Modelling. Remote Sens. 2021, 13, 275.

- Nisa, A.K.; Irawan, M.I.; Pratomo, D.G. Identification of Potential Landslide Disaster in East Java Using Neural Network Model (Case Study: District of Ponogoro). J. Phys. Conf. Ser. 2019, 1366, 012095.

- Chen, X.; Xu, Y.; Wong, D.W.K.; Wong, T.Y.; Liu, J. Glaucoma detection based on deep convolutional neural network. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 715–718.

- Asaoka, R.; Murata, H.; Iwase, A.; Araie, M. Detecting preperimetric glaucoma with standard automated perimetry using a deep learning classifier. Ophthalmology 2016, 123, 1974–1980.

- Salam, A.A.; Khalil, T.; Akram, M.U.; Jameel, A.; Basit, I. Automated detection of glaucoma using structural and non structural features. Springerplus 2016, 5, 1519.

- Claro, M.; Santos, L.; Silva, W.; Araújo, F.; Moura, N.; Macedo, A. Automatic glaucoma detection based on optic disc segmentation and texture feature extraction. CLEI Electron. J. 2016, 19, 5.

- Abbas, Q. Glaucoma-deep: Detection of glaucoma eye disease on retinal fundus images using deep learning. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 41–45.

- Li, Y.; Xie, X.; Shen, L.; Liu, S. Reverse active learning based atrous DenseNet for pathological image classification. BMC Bioinform. 2019, 20, 445.

- Thakoor, K.A.; Li, X.; Tsamis, E.; Sajda, P.; Hood, D.C. Enhancing the Accuracy of Glaucoma Detection from OCT Probability Maps using Convolutional Neural Networks. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 2036–2040.

- Li, L.; Xu, M.; Wang, X.; Jiang, L.; Liu, H. Attention Based Glaucoma Detection: A Large-scale Database and CNN Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 10571–10580.

- Aamir, M.; Irfan, M.; Ali, T.; Ali, G.; Shaf, A.; Al-Beshri, A.; Alasbali, T.; Mahnashi, M.H. An Adoptive Threshold-Based Multi-Level Deep Convolutional Neural Network for Glaucoma Eye Disease Detection and Classification. Diagnostics 2020, 10, 602.

- Aamir, M.; Ali, T.; Shaf, A.; Irfan, M.; Saleem, M.Q. ML-DCNNet: Multi-level Deep Convolutional Neural Network for Facial Expression Recognition and Intensity Estimation. Arab. J. Sci. Eng. 2020, 45, 10605–10620.

| Reference | Methodology Name | Outcomes | Weakness |
|---|---|---|---|
| [26] | Signal processing, image processing, and statistical techniques | More accurate prediction of natural disasters | Limited statistical parameters for prediction |
| [27] | Particle swarm optimization | Predicts earthquake magnitude | Works only for prediction on seismic datasets |
| [28] | Neural network | Predicts earthquake magnitude | Limited parameters used for prediction |
| [29] | Text mining, regular log mining technique | Detects earthquakes quickly and accurately from seismological data | Depends on public feedback to detect earthquakes |
| [30] | Decision tree | Uses several parameters to assess the model for detecting flood-damaged areas | Parametric limitations in detecting flood-damaged regions |
| [31] | Artificial neural network, genetic algorithm, and wavelet transform technique | Achieves good results compared with existing techniques in Southeast Asia | Limited to monsoon floods in June and September in specific regions of India, on time series data |
| [32] | Support vector machine, naïve Bayes | Classifies natural disasters on various parameters | Limited to the early stages of natural disasters |
| [33] | Machine learning technique | Predicts landslides with an accuracy rate of 75% to 95% | Needs more guidelines on model selection for predicting large-scale landslides |
| [34] | Neural network and back propagation | Predictions are made on past datasets | Dynamic prediction, which is crucial for this system, is lacking |
| [35] | Clustering for multivariable time series | Proposes a dynamic clustering approach for time series analysis using a self-organizing mapping technique | Requires dynamic time series data for the clustering process |
| [36] | Data mining technique | A real-time, desktop-based GUI system designed to predict local storms | Uses parallel computing, and the time taken to predict a storm varies |
| [37] | Text mining technique | Develops a public platform for early tsunami warning and information | Requires public feedback for the prediction process |
| [38] | Random forest, long short-term memory | Evaluates flood severity with a sensitivity, specificity, and accuracy of 71.4%, 85.9%, and 81.13%, respectively | Particle swarm optimization and other deep learning techniques are left as future work |
| [39] | A learning-based wildfire model | Predicts the short-term spread of wildfire | Requires the real-time rate of wildfire spread at the initial stage |
| [40] | Machine learning technique | Uses a gradient boosting tree and the CLIPER model for cyclone prediction | The model is still weak at producing velocity sensitivities |
| [41] | Machine learning technique with numerical weather prediction | Applied to China, the prediction method shows significant improvement over traditional methods | Still lacks symmetric parameters for numerical computation |
| [42] | Artificial neural network | A fully connected neural network for segmentation, used for multivariable pattern recognition at different levels | Works on multivariable parameters rather than pixel-by-pixel parameters |

Block-I Convolutional Neural Network (B-I CNN) with Learning Rate = 0.001 and Epochs = 40

| Layer Name and Batches | Parameters |
|---|---|
| Image Input Layer | Height: 100, Width: 120, Channel: 3 |
| Batch I: Convolution Layer, Batch Normalization Layer, ReLU Layer, Max Pooling Layer | Filter size: 3 × 3, No. of filters = 8, stride = 1 |
| Batch II: Convolution Layer, Batch Normalization Layer, ReLU Layer, Max Pooling Layer | Filter size: 3 × 3, No. of filters = 16, stride = 1 |
| Batch III: Convolution Layer, Batch Normalization Layer, ReLU Layer, Max Pooling Layer | Filter size: 3 × 3, No. of filters = 32, stride = 1 |
| Fully Connected Layer | 4 classes |
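As a rough check on the B-I layer table, the following Python sketch traces the feature-map shape and learnable-parameter count through the network. The table gives neither padding nor pooling settings, so the sketch assumes "same" zero padding for every 3 × 3 convolution, 2 × 2 max pooling with stride 2, and two learnable parameters (scale and shift) per batch-normalization channel. The function names are illustrative and do not come from the paper.

```python
# Shape and parameter trace for B-I CNN (illustrative sketch).
# Assumptions NOT stated in the paper's table:
#   - 3x3 convolutions use stride 1 and "same" zero padding
#   - max pooling is 2x2 with stride 2 and no padding
#   - batch normalization learns one scale and one shift per channel
from functools import partial


def conv_same(shape, filters, k=3):
    """Stride-1 'same' convolution: spatial size is unchanged."""
    h, w, c = shape
    params = k * k * c * filters + filters  # weights + one bias per filter
    return (h, w, filters), params


def batch_norm(shape):
    """Batch normalization: 2 learnable values per channel."""
    return shape, 2 * shape[2]


def relu(shape):
    """ReLU has no learnable parameters and keeps the shape."""
    return shape, 0


def max_pool(shape, size=2, stride=2):
    """Unpadded max pooling: floor((n - size) / stride) + 1 per spatial axis."""
    h, w, c = shape
    return ((h - size) // stride + 1, (w - size) // stride + 1, c), 0


def fully_connected(shape, classes):
    """Dense layer over the flattened feature map."""
    h, w, c = shape
    return (classes,), h * w * c * classes + classes


def trace_b1(input_shape=(100, 120, 3), filters=(8, 16, 32), classes=4):
    shape, total, log = input_shape, 0, []
    for i, f in enumerate(filters, start=1):
        # Batch i: Convolution -> Batch Normalization -> ReLU -> Max Pooling
        for name, layer in (("conv", partial(conv_same, filters=f)),
                            ("bn", batch_norm),
                            ("relu", relu),
                            ("pool", max_pool)):
            shape, p = layer(shape)
            total += p
            log.append((f"batch{i}-{name}", shape, p))
    shape, p = fully_connected(shape, classes)
    total += p
    log.append(("fc", shape, p))
    return log, total


if __name__ == "__main__":
    log, total = trace_b1()
    for name, shape, p in log:
        print(f"{name:12s} {str(shape):16s} params={p}")
    print("total learnable parameters:", total)
```

Under these assumptions the last pooled map is 12 × 15 × 32 (5760 values), and the network has 29,188 learnable parameters. Most of them sit in the fully connected layer. Different padding or pooling choices would change these figures.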

Block-II Convolutional Neural Network (B-II CNN) with Learning Rate = 0.001 and Epochs = 30

| Layer Name and Batches | Parameters |
|---|---|
| Image Input Layer | Height: 100, Width: 120, Channel: 3 |
| Batch I: Convolution Layer, Batch Normalization Layer, Max Pooling Layer | Filter size: 3 × 3, No. of filters = 8, stride = 1 |
| Batch II: Convolution Layer, Batch Normalization Layer, Max Pooling Layer | Filter size: 3 × 3, No. of filters = 16, stride = 1 |
| Batch III: Convolution Layer, Batch Normalization Layer, Max Pooling Layer | Filter size: 3 × 3, No. of filters = 32, stride = 1 |
| Fully Connected Layer | 4 classes |
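As listed, B-II CNN uses the same convolution sizes as B-I but has no ReLU layer in any batch. The sketch below runs one batch on a tiny single-channel input. A hypothetical `use_relu` flag switches between the B-I ordering (Convolution, Batch Normalization, ReLU, Max Pooling) and the B-II ordering (Convolution, Batch Normalization, Max Pooling). The sketch assumes zero "same" padding, 2 × 2 max pooling with stride 2, and batch normalization in inference mode with a fixed mean and variance. The input and kernel values are made up for illustration.

```python
import math


def conv3x3_same(img, kernel, bias=0.0):
    """3x3 cross-correlation (as CNN layers compute it), stride 1, zero 'same' padding."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:  # pixels outside the image count as 0
                        acc += img[y][x] * kernel[di + 1][dj + 1]
            out[i][j] = acc
    return out


def batch_norm_infer(fmap, mean, var, gamma=1.0, beta=0.0, eps=1e-5):
    """Inference-mode batch normalization with fixed (running) statistics."""
    scale = gamma / math.sqrt(var + eps)
    return [[(v - mean) * scale + beta for v in row] for row in fmap]


def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2; an odd trailing row or column is dropped."""
    h, w = len(fmap), len(fmap[0])
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, w - 1, 2)]
            for i in range(0, h - 1, 2)]


def conv_batch(img, kernel, mean, var, use_relu):
    """One batch: Conv -> BN -> (ReLU only if use_relu) -> Max Pooling."""
    fmap = batch_norm_infer(conv3x3_same(img, kernel), mean, var)
    if use_relu:  # B-I ordering; B-II ordering skips this step
        fmap = relu(fmap)
    return max_pool2x2(fmap)


if __name__ == "__main__":
    image = [[-4, -3, 1, 2],
             [-2, -1, 3, 4],
             [5, 6, -7, -8],
             [7, 8, -5, -6]]
    identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # passes pixels through unchanged
    print("B-I style :", conv_batch(image, identity, mean=0.0, var=1.0, use_relu=True))
    print("B-II style:", conv_batch(image, identity, mean=0.0, var=1.0, use_relu=False))
```

With the identity kernel, the B-I ordering clamps the two negative pooled responses to zero, whereas the B-II ordering passes them through. Max pooling is still a nonlinearity, so even without ReLU the B-II stack is not purely linear.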

| Disaster Type | Total | Training | Test | Validation |
|---|---|---|---|---|
| Cyclone | 928 | 500 | 300 | 128 |
| Earthquake | 1350 | 600 | 300 | 450 |
| Flood | 1073 | 600 | 300 | 173 |
| Wildfire | 1077 | 600 | 300 | 177 |
| Total | 4428 | 2300 | 1200 | 928 |
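The split table uses a fixed number of training images per class (500 or 600) and a fixed number of test images (300), and the remaining images of each class go to validation. The table does not say how individual images were assigned. The following sketch therefore assumes a reproducible per-class random shuffle with a fixed seed. The image IDs and the function names are placeholders, not the authors' files or code.

```python
import random

# (total, training, test) per class, copied from the split table;
# validation receives whatever is left after training and test.
SPLIT = {
    "Cyclone": (928, 500, 300),
    "Earthquake": (1350, 600, 300),
    "Flood": (1073, 600, 300),
    "Wildfire": (1077, 600, 300),
}


def split_class(items, n_train, n_test, seed=0):
    """Shuffle one class reproducibly and cut it into train/test/validation."""
    items = list(items)
    random.Random(seed).shuffle(items)
    train = items[:n_train]
    test = items[n_train:n_train + n_test]
    val = items[n_train + n_test:]
    return train, test, val


def split_dataset(split=SPLIT, seed=0):
    """Per-class split: each disaster type is divided separately."""
    parts = {}
    for cls, (total, n_train, n_test) in split.items():
        if n_train + n_test > total:
            raise ValueError(f"{cls}: not enough images for the requested split")
        ids = [f"{cls.lower()}_{k:04d}" for k in range(total)]  # placeholder IDs
        parts[cls] = split_class(ids, n_train, n_test, seed)
    return parts


if __name__ == "__main__":
    parts = split_dataset()
    totals = [sum(len(p[i]) for p in parts.values()) for i in range(3)]
    print("train/test/validation totals:", totals)  # [2300, 1200, 928]
```

Splitting each class separately keeps the test set balanced at 300 images per disaster type, while the validation set absorbs the class imbalance (from 128 cyclone to 450 earthquake images).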

| Sr. | Disaster Type | Sensitivity, SE (%) | Specificity, SP (%) | Accuracy Rate, AR (%) | Precision, PRE (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| 1 | Cyclone | 97.15 | 98.08 | 100.00 | 97.32 | 97.36 |
| 2 | Earthquake | 95.18 | 97.11 | 99.70 | 96.34 | 98.88 |
| 3 | Flood | 99.17 | 99.13 | 100.00 | 99.05 | 99.23 |
| 4 | Wildfire | 98.67 | 98.56 | 100.00 | 98.45 | 96.44 |
| | Average | 97.54 | 98.22 | 99.92 | 97.79 | 97.97 |
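The metrics in the table are reported without formulas. The sketch below assumes the standard one-vs-rest definitions and computes SE, SP, AR, PRE, and F1 for each class from a four-class confusion matrix. The table's Average row appears to be the arithmetic (macro) mean of the four per-class values, so the sketch follows that convention. The confusion matrix is hypothetical and is not the paper's test result. The code also assumes every class has at least one true and one predicted image, so no denominator is zero.

```python
# One-vs-rest metrics from a multi-class confusion matrix (illustrative sketch).
# cm[i][j] = number of test images of true class i predicted as class j.


def one_vs_rest(cm, k):
    """Return SE, SP, AR, PRE, F1 (in %) for class index k."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                        # class k predicted as another class
    fp = sum(cm[i][k] for i in range(n)) - tp   # other classes predicted as k
    tn = total - tp - fn - fp
    se = tp / (tp + fn)                         # sensitivity (recall)
    sp = tn / (tn + fp)                         # specificity
    ar = (tp + tn) / total                      # accuracy of class k vs. the rest
    pre = tp / (tp + fp)                        # precision
    f1 = 2 * pre * se / (pre + se)              # harmonic mean of PRE and SE
    return {"SE": 100 * se, "SP": 100 * sp, "AR": 100 * ar,
            "PRE": 100 * pre, "F1": 100 * f1}


def macro_average(cm):
    """Arithmetic mean of each metric over all classes."""
    per_class = [one_vs_rest(cm, k) for k in range(len(cm))]
    return {m: sum(c[m] for c in per_class) / len(per_class) for m in per_class[0]}


if __name__ == "__main__":
    classes = ["Cyclone", "Earthquake", "Flood", "Wildfire"]
    # Hypothetical counts for 300 test images per class (made up for illustration).
    cm = [[290, 5, 3, 2],
          [4, 288, 6, 2],
          [1, 2, 296, 1],
          [3, 1, 2, 294]]
    for k, name in enumerate(classes):
        print(name, {m: round(v, 2) for m, v in one_vs_rest(cm, k).items()})
    print("Average", {m: round(v, 2) for m, v in macro_average(cm).items()})
```

Because F1 is the harmonic mean of PRE and SE, a class's F1 always lies between its precision and its sensitivity.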

| Cited | Technique Used | Accuracy Rate (%) | Year |
|---|---|---|---|
| [43] | CNN | 84.00 | 2015 |
| [44] | Feed-forward neural network | 92.00 | 2016 |
| [45] | Support vector machine | 87.00 | 2016 |
| [46] | CNN | 90.00 | 2016 |
| [47] | Glaucoma-Deep (CNN, DBN, Softmax) | 99.00 | 2017 |
| [48] | ResNet-50 | 96.02 | 2018 |
| [7] | WSDD-Net | 99.20 | 2019 |
| [49] | OCT probability map using CNN | 95.70 | 2019 |
| [50] | Attention-guided convolutional neural network | 95.30 | 2019 |
| [51] | ML-DCNN | 99.39 | 2020 |
| [52] | ML-DCNNet | 99.14 | 2020 |
| Proposed | Multi-layered deep convolutional neural network | 99.92 | 2021 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aamir, M.; Ali, T.; Irfan, M.; Shaf, A.; Azam, M.Z.; Glowacz, A.; Brumercik, F.; Glowacz, W.; Alqhtani, S.; Rahman, S. Natural Disasters Intensity Analysis and Classification Based on Multispectral Images Using Multi-Layered Deep Convolutional Neural Network. Sensors 2021, 21, 2648. https://doi.org/10.3390/s21082648
