Hybrid Task Coordination Using Multi-Hop Communication in Volunteer Computing-Based VANETs

Abstract

1. Introduction

- (1) We propose a hybrid task coordination model for job execution and surplus resource utilization. This model uses infrastructure-based and ad-hoc task coordination simultaneously.

- (2) We propose a method to identify the boundary relay vehicles, extending the region of resource utilization without additional RSUs.

- (3) We design and validate the primary and secondary task coordination algorithms.

2. Related Works

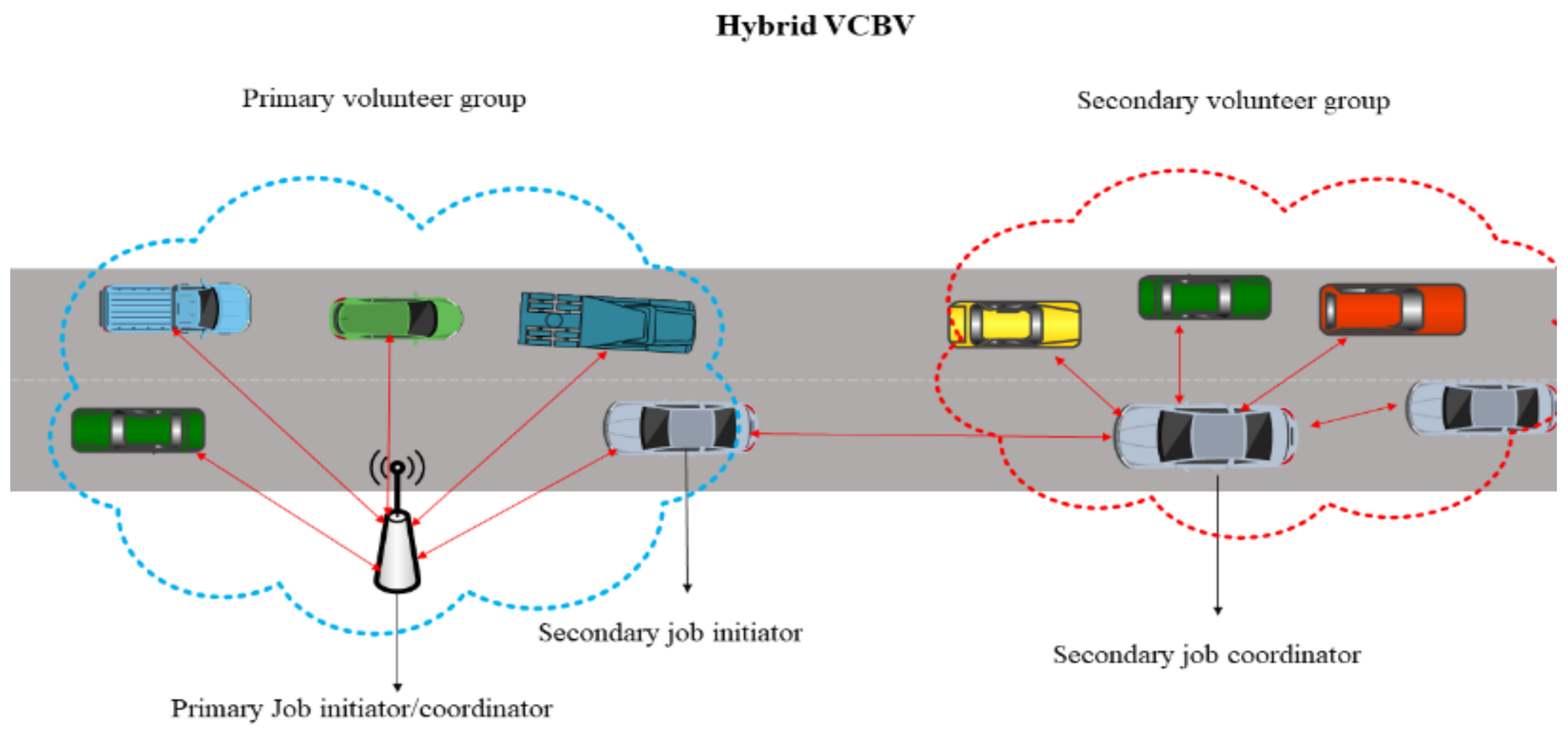

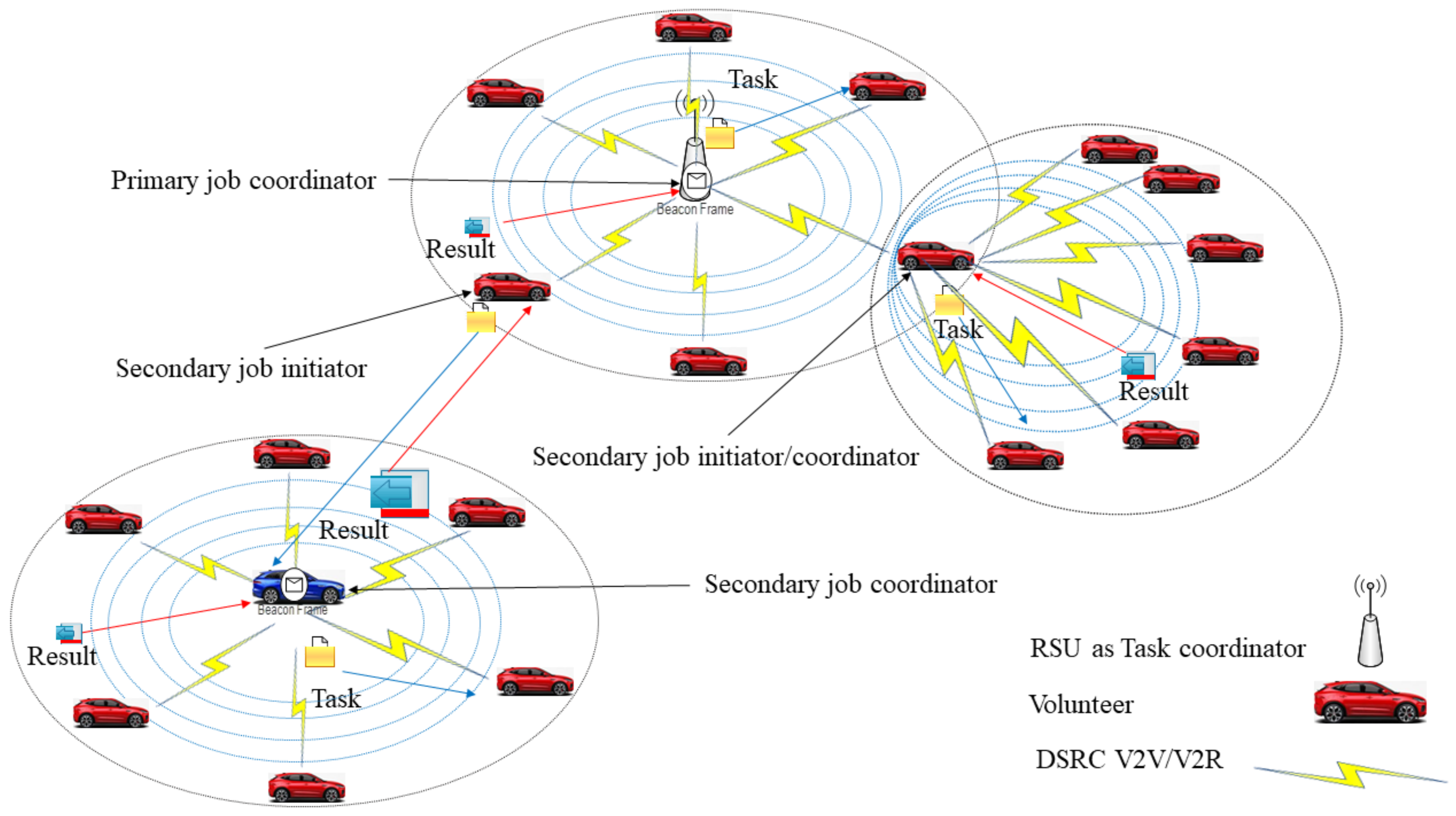

3. Hybrid Volunteer Computing Based VANET

4. Hybrid VCBV System Model

4.1. Network Model

4.1.1. Primary Job Initiator

4.1.2. Primary Task Coordinator

4.1.3. Volunteer Vehicles

4.1.4. Secondary Job Initiator

4.1.5. Secondary Task Coordinator

4.2. Communication Model

4.3. Task Model

4.4. Vehicle Computation Model

4.5. Cloud Computation Model

4.6. Edge Computation Model

4.7. System Utility Function

5. Avoiding Costs Paid to Third-Party Vendors

6. Proposed Offloading and Resource Allocation Model

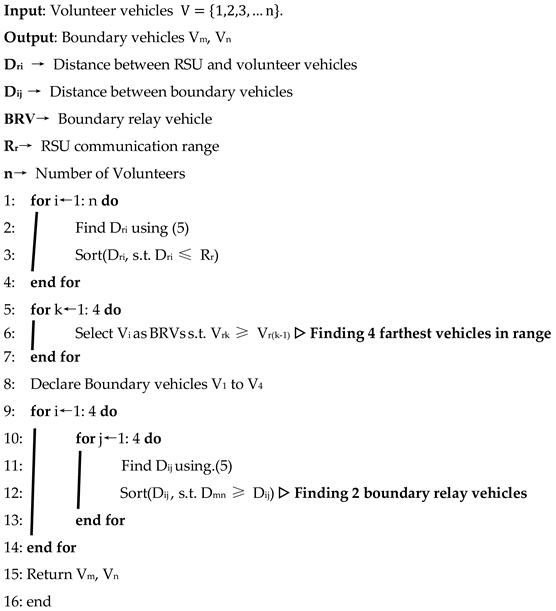

6.1. Boundary Relay Vehicles Determination Algorithm

Algorithm I: Proposed BRVD algorithm for Hybrid VCBV

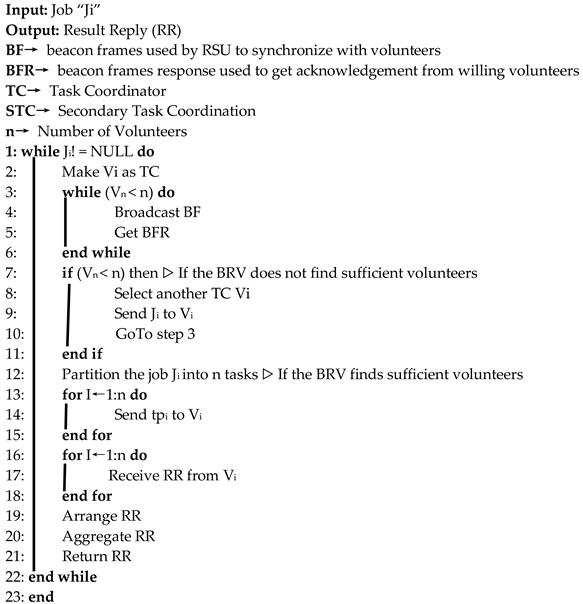

6.2. Hybrid Based VCBV Task Coordination Algorithm

Algorithm II: Proposed HBVTC algorithm for Hybrid VCBV

6.3. Secondary Task Coordination

Algorithm III: Proposed STC algorithm for Hybrid VCBV

7. Performance Evaluations

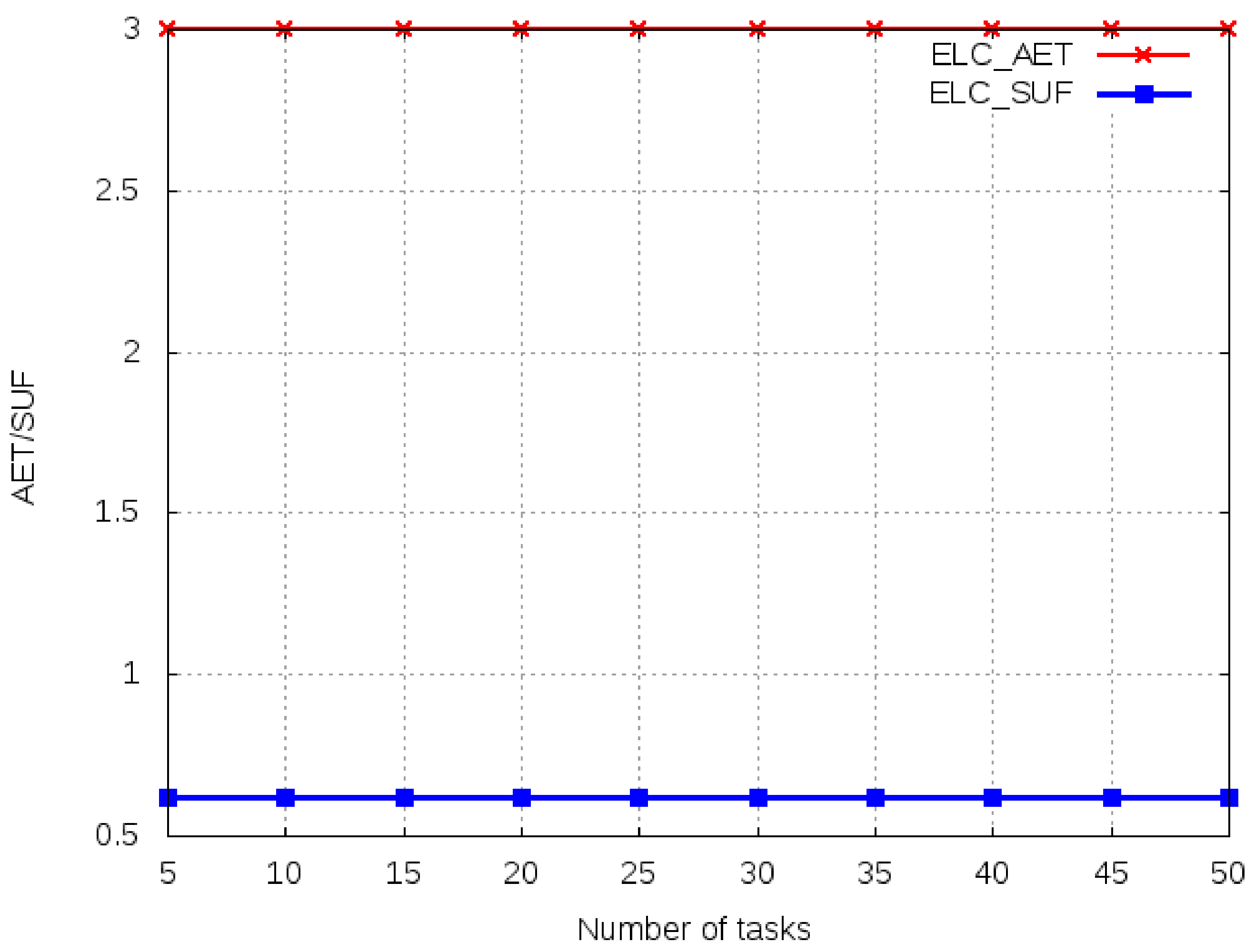

- The Entire Local Computing (ELC) scheme, where all the jobs are executed locally on the vehicles. We take ELC as the benchmark for the offloading decision: any job whose expected offloading makespan exceeds its ELC makespan is rejected for offloading.

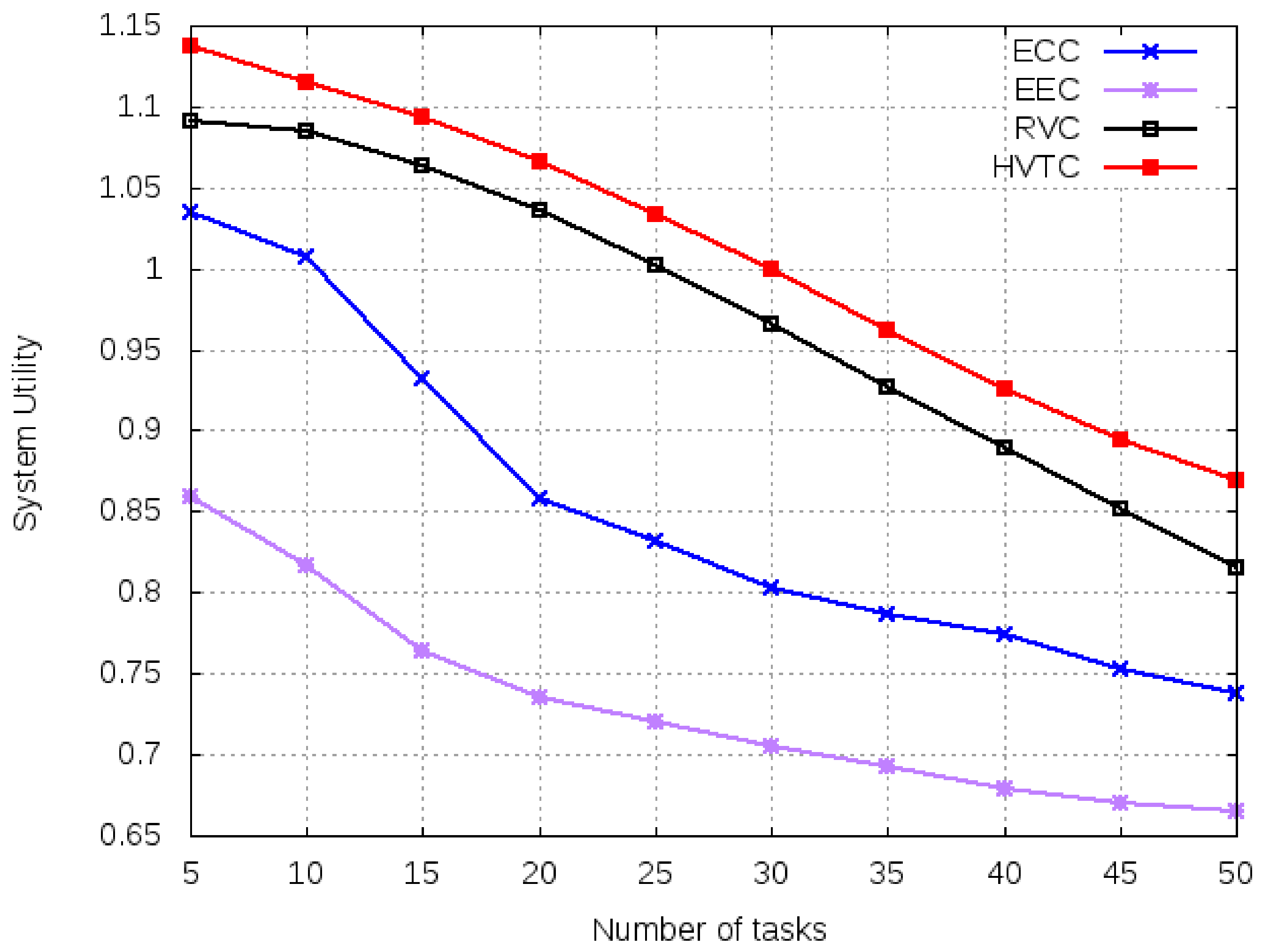

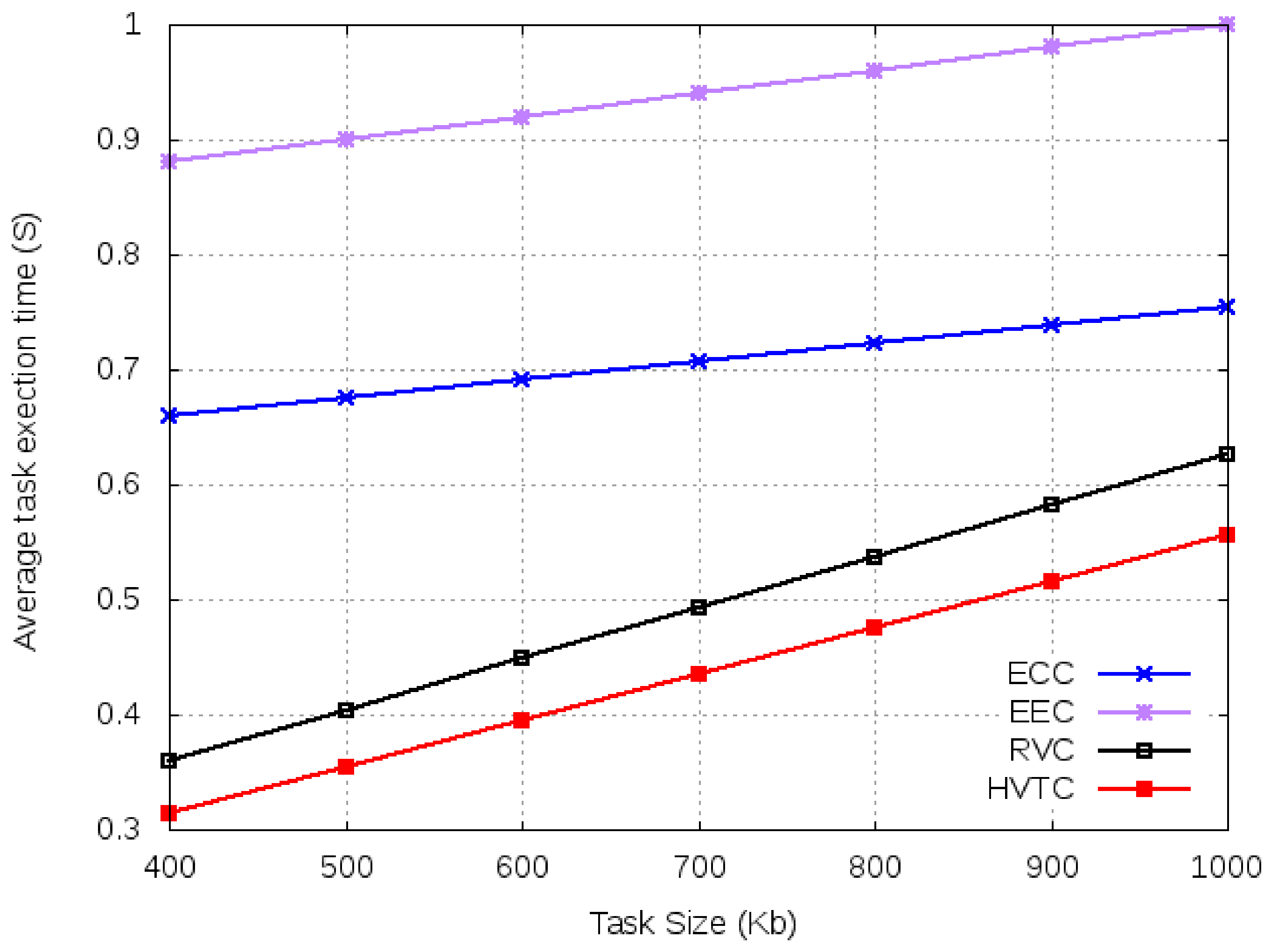

- The Entire Cloud Computing (ECC) scheme, where all the jobs are offloaded to cloud servers for execution. ECC is modelled using the eDors algorithm [19], which optimizes energy consumption and latency through dynamic offloading and resource scheduling at the cloud.

- The Entire Edge Computing (EEC) scheme, where all the jobs are executed at edge servers. In VEC, these edge servers are placed at RSUs and are called VEC servers. We use JSCO [31], a low-complexity algorithm, to model EEC.

- The RSU-based VCBV Computing (RVC) single-hop scheme, where all the jobs are executed by volunteers within the communication range of the RSU. This scheme uses infrastructure-based VCBV, in which all the volunteer vehicles lie within the communication range (one hop) of the RSU.
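The ELC rejection rule in the first benchmark can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's algorithm: the names `Task`, `local_makespan`, `offload_makespan`, and `should_offload` are hypothetical, the offloading makespan is approximated as upload plus remote execution plus download, and the only value taken from the paper is the 1500 CPU cycles per bit requirement in the simulation parameters.

```python
from dataclasses import dataclass

# Task computational requirement from the simulation parameters (cycles per bit).
CYCLES_PER_BIT = 1500


@dataclass
class Task:
    input_bits: float   # input data size in bits
    output_bits: float  # output data size in bits


def local_makespan(task: Task, vehicle_cps: float) -> float:
    """ELC benchmark: time to run the task on the vehicle's own OBU (seconds)."""
    return task.input_bits * CYCLES_PER_BIT / vehicle_cps


def offload_makespan(task: Task, link_bps: float, remote_cps: float) -> float:
    """Assumed offload estimate: upload + remote execution + download (seconds)."""
    upload = task.input_bits / link_bps
    compute = task.input_bits * CYCLES_PER_BIT / remote_cps
    download = task.output_bits / link_bps
    return upload + compute + download


def should_offload(task: Task, vehicle_cps: float,
                   link_bps: float, remote_cps: float) -> bool:
    """Reject offloading when its expected makespan exceeds the ELC makespan."""
    remote = offload_makespan(task, link_bps, remote_cps)
    return remote <= local_makespan(task, vehicle_cps)
```

With hypothetical capacities (the paper's values are not reproduced here), a call such as `should_offload(Task(400_000, 50_000), vehicle_cps=1e9, link_bps=6e6, remote_cps=1e10)` accepts offloading, while the same task over a much slower link is kept local.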

7.1. Simulation Setup

7.2. Performance Comparisons

7.2.1. Different Number of Tasks

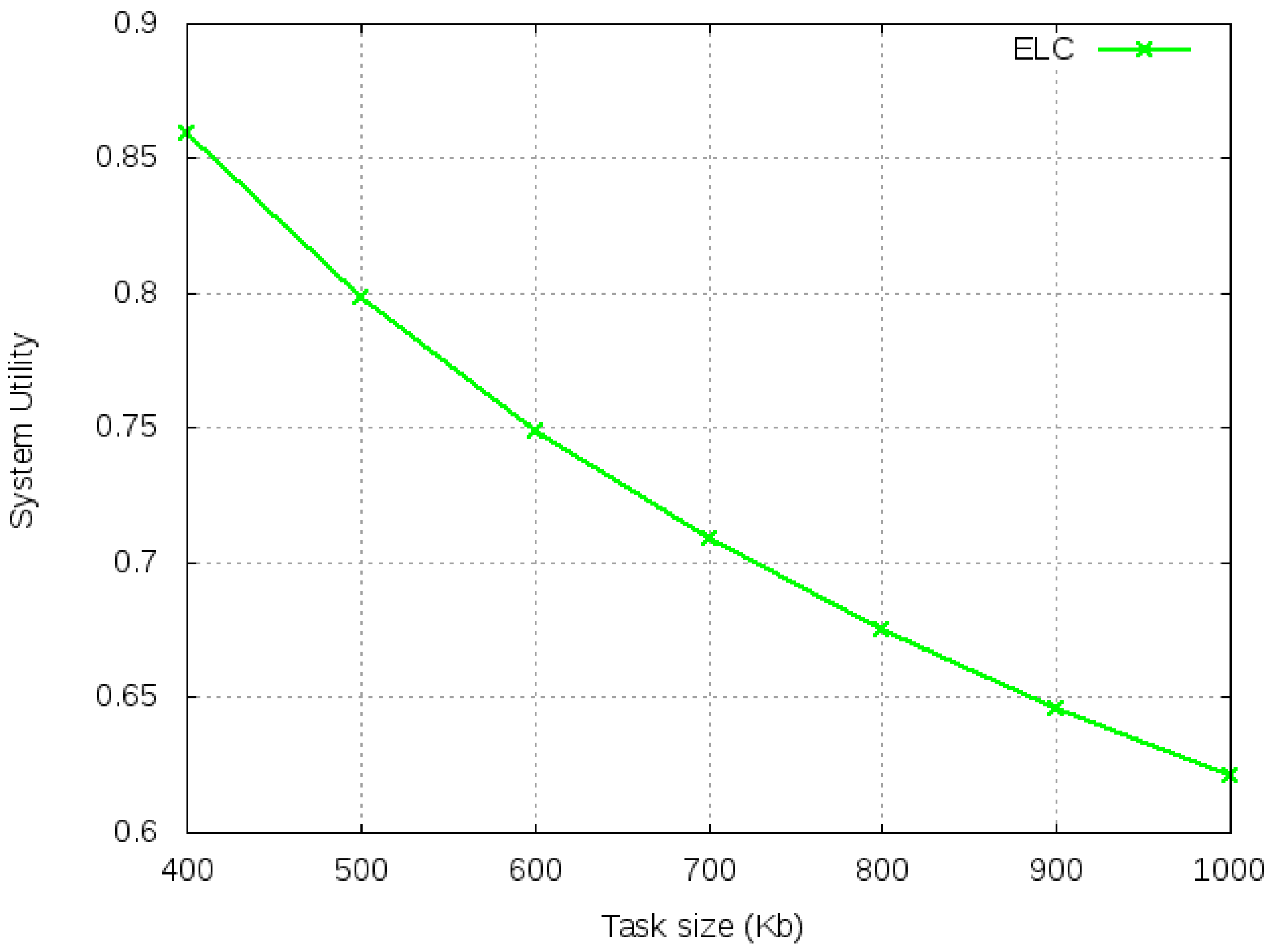

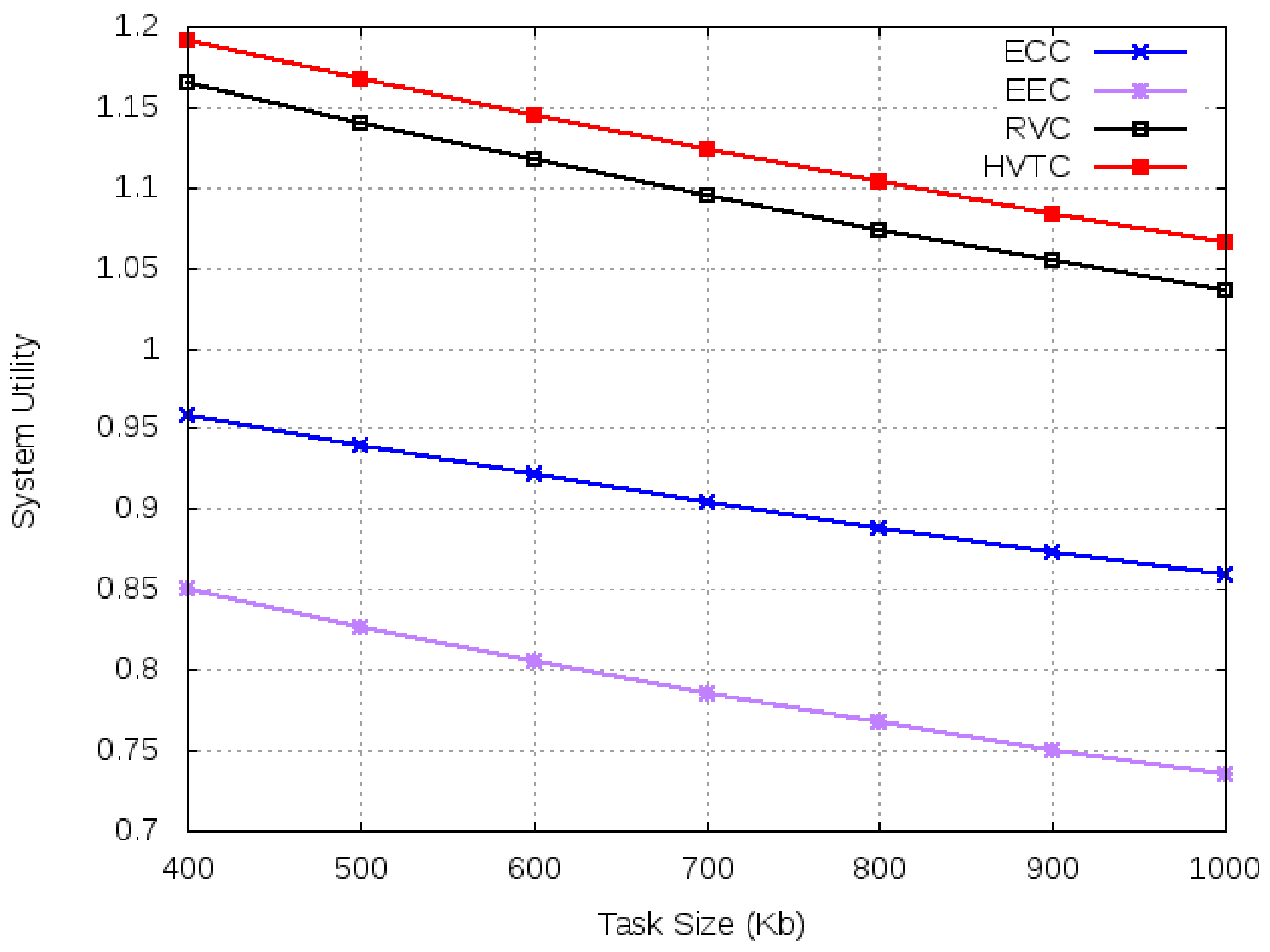

7.2.2. Varied Task Size

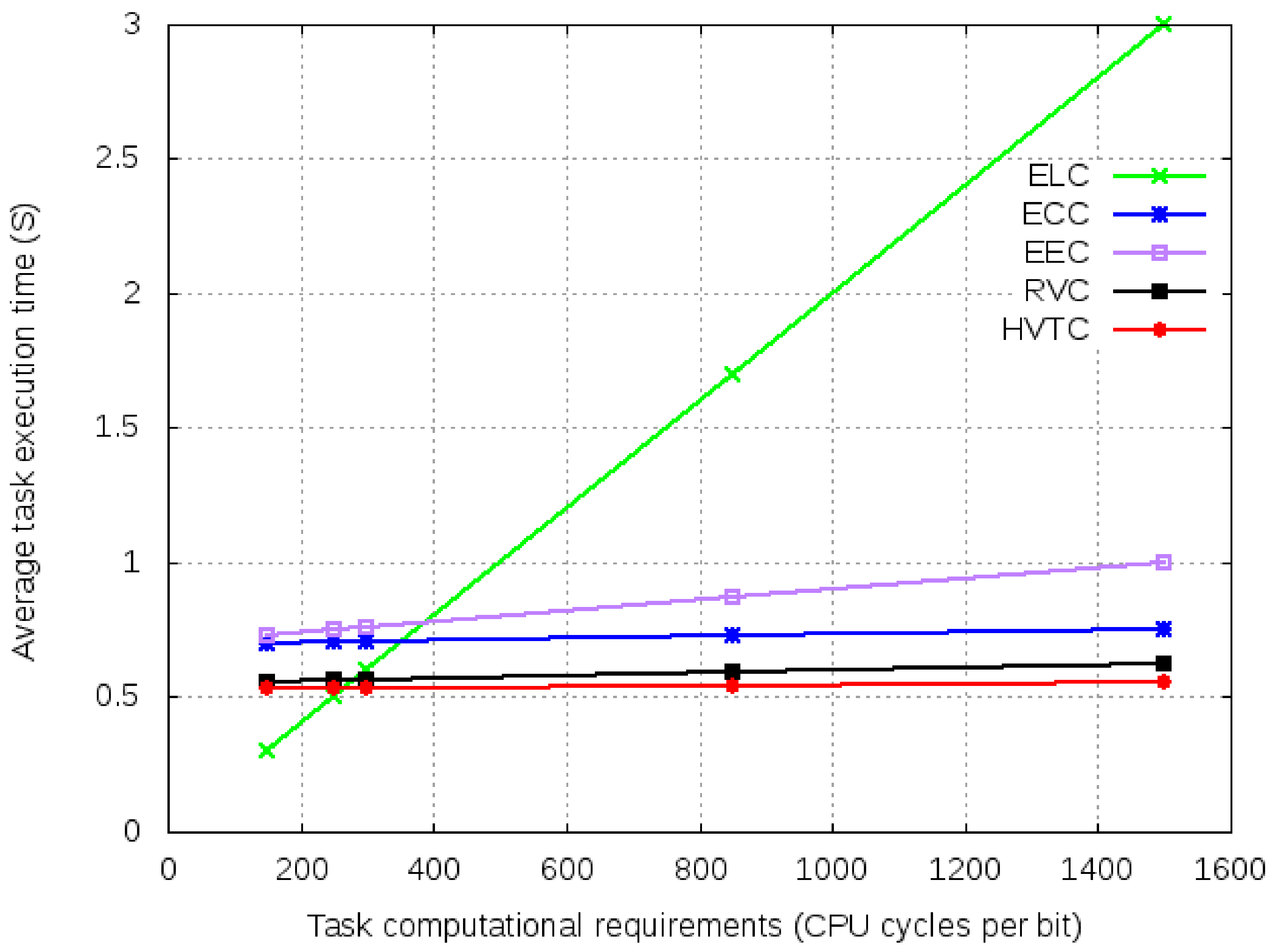

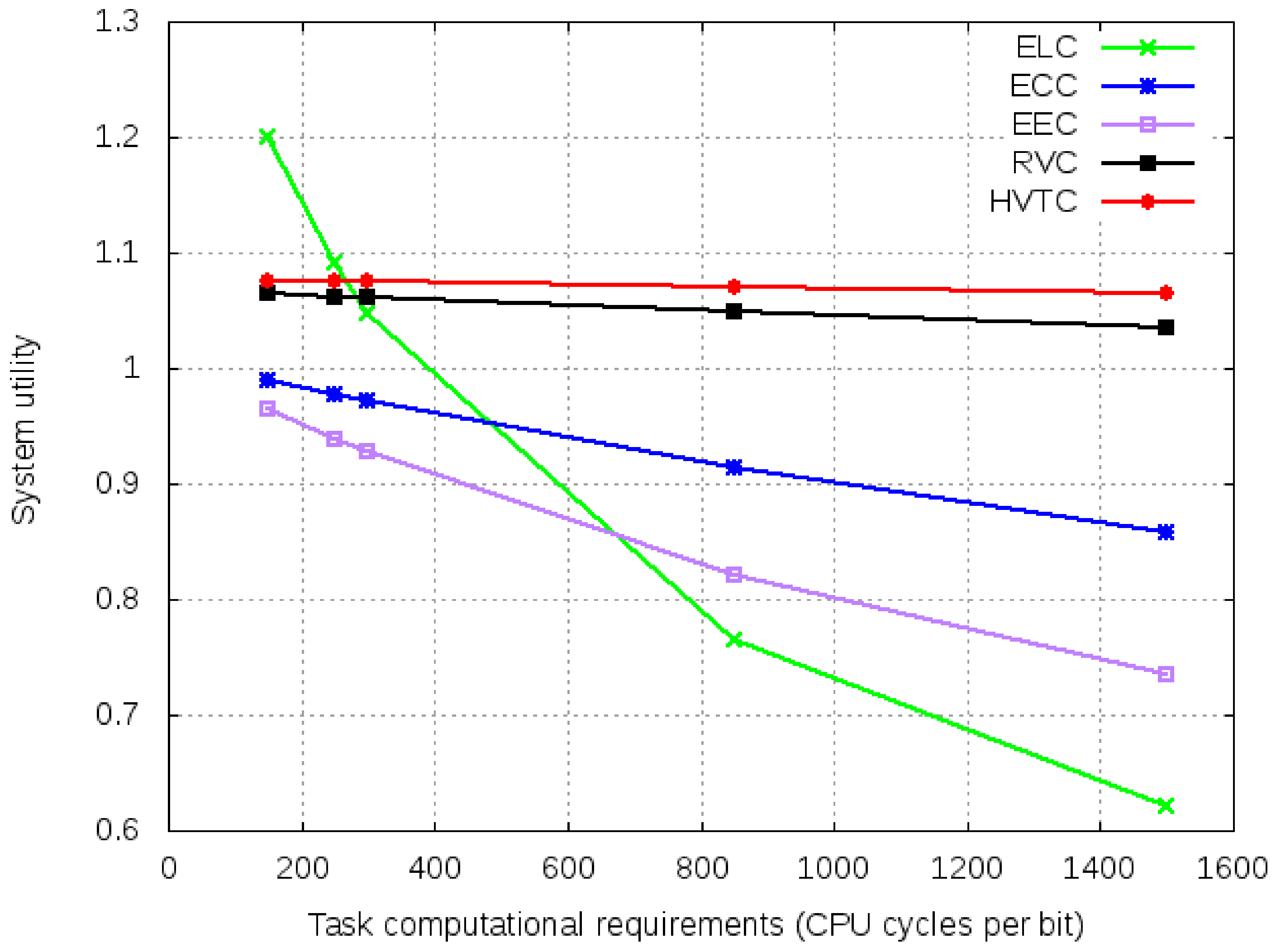

7.2.3. Varied Computational Requirements

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Voelcker, J. 1.2 Billion Vehicles On World’s Roads Now, 2 Billion By 2035. Available online: https://www.greencarreports.com/news/1093560_1-2-billion-vehicles-on-worlds-roads-now-2-billion-by-2035-report (accessed on 27 November 2020).

2. Afrin, T.; Yodo, N. A survey of road traffic congestion measures towards a sustainable and resilient transportation system. Sustainability 2020, 12, 4660.

3. Zhao, C.; Han, J.; Ding, X.; Shi, L.; Yang, F. An analytical model for interference alignment in broadcast assisted vanets. Sensors 2019, 19, 4988.

4. Li, H.; Lan, C.; Fu, X.; Wang, C.; Li, F.; Guo, H. A secure and lightweight fine-grained data sharing scheme for mobile cloud computing. Sensors 2020, 20, 4720.

5. Huang, Q.; Yang, Y.; Shi, Y. SmartVeh: Secure and efficient message access control and authentication for vehicular cloud computing. Sensors 2018, 18, 666.

6. Chen, S.; Li, Q.; Zhou, M.; Abusorrah, A. Recent advances in collaborative scheduling of computing tasks in an edge computing paradigm. Sensors 2021, 21, 779.

7. Li, D.; Xu, S.; Li, P. Deep reinforcement learning-empowered resource allocation for mobile edge computing in cellular v2x networks. Sensors 2021, 21, 372.

8. Li, Z.; Peng, E. Software-defined optimal computation task scheduling in vehicular edge networking. Sensors 2021, 21, 955.

9. Losada, M.; Cortés, A.; Irizar, A.; Cejudo, J.; Pérez, A. A flexible fog computing design for low-power consumption and low latency applications. Electronics 2021, 10, 57.

10. Ran, M.; Bai, X. Vehicle cooperative network model based on hypergraph in vehicular fog computing. Sensors 2020, 20, 2269.

11. Sookhak, M.; Yu, F.R.; He, Y.; Talebian, H.; Safa, N.S.; Zhao, N.; Khan, M.K.; Kumar, N. Fog Vehicular Computing: Augmentation of Fog Computing Using Vehicular Cloud Computing. In IEEE Vehicular Technology Magazine; IEEE: Piscataway Township, NJ, USA, 2017; pp. 55–64.

12. Hussain, M.M.; Alam, M.S.; Beg, M.M.S. Vehicular Fog Computing-Planning and Design. Procedia Comput. Sci. 2020, 167, 2570–2580.

13. Preden, J.S.; Tammemäe, K.; Jantsch, A.; Leier, M.; Riid, A.; Calis, E. The Benefits of Self-Awareness and Attention in Fog and Mist Computing. Computer 2015, 37–45.

14. Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330.

15. Waheed, A.; Shah, M.A.; Khan, A.; ul Islam, S.; Khan, S.; Maple, C.; Khan, M.K. Volunteer Computing in Connected Vehicles: Opportunities and Challenges. IEEE Netw. 2020, 34, 212–218.

16. Elazhary, H. Internet of Things (IoT), mobile cloud, cloudlet, mobile IoT, IoT cloud, fog, mobile edge, and edge emerging computing paradigms: Disambiguation and research directions. J. Netw. Comput. Appl. 2019, 128, 105–140.

17. Cardellini, V.; De Nitto Personé, V.; Di Valerio, V.; Facchinei, F.; Grassi, V.; Lo Presti, F.; Piccialli, V. A game-theoretic approach to computation offloading in mobile cloud computing. Math. Program. 2016, 157, 421–449.

18. Wu, H.; Sun, Y.; Wolter, K. Energy-Efficient Decision Making for Mobile Cloud Offloading. IEEE Trans. Cloud Comput. 2018, 8, 570–584.

19. Guo, S.; Liu, J.; Yang, Y. Energy-Efficient Dynamic Computation Offloading and Cooperative Task Scheduling in Mobile Cloud Computing. IEEE Trans. Mob. Comput. 2019, 18, 319–333.

20. Goudarzi, M.; Zamani, M.; Haghighat, A.T. A fast hybrid multi-site computation offloading for mobile cloud computing. J. Netw. Comput. Appl. 2017, 80, 219–231.

21. Cui, Y.; Liang, Y.; Wang, R. Resource Allocation Algorithm with Multi-Platform Intelligent Offloading in D2D-Enabled Vehicular Networks. IEEE Access 2019, 7, 21246–21253.

22. Hu, Q.; Wu, C.; Zhao, X.; Chen, X.; Ji, Y.; Yoshinaga, T. Vehicular multi-access edge computing with licensed sub-6 GHz, IEEE 802.11p and mmWave. IEEE Access 2017, 6, 1995–2004.

23. Ranadheera, S.; Maghsudi, S.; Hossain, E. Mobile Edge Computation Offloading Using Game Theory and Reinforcement Learning. Available online: http://arxiv.org/abs/1711.09012 (accessed on 24 June 2020).

24. Guo, H.; Liu, J.; Zhang, J. Computation offloading for multi-access mobile edge computing in ultra-dense networks. IEEE Commun. Mag. 2018, 56, 14–19.

25. Khan, W.Z.; Ahmed, E.; Hakak, S.; Yaqoob, I.; Ahmed, A. Edge computing: A survey. Futur. Gener. Comput. Syst. 2019, 97, 219–235.

26. Huang, X.; Yu, R.; Kang, J.; Zhang, Y. Distributed reputation management for secure and efficient vehicular edge computing and networks. IEEE Access 2017, 5, 25408–25420.

27. Siming, W.; Zehang, Z.; Rong, Y.; Yan, Z. Low-latency caching with auction game in vehicular edge computing. In Proceedings of the IEEE/CIC International Conference on Communications in China (ICCC), Qingdao, China, 22–24 October 2017; pp. 1–6.

28. Sun, J.; Gu, Q.; Zheng, T.; Dong, P.; Qin, Y. Joint communication and computing resource allocation in vehicular edge computing. Int. J. Distrib. Sens. Netw. 2019, 15.

29. Yang, C.; Liu, Y.; Chen, X.; Zhong, W.; Xie, S. Efficient Mobility-Aware Task Offloading for Vehicular Edge Computing Networks. IEEE Access 2019, 7, 26652–26664.

30. Junhui, Z.; Qiuping, L.; Yi, G.; Ke, Z. Computation Offloading and Resource Allocation for Cloud Assisted Mobile Edge Computing in Vehicular Networks. IEEE Trans. Veh. Technol. 2019, 8, 1320–1323.

31. Dai, Y.; Xu, D.; Maharjan, S.; Zhang, Y. Joint load balancing and offloading in vehicular edge computing and networks. IEEE Internet Things J. 2019, 6, 4377–4387.

32. Hou, X.; Li, Y.; Chen, M.; Wu, D.; Jin, D.; Chen, S. Vehicular Fog Computing: A Viewpoint of Vehicles as the Infrastructures. IEEE Trans. Veh. Technol. 2016, 65, 3860–3873.

33. Kai, K.; Cong, W.; Tao, L. Fog computing for vehicular Ad-hoc networks: Paradigms, scenarios, and issues. J. China Univ. Posts Telecommun. 2016, 23, 56–65.

34. Ning, Z.; Huang, J.; Wang, X. Vehicular fog computing: Enabling real-time traffic management for smart cities. IEEE Wirel. Commun. 2019, 26, 87–93.

35. Klaimi, J.; Senouci, S.M.; Messous, M.A. Theoretical Game Approach for Mobile Users Resource Management in a Vehicular Fog Computing Environment. In Proceedings of the 2018 14th International Wireless Communications and Mobile Computing Conference, IWCMC 2018, Limassol, Cyprus, 25–29 June 2018; IEEE: Piscataway Township, NJ, USA, 2018; pp. 452–457.

36. Wang, Z.; Zhong, Z.; Ni, M. Application-aware offloading policy using SMDP in vehicular fog computing systems. In Proceedings of the 2018 IEEE International Conference on Communications Workshops, ICC Workshops 2018-Proceedings, Kansas City, MO, USA, 20–24 May 2018; IEEE: Piscataway Township, NJ, USA, 2018; pp. 1–6.

37. Zhou, Z.; Liu, P.; Feng, J.; Zhang, Y.; Mumtaz, S.; Rodriguez, J. Computation Resource Allocation and Task Assignment Optimization in Vehicular Fog Computing: A Contract-Matching Approach. IEEE Trans. Veh. Technol. 2019, 68, 3113–3125.

38. Zhang, Y.; Wang, C.Y.; Wei, H.Y. Parking Reservation Auction for Parked Vehicle Assistance in Vehicular Fog Computing. IEEE Trans. Veh. Technol. 2019, 68, 3126–3139.

39. Wu, Q.; Liu, H.; Wang, R.; Fan, P.; Fan, Q.; Li, Z. Delay-Sensitive Task Offloading in the 802.11p-Based Vehicular Fog Computing Systems. IEEE Internet Things J. 2020, 7, 773–785.

40. Du, H.; Leng, S.; Wu, F.; Chen, X.; Mao, S. A New Vehicular Fog Computing Architecture for Cooperative Sensing of Autonomous Driving. IEEE Access 2020, 8, 10997–11006.

41. Xie, J.; Jia, Y.; Chen, Z.; Nan, Z.; Liang, L. Efficient task completion for parallel offloading in vehicular fog computing. China Commun. 2019, 16, 42–55.

42. Mengistu, T.M.; Che, D. Survey and taxonomy of volunteer computing. ACM Comput. Surv. 2019, 52.

43. Amjid, A.; Khan, A.; Shah, M.A. VANET-Based Volunteer Computing (VBVC): A Computational Paradigm for Future Autonomous Vehicles. IEEE Access 2020, 8, 71763–71774.

44. Wang, Y.; Duan, X.; Tian, D.; Lu, G.; Yu, H. Throughput and Delay Limits of 802.11p and its Influence on Highway Capacity. In Proceedings of the Procedia-Social and Behavioral Sciences; Elsevier B.V.: Amsterdam, The Netherlands, 2013; Volume 96, pp. 2096–2104.

45. Mahn, T.; Wirth, M.; Klein, A. Game Theoretic Algorithm for Energy Efficient Mobile Edge Computing with Multiple Access Points. In Proceedings of the 2020 8th IEEE International Conference on Mobile Cloud Computing, Services, and Engineering, MobileCloud, Oxford, MS, USA, 13–16 April 2020; pp. 31–38.

46. Ren, J.; Yu, G.; He, Y.; Li, G.Y. Collaborative Cloud and Edge Computing for Latency Minimization. IEEE Trans. Veh. Technol. 2019, 68, 5031–5044.

47. Zhang, J.; Guo, H.; Liu, J.; Zhang, Y. Task Offloading in Vehicular Edge Computing Networks: A Load-Balancing Solution. IEEE Trans. Veh. Technol. 2020, 69, 2092–2104.

48. Raza, S.; Liu, W.; Ahmed, M.; Anwar, M.R.; Mirza, M.A.; Sun, Q.; Wang, S. An efficient task offloading scheme in vehicular edge computing. J. Cloud Comput. 2020, 9.

49. Miettinen, A.P.; Nurminen, J.K. Energy efficiency of mobile clients in cloud computing. In Proceedings of the 2nd USENIX Workshop on Hot Topics in Cloud Computing, Boston, MA, USA, 22 June 2010. HotCloud: 2010.

| Notation | Description |
|---|---|
| | Set/number of volunteer vehicles |
| | Distance of node from the boundary |
| | Distance between vehicle and RSU |
| | Data transmission rate for the wireless channel between V2V and V2R |
| | Communication range of an RSU |
| | A tuple representing the task allocated to vehicle |
| | Identity of the task sent to vehicle |
| | Output data size |
| | Input size of the task allocated to vehicle |
| | Required computational resources for computing task |
| | The computational capability of the volunteer vehicle |
| | The computational capability of the cloud |
| | The computational capability of the edge |
| | Time taken by a task to complete execution on an OBU |
| | Makespan for job j |
| | Average execution time for all m jobs |
| | The total time taken for a task from transmission time to completion of task |
| | Number of jobs for vehicle |
| | The number of tasks a vehicle has for execution |
| | Objective functions |
| | Constants used for differentiation of available computation capabilities |
| | Link expiration time |
| | Vehicle |
| | System utility function |

| Notation | Description | Values |
|---|---|---|
| BF | Hello packet size | 20 B |
| TA | Data packet size | 1000 B |
| | Input data size [47] | [400,1000] Kb |
| | Output data size [47] | [50,200] Kb |
| | Output data size for | [2,10] Kb |
| | Vehicle computation capacity [47] | CPU cycles/s |
| | Edge computation capacity [46] | CPU cycles/s |
| | Cloud computation capacity [46] | CPU cycles/s |
| | Backhaul link capacity [46] | [, ] bits/s |
| | Communication Range of vehicles [48] | 150 m |
| | Communication Range of RSU [48] | 200 m |
| | Computation resource cost at cloud [30] | $0.015/GHz |
| | Computation resource cost at edge [30] | $0.03/GHz |
| | Task computational requirements [47] | 1500 CPU cycles per bit |
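As a rough, illustrative check on the workload these parameters imply, the per-task CPU demand can be taken as the input size multiplied by the per-bit requirement. The symbols $D$, $c$, and $C$ are labels chosen here for illustration, not the paper's notation, and the conversion 1 Kb = 1000 bits is an assumption, since the unit convention is not stated.

```latex
\begin{align}
C &= D \cdot c, \qquad c = 1500~\text{cycles/bit} \\
C_{\min} &= 4 \times 10^{5}~\text{bits} \times 1500~\text{cycles/bit} = 6 \times 10^{8}~\text{cycles} \\
C_{\max} &= 1 \times 10^{6}~\text{bits} \times 1500~\text{cycles/bit} = 1.5 \times 10^{9}~\text{cycles}
\end{align}
```

Dividing $C$ by a computation capacity in CPU cycles/s gives the corresponding execution time on that resource.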


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Waheed, A.; Shah, M.A.; Khan, A.; Maple, C.; Ullah, I. Hybrid Task Coordination Using Multi-Hop Communication in Volunteer Computing-Based VANETs. Sensors 2021, 21, 2718. https://doi.org/10.3390/s21082718