Tomographic Proximity Imaging Using Conductive Sheet for Object Tracking

Abstract

1. Introduction

The objectives of this study are as follows:

- To present an improved version of the proximity imaging method proposed in [21] for object-tracking applications.

- To develop a proximity imaging sensor using a low-cost conductive sheet and evaluate its proximity and horizontal position estimation accuracy.

- To implement a hand-tracking demonstration as a potential application of the proposed system.

2. Methods

2.1. Overview

2.2. Forward Problem

2.3. Inverse Problem

2.3.1. Jacobian Matrix

2.3.2. Regularization
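The Jacobian and regularization steps are not spelled out in this excerpt. As a hedged illustration only, the sketch below shows a generic one-step linearized reconstruction of the kind common in the EIT literature (see, e.g., [24]): given a Jacobian J (sensitivity of the measured voltages to each element's conductivity) and a measured voltage change Δv, it returns Δσ = (JᵀJ + λI)⁻¹JᵀΔv. The identity regularization matrix, the value of λ, the toy inputs, and the function name `tikhonov_solve` are assumptions for illustration, not the authors' choices.

```python
def tikhonov_solve(J, dv, lam):
    """Return x minimizing ||J x - dv||^2 + lam * ||x||^2 (hypothetical helper).

    J is an m x n matrix given as a list of rows, dv a list of length m.
    Solves the regularized normal equations (J^T J + lam * I) x = J^T dv
    by Gaussian elimination with partial pivoting. With lam = 0 and a
    rank-deficient J the system is singular and a ZeroDivisionError occurs.
    """
    m, n = len(J), len(J[0])
    # Build A = J^T J + lam * I and b = J^T dv.
    A = [[sum(J[k][i] * J[k][j] for k in range(m)) + (lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(J[k][i] * dv[k] for k in range(m)) for i in range(n)]
    # Forward elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (b[i] - sum(A[i][j] * x[j] for j in range(i + 1, n))) / A[i][i]
    return x


# Toy 3-measurement, 2-element example (values are made up).
J = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
dv = [1.0, 2.0, 3.0]
print(tikhonov_solve(J, dv, 0.0))  # unregularized least squares: [1.0, 2.0]
print(tikhonov_solve(J, dv, 1.0))  # regularization shrinks the estimate
```

Increasing λ trades data fit for stability, which is why the choice of this hyperparameter matters in practice [25].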

2.4. Proximity Mapping and Calibration

3. Implementation

3.1. Sensor Construction

3.2. Reconstruction Solver

4. Experiments

4.1. Performance Evaluation

4.1.1. Testing Device

4.1.2. Metrics

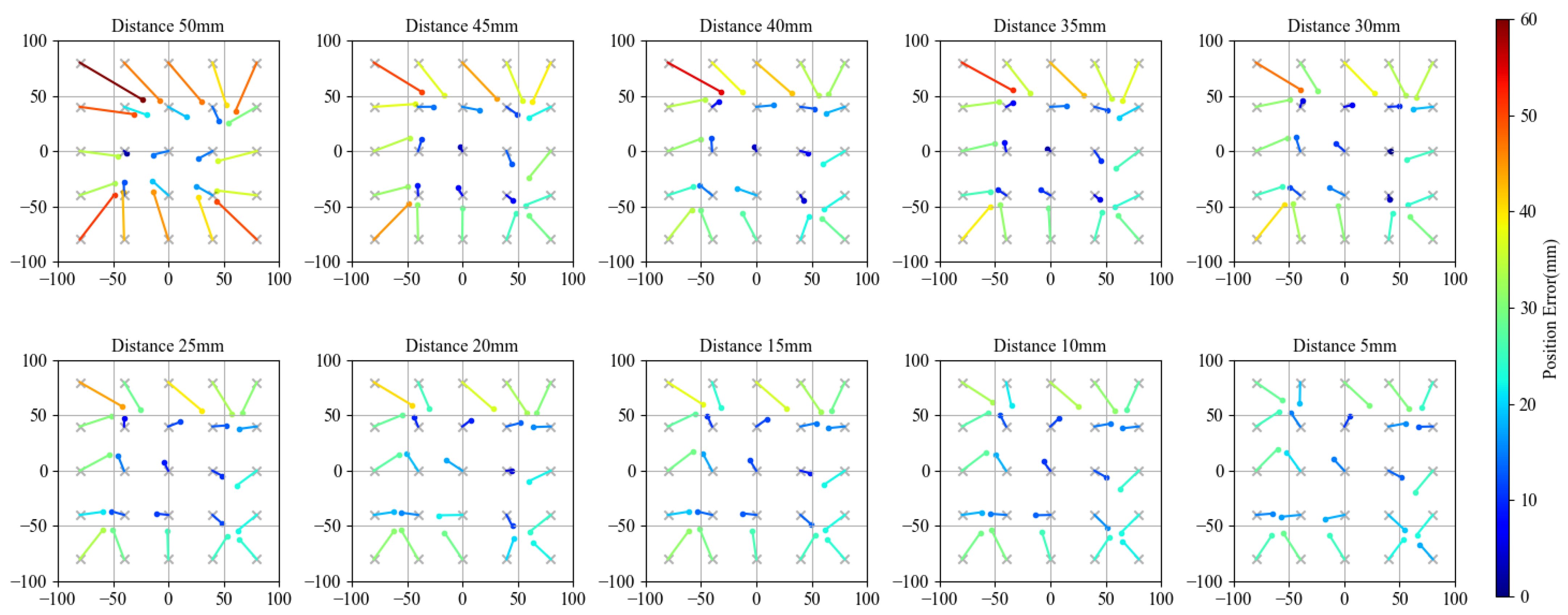

4.1.3. Results

4.2. Hand-Tracking Application

4.2.1. Measuring Device

4.2.2. Results

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ciaparrone, G.; Sánchez, F.L.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep learning in video multi-object tracking: A survey. Neurocomputing 2020, 381, 61–88.
2. Hu, K.; Ye, J.; Fan, E.; Shen, S.; Huang, L.; Pi, J. A novel object tracking algorithm by fusing color and depth information based on single valued neutrosophic cross-entropy. J. Intell. Fuzzy Syst. 2017, 32, 1775–1786.
3. Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1328–1338.
4. Palinko, O.; Rea, F.; Sandini, G.; Sciutti, A. Robot reading human gaze: Why eye tracking is better than head tracking for human-robot collaboration. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems, Daejeon, Korea, 9–14 October 2016; pp. 5048–5054.
5. Hubmann, C.; Becker, M.; Althoff, D.; Lenz, D.; Stiller, C. Decision making for autonomous driving considering interaction and uncertain prediction of surrounding vehicles. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium, Los Angeles, CA, USA, 11–14 June 2017; pp. 1671–1678.
6. Milford, P.N. Augmented Reality Proximity Sensing. U.S. Patent 9606612B2, 28 March 2017.
7. Hsiao, K.; Nangeroni, P.; Huber, M.; Saxena, A.; Ng, A.Y. Reactive grasping using optical proximity sensors. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 2098–2105.
8. Kan, W.; Huang, Y.; Zeng, X.; Guo, X.; Liu, P. A dual-mode proximity sensor with combination of inductive and capacitive sensing units. Sens. Rev. 2018, 38, 199–206.
9. Li, N.; Zhu, H.; Wang, W.; Gong, Y. Parallel double-plate capacitive proximity sensor modelling based on effective theory. AIP Adv. 2014, 4, 1–9.
10. Hu, X.; Yang, W. Planar capacitive sensors–designs and applications. Sens. Rev. 2010, 30, 24–39.
11. Nguyen, T.D.; Kim, T.; Noh, J.; Phung, H.; Kang, G.; Choi, H.R. Skin-Type Proximity Sensor by Using the Change of Electromagnetic Field. IEEE Trans. Ind. Electron. 2021, 68, 2379–2388.
12. Zhang, Y.; Yang, C.; Hudson, S.E.; Harrison, C.; Sample, A. Wall++: Room-Scale Interactive and Context-Aware Sensing. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–15.
13. Ye, Y.; He, C.; Liao, B.; Qian, G. Capacitive proximity sensor array with a simple high sensitivity capacitance measuring circuit for human–computer interaction. IEEE Sens. J. 2018, 18, 5906–5914.
14. Nagakubo, A.; Alirezaei, H.; Kuniyoshi, Y. A deformable and deformation sensitive tactile distribution sensor. In Proceedings of the 2007 IEEE International Conference on Robotics and Biomimetics, Sanya, China, 15–18 December 2007; pp. 1301–1308.
15. Kato, Y.; Mukai, T.; Hayakawa, T.; Shibata, T. Tactile sensor without wire and sensing element in the tactile region based on EIT method. In Proceedings of the SENSORS (2007 IEEE), Atlanta, GA, USA, 28–31 October 2007; pp. 792–795.
16. Yao, A.; Yang, C.L.; Seo, J.K.; Soleimani, M. EIT-Based Fabric Pressure Sensing. Comput. Math. Methods Med. 2013, 2013, 1999.
17. Russo, S.; Nefti-Meziani, S.; Carbonaro, N.; Tognetti, A. A quantitative evaluation of drive pattern selection for optimizing EIT-based stretchable sensors. Sensors 2017, 17, 1999.
18. Yoshimoto, S.; Kuroda, Y.; Oshiro, O. Tomographic approach for universal tactile imaging with electromechanically coupled conductors. IEEE Trans. Ind. Electron. 2018, 67, 627–636.
19. Mühlbacher-Karrer, S.; Zangl, H. Object detection based on electrical capacitance tomography. In Proceedings of the 2015 IEEE Sensors Applications Symposium (SAS), Zadar, Croatia, 13–15 April 2015; pp. 1–5.
20. Mühlbacher-Karrer, S.; Zangl, H. Detection of conductive objects with electrical capacitance tomography. In Proceedings of the 2016 IEEE SENSORS, Orlando, FL, USA, 30 October–3 November 2016; pp. 1–3.
21. Li, Z.; Yoshimoto, S.; Yamamoto, A. Tomographic Approach for Proximity Imaging using Conductive Sheet. In Proceedings of the IECON 2020 The 46th Annual Conference of the IEEE Industrial Electronics Society, Singapore, 18–21 October 2020; pp. 748–753.
22. Cheney, M.; Isaacson, D.; Newell, J.C. Electrical impedance tomography. SIAM Rev. 1999, 41, 85–101.
23. Silvera-Tawil, D.; Rye, D.; Soleimani, M.; Velonaki, M. Electrical impedance tomography for artificial sensitive robotic skin: A review. IEEE Sens. J. 2014, 15, 2001–2016.
24. Lionheart, W.; Polydorides, N.; Borsic, A. The Reconstruction Problem; CRC Press: Boca Raton, FL, USA, 2004; pp. 3–64.
25. Graham, B.; Adler, A. Objective selection of hyperparameter for EIT. Physiol. Meas. 2006, 27, S65–S79.
26. Knudsen, K.; Lassas, M.; Mueller, J.L.; Siltanen, S. Regularized D-bar method for the inverse conductivity problem. Inverse Probl. Imaging 2009, 3, 599.
27. Hamilton, S.J.; Hauptmann, A. Deep D-bar: Real-time electrical impedance tomography imaging with deep neural networks. IEEE Trans. Med. Imaging 2018, 37, 2367–2377.
28. Lin, Z.; Guo, R.; Zhang, K.; Li, M.; Yang, F.; Xu, S.; Abubakar, A. Neural network-based supervised descent method for 2D electrical impedance tomography. Physiol. Meas. 2020, 41, 074003.
29. Martin, S.; Choi, C.T.M. Nonlinear Electrical Impedance Tomography Reconstruction Using Artificial Neural Networks and Particle Swarm Optimization. IEEE Trans. Magn. 2016, 52, 1–4.
30. Liu, S.; Wu, H.; Huang, Y.; Yang, Y.; Jia, J. Accelerated structure-aware sparse Bayesian learning for three-dimensional electrical impedance tomography. IEEE Trans. Ind. Inform. 2019, 15, 5033–5041.

| Distance (mm) | Object A (20 mm × 20 mm): RSD (%) | Object B (30 mm × 30 mm): RSD (%) | Object C (40 mm × 40 mm): RSD (%) | Object D (80 mm × 80 mm): RSD (%) |
|---|---|---|---|---|
| 5 | 20.5 | 21.9 | 22.2 | 27.5 |
| 10 | 6.0 | 6.5 | 7.9 | 8.3 |
| 15 | 4.9 | 4.2 | 7.9 | 6.4 |
| 20 | 9.1 | 7.2 | 7.3 | 8.9 |
| 25 | 6.3 | 5.5 | 5.2 | 8.5 |
| 30 | 5.9 | 6.5 | 6.4 | 8.3 |
| 35 | 8.1 | 6.2 | 9.8 | 8.6 |
| 40 | 11.5 | 10.2 | 11.7 | 7.8 |
| 45 | 9.1 | 9.3 | 12.0 | 12.6 |
| 50 | 10.2 | 10.9 | 10.9 | 10.9 |
| Detection range (mm) | 50 | 60 | 70 | 90 |
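The RSD (%) values in the table above are relative standard deviations. A minimal sketch of the usual definition, RSD = 100 × s/|mean|, is shown below. It assumes the samples are repeated proximity estimates recorded at one nominal distance and that the sample (n − 1) standard deviation is used; neither detail is stated in this excerpt, and the input values are hypothetical.

```python
import statistics


def relative_std_percent(samples):
    """Relative standard deviation (RSD), in percent, of repeated estimates.

    RSD = 100 * s / |mean|, where s is the sample standard deviation
    (n - 1 denominator). Using the sample rather than the population form
    is an assumption; the source excerpt does not say which was used.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(samples)
    if mean == 0:
        raise ValueError("RSD is undefined when the mean is zero")
    return 100.0 * statistics.stdev(samples) / abs(mean)


# Hypothetical repeated proximity estimates (mm) at one nominal distance.
estimates = [9.0, 10.0, 11.0]
print(relative_std_percent(estimates))  # 10.0
```

Because RSD is normalized by the mean, it allows the spread at different distances to be compared on one scale.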

| Distance (mm) | Object A (20 mm × 20 mm): Mean (mm) | Object A: STD (mm) | Object B (30 mm × 30 mm): Mean (mm) | Object B: STD (mm) | Object C (40 mm × 40 mm): Mean (mm) | Object C: STD (mm) | Object D (80 mm × 80 mm): Mean (mm) | Object D: STD (mm) |
|---|---|---|---|---|---|---|---|---|
| 5 | 21.518 | 8.806 | 20.806 | 7.316 | 19.935 | 7.644 | 20.725 | 6.860 |
| 10 | 22.241 | 8.681 | 22.676 | 7.769 | 21.999 | 10.089 | 21.414 | 8.833 |
| 15 | 24.601 | 11.214 | 22.909 | 8.696 | 26.662 | 14.314 | 22.067 | 10.144 |
| 20 | 28.592 | 14.277 | 21.983 | 9.313 | 24.732 | 9.994 | 22.504 | 10.675 |
| 25 | 28.030 | 12.690 | 25.554 | 12.157 | 23.756 | 11.524 | 22.815 | 11.779 |
| 30 | 27.743 | 13.933 | 26.624 | 13.009 | 25.251 | 13.458 | 24.401 | 13.887 |
| 35 | 30.909 | 15.684 | 26.621 | 12.205 | 31.335 | 15.177 | 25.189 | 14.571 |
| 40 | 37.804 | 19.256 | 26.554 | 11.726 | 29.406 | 13.474 | 24.721 | 13.829 |
| 45 | 34.174 | 15.813 | 39.648 | 19.574 | 29.178 | 16.170 | 27.489 | 14.647 |
| 50 | 38.869 | 17.132 | 39.191 | 21.504 | 30.349 | 16.173 | 36.275 | 16.522 |

| Distance (mm) | Object A (20 mm × 20 mm): x axis (mm) | Object A: y axis (mm) | Object B (30 mm × 30 mm): x axis (mm) | Object B: y axis (mm) | Object C (40 mm × 40 mm): x axis (mm) | Object C: y axis (mm) | Object D (80 mm × 80 mm): x axis (mm) | Object D: y axis (mm) |
|---|---|---|---|---|---|---|---|---|
| 5 | 0.826 | 0.915 | 0.503 | 0.555 | 0.331 | 0.364 | 0.676 | 0.845 |
| 10 | 1.282 | 1.388 | 0.731 | 0.741 | 0.583 | 0.648 | 0.827 | 1.025 |
| 15 | 1.558 | 1.503 | 1.601 | 1.192 | 0.765 | 0.861 | 1.187 | 1.461 |
| 20 | 1.805 | 1.759 | 1.424 | 1.737 | 1.829 | 1.917 | 1.339 | 1.544 |
| 25 | 2.328 | 2.400 | 1.481 | 1.573 | 1.877 | 1.483 | 1.654 | 1.838 |
| 30 | 3.030 | 2.701 | 1.669 | 1.993 | 1.188 | 1.453 | 1.560 | 1.809 |
| 35 | 3.545 | 2.907 | 2.221 | 2.919 | 1.735 | 1.878 | 2.227 | 2.650 |
| 40 | 2.995 | 2.540 | 2.544 | 2.524 | 2.266 | 2.406 | 2.268 | 2.650 |
| 45 | 3.770 | 4.120 | 2.903 | 2.679 | 3.370 | 2.943 | 2.597 | 2.871 |
| 50 | 4.217 | 3.776 | 3.052 | 2.842 | 2.434 | 2.550 | 2.528 | 2.460 |

| Experiment Set | Set 1 | Set 2 | Set 3 |
|---|---|---|---|
| Arm Direction | | | |
| Mean Distance (mm) | 46.66 | 33.38 | 45.14 |
| Mean X Difference (mm) | 32.45 | 18.7 | 21.7 |
| Mean Y Difference (mm) | 10.62 | 21.4 | 23.2 |
| Mean Z Difference (mm) | 6.31 | 9.5 | 10.4 |
| X Correlation Coefficient | 0.905 | 0.954 | 0.892 |
| Y Correlation Coefficient | 0.949 | 0.981 | 0.907 |
| Z Correlation Coefficient | 0.851 | 0.933 | 0.725 |
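The hand-tracking table reports, per axis, a mean difference and a correlation coefficient between the tracked position and a reference. The sketch below gives standard definitions of both. It assumes that "difference" means the mean absolute difference and that the coefficient is Pearson's r computed over time-aligned samples; the reference measurement system and the alignment procedure are not described in this excerpt, and the sample coordinates are hypothetical.

```python
import math


def mean_abs_difference(estimated, reference):
    """Mean absolute difference between two equally long, time-aligned sequences."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("sequences must be non-empty and equally long")
    return sum(abs(e - r) for e, r in zip(estimated, reference)) / len(estimated)


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical x coordinates (mm): sensor estimate vs. reference tracker.
estimated_x = [10.0, 20.0, 30.0, 40.0]
reference_x = [12.0, 19.0, 33.0, 41.0]
print(mean_abs_difference(estimated_x, reference_x))  # 1.75
print(pearson_r(estimated_x, reference_x))
```

The two metrics are complementary: a constant offset between estimate and reference raises the mean difference but leaves r unchanged, so a high r with a large mean difference points to a bias rather than poor tracking of the motion.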


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Yoshimoto, S.; Yamamoto, A. Tomographic Proximity Imaging Using Conductive Sheet for Object Tracking. Sensors 2021, 21, 2736. https://doi.org/10.3390/s21082736
