An Artificial Intelligence of Things-Based Picking Algorithm for Online Shop in the Society 5.0’s Context

Abstract

1. Introduction

- A REST API is used to query the online shop for the most recent transaction, and a selective data-driven mode for YOLOv2, driven by the “data/last_transaction” data, is proposed.

- The risk of the manipulator colliding with the shop shelves is considered. It is addressed with a modified selective YOLOv2 technique that classifies each shelf edge as a forbidden point cloud, so that the manipulator avoids every shelf edge.

- The AIoT-based picking algorithm is implemented and evaluated specifically under robotic manipulator conditions; it provides a reference for eye-in-hand manipulator systems in the Society 5.0 context in terms of comfort and safety.
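The selective data-driven mode in the first contribution can be pictured with a minimal sketch. The endpoint name “data/last_transaction” comes from the text; the JSON field names (`items`, `product`, `quantity`), the sample payload, and both helper functions are hypothetical and only illustrate the idea of letting the last transaction decide which detections are kept.

```python
import json

# Hypothetical payload for "data/last_transaction"; the field names are
# assumptions for illustration, not the shop's actual schema.
SAMPLE_RESPONSE = """
{
  "transaction_id": 1024,
  "items": [
    {"product": "Master Sardines", "quantity": 1},
    {"product": "Tai Lemon Tea", "quantity": 2}
  ]
}
"""


def purchased_classes(response_text):
    """Return purchased product names in order, without duplicates."""
    payload = json.loads(response_text)
    names = []
    for item in payload.get("items", []):
        name = item["product"]
        if item.get("quantity", 0) > 0 and name not in names:
            names.append(name)
    return names


def select_detections(detections, wanted):
    """Keep only detections whose label was purchased (the selective step)."""
    wanted_set = set(wanted)
    return [d for d in detections if d["label"] in wanted_set]


wanted = purchased_classes(SAMPLE_RESPONSE)

# Detector output is mocked here; in practice it would come from the detector.
detections = [
    {"label": "Master Sardines", "score": 0.95},
    {"label": "Lageo Wafers", "score": 0.91},
    {"label": "Tai Lemon Tea", "score": 0.88},
]
selected = select_detections(detections, wanted)
print([d["label"] for d in selected])
```

In a deployed system the response text would come from an HTTP request to the shop rather than from a constant; the network call is left out so the sketch stays self-contained.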

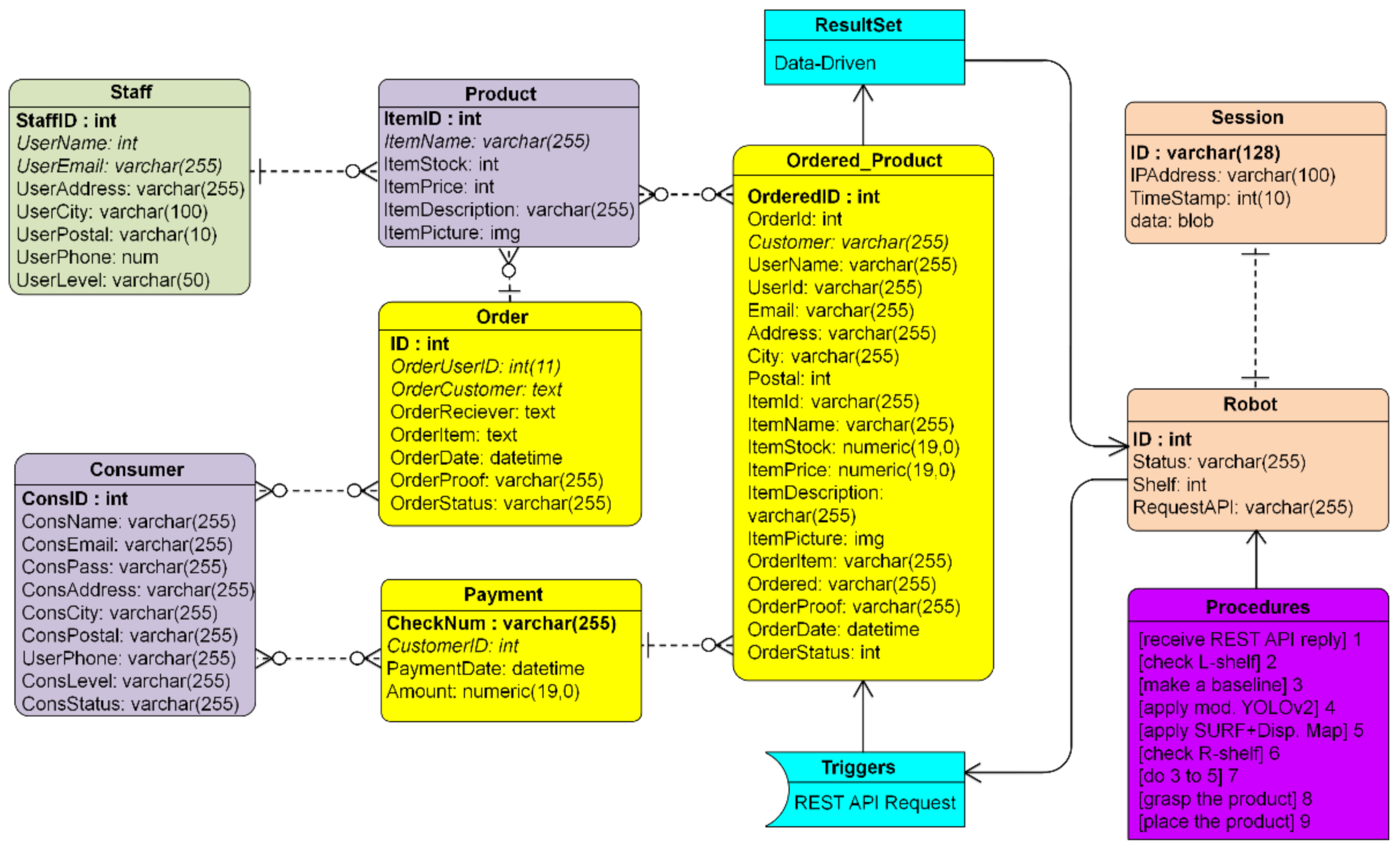

2. System Design

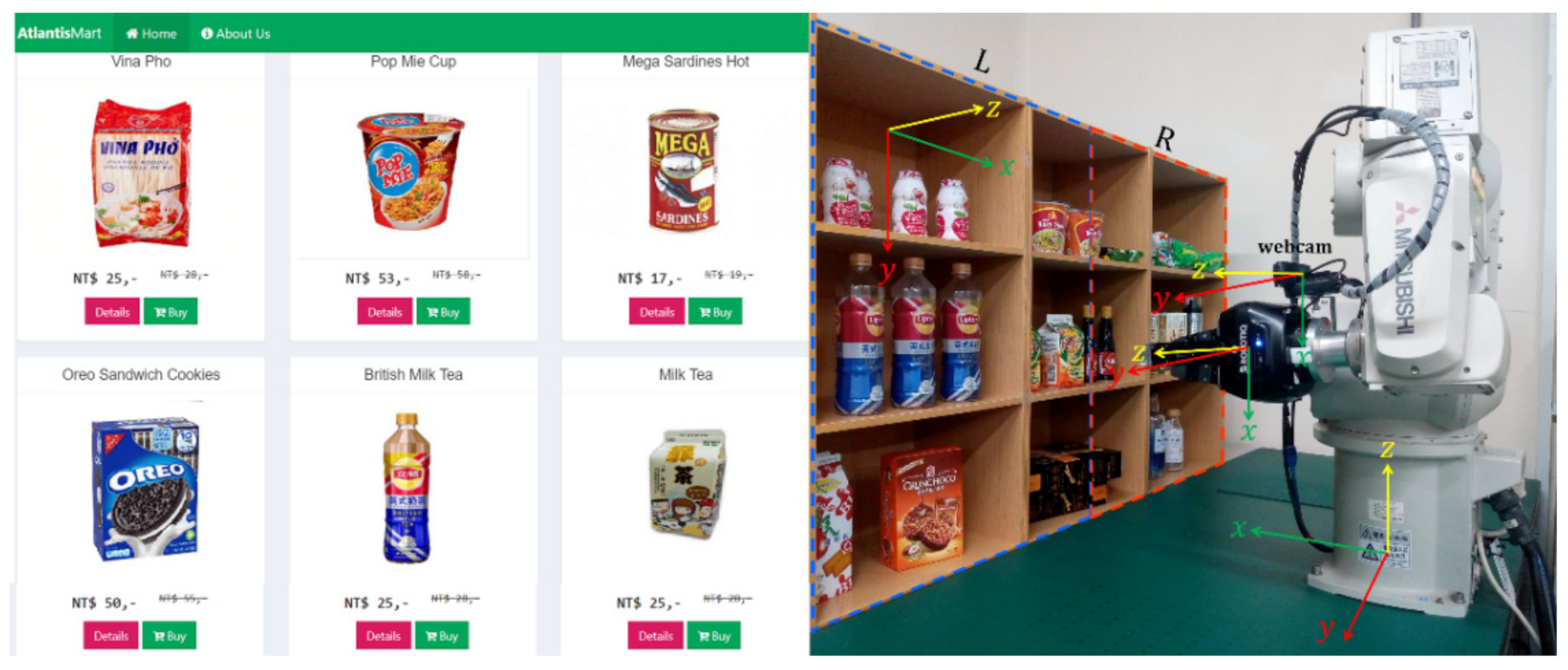

3. Online Shop in Society 5.0

3.1. Online Shop

3.2. Society 5.0’s Context

3.3. AIoT with Data-Driven Mode

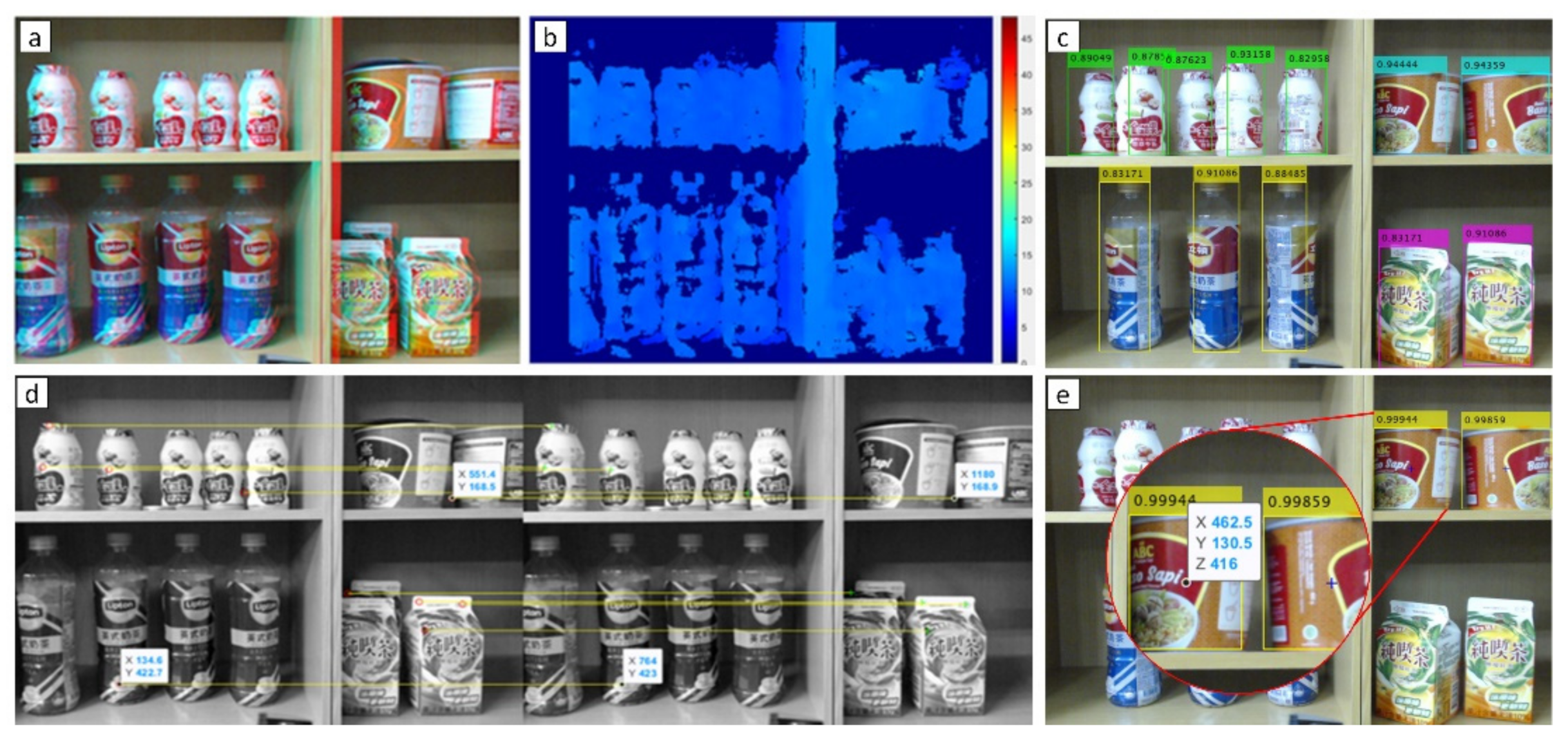

3.4. Purchased Products Recognition

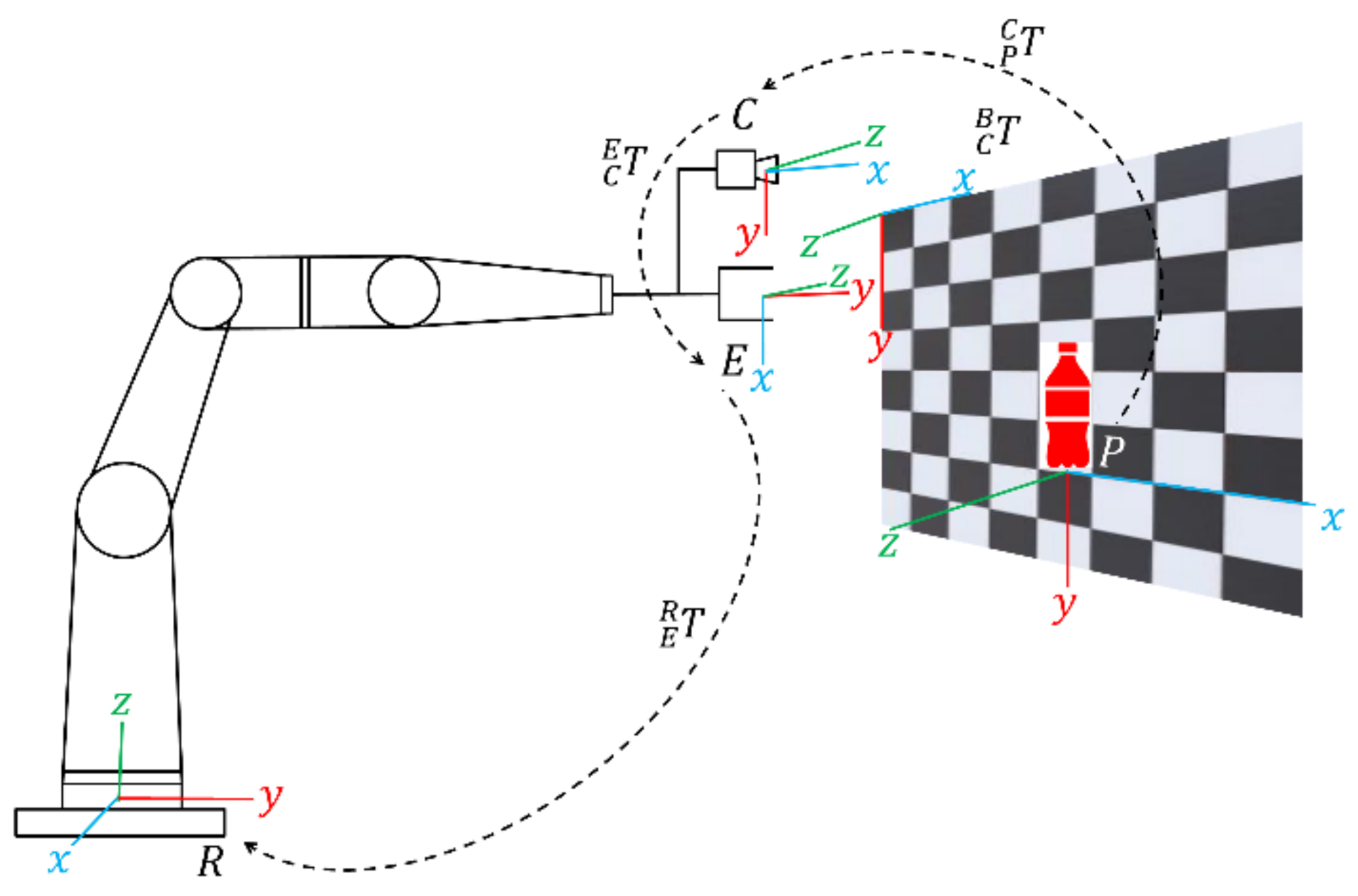

3.5. Localization for Recognized Products

3.5.1. SURF with Disparity Map

3.5.2. A Half Shelf System

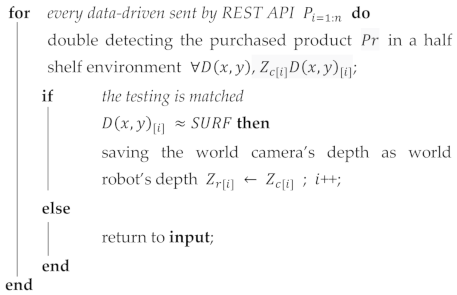

| Algorithm 1 | Search Purchased Product in a Half Shelf |
|---|---|
| Predefined | a product in a half shelf system frame |
| Input | the REST API data-driven mode |

3.5.3. Shelf to World Transformation

4. Picking Algorithm for Offline Shop

4.1. Purchased Products in Shelf

| Algorithm 2 | Ascertain Whether Purchased Product Is Within Multi-Detection, Overlapping, or Mixed Products |
|---|---|
| Predefined input | Algorithm 1 |
| for | each detected purchased product do |
| end | |
| function a | heading the gripper to the product; grasping the product; placing to home position. |
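The loop structure of Algorithm 2 can be sketched as follows. The body of the loop is not recoverable from this section, so the three decision rules below (overlap measured by intersection over union, multi-detection as a second box with the same label, mixed as a neighbouring box with a different label), the threshold `overlap_thr`, and all function names are assumptions made for illustration only; the three action names mirror the steps listed for function a.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def classify_case(target, others, overlap_thr=0.1):
    """Label one purchased detection; the rules are illustrative assumptions."""
    if any(iou(target["box"], o["box"]) > overlap_thr for o in others):
        return "overlapping"
    if any(o["label"] == target["label"] for o in others):
        return "multi-detection"
    if any(o["label"] != target["label"] for o in others):
        return "mixed"
    return "single"


def plan_picks(detections, purchased):
    """For each detected purchased product, record its case and pick steps."""
    steps = ["head_to_product", "grasp", "place_home"]  # function a
    plans = []
    for i, det in enumerate(detections):
        if det["label"] not in purchased:
            continue
        others = detections[:i] + detections[i + 1:]
        plans.append((det["label"], classify_case(det, others), steps))
    return plans


detections = [
    {"label": "Master Sardines", "box": (0, 0, 10, 10)},
    {"label": "Master Sardines", "box": (20, 0, 30, 10)},
    {"label": "Lageo Wafers", "box": (28, 0, 38, 10)},
]
plans = plan_picks(detections, {"Master Sardines"})
for label, case, steps in plans:
    print(label, case, steps)
```

Checking overlap first is a design choice of this sketch: a product that touches a neighbour is treated as the harder case even when it also belongs to a multi-detection.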

4.2. Grasping Purchased Product

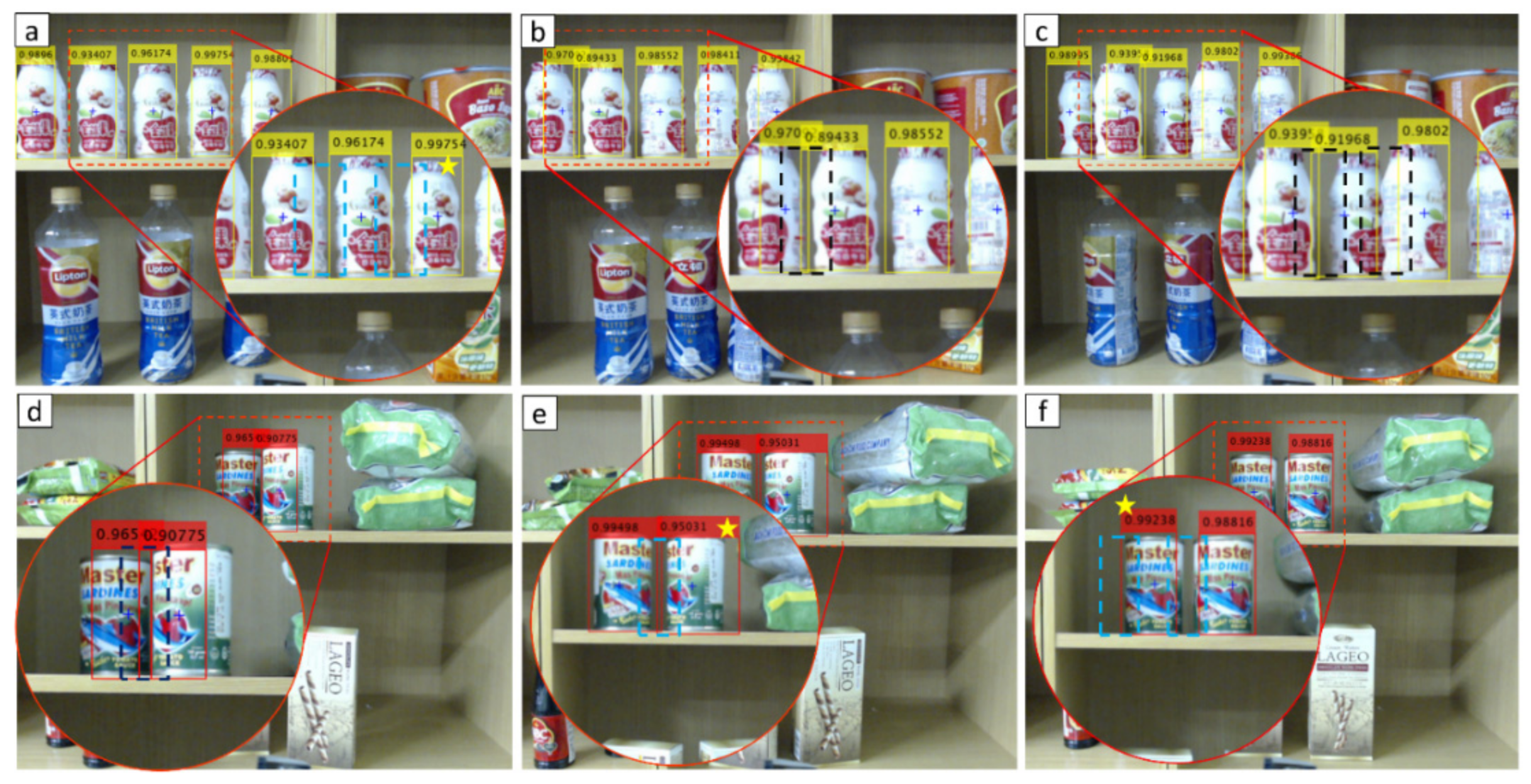

4.2.1. Multi-Detection

4.2.2. Overlapping

4.2.3. Mixed Products

5. Experimental Works

5.1. Experimental Settings

5.2. Evaluation of Detection Method

5.3. Experiments of AIoT for Grasping

5.4. AIoT Shop in Society 5.0 Evaluation

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Products | Size (mm) | Package | Weight (g) | Shape |
|---|---|---|---|---|
| ABC Soy Sweet Ketchup | 64 × 42 × 42 | bottle | 140 | S |
| British Milk Tea | 222 × 67 × 67 | bottle | 532 | S |
| Bun Gao Vermicelli | 120 × 220 × 62 | pouch | 200 | B |
| Cup Noodle ABC | 92 × 107 × 84 | cup | 65 | C |
| Golden Apple Yogurt | 106 × 52 × 52 | bottle | 175 | S |
| Indomie Soto Noodle | 130 × 92 × 32 | pouch | 76 | B |
| Lageo Wafers | 150 × 71 × 34 | box | 47 | B |
| Master Sardines | 86 × 52 × 52 | can | 176 | S |
| Tai Lemon Tea | 144 × 70 × 71 | box | 255 | B |

| Parameters | Specification |
|---|---|
| CPU | Intel Core i5 @ 3.0 GHz (6 CPUs) |
| Memory | 16 GB RAM |
| Operating system | Windows 10 |
| GPU | Onboard Intel UHD Graphics 630 |
| Camera | Logitech C920 |
| Robot arm | MELFA RV-3SD 6 DOF |
| Gripper | Robotiq 3 fingers |
| IDEs | MATLAB, PHP 7, Laravel |
| Database | MySQLi |
| Host | https://indoaltantis.com (1 GB) |
| Mobile compatibility | Android 6+, iOS |
| Browser compatibility | Chrome (recommended), Firefox, Opera, Microsoft Edge, and Safari |

| Class of Product | Method | Confidence | Accuracy | Precision | Recall | F1 | AP | Time (s) |
|---|---|---|---|---|---|---|---|---|
| A | modYOLOv2 | 0.942 | 0.990 | 0.990 | 1 | 0.995 | 0.990 | 0.055 |
| A | MobileNet2 | 0.905 | 0.963 | 0.963 | 1 | 0.981 | 0.941 | 0.157 |
| A | ResNet18 | 0.890 | 0.950 | 1 | 1 | 1 | 0.961 | 0.056 |
| B | modYOLOv2 | 0.938 | 0.972 | 0.971 | 1 | 0.985 | 0.927 | 0.054 |
| B | MobileNet2 | 0.626 | 0.925 | 1 | 1 | 1 | 0.958 | 0.053 |
| B | ResNet18 | 0.707 | 0.750 | 0.944 | 1 | 0.971 | 0.971 | 0.056 |
| C | modYOLOv2 | 0.852 | 0.990 | 1 | 0.989 | 0.994 | 0.771 | 0.053 |
| C | MobileNet2 | 0.768 | 0.740 | 1 | 0.958 | 0.978 | 0.827 | 0.087 |
| C | ResNet18 | 0.719 | 0.750 | 0.969 | 0.969 | 0.969 | 0.633 | 0.053 |
| D | modYOLOv2 | 0.925 | 0.990 | 0.990 | 1 | 0.995 | 0.990 | 0.054 |
| D | MobileNet2 | 0.864 | 0.963 | 0.963 | 1 | 0.981 | 0.909 | 0.053 |
| D | ResNet18 | 0.862 | 0.925 | 0.974 | 1 | 0.987 | 0.923 | 0.053 |
| E | modYOLOv2 | 0.885 | 0.854 | 0.854 | 1 | 0.921 | 0.754 | 0.053 |
| E | MobileNet2 | 0.818 | 0.777 | 0.777 | 1 | 0.875 | 0.650 | 0.054 |
| E | ResNet18 | 0.709 | 0.875 | 0.875 | 1 | 0.933 | 0.739 | 0.052 |
| F | modYOLOv2 | 0.922 | 0.981 | 0.981 | 1 | 0.990 | 0.979 | 0.054 |
| F | MobileNet2 | 0.708 | 0.963 | 0.963 | 1 | 0.981 | 0.998 | 0.053 |
| F | ResNet18 | 0.690 | 0.875 | 0.875 | 1 | 0.933 | 0.739 | 0.052 |
| G | modYOLOv2 | 0.875 | 0.945 | 0.990 | 0.954 | 0.971 | 0.867 | 0.055 |
| G | MobileNet2 | 0.641 | 0.851 | 1 | 0.851 | 0.920 | 0.776 | 0.058 |
| G | ResNet18 | 0.785 | 0.975 | 0.975 | 1 | 0.987 | 0.957 | 0.056 |
| H | modYOLOv2 | 0.952 | 0.981 | 1 | 0.981 | 0.990 | 0.907 | 0.056 |
| H | MobileNet2 | 0.841 | 0.851 | 1 | 0.923 | 0.960 | 0.749 | 0.062 |
| H | ResNet18 | 0.952 | 0.925 | 0.974 | 1 | 0.987 | 0.925 | 0.058 |
| I | modYOLOv2 | 0.917 | 1 | 1 | 1 | 1 | 1 | 0.056 |
| I | MobileNet2 | 0.868 | 0.925 | 1 | 1 | 1 | 0.9616 | 0.053 |
| I | ResNet18 | 0.742 | 0.950 | 0.974 | 0.974 | 0.974 | 1 | 0.055 |
| µ | modYOLOv2 | 0.912 | 0.967 | 0.975 | 0.992 | 0.982 | 0.909 | 0.054 |
| µ | MobileNet2 | 0.782 | 0.884 | 0.963 | 0.970 | 0.964 | 0.863 | 0.070 |
| µ | ResNet18 | 0.784 | 0.886 | 0.951 | 0.994 | 0.971 | 0.872 | 0.055 |
| σ | modYOLOv2 | 0.032 | 0.043 | 0.044 | 0.015 | 0.015 | 0.089 | 0.001 |
| σ | MobileNet2 | 0.097 | 0.079 | 0.068 | 0.049 | 0.039 | 0.112 | 0.032 |
| σ | ResNet18 | 0.089 | 0.079 | 0.043 | 0.012 | 0.022 | 0.124 | 0.002 |
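As a reading aid for the F1 column, the short sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; this section does not state the formula, so that definition and the function name `f1_score` are assumptions. It recomputes the modYOLOv2 entries for classes A and E from their tabulated precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) taken from the modYOLOv2 rows of the table above.
rows = {"A": (0.990, 1.0), "E": (0.854, 1.0)}

for cls, (p, r) in rows.items():
    print(cls, round(f1_score(p, r), 3))
```

Both values match the tabulated 0.995 and 0.921. Because the table lists rounded precision and recall, other rows recomputed this way may differ in the last digit.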

| Methods | Conditions | Num. of Successes | Num. of Failures | Success Rate | Ave. Rate |
|---|---|---|---|---|---|
| YOLOv2 | Single product | 74 | 14 | 0.841 | 0.807 |
| YOLOv2 | Mixed product | 69 | 19 | 0.773 | |
| Modified YOLOv2 | Single product | 77 | 11 | 0.875 | 0.835 |
| Modified YOLOv2 | Mixed product | 70 | 18 | 0.795 | |
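The success-rate columns can be read with a small worked example. It assumes that the success rate is successes divided by total attempts and that the average rate is the plain mean of the two conditions; neither definition is stated in this section, and the function name is illustrative. The counts are those of the Modified YOLOv2 rows.

```python
def success_rate(successes, failures):
    """Fraction of successful grasps among all attempts."""
    total = successes + failures
    return successes / total if total else 0.0


# Counts from the Modified YOLOv2 rows of the grasping table.
single = success_rate(77, 11)
mixed = success_rate(70, 18)
average = (single + mixed) / 2

print(round(single, 3), round(mixed, 3), round(average, 3))
```

Under these assumptions the sketch gives 0.875, 0.795, and 0.835, which agree with the Modified YOLOv2 entries.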

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Muslikhin, M.; Horng, J.-R.; Yang, S.-Y.; Wang, M.-S.; Awaluddin, B.-A. An Artificial Intelligence of Things-Based Picking Algorithm for Online Shop in the Society 5.0’s Context. Sensors 2021, 21, 2813. https://doi.org/10.3390/s21082813
