CricShotClassify: An Approach to Classifying Batting Shots from Cricket Videos Using a Convolutional Neural Network and Gated Recurrent Unit

Abstract

1. Introduction

- We developed CricShot10, a novel dataset of 10 different cricket shots. To the best of our knowledge, this is the largest number of distinct cricket shots addressed in a single work.

- We designed a custom convolutional neural network (CNN)–recurrent neural network (RNN) architecture to classify the 10 different cricket shots.

- We investigated the impact of different types of convolution (standard and dilated) on our dataset.

- We evaluated several transfer-learning models (InceptionV3, Xception, DenseNet169, and VGG16) for classifying batting shots.

2. Related Work

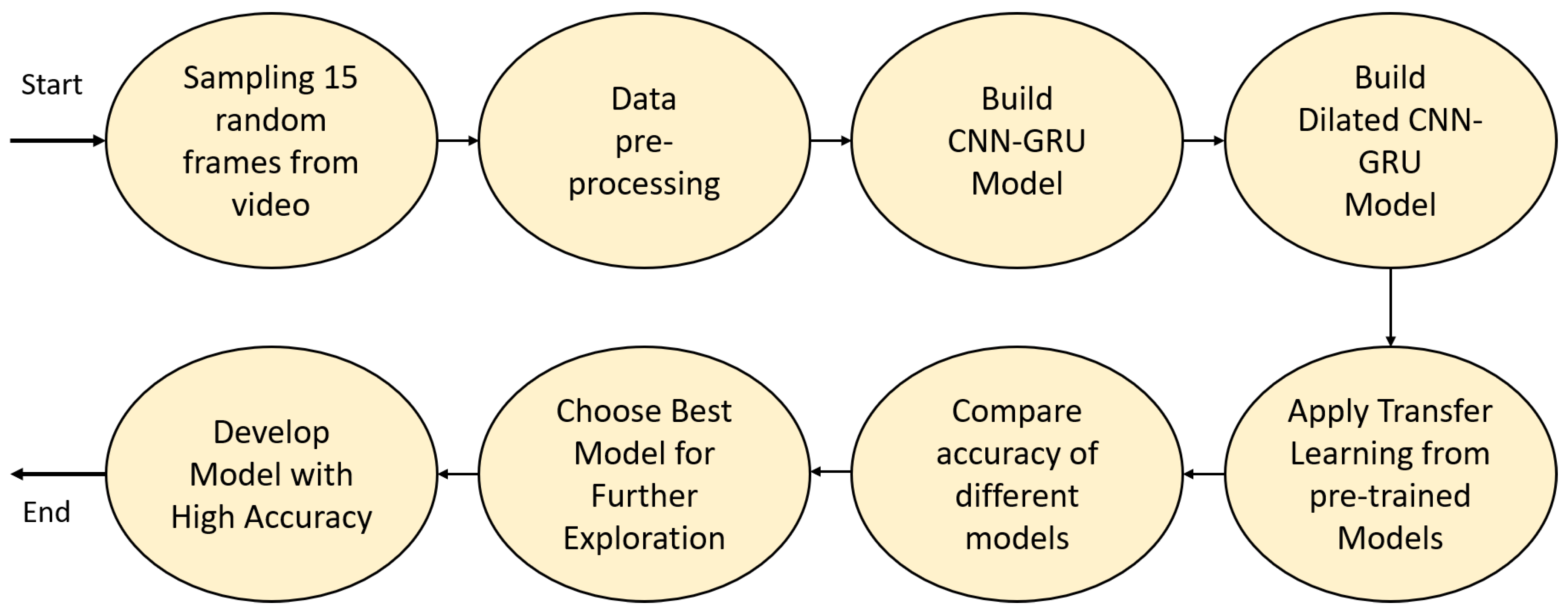

3. Workflow of Proposed Architecture

3.1. Custom CNN–GRU Architecture

3.2. Custom DCNN–GRU Architecture

3.3. Transfer-Learning-Based Proposed Architecture

3.4. Proposed VGG-Based Architecture

4. Results and Discussion

4.1. Hardware Configuration

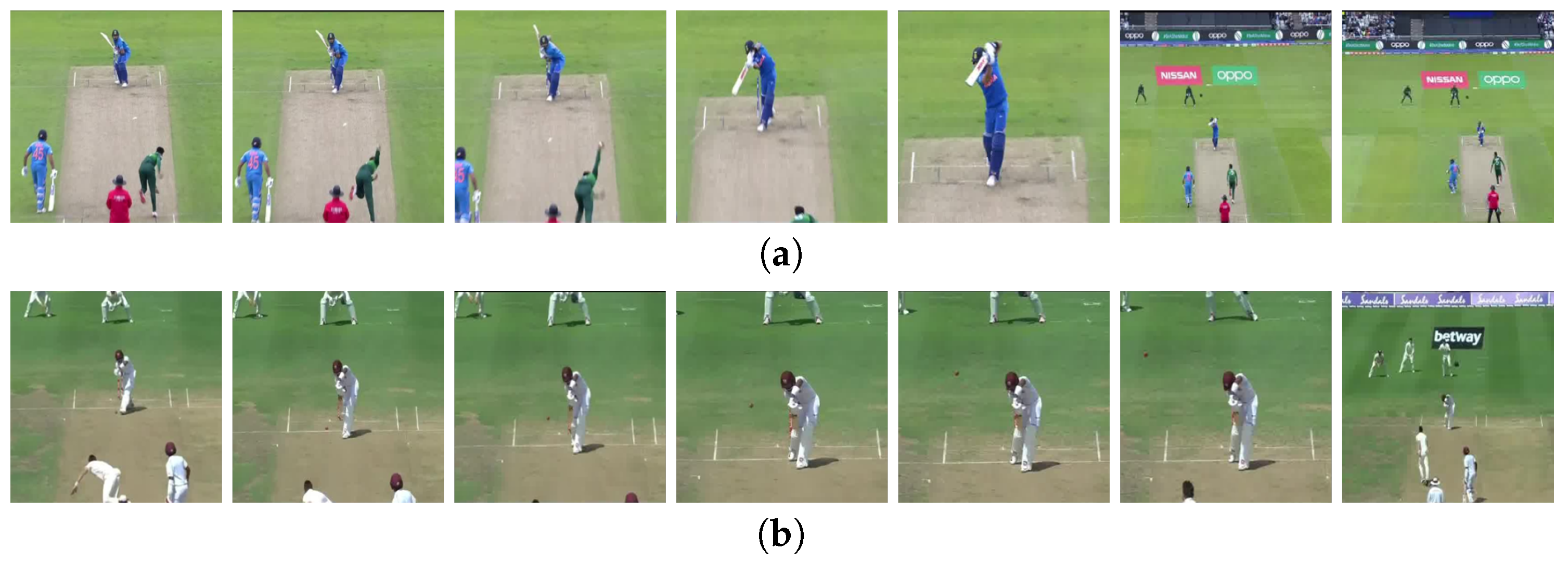

4.2. Dataset Generation

4.3. Evaluation

4.4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| CBSC | Cricket batting-shot categorization |
| DNN | Deep neural network |
| DCNN | Dilated convolutional neural network |
| RNN | Recurrent neural network |
| GRU | Gated recurrent unit |
| LSTM | Long short-term memory |

References

1. Russo, M.A.; Filonenko, A.; Jo, K.H. Sports Classification in Sequential Frames Using CNN and RNN. In Proceedings of the International Conference on Information and Communication Technology Robotics (ICT-ROBOT), Busan, Korea, 6–8 September 2018; pp. 1–3.

2. Russo, M.A.; Kurnianggoro, L.; Jo, K.H. Classification of sports videos with combination of deep learning models and transfer learning. In Proceedings of the International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh, 7–9 February 2019; pp. 1–5.

3. Hanna, J.; Patlar, F.; Akbulut, A.; Mendi, E.; Bayrak, C. HMM based classification of sports videos using color feature. In Proceedings of the 6th IEEE International Conference Intelligent Systems, Sofia, Bulgaria, 6–8 September 2012; pp. 388–390.

4. Cricri, F.; Roininen, M.J.; Leppänen, J.; Mate, S.; Curcio, I.D.; Uhlmann, S.; Gabbouj, M. Sport type classification of mobile videos. IEEE Trans. Multimed. 2014, 16, 917–932.

5. 2019 Men’s Cricket World Cup Most Watched Ever. Available online: https://www.icc-cricket.com/media-releases/1346930 (accessed on 10 October 2020).

6. Khan, M.Z.; Hassan, M.A.; Farooq, A.; Khan, M.U.G. Deep CNN Based Data-Driven Recognition of Cricket Batting Shots. In Proceedings of the International Conference on Applied and Engineering Mathematics (ICAEM), Taxila, Pakistan, 4–5 September 2018; pp. 67–71.

7. Karmaker, D.; Chowdhury, A.; Miah, M.; Imran, M.; Rahman, M. Cricket shot classification using motion vector. In Proceedings of the 2nd International Conference on Computing Technology and Information Management (ICCTIM), Johor, Malaysia, 21–23 April 2015; pp. 125–129.

8. Khan, A.; Nicholson, J.; Plötz, T. Activity recognition for quality assessment of batting shots in cricket using a hierarchical representation. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–31.

9. Foysal, M.F.A.; Islam, M.S.; Karim, A.; Neehal, N. Shot-Net: A convolutional neural network for classifying different cricket shots. In International Conference on Recent Trends in Image Processing and Pattern Recognition; Springer: Berlin, Germany, 2018; pp. 111–120.

10. Semwal, A.; Mishra, D.; Raj, V.; Sharma, J.; Mittal, A. Cricket shot detection from videos. In Proceedings of the 9th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Bengaluru, India, 10–12 July 2018; pp. 1–6.

11. Harikrishna, N.; Satheesh, S.; Sriram, S.D.; Easwarakumar, K. Temporal classification of events in cricket videos. In Proceedings of the Seventeenth National Conference on Communications (NCC), Bangalore, India, 28–30 January 2011; pp. 1–5.

12. Kolekar, M.H.; Palaniappan, K.; Sengupta, S. Semantic event detection and classification in cricket video sequence. In Proceedings of the Sixth Indian Conference on Computer Vision, Graphics & Image Processing, Bhubaneswar, India, 16–19 December 2008; pp. 382–389.

13. Premaratne, S.; Jayaratne, K. Structural approach for event resolution in cricket videos. In Proceedings of the International Conference on Video and Image Processing, Singapore, 27–29 December 2017; pp. 161–166.

14. Javed, A.; Bajwa, K.B.; Malik, H.; Irtaza, A.; Mahmood, M.T. A hybrid approach for summarization of cricket videos. In Proceedings of the IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Seoul, Korea, 26–28 October 2016; pp. 1–4.

15. Kolekar, M.H.; Sengupta, S. Semantic concept mining in cricket videos for automated highlight generation. Multimed. Tools Appl. 2010, 47, 545–579.

16. Bhalla, A.; Ahuja, A.; Pant, P.; Mittal, A. A Multimodal Approach for Automatic Cricket Video Summarization. In Proceedings of the 6th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, Uttar Pradesh, India, 7–8 March 2019; pp. 146–150.

17. Tang, H.; Kwatra, V.; Sargin, M.E.; Gargi, U. Detecting highlights in sports videos: Cricket as a test case. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Barcelona, Spain, 11–15 July 2011; pp. 1–6.

18. Kumar, Y.S.; Gupta, S.K.; Kiran, B.R.; Ramakrishnan, K.; Bhattacharyya, C. Automatic summarization of broadcast cricket videos. In Proceedings of the IEEE 15th International Symposium on Consumer Electronics (ISCE), Singapore, 14–17 June 2011; pp. 222–225.

19. Ramsaran, M.; Pooransingh, A.; Singh, A. Automated Highlight Generation from Cricket Broadcast Video. In Proceedings of the 8th International Conference on Computational Intelligence and Communication Networks (CICN), Tehri, India, 23–25 December 2016; pp. 251–255.

20. Ringis, D.; Pooransingh, A. Automated highlight generation from cricket broadcasts using ORB. In Proceedings of the IEEE Pacific Rim Conference on Communications, Computers and Signal Processing (PACRIM), Victoria, BC, Canada, 24–26 August 2015; pp. 58–63.

21. Rafiq, M.; Rafiq, G.; Agyeman, R.; Jin, S.I.; Choi, G.S. Scene classification for sports video summarization using transfer learning. Sensors 2020, 20, 1702.

22. Steels, T.; Van Herbruggen, B.; Fontaine, J.; De Pessemier, T.; Plets, D.; De Poorter, E. Badminton Activity Recognition Using Accelerometer Data. Sensors 2020, 20, 4685.

23. Rangasamy, K.; As’ari, M.A.; Rahmad, N.A.; Ghazali, N.F. Hockey activity recognition using pre-trained deep learning model. ICT Express 2020, 6, 170–174.

24. Junjun, G. Basketball action recognition based on FPGA and particle image. Microprocess. Microsyst. 2021, 80, 103334.

25. Gu, X.; Xue, X.; Wang, F. Fine-Grained Action Recognition on a Novel Basketball Dataset. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 2563–2567.

26. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the PMLR International Conference on Machine Learning (ICML), Lille, France, 6–11 July 2015; pp. 448–456.

27. Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the International Conference on Machine Learning (ICML), Atlanta, GA, USA, 16–21 June 2013; pp. 1310–1318.

28. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

29. Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

30. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

31. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009; pp. 248–255.

32. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. CoRR 2015, abs/1409.1556.

33. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

34. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

35. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

Layer configuration of the custom CNN used as the per-frame feature extractor.

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| input_1 (Input Layer) | (None, 180, 180, 3) | - |
| conv_1 (Conv2D) | (None, 180, 180, 64) | 1792 |
| batch_norm_1 (Batch Normalization) | (None, 180, 180, 64) | 256 |
| max_pool_1 (MaxPooling2D) | (None, 90, 90, 64) | 0 |
| conv_2 (Conv2D) | (None, 90, 90, 128) | 73,856 |
| conv_3 (Conv2D) | (None, 90, 90, 128) | 147,584 |
| batch_norm_2 (Batch Normalization) | (None, 90, 90, 128) | 512 |
| max_pool_2 (MaxPooling2D) | (None, 45, 45, 128) | 0 |
| conv_4 (Conv2D) | (None, 45, 45, 256) | 295,168 |
| conv_5 (Conv2D) | (None, 45, 45, 256) | 590,080 |
| batch_norm_3 (Batch Normalization) | (None, 45, 45, 256) | 1024 |
| max_pool_3 (MaxPooling2D) | (None, 22, 22, 256) | 0 |
| conv_6 (Conv2D) | (None, 22, 22, 384) | 885,120 |
| conv_7 (Conv2D) | (None, 22, 22, 384) | 1,327,488 |
| batch_norm_4 (Batch Normalization) | (None, 22, 22, 384) | 1536 |
| max_pool_4 (MaxPooling2D) | (None, 11, 11, 384) | 0 |
| conv_8 (Conv2D) | (None, 11, 11, 480) | 1,659,360 |
| conv_9 (Conv2D) | (None, 11, 11, 480) | 2,074,080 |
| batch_norm_5 (Batch Normalization) | (None, 11, 11, 480) | 1920 |
| max_pool_5 (MaxPooling2D) | (None, 5, 5, 480) | 0 |
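The shapes and parameter counts in the custom CNN table follow from standard layer arithmetic. The sketch below reproduces them under assumptions inferred from the table rather than stated in it: 3 × 3 kernels with "same" padding (no convolution changes the spatial size) and 2 × 2 max pooling with stride 2. The helper names are hypothetical.

```python
"""Sketch: recompute the custom CNN table (assumed 3x3 'same' convs, 2x2 pooling)."""


def conv2d_params(kernel: int, c_in: int, c_out: int) -> int:
    # kernel weights plus one bias per output channel
    return kernel * kernel * c_in * c_out + c_out


def batchnorm_params(channels: int) -> int:
    # gamma, beta, moving mean and moving variance for every channel
    return 4 * channels


# (number of stacked 3x3 convolutions, filters) per block, read off the table
CUSTOM_CNN_BLOCKS = [(1, 64), (2, 128), (2, 256), (2, 384), (2, 480)]


def custom_cnn_summary(size: int = 180, channels: int = 3):
    total = 0
    for n_convs, filters in CUSTOM_CNN_BLOCKS:
        for _ in range(n_convs):
            # assumed "same" padding: spatial size is unchanged
            total += conv2d_params(3, channels, filters)
            channels = filters
        total += batchnorm_params(channels)
        # 2x2 max pooling with stride 2 floors odd sizes (45 -> 22, 11 -> 5)
        size //= 2
    return (size, size, channels), total


shape, params = custom_cnn_summary()
print(shape, params)  # (5, 5, 480) 7059776
```

Flattening the final 5 × 5 × 480 map gives the 12,000-dimensional per-frame vector that the CNN–GRU model feeds to its GRU.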

Layer configuration of the custom CNN–GRU model (input: sequences of 15 frames).

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| time_dist (custom_cnn_model) | (None, 15, 5, 5, 480) | 7,059,776 |
| time_dist (Flatten()) | (None, 15, 12,000) | 0 |
| gru_1 (GRU) | (None, 180) | 6,577,740 |
| dense_1 (Dense) | (None, 512) | 92,672 |
| dense_2 (Dense) | (None, 128) | 65,664 |
| dense_3 (Dense) | (None, 64) | 8256 |
| dense_4 (Dense) | (None, 10) | 650 |

Layer configuration of the dilated CNN (DCNN) used as the per-frame feature extractor.

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| input_1 (Input Layer) | (None, 180, 180, 3) | - |
| conv_1 (Conv2D) | (None, 176, 176, 96) | 2688 |
| batch_norm_1 (Batch Normalization) | (None, 176, 176, 96) | 384 |
| max_pool_1 (MaxPooling2D) | (None, 88, 88, 96) | 0 |
| conv_2 (Conv2D) | (None, 84, 84, 128) | 110,720 |
| conv_3 (Conv2D) | (None, 80, 80, 128) | 147,584 |
| batch_norm_2 (Batch Normalization) | (None, 80, 80, 128) | 512 |
| max_pool_2 (MaxPooling2D) | (None, 40, 40, 128) | 0 |
| conv_4 (Conv2D) | (None, 36, 36, 480) | 553,440 |
| conv_5 (Conv2D) | (None, 32, 32, 480) | 2,074,080 |
| batch_norm_3 (Batch Normalization) | (None, 32, 32, 480) | 1920 |
| max_pool_3 (MaxPooling2D) | (None, 16, 16, 480) | 0 |
| conv_6 (Conv2D) | (None, 12, 12, 768) | 3,318,528 |
| conv_7 (Conv2D) | (None, 8, 8, 768) | 5,309,184 |
| batch_norm_4 (Batch Normalization) | (None, 8, 8, 768) | 3072 |
| max_pool_4 (MaxPooling2D) | (None, 4, 4, 768) | 0 |
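In the dilated CNN table every convolution shrinks the feature map by 4 pixels while keeping 3 × 3 parameter counts. That pattern is consistent with a dilation rate of 2 and no padding, because a dilated kernel spans k + (k - 1)(d - 1) = 5 pixels for k = 3 and d = 2. This dilation rate is an inference from the shapes, not a value stated in the table. The sketch below recomputes the shapes and the 11,522,112 parameters under that assumption. The helper names are hypothetical.

```python
"""Sketch: recompute the dilated CNN table.

Assumption (inferred from the output shapes): 3x3 kernels, dilation rate 2,
stride 1, "valid" padding, and 2x2 max pooling with stride 2.
"""


def dilated_out(n: int, kernel: int = 3, dilation: int = 2) -> int:
    # a dilated kernel spans kernel + (kernel - 1) * (dilation - 1) pixels
    span = kernel + (kernel - 1) * (dilation - 1)
    return n - span + 1  # stride 1, no padding


def conv2d_params(kernel: int, c_in: int, c_out: int) -> int:
    # dilation spreads the taps apart but does not add weights
    return kernel * kernel * c_in * c_out + c_out


# (number of stacked convolutions, filters) per block, read off the table
DILATED_BLOCKS = [(1, 96), (2, 128), (2, 480), (2, 768)]


def dilated_cnn_summary(size: int = 180, channels: int = 3):
    total, conv_sizes = 0, []
    for n_convs, filters in DILATED_BLOCKS:
        for _ in range(n_convs):
            size = dilated_out(size)
            conv_sizes.append(size)
            total += conv2d_params(3, channels, filters)
            channels = filters
        total += 4 * channels  # batch normalization after each block
        size //= 2  # 2x2 max pooling with stride 2
    return (size, size, channels), total, conv_sizes


shape, params, sizes = dilated_cnn_summary()
print(shape, params)  # (4, 4, 768) 11522112
print(sizes)          # [176, 84, 80, 36, 32, 12, 8]
```

Because dilation enlarges the receptive field without adding weights, the parameter count depends only on the kernel size and channel widths; the larger total compared with the custom CNN comes from the wider 480- and 768-filter layers.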

Layer configuration of the custom DCNN–GRU model (input: sequences of 15 frames).

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| time_dist (dilated_cnn_model) | (None, 15, 4, 4, 768) | 11,522,112 |
| time_dist (Flatten()) | (None, 15, 12,288) | 0 |
| gru_1 (GRU) | (None, 180) | 6,733,260 |
| dense_1 (Dense) | (None, 512) | 92,672 |
| dense_2 (Dense) | (None, 128) | 65,664 |
| dense_3 (Dense) | (None, 64) | 8256 |
| dense_4 (Dense) | (None, 10) | 650 |
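In both recurrent models, most of the head's parameters sit in the GRU. Its input is the flattened per-frame feature vector: 12,000 values for the CNN and 12,288 for the DCNN. The reported GRU counts match 3 × (d·h + h² + h) for input size d and h units, which corresponds to one bias vector per gate, as in Keras with `reset_after=False`. The TensorFlow 2 default `reset_after=True` adds a second bias vector per gate and would give 6,578,280 instead of 6,577,740. The sketch also assumes that the trainable totals in the results table exclude the moving mean and variance of each batch-normalization layer, which Keras counts as non-trainable. Under these assumptions it reproduces 13,802,134 and 18,419,670 exactly. The helper names are hypothetical.

```python
"""Sketch: parameter counts for the GRU/dense head and the trainable totals.

Assumptions: a Keras-style GRU with one bias per gate (reset_after=False) and
batch normalization whose moving mean/variance are non-trainable.
"""


def gru_params(input_dim: int, units: int, reset_after: bool = False) -> int:
    # 3 gates (update, reset, candidate), each with input and recurrent kernels
    biases = 2 * units if reset_after else units
    return 3 * (input_dim * units + units * units + biases)


def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out


def head_params(flat_dim: int, gru_units: int = 180,
                dense_units=(512, 128, 64, 10)) -> int:
    total, n_in = gru_params(flat_dim, gru_units), gru_units
    for n_out in dense_units:
        total += dense_params(n_in, n_out)
        n_in = n_out
    return total


def trainable_total(cnn_params: int, bn_channels, flat_dim: int) -> int:
    # moving mean and moving variance (2 per channel) are not trained
    non_trainable = sum(2 * c for c in bn_channels)
    return cnn_params + head_params(flat_dim) - non_trainable


cnn_gru = trainable_total(7_059_776, [64, 128, 256, 384, 480], 5 * 5 * 480)
dcnn_gru = trainable_total(11_522_112, [96, 128, 480, 768], 4 * 4 * 768)
print(cnn_gru, dcnn_gru)  # 13802134 18419670
```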

Configuration of the two proposed VGG16-based models.

| Model | Output From | Trainable Layers | GRU Hidden Units | Dense 1 Hidden Units | Dense 2 Hidden Units | Dense 3 Hidden Units |
|---|---|---|---|---|---|---|
| Model 1 | block5_pool | Final 4 | 128 | 512 | 128 | 64 |
| Model 2 | block5_pool | Final 8 | 128 | 512 | 128 | 64 |
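The trainable-parameter counts reported for VGG16–GRU, Model 1, and Model 2 (5,105,354, 12,184,778, and 18,084,554) can be reproduced under a particular reading of the table. "Final 4" would be the last four layers of the VGG16 convolutional base (block5_conv1 to block5_conv3 plus block5_pool), and "Final 8" the last eight (all of blocks 4 and 5). That reading is an assumption of this sketch, not a statement in the table. So are the 5 × 5 × 512 block5_pool output for a 180 × 180 input, the one-bias-per-gate GRU, and the 10-unit output layer. The helper names are hypothetical.

```python
"""Sketch: trainable parameters of the VGG16-GRU variants.

Assumptions: Keras VGG16 convolutional base on 180x180 inputs (block5_pool
output 5x5x512), a 128-unit GRU with one bias per gate, and dense layers of
512, 128, 64 and 10 units.
"""


def conv3x3_params(c_in: int, c_out: int) -> int:
    return 3 * 3 * c_in * c_out + c_out


def gru_params(input_dim: int, units: int) -> int:
    return 3 * (input_dim * units + units * units + units)


def head_params(flat_dim: int = 5 * 5 * 512, gru_units: int = 128,
                dense_units=(512, 128, 64, 10)) -> int:
    total, n_in = gru_params(flat_dim, gru_units), gru_units
    for n_out in dense_units:
        total += n_in * n_out + n_out
        n_in = n_out
    return total


# Convolutions of VGG16 blocks 4 and 5 (the pooling layers have no weights).
BLOCK4 = [conv3x3_params(256, 512), conv3x3_params(512, 512), conv3x3_params(512, 512)]
BLOCK5 = [conv3x3_params(512, 512)] * 3

frozen_base = head_params()          # entire VGG16 base frozen
model_1 = frozen_base + sum(BLOCK5)  # assumed "Final 4": block5_conv1-3 + block5_pool
model_2 = model_1 + sum(BLOCK4)      # assumed "Final 8": blocks 4 and 5
print(frozen_base, model_1, model_2)  # 5105354 12184778 18084554
```

Unfreezing only the deepest blocks keeps the generic low-level ImageNet filters fixed while letting the most task-specific features adapt to cricket footage.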

Number of samples per shot class in the training and test sets.

| Shot | Training Set | Test Set |
|---|---|---|
| Cover Drive | 153 | 35 |
| Defensive Shot | 157 | 35 |
| Flick | 146 | 35 |
| Hook | 146 | 35 |
| Late Cut | 147 | 35 |
| Lofted Shot | 151 | 35 |
| Pull | 144 | 35 |
| Square Cut | 160 | 35 |
| Straight Drive | 154 | 35 |
| Sweep | 159 | 35 |
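Summing the per-class counts gives the overall size and balance of the split. The short sketch below only adds up the values copied from the dataset table; the variable names are hypothetical.

```python
"""Sketch: totals and balance of the training/test split, from the table."""

TRAIN_COUNTS = {
    "Cover Drive": 153, "Defensive Shot": 157, "Flick": 146, "Hook": 146,
    "Late Cut": 147, "Lofted Shot": 151, "Pull": 144, "Square Cut": 160,
    "Straight Drive": 154, "Sweep": 159,
}
TEST_PER_CLASS = 35

n_train = sum(TRAIN_COUNTS.values())
n_test = TEST_PER_CLASS * len(TRAIN_COUNTS)
test_share = n_test / (n_train + n_test)
# ratio of the largest to the smallest training class
imbalance = max(TRAIN_COUNTS.values()) / min(TRAIN_COUNTS.values())

print(n_train, n_test, f"{test_share:.1%}")                 # 1517 350 18.7%
print(f"largest/smallest training class: {imbalance:.2f}")  # 1.11
```

The training set is nearly balanced (Square Cut has about 11% more samples than Pull), and the test set is exactly balanced. On a balanced test set, macro-averaged and support-weighted metrics coincide.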

Performance of the custom CNN–GRU and DCNN–GRU models.

| Model | Trainable Parameters | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| CNN–GRU Model | 13,802,134 | 82.30 | 81.70 | 82.00 |
| DCNN–GRU Model | 18,419,670 | 83.00 | 82.60 | 83.00 |

Performance of the transfer-learning models, each combined with a GRU.

| Model | Trainable Parameters | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| InceptionV3–GRU | 12,773,066 | 81.00 | 81.00 | 81.00 |
| Xception–GRU | 28,501,706 | 82.50 | 81.00 | 81.00 |
| DenseNet169–GRU | 16,164,554 | 84.90 | 82.90 | 83.00 |
| VGG16–GRU | 5,105,354 | 87.70 | 86.00 | 86.00 |

Performance of the two proposed VGG16-based models.

| Model | Trainable Parameters | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| Model 1 | 12,184,778 | 93.00 | 92.60 | 93.00 |
| Model 2 | 18,084,554 | 93.40 | 93.10 | 93.00 |

Per-class classification report of Model 1.

| Shot | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cover Drive | 0.85 | 1.00 | 0.92 | 35 |
| Defensive Shot | 1.00 | 1.00 | 1.00 | 35 |
| Flick Shot | 1.00 | 0.80 | 0.89 | 35 |
| Hook Shot | 0.97 | 0.89 | 0.93 | 35 |
| Late Cut | 0.86 | 0.91 | 0.89 | 35 |
| Lofted Shot | 0.89 | 0.97 | 0.93 | 35 |
| Pull Shot | 0.91 | 0.86 | 0.88 | 35 |
| Square Cut | 0.97 | 0.86 | 0.91 | 35 |
| Straight Drive | 1.00 | 1.00 | 1.00 | 35 |
| Sweep Shot | 0.85 | 0.97 | 0.91 | 35 |
| Average | - | - | 0.93 | 350 |

Per-class classification report of Model 2.

| Shot | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cover Drive | 0.80 | 0.91 | 0.85 | 35 |
| Defensive Shot | 1.00 | 0.97 | 0.99 | 35 |
| Flick Shot | 0.94 | 0.83 | 0.88 | 35 |
| Hook Shot | 0.97 | 0.86 | 0.91 | 35 |
| Late Cut | 0.94 | 0.91 | 0.93 | 35 |
| Lofted Shot | 0.95 | 1.00 | 0.97 | 35 |
| Pull Shot | 0.89 | 0.89 | 0.89 | 35 |
| Square Cut | 0.94 | 0.97 | 0.96 | 35 |
| Straight Drive | 0.97 | 1.00 | 0.99 | 35 |
| Sweep Shot | 0.94 | 0.97 | 0.96 | 35 |
| Average | - | - | 0.93 | 350 |
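Every shot has the same support of 35 test samples, so the macro-averaged recall of each report equals its overall accuracy. The macro averages of the two per-class reports also match the Model 1 and Model 2 rows of the VGG16 results (93.00/92.60 and 93.40/93.10 precision/recall). The sketch below averages the reported per-class values. It also checks that each F1-score agrees, up to rounding, with the harmonic mean 2PR/(P + R) of its precision and recall. It works on the rounded table values only; no confusion matrices are assumed, and the names are hypothetical.

```python
"""Sketch: macro averages of the two per-class classification reports.

Rows are (precision, recall, f1) in table order, Cover Drive ... Sweep Shot.
"""

REPORT_1 = [
    (0.85, 1.00, 0.92), (1.00, 1.00, 1.00), (1.00, 0.80, 0.89), (0.97, 0.89, 0.93),
    (0.86, 0.91, 0.89), (0.89, 0.97, 0.93), (0.91, 0.86, 0.88), (0.97, 0.86, 0.91),
    (1.00, 1.00, 1.00), (0.85, 0.97, 0.91),
]
REPORT_2 = [
    (0.80, 0.91, 0.85), (1.00, 0.97, 0.99), (0.94, 0.83, 0.88), (0.97, 0.86, 0.91),
    (0.94, 0.91, 0.93), (0.95, 1.00, 0.97), (0.89, 0.89, 0.89), (0.94, 0.97, 0.96),
    (0.97, 1.00, 0.99), (0.94, 0.97, 0.96),
]


def macro_average(report):
    # unweighted mean over classes; with equal support, mean recall == accuracy
    n = len(report)
    return tuple(sum(row[i] for row in report) / n for i in range(3))


def f1(precision: float, recall: float) -> float:
    # harmonic mean of precision and recall
    return 2 * precision * recall / (precision + recall)


for name, report in (("Model 1", REPORT_1), ("Model 2", REPORT_2)):
    p, r, f = macro_average(report)
    consistent = all(abs(f1(pi, ri) - fi) < 0.01 for pi, ri, fi in report)
    print(name, f"P={p:.3f} R={r:.3f} F1={f:.3f}", consistent)
# Model 1 P=0.930 R=0.926 F1=0.926 True
# Model 2 P=0.934 R=0.931 F1=0.933 True
```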

Comparison with previous cricket batting-shot classification methods.

| Method | No. of Shots Classified | Classified Shots | Accuracy (%) |
|---|---|---|---|
| Khan et al. [6] | 8 | Cover drive, straight drive, pull, hook, cut, sweep, onside hit, flick | 90.00 |
| Karmaker et al. [7] | 4 | Square cut, hook, flick, off drive | 60.21 |
| Our proposed approach | 10 | Cover drive, defensive shot, flick, hook, late cut, lofted shot, pull, square cut, straight drive, sweep | 93.00 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sen, A.; Deb, K.; Dhar, P.K.; Koshiba, T. CricShotClassify: An Approach to Classifying Batting Shots from Cricket Videos Using a Convolutional Neural Network and Gated Recurrent Unit. Sensors 2021, 21, 2846. https://doi.org/10.3390/s21082846
