On-the-Fly Fusion of Remotely-Sensed Big Data Using an Elastic Computing Paradigm with a Containerized Spark Engine on Kubernetes

Abstract

1. Introduction

2. Data and Method

2.1. Experimental Data

2.2. The Proposed Cloud-Based Processing Framework

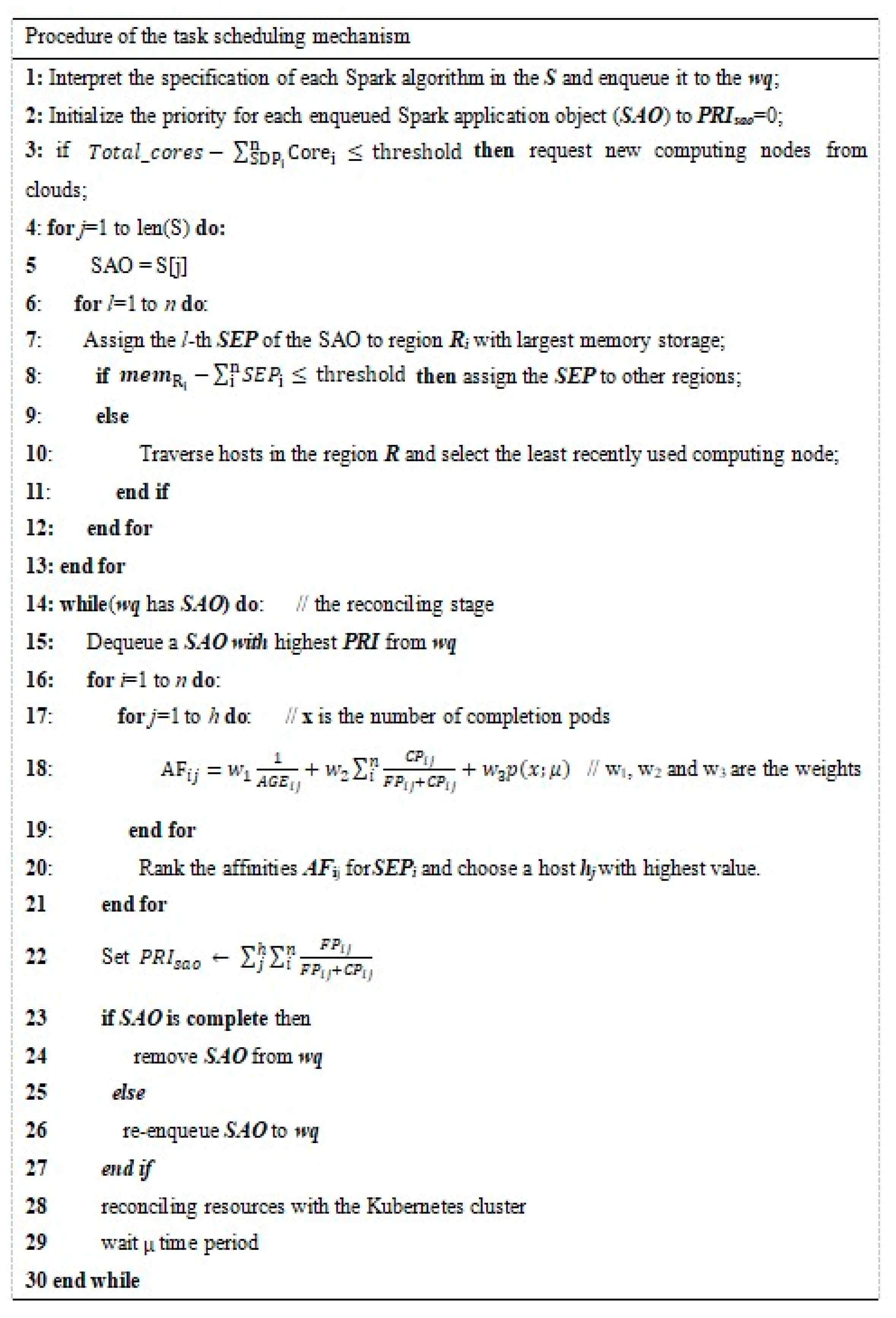

2.2.1. Proposed Task-Scheduling Mechanism

2.2.2. Proposed Parallel Fusion Algorithm

3. Experimental Environment

4. Results

4.1. Total Time Cost of PESTARFM on the Framework

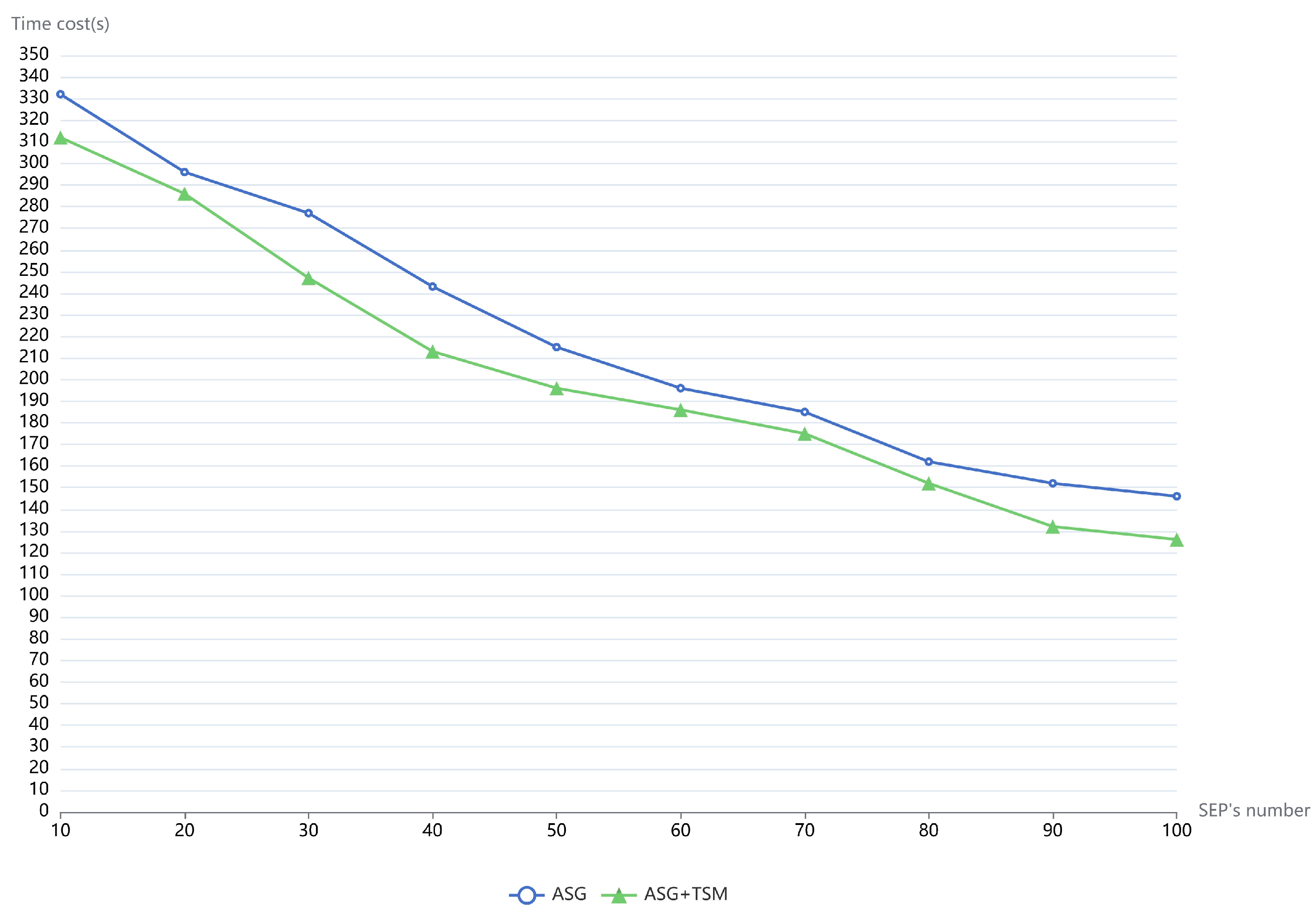

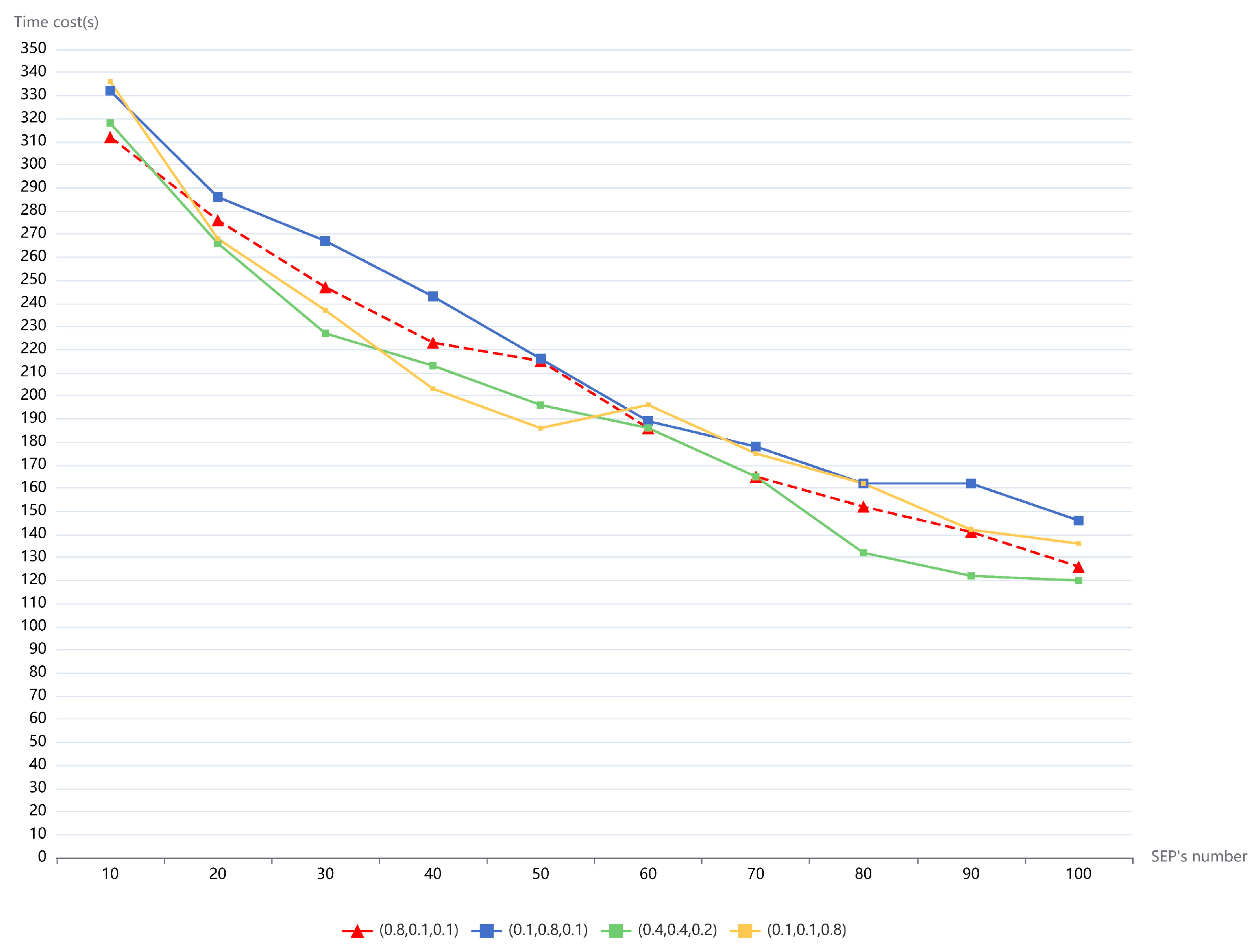

4.2. Performance of the Task Scheduling Mechanism

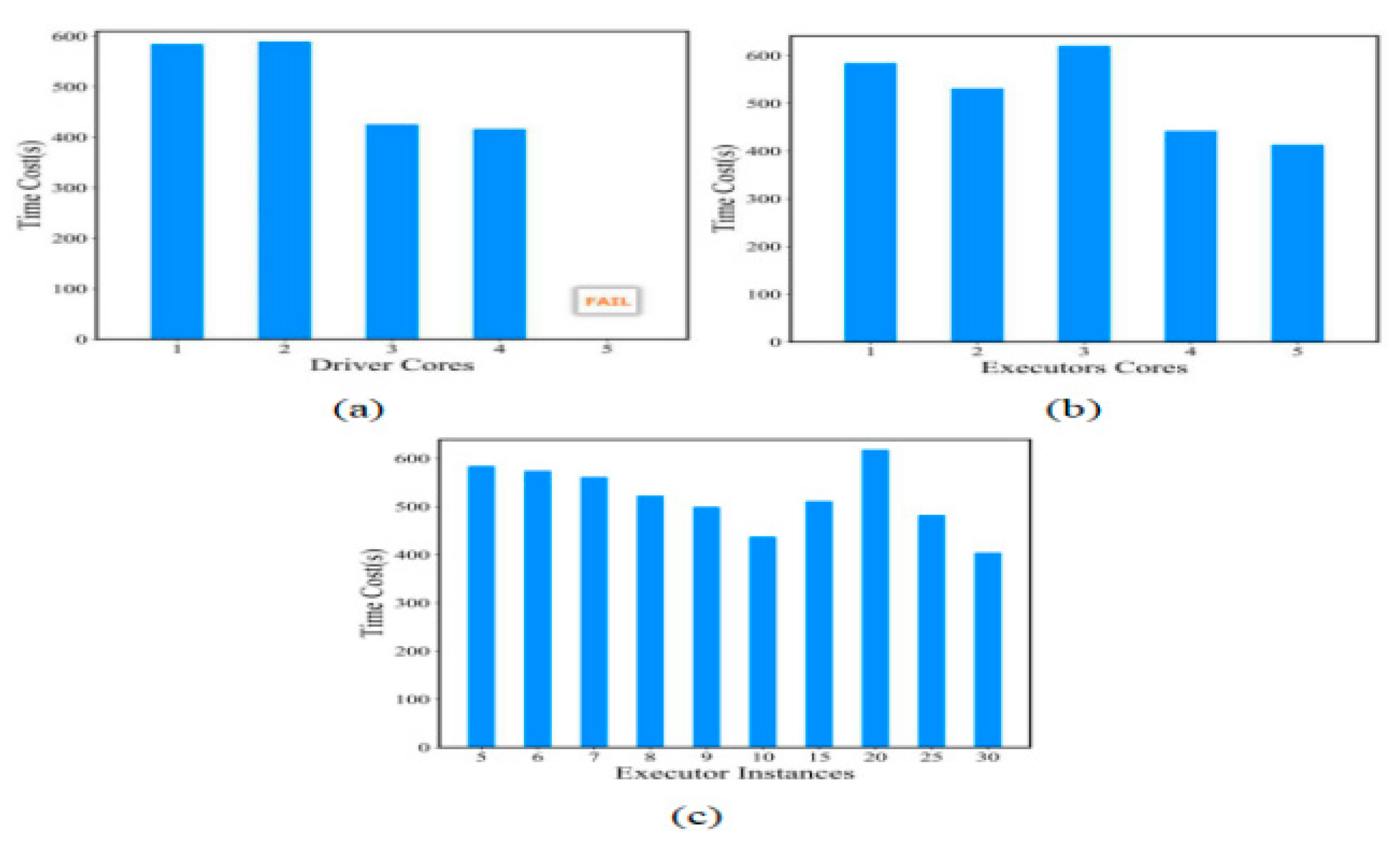

4.3. Performance of PESTARFM Using Containerized Hadoop

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

| Band Name | Sentinel-2A/B Band Width (nm) | Sentinel-2A/B Spatial Resolution (m) | PlanetScope Band Width (nm) | PlanetScope Spatial Resolution (m) |
|---|---|---|---|---|
| Blue | 460–525 | 10 | 450–515 | 3 |
| Green | 525–605 | 10 | 525–600 | 3 |
| Red | 650–680 | 10 | 630–680 | 3 |
| NIR | 785–900 | 10 | 845–885 | 3 |

| Software | Version |
|---|---|
| Kubernetes | v1.13.4 |
| Docker | 18.06.1-ce |
| Spark | 2.4.0 |
| JDK | 1.8.0_172 |
| Etcd | v3.1.10 |
| Flannel | v0.10.0-amd64 |
| Kube-dns | 1.14.7 |
| Spark-operator | 0.1.9 |
| Scala | 2.11.2 |
| Hadoop | 3.1.2 |

| Driver Memory (MB) | Executor Memory (MB) | Executors | Partitions | Time Cost (s) |
|---|---|---|---|---|
| 8096 | 10,240 | 5 | 20 | 913 |
| 8096 | 10,240 | 6 | 20 | 910 |
| 8096 | 10,240 | 7 | 20 | 889 |
| 8096 | 10,240 | 8 | 20 | 869 |
| 8096 | 10,240 | 10 | 20 | 851 |
| 8096 | 10,240 | 15 | 20 | 839 |
| 8096 | 10,240 | 20 | 20 | 823 |
| 8096 | 10,240 | 25 | 20 | 788 |
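The time costs in the table above can be turned into relative speedup figures with a few lines of arithmetic. The following Python sketch is illustrative only and is not part of the authors' framework: the names `RUNS` and `relative_speedup` are hypothetical, the 5-executor row is assumed as the baseline, and "efficiency" is defined here as speedup divided by the growth in executor count.

```python
# Rows copied from the table above: (executors, time cost in seconds).
# Driver memory (8096 MB), executor memory (10,240 MB) and partitions (20)
# are constant across all rows, so they are omitted here.
RUNS = [
    (5, 913),
    (6, 910),
    (7, 889),
    (8, 869),
    (10, 851),
    (15, 839),
    (20, 823),
    (25, 788),
]


def relative_speedup(runs):
    """Return (executors, speedup, efficiency) relative to the first row.

    speedup    = baseline_time / time
    efficiency = speedup / (executors / baseline_executors)

    The choice of the first row as baseline is an assumption made for
    illustration; the source does not define a baseline.
    """
    base_executors, base_time = runs[0]
    results = []
    for executors, seconds in runs:
        speedup = base_time / seconds
        scale = executors / base_executors
        results.append((executors, speedup, speedup / scale))
    return results


if __name__ == "__main__":
    for executors, speedup, efficiency in relative_speedup(RUNS):
        print(f"{executors:>2} executors: "
              f"speedup {speedup:.3f}, efficiency {efficiency:.3f}")
```

Under these assumptions, going from 5 to 25 executors reduces the time cost from 913 s to 788 s, a speedup of roughly 1.16 relative to the baseline. The figures are descriptive arithmetic on the tabulated values and say nothing by themselves about the cause of the trend.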

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, W.; Zhou, J.; Zhang, D. On-the-Fly Fusion of Remotely-Sensed Big Data Using an Elastic Computing Paradigm with a Containerized Spark Engine on Kubernetes. Sensors 2021, 21, 2971. https://doi.org/10.3390/s21092971
