Abstract

Blind and visually impaired people (BVIP) face a range of practical difficulties when undertaking outdoor journeys as pedestrians. Over the past decade, a variety of assistive devices have been researched and developed to help BVIP navigate more safely and independently. In addition, research in overlapping domains is addressing the problem of automatic environment interpretation using computer vision and machine learning, particularly deep learning, approaches. Our aim in this article is to present a comprehensive review of research directly in, or relevant to, assistive outdoor navigation for BVIP. We break down the navigation area into a series of navigation phases and tasks. We then use this structure for our systematic review of research, analysing articles, methods, datasets and current limitations by task. We also provide an overview of commercial and non-commercial navigation applications targeted at BVIP. Our review contributes to the body of knowledge by providing a comprehensive, structured analysis of work in the domain, including the state of the art, and guidance on future directions. It will support both researchers and other stakeholders in the domain to establish an informed view of research progress.

1. Introduction

According to the World Health Organization (WHO), at least 1 billion people were visually impaired in 2020 [1]. There are various causes of vision impairment and blindness, including uncorrected refractive errors, neurological defects from birth, and age-related cataracts [1]. For those who suffer from vision impairment, both independence and confidence in undertaking activities of daily living are impacted. Assistive systems exist to help BVIP in various activities of daily living, such as recognizing people [2], distinguishing banknotes [3,4], choosing clothes [5], and navigation support, both indoors and outdoors [6].

BVIP face particularly serious problems when navigating public outdoor areas on foot, where simple tasks such as crossing a road, obstacle avoidance, and using public transportation present major hazards and difficulties [7]. These problems threaten the confidence, safety and independence of BVIP, limiting their ability to engage in society. In recent years, technological solutions to support BVIP in outdoor pedestrian navigation have been an active research area (see Table 1). In addition, we find that overlapping areas of research, whilst not tagged as assistive navigation systems research, are addressing challenges that can contribute to its progress, such as smart cities, robot navigation and automated journey planning. The combined substantial body of work needs further examination and analysis in order to understand the progress, gaps and direction for future research towards full support of BVIP in outdoor navigation. Our review provides a comprehensive resource for other researchers, commercial and not-for-profit technology companies, and indeed any stakeholders in the BVIP sector.

The contributions of this survey are summarised as follows:

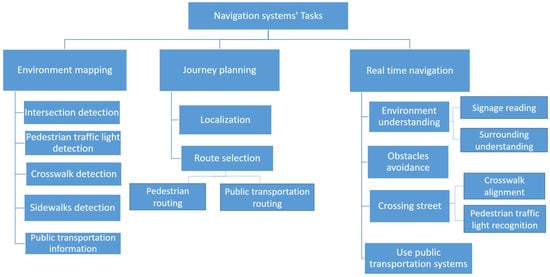

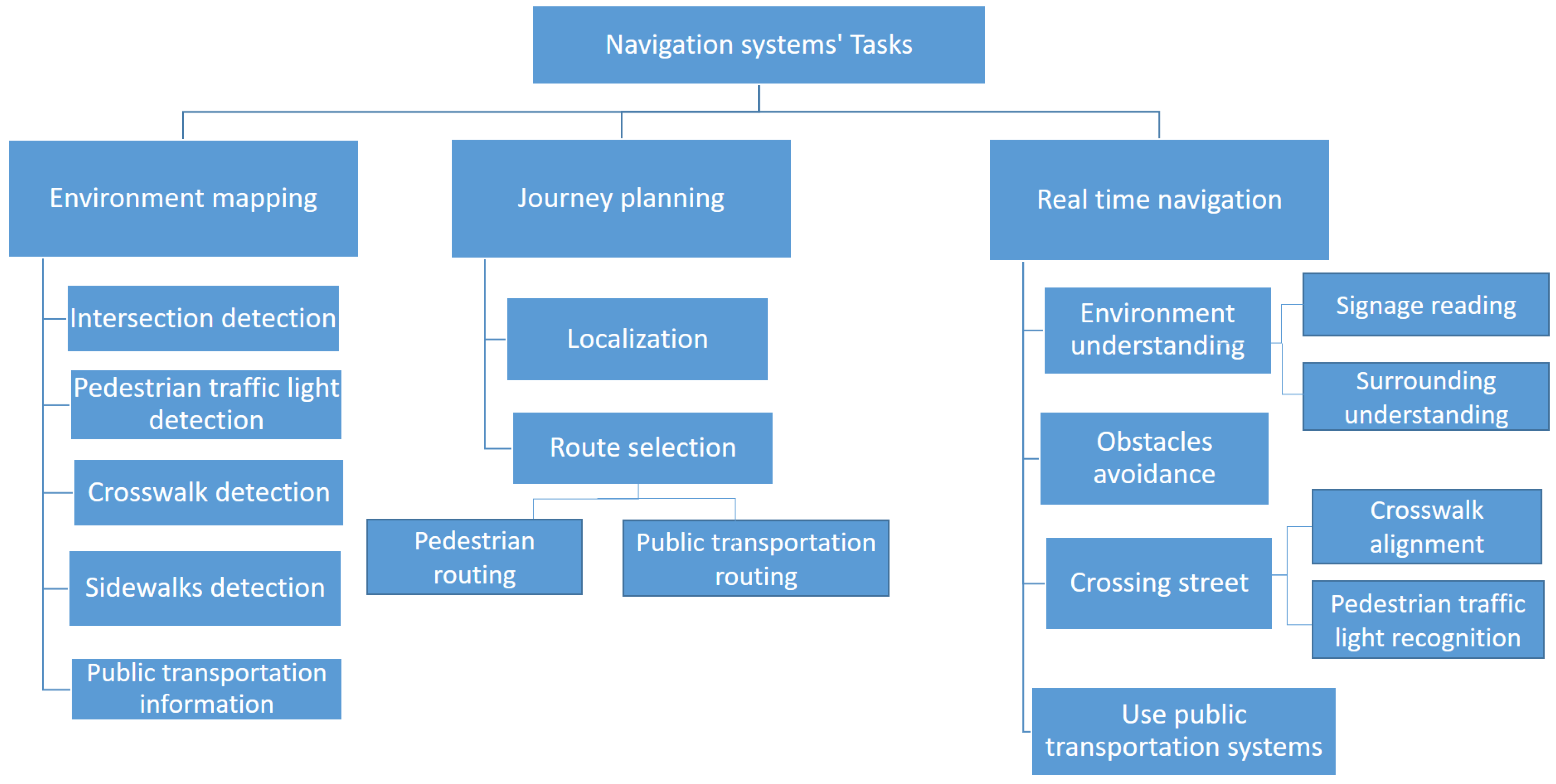

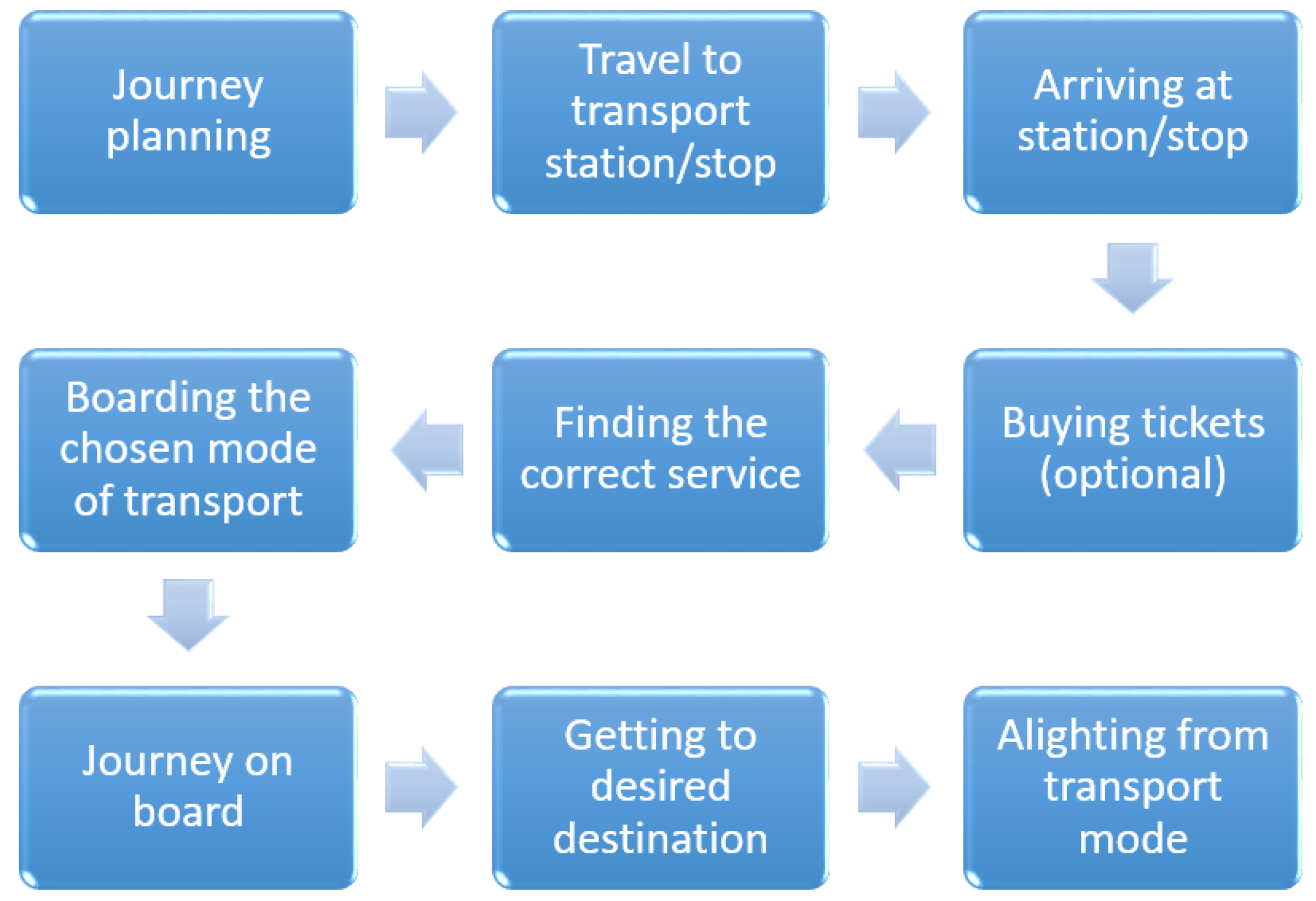

- A hierarchical taxonomy of the phases and associated tasks of pedestrian urban navigation, as they relate to safe navigation for BVIP, is presented.

- For each task, we provide a detailed review of research work and developments, limitations of approaches taken, and potential future directions.

- The research area of navigation systems for BVIP overlaps with other research fields including smart cities, automated journey planning, autonomous vehicles, and robot navigation. We highlight these overlaps throughout to provide a useful and far-reaching review of this domain and its context to other areas.

- We highlight and clarify the range of terminologies used in the domain.

- We review the range of available applications and purpose-built/modified devices to support BVIP.

In this survey, we mainly included papers published from 2015 to 2020 that discuss outdoor navigation systems for BVIP. The focus on recent scientific work is intended to reveal the current gaps and future trends of the area. However, we occasionally include papers from earlier years if they contain significant information. We used Google Scholar as the source of papers. Firstly, we searched for assistive and aid navigation systems for the visually impaired (VI). Secondly, for each task, we used different keywords to find scientific works related to that task. In addition, we checked work that was done within our domains. Finally, we excluded papers under two criteria: (1) the paper is irrelevant after reading the abstract, or (2) the paper is published in a journal or conference with an impact factor of less than one.

The structure of our review is as follows. Section 2 discusses previous surveys in the area of outdoor navigation for BVIP and explores the different terminologies in the area. The taxonomy of phases and tasks of assistive navigation systems is presented in Section 3. Section 4 analyses previous research works in assistive outdoor navigation systems for BVIP. Sections 5, 6 and 7 explore each phase and its tasks in detail, including both BVIP research and overlapping domain research for each task. We explore other aspects of designing navigation systems, such as feedback and wearability, in Section 8. In Section 9, applications and devices are compared. Section 10 summarizes the main findings of our review and discusses the main challenges in the area. Finally, a conclusion and future work are highlighted in Section 11.

2. Related Work

Our focus in this section is to examine the range and scope of previous reviews in the domain of navigation systems for BVIP. Islam et al. [8] focussed specifically on walking systems. They compared indoor and outdoor walking systems that support BVIP during navigation. To conduct this comparison, they used the following features: capturing devices, feedback devices/types, hardware components, coverage area, detection range, weight, and cost-effectiveness. Real and Araujo [9] presented a historical development of indoor and outdoor navigation systems between 1960 and 2019. However, they did not discuss the underlying algorithms used.

Fernandes et al. [10] defined the main components in navigation systems—namely interface, location, orientation, and navigation. They also presented a review of technologies that were used for each component. They emphasized the need to combine various technologies together to build a comprehensive system. Their review, however, did not study in detail the algorithms and datasets and did not attempt to present a comparison between systems. Paiva and Gupta [11] explored indoor and outdoor navigation systems and obstacle detection systems. They identified approaches and equipment used in each one. However, they excluded a comparison between approaches and a discussion about the algorithms used.

A number of reviews presented small-scale surveys covering only a few indoor and outdoor navigation systems [12,13,14]. While they provided information about technologies and limitations, they did not mention or explore the applied algorithms. Manjari et al. [15] explored previous navigation systems in the domain and defined features of each one. They provided a brief and general summary of utilized algorithms and techniques but did not provide detailed analysis of data, techniques, methods or gaps.

Tapu et al. [16] assessed features of outdoor navigation systems such as wearability, portability, reliability, low cost, real-time operation, user-friendliness, robustness, and wireless/no connection. Although they presented a new direction for evaluating Electronic Travel Aids (ETAs), they covered only 12 articles.

2.1. Specific Sub-Domain Surveys

Survey publications in this category have explored navigation systems for a specific sub-domain—where they have discussed the previous work from one perspective, such as computer vision.

Fei et al. [17] focused on indoor and outdoor ETAs based on computer vision. They classified ETAs according to the information provided to the user during the journey: road situations and obstacles, reading signs and tags, object recognition, and text extraction. The features and limitations of each system were explained. However, they did not discuss future work on ETAs or compare the available systems. Budrionis et al. [18] compared 15 mobile navigation applications that use computer vision. The comparison was made from distinct perspectives (objectives/functions, input/output, data processing, algorithms, and evaluation of the solution). The capabilities of a smartphone to help BVIP in their navigation are discussed by Kuriakose et al. [19]. They identified the advantages and limitations of six smartphone applications [19]. Budrionis et al. [18] and Kuriakose et al. [19] included a limited number of navigation systems. The small number of included articles means these surveys cannot serve as a complete overview of the area.

To recap, no single review provides a complete and detailed coverage of research into navigation supports for the BVIP sector. The majority of previous surveys reviewed a limited number of published works, resulting in either a narrow or a more cursory presentation of previous work. Likewise, previous reviews discussed navigation systems at a high level, without including details about how the individual aspects or tasks of navigation were addressed. In addition, the algorithms and associated research datasets were not discussed, so state-of-the-art approaches and the existence of benchmark datasets are not identifiable. As a result, the previous review articles present a cursory overview of an area of interest. This lack of a comprehensive in-depth review of this domain motivated us to investigate this area and present our survey.

2.2. Terminology

This subsection presents the different terminologies used in navigation systems for the BVIP community. In addition, it emphasizes that there is no agreed terminology. There are five phrases used to express all activities related to navigation of BVIP, namely walking assistants for BVIP [8], traveling aid systems for BVIP [20], visual substitution navigation systems for BVIP [21], navigation systems for BVIP [9], and assistive navigation systems for BVIP [10]. In addition to these different terms, navigation activities are classified in different ways and have various meanings. Traveling aid system tasks were divided into micro-navigation tasks (define obstacles and the environment around the user) and macro-navigation tasks (related to defining a path to a destination and information needs like the existence of intersections, road signs, and so on) [20].

Fernandes et al. [10] defined the required tasks for assisting people in navigation. These tasks are (1) an interface (to convey useful information to a user), (2) localization (to define the location of the user), (3) orientation (to define the environment around the user), and (4) navigation (to define the route to the destination). Dakopoulos and Bourbakis [21] divided the visual substitution systems for navigation into (1) ETAs, which receive data about surroundings, such as obstacles, (2) Electronic Orientation Aids (EOAs), which help the user to reach a destination by selecting the route, and (3) Position Locator Devices (PLDs), which define the user’s location.

The definition of travel aids differs somewhat across the research. For example, Petrie et al. [20] considered a travel aid to be a system that involves all tasks related to navigation activities. On the other hand, Manjari et al. [15] define travel aids as responsible only for understanding the environment. The term “orientation” is also used with two different definitions: defining the environment around the user [10] and selecting a route to the destination [21]. The absence of agreed terminology can lead to difficulties in understanding the literature, especially for new readers in the area. In addition, it may lead an investigator to accidentally exclude research works that use these different terms during searching.

5. Environment Mapping

The first phase of navigation systems is an environment mapping phase. This phase is about converting street elements to practical information on maps. There is a large variety of permanent and semi-permanent street components that are relevant to BVIP, including intersections, traffic lights, crosswalks, transportation stations/stops and sidewalks. Whilst these safety-critical components are easy for sighted people to detect, they present a huge challenge for BVIP. Environment mapping is therefore a fundamental phase in navigation systems, yet it has received limited attention thus far in the research domain. This encourages us to study work done in other domains to determine the research challenges and gaps, as well as to introduce prospective future directions for the environment mapping phase, detailed by task. As a result, this emphasizes the need to transfer knowledge between other domains and the area of navigation systems for BVIP.

5.1. Intersection Detection

The intersection detection task is an important component of an environment mapping stage as it helps BVIP to avoid uncontrolled intersections on their journey (i.e., those that do not have traffic lights). Previous research works used different ways to recognize junctions, such as the existence of traffic lights [53,62], audible units [77], or ramps [81].

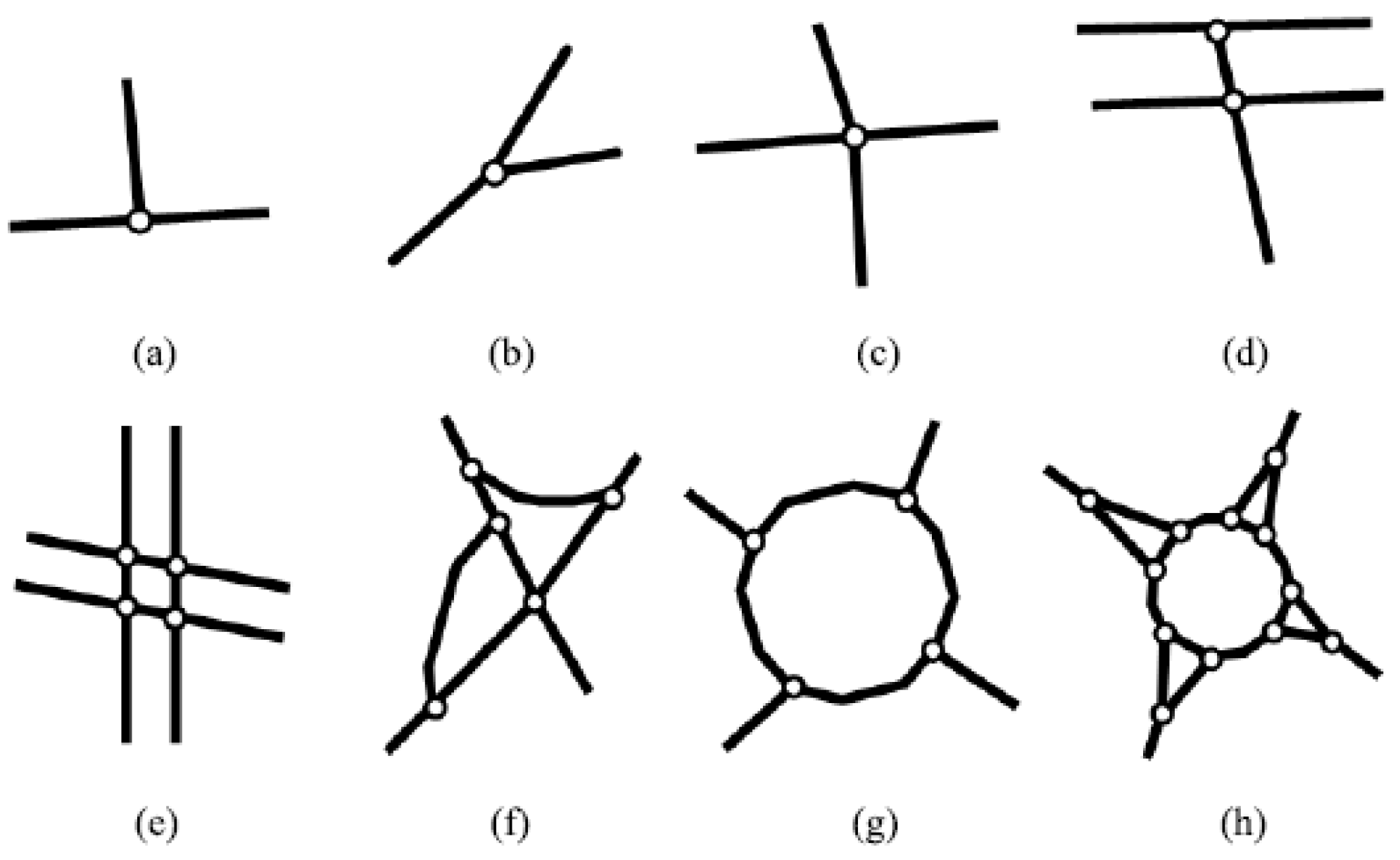

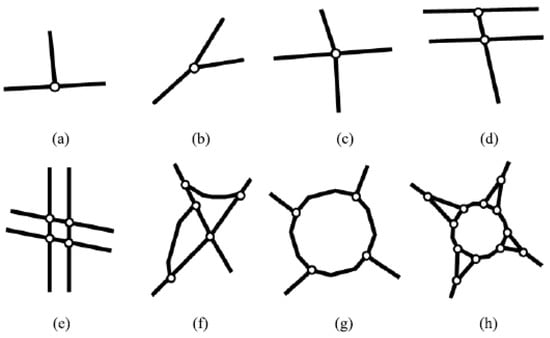

Both the existence and type of intersection are important to the BVIP, as the type will determine how the road should be navigated. Intersection types vary across the literature. Zhou and Li [82] identified nine types of intersections. Dai et al. [83] classified junctions into three main classes: the typical road intersection structure (Figure 2a–c), the complex typical intersection structure (Figure 2d–f), and the round-about road intersection structure (Figure 2g,h), as shown in Figure 2.

Figure 2. Types of intersection from Dai et al. [83]: (a–c) typical road intersections; (d–f) complex intersections; (g,h) round-about intersections.

By analysing the various types of intersections, we found that there are 14 unique types of junctions. We also note that the intersection detection task is discussed in several domains such as autonomous vehicles [84], driver assistance systems [85], and transformation of maps to digital datasets [86]. Although it is significant for navigation systems [7], it is not addressed in any of them.

A variety of data sources are used in the detection of intersections: images [87], map tiles [86], videos [88], LiDAR sensors [85], and vehicle trajectories [89,90]. Here, computer vision approaches will be discussed as images and videos are considered a rich source of information, providing detailed junction information, such as the number of lanes. The problem of intersection detection has been addressed to date via two computer vision approaches:

An image classification problem: researchers have treated the problem at three levels of classification: a binary problem of the existence of an intersection, a multi-class intersection type problem, and a road detection problem. This latter approach detects a road in an image, and then determines intersections as part of road detection [87,91]. Looking at each in turn, for binary classification, Kumar et al. [88] determined whether or not an intersection exists in a video, using a network consisting of a Convolutional Neural Network (CNN), a bidirectional Long Short-Term Memory (LSTM) network, and a Siamese CNN. For BVIP, however, the type of intersection is also important, so this approach has limited use. Treating the problem as a multi-class intersection type problem, Bhatt et al. [84] used CNN and LSTM networks to classify sequences of frames (video) into three classes: non-intersection, T-junction, and cross junction. Oeljeklaus et al. [92] utilized a common encoder for semantic segmentation and recognition of road topology tasks. They were able to recognize six types of intersections. Koji and Kanji [93] used two types of input: Third-Person Vision (TPV) images taken before an intersection, and First-Person Vision (FPV) sequences of images taken while an intersection is passed. For TPV, they used deep Convolutional Neural Networks (DCN), and for FPV they applied an LSTM. Finally, they integrated the two outputs to define seven classes of junctions. In the third approach, which identifies the road before classification, both Rebai et al. [91] and Tümen and Ergen [87] depend on different edge-based approaches to detect the road prior to the classification step. For the classification step, Rebai et al. [91] used a hierarchical support vector machine (SVM), while Tümen and Ergen [87] applied a CNN.

An object detection problem: Saeedimoghaddam and Stepinski [86] dealt with the intersection detection task as an object detection problem, detecting both the existence and placement of the intersection within the scene (image). They used Faster RCNN to detect all intersections on map tiles, achieving an F1-score of 0.86 for the identification of road intersections.
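For readers comparing the detection results quoted in this section, the F1-score is the harmonic mean of precision and recall. The standard definition is given below, where TP, FP and FN denote true positive, false positive and false negative detections. This section does not report precision and recall separately, so no values are substituted.

$$
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2PR}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
$$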

Datasets in intersection detection research: Researchers may wish to use existing datasets for comparative evaluations or to support model developments. The datasets used in intersection detection model training and testing are listed in Table 2.

Table 2. Intersection datasets.

5.2. Pedestrian Traffic Lights Detection

Pedestrian Traffic Lights (PTLs) are an essential component of an urban environment. Thus, defining the location of PTLs is an important part of the environment mapping phase. The existence of PTLs is mandatory for crossing roads, but is particularly critical for the BVIP community [62]. Selection of the safest route should exclude all uncontrolled intersections. Recently, the detection and geolocation of different street objects from street images, such as traffic lights, were discussed [100,101]. This line of research, which enables automatic mapping of complex street scenes with multiple objects of interest, belongs to the general domain of street object identification and will be of interest to the BVIP research community as the importance of environment mapping becomes apparent. However, location needs to be captured for environment mapping in order to provide rich mapping information.

5.3. Crosswalk Detection

Highlighting designated crosswalk locations is an important task in an environment mapping phase. Adding this type of information will support better route selection to include designated crosswalks where people can cross safely [51]. While this is considered a simple task for sighted people, it is a challenging one for BVIP, whereby they must understand where the crosswalk is, and also the placement of the crosswalk on the street, so that the BVIP crosses within the boundaries of the crosswalk (see Section 7.3.1). Many applications such as enhanced online maps [102], road management [103], navigation systems for BVIP [104], and automated cars [87] have discussed this task. Images used to address this problem have been taken from a variety of perspectives: aerial [102,105], vehicle [87], and pedestrian perspectives [62].

The detection of crosswalks from natural scene images has to cater for many variations which complicates the task for trained models [106]. The specific challenges are:

- Crosswalks differ in shape and style across countries.

- The painting of crosswalks may be partially or completely worn away, especially in countries with poor road maintenance practices.

- Vehicles, pedestrians, and other objects may mask the crosswalk.

- Strong shadows may darken the appearance of the crosswalk.

- The change in weather and time when an image is captured affects the illumination of the image.

In addition to the lack of uniformity of crosswalks, which complicates detecting the presence and location of a crosswalk, BVIP need to be able to determine with precision the direction of the crosswalk on the road. If the system relies on a camera to identify the crosswalk alignment in real time, the captured images may show only part of a crosswalk and/or show it at a wrong angle. Several articles discuss these challenges. These papers employ a variety of approaches: traditional computer vision [106,107], traditional machine learning such as SVM [65], and deep learning algorithms [105,108]. The work of Wu et al. [106] concluded that deep learning outperforms traditional computer vision techniques in their comparisons. We analyse the deep learning works, grouping them as follows:

Classification: A pre-trained VGG network is used by Berrie et al. [105,108] to identify whether images contain a crosswalk or not. Tümen and Ergen [87] used a custom network termed RoIC-CNN to detect the existence of crosswalks as a contribution to driver assistance research.

Object detection: With object detection, both the existence and location within a scene (image) are determined. Kurath et al. [102] employed a sliding window over an image to detect the crosswalk using an Inception-v3 model. Malbog [109] used Mask R-CNN to detect the crosswalk. The outputs of this model are a bounding box, a mask, and a classification score.

Segmentation: Yang et al. [104] used a CNN semantic segmenter to detect a crosswalk and other objects from the road, where segmentation builds upon object detection by providing a precise placement, shape and scale of the crosswalk within a scene.

Location detection: detecting location of crosswalks is critical for the BVIP to determine a safe place to cross the road. Yu et al. [62] presented a modification on MobileNetV3 to detect the start and endpoint of a crosswalk.
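The sliding-window style of detection mentioned above under object detection can be illustrated with a minimal sketch. It is not the pipeline of Kurath et al. [102]: the window size, stride, threshold and the `toy_classifier` function (a stand-in for a trained CNN such as Inception-v3) are all assumptions made for illustration, and the image is a plain list of grey-level rows so that the example has no external dependencies.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

Image = Sequence[Sequence[float]]   # rows of grey-level pixel values (0..255)
Box = Tuple[int, int, int, int]     # (x, y, width, height) in pixels


def sliding_windows(image: Image, size: int, stride: int) -> Iterator[Tuple[Box, list]]:
    """Yield (box, patch) pairs that cover the image on a regular grid."""
    height, width = len(image), len(image[0])
    for y in range(0, height - size + 1, stride):
        for x in range(0, width - size + 1, stride):
            patch = [row[x:x + size] for row in image[y:y + size]]
            yield (x, y, size, size), patch


def toy_classifier(patch: list) -> float:
    """Hypothetical stand-in for a trained CNN: mean brightness scaled to [0, 1]."""
    total = sum(sum(row) for row in patch)
    count = sum(len(row) for row in patch)
    return total / (255.0 * count)


def detect(image: Image,
           classify_patch: Callable[[list], float],
           size: int = 64,
           stride: int = 32,
           threshold: float = 0.5) -> List[Tuple[Box, float]]:
    """Return (box, score) for every window whose score reaches the threshold."""
    hits = []
    for box, patch in sliding_windows(image, size, stride):
        score = classify_patch(patch)
        if score >= threshold:
            hits.append((box, score))
    return hits


if __name__ == "__main__":
    # Synthetic 128x128 image whose bottom-right quadrant is bright.
    demo = [[255 if (r >= 64 and c >= 64) else 0 for c in range(128)]
            for r in range(128)]
    print(detect(demo, toy_classifier, size=64, stride=32, threshold=0.9))
```

In practice, overlapping hits would usually be merged (for example with non-maximum suppression) before the detections are written to a map; that step is omitted here.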

Datasets in Crosswalk Detection Research: In Table 3, we list the datasets used in this task by researchers for model training and/or evaluation, including their availability to other researchers. The table highlights the diversity and coverage of the datasets used. It describes the perspective, number, and coverage area of captured images.

Table 3. Crosswalk datasets.

Looking at the datasets in Table 3, we note that each dataset contains just one type of crosswalk (zebra crosswalk), and thus the various other shapes of crosswalk are not included. This limits the generalisability of models generated from the associated research works. Only the Pedestrian Traffic Lane [112] dataset contains the geographic location of crosswalks, and thus is the only one currently suited to enriching maps with crosswalk locations. Most datasets do not cover the various crosswalk challenges (faded paint, partial occlusion by objects, etc.). The majority are local datasets and are not published for general use.

5.4. Sidewalk Detection

For BVIP, a sidewalk is a critical street component, as it is the safest area to walk on. Sidewalk detection is a task in an environment mapping phase, where it is required to build a comprehensive map based on sidewalks. This map helps in producing precise instructions for BVIP [113]. In BVIP navigation systems literature, sidewalk detection was discussed as an obstacle avoidance task where the navigation system detects them to avoid falling [47,60].

5.5. Public Transportation Information

We deem public transportation information as relevant to the environment mapping phase to support users who may wish to include public transport in their journey. Before using public transportation, there are various types of information that need to be gathered, such as the locations of public transportation stations or stops [114], accessibility information of stations and stops [115] and schedules of routes [116]. This level of information is relevant for the route selection task (see Section 6.2). Some of these details are available through applications or on the internet but not in a form that is easy to use by BVIP [117]. We suggest that this area needs to be recognised as a component to be deployed in an environment mapping application, with public transport information included as part of map enrichment.

5.6. Discussion of Environment Mapping Research

Having reviewed the levels and types of research approaches being undertaken in various aspects of environment mapping, we now take a summary view of the area.

The information and locations of PTLs, intersections, sidewalks, crosswalks, and public transportation need to be included in maps for the benefit of BVIP undertaking a journey. The available work in intersection detection to date does not cover all types of intersections. The binary classification approach defines only the existence or not of an intersection. In addition, the accuracy of a multi-classification approach (six or seven types) is very low. While the direction of detecting a road before an intersection classification has a promising accuracy that ranges between 81.8% and 100%, it only detects three types of intersection, which is not enough. These approaches do not define the location of a junction, which is critical in the environment mapping phase. In contrast, the object detection approach can detect the location of an intersection on map tiles with an F1-score of 0.86. This location is on the image, but it can in theory be projected to the real location.
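The projection from an image location to a real location mentioned above can be sketched as follows. The sketch assumes the map tiles follow the common Web Mercator "XYZ" tiling scheme with 256-pixel tiles; this section does not state which tiling scheme was used in [86], so the tile indices, zoom level and tile size are illustrative assumptions, as are the function names.

```python
import math
from typing import Tuple


def box_centre(box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Centre of a detection box given as (x_min, y_min, x_max, y_max) pixels."""
    x_min, y_min, x_max, y_max = box
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0


def tile_pixel_to_latlon(tile_x: int, tile_y: int, zoom: int,
                         px: float, py: float,
                         tile_size: int = 256) -> Tuple[float, float]:
    """Convert a pixel inside a Web Mercator XYZ tile to (latitude, longitude).

    Assumes the XYZ convention: tile (0, 0) is the top-left of the world map
    and there are 2**zoom tiles along each axis.
    """
    n = 2 ** zoom
    # Fractional tile coordinates of the pixel.
    x = tile_x + px / tile_size
    y = tile_y + py / tile_size
    lon = x / n * 360.0 - 180.0
    lat = math.degrees(math.atan(math.sinh(math.pi * (1.0 - 2.0 * y / n))))
    return lat, lon


if __name__ == "__main__":
    # Hypothetical detection box on a hypothetical tile.
    cx, cy = box_centre((100, 120, 140, 160))
    print(tile_pixel_to_latlon(tile_x=0, tile_y=0, zoom=0, px=cx, py=cy))
```

The accuracy of the recovered coordinates is bounded by the ground resolution of the tile at the chosen zoom level, so detections on low-zoom tiles would give only coarse junction positions.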

The crosswalk detection task has a variety of works using deep learning based computer vision approaches including classification, object detection, segmentation, and location detection. The environment mapping stage is more sophisticated than detecting the absence or existence of crosswalks. Therefore, appropriate directions are object detection, segmentation, and location detection approaches, as in theory they can all define crosswalk location. Only the location detection approach was tested for defining a start and end point of a crosswalk with an average angle error of 6.15° [62]. To the best of our knowledge, no paper discussed different shapes of the crosswalks (see Table 3).
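To make the angle error metric concrete, the sketch below shows one plausible way to compute it from predicted and ground-truth crosswalk start and end points. This section does not specify how [62] defines the metric, so the definition used here (absolute difference between the directions of the two start-to-end vectors, wrapped to at most 180°) and all function names are assumptions.

```python
import math
from typing import Iterable, Tuple

Point = Tuple[float, float]            # (x, y) in image coordinates
Segment = Tuple[Point, Point]          # (start, end) of a crosswalk


def direction_deg(start: Point, end: Point) -> float:
    """Direction of the vector start -> end, in degrees in (-180, 180]."""
    return math.degrees(math.atan2(end[1] - start[1], end[0] - start[0]))


def angle_error_deg(pred_start: Point, pred_end: Point,
                    true_start: Point, true_end: Point) -> float:
    """Absolute angular difference between predicted and true directions."""
    diff = direction_deg(pred_start, pred_end) - direction_deg(true_start, true_end)
    # Wrap into [-180, 180) so that 179 deg vs -179 deg counts as 2 deg, not 358.
    diff = (diff + 180.0) % 360.0 - 180.0
    return abs(diff)


def mean_angle_error_deg(pairs: Iterable[Tuple[Segment, Segment]]) -> float:
    """Average angle error over (predicted, ground-truth) segment pairs."""
    errors = [angle_error_deg(p[0], p[1], t[0], t[1]) for p, t in pairs]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    predicted = ((0.0, 0.0), (1.0, 1.0))
    truth = ((0.0, 0.0), (1.0, 0.0))
    print(angle_error_deg(*predicted, *truth))
```

Because the direction is taken from start point to end point, swapping the two points of a prediction would count as a 180° error; a metric that treats the crosswalk as an undirected line would need the error folded to at most 90°.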

5.7. Future Work for Environment Mapping

The environment mapping phase as a pre-stage for BVIP navigation needs to be addressed as a key area of BVIP navigation systems research. Approaches from other domains such as driver assistance and autonomous vehicles can be built upon to produce maps for BVIP navigation. Looking at the various approaches of intersection and crosswalk detection, object detection approaches hold promise for determining the type and location of each street component.

6. Journey Planning

Once the main components of an urban environment have been used to provide enriched maps (see details in Section 5), these maps will be used in the journey planning phase. The journey planning phase is used to plan the route to the user’s destination before starting their journey, helping the user to choose the optimal route, and providing a complete overview of the route before starting the journey. The following section will discuss research in support of journey planning in detail. The relative merits of the journey research approaches are then provided at the end of this section.

6.1. Localization

In the planning stage, a user has two options: (1) to obtain directions between two locations, or (2) to obtain directions between their current location and a destination. In the first option, the user will define a start and destination location. In the second one, the localization task is used to define their current location. Localization is an essential task in a variety of domains: robot navigation [118], automated cars [118], and BVIP navigation systems [23,80]. For BVIP, the precision of localization is significant because it affects the quality of instructions that are provided by a navigation system. The approaches of other applications are not precise enough for the safety of BVIP [59,113].

Indoor and outdoor localization systems employ different system architectures. Indoor approaches, such as radio frequency identification tags [119], active radio-frequency identification technology [24], and Bluetooth beacons [74,120], are not suitable for outdoor environments because they rely on a localized infrastructure that does not scale to outdoor settings. We identify two approaches to outdoor navigation systems, both of which are relevant to BVIP localization. Global Positioning Systems (GPS) are employed in assistive outdoor navigation systems to receive data about the location of the user from satellites [22,23,54,55,56,59,75,80]. Typical GPS accuracy, in the range of 20 metres, needs to be supplemented to pinpoint a more fine-grained location to support BVIP [73]. The authors of [73] employed an external GPS tracker to define the location of the user using a u-blox NEO-6M chip with a location accuracy of less than 0.4 m. A second approach is image-based positioning systems. This approach defines the location of a user by querying a captured image against a dataset that contains images and location information [36,37,38,58]. V-Eye [39] used visual simultaneous localization and mapping (SLAM) and model-based localization (MBL) to localize the BVIP with a median error of approximately 0.27 m.
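Localization errors such as those quoted above are distances between an estimated position and a reference position. As a minimal sketch of how such an error could be computed from latitude and longitude pairs, the code below uses the haversine great-circle distance and reports the median over a set of fixes. The cited works may measure error differently (for example in a local metric frame), so this is an illustrative assumption, and the coordinates in the demo are made up.

```python
import math
import statistics
from typing import Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres

LatLon = Tuple[float, float]  # (latitude, longitude) in decimal degrees


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points on a sphere."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2.0) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2.0) ** 2)
    return 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def median_error_m(estimates: Sequence[LatLon], truths: Sequence[LatLon]) -> float:
    """Median distance in metres between paired estimated and true positions."""
    errors = [haversine_m(e[0], e[1], t[0], t[1])
              for e, t in zip(estimates, truths)]
    return statistics.median(errors)


if __name__ == "__main__":
    # Made-up fixes for illustration only.
    estimated = [(53.34980, -6.26030), (53.34990, -6.26010), (53.35000, -6.26000)]
    reference = [(53.34981, -6.26031), (53.34988, -6.26012), (53.35003, -6.26004)]
    print(round(median_error_m(estimated, reference), 2), "m")
```

At the sub-metre scale relevant to BVIP, the spherical Earth approximation in the haversine formula is adequate for comparing systems, since its error is far smaller than the localization errors being measured.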

6.2. Route Selection

After defining a journey start point, the optimal route(s) from start point to destination are determined during route selection, allowing for distance, safety and the needs of BVIP users. Although this task is very important for BVIP, there is a limited amount of research that addresses it from the perspective of this user group [121,122]. Most BVIP outdoor navigation systems used available path finding services, such as QQMap [80], an open-source route planner [55] and BaiduMap [54], which select the shortest path without personalisation to allow for the BVIP’s preferences. We suggest that this is related to the lack of BVIP-relevant street information on maps (such as traffic lights and sidewalks), all of which is needed to choose the best path for our user base.

Route selection consists of pedestrian routing and public transportation as sub-tasks (see Section 7.4). Public transportation as part of journey planning does not appear in the literature [32,78,79]; therefore, the focus of this section is on pedestrian routing. Route selection is a significant task for the navigation of vehicles [123] and of pedestrians with and without disabilities [122].

Route selection algorithms divide into two approaches, namely static and dynamic approaches, depending on whether or not they consider the time of day (rush hour, morning, evening, etc.) [123]. As a general approach, the problem of route selection is solved in two steps. First, a graph is built, including nodes, edges that link the nodes, and weights to evaluate each segment. Second, a routing algorithm chooses the best route, allowing for predefined criteria assessed against weighted routes derived from the map [10].

In the literature, route selection has different terminology such as wayfinding, route planning, route recommendation, and path planning. Analysing the literature, we group the route selection approaches into two groups. The first is a Simple Distance Criteria approach, in which graph weights depend only on the distance between nodes, so the routing algorithms choose the shortest path. Different routing algorithms are employed for this problem, such as Dijkstra’s algorithm [74] and a particle swarm optimization strategy [124]. The second is a Customised Criteria approach, where graph weights reflect the accessibility of each edge as well as the distance between nodes, in order to choose the optimal path for the user. Cohen and Dalyot [121] used information about length, complexity, landmarks, and way type from Open Street Map to build a network-weighted graph and used Dijkstra’s algorithm to choose the best route. Fogli et al. [125] depended on accessibility information (gathered manually) and Google Maps services to navigate disabled people.
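The difference between the two groups can be shown with a small sketch: the same Dijkstra search is run over the same graph, and only the edge cost function changes. The graph, the attribute names (`length_m`, `has_sidewalk`, `uncontrolled_crossing`) and the penalty values are hypothetical and chosen purely for illustration; they are not taken from any of the cited systems.

```python
import heapq
from typing import Callable, Dict, List, Tuple

Edge = Dict[str, object]                    # edge attributes (hypothetical names)
Graph = Dict[str, List[Tuple[str, Edge]]]   # node -> [(neighbour, attributes)]


def distance_cost(edge: Edge) -> float:
    """Simple distance criterion: the weight is the segment length only."""
    return float(edge["length_m"])


def customised_cost(edge: Edge) -> float:
    """Customised criterion: length plus illustrative accessibility penalties."""
    cost = float(edge["length_m"])
    if not edge.get("has_sidewalk", True):
        cost *= 3.0                          # assumed penalty for missing sidewalk
    if edge.get("uncontrolled_crossing", False):
        cost += 200.0                        # assumed penalty per unsafe crossing
    return cost


def dijkstra(graph: Graph, source: str, target: str,
             cost: Callable[[Edge], float]) -> Tuple[float, List[str]]:
    """Return (total cost, node path) of the cheapest route, or (inf, [])."""
    best = {source: 0.0}
    previous: Dict[str, str] = {}
    queue = [(0.0, source)]
    while queue:
        dist, node = heapq.heappop(queue)
        if node == target:
            break
        if dist > best.get(node, float("inf")):
            continue                         # stale queue entry
        for neighbour, edge in graph.get(node, []):
            candidate = dist + cost(edge)
            if candidate < best.get(neighbour, float("inf")):
                best[neighbour] = candidate
                previous[neighbour] = node
                heapq.heappush(queue, (candidate, neighbour))
    if target not in best:
        return float("inf"), []
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return best[target], path[::-1]


# Toy street graph: the short route A-B-D includes an uncontrolled crossing.
TOY_GRAPH: Graph = {
    "A": [("B", {"length_m": 100.0, "uncontrolled_crossing": True}),
          ("C", {"length_m": 150.0})],
    "B": [("D", {"length_m": 100.0})],
    "C": [("D", {"length_m": 150.0})],
}

if __name__ == "__main__":
    print(dijkstra(TOY_GRAPH, "A", "D", distance_cost))
    print(dijkstra(TOY_GRAPH, "A", "D", customised_cost))
```

With the distance-only cost the search selects the shorter route through the uncontrolled crossing, whereas the customised cost prefers the longer route that avoids it. Keeping the cost function separate from the search makes it straightforward to plug in per-user profiles.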

We also reviewed orientation systems for other disabilities. For wheelchair users, Wheeler et al. [126] presented a sidewalk network that has accessibility information (width, length, slope, surface type, surface condition, and steps of each sidewalk segment), and Dijkstra’s algorithm calculated the best route depending on that information. Bravo and Giret [122] constructed a wayfinding system that depends on the user profile (the type of disability) to find the best route according to each disability.

For BVIP route planning, we suggest that a customised criteria approach is required for suitable journey planning, utilising the information generated from the environment mapping phase in addition to accessibility information.

6.3. Discussion of Journey Planning Research

Looking at localization, GPS locates a user to within 10–20 m [24], which is less precise than is ideally required to pinpoint the exact location of BVIP. In addition, GPS accuracy is further degraded by high buildings in crowded cities. The alternative, image-based localization, reaches a median error of approximately 0.27 m. However, it requires enormous effort to collect local images with location information, and it depends on the ability of a blind user to capture a stable image with which to query the image dataset. At this point in time, these data gathering and usability issues render the image-based approach unsuitable for BVIP localization.
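Localization error figures such as those quoted above are typically obtained by comparing estimated positions against ground-truth positions. A minimal Python sketch of one way to compute a median error is given below. It assumes positions are given as (latitude, longitude) pairs in degrees and uses the haversine great-circle distance with a mean Earth radius; the function names are hypothetical and the cited works may have used a different evaluation procedure.

```python
import math
from statistics import median

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def median_error_m(estimates, truths):
    """Median distance between paired estimated and ground-truth positions."""
    errors = [haversine_m(*est, *truth) for est, truth in zip(estimates, truths)]
    return median(errors)
```

A call such as `median_error_m(gps_fixes, surveyed_points)` would then return a single figure in metres that can be compared across localization methods.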

Looking at the research related to route selection, disabled people require enjoyable, safer paths that are appropriate to their needs (fewer turns, more traffic lights, and so on) rather than routes selected primarily on distance [127]. Therefore, customised criteria are considered a more promising approach than the simple distance-based approach. We also noted that most navigation systems used the centre of the street (centre lines), which negatively affects the accuracy of instructions for pedestrian navigation, particularly for BVIP [113]. While dynamic approaches depend on accessibility information, which increases user confidence about suggested routes, these approaches are not currently used in most navigation systems for BVIP [80]. Accessibility information plays a significant role in dynamic approaches, but most of it is gathered manually [121,125]. Although most of the navigation systems for BVIP used Dijkstra’s algorithm, its response time limits its suitability as the best option [128], especially on a large map. Finally, we note that navigation systems for BVIP did not incorporate public transportation into the journey planning phase.

6.4. Future Work for Journey Planning

For localization, using an external GPS tracker, as done by Meliones et al. [73], is a suitable way to determine the location of the user. As per the previously stated prerequisite for route selection, there is a need to build a system that can gather accessibility information automatically. We also identify that further investigation is needed to discover the most suitable algorithm for route selection problems in terms of response time. Finally, we suggest building a navigation system that supports route selection in any mode (walking or using public transportation) and uses dynamic route selection approaches to help BVIP choose their preferred route.

9. Applications and Devices

Whilst the main focus of this review is the active research work in BVIP and overlapping navigation systems work, we also include an overview of the applications and devices available for use by BVIP in real life. Table 7 presents a detailed and comprehensive comparison between them. For each one, we list its name, components, features, feedback/wearability/cost, and limitations. Components are the physical hardware components available to the user, while features summarise the functionality offered by the device. The output is stated in a feedback column, and a wearability column describes the carry mode of the device. Finally, the disadvantages of each one are given in the weak points column. These applications and devices can be divided according to carry mode into wearable and handheld categories.

Table 7.

Real navigation devices and applications.

9.1. Handheld

A handheld is a device or application that is held in the user’s hand. UltraCane [177] and SmartCane [173] are examples of handheld devices. Both are traditional canes with enhancements added to detect obstacles at all levels.

WeWalk [174] provides users with a cane that contains sensors to detect obstacles at all levels and a mobile app for navigation guidance. The mobile phone can be controlled through the cane, so one hand remains free. Nearby Explorer [181] gives information, such as distance and height, about objects that the user points to. PathVu Navigation [183] gives information only about obstacles that have been reported by other users, so a user must rely on traditional methods to detect other obstacles.

Aira [190] and Be My Eyes [191] are phone applications that provide support to BVIP in difficult situations, such as when lost or when faced with obstacles. These applications do not preserve user privacy.

9.2. Wearable

Some navigation applications or devices can be worn without occupying the user’s hands. Wearable devices such as Maptic [171] and Sunu Band [188] do not detect obstacles at all levels, so a user must also use another device, such as a cane. Horus [175], Envision Glasses [179], and Eye See [180], however, do not provide users with navigation guidance.

9.3. Discussion

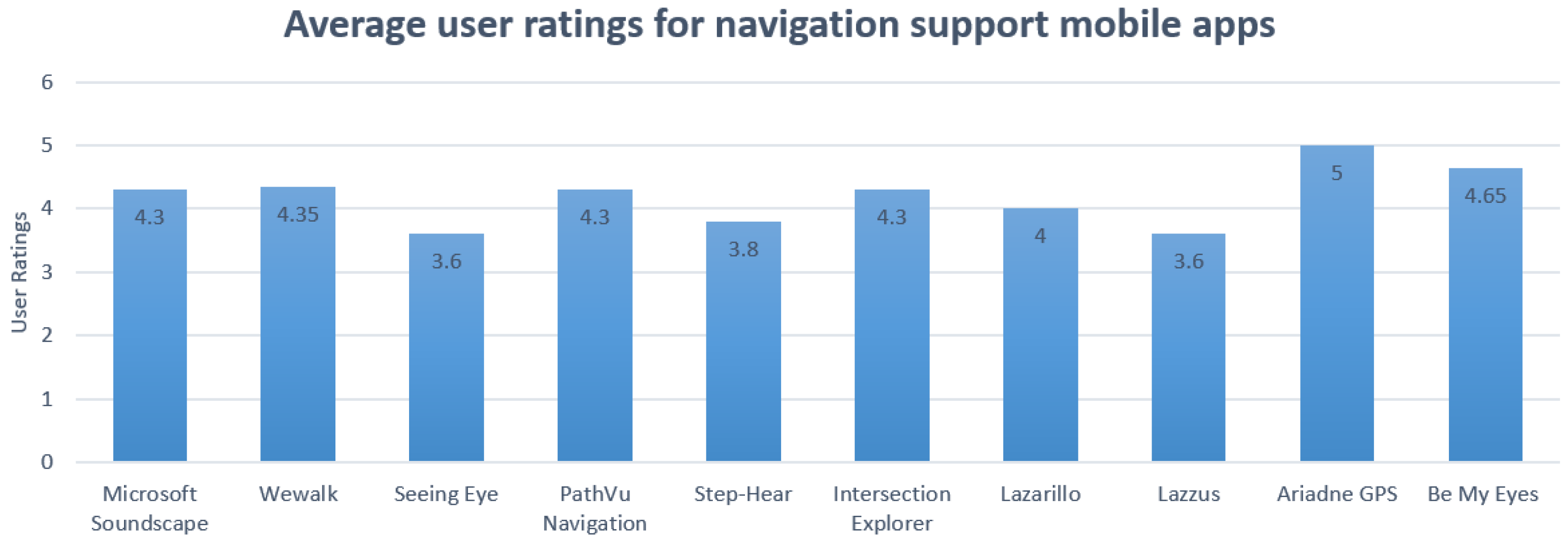

At present, the available applications and devices do not support all mandatory tasks for navigation activity. The majority of aid devices and applications support obstacle avoidance and guidance tasks (see Table 7). Although there are two means of feedback, the majority of applications provide feedback via audio. Using a headset for audio feedback raises the problem of blocking out other environmental sounds, but this can be solved using bone-conducting headphones. Most mobile applications are free, while other assistive navigation devices are not. Wearable devices, although not yet common, have the advantage of being hands-free. Real end-user experiences with available applications and devices are very important, but this kind of information is generally only available for mobile apps. We collected end-user ratings from the Google Play Store and the Apple App Store, taking the average rating of each, as shown in Figure 4.

Figure 4.

User experience for navigation support mobile apps.

10. Main Findings

The principal finding of our review is that although development has been done in this field, it is still some distance from producing complete and robust solutions for BVIP navigation support.

The previous analysis of the environment mapping phase demonstrates that various annotations need to be added to available maps. These annotations include safety-critical information on the location of PTLs, intersections, sidewalks, crosswalks, and public transportation (see Section 5.6). Localization of BVIP needs to yield highly precise locations, and typical GPS accuracy is not adequate. Selecting the optimal route for BVIP is not about finding the shortest path; it is about an enjoyable, safe, well-supported route appropriate to their needs (fewer turns, more traffic lights, and so on) [127]. Most of the navigation systems for BVIP do not discuss using public transportation. Accessibility information plays a major role in the route selection task, yet most of it is gathered manually (see Section 6.3).

Environment understanding is not included in the majority of aid systems. There is limited work done in the area of PTL recognition tasks. Each available obstacle avoidance system covers a limited number of hurdles, but it is not practical to use different systems at the same time to avoid each type of danger on the road. A more generalised approach to obstacle avoidance is required.

None of the available BVIP applications or devices is considered a comprehensive solution for BVIP (see Section 9.3). We also point out that differences exist in the terminology used in the area of navigation systems for BVIP (see Section 2.2).

10.1. Discussion

For benchmarking, one or more very large datasets are required, with a sufficient number of images for each type of intersection, crosswalk, PTL, sidewalk, scene, and obstacle. These images must be acquired under different conditions (illumination, shadow), at various times (day and night), in different countries, and with a diversity of conditions (objects partially occluding the crosswalks, shadows of other objects partially or completely darkening the road) and styles. The shortage of datasets not only influences the effectiveness of solutions for each task, it also means that there is no common way to compare solutions. Most algorithms are not available online to allow a fair comparison between current solutions. Apart from the features and tasks for BVIP navigation systems already covered, other aspects such as wearability, feedback, cost, and coverage need to be considered during the design stage. Users are reliant on their mobile devices when they are out walking, so energy consumption is a concern. A potentially widespread disadvantage of real devices and applications is that the user may need to use more than one device to cover all of their initial needs.

Most of the presented navigation systems were not tested by end-users. Consequently, the status of user satisfaction regarding the services provided by research on BVIP navigation systems is unknown. This is a critical point that needs to be covered for two reasons. First, it will enhance research in this domain according to users’ opinions. Secondly, it will encourage manufacturing of prototypes that meet users’ requirements. For real applications and devices, user ratings are available only for mobile apps, see Figure 4.

10.2. General Comparison

Electromagnetic/radar-based systems were found to outperform sensor-based systems, both of which are mainly used for obstacle avoidance tasks (see Table 1). The high frequency used in these systems corresponds to a shorter wavelength, which in turn leads to compact, lightweight circuits. In addition, they can differentiate between near objects and detect tiny gaps and hanging obstacles [193].

Camera-based systems are affected by weather and illumination conditions, but provide more detail about obstacles, such as shape and color. The advantage of smartphone-based systems is that one device contains different useful components that are needed for navigation tasks, such as a camera and GPS. These technologies are used in the majority of mandatory tasks required by BVIP navigation systems (see Table 1).

11. Conclusions and Future Work

Our review presents a comprehensive survey of outdoor BVIP navigation systems. Our paper improves on previous surveys by including a broad overview of the area and detailed investigations of the research completed for each stage. This provides a highly accessible way for other researchers to assess the scope of previous work done against the task area of interest, even if they are not concerned with the end-to-end navigation view. In each task, we investigate the algorithms used, research datasets, limitations, and future work. We clarify and explain the different terminology used in this field. In addition to research developments, we provide details about applications and devices that help BVIP in urban navigation.

In summary, more work is needed in this field to deliver a reliable and comprehensive navigation device for BVIP. We also emphasize the need to transfer learning from other domains, such as automated cars, driver assistance and robot navigation, to this domain. The design of navigation systems should consider other preferences, such as wearability and feedback. The deep learning-based methods described will require real-time network models, so power consumption will be a practical concern, relative to the type of device they are running on. For example, the feasibility of running real-time obstacle detection on a wearable camera device needs to be determined for the various methods in the literature. For now, most of the research is “lab-based”, focusing on achieving accurate results rather than dealing with the practical issues of power consumption and device deployment. These issues will need to be addressed as more complex deep learning solutions become the state of the art for wearable vision support systems.
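One simple way to begin assessing real-time feasibility on a target device is to time a detector on representative frames and compare the latency with the frame period. The Python sketch below is a hedged illustration: `dummy_detector` is a stand-in, not a real model, the frame data are placeholders, and the sketch measures latency only, not power consumption, which would require device-specific instrumentation.

```python
import time
from statistics import median


def measure_latency(process_frame, frames, warmup=2):
    """Return the per-frame latency in seconds of process_frame over frames."""
    for frame in frames[:warmup]:
        process_frame(frame)  # warm-up runs are not timed
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)
        latencies.append(time.perf_counter() - start)
    return latencies


def meets_realtime_budget(latencies, target_fps):
    """True if the median latency fits within one frame period at target_fps."""
    return median(latencies) <= 1.0 / target_fps


def dummy_detector(frame):
    # Stand-in for a real obstacle detector: it only sums the pixel values
    return sum(frame)


frames = [[0] * 1000 for _ in range(20)]  # placeholder "images"
latencies = measure_latency(dummy_detector, frames)
realtime_ok = meets_realtime_budget(latencies, target_fps=30)
```

Replacing `dummy_detector` with an actual model's inference call, and running the measurement on the wearable or mobile hardware itself, would give a first indication of whether a method from the literature can keep up with the camera frame rate.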

Author Contributions

Conceptualization, F.E.-z.E.-t. and S.M.; methodology, F.E.-z.E.-t. and S.M.; formal analysis, F.E.-z.E.-t., A.T. and S.M.; investigation, F.E.-z.E.-t.; writing—original draft preparation, F.E.-z.E.-t.; writing—review and editing, F.E.-z.E.-t., A.T., J.C. and S.M.; supervision, S.M.; project administration, S.M.; funding acquisition, S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This publication has emanated from research supported in part by a Grant from Science Foundation Ireland under Grant number 18/CRT/6222.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The study does not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- WHO. Visual Impairment and Blindness. Available online: https://www.who.int/en/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 25 November 2020).

- Mocanu, B.C.; Tapu, R.; Zaharia, T. DEEP-SEE FACE: A Mobile Face Recognition System Dedicated to Visually Impaired People. IEEE Access 2018, 6, 51975–51985. [Google Scholar] [CrossRef]

- Dunai Dunai, L.; Chillarón Pérez, M.; Peris-Fajarnés, G.; Lengua Lengua, I. Euro banknote recognition system for blind people. Sensors 2017, 17, 184. [Google Scholar] [CrossRef] [PubMed]

- Park, C.; Cho, S.W.; Baek, N.R.; Choi, J.; Park, K.R. Deep Feature-Based Three-Stage Detection of Banknotes and Coins for Assisting Visually Impaired People. IEEE Access 2020, 8, 184598–184613. [Google Scholar] [CrossRef]

- Tateno, K.; Takagi, N.; Sawai, K.; Masuta, H.; Motoyoshi, T. Method for Generating Captions for Clothing Images to Support Visually Impaired People. In Proceedings of the 2020 Joint 11th International Conference on Soft Computing and Intelligent Systems and 21st International Symposium on Advanced Intelligent Systems (SCIS-ISIS), Hachijo Island, Japan, 5–8 December 2020; pp. 1–5. [Google Scholar]

- Aladren, A.; López-Nicolás, G.; Puig, L.; Guerrero, J.J. Navigation Assistance for the Visually Impaired Using RGB-D Sensor with Range Expansion. IEEE Syst. J. 2016, 10, 922–932. [Google Scholar] [CrossRef]

- Alwi, S.R.A.W.; Ahmad, M.N. Survey on outdoor navigation system needs for blind people. In Proceedings of the 2013 IEEE Student Conference on Research and Developement, Putrajaya, Malaysia, 16–17 December 2013; pp. 144–148. [Google Scholar]

- Islam, M.M.; Sadi, M.S.; Zamli, K.Z.; Ahmed, M.M. Developing walking assistants for visually impaired people: A review. IEEE Sens. J. 2019, 19, 2814–2828. [Google Scholar] [CrossRef]

- Real, S.; Araujo, A. Navigation systems for the blind and visually impaired: Past work, challenges, and open problems. Sensors 2019, 19, 3404. [Google Scholar] [CrossRef]

- Fernandes, H.; Costa, P.; Filipe, V.; Paredes, H.; Barroso, J. A review of assistive spatial orientation and navigation technologies for the visually impaired. Univers. Access Inf. Soc. 2019, 18, 155–168. [Google Scholar] [CrossRef]

- Paiva, S.; Gupta, N. Technologies and Systems to Improve Mobility of Visually Impaired People: A State of the Art. In Technological Trends in Improved Mobility of the Visually Impaired; Paiva, S., Ed.; Springer: Berlin/Heidelberg, Germany, 2020; pp. 105–123. [Google Scholar]

- Mohamed, A.M.A.; Hussein, M.A. Survey on obstacle detection and tracking system for the visual impaired. Int. J. Recent Trends Eng. Res. 2016, 2, 230–234. [Google Scholar]

- Lakde, C.K.; Prasad, P.S. Review paper on navigation system for visually impaired people. Int. J. Adv. Res. Comput. Commun. Eng. 2015, 4, 166–168. [Google Scholar] [CrossRef]

- Duarte, K.; Cecílio, J.; Furtado, P. Overview of assistive technologies for the blind: Navigation and shopping. In Proceedings of the International Conference on Control Automation Robotics & Vision (ICARCV), Singapore, 10–12 December 2014; pp. 1929–1934. [Google Scholar]

- Manjari, K.; Verma, M.; Singal, G. A Survey on Assistive Technology for Visually Impaired. Internet Things 2020, 11, 100188. [Google Scholar] [CrossRef]

- Tapu, R.; Mocanu, B.; Tapu, E. A survey on wearable devices used to assist the visual impaired user navigation in outdoor environments. In Proceedings of the International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 14–15 November 2014; pp. 1–4. [Google Scholar]

- Fei, Z.; Yang, E.; Hu, H.; Zhou, H. Review of machine vision-based electronic travel aids. In Proceedings of the 23rd International Conference on Automation and Computing (ICAC), Huddersfield, UK, 7–8 September 2017; pp. 1–7. [Google Scholar]

- Budrionis, A.; Plikynas, D.; Daniušis, P.; Indrulionis, A. Smartphone-based computer vision travelling aids for blind and visually impaired individuals: A systematic review. Assist. Technol. 2020, 1–17. [Google Scholar] [CrossRef]

- Kuriakose, B.; Shrestha, R.; Sandnes, F.E. Smartphone Navigation Support for Blind and Visually Impaired People-A Comprehensive Analysis of Potentials and Opportunities. In International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2020; pp. 568–583. [Google Scholar]

- Petrie, H.; Johnson, V.; Strothotte, T.; Raab, A.; Fritz, S.; Michel, R. MoBIC: Designing a travel aid for blind and elderly people. J. Navig. 1996, 49, 45–52. [Google Scholar] [CrossRef]

- Dakopoulos, D.; Bourbakis, N.G. Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2009, 40, 25–35. [Google Scholar] [CrossRef]

- Kaushalya, V.; Premarathne, K.; Shadir, H.; Krithika, P.; Fernando, S. ‘AKSHI’: Automated help aid for visually impaired people using obstacle detection and GPS technology. Int. J. Sci. Res. Publ. 2016, 6, 110. [Google Scholar]

- Meshram, V.V.; Patil, K.; Meshram, V.A.; Shu, F.C. An astute assistive device for mobility and object recognition for visually impaired people. IEEE Trans. Hum. Mach. Syst. 2019, 49, 449–460. [Google Scholar] [CrossRef]

- Alghamdi, S.; van Schyndel, R.; Khalil, I. Accurate positioning using long range active RFID technology to assist visually impaired people. J. Netw. Comput. Appl. 2014, 41, 135–147. [Google Scholar] [CrossRef]

- Jeong, G.Y.; Yu, K.H. Multi-section sensing and vibrotactile perception for walking guide of visually impaired person. Sensors 2016, 16, 1070. [Google Scholar] [CrossRef] [PubMed]

- Chun, A.C.B.; Al Mahmud, A.; Theng, L.B.; Yen, A.C.W. Wearable Ground Plane Hazards Detection and Recognition System for the Visually Impaired. In Proceedings of the 2019 International Conference on E-Society, E-Education and E-Technology, Taipei, Taiwan, 15–17 August 2019; pp. 84–89. [Google Scholar]

- Rahman, M.A.; Sadi, M.S.; Islam, M.M.; Saha, P. Design and Development of Navigation Guide for Visually Impaired People. In Proceedings of the IEEE International Conference on Biomedical Engineering, Computer and Information Technology for Health (BECITHCON), Dhaka, Bangladesh, 28–30 November 2019; pp. 89–92. [Google Scholar]

- Chang, W.J.; Chen, L.B.; Chen, M.C.; Su, J.P.; Sie, C.Y.; Yang, C.H. Design and Implementation of an Intelligent Assistive System for Visually Impaired People for Aerial Obstacle Avoidance and Fall Detection. IEEE Sen. J. 2020, 20, 10199–10210. [Google Scholar] [CrossRef]

- Kwiatkowski, P.; Jaeschke, T.; Starke, D.; Piotrowsky, L.; Deis, H.; Pohl, N. A concept study for a radar-based navigation device with sector scan antenna for visually impaired people. In Proceedings of the 2017 First IEEE MTT-S International Microwave Bio Conference (IMBIOC), Gothenburg, Sweden, 15–17 May 2017; pp. 1–4. [Google Scholar]

- Sohl-Dickstein, J.; Teng, S.; Gaub, B.M.; Rodgers, C.C.; Li, C.; DeWeese, M.R.; Harper, N.S. A device for human ultrasonic echolocation. IEEE Trans. Biomed. Eng. 2015, 62, 1526–1534. [Google Scholar] [CrossRef] [PubMed]

- Patil, K.; Jawadwala, Q.; Shu, F.C. Design and construction of electronic aid for visually impaired people. IEEE Trans. Hum. Mach. Syst. 2018, 48, 172–182. [Google Scholar] [CrossRef]

- Sáez, Y.; Muñoz, J.; Canto, F.; García, A.; Montes, H. Assisting Visually Impaired People in the Public Transport System through RF-Communication and Embedded Systems. Sensors 2019, 19, 1282. [Google Scholar] [CrossRef]

- Cardillo, E.; Di Mattia, V.; Manfredi, G.; Russo, P.; De Leo, A.; Caddemi, A.; Cerri, G. An electromagnetic sensor prototype to assist visually impaired and blind people in autonomous walking. IEEE Sens. J. 2018, 18, 2568–2576. [Google Scholar] [CrossRef]

- Pisa, S.; Pittella, E.; Piuzzi, E. Serial patch array antenna for an FMCW radar housed in a white cane. Int. J. Antennas Propag. 2016, 2016. [Google Scholar] [CrossRef]

- Kiuru, T.; Metso, M.; Utriainen, M.; Metsävainio, K.; Jauhonen, H.M.; Rajala, R.; Savenius, R.; Ström, M.; Jylhä, T.N.; Juntunen, R.; et al. Assistive device for orientation and mobility of the visually impaired based on millimeter wave radar technology—Clinical investigation results. Cogent Eng. 2018, 5, 1450322. [Google Scholar] [CrossRef]

- Cheng, R.; Hu, W.; Chen, H.; Fang, Y.; Wang, K.; Xu, Z.; Bai, J. Hierarchical visual localization for visually impaired people using multimodal images. Expert Syst. Appl. 2021, 165, 113743. [Google Scholar] [CrossRef]

- Lin, S.; Cheng, R.; Wang, K.; Yang, K. Visual localizer: Outdoor localization based on convnet descriptor and global optimization for visually impaired pedestrians. Sensors 2018, 18, 2476. [Google Scholar] [CrossRef]

- Fang, Y.; Yang, K.; Cheng, R.; Sun, L.; Wang, K. A Panoramic Localizer Based on Coarse-to-Fine Descriptors for Navigation Assistance. Sensors 2020, 20, 4177. [Google Scholar] [CrossRef]

- Duh, P.J.; Sung, Y.C.; Chiang, L.Y.F.; Chang, Y.J.; Chen, K.W. V-Eye: A Vision-based Navigation System for the Visually Impaired. IEEE Trans. Multimed. 2020. [Google Scholar] [CrossRef]

- Hairuman, I.F.B.; Foong, O.M. OCR signage recognition with skew & slant correction for visually impaired people. In Proceedings of the International Conference on Hybrid Intelligent Systems (HIS), Malacca, Malaysia, 5–8 December 2011; pp. 306–310. [Google Scholar]

- Devi, P.; Saranya, B.; Abinayaa, B.; Kiruthikamani, G.; Geethapriya, N. Wearable Aid for Assisting the Blind. Methods 2016, 3. [Google Scholar] [CrossRef]

- Bazi, Y.; Alhichri, H.; Alajlan, N.; Melgani, F. Scene Description for Visually Impaired People with Multi-Label Convolutional SVM Networks. Appl. Sci. 2019, 9, 5062. [Google Scholar] [CrossRef]

- Mishra, A.A.; Madhurima, C.; Gautham, S.M.; James, J.; Annapurna, D. Environment Descriptor for the Visually Impaired. In Proceedings of the International Conference on Advances in Computing, Communications and Informatics (ICACCI), Bangalore, India, 19–22 September 2018; pp. 1720–1724. [Google Scholar]

- Lin, Y.; Wang, K.; Yi, W.; Lian, S. Deep learning based wearable assistive system for visually impaired people. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Korea, 27 October–2 November 2019. [Google Scholar]

- Younis, O.; Al-Nuaimy, W.; Rowe, F.; Alomari, M.H. A smart context-aware hazard attention system to help people with peripheral vision loss. Sensors 2019, 19, 1630. [Google Scholar] [CrossRef]

- Elmannai, W.; Elleithy, K.M. A novel obstacle avoidance system for guiding the visually impaired through the use of fuzzy control logic. In Proceedings of the IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 12–15 January 2018; pp. 1–9. [Google Scholar]

- Yang, K.; Wang, K.; Bergasa, L.M.; Romera, E.; Hu, W.; Sun, D.; Sun, J.; Cheng, R.; Chen, T.; López, E. Unifying terrain awareness for the visually impaired through real-time semantic segmentation. Sensors 2018, 18, 1506. [Google Scholar] [CrossRef]

- Kang, M.C.; Chae, S.H.; Sun, J.Y.; Lee, S.H.; Ko, S.J. An enhanced obstacle avoidance method for the visually impaired using deformable grid. IEEE Trans. Consum. Electron. 2017, 63, 169–177. [Google Scholar] [CrossRef]

- Kang, M.C.; Chae, S.H.; Sun, J.Y.; Yoo, J.W.; Ko, S.J. A novel obstacle detection method based on deformable grid for the visually impaired. IEEE Trans. Consum. Electron. 2015, 61, 376–383. [Google Scholar] [CrossRef]

- Poggi, M.; Mattoccia, S. A wearable mobility aid for the visually impaired based on embedded 3d vision and deep learning. In Proceedings of the IEEE Symposium on Computers and Communication (ISCC), Messina, Italy, 27–30 June 2016; pp. 208–213. [Google Scholar]

- Cheng, R.; Wang, K.; Lin, S. Intersection Navigation for People with Visual Impairment. In International Conference on Computers Helping People with Special Needs; Springer: Berlin/Heidelberg, Germany, 2018; pp. 78–85. [Google Scholar]

- Cheng, R.; Wang, K.; Yang, K.; Long, N.; Bai, J.; Liu, D. Real-time pedestrian crossing lights detection algorithm for the visually impaired. Multimed. Tools Appl. 2018, 77, 20651–20671. [Google Scholar] [CrossRef]

- Li, X.; Cui, H.; Rizzo, J.R.; Wong, E.; Fang, Y. Cross-Safe: A computer vision-based approach to make all intersection-related pedestrian signals accessible for the visually impaired. In Science and Information Conference; Springer: Berlin/Heidelberg, Germany, 2019; pp. 132–146. [Google Scholar]

- Chen, Q.; Wu, L.; Chen, Z.; Lin, P.; Cheng, S.; Wu, Z. Smartphone Based Outdoor Navigation and Obstacle Avoidance System for the Visually Impaired. In International Conference on Multi-disciplinary Trends in Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2019; pp. 26–37. [Google Scholar]

- Velazquez, R.; Pissaloux, E.; Rodrigo, P.; Carrasco, M.; Giannoccaro, N.I.; Lay-Ekuakille, A. An outdoor navigation system for blind pedestrians using GPS and tactile-foot feedback. Appl. Sci. 2018, 8, 578. [Google Scholar] [CrossRef]

- Spiers, A.J.; Dollar, A.M. Outdoor pedestrian navigation assistance with a shape-changing haptic interface and comparison with a vibrotactile device. In Proceedings of the 2016 IEEE Haptics Symposium (HAPTICS), Philadelphia, PA, USA, 8–11 April 2016; pp. 34–40. [Google Scholar]

- Bai, J.; Liu, D.; Su, G.; Fu, Z. A cloud and vision-based navigation system used for blind people. In Proceedings of the 2017 International Conference on Artificial Intelligence, Automation and Control Technologies, Wuhan, China, 7–9 April 2017; pp. 1–6. [Google Scholar]

- Cheng, R.; Wang, K.; Bai, J.; Xu, Z. Unifying Visual Localization and Scene Recognition for People With Visual Impairment. IEEE Access 2020, 8, 64284–64296. [Google Scholar] [CrossRef]

- Gintner, V.; Balata, J.; Boksansky, J.; Mikovec, Z. Improving reverse geocoding: Localization of blind pedestrians using conversational ui. In Proceedings of the 2017 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Debrecen, Hungary, 11–14 September 2017; pp. 000145–000150. [Google Scholar]

- Shadi, S.; Hadi, S.; Nazari, M.A.; Hardt, W. Outdoor navigation for visually impaired based on deep learning. Proc. CEUR Workshop Proc. 2019, 2514, 97–406. [Google Scholar]

- Lin, B.S.; Lee, C.C.; Chiang, P.Y. Simple smartphone-based guiding system for visually impaired people. Sensors 2017, 17, 1371. [Google Scholar] [CrossRef] [PubMed]

- Yu, S.; Lee, H.; Kim, J. Street Crossing Aid Using Light-Weight CNNs for the Visually Impaired. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27–28 October 2019; pp. 2593–2601. [Google Scholar]

- Ghilardi, M.C.; Simoes, G.S.; Wehrmann, J.; Manssour, I.H.; Barros, R.C. Real-Time Detection of Pedestrian Traffic Lights for Visually-Impaired People. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8. [Google Scholar]

- Ash, R.; Ofri, D.; Brokman, J.; Friedman, I.; Moshe, Y. Real-time pedestrian traffic light detection. In Proceedings of the IEEE International Conference on the Science of Electrical Engineering in Israel (ICSEE), Eilat, Israel, 12–14 December 2018; pp. 1–5. [Google Scholar]

- Ghilardi, M.C.; Junior, J.J.; Manssour, I.H. Crosswalk Localization from Low Resolution Satellite Images to Assist Visually Impaired People. IEEE Comput. Graph. Appl. 2018, 38, 30–46. [Google Scholar] [CrossRef] [PubMed]

- Yu, C.; Li, Y.; Huang, T.Y.; Hsieh, W.A.; Lee, S.Y.; Yeh, I.H.; Lin, G.K.; Yu, N.H.; Tang, H.H.; Chang, Y.J. BusMyFriend: Designing a bus reservation service for people with visual impairments in Taipei. In Proceedings of Companion Publication of the 2020 ACM Designing Interactive Systems Conference; ACM: New York, NY, USA, 2020; pp. 91–96. [Google Scholar]

- Ni, D.; Song, A.; Tian, L.; Xu, X.; Chen, D. A walking assistant robotic system for the visually impaired based on computer vision and tactile perception. Int. J. Soc. Robot. 2015, 7, 617–628. [Google Scholar] [CrossRef]

- Joshi, R.C.; Yadav, S.; Dutta, M.K.; Travieso-Gonzalez, C.M. Efficient Multi-Object Detection and Smart Navigation Using Artificial Intelligence for Visually Impaired People. Entropy 2020, 22, 941. [Google Scholar] [CrossRef]

- Vera, D.; Marcillo, D.; Pereira, A. Blind guide: Anytime, anywhere solution for guiding blind people. In World Conference on Information Systems and Technologies; Springer: Berlin/Heidelberg, Germany, 2017; pp. 353–363. [Google Scholar]

- Islam, M.M.; Sadi, M.S.; Bräunl, T. Automated walking guide to enhance the mobility of visually impaired people. IEEE Trans. Med. Robot. Bionics 2020, 2, 485–496. [Google Scholar] [CrossRef]

- Martinez, M.; Roitberg, A.; Koester, D.; Stiefelhagen, R.; Schauerte, B. Using technology developed for autonomous cars to help navigate blind people. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 1424–1432. [Google Scholar]

- Long, N.; Wang, K.; Cheng, R.; Hu, W.; Yang, K. Unifying obstacle detection, recognition, and fusion based on millimeter wave radar and RGB-depth sensors for the visually impaired. Rev. Sci. Instrum. 2019, 90, 044102. [Google Scholar] [CrossRef]

- Meliones, A.; Filios, C. Blindhelper: A pedestrian navigation system for blinds and visually impaired. In Proceedings of the ACM International Conference on PErvasive Technologies Related to Assistive Environments, Corfu Island, Greece, 29 June–1 July 2016; pp. 1–4. [Google Scholar]

- Ahmetovic, D.; Gleason, C.; Ruan, C.; Kitani, K.; Takagi, H.; Asakawa, C. NavCog: A navigational cognitive assistant for the blind. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 90–99. [Google Scholar]

- Elmannai, W.; Elleithy, K.M. A Highly Accurate and Reliable Data Fusion Framework for Guiding the Visually Impaired. IEEE Access 2018, 6, 33029–33054. [Google Scholar] [CrossRef]

- Mocanu, B.C.; Tapu, R.; Zaharia, T.B. When Ultrasonic Sensors and Computer Vision Join Forces for Efficient Obstacle Detection and Recognition. Sensors 2016, 16, 1807. [Google Scholar] [CrossRef]

- Shangguan, L.; Yang, Z.; Zhou, Z.; Zheng, X.; Wu, C.; Liu, Y. Crossnavi: Enabling real-time crossroad navigation for the blind with commodity phones. In Proceedings of ACM International Joint Conference on Pervasive and Ubiquitous Computing; ACM: New York, NY, USA, 2014; pp. 787–798. [Google Scholar]

- Flores, G.H.; Manduchi, R. A public transit assistant for blind bus passengers. IEEE Pervasive Comput. 2018, 17, 49–59. [Google Scholar] [CrossRef]

- Shingte, S.; Patil, R. A Passenger Bus Alert and Accident System for Blind Person Navigational. Int. J. Sci. Res. Sci. Technol. 2018, 4, 282–288. [Google Scholar]

- Bai, J.; Liu, Z.; Lin, Y.; Li, Y.; Lian, S.; Liu, D. Wearable travel aid for environment perception and navigation of visually impaired people. Electronics 2019, 8, 697. [Google Scholar] [CrossRef]

- Guth, D.A.; Barlow, J.M.; Ponchillia, P.E.; Rodegerdts, L.A.; Kim, D.S.; Lee, K.H. An intersection database facilitates access to complex signalized intersections for pedestrians with vision disabilities. Transp. Res. Rec. 2019, 2673, 698–709.

- Zhou, Q.; Li, Z. Experimental analysis of various types of road intersections for interchange detection. Trans. GIS 2015, 19, 19–41.

- Dai, J.; Wang, Y.; Li, W.; Zuo, Y. Automatic Method for Extraction of Complex Road Intersection Points from High-resolution Remote Sensing Images Based on Fuzzy Inference. IEEE Access 2020, 8, 39212–39224.

- Bhatt, D.; Sodhi, D.; Pal, A.; Balasubramanian, V.; Krishna, M. Have I reached the intersection: A deep learning-based approach for intersection detection from monocular cameras. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4495–4500.

- Baumann, U.; Huang, Y.Y.; Gläser, C.; Herman, M.; Banzhaf, H.; Zöllner, J.M. Classifying road intersections using transfer-learning on a deep neural network. In Proceedings of the International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 683–690.

- Saeedimoghaddam, M.; Stepinski, T.F. Automatic extraction of road intersection points from USGS historical map series using deep convolutional neural networks. Int. J. Geogr. Inf. Sci. 2019, 34, 947–968.

- Tümen, V.; Ergen, B. Intersections and crosswalk detection using deep learning and image processing techniques. Phys. A Stat. Mech. Appl. 2020, 543, 123510.

- Kumar, A.; Gupta, G.; Sharma, A.; Krishna, K.M. Towards view-invariant intersection recognition from videos using deep network ensembles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1053–1060.

- Bock, J.; Krajewski, R.; Moers, T.; Runde, S.; Vater, L.; Eckstein, L. The inD dataset: A drone dataset of naturalistic road user trajectories at German intersections. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1929–1934.

- Wang, J.; Wang, C.; Song, X.; Raghavan, V. Automatic intersection and traffic rule detection by mining motor-vehicle GPS trajectories. Comput. Environ. Urban Syst. 2017, 64, 19–29.

- Rebai, K.; Achour, N.; Azouaoui, O. Road intersection detection and classification using hierarchical SVM classifier. Adv. Robot. 2014, 28, 929–941.

- Oeljeklaus, M.; Hoffmann, F.; Bertram, T. A combined recognition and segmentation model for urban traffic scene understanding. In Proceedings of the International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–6.

- Koji, T.; Kanji, T. Deep Intersection Classification Using First and Third Person Views. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 454–459.

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 Year, 1000 km: The Oxford RobotCar Dataset. Int. J. Robot. Res. 2017, 36, 3–15.

- Lara Dataset. Available online: http://www.lara.prd.fr/benchmarks/trafficlightsrecognition (accessed on 27 November 2020).

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- GrandTheftAutoV. Available online: https://en.wikipedia.org/wiki/Development_of_Grand_Theft_Auto_V (accessed on 25 November 2020).

- Mapillary. Available online: https://www.mapillary.com/app (accessed on 25 November 2020).

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Krylov, V.A.; Kenny, E.; Dahyot, R. Automatic discovery and geotagging of objects from street view imagery. Remote Sens. 2018, 10, 661.

- Krylov, V.A.; Dahyot, R. Object geolocation from crowdsourced street level imagery. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases; Springer: Berlin/Heidelberg, Germany, 2018; pp. 79–83.

- Kurath, S.; Gupta, R.D.; Keller, S. OSMDeepOD-Object Detection on Orthophotos with and for VGI. GI_Forum 2017, 2, 173–188.

- Riveiro, B.; González-Jorge, H.; Martínez-Sánchez, J.; Díaz-Vilariño, L.; Arias, P. Automatic detection of zebra crossings from mobile LiDAR data. Opt. Laser Technol. 2015, 70, 63–70.

- Intersection Perception Through Real-Time Semantic Segmentation to Assist Navigation of Visually Impaired Pedestrians. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; pp. 1034–1039.

- Berriel, R.F.; Lopes, A.T.; de Souza, A.F.; Oliveira-Santos, T. Deep Learning-Based Large-Scale Automatic Satellite Crosswalk Classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1513–1517.

- Wu, X.H.; Hu, R.; Bao, Y.Q. Block-Based Hough Transform for Recognition of Zebra Crossing in Natural Scene Images. IEEE Access 2019, 7, 59895–59902.

- Ahmetovic, D.; Manduchi, R.; Coughlan, J.M.; Mascetti, S. Mind Your Crossings: Mining GIS Imagery for Crosswalk Localization. ACM Trans. Access. Comput. 2017, 9, 1–25.

- Berriel, R.F.; Rossi, F.S.; de Souza, A.F.; Oliveira-Santos, T. Automatic large-scale data acquisition via crowdsourcing for crosswalk classification: A deep learning approach. Comput. Graph. 2017, 68, 32–42.

- Malbog, M.A. MASK R-CNN for Pedestrian Crosswalk Detection and Instance Segmentation. In Proceedings of the IEEE International Conference on Engineering Technologies and Applied Sciences (ICETAS), Kuala Lumpur, Malaysia, 20–21 December 2019; pp. 1–5.

- Neuhold, G.; Ollmann, T.; Rota Bulo, S.; Kontschieder, P. The Mapillary Vistas dataset for semantic understanding of street scenes. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5000–5009.

- Cheng, R.; Wang, K.; Yang, K.; Long, N.; Hu, W.; Chen, H.; Bai, J.; Liu, D. Crosswalk navigation for people with visual impairments on a wearable device. J. Electron. Imaging 2017, 26, 053025.

- Pedestrian-Traffic-Lane (PTL) Dataset. Available online: https://github.com/samuelyu2002/ImVisible (accessed on 27 November 2020).

- Zimmermann-Janschitz, S. The Application of Geographic Information Systems to Support Wayfinding for People with Visual Impairments or Blindness. In Visual Impairment and Blindness: What We Know and What We Have to Know; IntechOpen: London, UK, 2019.

- Hara, K.; Azenkot, S.; Campbell, M.; Bennett, C.L.; Le, V.; Pannella, S.; Moore, R.; Minckler, K.; Ng, R.H.; Froehlich, J.E. Improving public transit accessibility for blind riders by crowdsourcing bus stop landmark locations with Google Street View: An extended analysis. ACM Trans. Access. Comput. 2015, 6, 1–23.

- Cáceres, P.; Sierra-Alonso, A.; Vela, B.; Cavero, J.M.; Ángel Garrido, M.; Cuesta, C.E. Adding Semantics to Enrich Public Transport and Accessibility Data from the Web. Open J. Web Technol. 2020, 7, 1–18.

- Mirri, S.; Prandi, C.; Salomoni, P.; Callegati, F.; Campi, A. On Combining Crowdsourcing, Sensing and Open Data for an Accessible Smart City. In Proceedings of the Eighth International Conference on Next Generation Mobile Apps, Services and Technologies, Oxford, UK, 10–12 September 2014; pp. 294–299.

- Low, W.Y.; Cao, M.; De Vos, J.; Hickman, R. The journey experience of visually impaired people on public transport in London. Transp. Policy 2020, 97, 137–148.

- Arroyo, R.; Alcantarilla, P.F.; Bergasa, L.M.; Romera, E. Are you able to perform a life-long visual topological localization? Auton. Robot. 2018, 42, 665–685.

- Tang, X.; Chen, Y.; Zhu, Z.; Lu, X. A visual aid system for the blind based on RFID and fast symbol recognition. In Proceedings of the International Conference on Pervasive Computing and Applications, Port Elizabeth, South Africa, 26–28 October 2011; pp. 184–188.

- Kim, J.E.; Bessho, M.; Kobayashi, S.; Koshizuka, N.; Sakamura, K. Navigating visually impaired travelers in a large train station using smartphone and bluetooth low energy. In Proceedings of the Annual ACM Symposium on Applied Computing; ACM: New York, NY, USA, 2016; pp. 604–611.

- Cohen, A.; Dalyot, S. Route planning for blind pedestrians using OpenStreetMap. Environ. Plan. B Urban Anal. City Sci. 2020.

- Bravo, A.P.; Giret, A. Recommender System of Walking or Public Transportation Routes for Disabled Users. In International Conference on Practical Applications of Agents and Multi-Agent Systems; Springer: Berlin/Heidelberg, Germany, 2018; pp. 392–403.

- Hendawi, A.M.; Rustum, A.; Ahmadain, A.A.; Hazel, D.; Teredesai, A.; Oliver, D.; Ali, M.; Stankovic, J.A. Smart personalized routing for smart cities. In Proceedings of the International Conference on Data Engineering (ICDE), San Diego, CA, USA, 19–22 April 2017; pp. 1295–1306.

- Yusof, T.; Toha, S.F.; Yusof, H.M. Path planning for visually impaired people in an unfamiliar environment using particle swarm optimization. Procedia Comput. Sci. 2015, 76, 80–86.

- Fogli, D.; Arenghi, A.; Gentilin, F. A universal design approach to wayfinding and navigation. Multimed. Tools Appl. 2020, 79, 33577–33601.

- Wheeler, B.; Syzdykbayev, M.; Karimi, H.A.; Gurewitsch, R.; Wang, Y. Personalized accessible wayfinding for people with disabilities through standards and open geospatial platforms in smart cities. Open Geospat. Data Softw. Stand. 2020, 5, 1–15.

- Gupta, M.; Abdolrahmani, A.; Edwards, E.; Cortez, M.; Tumang, A.; Majali, Y.; Lazaga, M.; Tarra, S.; Patil, P.; Kuber, R.; et al. Towards More Universal Wayfinding Technologies: Navigation Preferences Across Disabilities. In Proceedings of the CHI Conference on Human Factors in Computing Systems; ACM: New York, NY, USA, 2020; pp. 1–13.

- Jung, J.; Park, S.; Kim, Y.; Park, S. Route Recommendation with Dynamic User Preference on Road Networks. In Proceedings of the International Conference on Big Data and Smart Computing (BigComp), Kyoto, Japan, 27 February–2 March 2019; pp. 1–7.

- Hossain, M.Z.; Sohel, F.; Shiratuddin, M.F.; Laga, H. A comprehensive survey of deep learning for image captioning. ACM Comput. Surv. 2019, 51, 1–36.

- Matsuzaki, S.; Yamazaki, K.; Hara, Y.; Tsubouchi, T. Traversable Region Estimation for Mobile Robots in an Outdoor Image. J. Intell. Robot. Syst. 2018, 92, 453–463.

- Yang, K.; Wang, K.; Cheng, R.; Hu, W.; Huang, X.; Bai, J. Detecting traversable area and water hazards for the visually impaired with a pRGB-D sensor. Sensors 2017, 17, 1890.

- Yang, K.; Wang, K.; Hu, W.; Bai, J. Expanding the detection of traversable area with RealSense for the visually impaired. Sensors 2016, 16, 1954.

- Chang, N.H.; Chien, Y.H.; Chiang, H.H.; Wang, W.Y.; Hsu, C.C. A Robot Obstacle Avoidance Method Using Merged CNN Framework. In Proceedings of the International Conference on Machine Learning and Cybernetics (ICMLC), Kobe, Japan, 7–10 July 2019; pp. 1–5.

- Mancini, M.; Costante, G.; Valigi, P.; Ciarfuglia, T.A. Fast robust monocular depth estimation for Obstacle Detection with fully convolutional networks. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 4296–4303.

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- The PASCAL Visual Object Classes Challenge. 2007. Available online: http://host.robots.ox.ac.uk/pascal/VOC/voc2007/ (accessed on 27 November 2020).

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

- Jensen, M.B.; Nasrollahi, K.; Moeslund, T.B. Evaluating state-of-the-art object detector on challenging traffic light data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 9–15.

- Rothaus, K.; Roters, J.; Jiang, X. Localization of pedestrian lights on mobile devices. In Proceedings of the Asia-Pacific Signal and Information Processing Association, 2009 Annual Summit and Conference, Lanzhou, China, 18–21 November 2009; pp. 398–405.

- Fernández, C.; Guindel, C.; Salscheider, N.O.; Stiller, C. A deep analysis of the existing datasets for traffic light state recognition. In Proceedings of the International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 248–254.