Observational and Accelerometer Analysis of Head Movement Patterns in Psychotherapeutic Dialogue †

Abstract

1. Introduction

2. Materials and Methods

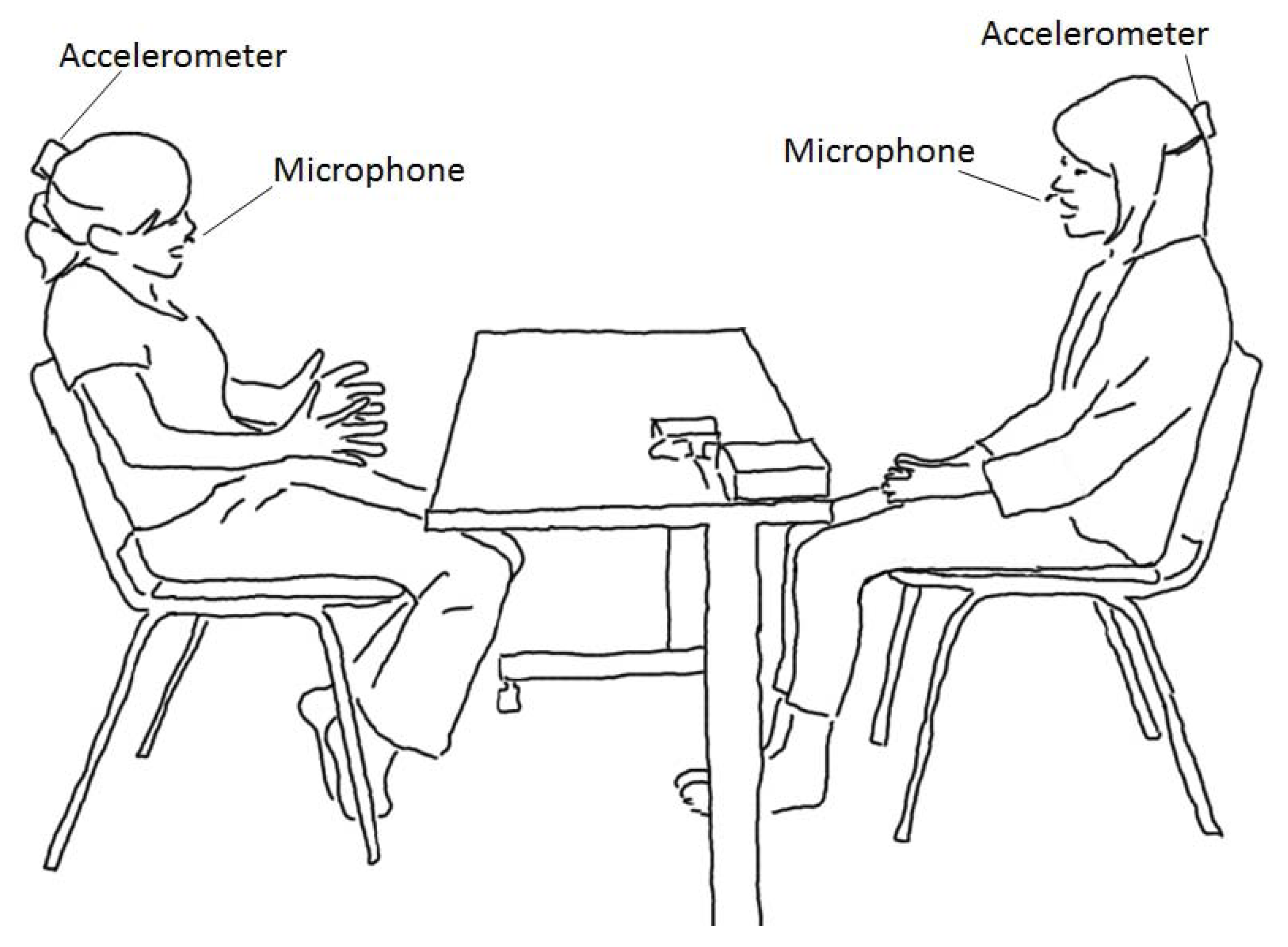

2.1. Data Collection

Data Acquisition

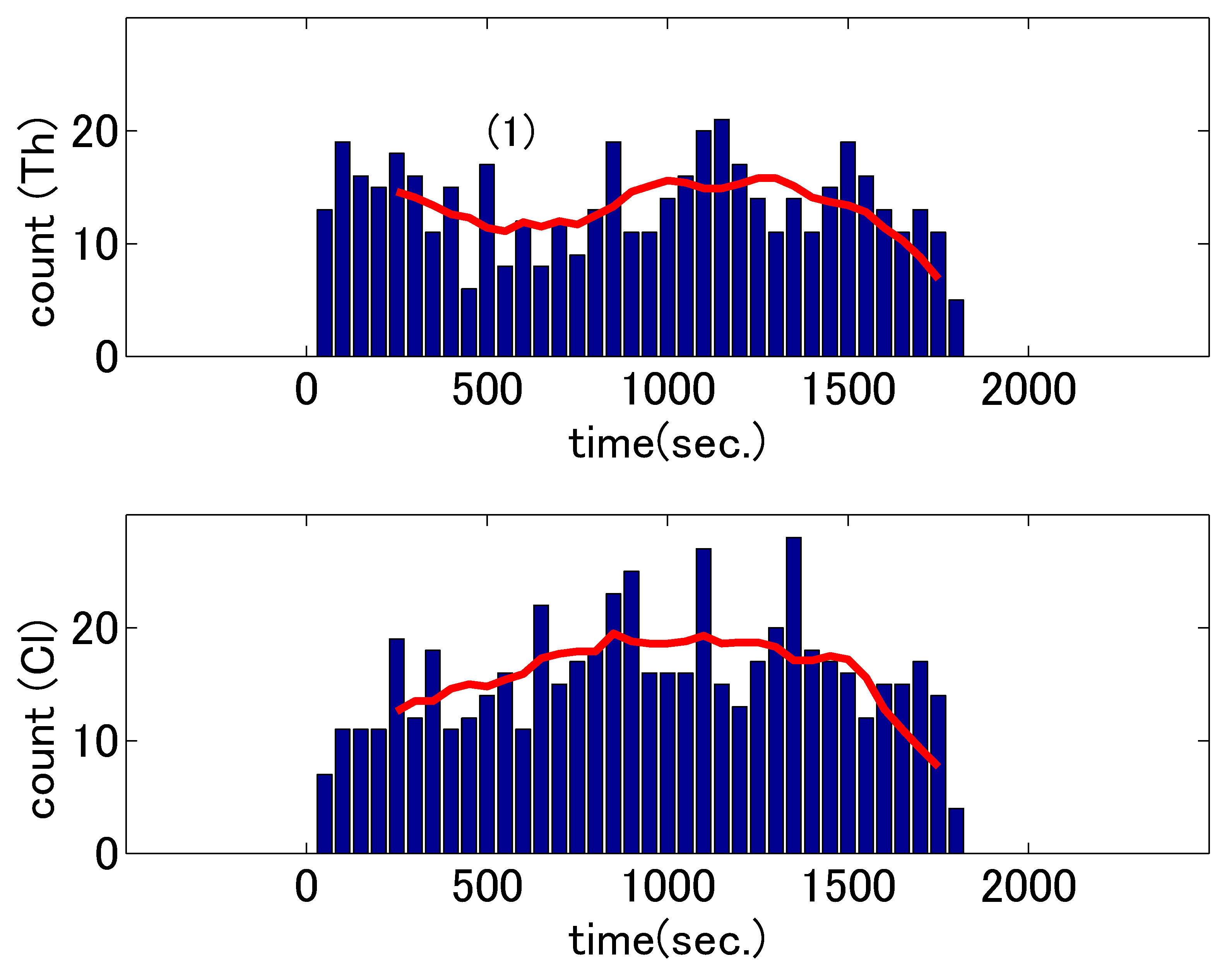

2.2. Nodding Counts

2.3. Head Movement Synchronization Degree

3. Results

3.1. Analysis of Nodding Counts

3.2. Analysis of Head Movement Synchronization Degree

4. Discussion

4.1. Automatic Annotation of Head Nods

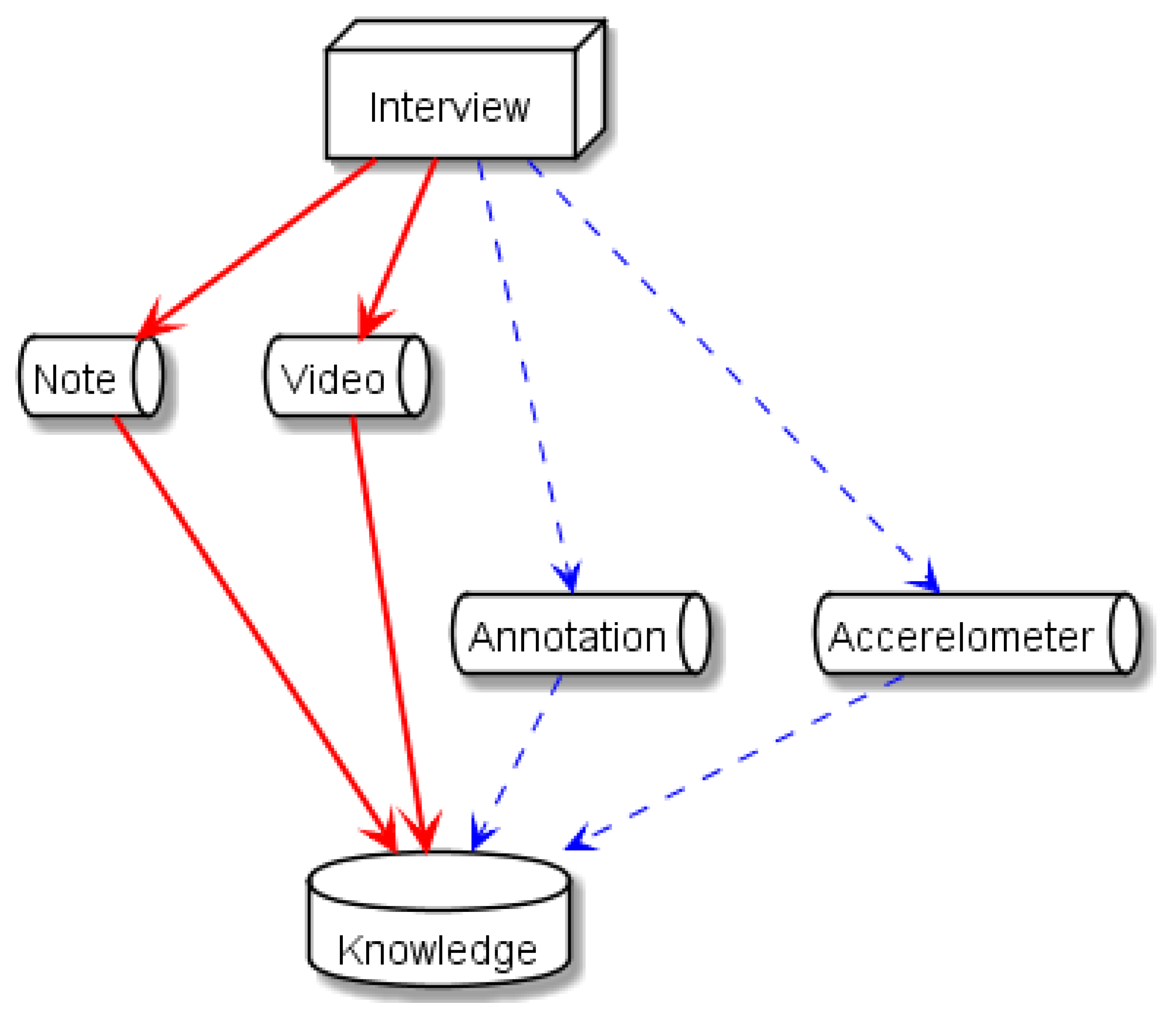

4.2. Channels of Case Reflection

4.3. Therapist Feedback on Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | Time | Therapist (Experience) | Client |
|---|---|---|---|
| Dialogue 1 | 2850 s | Female (novice: 1 year) | Male |
| Dialogue 2 | 1789 s | Female (experienced: 11 years) | Female |

| | Sec. | Content |
|---|---|---|
| (1) | 950 | Cl: Well, I’m not characterized as a parent. |
| (2) | 1600 | Th: Great! You can see yourself very well. |
| | | Cl: Ah, O, only this time. |
| (3) | 2463 | Cl: Such, (pause) well, my parents brought me up, |
| | | and I feel kind of, well, happy about it. |
| (4) | 2566 | Cl: However, my parents were, well, different. |
| | | They, well, stood up for their children earnestly. |

| | Sec. | Content |
|---|---|---|
| (1) | 550 | Cl: I’m wondering what I should do. (folding her arms) |
| | | Th: So, she does not take it out on someone outside. |
| | | (scratching her head) |
| (2) | 793 | Cl: They left me out; something like [Cl] is okay already. |
| | | (laughing) |
| | | Th: (nodding deeply) So, they do not worry about you. |
| | | Cl: That’s right. |
| (3) | 974 | Th: It’s something like evacuation. |
| | | Cl: Yes, evacuation. |
| | | Th: You can do that. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Inoue, M.; Irino, T.; Furuyama, N.; Hanada, R. Observational and Accelerometer Analysis of Head Movement Patterns in Psychotherapeutic Dialogue. Sensors 2021, 21, 3162. https://doi.org/10.3390/s21093162
