Ultra-Widefield Fluorescein Angiography Image Brightness Compensation Based on Geometrical Features

Abstract

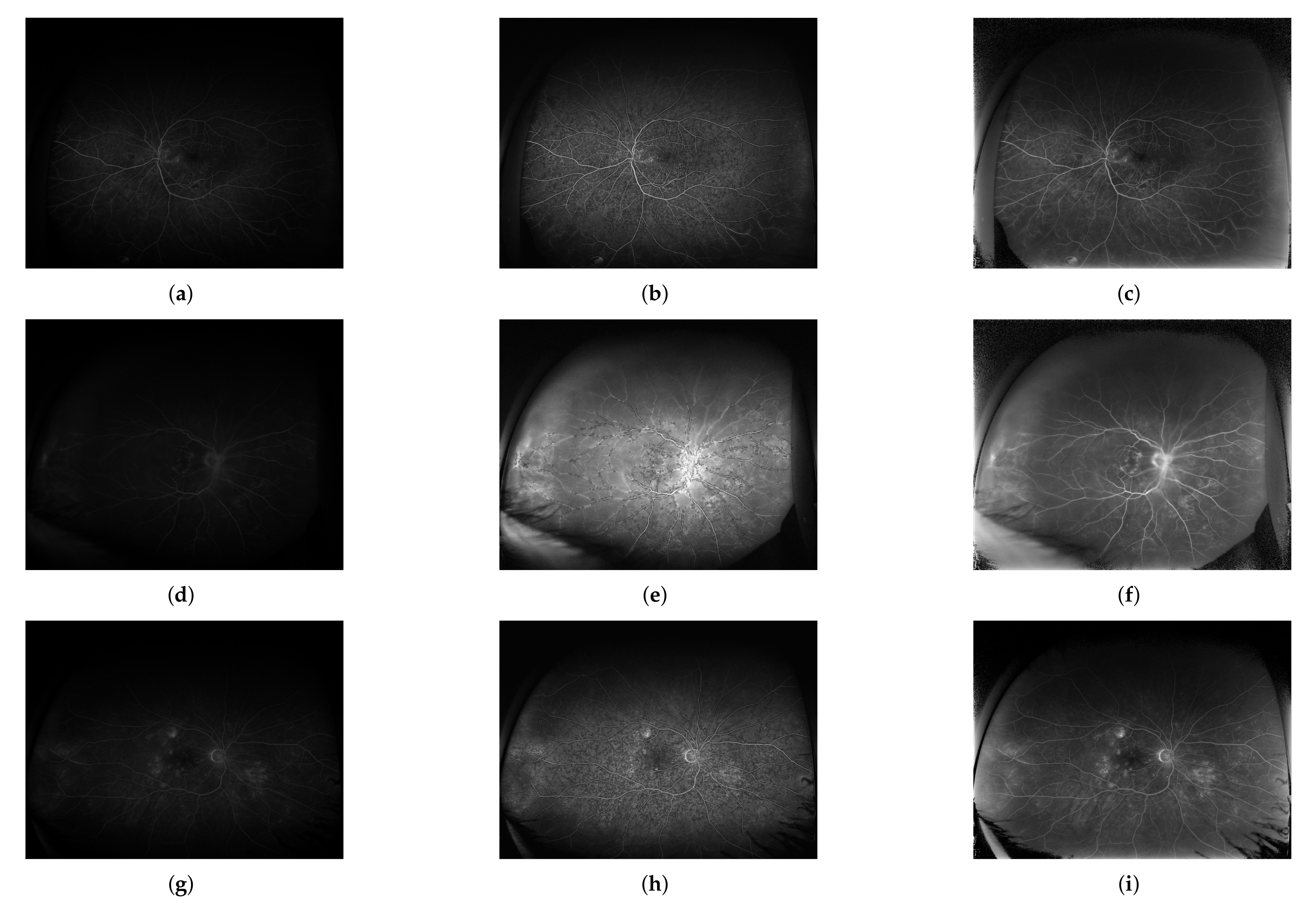

1. Introduction

2. Materials and Methods

2.1. 2D Case Projected onto 1D Space

2.2. 3D Case Projected onto 2D Space

2.3. Image Database

2.4. Quality Measures

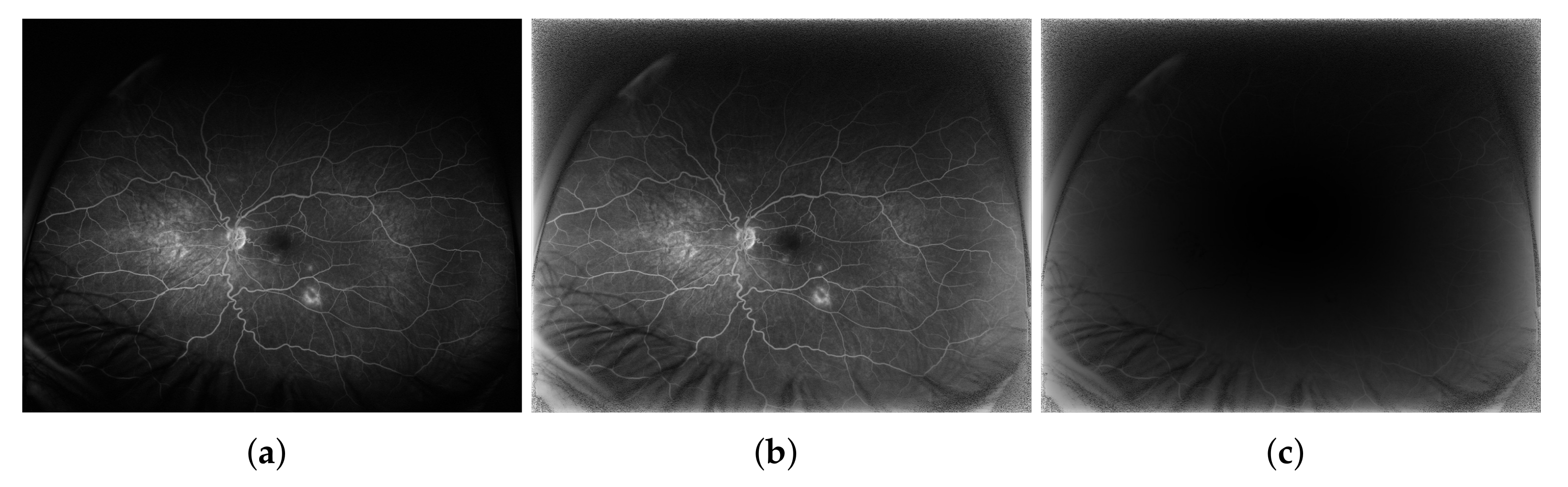

3. Results

4. Discussion and Conclusions

5. Summary and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Quality Measures

Appendix A.1. Edge-Based Contrast Measure

Appendix A.2. Pixel-to-Pixel Error Measures
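The pixel-to-pixel measures can be sketched as follows. The sketch assumes that MAE, MSE and RMS denote the usual mean absolute error, mean squared error and root-mean-square error between the original and the compensated image, computed on intensities scaled to [0, 1]. Both the scaling and the reading of RMS as √MSE are assumptions, and the helper names are hypothetical.

```python
import math


def _pairs(a, b):
    """Yield corresponding pixel pairs from two equally sized 2D images."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same shape")
    for ra, rb in zip(a, b):
        yield from zip(ra, rb)


def mae(a, b):
    """Mean absolute error between two images."""
    diffs = [abs(p - q) for p, q in _pairs(a, b)]
    return sum(diffs) / len(diffs)


def mse(a, b):
    """Mean squared error between two images."""
    diffs = [(p - q) ** 2 for p, q in _pairs(a, b)]
    return sum(diffs) / len(diffs)


def rms(a, b):
    """Root-mean-square error, sqrt(MSE) (assumed meaning of 'RMS')."""
    return math.sqrt(mse(a, b))
```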

Appendix A.3. Structural Information Measures
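For the structural measures, SSIM (Wang et al.) and the universal image quality index Q (Wang and Bovik) can be sketched as averages of a local index over sliding windows. Q is the special case of SSIM with both stabilising constants set to zero. This is a simplified sketch. It uses uniform square windows and population statistics in place of the Gaussian-weighted windows of the original SSIM. The window sizes and constants are common defaults, not values taken from this study. The handling of degenerate windows in Q is an assumed convention, and all function names are hypothetical.

```python
def _window_stats(x, y):
    """Means, variances and covariance of two equal-length pixel lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((p - mx) ** 2 for p in x) / n
    vy = sum((q - my) ** 2 for q in y) / n
    cxy = sum((p - mx) * (q - my) for p, q in zip(x, y)) / n
    return mx, my, vx, vy, cxy


def _patches(a, b, win):
    """Yield flattened, co-located win x win patches (stride 1) of a and b."""
    h, w = len(a), len(a[0])
    if h < win or w < win:
        raise ValueError("image smaller than window")
    for i in range(h - win + 1):
        for j in range(w - win + 1):
            pa = [a[i + di][j + dj] for di in range(win) for dj in range(win)]
            pb = [b[i + di][j + dj] for di in range(win) for dj in range(win)]
            yield pa, pb


def ssim(a, b, win=7, data_range=1.0, k1=0.01, k2=0.03):
    """Mean SSIM over uniform sliding windows (simplified, no Gaussian weights)."""
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    vals = []
    for pa, pb in _patches(a, b, win):
        mx, my, vx, vy, cxy = _window_stats(pa, pb)
        vals.append(((2 * mx * my + c1) * (2 * cxy + c2))
                    / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
    return sum(vals) / len(vals)


def quality_index(a, b, win=8):
    """Universal quality index Q (Wang and Bovik) averaged over sliding windows."""
    vals = []
    for pa, pb in _patches(a, b, win):
        mx, my, vx, vy, cxy = _window_stats(pa, pb)
        var_term, mean_term = vx + vy, mx ** 2 + my ** 2
        if var_term * mean_term != 0:
            q = 4 * cxy * mx * my / (var_term * mean_term)
        elif mean_term != 0:
            q = 2 * mx * my / mean_term  # both windows flat: luminance factor only
        else:
            q = 1.0  # degenerate window: assumed convention
        vals.append(q)
    return sum(vals) / len(vals)
```

As a check on the formula, halving every intensity gives Q = 0.64 in every window. The correlation factor is 1, and the luminance and contrast factors are each 0.8.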

Appendix A.4. Edge Preservation Measures
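One widely used edge preservation measure is the index β, commonly attributed to Sattar et al. It correlates the high-pass (Laplacian) responses of the reference and processed images. The sketch below is illustrative only. It is an assumption that the study uses this exact form. The sketch also assumes a 4-neighbour 3 × 3 Laplacian evaluated on interior pixels only, and the function names are hypothetical.

```python
import math

# 4-neighbour 3x3 Laplacian used as the high-pass operator (an assumption).
LAPLACIAN = ((0, 1, 0), (1, -4, 1), (0, 1, 0))


def laplacian(img):
    """Apply the 3x3 Laplacian to interior pixels; returns a flat list."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            out.append(sum(LAPLACIAN[di][dj] * img[i + di - 1][j + dj - 1]
                           for di in range(3) for dj in range(3)))
    return out


def edge_preservation_beta(ref, test):
    """Correlation of mean-removed Laplacian responses of ref and test."""
    ls, lt = laplacian(ref), laplacian(test)
    ms, mt = sum(ls) / len(ls), sum(lt) / len(lt)
    ds = [v - ms for v in ls]
    dt = [v - mt for v in lt]
    num = sum(p * q for p, q in zip(ds, dt))
    den = math.sqrt(sum(p * p for p in ds) * sum(q * q for q in dt))
    # Undefined when either Laplacian response is constant.
    return num / den if den > 0 else math.nan
```

The Laplacian kernel sums to zero. As a result, a global brightness and contrast change (a·x + b with a > 0) leaves β at 1, while inverting the image gives −1.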


| Subjects | Eyes (L) | Eyes (R) | Images (L) | Images (R) | Early phase | Mid phase | Late phase |
|---|---|---|---|---|---|---|---|
| 34 | 19 | 22 | 124 | 132 | 74 | 146 | 36 |
| Total | 41 eyes | | 256 images | | 256 images | | |

| Phase | EBCM | MAE | MSE | RMS | SSIM | Q | | |
|---|---|---|---|---|---|---|---|---|
| Early | 0.97 | 0.1 | 0.02 | 0.13 | 0.67 | 0.61 | 0.04 | 0.66 |
| Mid | 0.97 | 0.03 | 0.00 | 0.03 | 0.94 | 0.92 | 0.99 | 0.99 |
| Late | 0.99 | 0.05 | 0.00 | 0.05 | 0.84 | 0.80 | 0.83 | 0.95 |

| Measure | Method | Central (E) | Central (M) | Central (L) | Central (G) | Peripheral (E) | Peripheral (M) | Peripheral (L) | Peripheral (G) |
|---|---|---|---|---|---|---|---|---|---|
| EBCM | Proposed | 1.00 | 0.99 | 0.99 | 0.99 | 1.51 | 1.21 | 1.24 | 1.30 |
| EBCM | CLAHE | 0.62 | 0.62 | 0.59 | 0.62 | 1.88 | 1.46 | 1.99 | 1.57 |
| MAE | Proposed | 0.02 | 0.02 | 0.02 | 0.02 | 1.12 | 0.13 | 0.14 | 0.13 |
| MAE | CLAHE | 0.08 | 0.08 | 0.08 | 0.08 | 0.05 | 0.06 | 0.06 | 0.05 |
| MSE | Proposed | 0.00 | 0.00 | 0.00 | 0.00 | 0.03 | 0.04 | 0.04 | 0.04 |
| MSE | CLAHE | 0.01 | 0.01 | 0.01 | 0.01 | 0.00 | 0.00 | 0.00 | 0.00 |
| RMS | Proposed | 0.03 | 0.03 | 0.03 | 0.03 | 0.17 | 0.18 | 0.19 | 0.18 |
| RMS | CLAHE | 0.09 | 0.10 | 0.09 | 0.09 | 0.06 | 0.06 | 0.06 | 0.06 |
| SSIM | Proposed | 0.97 | 0.97 | 0.97 | 0.97 | 0.44 | 0.49 | 0.48 | 0.47 |
| SSIM | CLAHE | 0.71 | 0.72 | 0.70 | 0.71 | 0.53 | 0.56 | 0.54 | 0.55 |
| Q | Proposed | 0.96 | 0.97 | 0.97 | 0.97 | 0.35 | 0.41 | 0.41 | 0.39 |
| Q | CLAHE | 0.53 | 0.54 | 0.51 | 0.53 | 0.31 | 0.36 | 0.34 | 0.34 |
| | Proposed | 0.99 | 0.99 | 0.98 | 0.99 | 0.33 | 0.37 | 0.31 | 0.35 |
| | CLAHE | 0.93 | 0.92 | 0.91 | 0.92 | 0.97 | 0.96 | 0.96 | 0.96 |
| | Proposed | 0.99 | 0.99 | 0.99 | 0.99 | 0.41 | 0.44 | 0.44 | 0.43 |
| | CLAHE | 0.92 | 0.95 | 0.95 | 0.94 | 0.96 | 0.96 | 0.97 | 0.96 |

| Image Region | EBCM | MAE | MSE | RMS | SSIM | Q | | |
|---|---|---|---|---|---|---|---|---|
| Central | + | + | ∘ | + | + | + | + | + |
| Peripheral | (−) | + | + | + | (∘/+) | ∘ | (+) | (+) |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Więcławek, W.; Danch-Wierzchowska, M.; Rudzki, M.; Sędziak-Marcinek, B.; Teper, S.J. Ultra-Widefield Fluorescein Angiography Image Brightness Compensation Based on Geometrical Features. Sensors 2022, 22, 12. https://doi.org/10.3390/s22010012
