Smart Sensors for Augmented Electrical Experiments

Abstract

1. Introduction

- Sense: Our system is capable of collecting information from the context in which it is introduced;

- Analyze: Our system is able to generate higher-level indicators from the data collected in the sensing process using data analysis techniques;

- React: AR has typically been employed either without sensor input or with cumbersome, separate sensors. In contrast, the integrated sensors of our system open up new and expanded experiments, such as educational data mining and AI-based, individualized feedback built on the same sensor technology. In this way, our system will prospectively provide customized recommendations for stakeholders based on the data collected during the sensing process and its interpretation during the analysis process.

- A smart sensor system for educational STEM experiments in electrical circuits consisting of sensors for voltage and current measurement, position identification with a focus on a 2D plane, and cable identification for circuit reconstruction;

- Energy-efficient and robust transmission of the measured values to the Microsoft HoloLens 2 via the widely used Bluetooth Low Energy (BLE) standard;

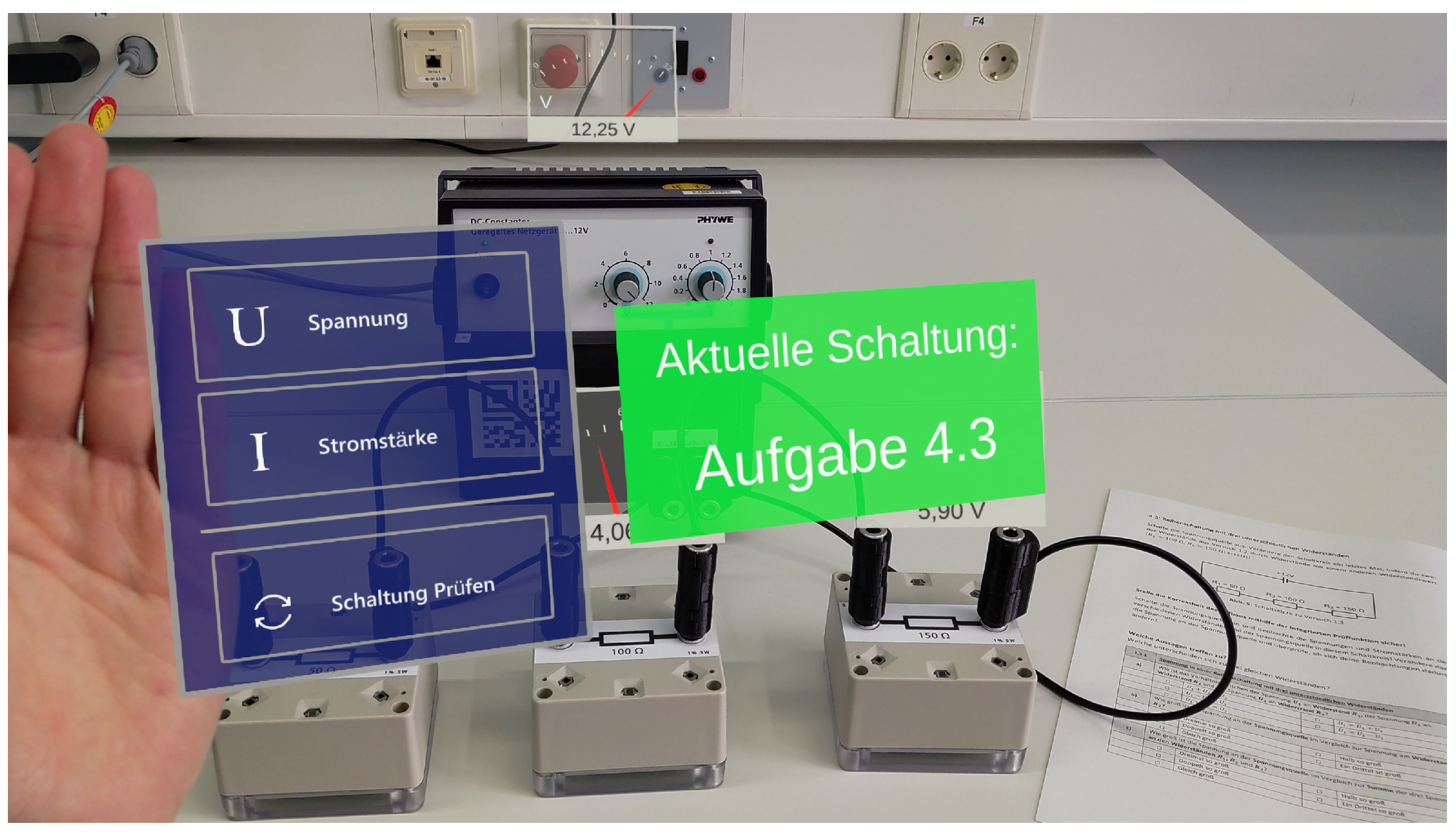

- A software solution for processing and visualizing sensor values in AR on the Microsoft HoloLens 2;

- An evaluation of the installed sensor systems in terms of accuracy and precision;

- An initial field study with students to evaluate the usability of the whole system consisting of multiple sensor boxes and including the first AR application running on the Microsoft HoloLens 2.
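The sense, analyze, react loop described in the list above can be illustrated with a minimal sketch. It is not the authors' implementation: the `Reading` type, the function names, the derived indicators, and the open-circuit threshold are all assumptions made for illustration only.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One sample from a hypothetical sensor box (names are assumptions)."""
    voltage_v: float
    current_a: float


def sense(raw_samples):
    """Sense: wrap raw (voltage, current) pairs as readings."""
    return [Reading(v, i) for v, i in raw_samples]


def analyze(readings):
    """Analyze: derive higher-level indicators from the collected readings."""
    mean_v = mean(r.voltage_v for r in readings)
    mean_i = mean(r.current_a for r in readings)
    return {
        "mean_voltage_v": mean_v,
        "mean_current_a": mean_i,
        "power_w": mean_v * mean_i,
        # Assumed threshold: below 1 mA the circuit is treated as open.
        "open_circuit": abs(mean_i) < 1e-3,
    }


def react(indicators):
    """React: turn indicators into a simple, illustrative recommendation."""
    if indicators["open_circuit"]:
        return "No current is flowing; check that the circuit is closed."
    return "Current is flowing; compare the readings with your prediction."


if __name__ == "__main__":
    indicators = analyze(sense([(4.0, 0.5), (6.0, 0.5)]))
    print(indicators)
    print(react(indicators))
```

Keeping the three stages as separate functions mirrors the separation in the list: the react step only sees the indicators, never the raw samples, so the feedback logic could be exchanged without touching the sensing code.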

2. Background and Related Work

2.1. Learning with Multiple External Representations (MER)

2.2. Cognitive Load Theory and Cognitive Theory of Multimedia Learning

2.3. Sensors for Augmented Reality

2.4. SteamVR Locating System

3. Hardware for the Smart Learning Environment

3.1. Electrical Voltage and Current Measurement

3.2. Cable Identification

3.3. Position Identification

3.3.1. Hardware of the SteamVR 2.0 Base Stations

3.3.2. Lightbeam Receiver

3.3.3. Pre-Calculation for Position Detection

3.4. Data Communication

4. Data Processing

4.1. Data Communication

4.2. Electrical Voltage and Current Measurement

4.3. Circuit Reconstruction

4.4. Position Identification

4.4.1. Direction Calculation

4.4.2. Base Station Position Localization

4.4.3. Positioning on Table

5. Evaluation

5.1. Sensor Accuracy

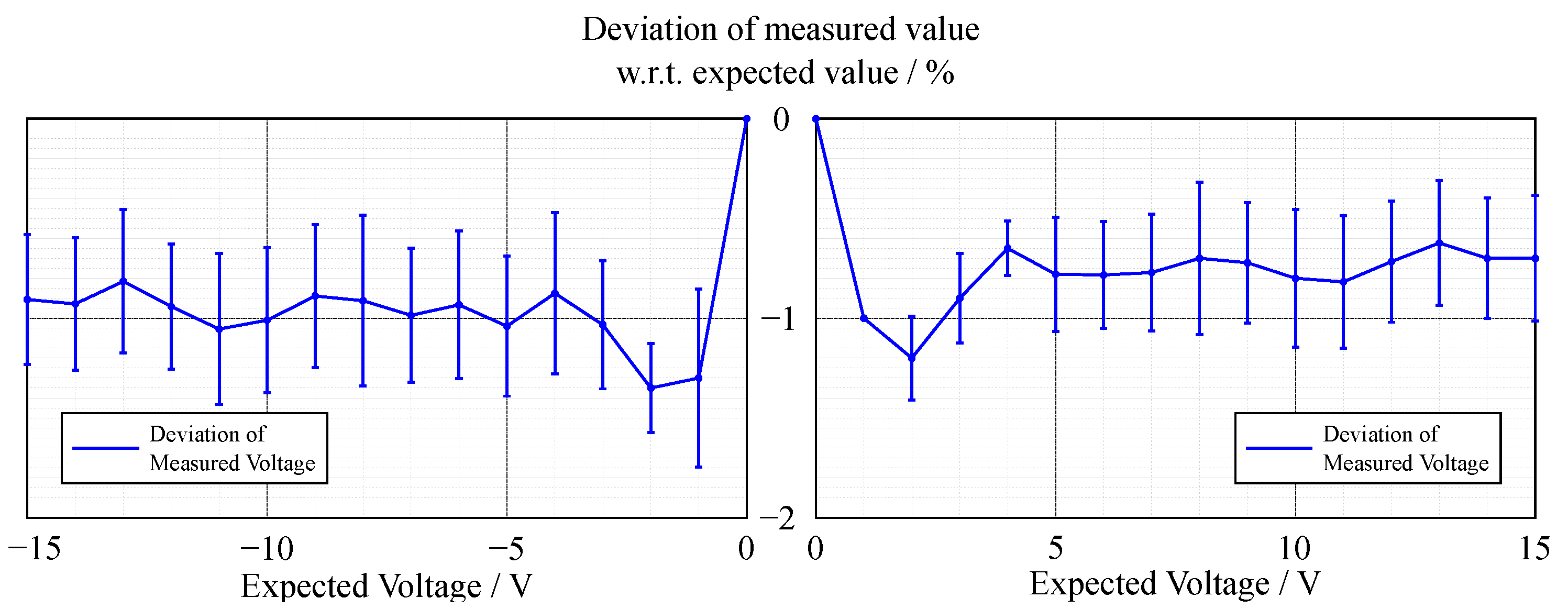

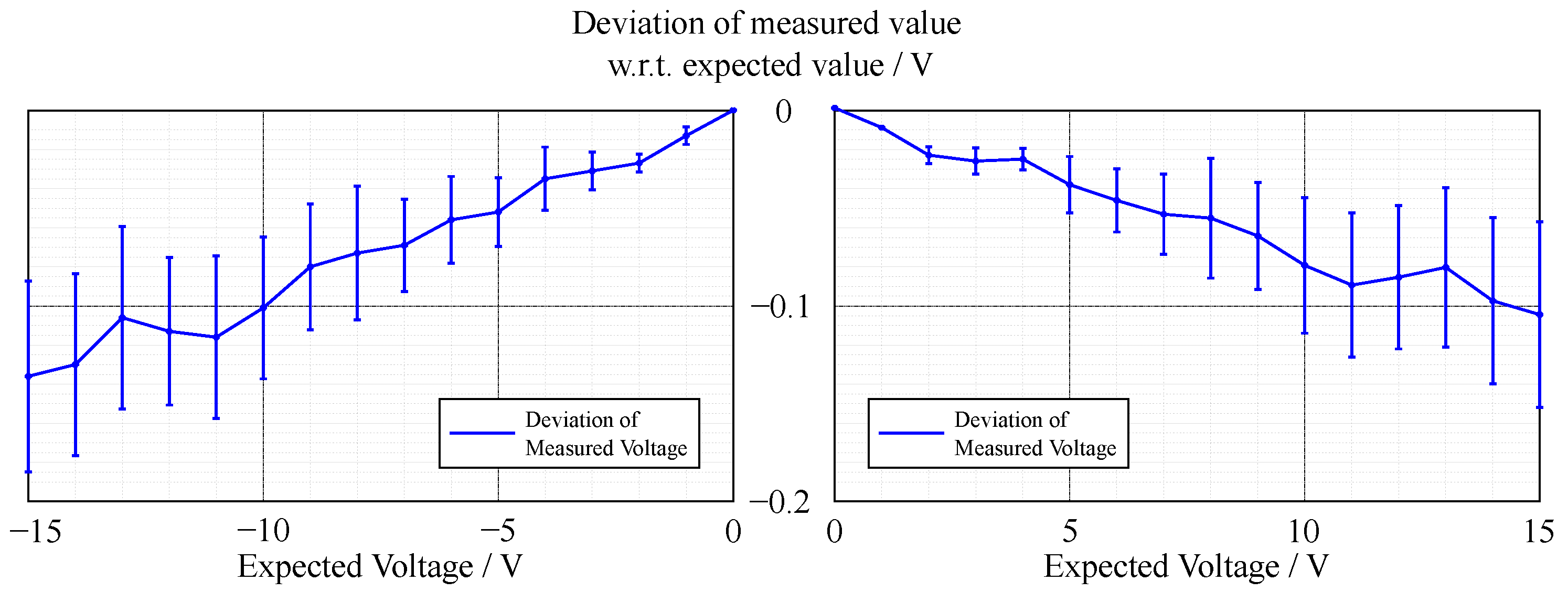

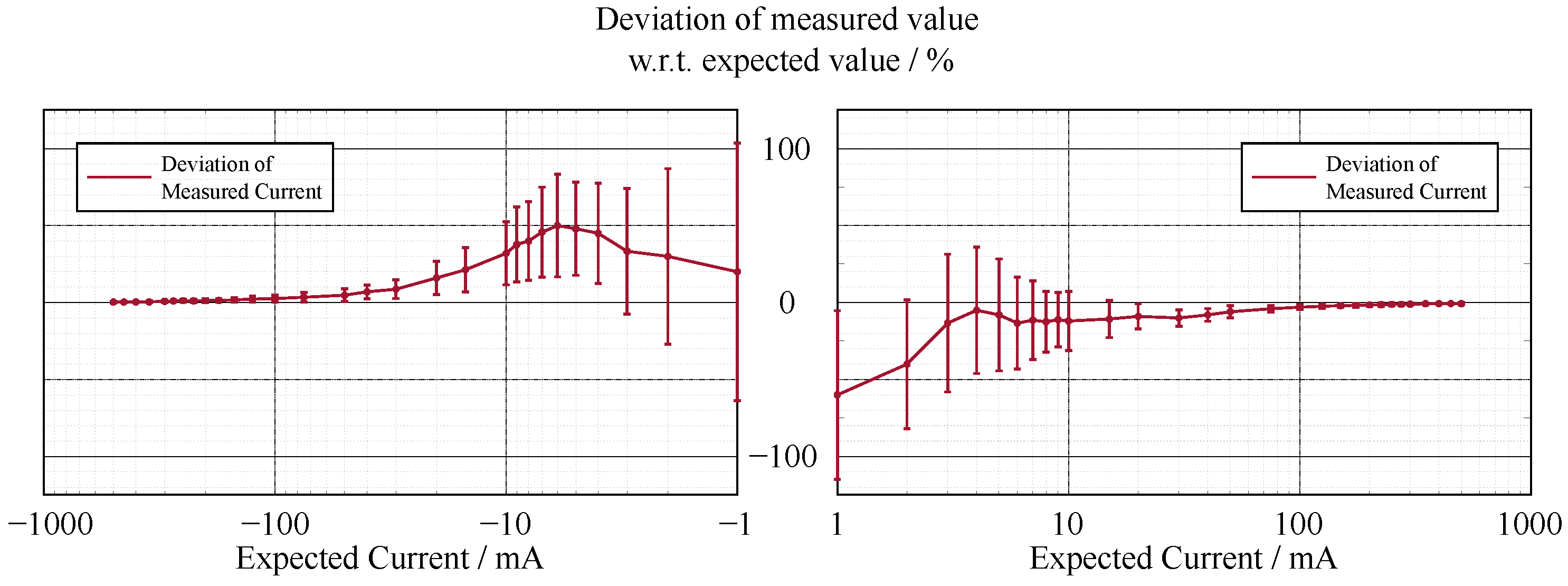

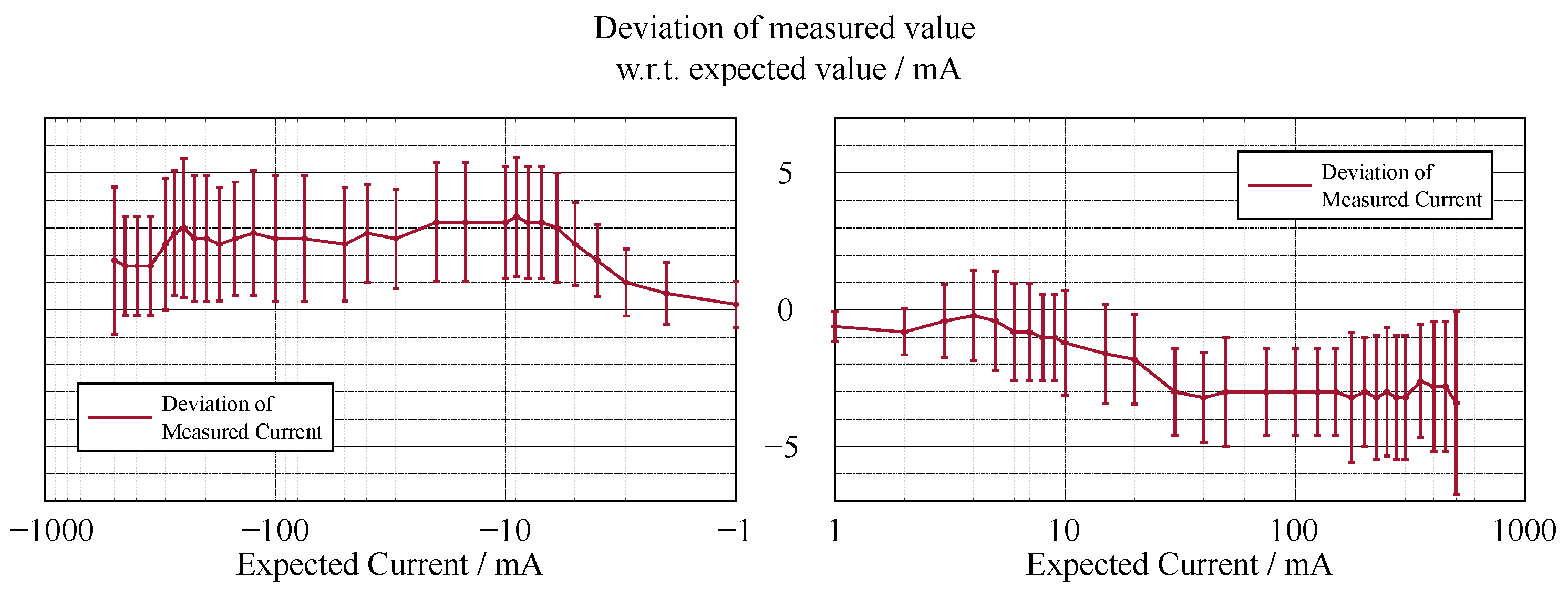

5.1.1. Electrical Voltage and Current Measurement

5.1.2. Cable Detection

5.1.3. Position

5.2. Usability Evaluation

5.2.1. Hypotheses and Research Questions

5.2.2. Participants

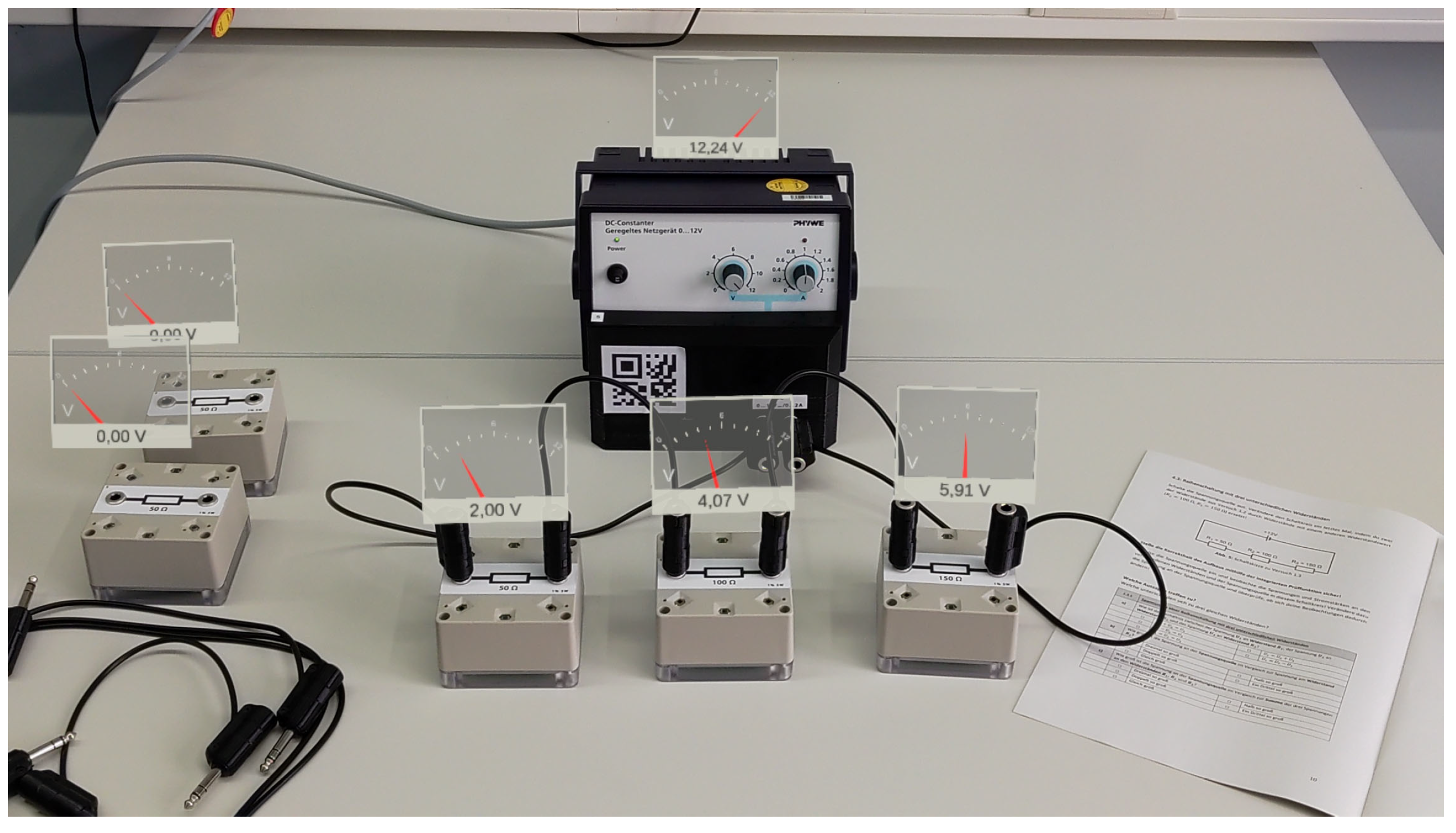

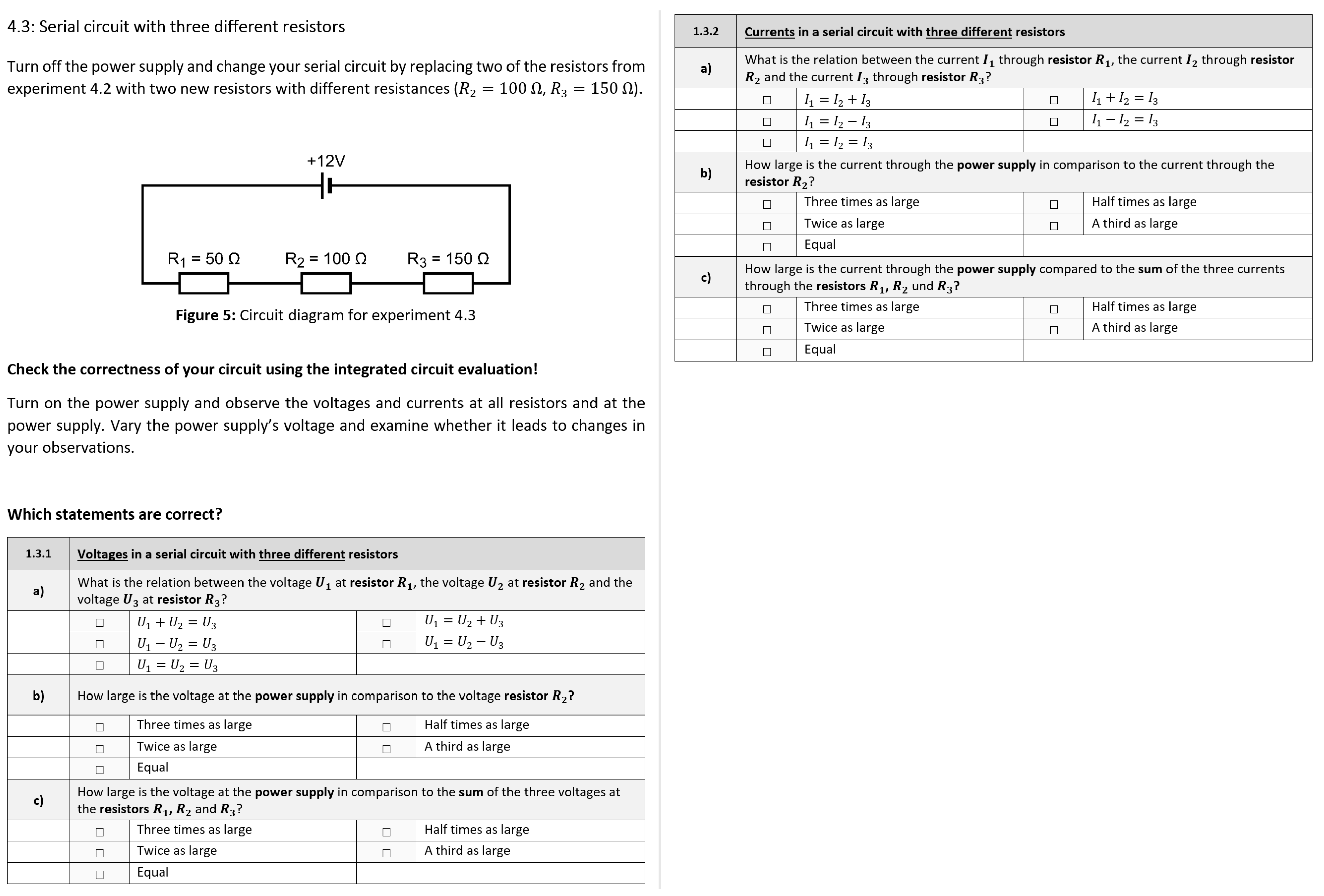

5.2.3. System Setup

5.2.4. Instruments

5.2.5. Procedure

5.2.6. Results

6. Discussion

6.1. Current State and Limitations

6.2. Further Development

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Position | x Position SD (cm), −50 cm | x Position SD (cm), 0 cm | x Position SD (cm), 50 cm | z Position SD (cm), −50 cm | z Position SD (cm), 0 cm | z Position SD (cm), 50 cm | y Rotation SD (deg), −50 cm | y Rotation SD (deg), 0 cm | y Rotation SD (deg), 50 cm |
|---|---|---|---|---|---|---|---|---|---|
| 30 cm | 0.011 | 0.011 | 0.006 | 0.014 | 0.014 | 0.010 | 0.127 | 0.110 | 0.137 |
| 0 cm | 0.011 | 0.003 | 0.012 | 0.013 | 0.006 | 0.012 | 0.080 | 0.076 | 0.198 |
| −30 cm | 0.008 | 0.007 | 0.009 | 0.011 | 0.017 | 0.010 | 0.128 | 0.102 | 0.106 |

| Position | Light Beam 1 SD (bit), −50 cm | Light Beam 1 SD (bit), 0 cm | Light Beam 1 SD (bit), 50 cm | Light Beam 2 SD (bit), −50 cm | Light Beam 2 SD (bit), 0 cm | Light Beam 2 SD (bit), 50 cm |
|---|---|---|---|---|---|---|
| 30 cm | 1.193 | 1.503 | 1.810 | 1.279 | 1.369 | 1.501 |
| 0 cm | 1.051 | 1.394 | 1.310 | 1.130 | 1.593 | 1.318 |
| −30 cm | 0.950 | 1.269 | 1.060 | 1.211 | 1.057 | 1.196 |
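The tables above report precision as a standard deviation (SD) per measurement position. As a hedged illustration of how such a value can be obtained from repeated readings at one position, the sketch below uses the sample standard deviation from Python's `statistics` module. Whether the authors used the sample or the population formula is not stated here, and the readings in the example are made up.

```python
from statistics import mean, stdev


def precision_summary(samples):
    """Return (mean, sample standard deviation) of repeated readings.

    Assumption: the sample SD (n - 1 in the denominator) is used; the
    population SD would be statistics.pstdev instead.
    """
    if len(samples) < 2:
        raise ValueError("need at least two readings to estimate an SD")
    return mean(samples), stdev(samples)


if __name__ == "__main__":
    # Made-up repeated position readings in cm, for illustration only.
    readings_cm = [10.01, 9.99, 10.00, 10.02, 9.98]
    m, sd = precision_summary(readings_cm)
    print(f"mean = {m:.3f} cm, SD = {sd:.3f} cm")
```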

| Item | Text |
|---|---|
| 1 | I felt disturbed/impaired by the HoloLens. |
| 2 | I would have performed better without the HoloLens. |
| 3 | I felt uncomfortable due to the HoloLens. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kapp, S.; Lauer, F.; Beil, F.; Rheinländer, C.C.; Wehn, N.; Kuhn, J. Smart Sensors for Augmented Electrical Experiments. Sensors 2022, 22, 256. https://doi.org/10.3390/s22010256
