An Angle Recognition Algorithm for Tracking Moving Targets Using WiFi Signals with Adaptive Spatiotemporal Clustering

Abstract

1. Introduction

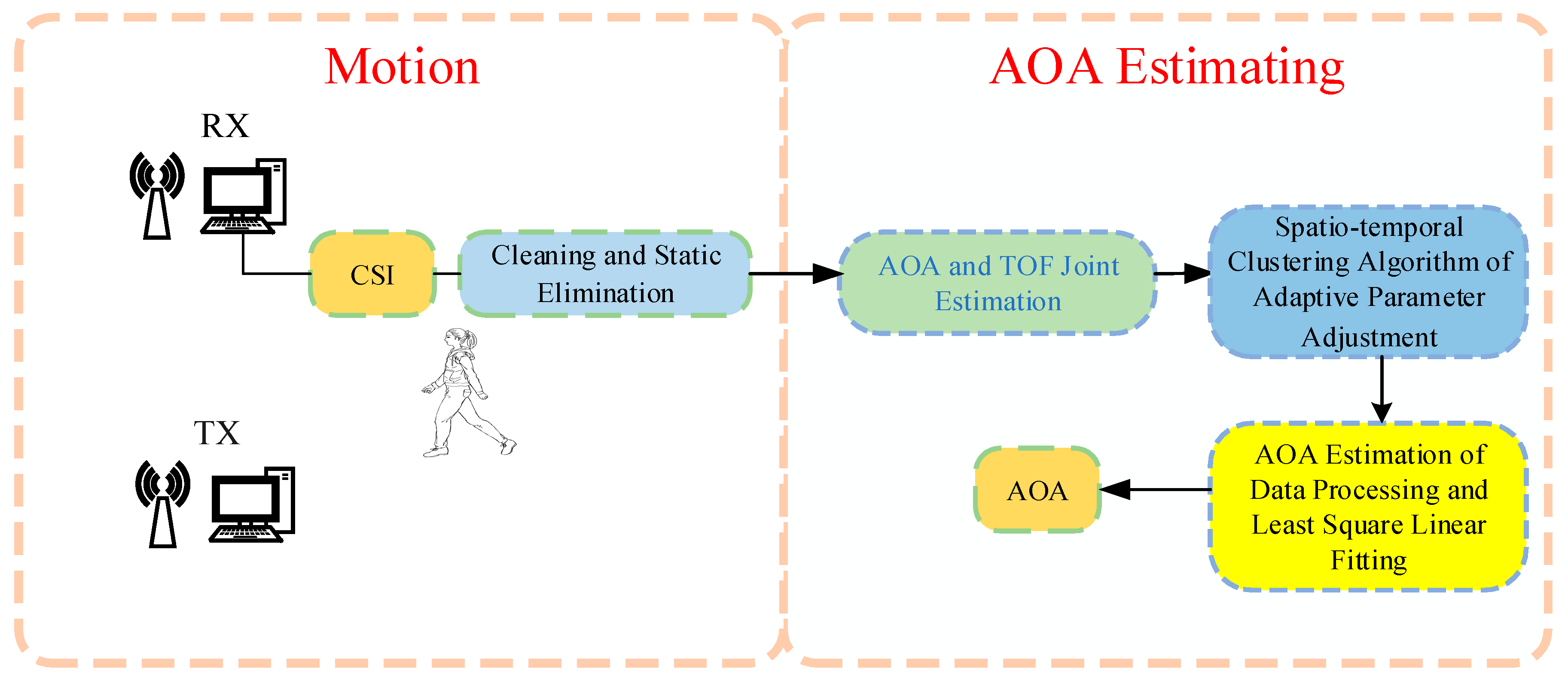

- (1) Phase calibration and static path elimination are performed on the collected CSI signals, and AOA and TOF are then jointly estimated to obtain the AOA estimates;

- (2) A DBSCAN spatiotemporal clustering algorithm with adaptive parameter adjustment is proposed to reduce multipath effects;

- (3) Least-squares linear fitting is introduced to supplement and finalize the AOA results.
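Contribution (3) can be illustrated with a minimal sketch of ordinary least-squares line fitting over (time, AOA) samples. It assumes that "supplementing" the AOA results means evaluating the fitted line at time stamps where no clustered estimate survived; that reading, and the helper names `fit_line` and `fill_gaps`, are hypothetical and not taken from the paper.

```python
def fit_line(ts, ys):
    """Ordinary least-squares fit y = slope * t + intercept."""
    n = len(ts)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (t, y) pairs of equal length")
    mean_t = sum(ts) / n
    mean_y = sum(ys) / n
    sxx = sum((t - mean_t) ** 2 for t in ts)
    if sxx == 0:
        raise ValueError("all time stamps are identical; slope is undefined")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(ts, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return slope, intercept


def fill_gaps(ts, ys, query_ts):
    """Evaluate the fitted line at time stamps that lack an AOA estimate.

    Assumption: gaps are filled from a single global line; a real system
    might fit short windows separately instead.
    """
    slope, intercept = fit_line(ts, ys)
    return [slope * t + intercept for t in query_ts]
```

A single straight line is only reasonable over short windows in which the angle changes roughly linearly with time; longer trajectories would need piecewise fits.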

2. Materials and Methods

2.1. CSI Model

2.2. Phase Calibration and Static Path Elimination

2.3. Joint Estimation with AOA and TOF Using the MNM Algorithm

2.4. DBSCAN Spatiotemporal Clustering Algorithm Based on Adaptive Parameter Adjustment

| Algorithm 1: Spatiotemporal Clustering Algorithm |
| --- |
| Input: D, Eps0, t′, Minpts, t0 |
| Output: clu |
| While (clu(t′(end)) − clu(t′(start))) > (size(t) − t0) do |
| Step 1: Calculate the distance distribution of the points to be clustered |
| Step 2: For i = 1:n |
| Neighbors = find(dist(D(i)) ≤ Eps0) |
| If num(Neighbors) < Minpts, D(i) = noise |
| Else Expand Cluster (D(i), Neighbors) |
| End if |
| End for |
| End while |
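The neighbor search and cluster expansion in Algorithm 1 follow the standard DBSCAN procedure, which can be sketched in plain Python as below. The outer loop is an assumption: Algorithm 1 does not spell out how Eps is adjusted, so this sketch simply grows `eps` by a fixed factor until the largest cluster spans all but `t0` of the observation window. The function name `adaptive_cluster` and the parameters `growth` and `max_iter` are hypothetical; `eps0`, `min_pts` and `t0` correspond to Eps0, Minpts and t0 in the table.

```python
import math

NOISE = -1


def region_query(points, i, eps):
    """Indices of all points within eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points, eps, min_pts):
    """Standard DBSCAN; returns one label per point, NOISE for outliers."""
    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = NOISE
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:  # expand the cluster from its core points
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # former noise becomes a border point
                continue
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)
    return labels


def adaptive_cluster(points, eps0, min_pts, t0, growth=1.2, max_iter=20):
    """Assumed adaptation rule: enlarge eps until the largest cluster
    covers at least (window - t0) of the time axis.

    points are (time, aoa) tuples, assumed pre-scaled to comparable units.
    """
    times = [p[0] for p in points]
    window = max(times) - min(times)
    eps = eps0
    labels = []
    for _ in range(max_iter):
        labels = dbscan(points, eps, min_pts)
        spans = {}
        for (t, _), label in zip(points, labels):
            if label == NOISE:
                continue
            lo, hi = spans.get(label, (t, t))
            spans[label] = (min(lo, t), max(hi, t))
        longest = max((hi - lo for lo, hi in spans.values()), default=0.0)
        if longest >= window - t0:
            break
        eps *= growth
    return labels, eps
```

Because time and angle are mixed in one Euclidean distance, the two axes need to be scaled to comparable ranges before clustering; how the paper weights them is not stated in this section.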

2.5. Processing of AOA Data after Clustering

3. Results

3.1. Experimental Setting and Environment

3.2. Analysis of Experimental Results

3.3. System Performance

- (1) Different environments.

- (2) Different walking speeds.

- (3) Different sampling rates.

- (4) Different trajectory shapes.

- (5) Different directions of motion.

- (6) Different walking distances.

- (7) Different filtering methods.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, L.; Chen, L.; Xu, Z.; Chen, Z. An Angle Recognition Algorithm for Tracking Moving Targets Using WiFi Signals with Adaptive Spatiotemporal Clustering. Sensors 2022, 22, 276. https://doi.org/10.3390/s22010276
