Damage Detection and Localization under Variable Environmental Conditions Using Compressed and Reconstructed Bayesian Virtual Sensor Data

Abstract

1. Introduction

2. Data Compression and Reconstruction Using Bayesian Virtual Sensing

2.1. Empirical Bayesian Virtual Sensing
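
The sketch below illustrates the estimation step. It assumes that all channels are jointly Gaussian and that a training record with every channel measured is available, so the mean and covariance can be estimated empirically. The virtual (unmeasured) channels are estimated by their conditional mean given the physical channels, and the Schur complement gives the estimation error covariance. The function names and the synthetic data are hypothetical; this is not the author's implementation.

```python
import numpy as np


def fit_virtual_sensor(train, phys_idx, virt_idx):
    """Fit the Gaussian conditional (linear MMSE) estimator of the virtual
    channels given the physical channels, using empirical training statistics.

    train: (n_samples, n_channels) array in which all channels were measured.
    Returns the physical and virtual means, the gain matrix and the
    estimation error covariance.
    """
    mu = train.mean(axis=0)
    cov = np.cov(train, rowvar=False)
    s_pp = cov[np.ix_(phys_idx, phys_idx)]
    s_vp = cov[np.ix_(virt_idx, phys_idx)]
    s_vv = cov[np.ix_(virt_idx, virt_idx)]
    # gain = S_vp S_pp^{-1}; a linear solve avoids forming the inverse explicitly
    gain = np.linalg.solve(s_pp, s_vp.T).T
    # Error covariance of the estimate: S_vv - S_vp S_pp^{-1} S_pv
    err_cov = s_vv - gain @ s_vp.T
    return mu[phys_idx], mu[virt_idx], gain, err_cov


def estimate_virtual(x_p, mu_p, mu_v, gain):
    """Conditional mean of the virtual channels for physical samples x_p (n, p)."""
    return mu_v + (x_p - mu_p) @ gain.T


# Synthetic example: 2 latent sources observed by 6 slightly noisy channels
rng = np.random.default_rng(0)
latent = rng.standard_normal((2000, 2))
data = latent @ rng.standard_normal((2, 6)) + 0.01 * rng.standard_normal((2000, 6))
phys, virt = [0, 2, 4], [1, 3, 5]
mu_p, mu_v, gain, err_cov = fit_virtual_sensor(data[:1500], phys, virt)
x_hat = estimate_virtual(data[1500:, phys], mu_p, mu_v, gain)
rel_err = np.linalg.norm(x_hat - data[1500:, virt]) / np.linalg.norm(data[1500:, virt])
print(f"relative estimation error: {rel_err:.4f}")
```

Solving against S_pp instead of inverting it is a common numerical choice. With very strongly correlated channels, a regularized covariance estimate may be needed; that is an additional assumption.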

2.2. Storing Physical Sensor Data

2.3. Storing Virtual Sensor Data

2.4. Comparison of the Two Storage Strategies

2.5. Optimal Sensor Placement
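
Several criteria exist for optimal sensor placement. The sketch below shows one of them, chosen for illustration; it is not necessarily the criterion used in the paper. It performs greedy forward selection: at each step it adds the channel that most reduces the trace of the conditional error covariance of all channels given the channels already selected, which is the total mean-square error of the Bayesian estimates. `greedy_sensor_selection` is a hypothetical helper.

```python
import numpy as np


def greedy_sensor_selection(cov, n_select):
    """Greedy forward selection of the channels to keep (physical sensors).

    At each step, the candidate that minimizes the trace of the conditional
    covariance of all channels, given the channels selected so far, is added.
    cov: (n_channels, n_channels) covariance estimated from training data.
    Returns the selected channel indices and the final trace.
    """
    n = cov.shape[0]
    selected = []
    best_score = float(np.trace(cov))  # value if nothing is selected
    for _ in range(n_select):
        best, best_score = None, np.inf
        for cand in range(n):
            if cand in selected:
                continue
            s = selected + [cand]
            s_as = cov[:, s]
            # Posterior covariance: Sigma - Sigma_{:,S} Sigma_{S,S}^{-1} Sigma_{S,:}
            post = cov - s_as @ np.linalg.solve(cov[np.ix_(s, s)], s_as.T)
            score = float(np.trace(post))
            if score < best_score:
                best, best_score = cand, score
        selected.append(best)
    return selected, best_score


# Independent channels: the greedy rule simply picks the largest variances first
selected, score = greedy_sensor_selection(np.diag([1.0, 5.0, 2.0, 0.5]), 2)
print(selected, score)
```

Greedy selection is not guaranteed to be globally optimal, but it needs only O(n_select × n_channels) covariance updates instead of an exhaustive search over all subsets.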

3. Damage Detection and Localization

3.1. Spatial and Spatiotemporal Correlation
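
Spatial correlation relates channels at the same time instant, while spatiotemporal correlation also uses time-delayed samples. The minimal sketch below assumes that the spatiotemporal model is obtained by augmenting each sample with its previous `n_lags` samples of all channels. `lagged_matrix` is a hypothetical helper; with `n_lags=0` it reduces to the purely spatial case.

```python
import numpy as np


def lagged_matrix(x, n_lags):
    """Augment every sample with its n_lags predecessors.

    x: (n_samples, n_channels) array.
    Returns an (n_samples - n_lags, n_channels * (n_lags + 1)) array whose k-th
    column block holds all channels delayed by k samples (k = 0: no delay).
    """
    n = x.shape[0]
    blocks = [x[n_lags - k : n - k] for k in range(n_lags + 1)]
    return np.hstack(blocks)


x = np.arange(10).reshape(5, 2)  # 5 samples of 2 channels
print(lagged_matrix(x, 1))
# [[2 3 0 1]
#  [4 5 2 3]
#  [6 7 4 5]
#  [8 9 6 7]]
```

The augmented matrix can then be passed to the same covariance-based estimators as spatial data. The cost is a larger covariance matrix and the loss of the first `n_lags` samples.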

3.2. Data Normalization Using Whitening Transformation
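
Whitening maps correlated data to uncorrelated variables with unit variance. The minimal sketch below whitens with the Cholesky factor of the training covariance (Σ = L Lᵀ, z = L⁻¹(x − μ)). An inverse matrix square root or PCA-based whitening would serve the same purpose, and the sketch does not assume which variant the paper uses. Function names are hypothetical.

```python
import numpy as np


def fit_whitening(train):
    """Return the training mean and the Cholesky factor L of the training
    covariance (cov = L L^T)."""
    mu = train.mean(axis=0)
    chol = np.linalg.cholesky(np.cov(train, rowvar=False))
    return mu, chol


def whiten(x, mu, chol):
    """Whitened data z = L^{-1} (x - mu), applied to every row of x.

    If x follows the training distribution, z has approximately identity covariance.
    """
    return np.linalg.solve(chol, (x - mu).T).T


rng = np.random.default_rng(2)
mixing = np.array([[1.0, 0.0, 0.0, 0.0],
                   [0.8, 0.6, 0.0, 0.0],
                   [0.5, 0.3, 0.4, 0.0],
                   [0.2, 0.7, 0.1, 0.5]])
train = rng.standard_normal((5000, 4)) @ mixing  # correlated training data
mu, chol = fit_whitening(train)
z = whiten(train, mu, chol)
print(np.round(np.cov(z, rowvar=False), 6))
```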

3.3. Residual Generation
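
A common way to generate residuals from a correlation model is to estimate each channel from all the other channels and subtract the estimate from the measurement. The minimal sketch below assumes a jointly Gaussian model with empirical mean and covariance. It uses the identity r_i = (1/P_ii) Σ_j P_ij (x_j − μ_j), where P is the inverse covariance (precision) matrix, so all leave-one-out estimates follow from a single matrix inversion. Whether the paper generates its residuals exactly this way is an assumption, and the function names are hypothetical.

```python
import numpy as np


def fit_residual_model(train):
    """Empirical mean and precision (inverse covariance) matrix of training data."""
    mu = train.mean(axis=0)
    prec = np.linalg.inv(np.cov(train, rowvar=False))
    return mu, prec


def loo_residuals(x, mu, prec):
    """Residual r_i = x_i - E[x_i | all other channels] for each channel i.

    Uses r_i = (1 / P_ii) * sum_j P_ij (x_j - mu_j), with P the precision matrix.
    """
    return ((x - mu) @ prec) / np.diag(prec)


rng = np.random.default_rng(1)
train = (rng.standard_normal((1000, 3)) @ rng.standard_normal((3, 5))
         + 0.1 * rng.standard_normal((1000, 5)))
mu, prec = fit_residual_model(train)
resid = loo_residuals(train, mu, prec)
print(np.round(resid.std(axis=0), 3))
```

The identity gives the same result as fitting a separate regression for every channel, which the test below checks for channel 0.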

3.4. Principal Component Analysis

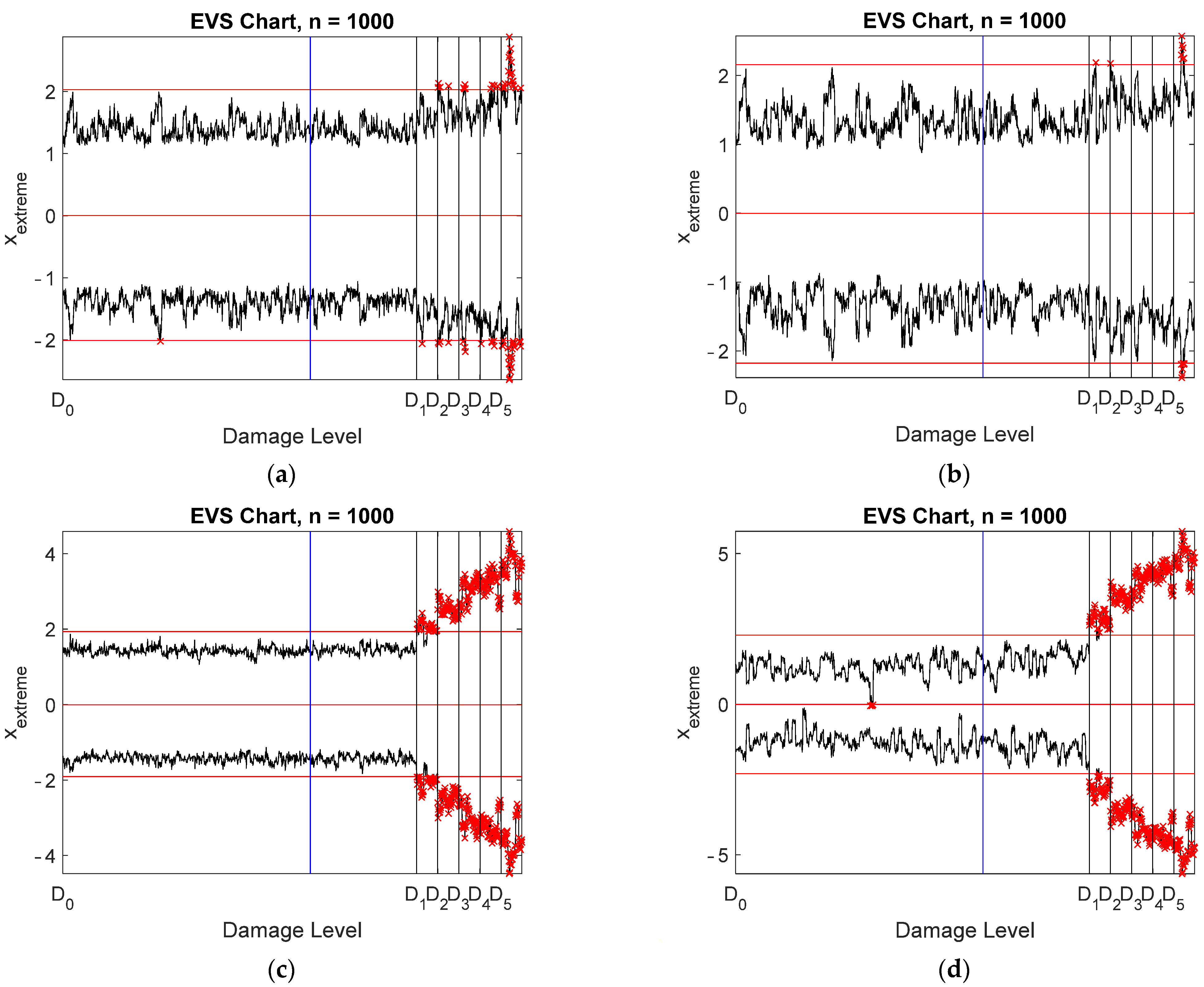
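
The sketch below computes PCA from an SVD of the centered training data. It returns both the scores on the retained components and the residual left in the discarded ones. Which part is carried forward depends on the application: for example, the dominant components may be discarded if they mainly reflect environmental variation. That choice is an assumption here, not taken from the paper. Function names are hypothetical.

```python
import numpy as np


def fit_pca(train, n_components):
    """PCA of the training data via SVD of the centered data matrix.

    Returns the mean, the first n_components principal directions (rows) and
    the fraction of variance explained by every component.
    """
    mu = train.mean(axis=0)
    _, s, vt = np.linalg.svd(train - mu, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    return mu, vt[:n_components], explained


def pca_scores_and_residual(x, mu, components):
    """Project x onto the retained components and return the unexplained part."""
    centered = x - mu
    scores = centered @ components.T
    residual = centered - scores @ components
    return scores, residual


rng = np.random.default_rng(3)
train = rng.standard_normal((500, 2)) @ rng.standard_normal((2, 5))  # rank-2 data, 5 channels
mu, comps, explained = fit_pca(train, 2)
scores, residual = pca_scores_and_residual(train, mu, comps)
print(np.round(explained, 4), np.abs(residual).max())
```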

3.5. Extreme Value Statistics
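
Extreme value statistics set the detection threshold from the distribution of maxima rather than from the distribution of individual values. The minimal sketch below fits a Gumbel (type I) law by the method of moments to block maxima of an undamaged-state damage indicator. The paper may use another extreme value family (e.g., Weibull or the generalized extreme value law) or another estimator. Helper names and data are hypothetical.

```python
import numpy as np

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def block_maxima(values, block_size):
    """Maximum of each non-overlapping block (an incomplete last block is dropped)."""
    n_blocks = len(values) // block_size
    return np.asarray(values)[: n_blocks * block_size].reshape(n_blocks, block_size).max(axis=1)


def fit_gumbel_moments(maxima):
    """Method-of-moments fit of a Gumbel law for maxima: mean = mu + gamma*beta,
    variance = (pi * beta)**2 / 6."""
    beta = np.std(maxima, ddof=1) * np.sqrt(6.0) / np.pi
    mu = np.mean(maxima) - EULER_GAMMA * beta
    return mu, beta


def gumbel_threshold(mu, beta, prob):
    """Quantile x with P(block maximum <= x) = prob under the fitted Gumbel law."""
    return mu - beta * np.log(-np.log(prob))


# Training: damage indicator values from the undamaged state (synthetic here)
rng = np.random.default_rng(4)
train_maxima = block_maxima(rng.standard_normal(10_000), 50)
mu, beta = fit_gumbel_moments(train_maxima)
print(f"99% threshold for block maxima: {gumbel_threshold(mu, beta, 0.99):.3f}")
```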

3.6. Control Chart
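
The minimal sketch below shows a Shewhart-type chart for subgroup means of a damage indicator. For simplicity, it sets the limits from the spread of in-control training subgroup means. Textbook X-bar charts (e.g., Montgomery) usually estimate σ from within-subgroup ranges or standard deviations instead. The statistic charted in the paper is not assumed, and the helper names are hypothetical.

```python
import numpy as np


def subgroup_means(values, n):
    """Means of consecutive, non-overlapping subgroups of size n."""
    k = len(values) // n
    return np.asarray(values)[: k * n].reshape(k, n).mean(axis=1)


def shewhart_limits(train_means, width=3.0):
    """Lower limit, center line and upper limit from in-control subgroup means."""
    center = np.mean(train_means)
    sigma = np.std(train_means, ddof=1)
    return center - width * sigma, center, center + width * sigma


def out_of_control(means, lcl, ucl):
    """Boolean flag for every subgroup mean outside the control limits."""
    return (means < lcl) | (means > ucl)


rng = np.random.default_rng(6)
lcl, cl, ucl = shewhart_limits(subgroup_means(rng.standard_normal(2000), 10))
test_means = subgroup_means(rng.standard_normal(200) + 5.0, 10)  # shifted mean mimics damage
print(out_of_control(test_means, lcl, ucl))
```

Because damage is expected to increase the indicator, a one-sided chart that checks only the upper limit is also a reasonable variant.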

3.7. Damage Localization
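
The sketch below illustrates one simple localization rule: pick the channel whose mean-square residual grew most relative to the undamaged training state. The rule is an assumption, not necessarily the author's method. The code returns a 0-based index, whereas sensors in the tables are numbered from 1. The helper name and data are hypothetical.

```python
import numpy as np


def localize(train_resid, test_resid):
    """Index of the channel whose mean-square residual grew most relative to training.

    Both inputs: (n_samples, n_channels) residual arrays. Returns a 0-based index
    and the per-channel ratio of test to training mean-square residual.
    """
    baseline = np.mean(train_resid**2, axis=0)
    ratio = np.mean(test_resid**2, axis=0) / baseline
    return int(np.argmax(ratio)), ratio


rng = np.random.default_rng(7)
train_resid = rng.standard_normal((1000, 6))
test_resid = rng.standard_normal((1000, 6))
test_resid[:, 3] *= 4.0  # simulate a local change near channel index 3
idx, ratio = localize(train_resid, test_resid)
print(idx, np.round(ratio, 2))
```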

4. Numerical Experiment

4.1. Bayesian Virtual Sensing and Selection of Sensors for Storage

4.2. Damage Detection and Localization Using Spatial Correlation

4.3. Damage Detection Using Spatiotemporal Correlation

4.4. Strain Measurements

4.5. Different Damage Locations

4.6. Different Damage Detection Algorithms

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Sadoughi, M.; Downey, A.; Yan, J.; Hu, C.; Laflamme, S. Reconstruction of unidirectional strain maps via iterative signal fusion for mesoscale structures monitored by a sensing skin. Mech. Syst. Signal Process. 2018, 112, 401–416.

- Brincker, R.; Ventura, C. Introduction to Operational Modal Analysis; Wiley: Chichester, UK, 2015.

- Cawley, P. Structural health monitoring: Closing the gap between research and industrial deployment. Struct. Health Monit. 2018, 17, 1225–1244.

- Sharma, S. Applied Multivariate Techniques; Wiley: New York, NY, USA, 1996.

- Brandt, A. Noise and Vibration Analysis: Signal Analysis and Experimental Procedures; Wiley: Chichester, UK, 2011.

- Kullaa, J. Optimal sensor placement of Bayesian virtual sensors. In Proceedings of the ISMA2020, International Conference on Noise and Vibration Engineering, KU Leuven, Belgium, 7–9 September 2020; Desmet, W., Pluymers, B., Moens, D., Vandemaele, S., Eds.; KU Leuven-Departement Werktuigkunde: Leuven, Belgium, 2020; pp. 973–985.

- Mallardo, V.; Aliabadi, M. Optimal sensor placement for structural, damage and impact identification: A review. Struct. Durab. Health Monit. 2013, 9, 287–323.

- Yi, T.-H.; Li, H.-N. Methodology developments in sensor placement for health monitoring of civil infrastructures. Int. J. Distrib. Sens. Netw. 2012, 8, 612726.

- Krause, A.; Guestrin, C.; Gupta, A.; Kleinberg, J. Near-optimal sensor placements: Maximizing information while minimizing communication cost. In Proceedings of the 5th International Conference on Information Processing in Sensor Networks (IPSN ’06), New York, NY, USA, 19–21 April 2006; pp. 2–10.

- Meo, M.; Zumpano, G. On the optimal sensor placement techniques for a bridge structure. Eng. Struct. 2005, 27, 1488–1497.

- Leyder, C.; Chatzi, E.; Frangi, A.; Lombaert, G. Comparison of optimal sensor placement algorithms via implementation on an innovative timber structure. In Life-Cycle of Engineering Systems: Emphasis on Sustainable Civil Infrastructure. In Proceedings of the Fifth International Symposium on Life-Cycle Civil Engineering (IALCCE 2016), Delft, The Netherlands, 16–19 October 2016; Bakker, J., Frangopol, D.M., van Breugel, K., Eds.; Taylor & Francis Group: London, UK, 2017; pp. 260–267.

- Papadimitriou, C. Optimal sensor placement methodology for parametric identification of structural systems. J. Sound Vib. 2004, 278, 923–947.

- Han, J.-H.; Lee, I. Optimal placement of piezoelectric sensors and actuators for vibration control of a composite plate using genetic algorithms. Smart Mater. Struct. 1999, 8, 257–267.

- Worden, K.; Burrows, A.P. Optimal sensor placement for fault detection. Eng. Struct. 2001, 23, 885–901.

- Kammer, D.C. Sensor placement for on-orbit modal identification and correlation of large space structures. J. Guid. Control Dyn. 1991, 14, 251–259.

- Kammer, D.C. Effects of noise on sensor placement for on-orbit modal identification of large space structures. J. Dyn. Syst. Meas. Control 1992, 114, 436–443.

- Papadimitriou, C.; Lombaert, G. The effect of prediction error correlation on optimal sensor placement in structural dynamics. Mech. Syst. Signal Process. 2012, 28, 105–127.

- Kay, S.M. Fundamentals of Statistical Signal Processing. Detection Theory; Prentice-Hall: Upper Saddle River, NJ, USA, 1998.

- Sohn, H. Effects of environmental and operational variability on structural health monitoring. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2007, 365, 539–560.

- Kullaa, J. Robust damage detection using Bayesian virtual sensors. Mech. Syst. Signal Process. 2020, 135, 106384.

- Hyvärinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; John Wiley & Sons: New York, NY, USA, 2001.

- Kullaa, J. Comparison of time domain and feature domain damage detection. In Proceedings of the 8th International Operational Modal Analysis Conference (IOMAC 2019), Copenhagen, Denmark, 12–14 May 2019; pp. 115–126.

- Kullaa, J. Bayesian virtual sensing in structural dynamics. Mech. Syst. Signal Process. 2018, 115, 497–513.

- Scharf, L.L. Statistical Signal Processing: Detection, Estimation, and Time Series Analysis; Addison-Wesley: Reading, MA, USA, 1991.

- Stark, H.; Woods, J.W. Probability and Random Processes with Applications to Signal Processing, 3rd ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2002.

- Kullaa, J. Whitening transformation in damage detection. In Smart Structures: Proceedings of the 5th European Conference on Structural Control (EACS 2012), Genoa, Italy, 18–20 June 2012; Del Grosso, A.E., Basso, P., Eds.; Erredi Grafiche Editoriali: Genoa, Italy, 2012.

- Worden, K.; Allen, D.; Sohn, H.; Farrar, C.R. Damage detection in mechanical structures using extreme value statistics. In Proceedings of the SPIE 9th Annual International Symposium on Smart Structures and Materials, San Diego, CA, USA, 17 March 2002; Volume 4693, pp. 289–299.

- Coles, S. An Introduction to Statistical Modeling of Extreme Values; Springer: Bristol, UK, 2001.

- Montgomery, D.C. Introduction to Statistical Quality Control, 3rd ed.; Wiley: New York, NY, USA, 1997.

- Kullaa, J. Eliminating environmental influences in structural health monitoring using spatiotemporal correlation models. In Proceedings of the Fourth European Workshop on Structural Health Monitoring, Krakow, Poland, 2–4 July 2008; Uhl, T., Ostachowicz, W., Holnicki-Szulc, J., Eds.; DEStech Publications: Lancaster, PA, USA, 2008; pp. 1033–1040.

- Kullaa, J. Distinguishing between sensor fault, structural damage, and environmental or operational effects in structural health monitoring. Mech. Syst. Signal Process. 2011, 25, 2976–2989.

- Worden, K.; Manson, G.; Fieller, N.R.J. Damage detection using outlier analysis. J. Sound Vib. 2000, 229, 647–667.

| Damage Level | Plate Thickness (mm) | Thickness Decrease (mm) | Measurements |
|---|---|---|---|
| D0 | 5.0 | 0 | 1–100 |
| D1 | 4.5 | 0.5 | 101–106 |
| D2 | 4.0 | 1.0 | 107–112 |
| D3 | 3.5 | 1.5 | 113–118 |
| D4 | 3.0 | 2.0 | 119–124 |
| D5 | 2.5 | 2.5 | 125–130 |

| Damage Location | Nearest Sensors | Acceleration Detection | Acceleration Localization | Strain Detection | Strain Localization |
|---|---|---|---|---|---|
| Loc 1 | 1 | D5 | Fail (5) | D3–D5 | OK (1) |
| Loc 2 | 15–16 | | Fail (22) | | Fail (23) |
| Loc 3 | 21–22 | | Fail (26) | D2–D5 | OK (22) |
| Loc 4 | 28–29 | | OK (28) | | Fail (16) |
| Loc 5 | 51–52 | | OK (51) | | Fail (1) |
| Loc 6 | 22–23 | | OK (22) | | Fail (59) |

| Damage Location | Nearest Sensors | Acceleration Detection | Acceleration Localization | Strain Detection | Strain Localization |
|---|---|---|---|---|---|
| Loc 1 | 1 | D1–D5 | Fail (3) | D1–D5 | OK (1) |
| Loc 2 | 15–16 | | OK (16) | D3–D5 | Fail (21) |
| Loc 3 | 21–22 | D2–D5 | Fail (25) | D1–D5 | OK (22) |
| Loc 4 | 28–29 | D3–D5 | OK (28) | D4–D5 | Fail (38) |
| Loc 5 | 51–52 | D3–D5 | OK (52) | D5 | Fail (58) |
| Loc 6 | 22–23 | D3–D5 | Fail (26) | D3–D5 | Fail (17) |

| Damage Location | Nearest Sensors | Acceleration Detection | Acceleration Localization | Strain Detection | Strain Localization |
|---|---|---|---|---|---|
| Loc 1 | 1 | D1–D5 | Fail (3) | D1–D5 | OK (1) |
| Loc 2 | 15–16 | D5 | OK (15) | D3–D5 | Fail (23) |
| Loc 3 | 21–22 | D2–D5 | Fail (19) | D1–D5 | OK (22) |
| Loc 4 | 28–29 | D2–D5 | OK (28) | D2–D5 | Fail (21) |
| Loc 5 | 51–52 | D2–D5 | OK (52) | D3–D5 | Fail (59) |
| Loc 6 | 22–23 | D1–D5 | Fail (19) | D2–D5 | OK (23) |

| Data | MD Detection | MMSE Detection | MMSE Localization |
|---|---|---|---|
| Acc raw | D5 | D5 | Fail (4) |
| Acc VS | D2–D5 | D1–D5 | Fail (3) |
| Acc rec | D1–D5 | D1–D5 | Fail (3) |
| Strain raw | D5 | D5 | OK (1) |
| Strain VS | D2–D5 | D1–D5 | OK (1) |
| Strain rec | D1–D5 | D1–D5 | OK (1) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kullaa, J. Damage Detection and Localization under Variable Environmental Conditions Using Compressed and Reconstructed Bayesian Virtual Sensor Data. Sensors 2022, 22, 306. https://doi.org/10.3390/s22010306
