Abstract

In order to reduce the amount of hyperspectral imaging (HSI) data transmission required through hyperspectral remote sensing (HRS), we propose a structured low-rank and joint-sparse (L&S) data compression and reconstruction method. The proposed method exploits spatial and spectral correlations in HSI data using sparse Bayesian learning and compressive sensing (CS). By utilizing a simultaneously L&S data model, we employ the information of the principal components and Bayesian learning to reconstruct the hyperspectral images. The simulation results demonstrate that the proposed method is superior to LRMR and SS&LR methods in terms of reconstruction accuracy and computational burden under the same signal-to-noise tatio (SNR) and compression ratio.

1. Introduction

HSI is a collection of hundreds of images that are usually acquired simultaneously in narrow and adjacent spectral bands by airborne sensors [1] or spaceborne spectrometers [2]. HSI combines the traditional two-dimensional remote sensing imaging technology and the optical imaging technology of spectroscopy [3]. Moreover, HSI has achieved the effect of acquiring images and spectra of objects at the same time, which has set off a revolution in the field of remote sensing. In recent years, with the rapid development of precision agriculture, hyperspectral imaging (HSI) technology in hyperspectral remote sensing [4] has been widely used. In precision agriculture, hyperspectral images can be used to monitor drought and flooding in farmlands, pests and diseases, and crop growth, as well as to predict farmland yield. According to the estimated variability from HSI, precision agriculture can improve resource-use efficiency, productivity, quality, profitability, and sustainability of agricultural production, such as by using less irrigation water, fewer pesticides and fertilizers, etc. HSI is also used in many applications besides precision agriculture, including forestry monitoring, natural resource investigation, vegetation observation, food safety, and geological mapping.

However, the acquisition and processing of HSI require a high-sensitivity detector, ultra-long-distance transmission, great computing power, and a huge data storage capacity in order to deal with such huge amounts of data. The HSI data need to be transmitted to ground stations. In this regard, one of the obstacles that researchers have had to face in the past 20 years is that of finding a way to program hyperspectral satellites to reduce the HSI data. Fortunately, HSI data are low-rank [5] and sparse in the transform domain (discrete cosine transform (DCT) or wavelet transform) [6,7,8]. These kinds of data have the features of being low-rank and sparse, which means that the data are redundant, and a small number of datasets can be used to express the entirety of the data. So, we can use these features to reduce the amount of HSI data to be transmitted. This might be a good way to solve the problem.

Recently, many works have focused on modeling, compressing, and reconstructing HSI data using a structured framework. By dividing HSI into many blocks, Zhang et al. [5] introduced an HSI data reconstruction method based on low-rank matrix recovery (LRMR). In particular, for this kind of signal, many new compressive sensing (CS)-based methods [9,10,11] have been proposed [6,8,12,13,14,15,16,17,18,19]. Golbabaee et al. [6] simultaneously reconstructed HSI data with a low-rank and joint-sparse (L&S) structure by assuming that HSI data are low-rank and using a spatially joint-sparse wavelet representation. Zhang et al. [12] showed that the block sparse Bayesian learning (bSBL) algorithm has good recovery performance for data with a spatial block structure (such as an L&S structure). However, most of the existing research focused on low-rank structure reconstruction methods or HSI data denoising methods, and there are no methods for integrating the process of HSI acquisition and combining a sparse structure reconstruction method to reconstruct HSI data.

In this paper, a method based on the bSBL framework is proposed to compress, transmit, and reconstruct the entirety of HSI data. Here, each sub-matrix of multi-channel data is collected, compressed, and transmitted to the ground processing center through push-broom imaging. The proposed method not only combines the L&S structure priors on HSI data and the appropriate priors on hyperparameters, but also uses the information of the main components of the data. Firstly, we assume that the covariance matrix of the data is a diagonal matrix, and then obtain the initial value of the hyperparameter. Finally, an EM-like method is used to obtain the best reconstruction of the HSI data. The simulation results show that the proposed method has better performance than other existing methods.

We highlight the following main contributions:

- (1)

- The proposed method gives the structure of the covariance matrix of the L&S signals, models HSI data with the L&S structure, and utilizes the CS and Bayesian learning methods to compress and reconstruct HSI data.

- (2)

- In the reconstruction part, the proposed method makes use of the relationship between multiple dimensions of high-dimensional data and combines data reconstruction with HSI data acquisition. It can be used to realize the segmented acquisition, compression, and transmission of HSI data so as to reduce the amount of calculation and data transmission that must be performed in the satellite.

- (3)

- We demonstrate the superior performance of the proposed method in comparison with state-of-the-art alternatives by conducting experiments on both synthetic signals and real signals.

The rest of this paper is arranged as follows: Section 2 introduces two 2D reconstruction methods for HSI data. Section 3 introduces the L&S data compression and reconstruction method combined with the acquisition mode. Section 3.1 introduces a model of the problem, and Section 3.2 proposes the HSI reconstruction method based on push-broom imaging. Section 3.3 introduces the simulation results. Discussion is given in Section 4. Finally, this work is concluded in Section 5.

Notation: denotes the probability density function of , which follows a Gaussian distribution with mean and variance . denotes the determinant of . denotes the norm of . denotes the vectorization of the matrix formed by stacking its columns into a single column vector. denotes the transpose of . denotes the trace of .

2. Two-Dimensional Reconstruction Methods for HSI Data

As described in the previous section, this section will combine the fast method proposed in our previous work [20] to compress and reconstruct HSI data from two different two-dimensional (2D) slice methods and will provide two 2D reconstruction algorithms for three-dimensional (3D) HSI data. These two methods are the method of expanding 3D data HSI from different bands to 2D data by using a tensor and the method of slicing them into 2D data one by one according to the acquisition mode.

The HSI data experiment was carried out with the repetition of 100 real tests. Each experimental datum was selected from the Salinas Database (http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes#Salinas-A_scene (accessed on 10 November 2021)) dataset in a area, and 20 bands were used, that is, the data matrix was a 3D matrix with . The two 2D reconstruction methods are first introduced in the following sections. The core part of this section shows the direct application of the fast L&S-bSBL method [20]. The same abbreviations as in [20] are used here.

- (1)

- Estimation of .After the Bayesian posterior probability is obtained with the Bayesian rule, the maximum a posteriori (MAP) is used to obtain the estimation of :where is a block matrix of , and there are few elements that are non-zero. Moreover, the sparsity of blocks of is determined by . When , the value of the kth related block in is zero.

- (2)

- Estimation of .In order to obtain , we simplify the expression of asAfter maximizing the logarithm of the joint probability of and , we take the derivative of it with respect to :

- (3)

- Estimation of .where

- (4)

- Estimation of .The algorithm calculates the singular values of through singular value decomposition (SVD) for each slice of the HSI data and sorts the singular values in descending order. Depending on the singular value of the sequence, we can obtain the value of the corresponding , . The corresponding to the larger singular value is 1, or is otherwise 0. For HSI data, a large singular value distribution can be obtained by analyzing only one segment of its 2D slice signal in advance. The expression of is

- (5)

- Estimation of .

2.1. Two-Dimensional HSI Reconstruction Algorithm—L&S-bSBL (1)

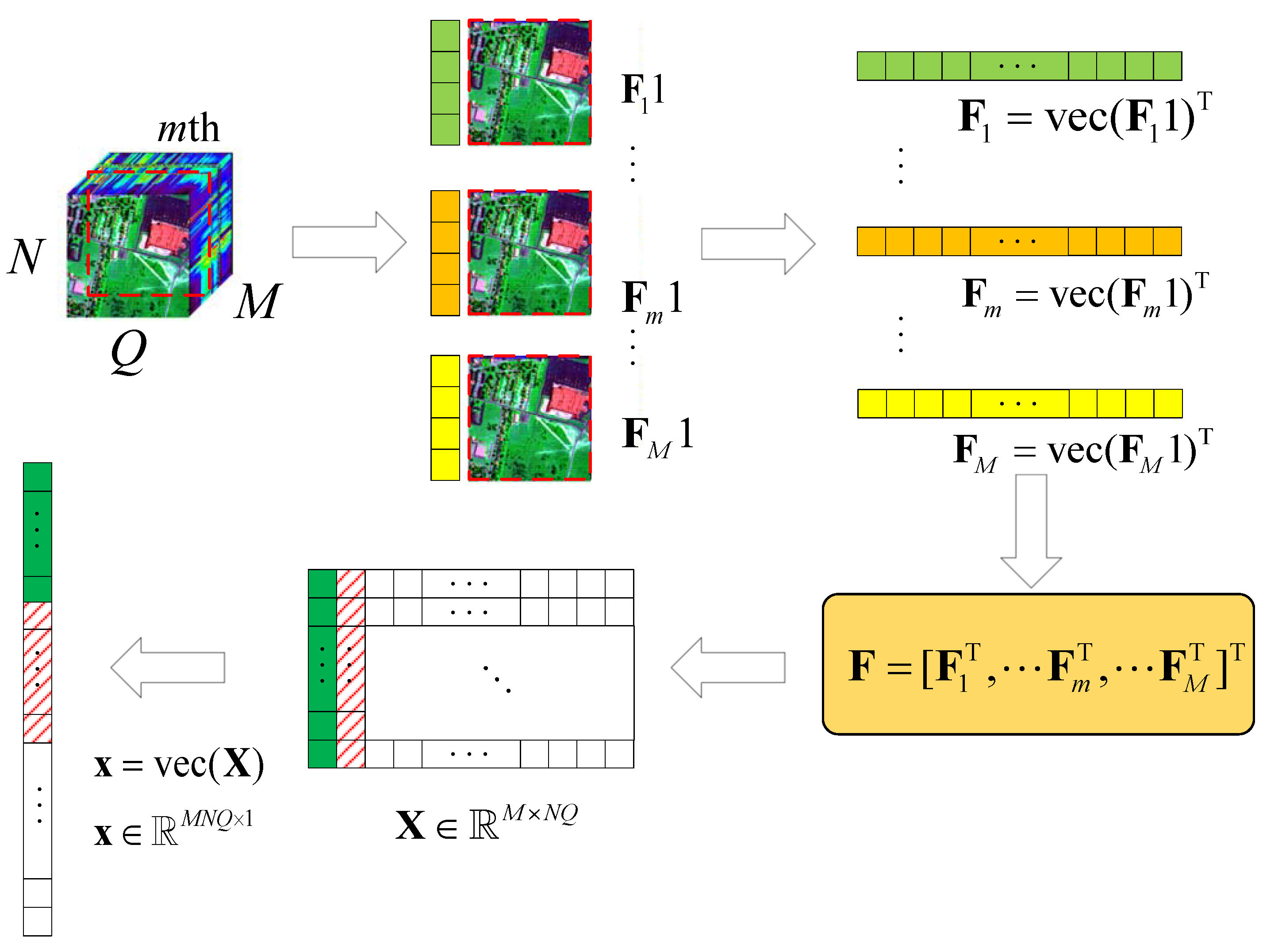

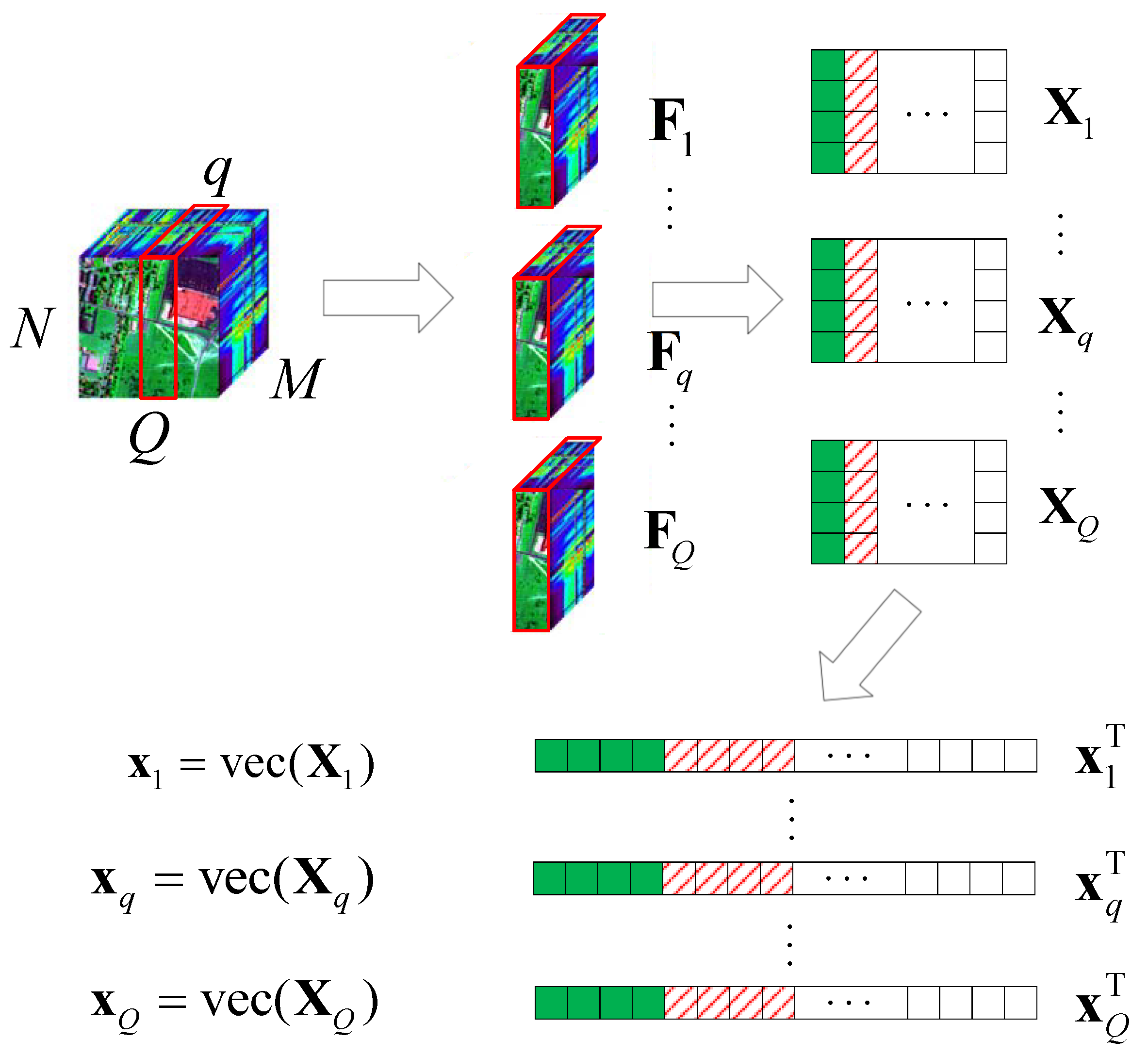

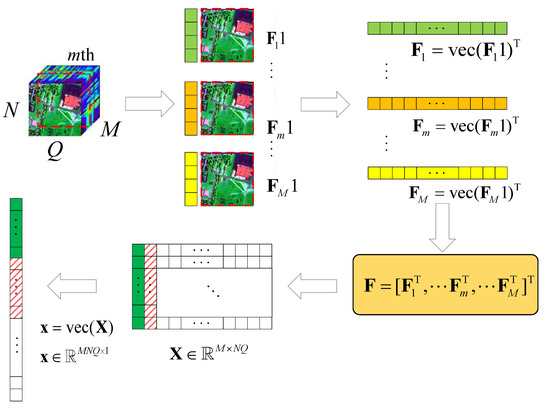

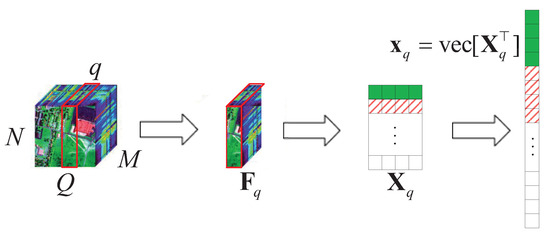

Here, we consider a typical HSR scenario (push-broom imaging) in which there are M sensors with different wavelengths (called channels or bands; here, we have M bands) for acquiring HSI data , in an area following the Q-dimension, where , . Here, we simply express this as , . After all HSI data are collected, the proposed algorithm performs the following steps on the HSI data, as shown in Figure 1.

Figure 1.

Schematic diagram of the HSI data tensor expanded into 2D data.

- (1)

- According to the different bands M, we use the tensor to expand with , , ;

- (2)

- let , turn into , by using the operator;

- (3)

- let , ;

- (4)

- obtain the corresponding value of in the DCT domain .

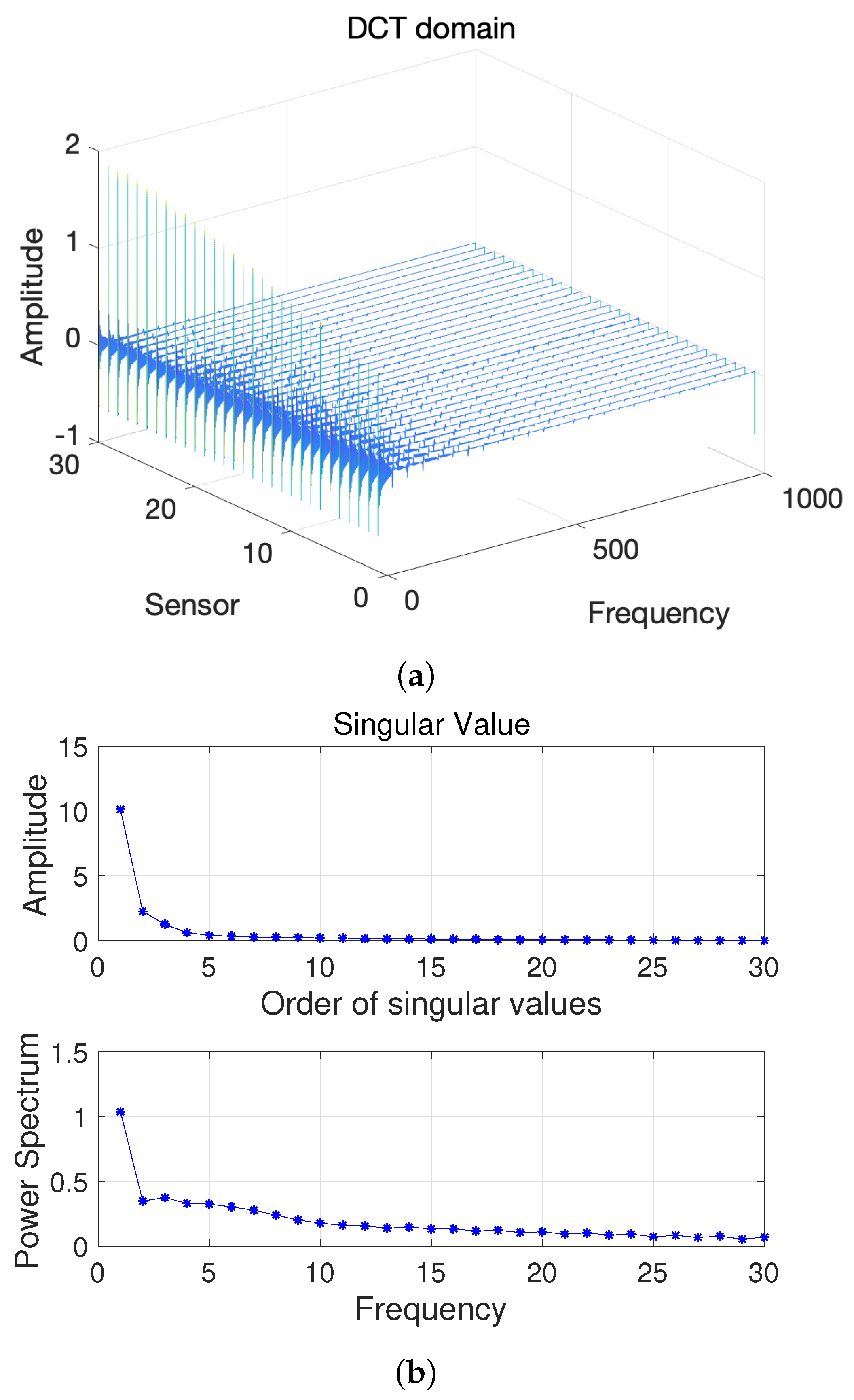

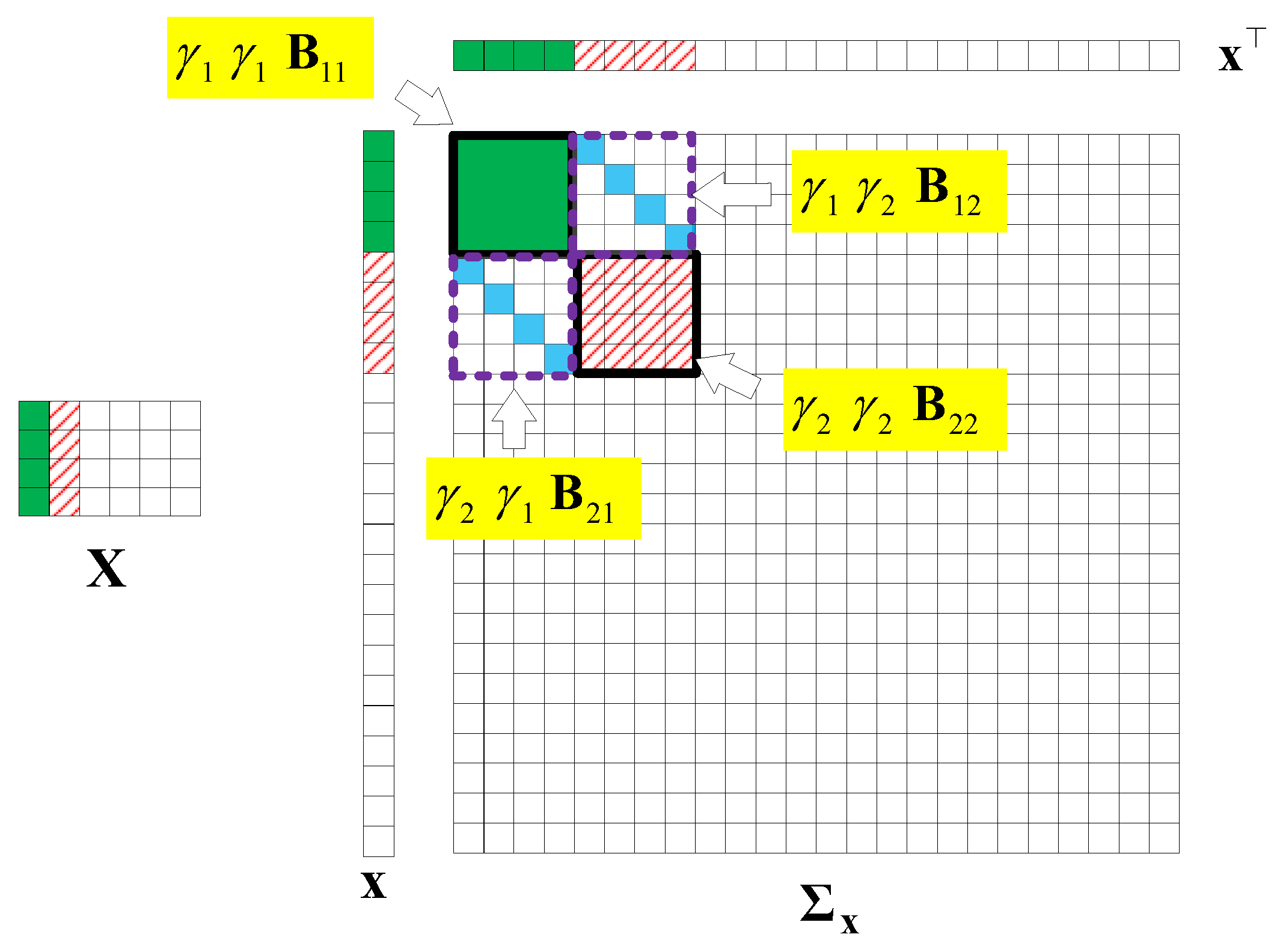

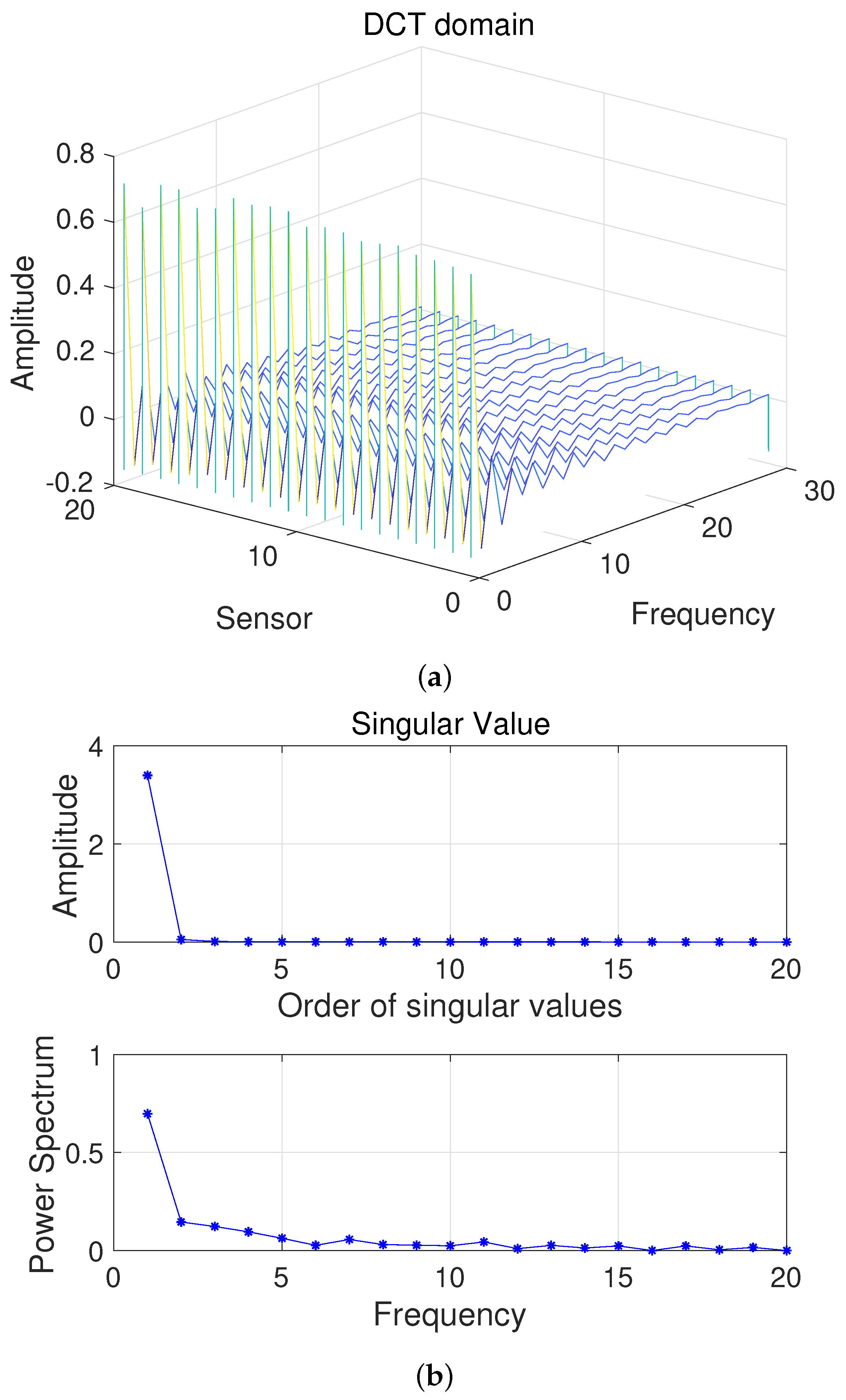

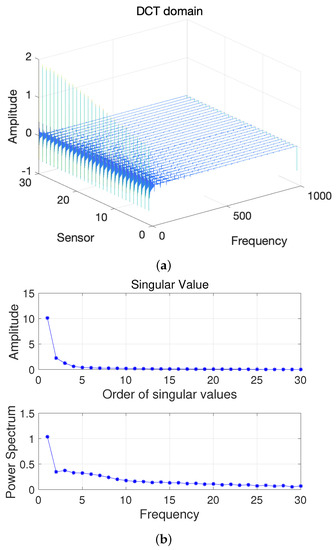

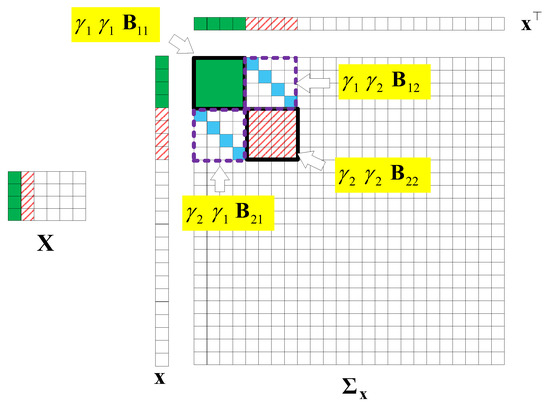

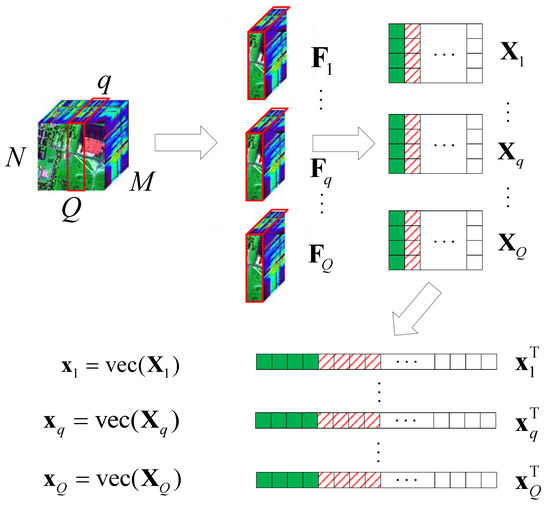

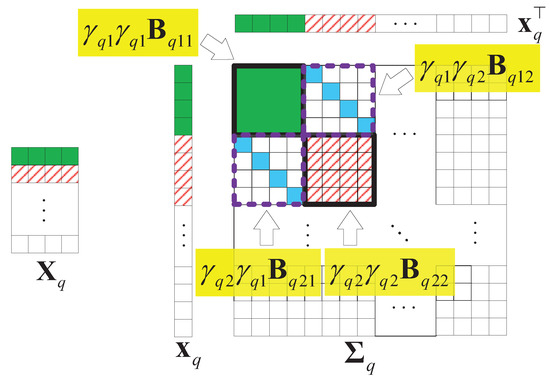

Here, we first conduct a principle component analysis (PCA) on the data . As shown in Figure 2, we can obtain 2D HSI tensor expansion data in the sparse domain, which is mainly distributed in the first two columns, as well as the corresponding and ; the other , is 0. Figure 3 shows a schematic diagram of the covariance matrix of x.

Figure 2.

(a) Two-dimensional HSI in the DCT domain and (b) Singular value vs. Frequency.

Figure 3.

A covariance matrix of 2D HSI data .

For the obtained , the single measurement vector (SMV) model is used to solve the problem of data compression, transmission, and reconstruction. First, the acquired data are columnarized into , encoded by the linear mapping matrix , and then transmitted to the ground receiving station through a wireless channel. Here, we assume that the encoded data will be superimposed with noise in the process of channel transmission, and the data received at the ground receiving station are denoted as . Therefore, the mathematical model of the problem can be expressed as . The proposed algorithm uses the fast L&S-bSBL algorithm in Equations (1), (3), (4), (7) and (8) to iteratively reconstruct the original data ; then, we obtain , . Most existing research regards the HSI signal as a low-rank signal to reconstruct, so the typical Bayesian low-rank reconstruction algorithm BARM [21] and the simultaneous low-rank and joint-sparse reconstruction algorithm SS&LR [22] are selected as the algorithms for comparison.

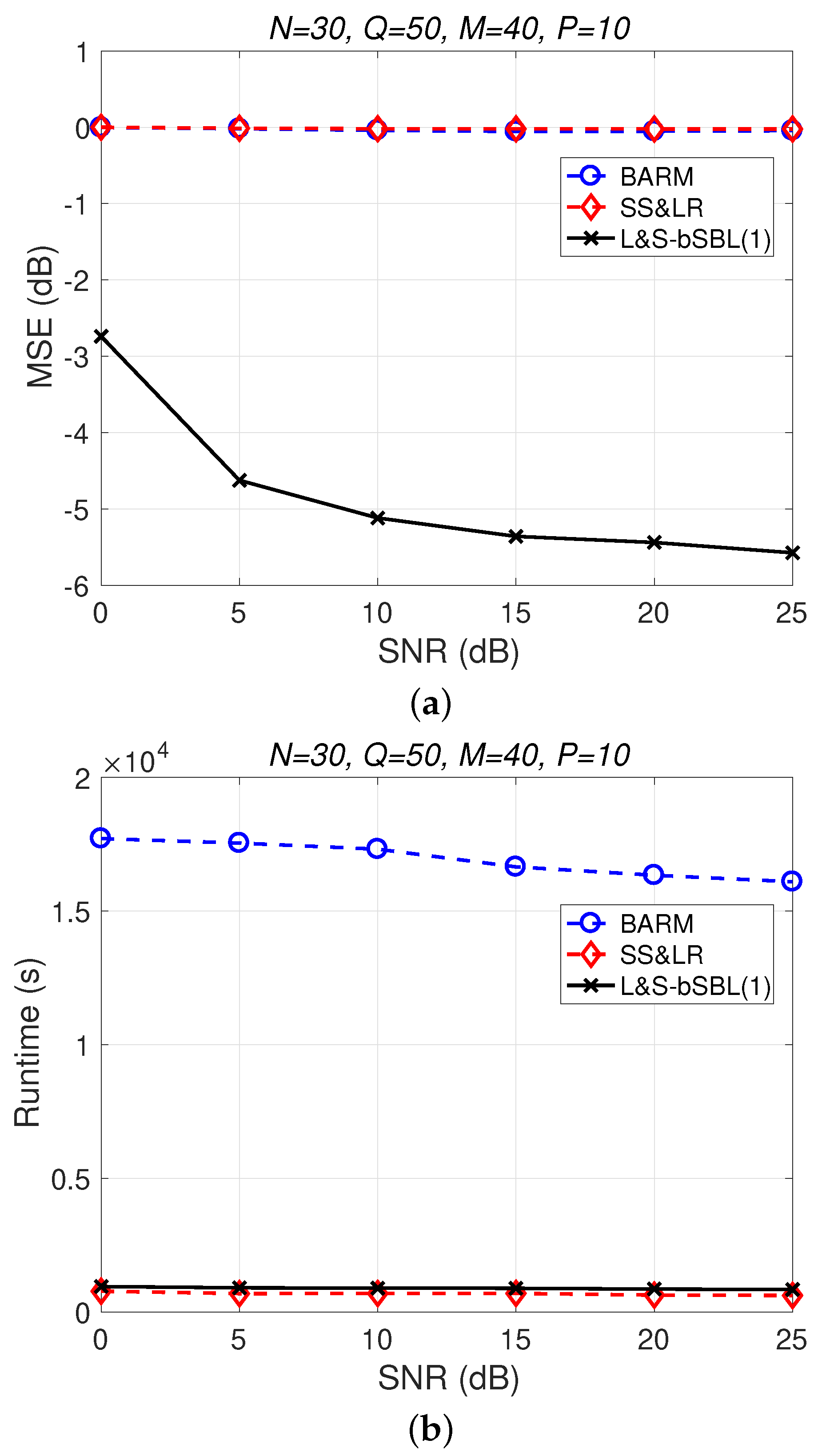

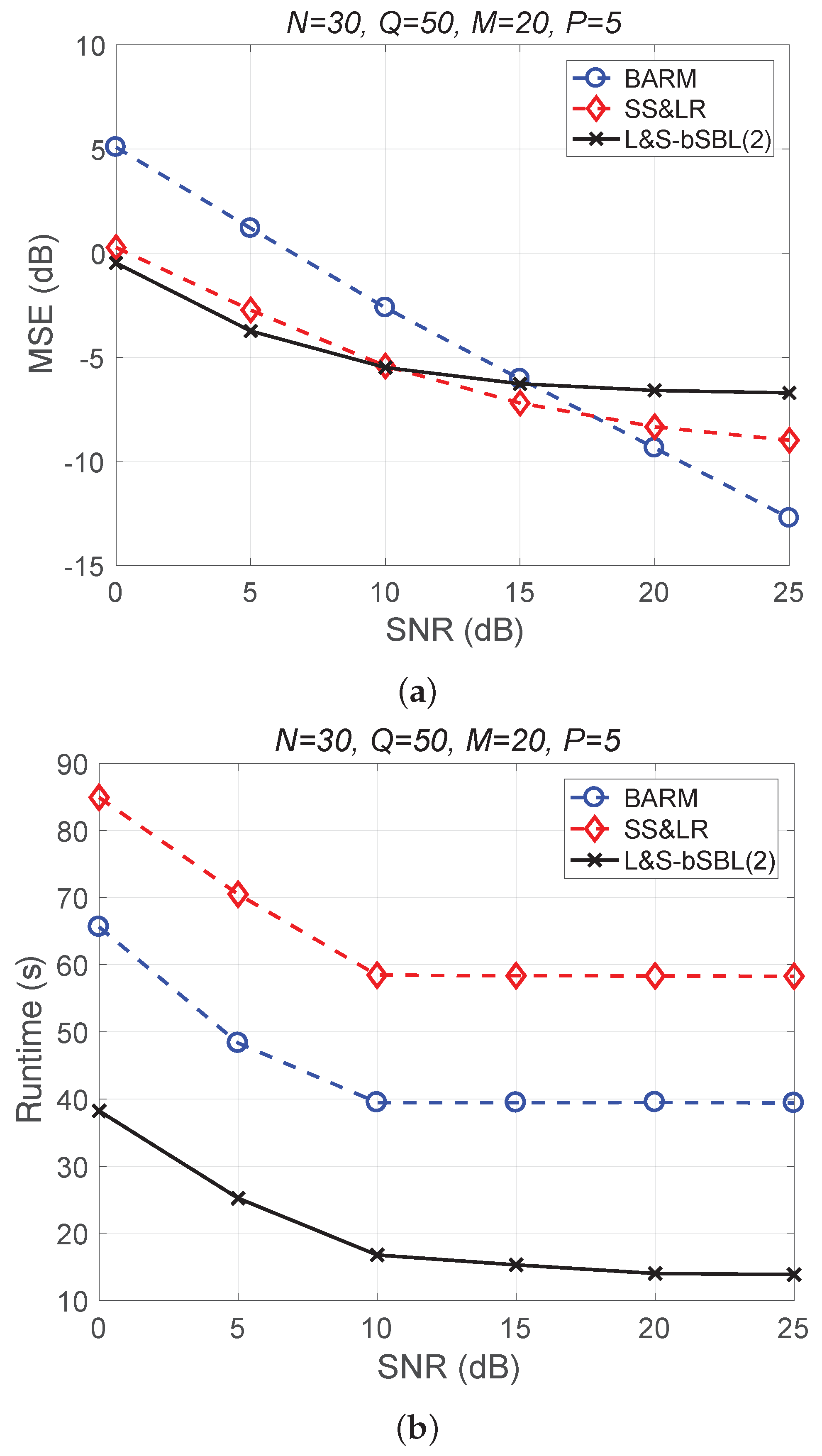

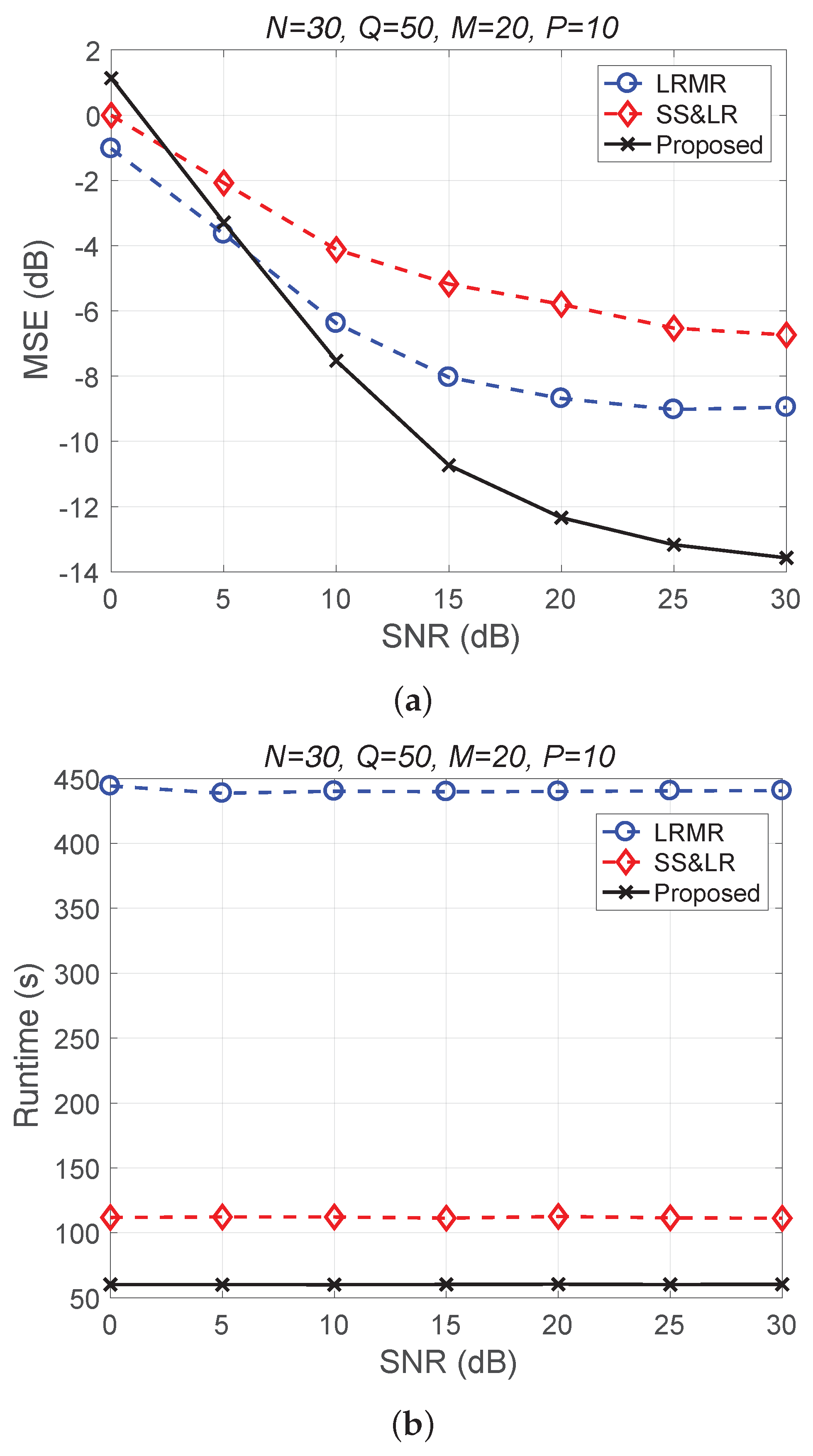

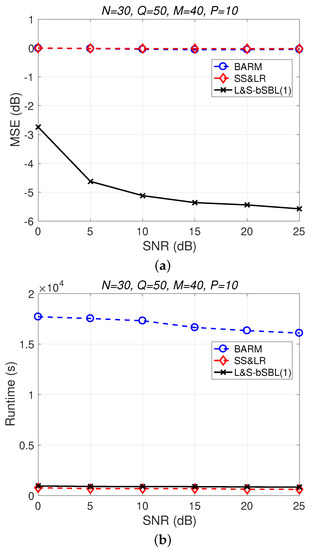

Figure 4 shows a comparison of the reconstruction performance (including mean squared error (MSE) vs. signal-to-noise ratio (SNR) and runtime vs. SNR) of all algorithms in reconstructing 2D unfolded HSI data. In Figure 4a, we observe that the proposed algorithm L&S-bSBL (1) outperforms all of the other methods because the data expansion method of the proposed algorithm increases the amount of relevant data. As shown in Figure 4b, the proposed algorithm uses less runtime than BARM and almost the same amount as SS&LR. Comprehensive consideration of the two figures shows that the proposed algorithm is better than the other two algorithms in terms of reconstruction performance and computational resource consumption.

Figure 4.

(a) MSE vs. SNR and (b) runtime vs. SNR for the reconstruction of 2D HSI data.

2.2. Two-Dimensional HSI Reconstruction Algorithm—L&S-bSBL (2)

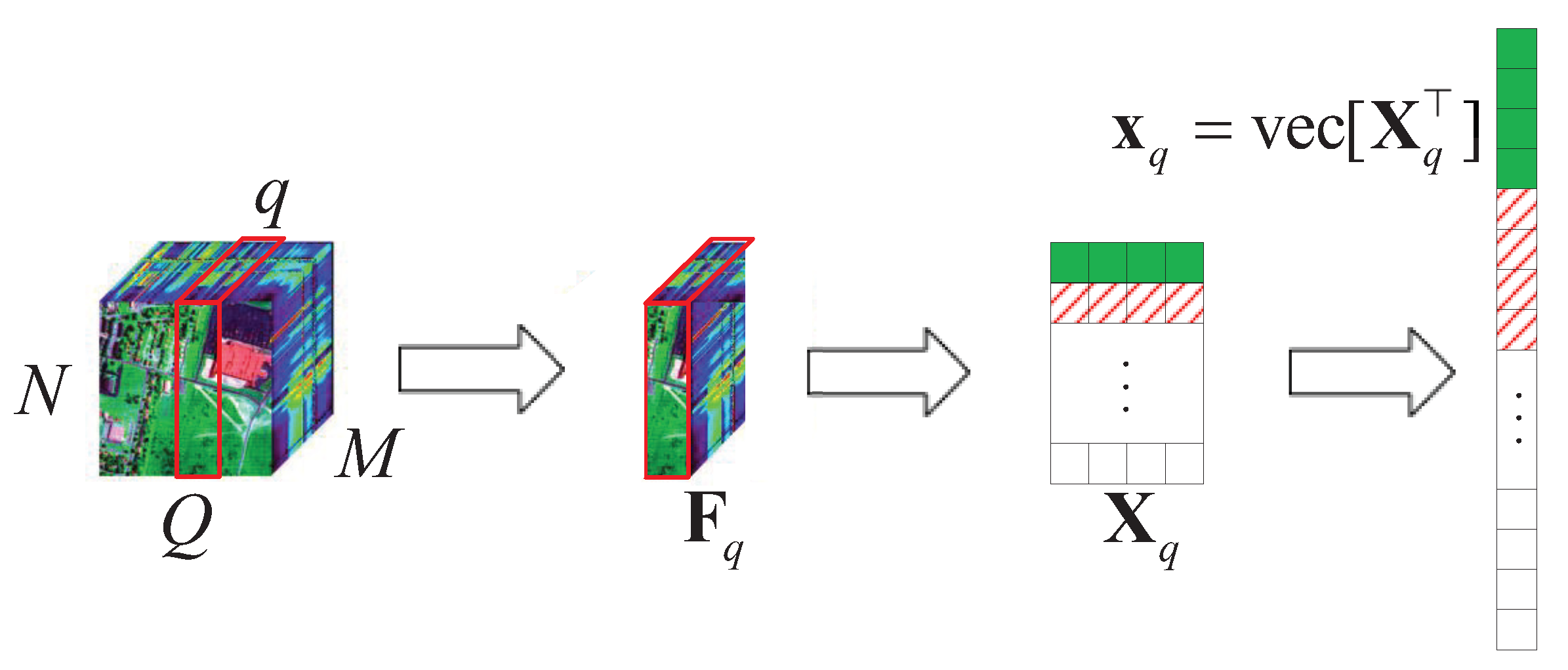

Here, we also consider a typical HSR scenario (push-broom imaging) in which there are M sensors with different wavelengths (called channels or bands; here, we have M bands) to acquire HSI data , in an area following the Q-dimension, where , . After all HSI data are collected, the proposed algorithm performs the following steps on the HSI data, as shown in Figure 5.

Figure 5.

A schematic diagram of the HSI slice decomposition according to the push-broom imaging method following Q.

- (1)

- Let slices and , as ;

- (2)

- obtain the corresponding value of in the DCT domain , , ;

- (3)

- let turn into , , by using the vec operator.

Here, we first conduct a PCA on the data . As shown in Figure 6, we can obtain 2D HSI slice data in the sparse domain, which is mainly distributed in the first two columns, as well as the corresponding and ; the other , is 0. The schematic diagram of the covariance matrix of is similar to that shown in Figure 3.

Figure 6.

(a) Two-dimensional HSI slices in the DCT domain and (b) Singular value vs. Frequency.

For the obtained , the SMV model is used to solve the problem of data compression, transmission, and reconstruction. Firstly, the acquired data are columnarized into , encoded by the linear mapping matrix , and then transmitted to the ground receiving station through a wireless channel. Here, we assume that the encoded data will be superimposed with noise in the process of channel transmission, and the data received at the ground receiving station are denoted as . Therefore, the mathematical model of the problem can be expressed as . The proposed algorithm uses the fast L&S-bSBL algorithm in Equations (1), (3), (4), (7) and (8) to iteratively reconstruct the original data ; then, we obtain , . Most existing research regards HSI signals as low-rank signals to recover, so BARM and SS&LR are selected as the algorithms for comparison.

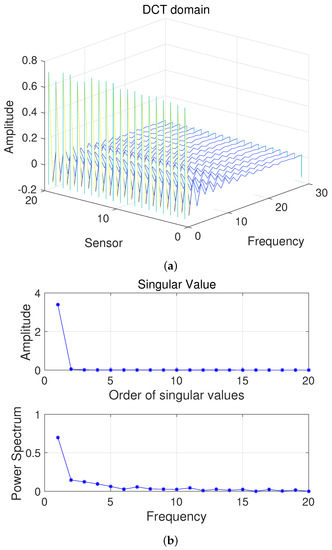

Figure 7 shows a comparison of the reconstruction performance of all algorithms for all Q slices. In Figure 7a, we can see that the reconstruction of these slices is acceptable. However, L&S-bSBL (1) has better performance than L&S-bSBL (2) in reconstructing the same data, as shown in Figure 4. The reason is that L&S-bSBL (1) greatly utilizes the correlation between all data and improves the reconstruction performance, while L&S-bSBL (2) only uses the correlation within a certain band, ignoring the correlation between the bands at the same location.

Figure 7.

(a) MSE vs. SNR and (b) runtime vs. SNR for the reconstruction performance of 2D HSI push-brooming slices in all algorithms.

Moreover, L&S-bSBL (2) only takes about 35 s compared with L&S-bSBL (1), which takes 150 s to recover the same data, as shown in Figure 4. This is because L&S-bSBL (1) has a large data dimension for a single operation and L&S-bSBL (2) has a small dimension, and the amount of calculation required for the calculation of the matrix inverse operation is not within an order of magnitude.

3. L&S Reconstruction Algorithm Combined with Acquisition Methods

3.1. Problem Formulation and Signal Model

We consider a typical HSR scenario (push-broom imaging) in which there are M sensors with different wavelengths (called channels or bands; here, we have M bands) to collect HSI data , in an area. Figure 8 shows that a spaceborne spectrometer (push-broom imaging) acquires multi-channel data , with time synchronization among different regions following the Q-dimension, where , stands for the data collected by the channel sensor and is the spatially and temporally correlated data matrix. Then, is encoded by linearly mixing with and transmitted to a ground receiving station, denoted as , after superimposing noise . Finally, a novel CS method is used to decode all at the same time at the ground processing center.

Figure 8.

An example of hyperspectral image acquisition.

Fortunately, HSI data are highly correlated with the locations and bands of their non-zero elements in a sparse domain. So, we can get an approximately L&S matrix from , where is a sparsifying basis (e.g., DCT matrix or wavelet matrix) [23], and . Thus, we have the following formulation:

where is a known dictionary matrix. Here, this problem belongs to the multiple measurement vector (MMV) [24] problem.

Following our recent work [12], we now consider a bSBL framework [25] (i.e., L&S-bSBL) to reconstruct all . By letting , , , , after transforming the MMV problem to the block SMV [24] problem, we have

Then, the original problem becomes

where , , , , and denotes a identity matrix. , , is the qth block in . The presence of K non-zero rows in means that there are non-zero blocks in . Thus, is a block sparse vector. , .

We assume that the noise elements , , follow an identical and independent distribution with . We define the Gaussian likelihood for problem (10) as

and the prior of is given by

where is a covariance matrix between and , , M. , , . , is the sparsity pattern vector of , where the support indicates , , M.

Typically, we can obtain

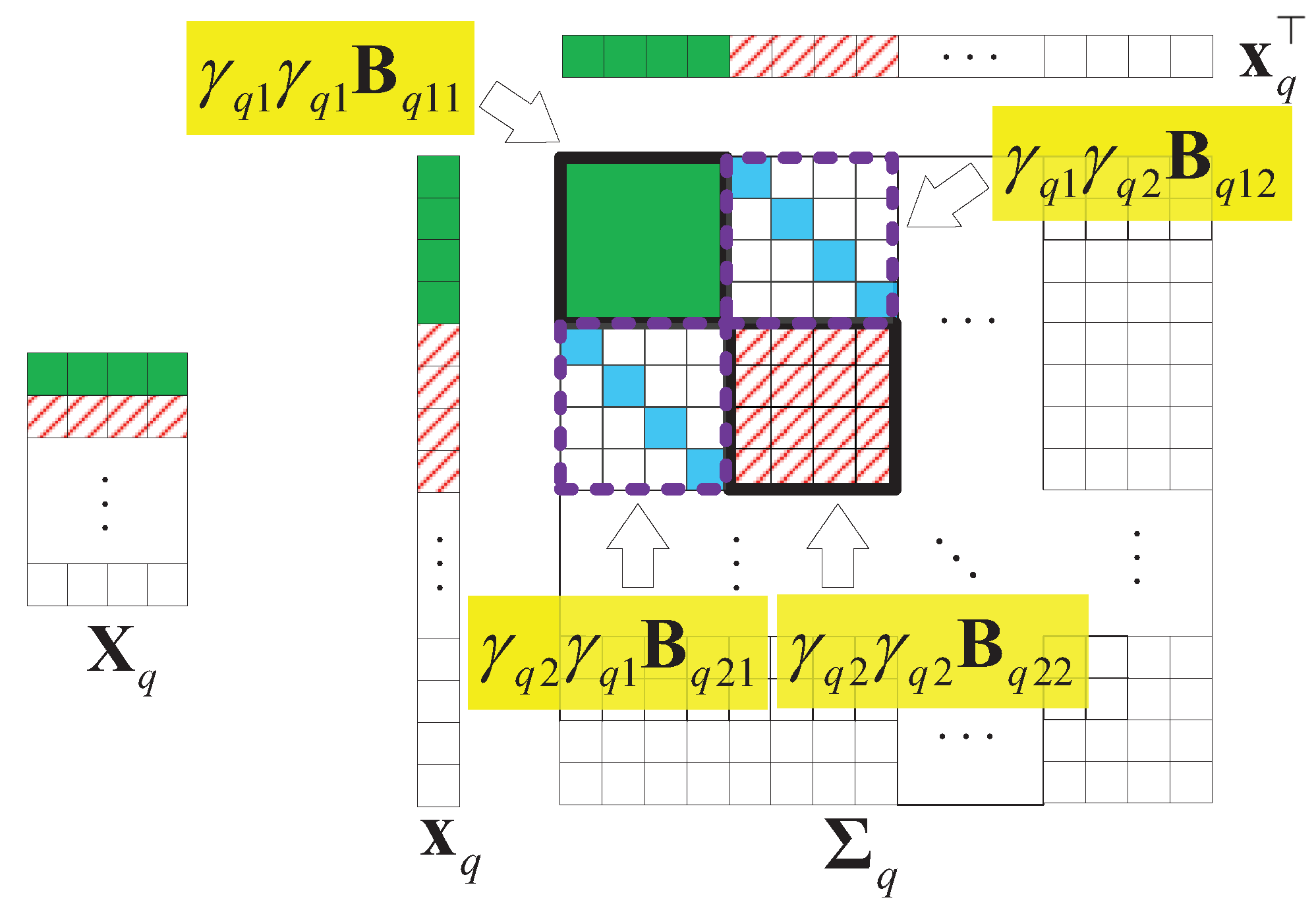

Without losing generality, we assume that is a matrix that is low-rank and joint-sparse in columns. Figure 9 illustrates an example of and the structure of the covariance matrix of with . In the figure, we find that only the first two columns have values, so only and . Thus, the parts related to , in are valuable.

Figure 9.

An example of the structure of the covariance matrix of , , .

3.2. Proposed Method

Following our last work [12], we obtain the posterior density of with the Bayesian rule:

where the mean and the covariance can be obtained by

When all of the hyperparameters are given, the maximum a posteriori (MAP) estimate of can be obtained by

where is the approximate diagonal matrix obtained by Equation (14), with most block matrices being zeros. It is clear that control the sparsity of the blocks of . When , the associated th block in becomes zero. In fact, .

Following the bSBL framework [25], to avoid overfitting, we use a common positive definite matrix to model all of the covariance matrices . In , we also use a common positive definite matrix instead of and we use a group of instead of all , and instead of . From the previous analysis, we can find that the covariance matrices induce the spatiotemporal correlation in the prior density. Thus, Equation (14) can be written as

Using the Bayesian strategy, we maximize the marginal probability of :

which is equivalent to minimizing the cost function of :

where denotes a set of positive definite matrices.

Here, denotes the covariance of given and .

Simply, ; thus, (21) turns into

Firstly, is treated as a hidden variable in the expectation maximization (EM) formulation that proceeds, and we maximize

where denotes the hyperparameters that are estimated in the previous iteration.

To estimate —only the first term in the function is correlated with —it can be simplified as

where denotes the estimation of in the previous iteration. When we calculate the derivative of Equation (25) over and set it equal to zero, is obtained:

To estimate and , is first assumed, where denotes a diagonal matrix operator. Notice that only the second term in Equation (24) is related to and . So, we can simplify the function (24) to

Then, we have

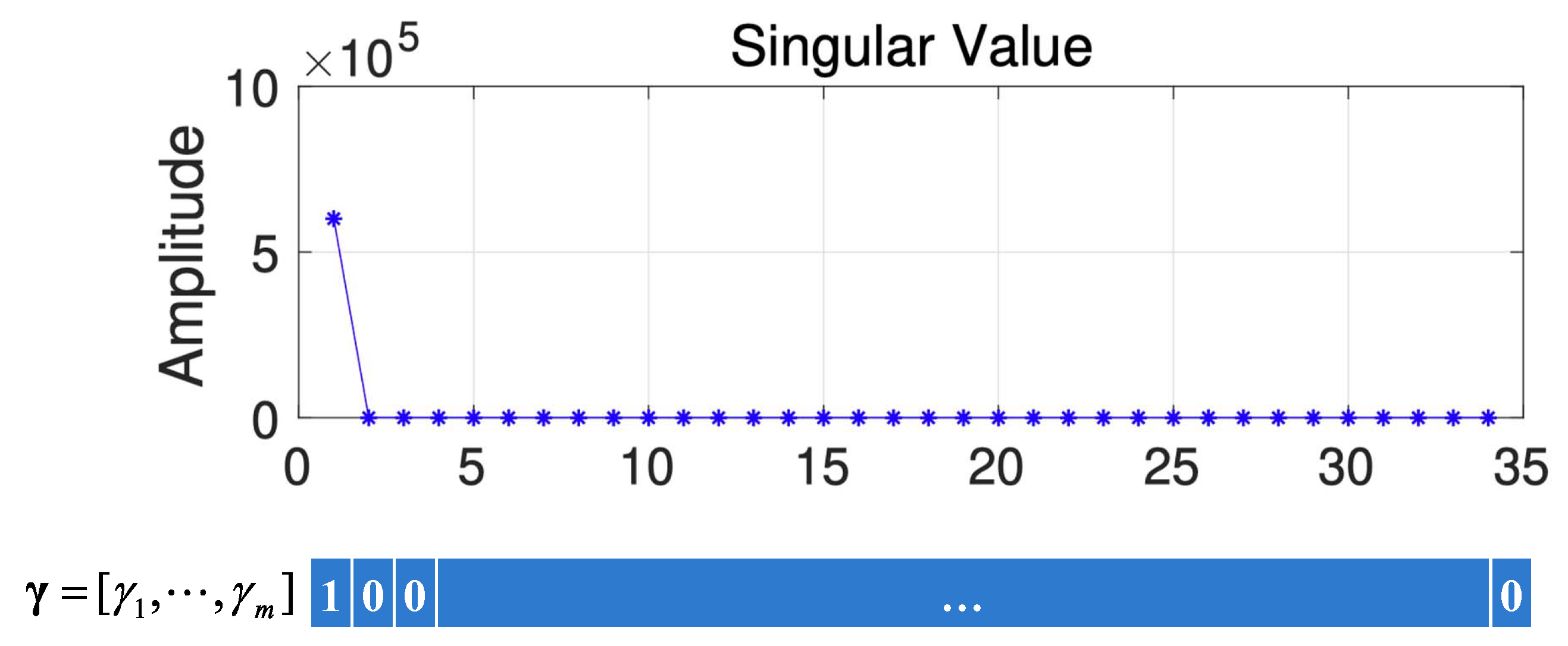

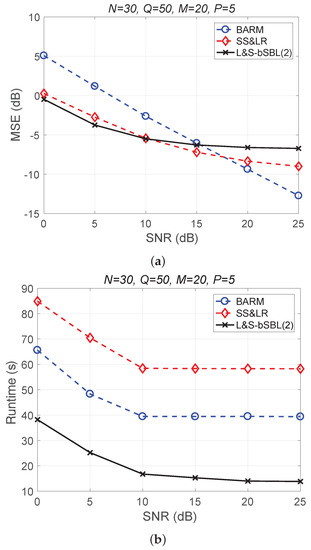

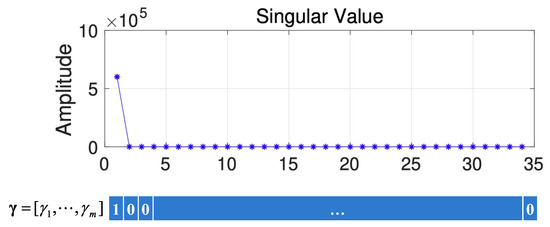

To obtain the values of , we use the feature given in [20]. Specifically, the values of corresponding to the larger singular values (the larger singular values are defined as singular values that are larger than 10% of the largest singular value) are 1; otherwise, they are 0. Figure 10 gives a schematic diagram of how is obtained from HSI data. From Figure 10, we can find that only the first singular value is larger, so .

Figure 10.

The schematic diagram of how is obtained.

To estimate , and are plugged into Equation (28). So, we can obtain the gradients of Equation (28) over , and then we can obtain .

Thus, we will get . Using the same method, we can get . Finally, we get . Here, denotes an initial value of .

In order to get a closed form of , we employ standard upper bounds to solve Equation (21), which is known as a non-convex optimization problem leading to an EM-like algorithm. For the first and second terms of , we compute their respective bounds.

For the first term in Equation (21), we can obtain

where equality is obtained when satisfies Equation (18).

For the second term, we can obtain

where, for the second term , a first-order approximation is used to approximate it with a bias term C. The equality will hold when the gradient satisfies

where and . Finally, by using the upper bounds of Equations (30) and (31) and , we can obtain the optimal in a closed form:

Starting with , we iteratively compute Equations (18), (32) and (33), then obtain an estimation for and a corresponding estimation for given by Equation (18). Here, the proposed method is outlined in Algorithm 1.

| Algorithm 1 Proposed method |

|

3.3. Simulation Experiments

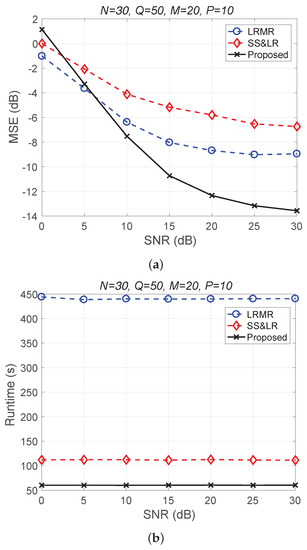

In this section, we present the results with HSI data in order to compare the performance of the proposed method with that of the prior state-of-the-art LRMR [5] and SS&LR [22] methods. For HSI data, 100 continuous-time trials were run. In each trial, data of a two-dimensional area and 20 bands from the Salinas Database (available at http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes#Salinas-A_scene (accessed on 10 November 2021)) and Indian Pines (available at http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes#Indian_Pines (accessed on 10 November 2021)) were used. Thus, the data matrix was a matrix.

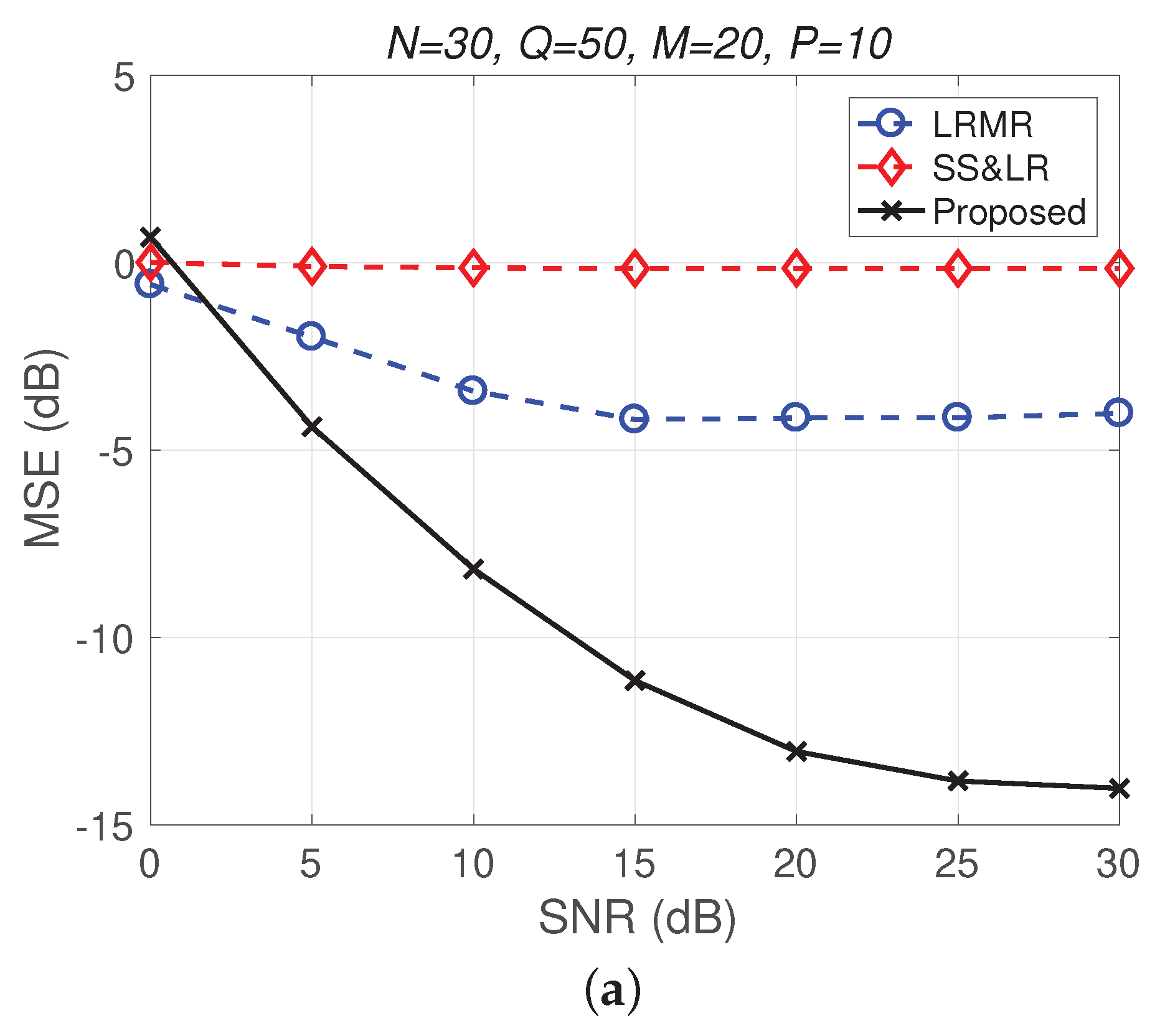

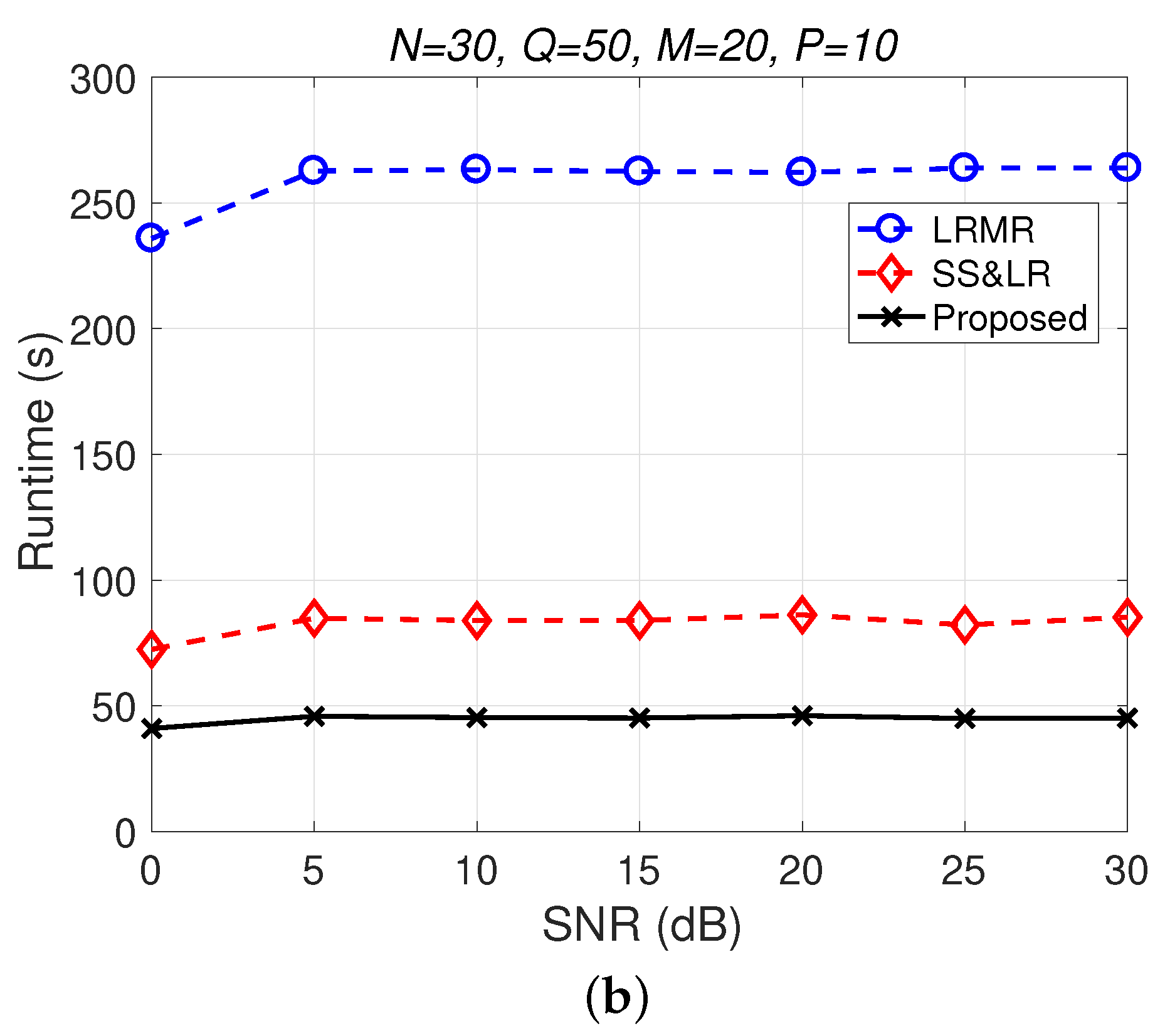

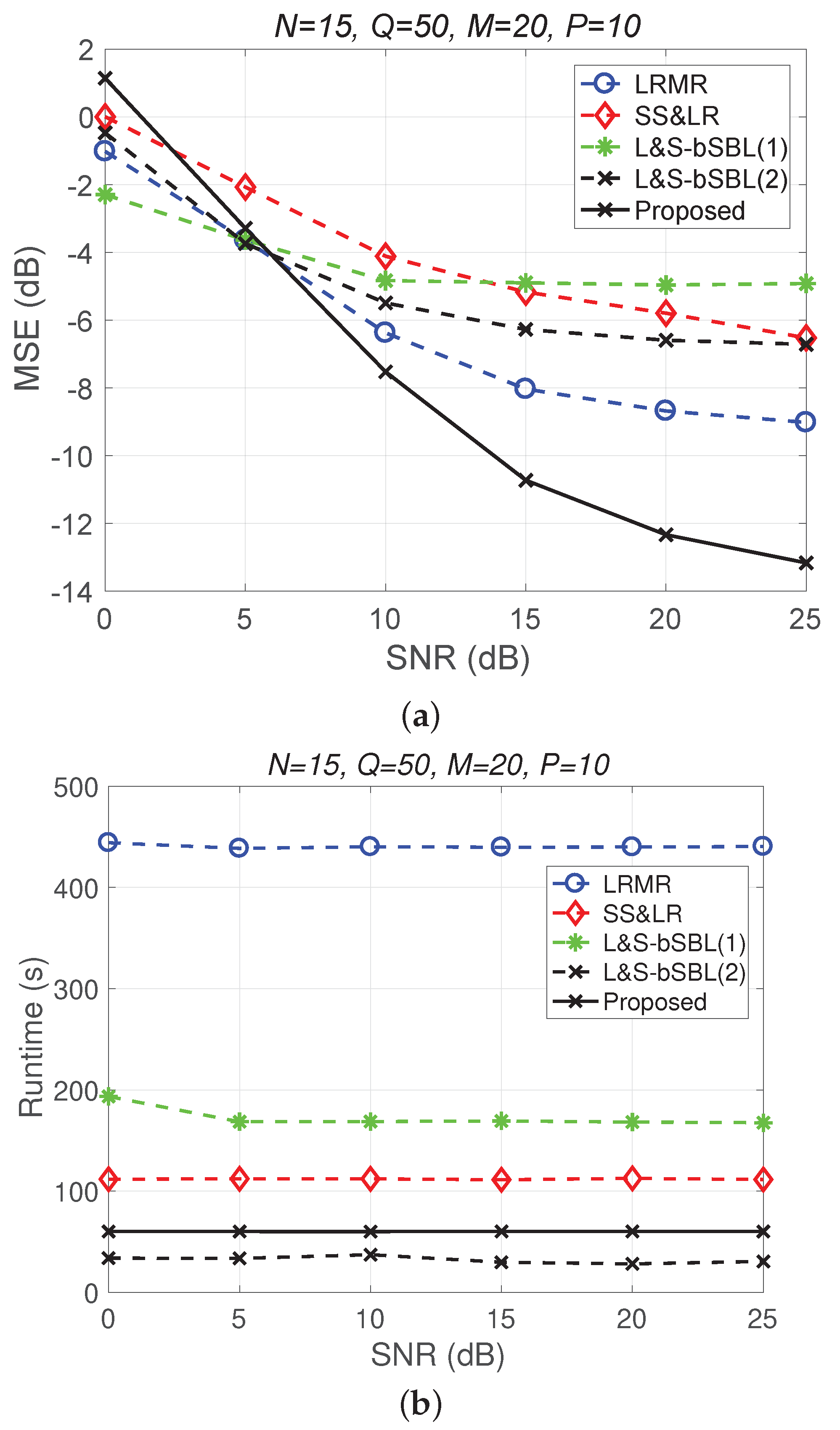

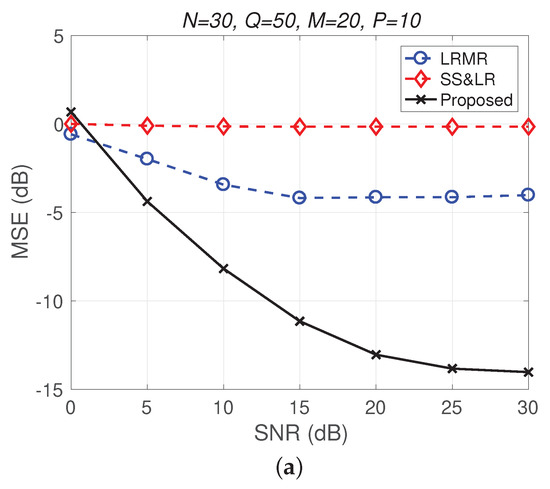

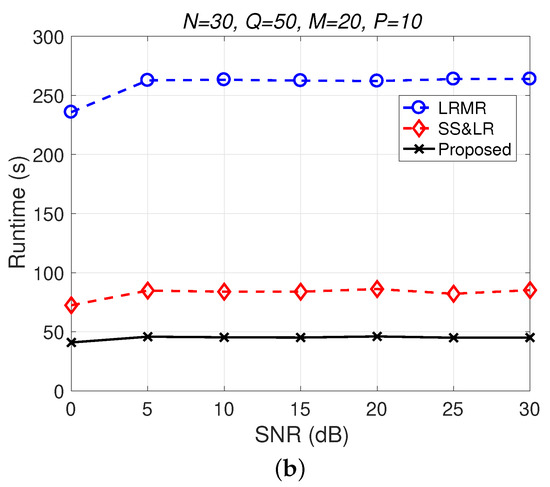

Figure 11 and Figure 12 plot the performance in terms of MSE versus SNR and runtime versus SNR, respectively. Here, , so the ratio of compression is . In Figure 11a, we can observe that proposed method outperforms all of the other methods in terms of MSE; e.g., at dB, we note that the proposed method achieves reconstruction gain at least 5 dB greater than those of the other methods. Figure 11b shows that the proposed method uses less runtime than the others. In Figure 12, we can observe that the effect of SS&LR is not good, but our proposed method works well and outperforms all of the other methods in terms of MSE; e.g., at dB, we note that the proposed method achieves a reconstruction gain at least 6 dB greater than those of the other methods. The proposed method also uses less runtime than the others.

Figure 11.

(a) MSE vs. SNR and (b) runtime vs. SNR for the reconstruction of the Salinas data.

Figure 12.

(a) MSE vs. SNR and (b) runtime vs. SNR for the reconstruction of the Indian Pines data.

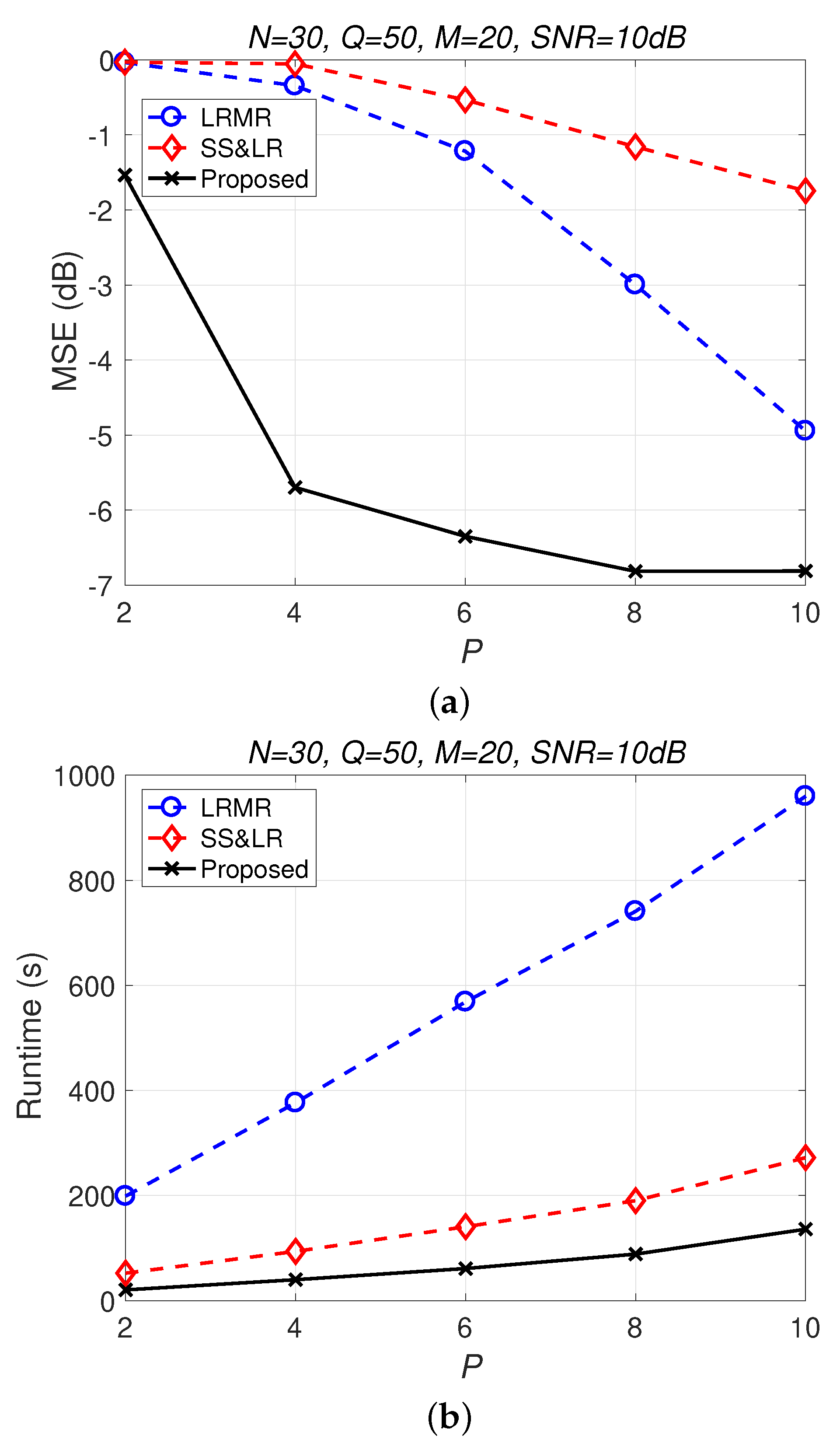

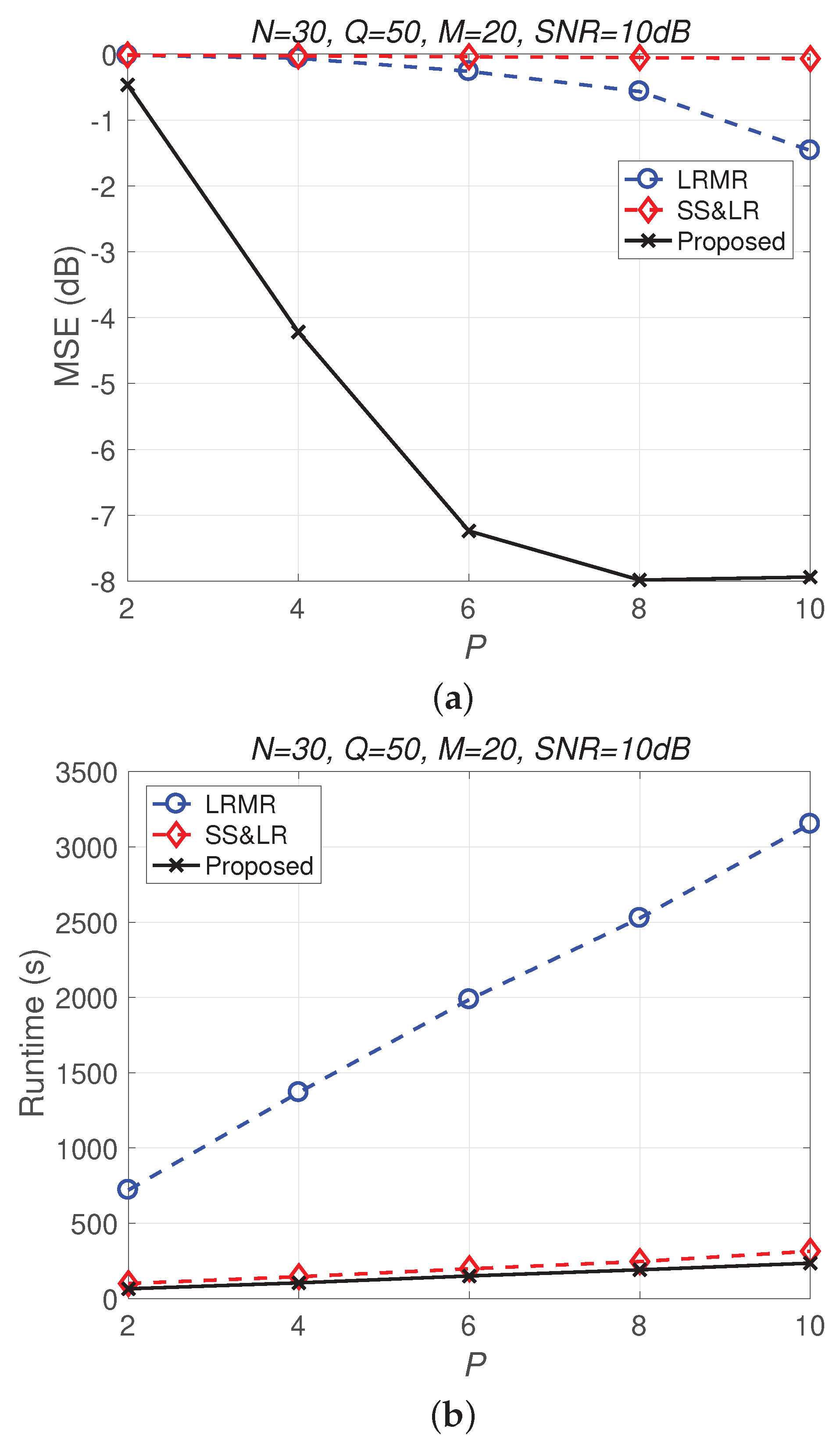

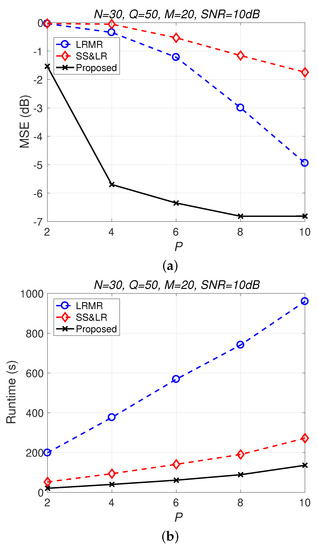

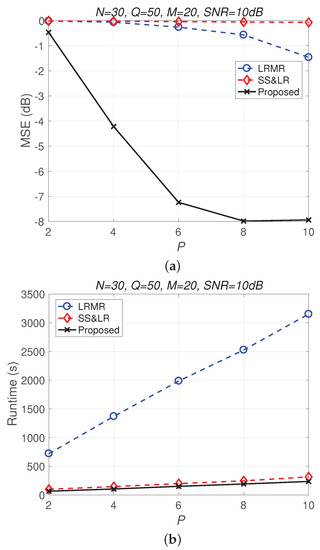

Figure 13 and Figure 14 compare all of the methods for different values of compression P. Figure 13a shows that most of the MSE curves fall as P increases. As the compression ratio decreases (from to ), the reconstruction error becomes smaller, affecting the signal reconstruction performance. Fortunately, the proposed algorithm yields better performance in all cases and has a higher compression ratio with the same reconstruction performance. Figure 13b shows that the proposed method consumes less computation time at the same compression ratio. From Figure 14, we observe that the effects of SS&LR and LRMR are not good, but our proposed method outperforms all of the other methods in terms of MSE; e.g., at , we note that the proposed method achieves a reconstruction gain at least 7 dB greater than those of the other methods. The proposed method also uses less runtime than the others.

Figure 13.

(a) MSE vs. P and (b) runtime vs. P with different compression ratios for the reconstruction of the Salinas data.

Figure 14.

(a) MSE vs. P and (b) runtime vs. P with different compression ratios for the reconstruction of the Indian Pines data.

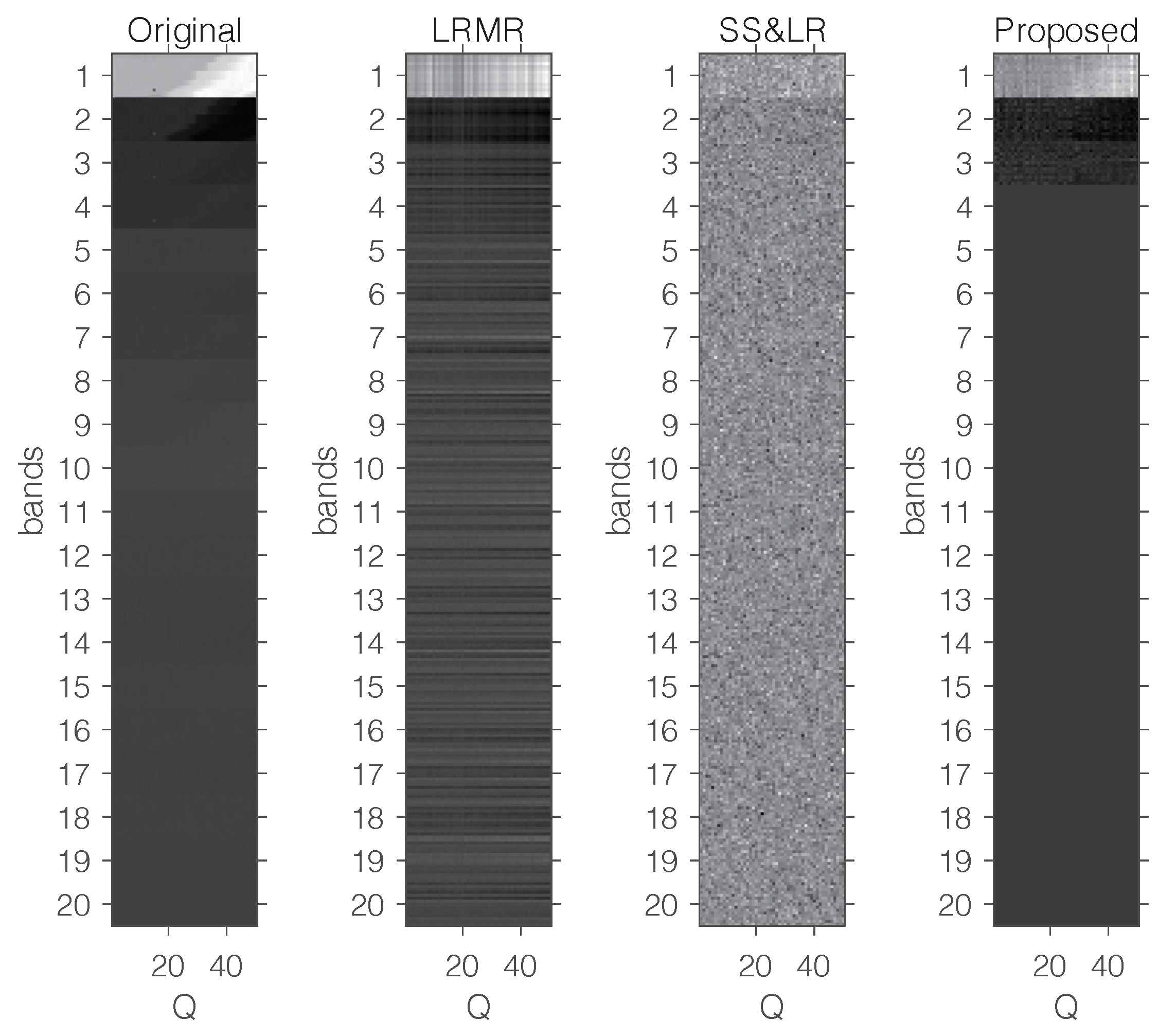

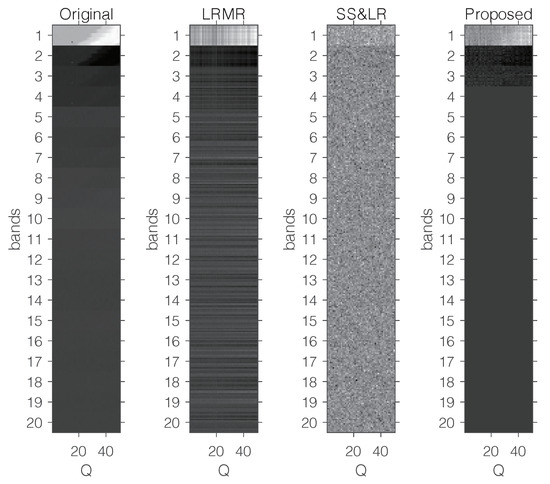

Figure 15 shows some visual reconstruction results obtained with the three methods. As expected, the proposed method preserves more fine details and better visual results than the others.

Figure 15.

Visual comparison with a fixed compression ratio and 20 bands.

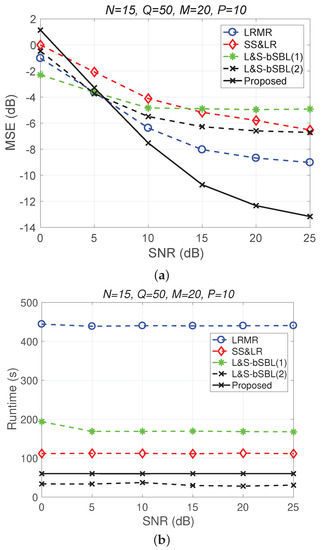

Figure 16 only shows a performance comparison chart of the various algorithms from the perspective of data recovery, and it does not start from the perspective of data collection methods, as in the beginning of this section. It can be seen in Figure 16 that the proposed 3D joint reconstruction algorithm has better performance and less time consumption than the other algorithms. What needs to be explained here is that the three methods—the proposed 3D HSI method, L&S-bSBL (1) method, and L&S-bSBL (2) method—are, in terms of the compression ratio: , , and .

Figure 16.

(a) MSE vs. SNR and (b) runtime vs. SNR for only the performance of each algorithm from the perspective of signal recovery, regardless of the HSI data acquisition method.

4. Discussion

From Section 3.3, the simulation results demonstrate that the proposed method is superior to LRMR and SS&LR methods in terms of reconstruction accuracy and computational burden under the same SNR and compression ratio. This is because LRMR only focuses on the reconstruction of low-rank signals, while SS&LR focuses on the reconstruction of low-rank and sparse signals, but it only uses the spatial relationship or interband relationship of HSI signals and does not combine the signal acquisition process.

Based on our present work, some issues that could be addressed in future research can be summarized as follows. One open problem is that of reducing, as much as possible, the computational complexity of the proposed method. In particular, unmanned aerial vehicles (UAVs) are used to monitor fields, such as in farmland monitoring, environmental monitoring, etc. While our approach has significant reconstruction performance, there is still a long way to go before we can apply it to future portable hardware. We can also take advantage of the properties of HSI data with higher-dimensional structures to design an effective Bayesian learning-based reconstruction method; namely, tensor models could be considered to compress and reconstruct HSI data. Furthermore, we may also consider the design of a sensor selection method for HSI acquisition that reduces the number of sensors in different locations that are needed so as to extend the life and energy savings of sensors.

5. Conclusions

In this work, based on the fast method proposed in our previous work, two methods for 2D compression and reconstruction of 3D HSI data are given. Then, starting from the HSI acquisition method, we analyzed the push-brooming acquisition of data slices in the hyperspectral image acquisition process, and by assuming that the HSI data met the L&S model, a joint CS compression and reconstruction method for spatial and spectral correlation was studied and an L&S structure was proposed. The proposed method combines data reconstruction with HSI data acquisition. The simulation results show that the proposed method has better performance than the other two existing methods and the two proposed 2D reconstruction methods.

Author Contributions

Conceptualization, Y.Z. and L.-T.H.; methodology, Y.Z. and Y.L.; software, Y.Z.; validation, Y.Z., L.-T.H. and C.Y.; formal analysis, Y.Z. and L.-T.H.; investigation, Y.Z.; resources, K.Z.; data curation, Y.Z.; writing—original draft preparation, Y.Z.; writing—review and editing, L.-T.H. and C.Y.; visualization, K.Z.; supervision, C.Y.; project administration, C.Y.; funding acquisition, C.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 61871041, 61671086, and 61629101, in part by the 111 Project under Grant B17007, in part by the Beijing Nova Program of Science and Technology under Grant Z191100001119111.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Chang, C.I. Hyperspectral Data Exploitation: Theory and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36. [Google Scholar] [CrossRef] [Green Version]

- Goetz, A.F. Three decades of hyperspectral remote sensing of the Earth: A personal view. Remote Sens. Environ. 2009, 113, S5–S16. [Google Scholar] [CrossRef]

- Borengasser, M.; Hungate, W.S.; Watkins, R. Hyperspectral Remote Sensing: Principles and Applications, 1st ed.; CRC Press: Boca Raton, FL, USA, 2007. [Google Scholar]

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4729–4743. [Google Scholar] [CrossRef]

- Golbabaee, M.; Vandergheynst, P. Hyperspectral image compressed sensing via low-rank and joint-sparse matrix recovery. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 2741–2744. [Google Scholar]

- Li, C.; Ma, L.; Wang, Q.; Zhou, Y.; Wang, N. Construction of sparse basis by dictionary training for compressive sensing hyperspectral imaging. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium—IGARSS, Melbourne, Australia, 21–26 July 2013; pp. 1442–1445. [Google Scholar]

- Hou, Y.; Zhang, Y. Effective hyperspectral image block compressed sensing using thress-dimensional wavelet transform. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec, QC, Canada, 13–18 July 2014; pp. 2973–2976. [Google Scholar]

- Donoho, D. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306. [Google Scholar] [CrossRef]

- Baraniuk, R.G. Compressive Sensing [Lecture Notes]. IEEE Signal Process. Mag. 2007, 24, 118–121. [Google Scholar] [CrossRef]

- Candes, E.J.; Wakin, M.B. An Introduction To Compressive Sampling. IEEE Signal Process. Mag. 2008, 25, 21–30. [Google Scholar] [CrossRef]

- Zhang, Y.; Li, Y.; Yin, C.; He, K. Low-rank and joint-sparse signal recovery for spatially and temporally correlated data using sparse Bayesian learning. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4719–4723. [Google Scholar]

- Wang, L.; Feng, Y.; Gao, Y.; Wang, Z.; He, M. Compressed Sensing Reconstruction of Hyperspectral Images Based on Spectral Unmixing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1266–1284. [Google Scholar] [CrossRef]

- Wang, Z.; Xiao, H.; He, M.; Wang, L.; Xu, K.; Nian, Y. Spatial-Spectral Joint Compressed Sensing for Hyperspectral Images. IEEE Access 2020, 8, 149661–149675. [Google Scholar] [CrossRef]

- Xiao, H.; Wang, Z.; Cui, X. Distributed Compressed Sensing of Hyperspectral Images According to Spectral Library Matching. IEEE Access 2021, 9, 112994–113006. [Google Scholar] [CrossRef]

- Yang, S.; Wang, M.; Li, P.; Jin, L.; Wu, B.; Jiao, L. Compressive Hyperspectral Imaging via Sparse Tensor and Nonlinear Compressed Sensing. IEEE Trans. Geosci. Remote Sens. 2015, 53, 5943–5957. [Google Scholar] [CrossRef]

- He, W.; Zhang, H.; Shen, H.; Zhang, L. Hyperspectral Image Denoising Using Local Low-Rank Matrix Recovery and Global Spatial–Spectral Total Variation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 713–729. [Google Scholar] [CrossRef]

- Takeyama, S.; Ono, S. Joint Mixed-Noise Removal and Compressed Sensing Reconstruction of Hyperspectral Images via Convex Optimization. In Proceedings of the IGARSS 2020—2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1492–1495. [Google Scholar]

- Fu, W.; Li, S. Semi-Tensor Compressed Sensing for Hyperspectral Image. In Proceedings of the IGARSS 2018—2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2737–2740. [Google Scholar]

- Zhang, Y.; Huang, L.; Li, Y.; Zhang, K.; Yin, C. Fast recovery of low-rank and joint-sparse signals in wireless body area networks. In Proceedings of the 2020 IEEE/CIC International Conference on Communications in China (ICCC), Chongqing, China, 9–11 August 2020; pp. 577–581. [Google Scholar]

- Xin, B.; Wang, Y.; Gao, W.; Wipf, D. Exploring algorithmic limits of matrix rank minimization under affine constraints. IEEE Trans. Signal Process. 2016, 64, 4960–4974. [Google Scholar] [CrossRef]

- Chen, W. Simultaneously sparse and low-rank matrix reconstruction via nonconvex and nonseparable regularization. IEEE Trans. Signal Process. 2018, 66, 5313–5323. [Google Scholar] [CrossRef]

- Li, Y.; Chen, W.; Yin, C.; Han, Z. Approximate message passing for sparse recovering of spatially and temporally correlated data. In Proceedings of the 2015 IEEE/CIC International Conference on Communications in China (ICCC), Shenzhen, China, 2–4 November 2015; pp. 1–6. [Google Scholar]

- Ziniel, J.; Schniter, P. Efficient high-dimensional inference in the multiple measurement vector problem. IEEE Trans. Signal Process. 2013, 61, 340–354. [Google Scholar] [CrossRef]

- Zhang, Z.; Jung, T.P.; Makeig, S.; Rao, B.D. Compressed sensing of EEG for wireless telemonitoring with low energy consumption and inexpensive hardware. IEEE Trans. Biomed. Eng. 2013, 60, 221–224. [Google Scholar] [CrossRef] [PubMed] [Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).