The Identification of Non-Driving Activities with Associated Implication on the Take-Over Process

Abstract

1. Introduction

2. Methodology

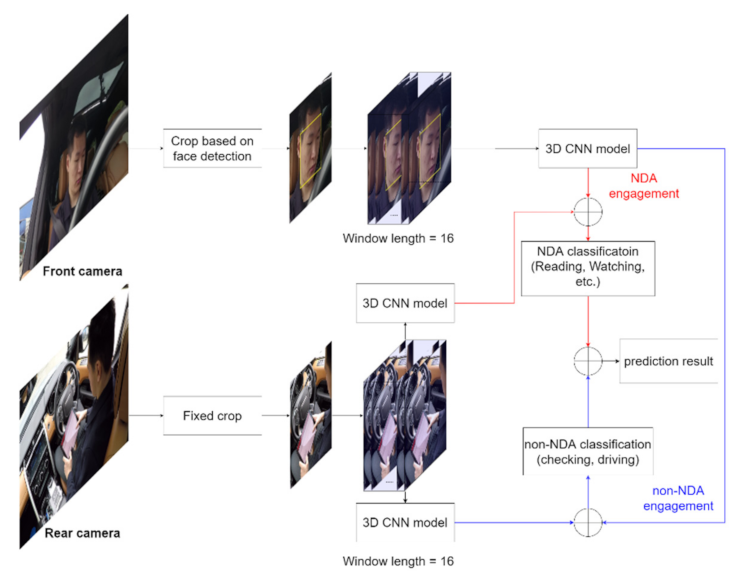

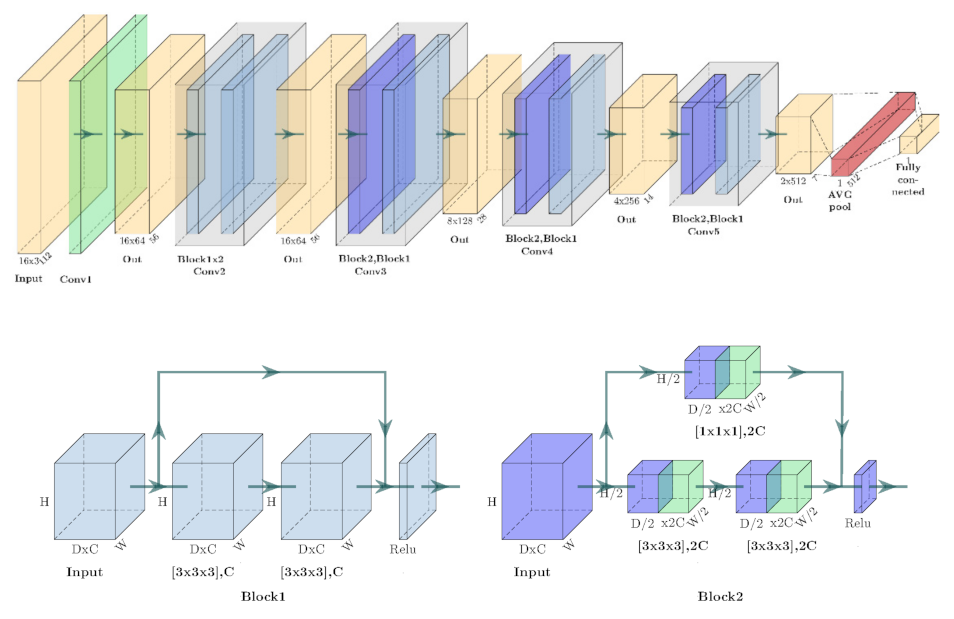

2.1. NDA Detection and Recognition System

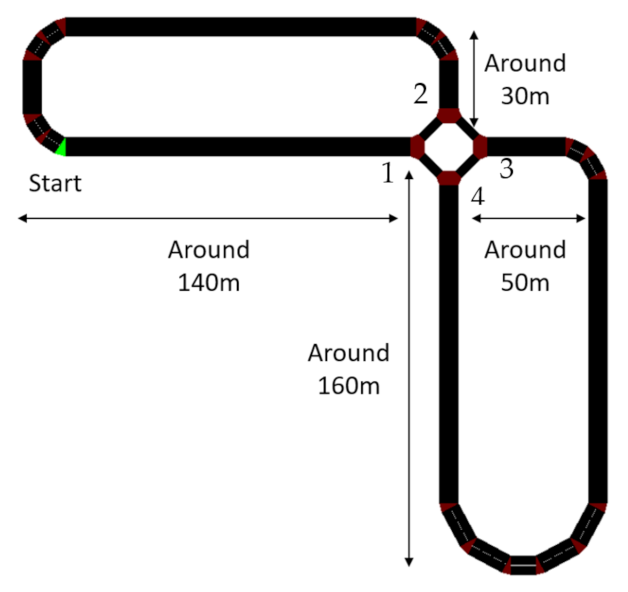

2.2. Experiment Design

2.3. Vehicle Setting

3. Results

3.1. Activity Classification

3.2. Road-Checking Behaviour Analysis

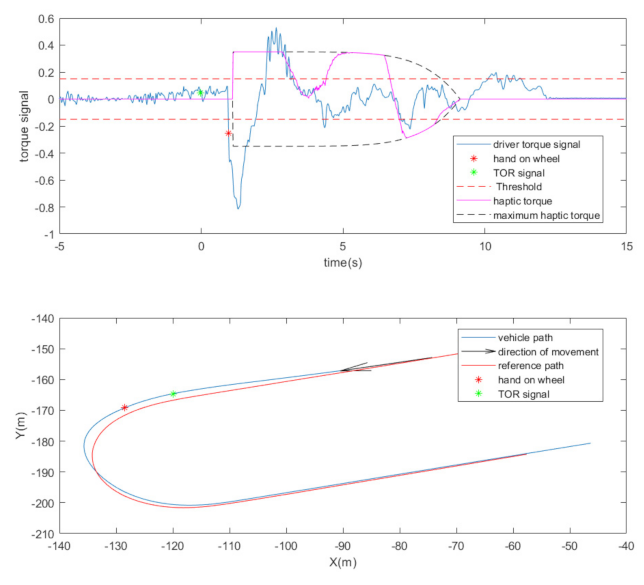

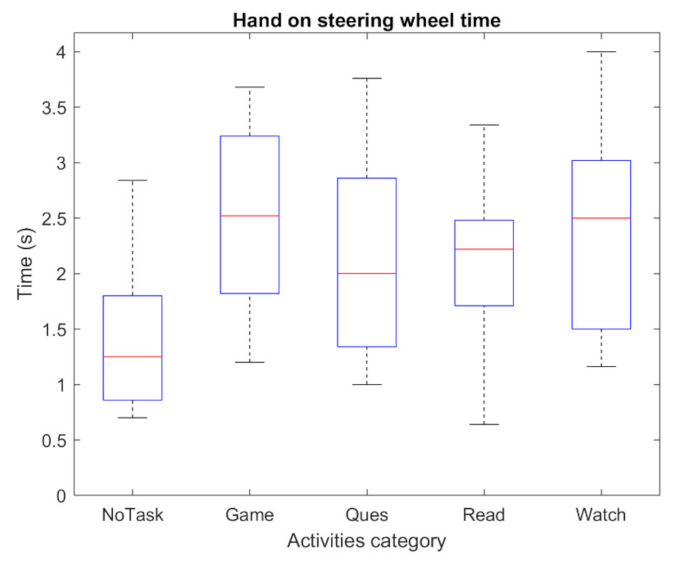

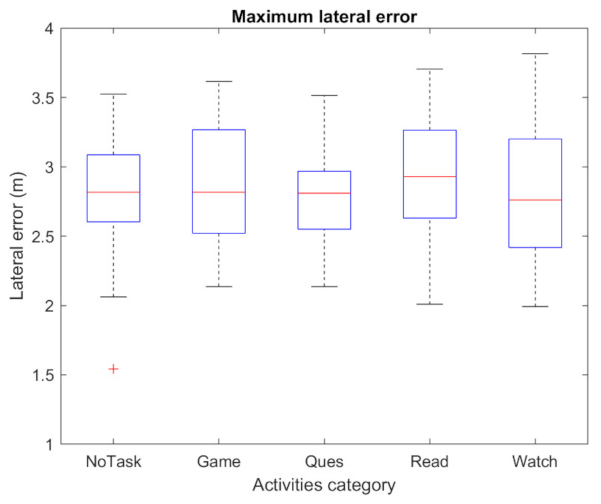

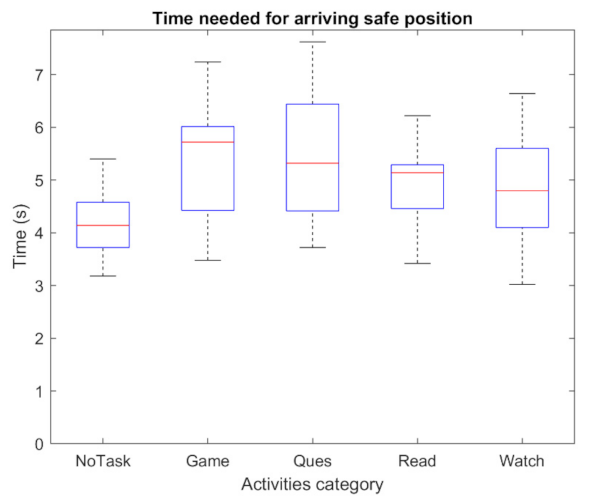

3.3. Take-Over Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Activity classification results: accuracy and weighted F1 score for each classification task.

| Metric | NDA Detection | DA Classification | NDA Classification | Final Prediction |
|---|---|---|---|---|
| Accuracy | 97.14% | 95.51% | 85.56% | 85.87% |
| Weighted F1 score | 97.14% | 95.49% | 85.46% | 85.88% |
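The table reports both accuracy and a weighted F1 score. The sketch below shows how a support-weighted F1 is commonly computed: a per-class F1 is calculated and then averaged with each class weighted by its number of true samples. It is not the authors' evaluation code, and the activity labels in the example are hypothetical.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_f1(y_true, y_pred):
    """Mean of per-class F1 scores, weighted by each class's support in y_true."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n_true in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        n_pred = sum(1 for p in y_pred if p == label)
        precision = tp / n_pred if n_pred else 0.0
        recall = tp / n_true
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        score += f1 * n_true / total  # weight by class support
    return score


# Hypothetical labels, for illustration only.
y_true = ["watch", "watch", "watch", "read"]
y_pred = ["watch", "watch", "read", "read"]
print(accuracy(y_true, y_pred), round(weighted_f1(y_true, y_pred), 4))  # prints 0.75 0.7667
```

Classes that are only ever predicted, never true, receive zero weight, which matches the behaviour of scikit-learn's `f1_score(y_true, y_pred, average="weighted")`. Because the weights follow class frequency, accuracy and weighted F1 tend to be close, as they are in every column of the table.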

Road-checking behaviour during each NDA. The last four columns give the percentage of road checks attributed to each motivation.

| NDA | Checking period (s) | Bumping | Approaching junctions | Breakpoint | Others |
|---|---|---|---|---|---|
| Watching videos | 37.10 | 19.88% | 52.05% | 5.85% | 22.22% |
| Reading news | 51.64 | 16.78% | 51.75% | 7.69% | 23.78% |
| Playing games | 79.13 | 3.61% | 26.50% | 59.04% | 10.84% |
| Answering questionnaires | 123.00 | 18.18% | 50.00% | 13.64% | 18.18% |
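Each percentage in the table is a share of all road checks made during one NDA, so every row should sum to 100% up to rounding. A minimal sketch of that tally follows, assuming each observed road check has been annotated with one of the four motivation labels; the lowercase label strings and the function name are hypothetical. The final loop uses the published values as a consistency check.

```python
from collections import Counter

# Hypothetical label strings for the four motivation categories in the table.
MOTIVATIONS = ("bumping", "approaching junctions", "breakpoint", "others")


def motivation_shares(check_labels):
    """Percentage of road checks attributed to each motivation (2 decimal places)."""
    if not check_labels:
        raise ValueError("no road checks recorded")
    counts = Counter(check_labels)
    unknown = set(counts) - set(MOTIVATIONS)
    if unknown:
        raise ValueError(f"unexpected motivation labels: {sorted(unknown)}")
    total = len(check_labels)
    # Counter returns 0 for motivations that never occurred.
    return {m: round(100 * counts[m] / total, 2) for m in MOTIVATIONS}


# Published shares from the table (bumping, junctions, breakpoint, others).
TABLE_SHARES = {
    "Watching videos": (19.88, 52.05, 5.85, 22.22),
    "Reading news": (16.78, 51.75, 7.69, 23.78),
    "Playing games": (3.61, 26.50, 59.04, 10.84),
    "Answering questionnaires": (18.18, 50.00, 13.64, 18.18),
}
for nda, shares in TABLE_SHARES.items():
    # Rows should total 100% apart from rounding of the published values.
    assert abs(sum(shares) - 100.0) < 0.05, nda
```

The "Playing games" row sums to 99.99%, which is consistent with each share having been rounded to two decimal places.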

Time to threshold by activity.

| Time to threshold | No task | Watching videos | Reading news | Answering questionnaires | Playing games |
|---|---|---|---|---|---|
| Mean (s) | 4.16 | 4.74 | 4.96 | 5.45 | 5.43 |
| Standard deviation (s) | 0.67 | 1.12 | 0.87 | 1.23 | 1.14 |
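To make the comparison with the no-task baseline explicit, the short script below takes the mean times to threshold from the table, with the activity names spelled out in full, and reports the extra delay of each NDA in seconds and as a percentage of the baseline. It is plain arithmetic on the published means and makes no claim about statistical significance.

```python
# Mean time to threshold (s), taken from the table above.
MEAN_TTT = {
    "No task": 4.16,
    "Watching videos": 4.74,
    "Reading news": 4.96,
    "Answering questionnaires": 5.45,
    "Playing games": 5.43,
}


def delay_vs_baseline(means, baseline="No task"):
    """Extra delay of each condition relative to the baseline: (seconds, percent)."""
    base = means[baseline]
    return {
        name: (round(value - base, 2), round(100 * (value - base) / base, 1))
        for name, value in means.items()
        if name != baseline
    }


for name, (delta_s, delta_pct) in delay_vs_baseline(MEAN_TTT).items():
    print(f"{name}: +{delta_s:.2f} s ({delta_pct:.1f}%)")
```

On these means, answering questionnaires and playing games add roughly 1.3 s (about 31%) over the no-task condition, while watching videos adds 0.58 s (about 14%). Judging whether these gaps are significant would also need sample sizes or per-participant data, which the table does not report.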

Time to threshold by haptic torque level.

| Time to threshold | Low | Medium | High |
|---|---|---|---|
| Mean (s) | 5.32 | 4.97 | 4.83 |
| Standard deviation (s) | 1.12 | 1.55 | 1.32 |
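The mean time to threshold falls as the haptic torque level rises, from 5.32 s (low) to 4.97 s (medium) and 4.83 s (high). The source section does not define how time to threshold is measured. The sketch below assumes it is the delay from the take-over request until a sampled driver-input signal, such as steering-wheel torque, first reaches a fixed threshold. The function name, signal, and threshold are hypothetical and are not the authors' procedure.

```python
from typing import Optional, Sequence


def time_to_threshold(times: Sequence[float], signal: Sequence[float],
                      t_request: float, threshold: float) -> Optional[float]:
    """Hypothetical metric: delay from the take-over request to the first sample
    at or after it whose absolute value reaches `threshold`; None if never reached."""
    if len(times) != len(signal):
        raise ValueError("times and signal must have the same length")
    for t, value in zip(times, signal):
        # Samples before the request are ignored, even if they exceed the threshold.
        if t >= t_request and abs(value) >= threshold:
            return t - t_request
    return None


# Hypothetical samples: the threshold of 1.0 is first reached at t = 1.5 s.
times = [0.0, 0.5, 1.0, 1.5, 2.0]
signal = [0.0, 0.1, 0.4, 1.2, 2.0]
print(time_to_threshold(times, signal, t_request=0.5, threshold=1.0))  # prints 1.0
```

Taking the absolute value makes the sketch indifferent to steering direction. A real analysis might also interpolate between samples or require the signal to stay above the threshold for a minimum duration. Both are design choices that the table alone does not settle.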

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, L.; Babayi Semiromi, M.; Xing, Y.; Lv, C.; Brighton, J.; Zhao, Y. The Identification of Non-Driving Activities with Associated Implication on the Take-Over Process. Sensors 2022, 22, 42. https://doi.org/10.3390/s22010042