Super-Pixel Guided Low-Light Images Enhancement with Features Restoration

Abstract

1. Introduction

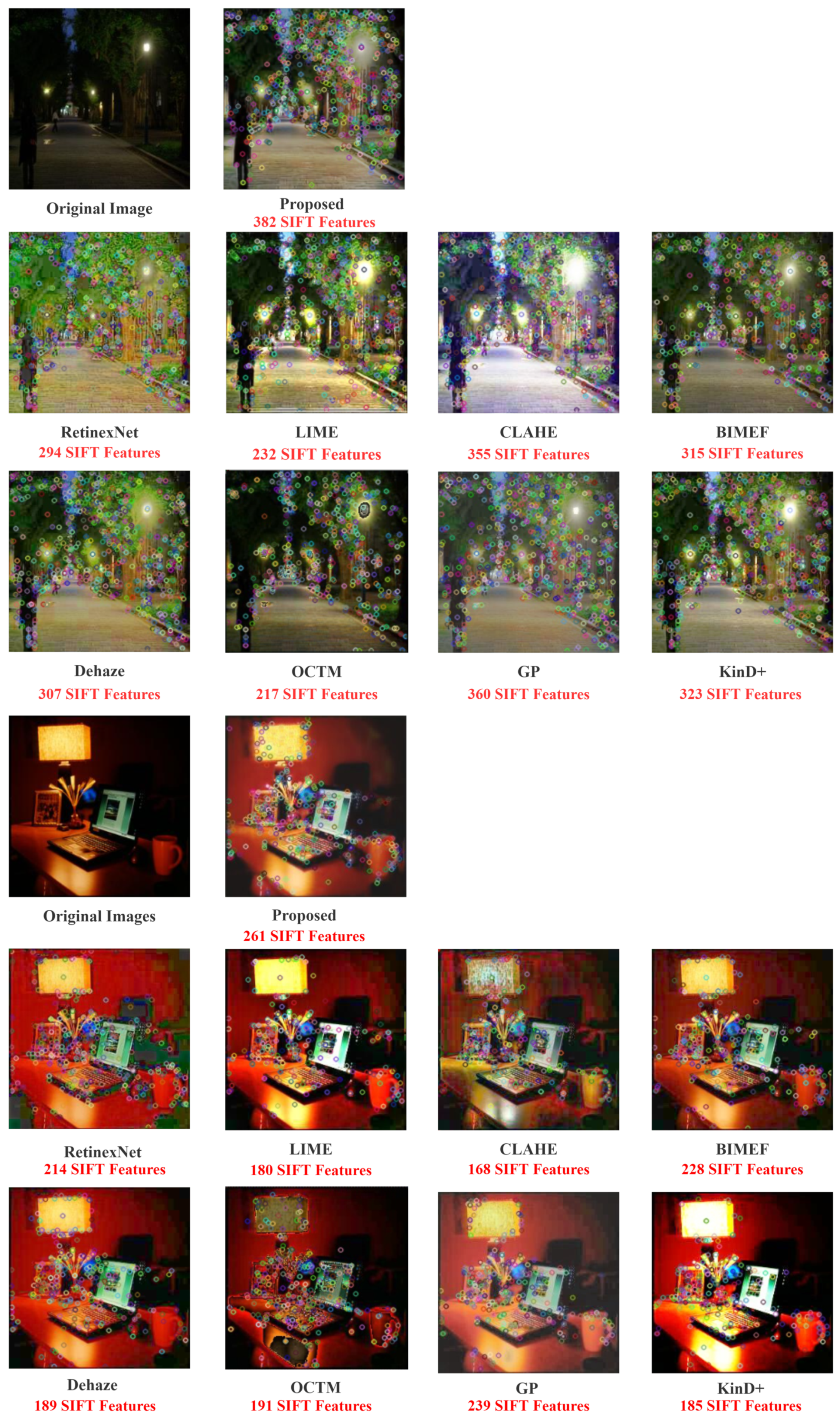

- We use a CNN as the initial enhancement step to restore features of the original image, so that our enhanced results retain more detail for subsequent feature matching, object detection, and other image-processing operations.

- We propose a new local image enhancement method that uses super-pixel segmentation to partition the image into regions and then enhances the local information within those regions with Attentive Neural Processes (ANPs).

- Through extensive experiments on synthesized and real low-light images, we verify that our approach achieves excellent results in both visual quality and the preservation of image features.
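The two-stage structure described in these contributions (a global initial enhancement followed by region-wise local enhancement guided by super-pixels) can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the stage-1 CNN is replaced by a hypothetical global gamma curve (`initial_enhance`), the ANP stage by a hypothetical per-region gain (`local_enhance`), and the super-pixel label map is assumed to be given as an integer array with one label per pixel.

```python
import numpy as np

def initial_enhance(img: np.ndarray, gamma: float = 0.5) -> np.ndarray:
    """Hypothetical stand-in for the stage-1 CNN: a global gamma curve on [0, 1]."""
    return np.clip(img, 0.0, 1.0) ** gamma

def local_enhance(img: np.ndarray, labels: np.ndarray,
                  target_mean: float = 0.5, max_gain: float = 3.0) -> np.ndarray:
    """Hypothetical stand-in for the ANP stage: rescale each super-pixel so its
    mean brightness moves toward target_mean, with the gain capped at max_gain."""
    out = img.copy()
    for k in np.unique(labels):
        region = labels == k
        mean = img[region].mean()
        gain = min(target_mean / max(mean, 1e-6), max_gain)
        out[region] = np.clip(img[region] * gain, 0.0, 1.0)
    return out

def enhance(img: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Stage 1 (global) followed by stage 2 (region-wise local) enhancement."""
    return local_enhance(initial_enhance(img), labels)

# Toy usage: an 8x8 dark image split into a 2x2 grid of "super-pixels".
img = np.full((8, 8), 0.04)
rows, cols = np.indices(img.shape)
labels = (rows // 4) * 2 + (cols // 4)
result = enhance(img, labels)
```

Because `local_enhance` uses each region's own statistics, dark and bright regions receive different gains, which illustrates how a region-wise stage can adapt to uneven illumination where a single global curve cannot.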

2. Related Works

3. Proposed Method

3.1. The Overall Framework of Our Method

3.2. Initial Enhancement

3.3. Local Enhancement

3.3.1. The Super-Pixel Segmentation
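A minimal sketch of super-pixel segmentation follows, assuming a simplified SLIC-style clustering (Achanta et al.) on a single-channel image with intensities in [0, 1]; the specific segmentation algorithm and parameters used by the method are not given here, and the names `slic_gray`, `n_segments`, and `compactness` are hypothetical. Each pixel is assigned to the nearest cluster center within a 2S×2S window using a joint intensity-and-position distance, and each center is then moved to the mean of its members. Full SLIC works in CIELAB color space and adds a connectivity-enforcement step; both are omitted here.

```python
import numpy as np

def slic_gray(img, n_segments=16, compactness=0.5, n_iter=10):
    """Simplified SLIC-style super-pixels for a 2-D grayscale image in [0, 1].

    Returns an integer label map with the same shape as img.
    """
    h, w = img.shape
    step = max(int(np.sqrt(h * w / n_segments)), 1)  # grid interval S
    # Seed cluster centers on a regular grid: (intensity, row, col).
    centers = np.array([[img[r, c], r, c]
                        for r in range(step // 2, h, step)
                        for c in range(step // 2, w, step)], dtype=float)
    rr, cc = np.indices((h, w))
    labels = np.zeros((h, w), dtype=int)
    spatial_weight = (compactness / step) ** 2  # (m / S)^2 in the SLIC distance
    for _ in range(n_iter):
        dist = np.full((h, w), np.inf)
        # Assignment: search only a 2S x 2S window around each center.
        for k, (ci, cr, ccol) in enumerate(centers):
            r0, r1 = max(int(cr) - step, 0), min(int(cr) + step + 1, h)
            c0, c1 = max(int(ccol) - step, 0), min(int(ccol) + step + 1, w)
            d = ((img[r0:r1, c0:c1] - ci) ** 2
                 + spatial_weight * ((rr[r0:r1, c0:c1] - cr) ** 2
                                     + (cc[r0:r1, c0:c1] - ccol) ** 2))
            closer = d < dist[r0:r1, c0:c1]
            dist[r0:r1, c0:c1][closer] = d[closer]
            labels[r0:r1, c0:c1][closer] = k
        # Update: move each center to the mean intensity and position of its pixels.
        for k in range(len(centers)):
            members = labels == k
            if members.any():
                centers[k] = [img[members].mean(), rr[members].mean(), cc[members].mean()]
    return labels

# Toy usage: a 40x40 image with a dark left half and a bright right half.
img = np.zeros((40, 40))
img[:, :20] = 0.1
img[:, 20:] = 0.9
labels = slic_gray(img, n_segments=16)
```

Larger `compactness` values give more regular, grid-like super-pixels; smaller values let the boundaries follow intensity edges more closely. In the toy example, no super-pixel straddles the dark/bright boundary.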

3.3.2. ANP for Local Enhancement

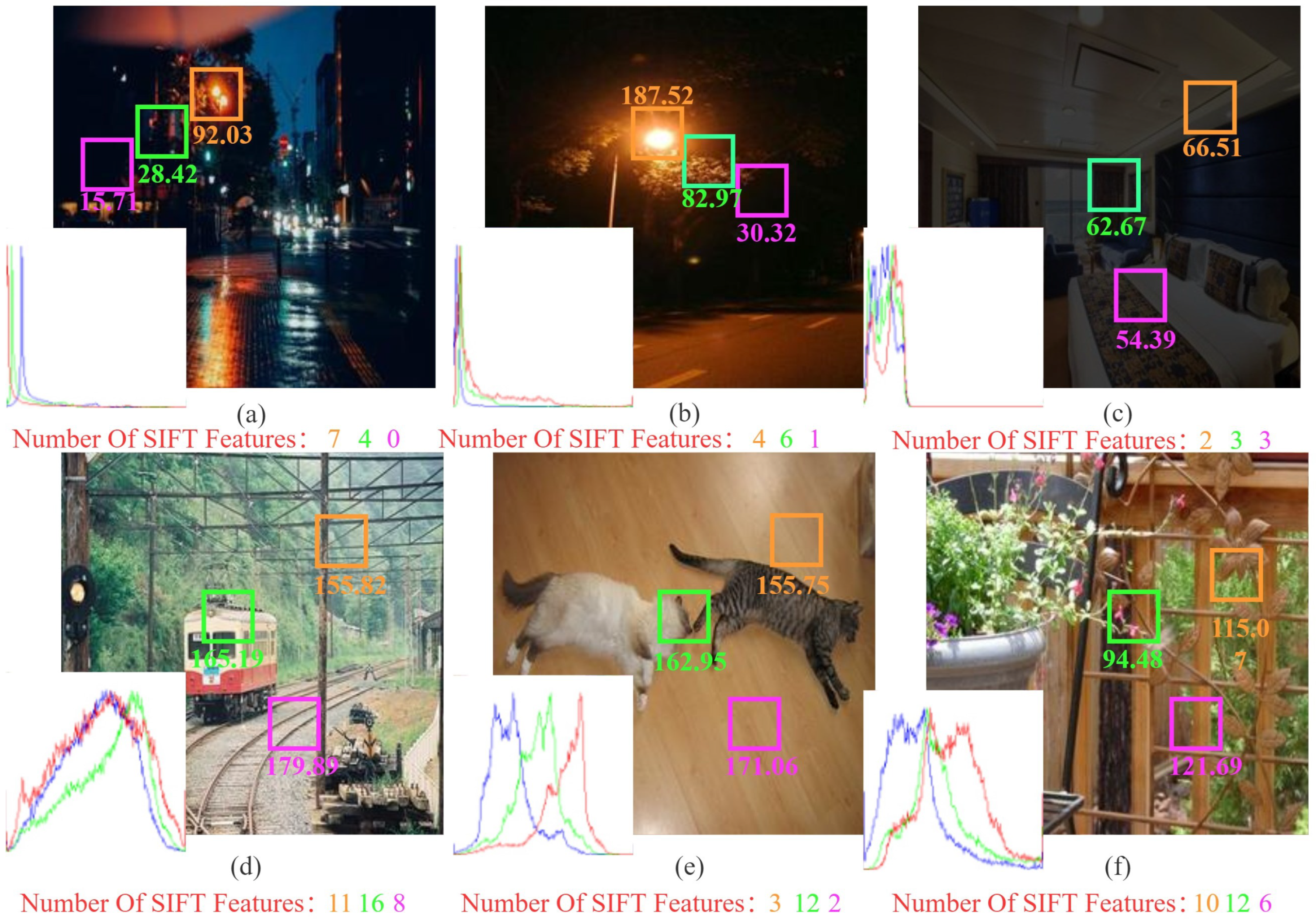
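The attention step that distinguishes an ANP from a plain Neural Process can be sketched as follows. In the ANP of Kim et al., a deterministic path lets each target location attend to the context points: the embedded target input is the query, the embedded context inputs are the keys, and the per-context representations r_i are the values, combined by scaled dot-product attention (Vaswani et al.). This NumPy sketch shows only that cross-attention. The learned embeddings, multi-head attention, latent path, decoder, and the way the method selects context and target pixels within a super-pixel are omitted, and the random inputs below are hypothetical placeholders.

```python
import numpy as np

def softmax(scores: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries: np.ndarray, keys: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Scaled dot-product cross-attention.

    queries: (m, d)  embedded target inputs x*
    keys:    (n, d)  embedded context inputs x_i
    values:  (n, dv) context representations r_i
    returns: (m, dv) target-specific representations r*
    """
    d = queries.shape[-1]
    weights = softmax(queries @ keys.T / np.sqrt(d), axis=-1)  # (m, n); each row sums to 1
    return weights @ values

# Toy usage with random (hypothetical) embeddings: 20 context points, 5 targets.
rng = np.random.default_rng(0)
keys = rng.normal(size=(20, 8))
values = rng.normal(size=(20, 4))
queries = rng.normal(size=(5, 8))
r_star = cross_attention(queries, keys, values)  # shape (5, 4)
```

With all-zero queries every context point receives weight 1/n and the output reduces to the mean of the r_i, which is the permutation-invariant aggregation used by a plain NP; learned queries instead let each target weight the most relevant context points more heavily.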

4. Experiments and Results Analysis

4.1. Implementation Details

4.2. Supervised Images Qualitative Evaluation

4.3. Real Low-Light Images Qualitative Evaluation

4.4. Object Detection Test

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| ANP | Attentive Neural Processes |
| NP | Neural Processes |
| CNP | Conditional Neural Processes |
| SSIM | Structural Similarity |
| PSNR | Peak Signal-to-Noise Ratio |
| NIQE | Natural Image Quality Evaluator |
| SIFT | Scale-Invariant Feature Transform |
| GP | Gaussian Process |
| ELBO | Evidence Lower Bound |


| Method | PSNR (dB) | SSIM |
|---|---|---|
| Dark Image | 12.523 | 0.410 |
| LIME | 16.345 | 0.677 |
| DeHaze | 17.369 | 0.883 |
| CLAHE | 19.014 | 0.792 |
| RetinexNet | 16.223 | 0.748 |
| KinD+ | 23.016 | 0.870 |
| BIMEF | 17.629 | 0.866 |
| OCTM | 13.837 | 0.629 |
| GP | 21.442 | 0.891 |
| CNN in Stage 1 | 22.170 | 0.902 |
| Without Super-Pixel Segmentation | 22.845 | 0.898 |
| Proposed | 23.402 | 0.916 |
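The PSNR and SSIM values above compare each enhanced image with its reference image. A minimal NumPy sketch of both metrics follows, assuming intensities scaled to [0, 1] (`data_range=1.0`); the function names are illustrative. PSNR is 10·log10(MAX²/MSE) in dB. `global_ssim` is a simplification: it evaluates the SSIM formula of Wang et al. once over the whole image with the usual constants C1 = (0.01L)² and C2 = (0.03L)², whereas the standard index averages that formula over local windows (typically an 11×11 Gaussian window), so its values will generally differ from those reported in the table.

```python
import numpy as np

def psnr(ref, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = np.mean((np.asarray(ref, float) - np.asarray(test, float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)

def global_ssim(x, y, data_range=1.0):
    """SSIM formula of Wang et al. (2004) evaluated once over the whole image.

    The standard SSIM index instead averages this quantity over local windows.
    """
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

As a quick check of the PSNR formula: a uniform error of 0.1 on [0, 1] data gives MSE = 0.01 and therefore PSNR = 10·log10(100) = 20 dB, while `global_ssim(x, x)` equals 1 for any image.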

| Method | LIME | DeHaze | CLAHE | RetinexNet | GP | BIMEF | OCTM | KinD+ | Proposed |
|---|---|---|---|---|---|---|---|---|---|
| NIQE (lower is better) | 3.6680 | 3.3621 | 4.2952 | 3.5205 | 2.4387 | 2.5294 | 4.7017 | 2.5186 | 2.2490 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, X.; Yang, Y.; Zhong, Y.; Xiong, D.; Huang, Z. Super-Pixel Guided Low-Light Images Enhancement with Features Restoration. Sensors 2022, 22, 3667. https://doi.org/10.3390/s22103667
