1. Introduction

In the last years, digitization and the Internet of Things has arrived in industry. It led to the fourth industrial revolution and, in addition to smart manufacturing and cyber-physical systems, the Industrial Internet of Things (IIoT) evolves [

1]. Due to the new organization design principles in Industry 4.0 [

1] and new business models, especially an increasing number of data-driven business models, the demand for edge computing is growing. Data analysis close to the data acquisition, processed on so-called edge devices for low latency and more data security, gains relevance compared to the currently prevailing cloud-based approaches [

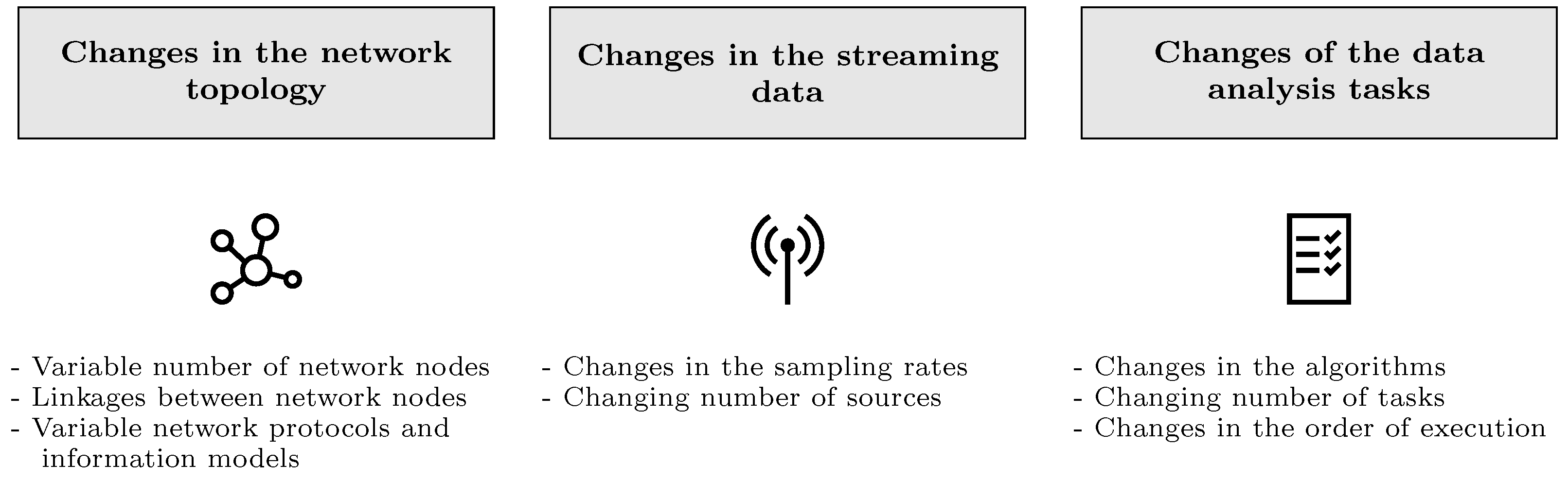

2]. An optimal usage of the generally scarce resources, namely CPU, RAM and memory, of edge devices, as well as bandwidth, is needed. Each device itself is very limited, but making optimized use of all available resources of large IIoT networks can push data analysis and other computing tasks to the edge. As the IIoT has the structure of a dynamic mesh net, one of the most important prerequisites is the capability to handle dynamic changes. In this work, a systematic overview of possible dynamic changes in IIoT networks is given (see

Figure 1). They are divided into three groups. The first group includes the most obvious changes, i.e., changes in the network topology like linkages and network nodes. The two other groups consider changes from the perspective of the purpose of the network, namely changes in the transmitted data and in the applications carried out. They are also considered increasingly relevant for the next generation of distributed stream processing systems. As the fourth generation combines processing on edge and cloud, it is expected that the main processing in the fifth generation will run in the edge.

To maximize edge computing on IIoT network devices while taking into account load balancing across the network, a system for intelligent resource allocation is proposed that meets the following requirements.

RQ1: The system should be adaptive to dynamic changes in the network.

RQ2: Data loss due to overload has to be avoided.

RQ3: No additional hardware should be required for the resource allocation.

RQ4: The maximum number of different computing tasks is not specified and must match current and future requirements.

RQ5: The system should be agnostic for different sensor signals.

RQ6: The system should be able to handle streaming data processing tasks.

RQ7: The parameterization effort should be low.

In large decentralized and dynamic systems, static programming is no longer sufficient. Modern approaches based on machine learning are said to be a more promising solution. In particular, Reinforcement Learning (RL) systems that learn via trial and error are suited for problems to be solved by sequential decision-making. So far, RL is typically applied to tasks in the fields of gaming, logistics, robotics and routing [3].

Chen et al. [4] identify the IIoT as one of the main scopes of application of Deep Reinforcement Learning (DRL). In the context of enabling edge computing, the computing tasks to be performed, especially data processing, can be intelligently allocated by RL agents according to the available resources of each network participant and the available bandwidth for transmission. Thus, available resources can be optimally utilized and further computations can increasingly be executed even on resource-limited devices. Different architectures, namely single-agent RL, centralized multi-agent reinforcement learning (MARL) and fully decentralized MARL, are compared, with the latter found to be the preferred approach. This article describes a possible implementation, and its evaluation, of the basic idea originally sketched in [5]. As proposed in [5], each device runs its own agent to allocate its resources, and the decision-making takes place sequentially. This study differs from the previous one in the change to static-sized state and action spaces, the change of the observed resources (bandwidth, CPU, partly RAM but not hardware memory), the detailed description of the implementation, the comparison of different architectures and the evaluation. In [5], neither bandwidth allocation nor dynamic changes in the number of network participants and network linkages were taken into account. The main contributions of this study can be summarized as follows:

A systematic overview of dynamic network environments and of approaches for handling different changes during runtime is presented.

A MARL system for intelligent allocation of IIoT computing resources is described.

A MARL system for intelligent bandwidth allocation is described.

Edge computing in the IIoT, enabled through the cooperation of two independent multi-agent systems (MASs) for resource allocation, is demonstrated and evaluated.

A comparison of different architectures of DRL-agent systems is drawn.

The remainder of this article is organized as follows: Section 2 describes the state of the art of MARL for resource allocation in Industry 4.0, followed by an introduction to the relevant background of RL in Section 3. In Section 4, the proposed decentralized agent systems and their interactions are described in detail, followed by a comparison of different possible architectures for solving the problem. Section 5 contains the validation, focusing on the low complexity of implementing MASs in the IIoT, the resource allocation itself and the ability to handle changes in topology. Section 6 concludes with a discussion and an outlook on future work.

2. Related Work

Several RL systems for the optimization of different resources, e.g., production machine load, energy, computational load or bandwidth, with different optimization goals, e.g., energy efficiency, latency or load balancing, have already been presented in the literature. This section summarizes existing work on single- and multi-agent RL approaches for the optimization of resource usage with a focus on industrial applications.

In the industrial context, job shop scheduling (JSP) is one of the relevant tasks that is increasingly solved by RL systems. Wang et al. [6] use DRL for dynamic scheduling of jobs for a balanced machine utilization in smart manufacturing. In [7,8], two examples for energy optimization in cyber-physical production systems are presented and, in [9], real-time requirements for JSP are fulfilled by a system of heterogeneous agents.

In the context of IIoT networks, the most common ways to handle the resource limitations are computation offloading and mobile edge computing. In [4], Chen et al. provide a single-agent DRL method for dynamic resource management in mobile edge computing with respect to latency, energy efficiency and computation offloading for industrial data processing. For task offloading in IoT via single-agent DRL, a couple of further examples [10,11] already exist, as well as a few approaches based on multi-agent DRL [12,13,14]. For IoT networks in general, Liu et al. [12] propose a decentralized MARL for resource allocation that is used for the decision about computation offloading to a local server. Considering the specific challenges in industry, a MAS for computation offloading is presented in [13] but, different from the work of Liu et al. [12], using DRL. In contrast to our work, mobile edge computing applications generally offload tasks to an edge server instead of resource-limited IIoT edge devices. The main differences between our solution and these task offloading optimization problems are, firstly, the limited computing resources of the IIoT devices instead of offloading to the nearly unlimited servers in the fog and, secondly, the assumption that the streaming data processing tasks are infinite; thus, metrics like the absolute number of CPU cycles for task execution are not known a priori. These challenges of distributed streaming data processing instead of finite task processing are considered in [15]. However, the solution is based on single-agent DRL and is not suitable to solve the challenges of dynamic networks due to the predefined size of the action space, which depends on the number of machines. A more flexible approach for data stream processing using MAS is presented in [16], using model-based RL for intelligent resource utilization.

Apart from JSP and computation offloading, a third field of application, communications and networking, is of increasing relevance [3]. DRL is a key technology for IIoT and, according to Chen et al. [4], resource optimization in industrial wireless networks is one of the main application fields. Two main challenges must be distinguished in the context of communication resource optimization: firstly, resource allocation, i.e., channel allocation, frequency band choice, etc., and secondly, routing problems that are solved by finding the best transmission path through the network for a defined source and destination. In the context of resource allocation, the focus is on the intelligent choice of communication parameters, e.g., the frequency band, considered by Ye et al. [17] for vehicle-to-vehicle communication, where each vehicle or vehicle link represents an agent, and by Li and Guo [18], who use MARL for spectrum allocation for device-to-device communications. Gong et al. [19] present a multi-agent approach for minimizing energy consumption and latency in the context of prospective 6G industrial networks. The agents decide about task scheduling, transmission power and CPU cycle frequency.

Instead of allocating the resources for transmission, the choice of the best transmission path is the goal of RL systems for routing. For single-agent approaches, the agent chooses the whole path from source to sink [20]. Liu et al. [21] present a DRL routing method. The path is chosen under consideration of different resources like cache and bandwidth. To provide more scalability, MARL systems are often the preferred solution for decentralized applications. Thus, the systems are based on multi-hop routing as shown in [22,23,24]. These approaches differ from our work as the destination is known in advance, while our system searches for a suited destination for the processing. Nevertheless, the next-hop approach is still transferable assuming that the next neighbor could be a suitable destination.

Especially in case of wireless sensor networks and energy-harvesting networks, where energy is the most limited resource, DRL applications increasingly focus on energy usage optimization as it is limiting both computation and communication [22,25,26,27].

Another RL application field of high interest is load balancing. In the recent work of Wang et al. [28], DRL is used for latency improvement and load balancing for 5G in IIoT in a federated learning architecture. According to [29], most existing load balancing solutions are centralized in their decision-making and, thus, limited in effectiveness in large networks. The authors propose a multi-edge cooperation but still stick to a single-agent approach. MARL for load balancing is examined in [30] for controller load in software-defined networks. The problem definition is single-objective, as in [31], where MARL enables load-balancing control for smart manufacturing. However, the cloud assistance needed contradicts our requirements. Load balancing is not an explicit goal of our work but is indirectly covered by the proposed system. Due to the goal of maximizing usage of the available resources and the assumption that the resources are always too scarce for the computational and transmission needs, resource usage over the whole network is expected to be balanced around the specified threshold.

The main objective, the combined optimization of both computing and communication resource allocation, is of great novelty, especially in the context of the IIoT. Thus, only a few studies [11,14] have considered this optimization potential so far. Our proposed system of two interacting MASs based on DRL differs from these two approaches as follows:

Architecture: In contrast to the proposed fully decentralized system, the existing approaches are either a single-agent system [11] or a centralized MAS [14].

Dynamic changes: Due to the centralized architectures, the adaptivity to dynamic changes is not given in the existing studies.

Field of application: Only one of the existing algorithms is developed for application in industry [14].

Data: Neither of the existing algorithms is suited for resource allocation for streaming data processing tasks.

Objective: The main objective in this work is to maximize edge computing using the available edge resources rather than minimizing routing and computation delays [11,14].

In summary, there is no work known that considers the allocation of IIoT edge device resources for streaming data processing tasks and further edge computations in dynamic IIoT networks. Furthermore, no approaches of two interacting MARL systems in the context of network and computational resource allocation have been presented so far. The current relevance of the topic is evidenced by the high degree of topicality of the related work.

3. Background

RL is a research field of machine learning in which an agent learns via trial and error. As shown on the left side of Figure 2, the single agent takes an action $a$ depending on the current state $s$ of the environment and receives a reward $r$. This is mathematically described by the Markov Decision Process (MDP). The reward value depends on the current state $s$ and the next state $s'$ the action $a$ leads to, i.e., on whether the chosen action is expedient for achieving the goal or not. With the long-term goal of maximizing the discounted reward $\sum_{t} \gamma^{t} r_{t}$, the agent tries to learn an optimal policy $\pi^{*}$, i.e., the mapping from the current state to the action that best solves its task. On the right side, the analogous structure of a system with more than one agent, i.e., a MAS, is illustrated. For MAS, the Markov Game (MG) can be used for mathematical description instead of the MDP.
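The discounted reward that the agent tries to maximize can be illustrated with a few lines of Python. This is only a minimal sketch: the function name `discounted_return`, the example reward sequence and the discount factor 0.5 are assumptions chosen for illustration and are not taken from the proposed system.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return sum_t gamma**t * rewards[t] for a finite reward sequence."""
    total = 0.0
    # Accumulate from the last reward backwards: G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Hypothetical example: three rewards of 1.0 with discount factor 0.5.
example = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
print(example)  # 1 + 0.5 + 0.25 = 1.75
```

A discount factor close to 0 makes the agent short-sighted, whereas a value close to 1 weights future rewards almost as strongly as immediate ones.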

3.1. Mathematical Preliminaries

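In general, the simplest way to describe an RL system is the above-mentioned MDP. According to [32,33], the MDP is defined as the 5-tuple $(S, A, P, R, \gamma)$ with

Set of states, $S$;

Set of actions, $A$;

Transition function, $P: S \times A \times S \to [0, 1]$;

Reward function, $R: S \times A \times S \to \mathbb{R}$;

Discount factor, $\gamma \in [0, 1]$.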

The transition function $P$ describes the probability that the environment passes at time $t$, due to action $a_t$, from state $s_t$ into $s_{t+1}$, where $\sum_{s' \in S} P(s_t, a_t, s') = 1$ applies. For this transition, the agent receives a reward $r$ according to $R(s_t, a_t, s_{t+1})$. Based on the policy $\pi$, the agent chooses an action $a_t$ [32,34]. If the transition function and the reward function are explicitly known, e.g., by a model, the optimal policy can be found with one of the standard procedures, e.g., value iteration or policy iteration. Without this prior knowledge, model-free RL methods have to be applied [32,35].
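To make the model-based case concrete, the following sketch applies value iteration to a tiny MDP whose transition and reward functions are fully known. The two states `A` and `B`, the actions `stay` and `move`, the reward values and the discount factor 0.9 are hypothetical and only serve as an illustration; they are unrelated to the resource allocation problem treated in this article.

```python
from typing import Dict, List, Tuple

State = str
Action = str
# P[(s, a)] maps each next state s' to its probability.
Transition = Dict[Tuple[State, Action], Dict[State, float]]
# R[(s, a, s')] is the reward of a transition; missing entries count as 0.
Reward = Dict[Tuple[State, Action, State], float]


def q_value(s: State, a: Action, V: Dict[State, float],
            P: Transition, R: Reward, gamma: float) -> float:
    """Expected reward plus discounted value of the next state."""
    return sum(p * (R.get((s, a, s2), 0.0) + gamma * V[s2])
               for s2, p in P[(s, a)].items())


def value_iteration(states: List[State], actions: List[Action], P: Transition,
                    R: Reward, gamma: float, tol: float = 1e-9):
    """Iterate the Bellman optimality update until the values stop changing."""
    V = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            best = max(q_value(s, a, V, P, R, gamma) for a in actions)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # The greedy policy with respect to the converged values is optimal.
    policy = {s: max(actions, key=lambda a: q_value(s, a, V, P, R, gamma))
              for s in states}
    return V, policy


# Hypothetical toy MDP: arriving in state B is rewarded with 1.
STATES = ["A", "B"]
ACTIONS = ["stay", "move"]
P = {("A", "stay"): {"A": 1.0}, ("A", "move"): {"B": 1.0},
     ("B", "stay"): {"B": 1.0}, ("B", "move"): {"A": 1.0}}
R = {("A", "move", "B"): 1.0, ("B", "stay", "B"): 1.0}

values, policy = value_iteration(STATES, ACTIONS, P, R, gamma=0.9)
print(values, policy)
```

In this toy example the greedy policy moves from `A` to `B` and then stays in `B`. Model-free methods have to learn comparable behaviour from sampled transitions because `P` and `R` are not available to them.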

The MDP assumes the environment to be fully observable, i.e., the observation space $\Omega$ is equal to the state space $S$. If only parts of the environment can be observed by the agent, a generalization of the MDP, the so-called partially observable MDP (POMDP), applies, which is defined by the 7-tuple $(S, A, P, R, \gamma, \Omega, O)$ [32,33]. The 7-tuple extends the MDP as follows:

Set of observations, $\Omega$;

Observation function, $O: S \times A \times S \times \Omega \to [0, 1]$.

The observation function $O$ describes the probability that the agent has the observation $o \in \Omega$ at time $t$ when the environment changes due to action $a_t$ from $s_t$ into $s_{t+1}$.

As the MDP and POMDP are only suited for single-agent setups, further generalizations have to be considered to describe the interaction of more than one agent with the environment. For a mathematical description of MAS, the MG, also called Stochastic Game (SG) [36], is used instead of the MDP. According to [32], the MG is defined by the 6-tuple $(\mathcal{N}, S, \{A_i\}_{i \in \mathcal{N}}, P, \{R_i\}_{i \in \mathcal{N}}, \gamma)$, where:

Set of all agents, $\mathcal{N} = \{1, \dots, N\}$ and $N > 1$;

Set of all states, $S$;

Set of all actions of agent $i$, $A_i$, and $A = A_1 \times \dots \times A_N$;

Transition function, $P: S \times A \times S \to [0, 1]$;

Reward function of agent $i$, $R_i: S \times A \times S \to \mathbb{R}$;

Discount factor, $\gamma \in [0, 1]$.

Each agent $i$ has the goal of finding its optimal policy $\pi_i^{*}$ with $i \in \mathcal{N}$. Depending on the state $s_t$ at time $t$, all agents $i$ simultaneously take an action $a_{i,t}$. The reward function $R_i$ rates the transition to $s_{t+1}$. The transition function $P$ describes the probability of this state transition. Figure 2 compares the MDP and MG.
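The simultaneous decision-making of a Markov Game can be sketched as a single step function in which all agents choose their action from the same state before the environment changes. The example below is a deliberately simplified, deterministic special case: the integer state (interpreted as a load), the capacity of 3, the two fixed policies and the reward rule are assumptions made purely for illustration.

```python
from typing import Callable, List, Sequence, Tuple

JointAction = Tuple[int, ...]
Policy = Callable[[int], int]

CAPACITY = 3  # hypothetical load limit of the shared environment


def transition(state: int, joint_action: JointAction) -> int:
    """Deterministic special case of P: every accepted task adds one unit of load."""
    return state + sum(joint_action)


def reward(agent: int, state: int, joint_action: JointAction, next_state: int) -> float:
    """R_i: an agent is rewarded for accepting a task unless the joint load overflows."""
    if next_state > CAPACITY:
        return -1.0
    return float(joint_action[agent])


def mg_step(state: int, policies: Sequence[Policy]) -> Tuple[JointAction, int, List[float]]:
    """One Markov Game step: all agents act simultaneously on the same state."""
    joint_action = tuple(policy(state) for policy in policies)
    next_state = transition(state, joint_action)
    rewards = [reward(i, state, joint_action, next_state) for i in range(len(policies))]
    return joint_action, next_state, rewards


# Hypothetical policies: agent 0 always accepts, agent 1 only below a load of 2.
POLICIES: List[Policy] = [lambda s: 1, lambda s: 1 if s < 2 else 0]

print(mg_step(0, POLICIES))  # joint action (1, 1), next state 2
```

Because no agent sees the choice of the others before acting, the joint action can overshoot the capacity, which is one motivation for considering sequential decision-making instead.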

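Analogous to the POMDP for single agents, the partially observable MG (POMG) is a generalization of the MG that considers the partial observability of real-world applications and is defined, according to [37], by the 8-tuple $(\mathcal{N}, S, \{A_i\}_{i \in \mathcal{N}}, P, \{R_i\}_{i \in \mathcal{N}}, \gamma, \{\Omega_i\}_{i \in \mathcal{N}}, \{O_i\}_{i \in \mathcal{N}})$. It extends the MG as follows:

Set of all observations of agent $i$, $\Omega_i$, and $\Omega = \Omega_1 \times \dots \times \Omega_N$;

Observation function, $O_i: S \times A \times S \times \Omega_i \to [0, 1]$.

The observation function describes the probability of the observation $o_i \in \Omega_i$ at time $t$ for agent $i$ when the environment passes from $s_t$ into $s_{t+1}$ due to action $a_t$.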

Under the assumption that all agents act simultaneously and are homogeneous, and thus interchangeable, and consequently have the same reward function, a fully observable system can be classified as a so-called Team Game [32], also called multi-agent MDP (MMDP) [38], which is a special case of the MG. This is further generalized by the decentralized POMDP (Dec-POMDP) that is similar to the MMDP but where agents only partially observe the entire state [38].

The mathematical model that considers sequential, instead of simultaneous, decision-making of the agents is the Agent Environment Cycle (AEC) game [39]. In [37], Terry et al. prove that, for every POMG, an equivalent AEC game exists and vice versa. Thus, the AEC game and the POMG are equivalent models. The 11-tuple $(\mathcal{N}, S, \{A_i\}, \{T_i\}, P, \{\mathcal{R}_i\}, \{R_i\}, \{\Omega_i\}, \{O_i\}, \nu, \gamma)$ defines the AEC game according to [37], extended by the discount factor for consistency with the previously described processes. The following adaptations are made in comparison to the POMG definition:

Transition function of the agents, $T_i: S \times A_i \to S$;

Transition function of the environment, $P: S \times S \to [0, 1]$;

Set of all possible rewards for agent $i$, $\mathcal{R}_i \subseteq \mathbb{R}$;

Reward function for agent $i$, $R_i$;

Next agent function, $\nu$.

At time $t$, agent $i$ has an observation $o_i$ with the probability given by the observation function $O_i$ and consequently takes the action $a_i$. Two cases must be distinguished for the state update from $s$ to $s'$. For environment steps ($i = 0$), the next state $s'$ is random and occurs with the probability given by the transition function $P$. Otherwise, for agent steps ($i > 0$), a deterministic state transition according to the transition function $T_i$ takes place [39]. Afterwards, the next agent $i'$ is chosen with the probability given by the next-agent function $\nu$. The reward function $R_i$ gives the probability that agent $i$ receives the reward $r$ if the action $a_j$ of agent $j$ leads to the transition from $s$ to $s'$.
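The sequential cycle of agent steps and environment steps can be sketched as a simple loop. The sketch below makes several simplifying assumptions that are not part of the formal definition: the next-agent function is a deterministic round robin, the state is a single integer load, and the environment step removes one unit of load with probability 0.5. All function names are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

ENV = 0  # index 0 denotes the environment "agent"


def next_agent(current: int, n_agents: int) -> int:
    """Round-robin next-agent function: 1, 2, ..., n, then the environment, then 1 again."""
    return (current + 1) % (n_agents + 1)


def agent_transition(state: int, action: int) -> int:
    """Deterministic agent step: the accepted tasks are added to the load."""
    return state + action


def env_transition(state: int, rng: random.Random) -> int:
    """Stochastic environment step: one unit of load finishes with probability 0.5."""
    if state > 0 and rng.random() < 0.5:
        return state - 1
    return state


def run_cycle(state: int, policies: Dict[int, Callable[[int], int]],
              rng: random.Random, steps: int) -> Tuple[int, List[Tuple[int, int]]]:
    """Let agents and environment act one after another; record (actor, state)."""
    actor = 1
    trace: List[Tuple[int, int]] = []
    for _ in range(steps):
        if actor == ENV:
            state = env_transition(state, rng)
        else:
            # Each agent already sees the state changed by its predecessor.
            state = agent_transition(state, policies[actor](state))
        trace.append((actor, state))
        actor = next_agent(actor, len(policies))
    return state, trace


# Hypothetical policies: agent 1 always accepts, agent 2 only below a load of 2.
POLICIES = {1: lambda s: 1, 2: lambda s: 1 if s < 2 else 0}

final_state, trace = run_cycle(0, POLICIES, random.Random(0), steps=6)
print(trace)
```

In contrast to simultaneous decision-making, each agent here observes the state that results from the action of the agent acting before it.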

Figure 3 clarifies the described relations of the decision processes in a Venn diagram.

MASs can be further distinguished by the following criteria:

Timing of actions: sequential or simultaneous decision-making.

Reward function: unique or shared reward function.

Agent types: homogeneous or heterogeneous agents.

Interaction: cooperative or competitive behaviour.

Training architecture: central training + central execution (CTCE), central training + decentral execution (CTDE), decentral training + decentral execution (DTDE).

3.2. Reinforcement Learning Algorithms

As the execution of DRL systems only depends on the trained policy, i.e., deep neural networks, the training of the neural networks requires further attention. The learning approaches are to be distinguished by the following criteria:

3.3. Network Topology of the Industrial Internet of Things

The IIoT is one of the main components of Industry 4.0 [1]. The network consists of a large number of participants, primarily industrial edge devices like smart sensors or control units, with limited resources, but it can also have linkages to private or public clouds and local servers. In general, its topology is assumed to be a mesh network. This means the participants have a variable number of linkages between each other but need not be fully connected.
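A mesh topology of this kind can be represented as an adjacency mapping in which every participant stores the set of its direct neighbors. The following sketch is only an illustration: the node names (`sensor_a`, `plc`, `cloud`, ...) and the helper functions are hypothetical and do not describe the implementation evaluated in this article.

```python
from collections import deque
from typing import Dict, Set

Topology = Dict[str, Set[str]]


def add_link(topology: Topology, a: str, b: str) -> None:
    """Add a bidirectional linkage between two participants."""
    topology.setdefault(a, set()).add(b)
    topology.setdefault(b, set()).add(a)


def remove_node(topology: Topology, node: str) -> None:
    """Remove a participant and all of its linkages (a dynamic topology change)."""
    for neighbor in topology.pop(node, set()):
        topology[neighbor].discard(node)


def is_fully_connected(topology: Topology) -> bool:
    """True only if every participant is directly linked to all others."""
    return all(len(neighbors) == len(topology) - 1 for neighbors in topology.values())


def is_reachable(topology: Topology, src: str, dst: str) -> bool:
    """Breadth-first search over the linkages, possibly via several hops."""
    if src not in topology or dst not in topology:
        return False
    seen = {src}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for neighbor in topology[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


# Hypothetical mesh: two sensors, one control unit and a cloud linkage.
mesh: Topology = {}
add_link(mesh, "sensor_a", "sensor_b")
add_link(mesh, "sensor_b", "plc")
add_link(mesh, "plc", "cloud")

print(is_fully_connected(mesh), is_reachable(mesh, "sensor_a", "cloud"))
```

Removing the hypothetical node `plc` disconnects the sensors from the cloud, which is the kind of runtime change a resource allocation system for such networks has to cope with.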

6. Discussion

The proposed MARL-based approach for optimal edge resource usage consists of two interacting multi-agent DRL systems that are able to allocate computational power and bandwidth in dynamic IIoT networks to enable edge computing in the IIoT. The requirement of adaptivity to various dynamic changes is fulfilled in two ways: firstly, by the system structure, which is subdivided into the smallest units and uses sequential decision-making for full adaptivity in the number of tasks, nodes and linkages; and secondly, by the training process, which is not limited to a specific data stream or algorithm to compute, so that the agents act in a generic manner. A comparison of the three approaches with different degrees of decentralization is drawn, and the preferred architecture, a fully decentralized system, is selected under consideration of the specified requirements and network topology.

The suitability of RL-based resource allocation on resource-limited IIoT devices is confirmed by the evaluation results. The experiments show that the proposed system is of low computational complexity, decision-making is very fast and the models are of small size; thus, it is suited for application on resource-limited IIoT devices. Both traffic overhead and latency between the first decision about a new task and the successful forwarding to an edge device that can compute the task depend on the overall CPU and bandwidth load in the network. Both increase when the resources are highly utilized, since this complicates the resource allocation and more decisions, hops and time are needed. While agent decision-making is very fast and takes only milliseconds, the overall latency is many times higher and needs to be improved. This is caused by the number of decisions and the time span between the request of the MAS2 agents and the response containing the neighbor’s available computing resources (states). To improve performance, a switch to other programming languages such as C or C++ should be considered.

In future work, the experiments will be extended by an evaluation of the success rate of task execution depending on the overall resource usage and by performance measurements in dealing with dynamic network changes. Furthermore, the proposed system should be evaluated in a real IIoT network, e.g., a production site, with a higher number of nodes and linkages. In addition, a comparison to a manual, static task allocation would help to classify the results. As most data processing is still done via cloud computing and there is no state-of-the-art method for edge task allocation yet, it is difficult to draw this comparison. In a further step, the following aspects should additionally be considered: In MAS1, the state space could be extended by the resources RAM and hardware memory as well as the number of hops already needed. In MAS2, the priority of data or task as well as permissions for reading the data of the respective IIoT network participants should be considered. As mentioned above, the proposed global reward and a combined training of both systems would be interesting to evaluate, as they are expected to improve results. A further improvement could be a managed degree of difficulty of processing an algorithm: the MARL agents could decide to execute the algorithm in a mode of heavy or light computational load.