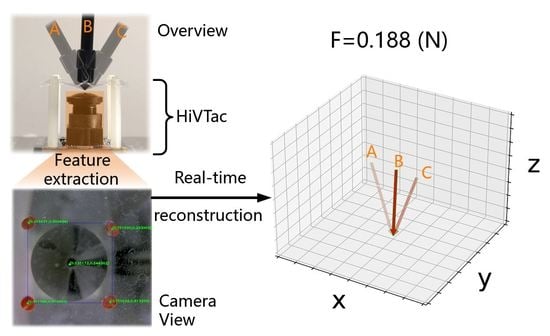

HiVTac: A High-Speed Vision-Based Tactile Sensor for Precise and Real-Time Force Reconstruction with Fewer Markers

Abstract

1. Introduction

2. Related Work

2.1. Vision-Based Tactile Sensors with and without Markers

2.2. Elastomer in Vision-Based Tactile Sensors with Markers

2.3. Measuring the Magnitude of External Forces by Vision-Based Tactile Sensors

2.4. Measuring the Direction of External Forces by Vision-Based Tactile Sensors

2.5. Operating Frequency of Vision-Based Tactile Sensors

2.6. Device Size and Geometry

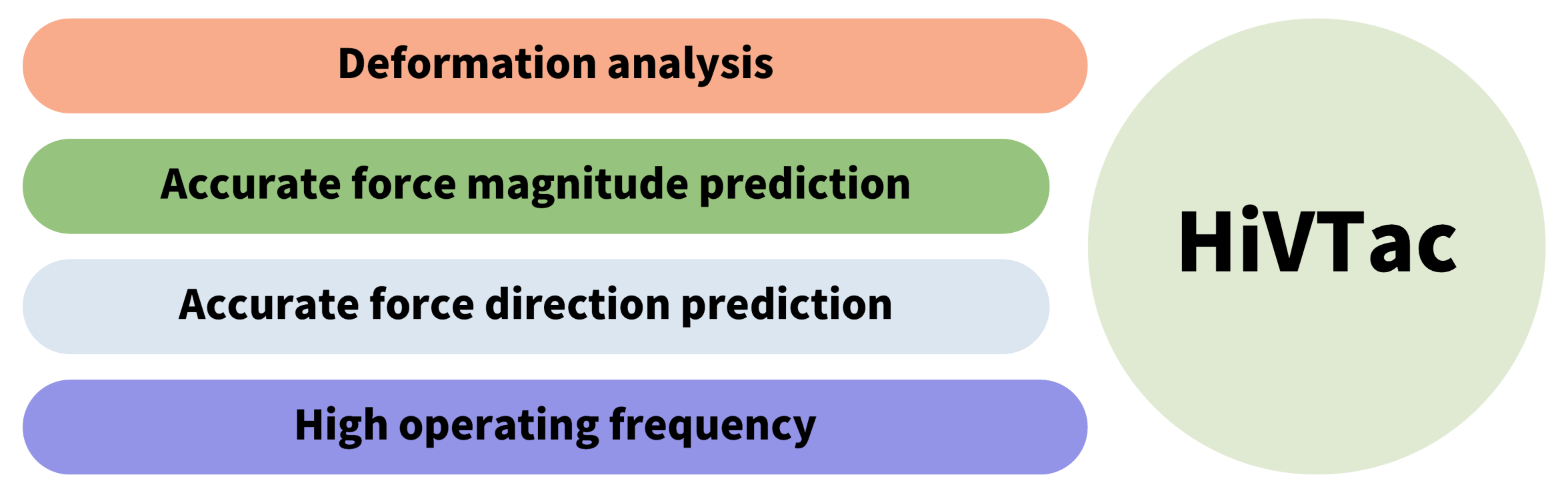

Contribution of This Work

- We establish a deformation model for a thin-film elastic layer in a vision-based tactile sensor;

- We reconstruct the vector of the external force (both magnitude and spatial direction) with high accuracy;

- We reduce the number of markers to be tracked, achieving a higher sampling rate than vision-based tactile sensors that track tens or even hundreds of markers simultaneously, although this limits the applications of the proposed device.
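To make "both magnitude and spatial direction" concrete, the following minimal sketch splits a 3D force vector into a magnitude and two angles and recombines them. The function names and the axis convention (z taken as the sensor normal, polar angle measured from z) are assumptions made for illustration and are not taken from the paper.

```python
import math


def force_to_magnitude_direction(fx, fy, fz):
    """Split a 3D force into (magnitude, polar angle, azimuth), angles in radians.

    Assumed convention: the polar angle is measured from the +z axis and the
    azimuth is measured in the x-y plane from the +x axis.
    """
    magnitude = math.sqrt(fx * fx + fy * fy + fz * fz)
    if magnitude == 0.0:
        raise ValueError("direction is undefined for a zero force")
    # Clamp the ratio to guard against rounding slightly outside [-1, 1].
    polar = math.acos(max(-1.0, min(1.0, fz / magnitude)))
    azimuth = math.atan2(fy, fx)
    return magnitude, polar, azimuth


def magnitude_direction_to_force(magnitude, polar, azimuth):
    """Inverse of force_to_magnitude_direction under the same convention."""
    fx = magnitude * math.sin(polar) * math.cos(azimuth)
    fy = magnitude * math.sin(polar) * math.sin(azimuth)
    fz = magnitude * math.cos(polar)
    return fx, fy, fz


if __name__ == "__main__":
    m, polar, azimuth = force_to_magnitude_direction(3.0, 0.0, 4.0)
    print(m, math.degrees(polar), math.degrees(azimuth))
```

Under this convention, a purely normal force has a polar angle of zero, and any shear component tilts the vector away from the z axis.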

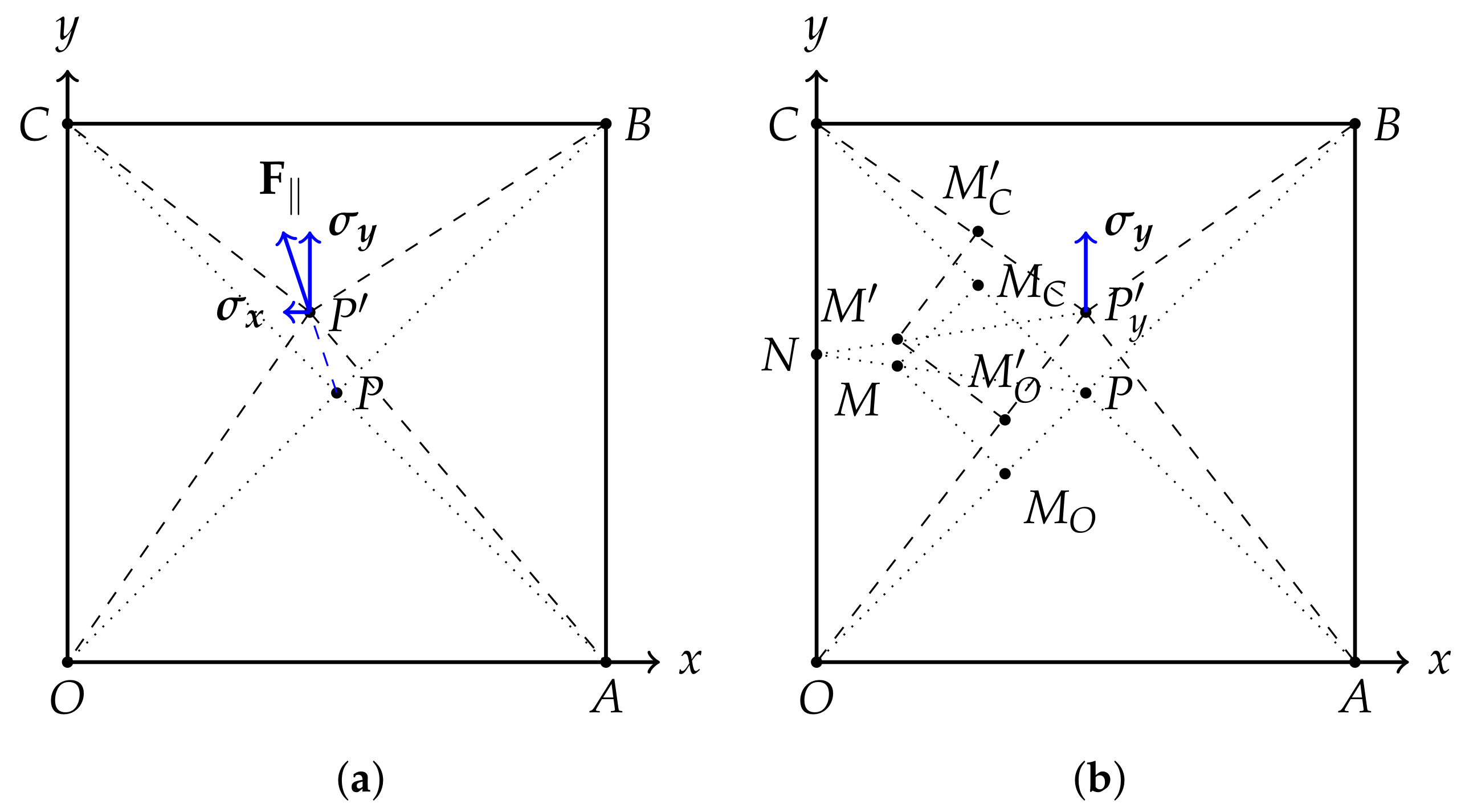

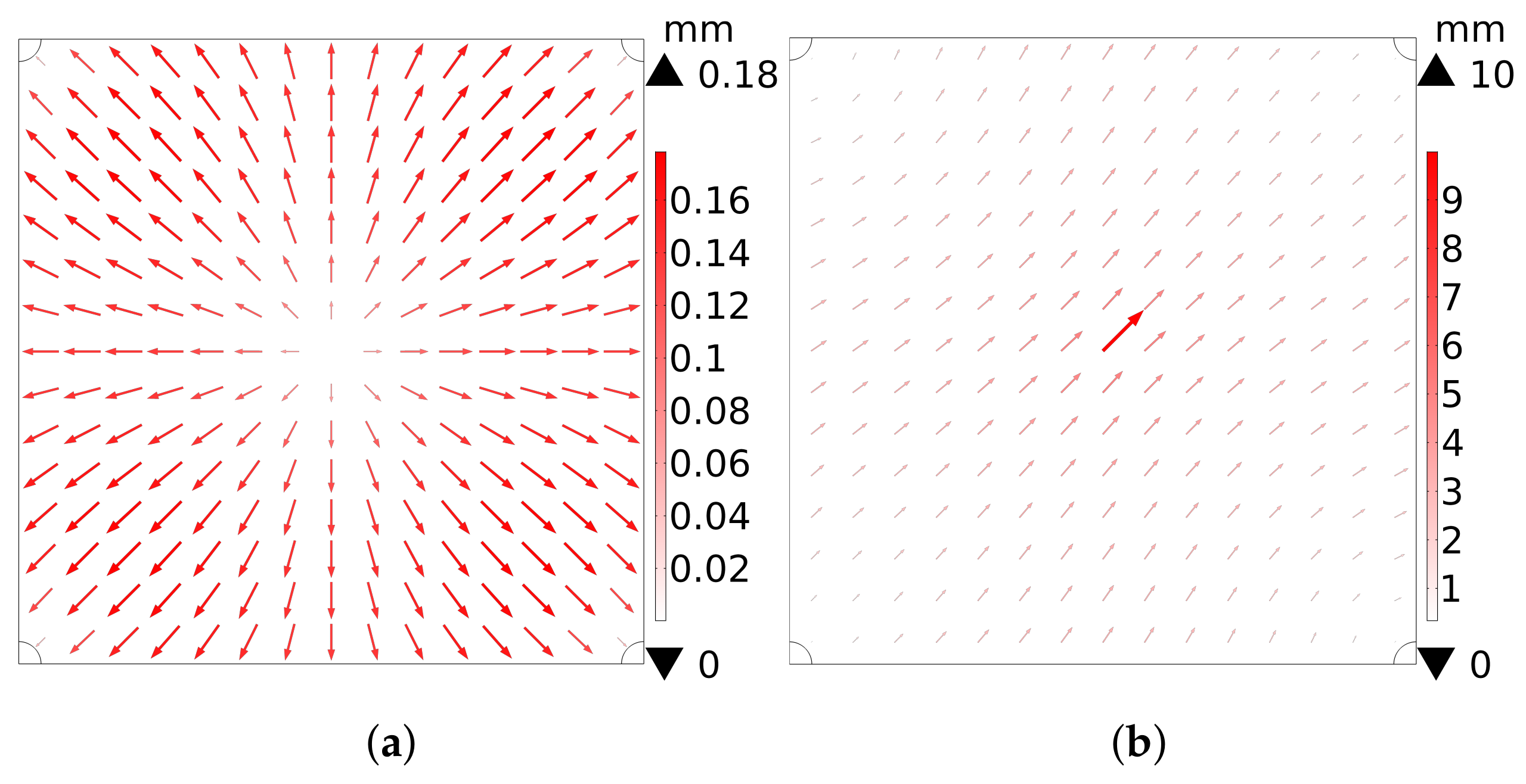

3. Deformation Model and Corresponding Simulation

- Only a stretching force between the load point and the four fixed corners of the square elastic layer contributes to the deformation of the elastic layer;

- To simplify the analysis, only deformations in the radial direction from the load point are considered; deformations in other directions caused by internal stress are ignored;

- The external force, without torsion, is always applied near the center of the elastic layer in the proposed device;

- Deformations on the four sides of the square elastic layer are small and have little effect on the analysis, so they are ignored and omitted from the figures that follow.
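The first assumption above can be illustrated with a purely geometric sketch: if the load point sits at the center of a square layer whose four corners are fixed, moving the load point changes the length of each corner-to-load-point segment. This is not the paper's deformation model; the function name, the coordinate frame, and the numeric values are hypothetical and chosen only to show the geometry.

```python
import math


def corner_segment_stretch(side, displacement):
    """Return the change in length of each corner-to-load-point segment.

    Assumptions (illustrative only): the layer is a square of edge `side`
    lying in the z = 0 plane, its corners are fixed at (+-side/2, +-side/2, 0),
    and the load point starts at the center and moves by `displacement`
    = (dx, dy, dz).
    """
    half = side / 2.0
    corners = [(half, half), (-half, half), (-half, -half), (half, -half)]
    rest_length = math.hypot(half, half)  # center-to-corner distance at rest
    dx, dy, dz = displacement
    stretches = []
    for cx, cy in corners:
        length = math.sqrt((cx - dx) ** 2 + (cy - dy) ** 2 + dz ** 2)
        stretches.append(length - rest_length)
    return stretches


if __name__ == "__main__":
    # Normal displacement: all four segments lengthen by the same amount.
    print(corner_segment_stretch(2.0, (0.0, 0.0, 1.0)))
    # In-plane (shear-like) displacement: segments on one side lengthen,
    # those on the other side shorten.
    print(corner_segment_stretch(2.0, (0.5, 0.0, 0.0)))
```

In this toy geometry, a normal displacement stretches the four segments symmetrically, while an in-plane displacement produces an asymmetric pattern. A negative value means the segment is shorter than at rest.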

3.1. Normal Force

3.2. Shear Force

3.3. Finite Element Simulation of Elastic Layer under Normal and Shear Force

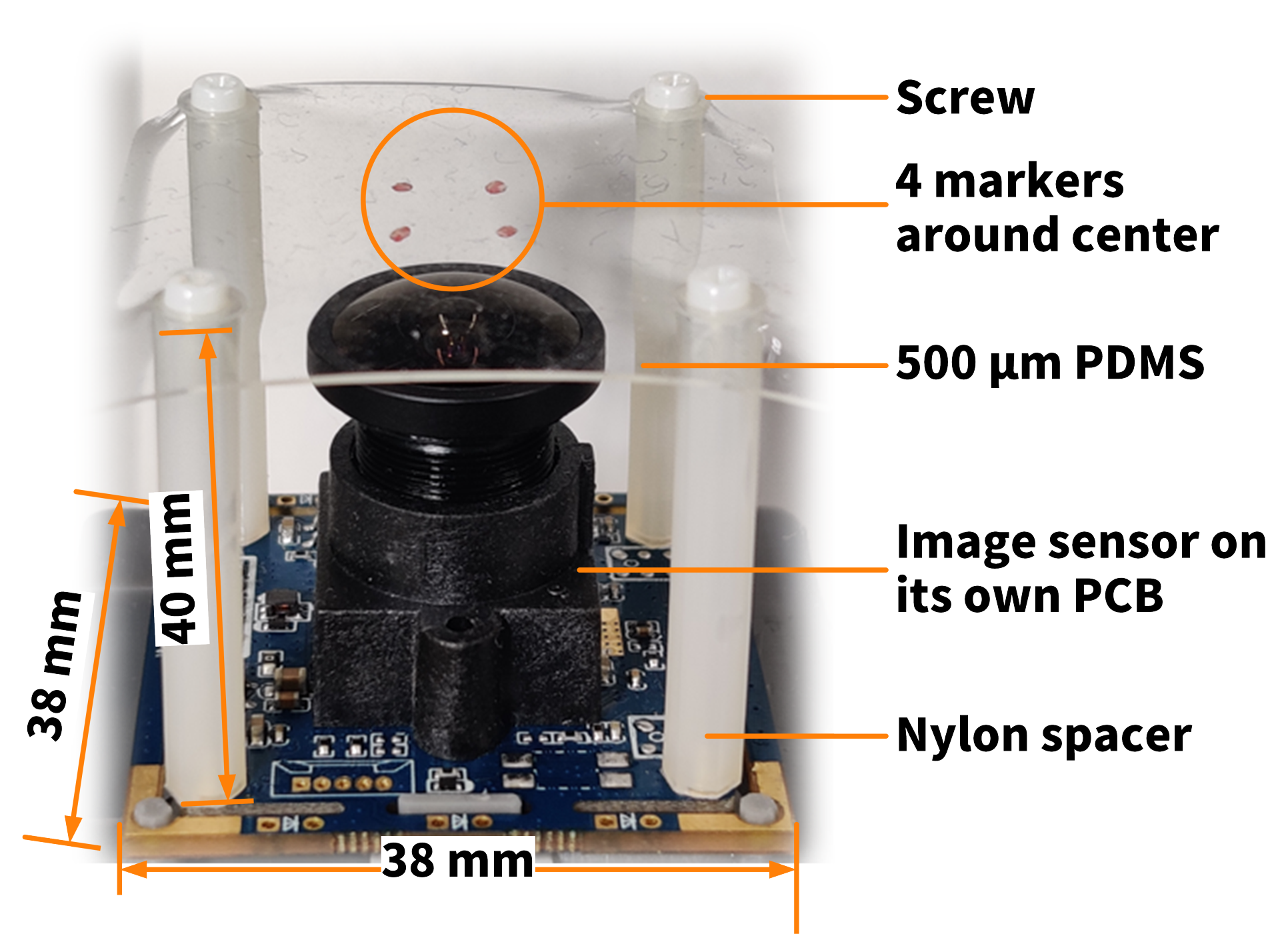

4. Design and Fabrication of the Device

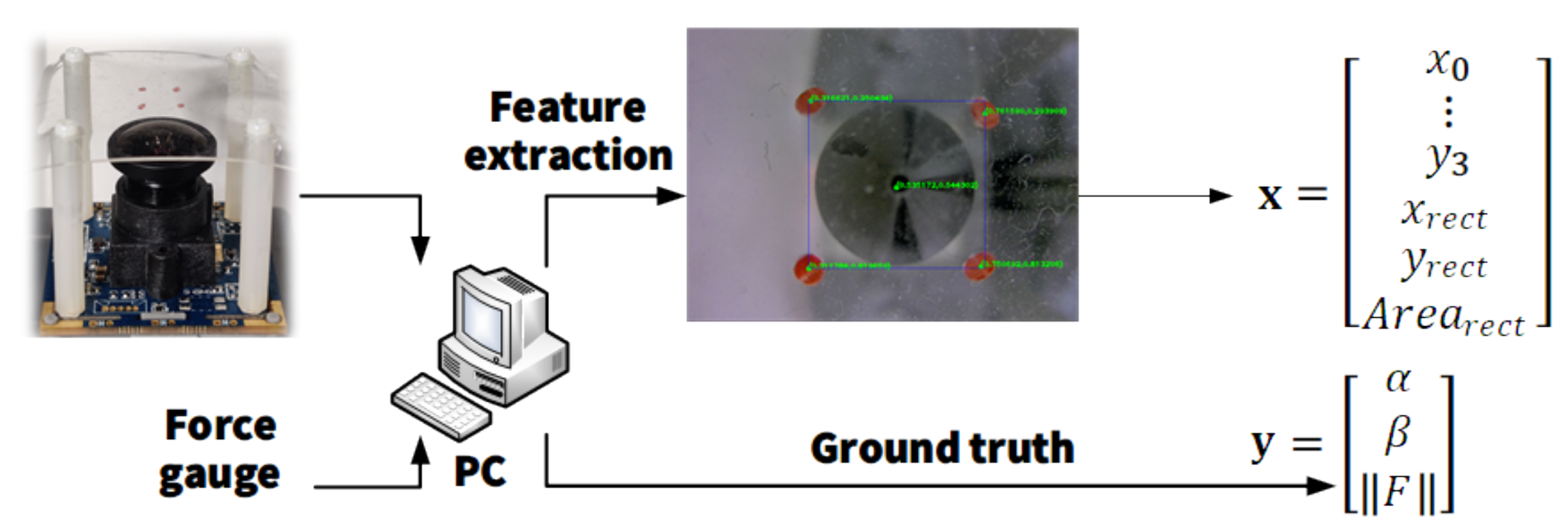

5. Force Vector Reconstruction

6. Experiment

7. Results and Discussion

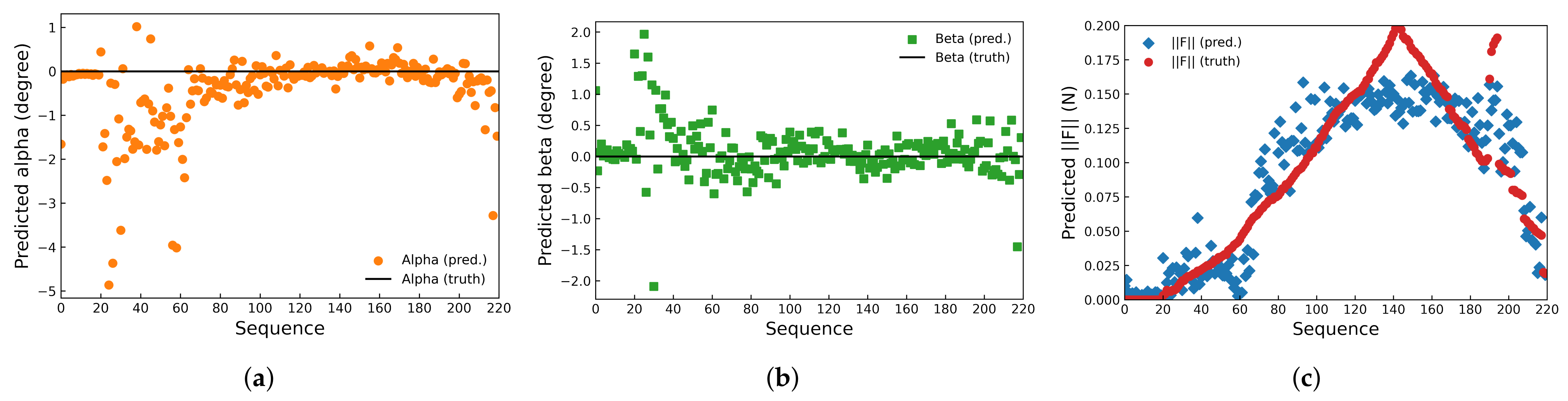

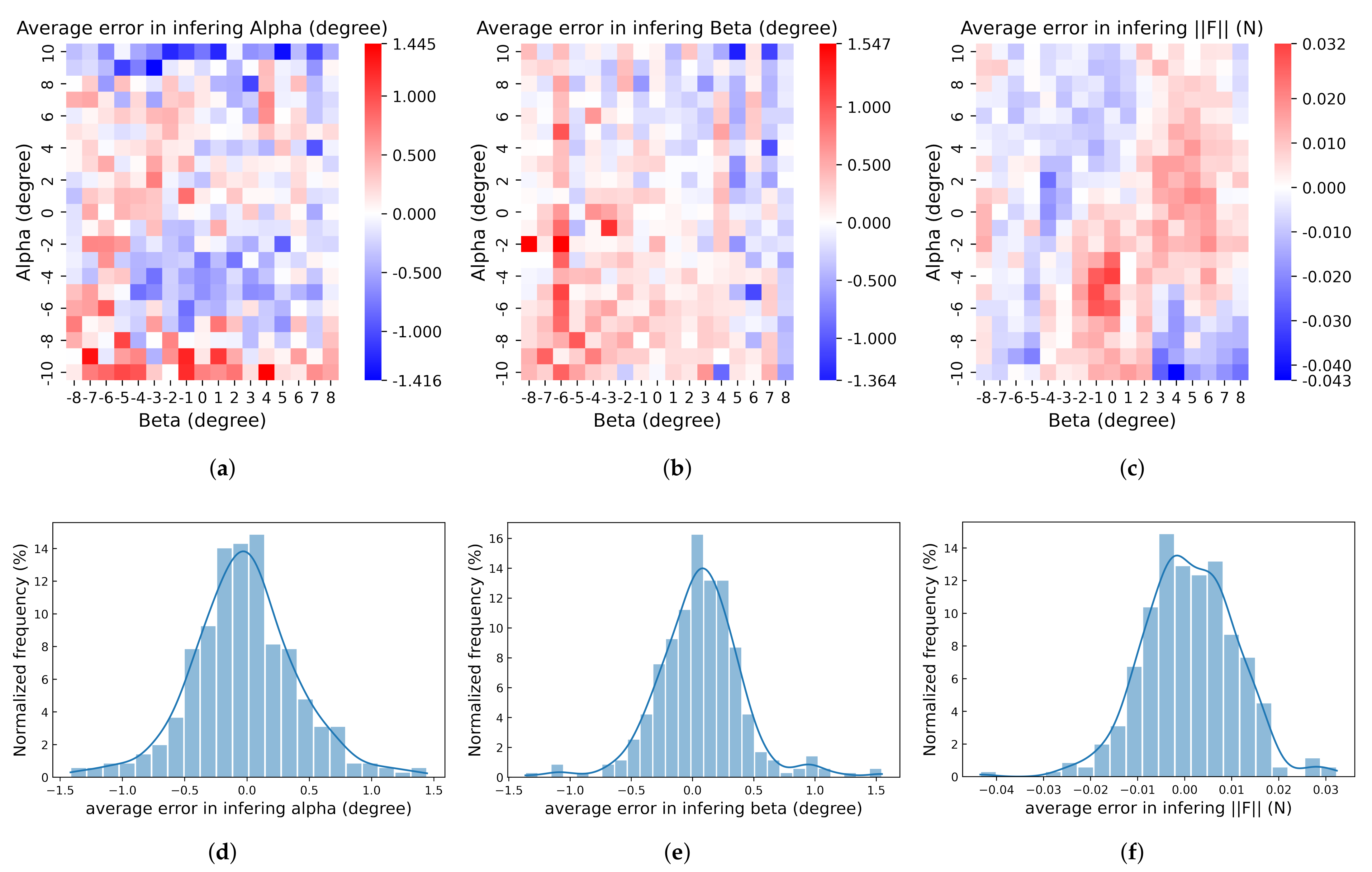

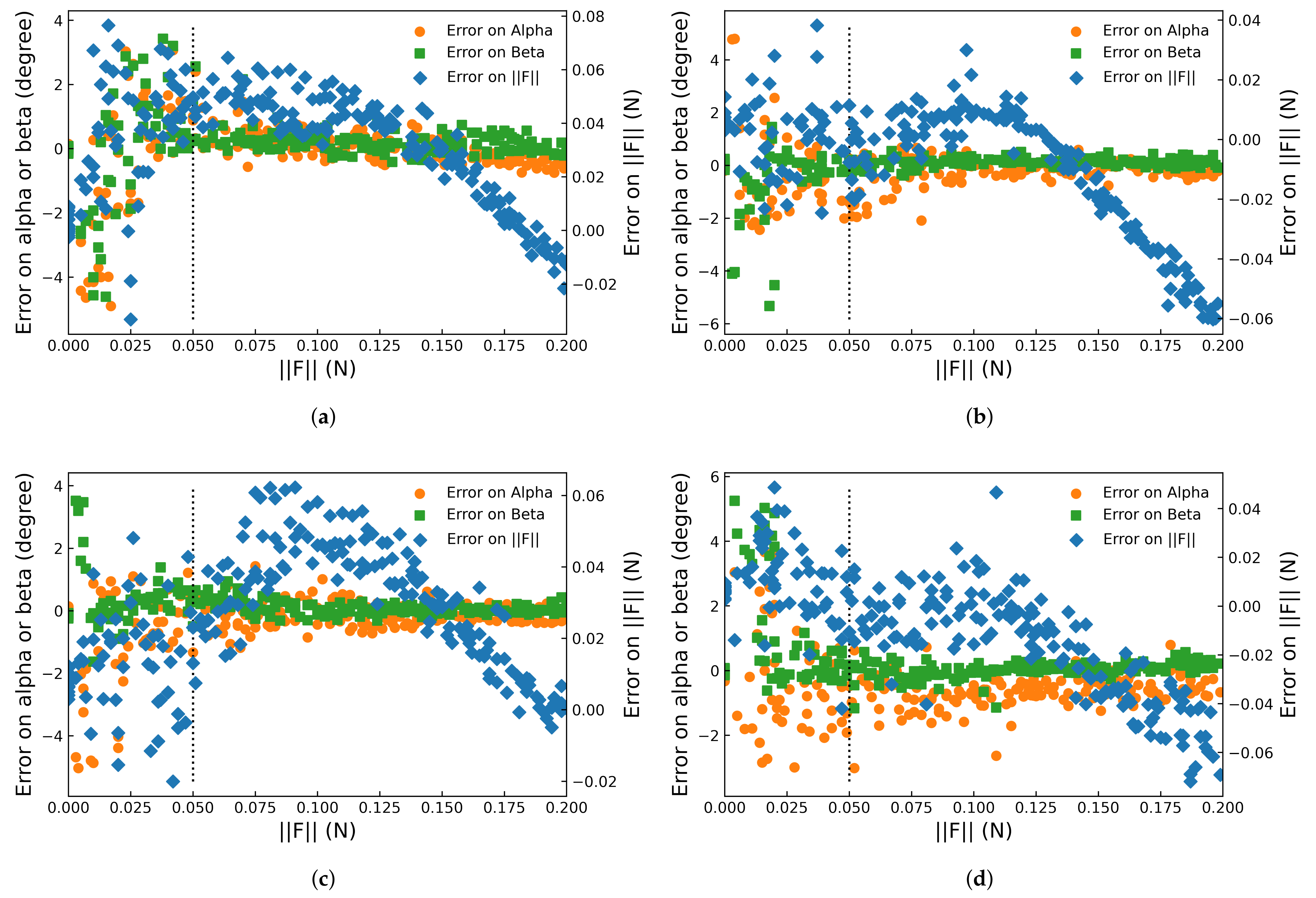

7.1. Accuracy

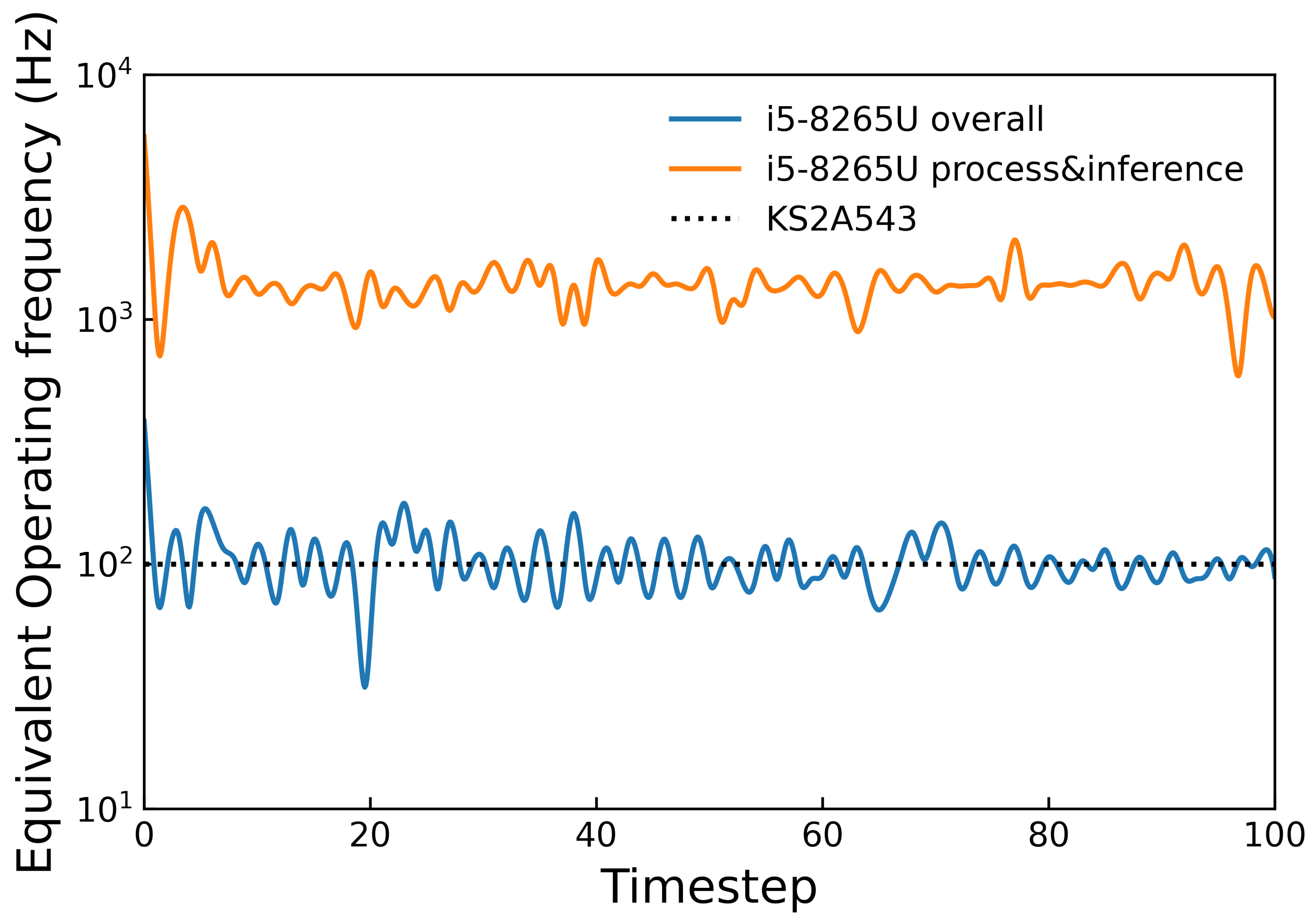

7.2. Operating Frequency

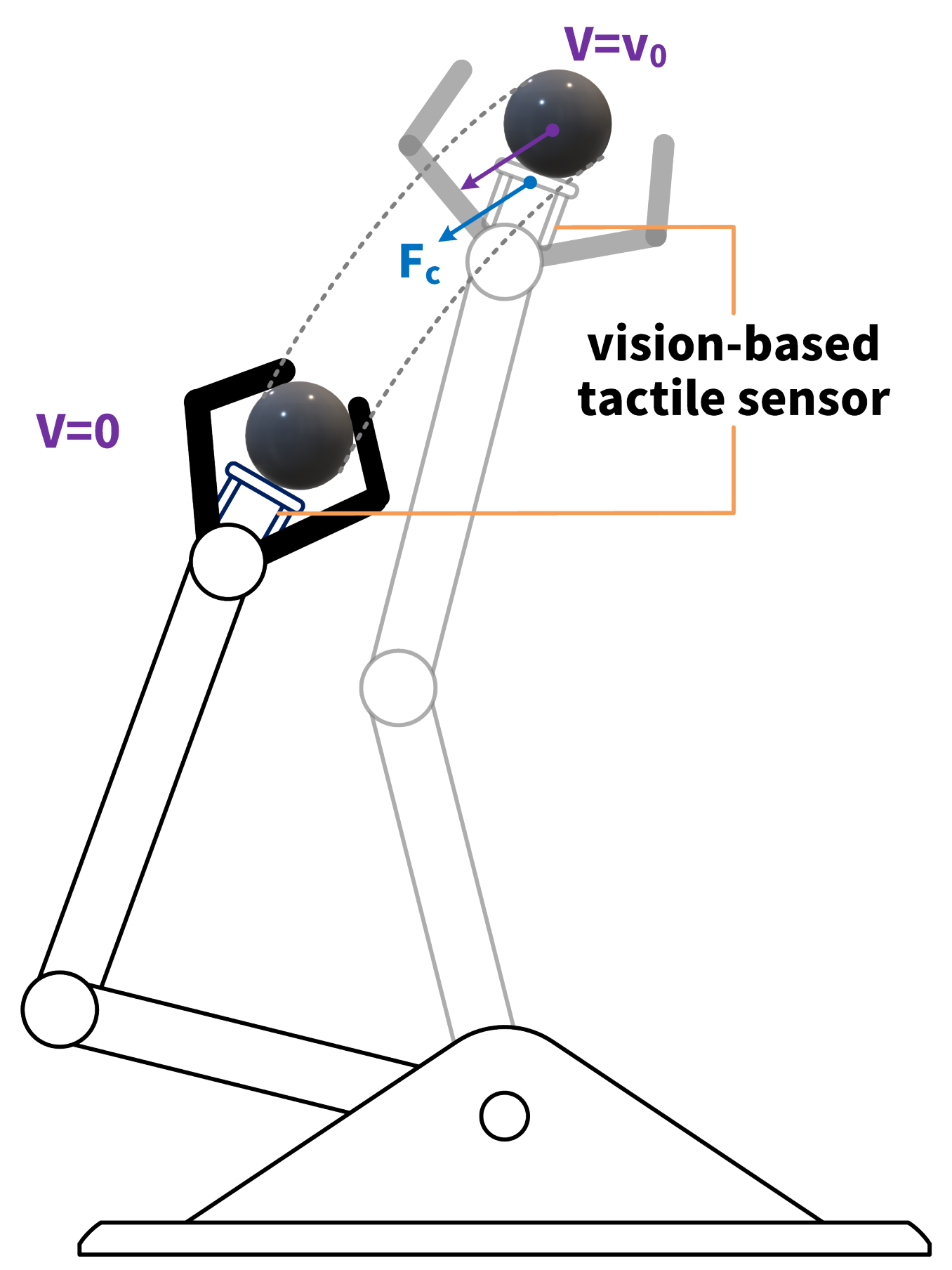

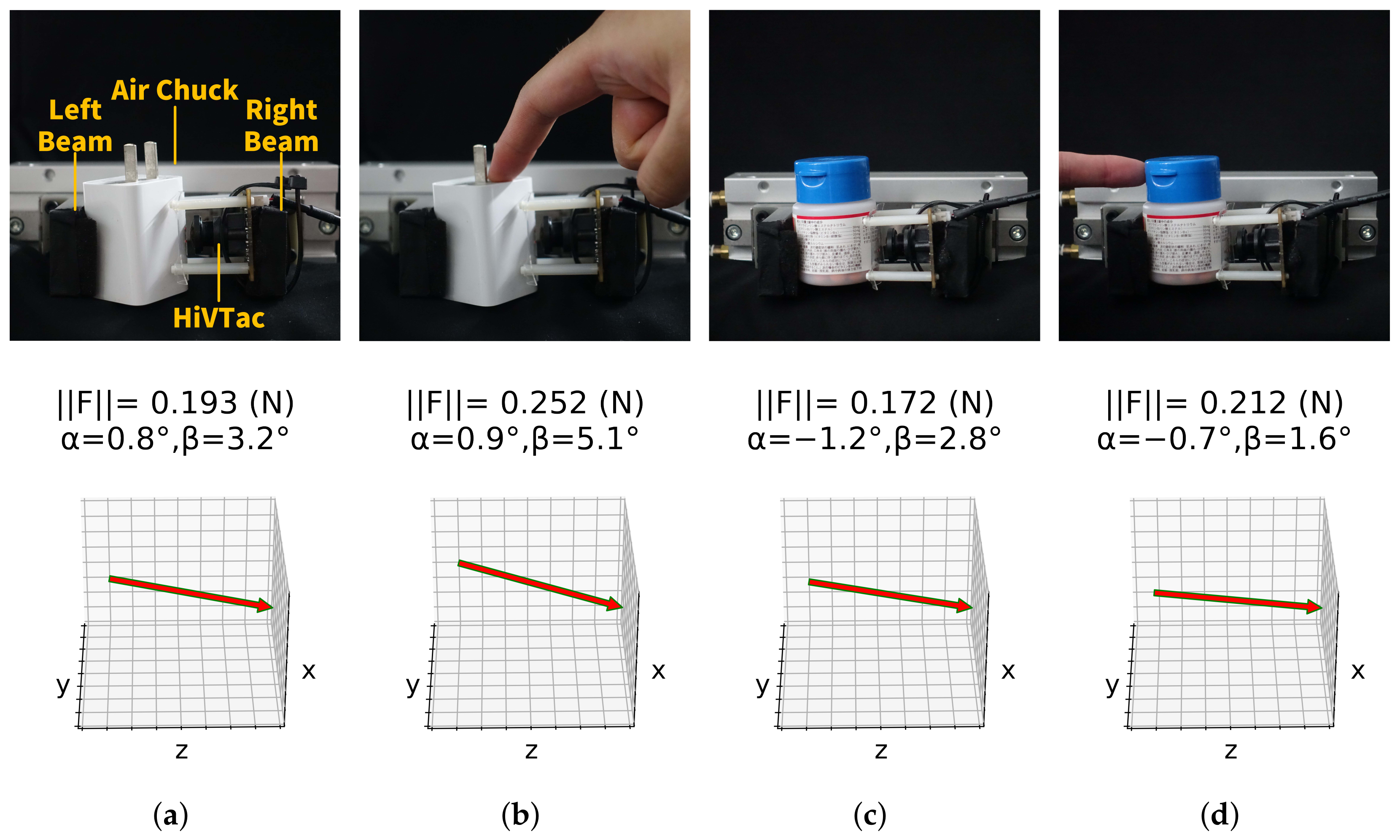

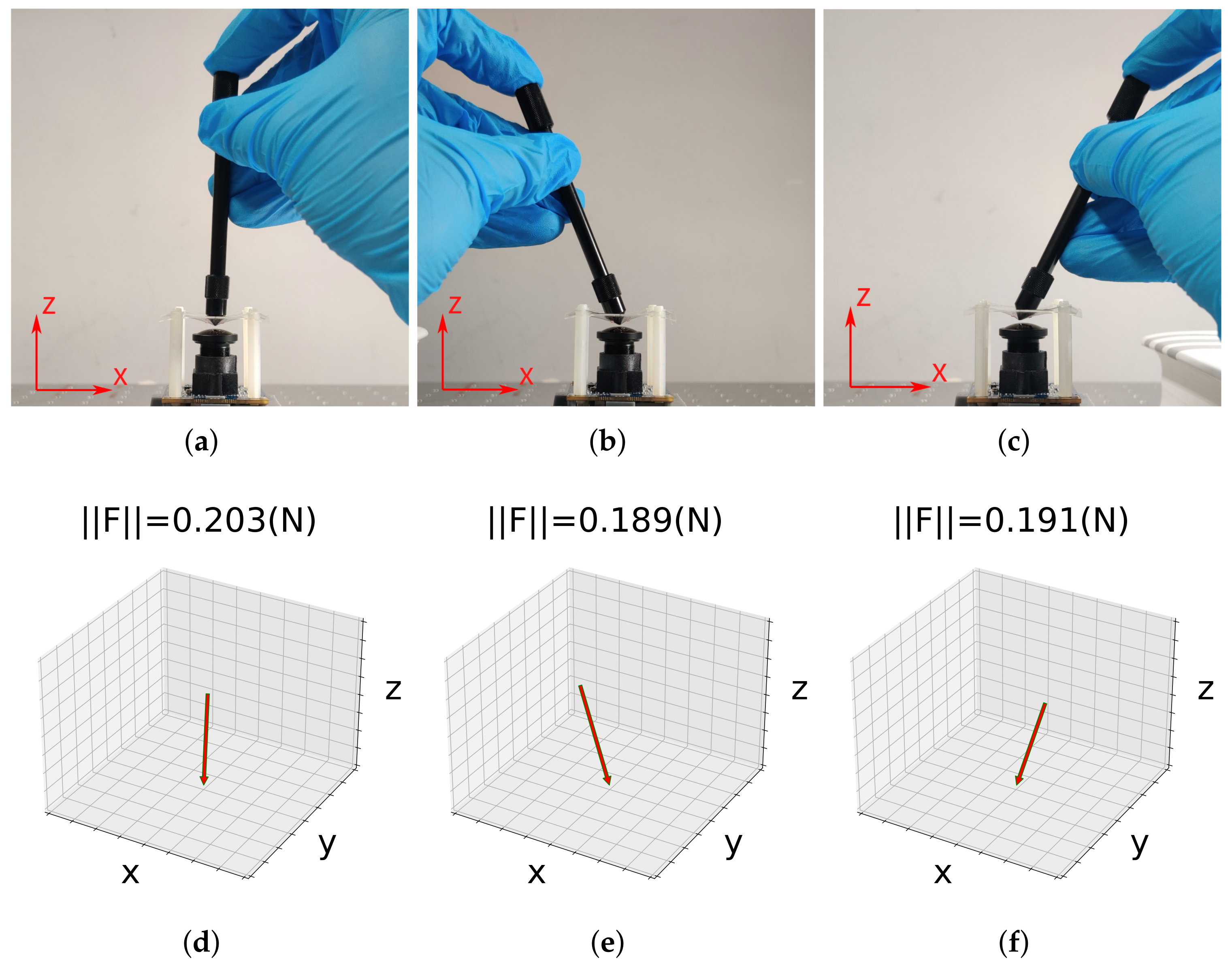

7.3. Grasping

7.4. Real-Time Reconstruction

8. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tarchanidis, K.N.; Lygouras, J.N. Data glove with a force sensor. IEEE Trans. Instrum. Meas. 2003, 52, 984–989.

2. Hwang, E.S.; Seo, J.H.; Kim, Y.J. A polymer-based flexible tactile sensor for both normal and shear load detections and its application for robotics. J. Microelectromech. Syst. 2007, 16, 556–563.

3. Krishna, G.M.; Rajanna, K. Tactile sensor based on piezoelectric resonance. IEEE Sens. J. 2004, 4, 691–697.

4. Motoo, K.; Arai, F.; Fukuda, T. Piezoelectric vibration-type tactile sensor using elasticity and viscosity change of structure. IEEE Sens. J. 2007, 7, 1044–1051.

5. Novak, J.L. Initial Design and Analysis of a Capacitive Sensor for Shear and Normal Force Measurement; Technical Report; Sandia National Laboratory (SNL-NM): Albuquerque, NM, USA, 1988.

6. Salo, T.; Vančura, T.; Baltes, H. CMOS-sealed membrane capacitors for medical tactile sensors. J. Micromech. Microeng. 2006, 16, 769.

7. Chi, Z.; Shida, K. A new multifunctional tactile sensor for three-dimensional force measurement. Sens. Actuators A Phys. 2004, 111, 172–179.

8. Torres-Jara, E.; Vasilescu, I.; Coral, R. A Soft Touch: Compliant Tactile Sensors for Sensitive Manipulation; Massachusetts Institute of Technology: Cambridge, MA, USA, 2006.

9. Ohka, M.; Mitsuya, Y.; Matsunaga, Y.; Takeuchi, S. Sensing characteristics of an optical three-axis tactile sensor under combined loading. Robotica 2004, 22, 213–221.

10. Heo, J.S.; Chung, J.H.; Lee, J.J. Tactile sensor arrays using fiber Bragg grating sensors. Sens. Actuators A Phys. 2006, 126, 312–327.

11. Tao, J.; Bao, R.; Wang, X.; Peng, Y.; Li, J.; Fu, S.; Pan, C.; Wang, Z.L. Self-powered tactile sensor array systems based on the triboelectric effect. Adv. Funct. Mater. 2019, 29, 1806379.

12. Cheng, Y.; Wu, D.; Hao, S.; Jie, Y.; Cao, X.; Wang, N.; Wang, Z.L. Highly stretchable triboelectric tactile sensor for electronic skin. Nano Energy 2019, 64, 103907.

13. Yamaguchi, A.; Atkeson, C.G. Recent progress in tactile sensing and sensors for robotic manipulation: Can we turn tactile sensing into vision? Adv. Robot. 2019, 33, 661–673.

14. Yamaguchi, A.; Atkeson, C.G. Combining finger vision and optical tactile sensing: Reducing and handling errors while cutting vegetables. In Proceedings of the 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids), Cancun, Mexico, 15–17 November 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1045–1051.

15. Zhang, Y.; Kan, Z.; Yang, Y.; Tse, Y.A.; Wang, M.Y. Effective estimation of contact force and torque for vision-based tactile sensors with Helmholtz–Hodge decomposition. IEEE Robot. Autom. Lett. 2019, 4, 4094–4101.

16. Sundaralingam, B.; Hermans, T. In-hand object-dynamics inference using tactile fingertips. IEEE Trans. Robot. 2021, 37, 1115–1126.

17. Noh, Y.; Liu, H.; Sareh, S.; Chathuranga, D.S.; Würdemann, H.; Rhode, K.; Althoefer, K. Image-based optical miniaturized three-axis force sensor for cardiac catheterization. IEEE Sens. J. 2016, 16, 7924–7932.

18. Yun, A.; Lee, W.; Kim, S.; Kim, J.H.; Yoon, H. Development of a robot arm link system embedded with a three-axis sensor with a simple structure capable of excellent external collision detection. Sensors 2022, 22, 1222.

19. Sui, R.; Zhang, L.; Li, T.; Jiang, Y. Incipient Slip Detection Method with Vision-Based Tactile Sensor based on Distribution Force and Deformation. IEEE Sens. J. 2021, 21, 25973–25985.

20. Yamaguchi, A.; Atkeson, C.G. Tactile Behaviors with the Vision-Based Tactile Sensor FingerVision. Int. J. Humanoid Robot. 2019, 16, 1940002.

21. Yuan, W.; Dong, S.; Adelson, E.H. GelSight: High-resolution robot tactile sensors for estimating geometry and force. Sensors 2017, 17, 2762.

22. Baimukashev, D.; Kappassov, Z.; Varol, H.A. Shear, Torsion and Pressure Tactile Sensor via Plastic Optofiber Guided Imaging. IEEE Robot. Autom. Lett. 2020, 5, 2618–2625.

23. Soter, G.; Hauser, H.; Conn, A.; Rossiter, J.; Nakajima, K. Shape reconstruction of CCD camera-based soft tactile sensors. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; IEEE: Piscataway, NJ, USA, 2020; pp. 8957–8962.

24. Sato, K.; Kamiyama, K.; Nii, H.; Kawakami, N.; Tachi, S. Measurement of force vector field of robotic finger using vision-based haptic sensor. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 22–26 September 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 488–493.

25. Kamiyama, K.; Vlack, K.; Mizota, T.; Kajimoto, H.; Kawakami, K.; Tachi, S. Vision-based sensor for real-time measuring of surface traction fields. IEEE Comput. Graph. Appl. 2005, 25, 68–75.

26. Sferrazza, C.; D’Andrea, R. Transfer learning for vision-based tactile sensing. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7961–7967.

27. Lin, X.; Willemet, L.; Bailleul, A.; Wiertlewski, M. Curvature sensing with a spherical tactile sensor using the color-interference of a marker array. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 603–609.

28. Ma, D.; Donlon, E.; Dong, S.; Rodriguez, A. Dense tactile force estimation using GelSlim and inverse FEM. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5418–5424.

29. Lambeta, M.; Chou, P.W.; Tian, S.; Yang, B.; Maloon, B.; Most, V.R.; Stroud, D.; Santos, R.; Byagowi, A.; Kammerer, G.; et al. Digit: A novel design for a low-cost compact high-resolution tactile sensor with application to in-hand manipulation. IEEE Robot. Autom. Lett. 2020, 5, 3838–3845.

30. Yang, Y.; Wang, X.; Zhou, Z.; Zeng, J.; Liu, H. An Enhanced FingerVision for Contact Spatial Surface Sensing. IEEE Sens. J. 2021, 21, 16492–16502.

31. Dydo, J.R.; Busby, H.R. Elasticity solutions for constant and linearly varying loads applied to a rectangular surface patch on the elastic half-space. J. Elast. 1995, 38, 153–163.

32. Johnson, K. Point loading of an elastic half-space. In An Introduction to Soil Dynamics; Springer: Berlin/Heidelberg, Germany, 1985.

33. Li, W.; Alomainy, A.; Vitanov, I.; Noh, Y.; Qi, P.; Althoefer, K. F-touch sensor: Concurrent geometry perception and multi-axis force measurement. IEEE Sens. J. 2020, 21, 4300–4309.

34. Baghaei Naeini, F.; Makris, D.; Gan, D.; Zweiri, Y. Dynamic-vision-based force measurements using convolutional recurrent neural networks. Sensors 2020, 20, 4469.

35. Pang, C.; Mak, K.; Zhang, Y.; Yang, Y.; Tse, Y.A.; Wang, M.Y. Viko: An Adaptive Gecko Gripper with Vision-based Tactile Sensor. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 736–742.

36. Ding, Z.; Lepora, N.F.; Johns, E. Sim-to-real transfer for optical tactile sensing. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1639–1645.

37. Ward-Cherrier, B.; Pestell, N.; Lepora, N.F. Neurotac: A neuromorphic optical tactile sensor applied to texture recognition. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2654–2660.

38. Chaudhury, A.N.; Man, T.; Yuan, W.; Atkeson, C.G. Using Collocated Vision and Tactile Sensors for Visual Servoing and Localization. IEEE Robot. Autom. Lett. 2022, 7, 3427–3434.

39. Zhang, Y.; Kan, Z.; Tse, Y.A.; Yang, Y.; Wang, M.Y. Fingervision tactile sensor design and slip detection using convolutional LSTM network. arXiv 2018, arXiv:1810.02653.

40. Huang, X.; Muthusamy, R.; Hassan, E.; Niu, Z.; Seneviratne, L.; Gan, D.; Zweiri, Y. Neuromorphic vision based contact-level classification in robotic grasping applications. Sensors 2020, 20, 4724.

41. Shimonomura, K.; Nakashima, H.; Nozu, K. Robotic grasp control with high-resolution combined tactile and proximity sensing. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 138–143.

42. Yu, Y.S.; Zhao, Y.P. Deformation of PDMS membrane and microcantilever by a water droplet: Comparison between Mooney–Rivlin and linear elastic constitutive models. J. Colloid Interface Sci. 2009, 332, 467–476.

43. Bourbaba, H.; Mohamed, B. Mechanical behavior of polymeric membrane: Comparison between PDMS and PMMA for micro fluidic application. Energy Procedia 2013, 36, 231–237.

44. Chang, R.; Chen, Z.; Yu, C.; Song, J. An Experimental Study on Stretchy and Tough PDMS/Fabric Composites. J. Appl. Mech. 2019, 86, 011012.

45. Hughes, C.; Denny, P.; Jones, E.; Glavin, M. Accuracy of fish-eye lens models. Appl. Opt. 2010, 49, 3338–3347.

46. Bettonvil, F. Fisheye lenses. WGN J. Int. Meteor Organ. 2005, 33, 9–14.

47. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

48. Sferrazza, C.; Bi, T.; D’Andrea, R. Learning the sense of touch in simulation: A sim-to-real strategy for vision-based tactile sensing. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; IEEE: Piscataway, NJ, USA, 2020; pp. 4389–4396.

| Method | Number of Markers (Estimated) | Frequency (Hz) |
|---|---|---|
| Zhang et al. [39] | >100 | 15 |
| ∗ GelSight [21] | >100 | 30 |
| Viko [35] | >100 | 40 |
| Sferrazza et al. [48] | >100 | 50 |
| Sferrazza et al. [26] | >100 | 60 |
| ∗ Lambeta et al. [29] | 10–100 | 60 |
| Yamaguchi et al. [20] | 10–100 | 63 |
| Sato et al. [24] | 10–100 | 67 |
| HiVTac (overall) | 4 | 100 |
| HiVTac (processing) | 4 | 1300 |
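The frequencies in the table can be read as time budgets per sample. The short sketch below converts a few of the listed rates into periods in milliseconds and compares them with the HiVTac overall rate. The dictionary and function names are hypothetical, and only values already listed in the table are used.

```python
# Operating frequencies in Hz, copied from the comparison table.
rates_hz = {
    "Zhang et al. [39]": 15,
    "GelSight [21]": 30,
    "Sato et al. [24]": 67,
    "HiVTac (overall)": 100,
    "HiVTac (processing)": 1300,
}


def period_ms(frequency_hz):
    """Time available per sample, in milliseconds, at the given rate."""
    return 1000.0 / frequency_hz


def speedup(frequency_hz, baseline_hz):
    """How many times faster `frequency_hz` is than `baseline_hz`."""
    return frequency_hz / baseline_hz


if __name__ == "__main__":
    overall = rates_hz["HiVTac (overall)"]
    for name, hz in rates_hz.items():
        print(f"{name}: {period_ms(hz):.2f} ms per sample, "
              f"HiVTac overall is {speedup(overall, hz):.2f}x this rate")
```

At 100 Hz each sample has a 10 ms budget, while the 1300 Hz processing rate corresponds to roughly 0.77 ms per sample.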

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Quan, S.; Liang, X.; Zhu, H.; Hirano, M.; Yamakawa, Y. HiVTac: A High-Speed Vision-Based Tactile Sensor for Precise and Real-Time Force Reconstruction with Fewer Markers. Sensors 2022, 22, 4196. https://doi.org/10.3390/s22114196
