Development of a Vision-Guided Shared-Control System for Assistive Robotic Manipulators

Abstract

1. Introduction

- In terms of blending autonomy, research has focused on human intent recognition [16] and on strategies for blending user and robot control in shared autonomy. For example, early work on the MANUS manipulator discussed a scheme in which the robot and the user control different DoFs (e.g., the user controls the end-effector linear position while the robot controls the end-effector orientation) [17]. Gopinath et al. proposed a blending scheme for the Cartesian-space velocity of the robot end-effector that can be tuned to the level of robot assistance [18]. They found that the customized assistance was not always optimal for task performance, because some participants preferred retaining more control over achieving better performance. In general, research on blending autonomy has focused mostly on intent-recognition accuracy and optimal blending schemes rather than on practical application to complex multi-step tasks.

- In terms of task allocation, the user and the robot are each assigned a certain part of a task. For example, Bhattacharjee et al. implemented a fully functional robot-assisted feeding system that allocated high-level decisions to the user via a touchscreen (e.g., which food item to pick, how the robot should pick it up, and when and how the robot should feed the user) and allocated all motion planning and control to the robot, so that the user never had to teleoperate it. User performance was not a focus of that study and was not reported, but the system was well received by the participants with disabilities, who gave relatively high ratings of perceived usefulness and ease of use. Our group developed a shared-control system in which the user controlled the gross motion of the arm via teleoperation and the robot autonomously took over the fine manipulation once the gripper was close to the target object. We evaluated the system with eight individuals with disabilities and found that it reduced task completion time and perceived workload for all five tasks tested. However, these five tasks (turning a door handle, flipping a light switch on/off, turning a knob, grasping a ball, and grasping a bottle) were discrete tasks that each require a single-step operation [19].
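The two ends of the spectrum discussed above can be made concrete with a single arbitration weight. The sketch below is a hedged illustration, not the formulation of [18] or [19]: it assumes a generic linear (convex) blend of user and robot Cartesian velocities, with `alpha` as the level of robot assistance, and models task allocation in the style of [19] as `alpha` jumping from 0 to 1 once the gripper is within a hypothetical 0.3 m of the target. The function names and the radius are illustrative assumptions.

```python
import math
from typing import List, Sequence


def blend_velocity(v_user: Sequence[float], v_robot: Sequence[float], alpha: float) -> List[float]:
    """Convex combination of user and robot Cartesian velocity commands.

    alpha = 0 leaves the user in full control; alpha = 1 gives the robot full control.
    (Generic linear arbitration, assumed for illustration.)
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(v_user) != len(v_robot):
        raise ValueError("velocity vectors must have the same length")
    return [(1.0 - alpha) * u + alpha * r for u, r in zip(v_user, v_robot)]


def handover_alpha(
    gripper_xyz: Sequence[float], target_xyz: Sequence[float], radius_m: float = 0.3
) -> float:
    """Hypothetical all-or-nothing allocation: the robot takes over inside radius_m."""
    return 1.0 if math.dist(gripper_xyz, target_xyz) <= radius_m else 0.0
```

For example, `blend_velocity([0.5, 0.0, 0.0], [0.0, 0.5, 0.0], 0.25)` returns `[0.375, 0.125, 0.0]`: the user's command dominates, with a quarter of the robot's command mixed in. Feeding `handover_alpha(...)` into `blend_velocity` turns the continuous blend into a discrete task split.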

2. Materials and Methods

2.1. The Robot

2.2. Vision-Guided Shared-Control (VGS) System

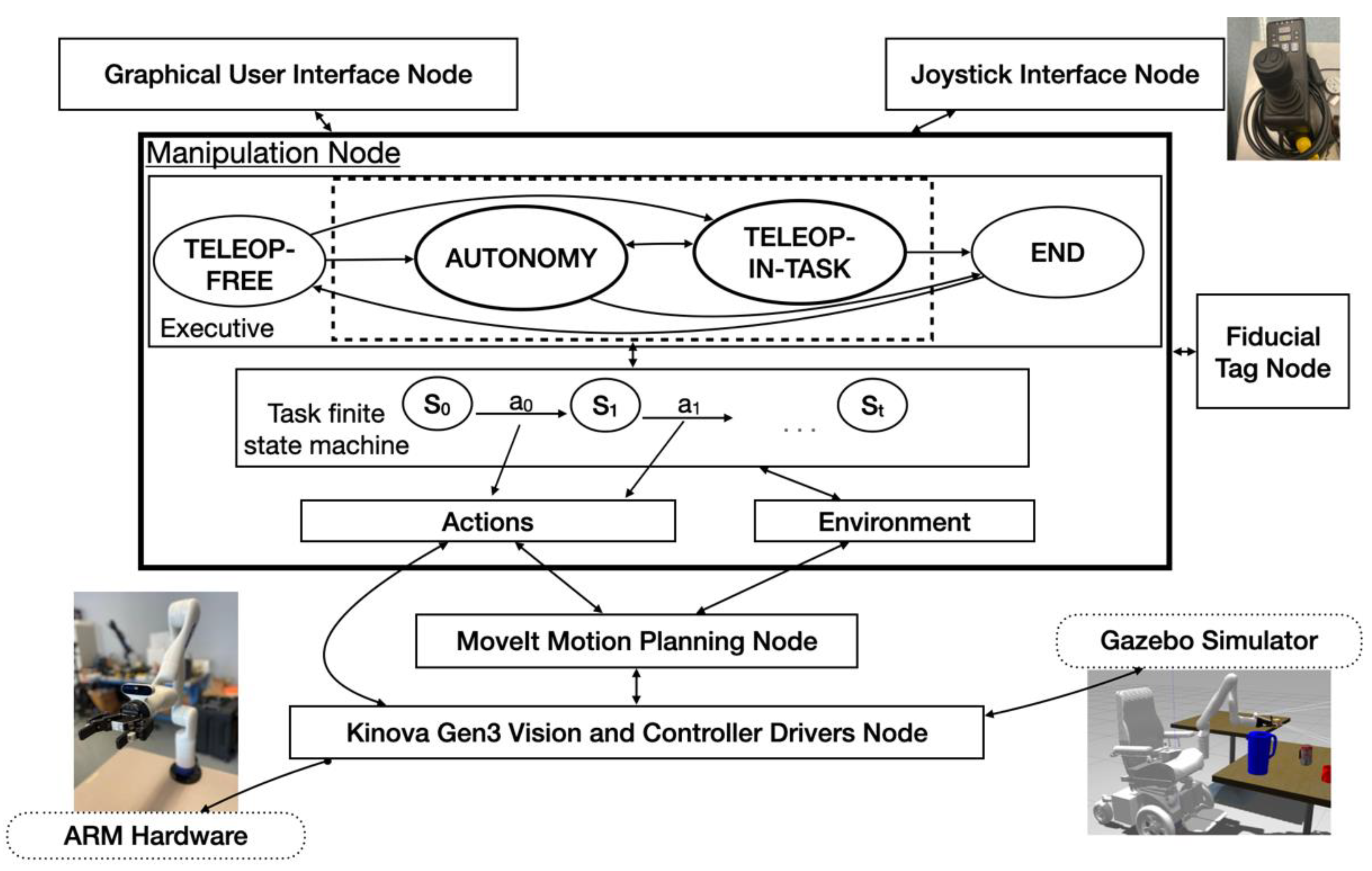

- User teleoperation is handled by the joystick interface node, which supports several types of joysticks, including an Xbox game controller, a 3-DoF joystick, and a traditional 2-DoF joystick. For example, a 2-DoF joystick used with two buttons (either on the joystick or as external switches) is configured so that one button switches between Mode 1 (end-effector translation forward/backward and left/right) and Mode 2 (end-effector translation up/down and wrist roll), and the other button switches between Mode 3 (wrist pitch and yaw) and Mode 4 (gripper open/close). A 3-DoF joystick is also configured with two buttons: one switches between Mode 1 (end-effector translation) and Mode 2 (end-effector orientation), and the other switches to Mode 3 (gripper open/close).

- Autonomous robot operation is initiated through the fiducial tag node. Fiducial tags carry highly distinctive patterns with strong visual features and are widely used to identify, detect, and localize objects. We chose ArUco tags for this study because of their high detection rate, good position and orientation estimates, and low computational cost [20]. The fiducial tag node wraps an open-source ArUco library [21,22] into the ROS architecture and publishes the ID number, position, and orientation of each ArUco tag fixed to a target object, relative to the robot’s wrist-mounted camera. This information is published to the manipulation node and is used to display a tag selection area on the graphical user interface (GUI) and to model obstacles in the environment.

- The manipulation node contains the main system state machine, referred to as the system executor. Once VGS control is started, the system executor runs continuously for the lifetime of the system. The node reads a configuration file, written in the YAML data-serialization language, that defines object properties, including shape, size, and position relative to the ArUco tag. The node also handles system state transitions between autonomous and user-teleoperation actions. For each subtask, the system executor calls actions predefined in an action library. For autonomous actions, once the user selects an object to interact with, the manipulation node retrieves the shape and size of the environment obstacles associated with the selected ArUco tag ID, and these obstacles are added to the planning scene for robot path planning. The VGS then moves the robotic arm to a 6-DoF goal pose, either through motion planning or through a direct call to the Kinova Kortex driver. In both cases, the third-party package (the MoveIt ROS package or the Kortex ROS driver package) performs the inverse kinematics calculation with its own internal kinematic solver. The MoveIt motion-planning framework [23,24] is used to generate obstacle-free paths. The path is first generated by solving for an inverse kinematic solution with the TRAC-IK kinematics plugin. If no solution is found, the planner then tries to generate a feasible path with the sampling-based RRTConnect planner [25]. If all planners fail to find a solution, the robot returns to the home position, re-plans or aborts the autonomous action, and prompts the user to teleoperate the robot. Successful paths are executed through calls to the Kinova Gen3 controller, which moves the arm through the path waypoints.
Actions that do not require motion planning, such as opening the gripper and pulling the cabinet door open, call the Kortex driver directly. Because different parts of the task share common actions (e.g., reaching for an object), the executor selects which action to call (sometimes repeatedly) based on a state machine that describes the current subtask.
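The autonomous pipeline described above (tag pose, then goal pose, then planning with fallback) can be sketched in a few functions. This is a minimal sketch under stated assumptions: (1) the YAML entry for an object can be reduced to a rigid tag-to-goal offset, and (2) each planner is a callable that returns a joint-space path or `None`. The transforms are hand-written 4x4 matrices only to keep the example self-contained; a ROS implementation would more likely use tf2 and MoveIt's planning interfaces, neither of which is reproduced here. All function names are hypothetical.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Matrix4 = List[List[float]]
Path = List[List[float]]
Planner = Callable[[Matrix4], Optional[Path]]


def compose(a: Matrix4, b: Matrix4) -> Matrix4:
    """Return the matrix product a @ b of two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix4:
    """Pure translation as a 4x4 homogeneous transform."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def goal_from_tag(T_cam_tag: Matrix4, T_tag_goal: Matrix4) -> Matrix4:
    """Chain the detected camera->tag pose with a configured tag->goal offset."""
    return compose(T_cam_tag, T_tag_goal)


def plan_with_fallback(
    goal: Matrix4,
    planners: Sequence[Tuple[str, Planner]],
    on_failure: Callable[[], None],
) -> Tuple[Optional[str], Optional[Path]]:
    """Try each planner in order and return the first non-empty path.

    If every planner fails, run on_failure (for example: return home and
    prompt the user to teleoperate) and return (None, None).
    """
    for name, planner in planners:
        path = planner(goal)
        if path:
            return name, path
    on_failure()
    return None, None
```

Passing the planners as an ordered list keeps the fallback order (an IK-based attempt, then RRTConnect, then handing control back to the user) in one place, and lets stub planners be swapped for real planning calls without changing the executor logic.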

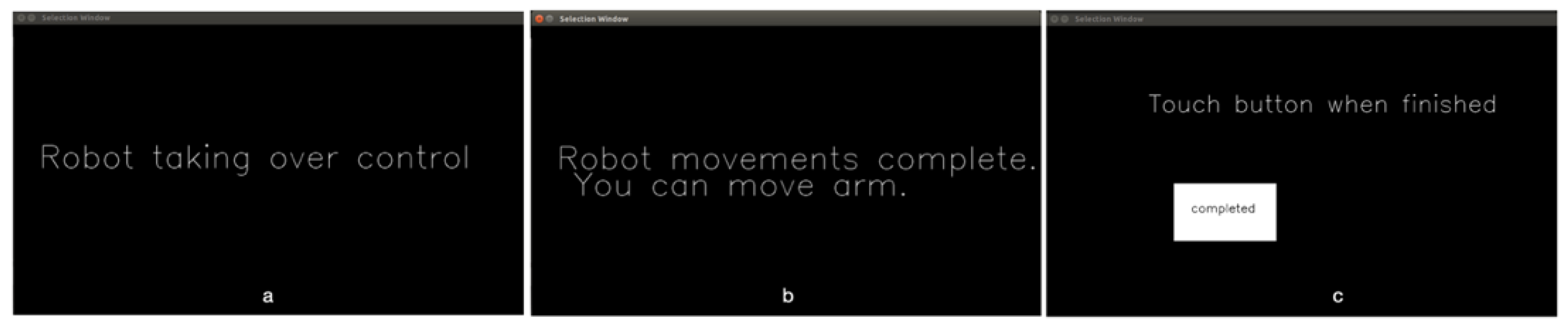

- Control-authority transitions between the user and the robot are managed by the GUI node. A touchscreen placed in front of the user is used for target selection and keeps the user informed during control-authority transitions via text messages. Transitions occur among three system states. In the Autonomy state, the robot has full control of the system and moves the arm automatically. In the Teleop Free state, the user has joystick control and the system is not in a task action. In the Teleop in Task state, the user has joystick control and the system is within a task action. The GUI screen changes with the current system state. For example, Figure 3 shows two examples during Teleop Free in which a selectable circle appears over the fiducial tag for interacting with the cup (Figure 3a) or with the water jug (Figure 3b). Figure 4 shows the messages displayed to the user during Autonomy (Figure 4a), at the completion of Autonomy (Figure 4b), and during Teleop in Task (Figure 4c).
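The three system states and who holds the joystick in each can be sketched as a small transition table. The state names follow the text; the event names (`tag_selected`, `autonomy_done`, `user_ready`, `task_complete`) and the allowed transitions are assumptions for illustration, since the text does not enumerate the triggers.

```python
from enum import Enum
from typing import Dict, FrozenSet, Tuple


class SystemState(Enum):
    AUTONOMY = "Autonomy"
    TELEOP_FREE = "Teleop Free"
    TELEOP_IN_TASK = "Teleop in Task"


# Hypothetical events: each maps to (states it may fire from, resulting state).
TRANSITIONS: Dict[str, Tuple[FrozenSet[SystemState], SystemState]] = {
    "tag_selected": (frozenset({SystemState.TELEOP_FREE}), SystemState.AUTONOMY),
    "autonomy_done": (frozenset({SystemState.AUTONOMY}), SystemState.TELEOP_IN_TASK),
    "user_ready": (frozenset({SystemState.TELEOP_IN_TASK}), SystemState.AUTONOMY),
    "task_complete": (
        frozenset({SystemState.AUTONOMY, SystemState.TELEOP_IN_TASK}),
        SystemState.TELEOP_FREE,
    ),
}

# Per the text: the user holds the joystick in both Teleop states, the robot in Autonomy.
USER_HAS_JOYSTICK = {
    SystemState.AUTONOMY: False,
    SystemState.TELEOP_FREE: True,
    SystemState.TELEOP_IN_TASK: True,
}


def next_state(state: SystemState, event: str) -> SystemState:
    """Return the state after `event`, rejecting events that are invalid in `state`."""
    sources, target = TRANSITIONS[event]
    if state not in sources:
        raise ValueError(f"event {event!r} is not valid in state {state.value!r}")
    return target
```

Keeping the transitions in one table gives the GUI a single place to look up both the current state (to choose which screen or message to show) and whether joystick input should be forwarded.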

2.3. Experiment

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chen, T.L.; Ciocarlie, M.; Cousins, S.; Grice, P.M.; Hawkins, K.; Hsiao, K.; Kemp, C.C.; King, C.-H.; Lazewatsky, D.A.; Leeper, A.E.; et al. Robots for humanity: Using assistive robotics to empower people with disabilities. IEEE Robot. Autom. Mag. 2013, 20, 30–39.

2. Brose, S.W.; Weber, D.J.; Salatin, B.A.; Grindle, G.G.; Wang, H.; Vazquez, J.J.; Cooper, R.A. The role of assistive robotics in the lives of persons with disability. Am. J. Phys. Med. Rehabil. 2010, 89, 509–521.

3. Herlant, L.V.; Holladay, R.M.; Srinivasa, S.S. Assistive Teleoperation of Robot Arms via Automatic Time-Optimal Mode Switching. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016.

4. Kemp, C.; Edsinger, A.; Torres-Jara, E. Challenges for robot manipulation in human environments [Grand Challenges of Robotics]. IEEE Robot. Autom. Mag. 2007, 14, 20–29.

5. Chung, C.S.; Wang, H.; Cooper, R.A. Functional assessment and performance evaluation for assistive robotic manipulators: Literature review. J. Spinal Cord Med. 2013, 36, 273–289.

6. Chung, C.S.; Ka, H.W.; Wang, H.; Ding, D.; Kelleher, A.; Cooper, R.A. Performance Evaluation of a Mobile Touchscreen Interface for Assistive Robotic Manipulators: A Pilot Study. Top. Spinal Cord Inj. Rehabil. 2017, 23, 131–139.

7. Losey, D.P.; Srinivasan, K.; Mandlekar, A.; Garg, A.; Sadigh, D. Controlling Assistive Robots with Learned Latent Actions. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 378–384.

8. Beaudoin, M.; Lettre, J.; Routhier, F.; Archambault, P.S.; Lemay, M.; Gélinas, I. Long-term use of the JACO robotic arm: A case series. Disabil. Rehabil. Assist. Technol. 2019, 14, 267–275.

9. Pulikottil, T.B.; Caimmi, M.; Dangelo, M.G.; Biffi, E.; Pellegrinelli, S.; Tosatti, L.M. A Voice Control System for Assistive Robotic Arms: Preliminary Usability Tests on Patients. In Proceedings of the IEEE RAS and EMBS International Conference on Biomedical Robotics and Biomechatronics, Enschede, The Netherlands, 26–29 August 2018.

10. Aronson, R.M.; Admoni, H. Semantic gaze labeling for human-robot shared manipulation. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, Denver, CO, USA, 25–28 June 2019.

11. Admoni, H.; Srinivasa, S. Predicting User Intent through Eye Gaze for Shared Autonomy. In Proceedings of the 2016 AAAI Fall Symposium Series: Shared Autonomy in Research and Practice, Arlington, VA, USA, 17–19 November 2016; Technical Report FS-16-05. Association for the Advancement of Artificial Intelligence: Menlo Park, CA, USA, 2016; pp. 298–303.

12. Andreasen Struijk, L.N.S.; Egsgaard, L.L.; Lontis, R.; Gaihede, M.; Bentsen, B. Wireless intraoral tongue control of an assistive robotic arm for individuals with tetraplegia. J. Neuroeng. Rehabil. 2017, 14, 110.

13. Collinger, J.L.; Wodlinger, B.; Downey, J.E.; Wang, W.; Tyler-Kabara, E.C.; Weber, D.J.; McMorland, A.J.C.; Velliste, M.; Boninger, M.L.; Schwartz, A.B. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 2013, 381, 557–564.

14. Ranganathan, R.; Lee, M.H.; Padmanabhan, M.R.; Aspelund, S.; Kagerer, F.A.; Mukherjee, R. Age-dependent differences in learning to control a robot arm using a body-machine interface. Sci. Rep. 2019, 9, 1960.

15. Ivorra, E.; Ortega, M.; Catalán, J.M.; Ezquerro, S.; Lledó, L.D.; Garcia-Aracil, N.; Alcañiz, M. Intelligent multimodal framework for human assistive robotics based on computer vision algorithms. Sensors 2018, 18, 2408.

16. Jain, S.; Argall, B. Probabilistic Human Intent Recognition for Shared Autonomy in Assistive Robotics. ACM Trans. Hum.-Robot Interact. 2020, 9, 2.

17. Driessen, B.J.F.; Liefhebber, F.; Ten Kate, T.; Van Woerden, K. Collaborative Control of the MANUS Manipulator. In Proceedings of the 9th International Conference on Rehabilitation Robotics, 2005 (ICORR 2005), Chicago, IL, USA, 28 June–1 July 2005; pp. 247–451.

18. Gopinath, D.; Jain, S.; Argall, B.D. Human-in-the-Loop Optimization of Shared Autonomy in Assistive Robotics. IEEE Robot. Autom. Lett. 2017, 2, 247–254.

19. Ka, H.W.; Chung, C.S.; Ding, D.; James, K.; Cooper, R. Performance evaluation of 3D vision-based semi-autonomous control method for assistive robotic manipulator. Disabil. Rehabil. Assist. Technol. 2018, 13, 140–145.

20. Kalaitzakis, M.; Cain, B.; Carroll, S.; Ambrosi, A.; Whitehead, C.; Vitzilaios, N. Fiducial Markers for Pose Estimation. J. Intell. Robot. Syst. 2021, 101, 71.

21. Romero-Ramirez, F.J.; Muñoz-Salinas, R.; Medina-Carnicer, R. Speeded up detection of squared fiducial markers. Image Vis. Comput. 2018, 76, 38–47.

22. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Medina-Carnicer, R. Generation of fiducial marker dictionaries using Mixed Integer Linear Programming. Pattern Recognit. 2016, 51, 481–491.

23. Görner, M.; Haschke, R.; Ritter, H.; Zhang, J. MoveIt! Task Constructor for Task-Level Motion Planning. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 190–196.

24. Coleman, D.; Sucan, I.; Chitta, S.; Correll, N. Reducing the Barrier to Entry of Complex Robotic Software: A MoveIt! Case Study. J. Softw. Eng. Robot. 2014, 5, 3–16.

25. Kuffner, J.J.; LaValle, S.M. RRT-connect: An efficient approach to single-query path planning. In Proceedings of the 2000 ICRA Millennium Conference, IEEE International Conference on Robotics and Automation, Symposia Proceedings (Cat. No.00CH37065), San Francisco, CA, USA, 24–28 April 2000; pp. 995–1001.

26. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

27. Brooke, J. SUS—A quick and dirty usability scale. In Usability Evaluation in Industry; Taylor & Francis: Abingdon, UK, 1996.

28. Devos, H.; Gustafson, K.; Ahmadnezhad, P.; Liao, K.; Mahnken, J.D.; Brooks, W.M.; Burns, J.M. Psychometric properties of NASA-TLX and index of cognitive activity as measures of cognitive workload in older adults. Brain Sci. 2020, 10, 994.

29. Ka, H.W.; Ding, D.; Cooper, R. Three Dimensional Computer Vision-Based Alternative Control Method For Assistive Robotic Manipulator. Int. J. Adv. Robot. Autom. 2016, 1, 1–6.

30. Kadylak, T.; Bayles, M.A.; Galoso, L.; Chan, M.; Mahajan, H.; Kemp, C.C.; Edsinger, A.; Rogers, W.A. A human factors analysis of the Stretch mobile manipulator robot. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2021, 65, 442–446.

31. Cohen, B.J.; Subramanian, G.; Chitta, S.; Likhachev, M. Planning for Manipulation with Adaptive Motion Primitives. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 5478–5485.

| Task | Success Criteria | Teleoperation Actions by User | Autonomous Actions by Robot |
|---|---|---|---|
| Open cabinet | The cabinet door is fully open and stays open. | Move the robot to find the tag on the cabinet. | Grasp the cabinet handle and pull the cabinet open fully. |
| Retrieve cup | The cup stays upright, is firmly held in the gripper, and is lifted above the surface. | Move the robot to find the tag on the cup. | Grasp the cup and lift it up. |
| Fill cup | The jug's dispensing tap is pushed back by the cup, which stays approximately upright. (This subtask was simulated: no water was dispensed, to avoid accidental spills or overflow. Thus, the amount of water and any spill were not considered in the criteria.) | Move the robot (with the cup in hand) to find the tag on the jug. Push the cup against the dispenser tap on the jug to fill it, and then move away from the jug. | Move close to the jug and align the cup with its dispenser tap. |
| Drink | The cup stops at a position feasible for drinking and remains upright during transport. | Move the robot to a drinking position based on individual needs and drink from the cup. | Move the robot to a default drinking position. |
| Place cup on table | The cup is placed on the table and stays upright. | Move the robot back towards the table. | Place the cup back on the table. |

| Autonomous Action | Success Rate | Failure Descriptions (# of Failed Trials) |
|---|---|---|
| Open cabinet (fixed initial gripper-orientation) | 85% | Loose grip (1); unnatural path led to collision when approaching handle (1); failed to find a path to reach the cabinet handle (1) |
| Open cabinet (arbitrary initial gripper-orientation) | 80% | Loose grip (3); unnatural path led to collision when approaching handle (1) |
| Retrieve cup | 85% | Loose grip (1); arm scratched table (1); arm collided with cabinet door (1) |
| Fill cup | 90% | Cup collided with the table (2) |
| Drink | 95% | Unnatural path led to inappropriate cup orientation (1) |
| Place cup on table | 100% | None |
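Every success rate in the autonomous-action table above equals (20 − failures) / 20, which suggests 20 trials per autonomous action. That trial count is an inference from the arithmetic, not a figure stated in this section; the short check below makes the relationship explicit.

```python
# Failed-trial counts per autonomous action, summed from the table above.
FAILURES = {
    "Open cabinet (fixed initial gripper-orientation)": 3,
    "Open cabinet (arbitrary initial gripper-orientation)": 4,
    "Retrieve cup": 3,
    "Fill cup": 2,
    "Drink": 1,
    "Place cup on table": 0,
}

# Assumption inferred from the reported percentages; not stated in the text.
TRIALS_PER_ACTION = 20


def success_rate_percent(failures: int, trials: int = TRIALS_PER_ACTION) -> float:
    """Percentage of trials that succeeded."""
    return 100.0 * (trials - failures) / trials


for action, failures in FAILURES.items():
    print(f"{action}: {success_rate_percent(failures):.0f}%")
```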

| Success rate | Open Cabinet, Tele | Open Cabinet, VGS | Retrieve Cup, Tele | Retrieve Cup, VGS | Fill Cup, Tele | Fill Cup, VGS | Drink, Tele | Drink, VGS | Place Cup, Tele | Place Cup, VGS |
|---|---|---|---|---|---|---|---|---|---|---|
| TP #1 | 80% | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| TP #2 | 60% | 80% | 80% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| TP #3 | 60% | 80% | 100% | 80% | 100% | 100% | 100% | 100% | 100% | 100% |

| Time (s) | Open Cabinet, Tele | Open Cabinet, VGS | Retrieve Cup, Tele | Retrieve Cup, VGS | Fill Cup, Tele | Fill Cup, VGS | Drink, Tele | Drink, VGS | Place Cup, Tele | Place Cup, VGS |
|---|---|---|---|---|---|---|---|---|---|---|
| TP #1 | 84.7 ± 16.6 | 27.8 ± 0.5 | 22.3 ± 10.6 | 27.4 ± 3.6 | 13.9 ± 7.6 | 52.3 ± 8.9 | 36.2 ± 10.1 | 17.2 ± 2.7 | 31.9 ± 13.9 | 12.5 ± 0.0 |
| TP #2 | 116.7 ± 41.4 | 33.1 ± 9.2 | 43.1 ± 7.3 | 23.7 ± 3.0 | 40.3 ± 28.4 | 39.1 ± 3.3 | 44.1 ± 5.5 | 27.7 ± 4.8 | 27.8 ± 6.1 | 10.6 ± 0.7 |
| TP #3 | 57.9 ± 43.4 | 33.9 ± 13.4 | 19.6 ± 3.7 | 25.5 ± 5.8 | 24.7 ± 4.5 | 39.3 ± 8.2 | 29.4 ± 2.6 | 16.2 ± 3.8 | 16.2 ± 1.7 | 12.6 ± 0.1 |

| Mode switches | Open Cabinet, Tele | Open Cabinet, VGS | Retrieve Cup, Tele | Retrieve Cup, VGS | Fill Cup, Tele | Fill Cup, VGS | Drink, Tele | Drink, VGS | Place Cup, Tele | Place Cup, VGS |
|---|---|---|---|---|---|---|---|---|---|---|
| TP #1 | 8.2 ± 2.3 | 0 ± 0 | 4.4 ± 3.6 | 0 ± 0 | 0 ± 0 | 0 ± 0 | 2 ± 0 | 1.2 ± 0.5 | 2.6 ± 1.5 | 1.2 ± 0.5 |
| TP #2 | 16.2 ± 5.3 | 0 ± 0 | 7.2 ± 1.3 | 0 ± 0 | 2.8 ± 2.3 | 0 ± 0 | 7 ± 1.4 | 1.2 ± 0.5 | 4.4 ± 1.5 | 1.2 ± 0.5 |
| TP #3 | 6.0 ± 3.0 | 0 ± 0 | 3.6 ± 0.9 | 0 ± 0 | 2.2 ± 0.5 | 0 ± 0 | 2 ± 0 | 1.2 ± 0.5 | 1.0 ± 0.0 | 1.4 ± 0.6 |

| Participant | Success Rate, Tele | Success Rate, VGS | Time Spent, Tele (s) | Time Spent, VGS (s) | Mode Switch, Tele | Mode Switch, VGS |
|---|---|---|---|---|---|---|
| TP #1 | 80% | 100% | 189.0 ± 16.7 | 137.2 ± 8.8 | 17.2 ± 4.8 | 2.4 ± 0.9 |
| TP #2 | 60% | 80% | 271.9 ± 38.2 | 134.2 ± 13.6 | 37.6 ± 7.9 | 2.4 ± 0.9 |
| TP #3 | 60% | 80% | 147.8 ± 43.7 | 127.5 ± 18.1 | 14.8 ± 3.7 | 2.6 ± 0.9 |
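If each trial timed all five subtasks, the mean total time should equal the sum of the per-task mean times, because the mean of a sum is the sum of the means. The sketch below checks this with values copied from the per-task time table (the one whose TP #1 row begins 84.7 ± 16.6) and from the summary table above. All six totals agree within rounding; the largest gap is TP #2 under teleoperation (272.0 s summed vs. 271.9 s reported). The dictionary layout and tolerance are illustrative choices.

```python
import math

# Per-task mean times (s) in task order: open cabinet, retrieve cup, fill cup, drink, place cup.
PER_TASK_TIME_S = {
    ("TP #1", "Tele"): [84.7, 22.3, 13.9, 36.2, 31.9],
    ("TP #1", "VGS"): [27.8, 27.4, 52.3, 17.2, 12.5],
    ("TP #2", "Tele"): [116.7, 43.1, 40.3, 44.1, 27.8],
    ("TP #2", "VGS"): [33.1, 23.7, 39.1, 27.7, 10.6],
    ("TP #3", "Tele"): [57.9, 19.6, 24.7, 29.4, 16.2],
    ("TP #3", "VGS"): [33.9, 25.5, 39.3, 16.2, 12.6],
}

# Reported overall mean times (s) from the summary table.
REPORTED_TOTAL_S = {
    ("TP #1", "Tele"): 189.0, ("TP #1", "VGS"): 137.2,
    ("TP #2", "Tele"): 271.9, ("TP #2", "VGS"): 134.2,
    ("TP #3", "Tele"): 147.8, ("TP #3", "VGS"): 127.5,
}

# Five per-task means rounded to 0.1 s can shift their sum by up to 0.25 s,
# and rounding the reported total adds up to 0.05 s more.
ROUNDING_TOLERANCE_S = 0.3


def total_matches(key) -> bool:
    """True if the summed per-task means agree with the reported total within rounding."""
    return math.isclose(sum(PER_TASK_TIME_S[key]), REPORTED_TOTAL_S[key], abs_tol=ROUNDING_TOLERANCE_S)


for key in PER_TASK_TIME_S:
    print(key, round(sum(PER_TASK_TIME_S[key]), 1), REPORTED_TOTAL_S[key], total_matches(key))
```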

| Measure | Open Cabinet, Tele | Open Cabinet, VGS | Retrieve Cup, Tele | Retrieve Cup, VGS | Fill Cup, Tele | Fill Cup, VGS | Drink, Tele | Drink, VGS | Place Cup, Tele | Place Cup, VGS |
|---|---|---|---|---|---|---|---|---|---|---|
| Success | 50% | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 50% |
| Time (s) | 132.6 ± 64.0 | 45.4 ± 9.3 | 59.9 ± 13.4 | 53.1 ± 13.1 | 39.1 ± 7.7 | 55.2 ± 12.2 | 50.9 ± 0.0 | 70.1 ± 0.1 | 47.8 ± 1.9 | 58.3 ± 7.7 |
| Mode Switch | 24.5 ± 10.6 | 1.5 ± 0.7 | 10.5 ± 0.7 | 3.0 ± 2.8 | 7.0 ± 1.4 | 4.5 ± 2.1 | 7.5 ± 5.0 | 7 ± 1.4 | 7.5 ± 2.1 | 6.5 ± 0.7 |

| Participant | Success Rate, Tele | Success Rate, VGS | Time Spent, Tele (s) | Time Spent, VGS (s) | Mode Switch, Tele | Mode Switch, VGS |
|---|---|---|---|---|---|---|
| Case | 50% | 50% | 330.3 ± 83.3 | 282.1 ± 24.7 | 57 ± 11.3 | 22.5 ± 2.1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ding, D.; Styler, B.; Chung, C.-S.; Houriet, A. Development of a Vision-Guided Shared-Control System for Assistive Robotic Manipulators. Sensors 2022, 22, 4351. https://doi.org/10.3390/s22124351

Ding D, Styler B, Chung C-S, Houriet A. Development of a Vision-Guided Shared-Control System for Assistive Robotic Manipulators. Sensors. 2022; 22(12):4351. https://doi.org/10.3390/s22124351

Chicago/Turabian Style: Ding, Dan, Breelyn Styler, Cheng-Shiu Chung, and Alexander Houriet. 2022. "Development of a Vision-Guided Shared-Control System for Assistive Robotic Manipulators" Sensors 22, no. 12: 4351. https://doi.org/10.3390/s22124351

APA Style: Ding, D., Styler, B., Chung, C.-S., & Houriet, A. (2022). Development of a Vision-Guided Shared-Control System for Assistive Robotic Manipulators. Sensors, 22(12), 4351. https://doi.org/10.3390/s22124351