3.1. Extraction and Matching of Image Feature Points

A scale invariant feature transform (SIFT) algorithm is mainly composed of four parts: constructing scale space, extracting key points, assigning main directions and generating feature-point descriptors [

22]. The main idea is to filter the extreme points found in the scale space, so as to find the stable feature points. Finally, the local features of the image are extracted around each stable feature point to form a local descriptor and use it in future matching [

23].

Speeded up robust features (SURF) is an efficient variant of SIFT. The principles of SURF feature extraction and SIFT feature extraction are consistent, but the method used for SURF is different from SIFT. The determinant value of Hessian matrix is used as the feature detection of SURF, and the integral graph is used to improve the operation efficiency. The descriptor of SURF is based on the response of a 2D discrete wavelet transform and makes efficient use of the integral graph [

24], which is more efficient and accurate than SIFT in running speed and brightness change; however, SIFT works better in the case of scale and rotation transformation, so the SIFT feature-extraction results and SURF feature-extraction results are fused to learn from each other and increase the number of matching feature points [

20,

21].

After generating the feature descriptor, the nearest neighbor matching algorithm (nearest neighbor—NN) of Euclidean distance is used to complete the rough matching. The Euclidean distance measurement formula is shown in (2).

The process is as follows: each feature vector in image 1 is represented as , and a KD tree is used to search in image 2 to find the distance between all feature points in image 2 and . When the Euclidean distance is less than a threshold, the feature with the smallest distance is taken as the matching feature point. In order to improve the robustness of matching, this paper adds the constraint ratio: nearest neighbor eigenvector and next nearest neighbor distance vector ; when the ratio of nearest neighbor distance to next nearest neighbor distance is less than a given threshold, i.e., , then is the match of , otherwise it is considered that does not match in image 1. In the experiment α = 0.7.

After matching the feature points between pictures, there is often a false matching phenomenon. Then, it is necessary to verify or optimize the feature matching. The feature points with false matching are filtered. The false matching can be eliminated by camera pose estimation. The random sample consensus (RANSAC) algorithm is a random iterative process which separates internal points and noise outliers through user-defined threshold size identification. The algorithm uses the smallest possible initial data set to calculate a model, and uses the consistency number to expand this set. The goal of the algorithm is to fit a model on the data set containing outer points. In the process of each iteration, the feature points acceptable to the constraint model and within the error threshold are called inner points. After running the set number of iterations, the constraint function containing the most inner points will be returned.

3.2. Traditional SFM

In the sparse reconstruction stage, the traditional SFM algorithm is divided into incremental and global. The incremental SFM successively calculates the camera parameters and scene structure. The global SFM calculates the parameters and scene structure of all cameras according to the constraint relationship of all cameras.

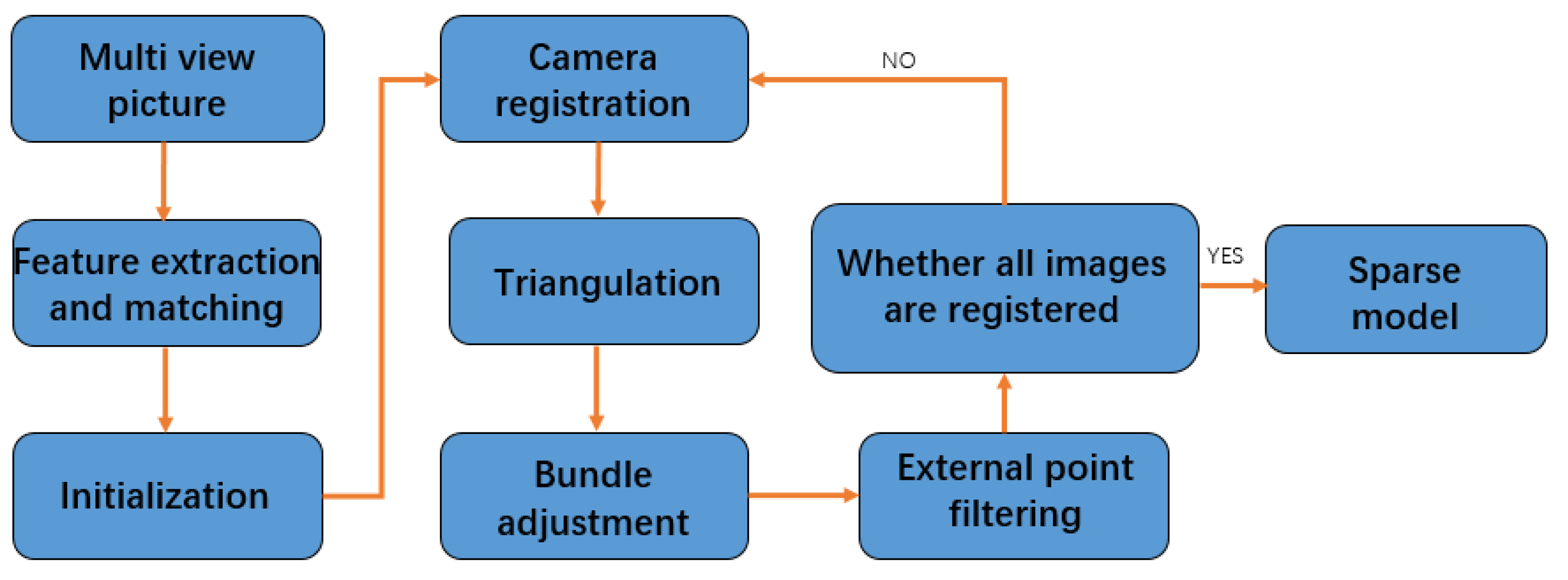

The flow of incremental SFM is shown in the

Figure 1. Firstly, initialize, select a pair of pictures as the initial picture pair, require the image pair to have enough matching points and meet the wide baseline conditions, calculate the rotation and position relationship between its cameras, triangulate the matched feature points to obtain the initial 3D points as the initial model, perform bundle adjustment, and optimize the camera parameters and initial model. Then, add new images, in turn, for registration, according to the corresponding relationship between the 2D points and 3D points in the new image, calculate the parameters of the newly registered camera through a PnP (perspective-n-point) algorithm, triangulate the new feature points, and obtain new 3D points to be added to the original model. In this process, the bundle adjustment is continuously performed to optimize the camera parameters and 3D point coordinates, and the external points are filtered until all images are reconstructed to obtain a sparse 3D model.

The flow chart of an incremental SFM algorithm is shown in the

Figure 1.

The algorithm flow of global SFM is shown in the

Figure 2. After obtaining the corresponding relationship of image feature points, first, calculate the relative rotation relationship between cameras, and use the rotation consistency to remove the wrong feature matching, then calculate the translation matrix between cameras through three-view constraints and register it in the global coordinate system to obtain all camera parameters. Finally, triangulate the 3D points corresponding to feature points, and perform a bundle adjustment to optimize camera parameters and scene structure. The sparse 3D model of the scene is obtained.

The flow chart of a global SFM algorithm is shown in

Figure 2.

Incremental SFM has high robustness, but it runs for a long time. In the case of large scenes, drift may occur due to error accumulation. Global SFM runs quickly and will not drift, but the reconstruction accuracy is low, and the robustness is not high. Here, robustness refers to the ability to resist external influences, such as lighting, whether the object texture is rich, shooting angle, etc. The higher the robustness, the more it can ignore the influence of these external factors for reconstruction; on the contrary, the reconstruction may fail.

3.3. Hybrid SFM

In view of the problems existing in the traditional incremental and global SFM, this paper outlines a hybrid SFM algorithm, which combines the advantages of incremental and global SFM. As shown in the image, the image is divided into multiple subsets: the incremental SFM algorithm is used to recover the camera parameters of the subset in the subset, which has high robustness and high precision. Then, the parameters of all cameras are calculated by using the global SFM algorithm, and the error is spread to each camera. The sparse model of the scene is obtained by triangulation. Finally, the bundle adjustment is performed to optimize the camera parameters and sparse model.

The flow chart of the hybrid SFM algorithm proposed in this paper is shown in

Figure 3.

3.3.1. Image Subset Division

After the preprocessing of image feature extraction and matching an image set , a SIFT feature point set and its corresponding relationship , where is a set of feature correspondence between two images and , each image is associated with a camera. An image set is represented as a camera geometry image , where and are sets of vertices and edges. Image clustering is to divide the image into several camera subgraphs , that is, the image set is divided into multiple subsets, and each image set needs to meet size constraints and integrity constraints.

When the scale of SFM is expanded, due to the limitation of computing power and memory, it is difficult to efficiently reconstruct with incremental or global SFM. Therefore, it is divided into several small sub-problems. The images are clustered to obtain multiple image sets, and there are enough duplicate images between all sets. In order to meet the computational performance and subsequent global camera parameter estimation requirements, each image set needs to meet the size constraints and integrity constraints.

The size constraint requires that the size of each image set is small and similar. Firstly, each image set should be small enough to adapt to the computing resources of the computer and realize efficient incremental SFM calculation. Moreover, the small-scale SFM problem can effectively avoid a lot of time consumption and possible drift caused by continuous beam adjustment.

In order to provide sufficient constraints for the calculation of global camera parameters, we introduce integrity constraints to ensure the connectivity of different subgraph cameras. However, completely preserving the connectivity between cameras will introduce too many duplicate cameras in different sets, and it is difficult to meet the size constraint. Therefore, each camera is defined as a complete set, as shown in the Formula (3).

where

is the number of images of subset

, and

is the number of identical pictures in subset

and subset

. This quantifies that a camera set

is covered by other camera sets, which limits the number of duplicate cameras, and ensures that all camera sets have enough overlapping cameras to completely reconstruct the scene with adjacent sets.

In order to meet the size constraints and integrity constraints at the same time, a graph-based camera clustering algorithm is used. The camera subset is obtained by iteratively running graph segmentation and graph expansion. The steps are as follows.

- (1)

Graph segmentation by recursively dividing the camera geometry image that violates the size constraint into smaller images to meet the size constraint. Starting from the camera image

, the normalized graph cut algorithm is iteratively applied to all subgraphs that do not meet the size constraint; is divided into two balanced subgraphs and until no subgraph violates the size constraint. Generally, image pairs with a large number of matching features have high edge weight in the graph and are unlikely to be cut.

- (2)

The graph extension satisfies the integrity constraint by introducing enough overlapping cameras between adjacent camera sets. The cut edges are arranged in descending order of weight , and is added iteratively and to its associated subgraph or until the subgraph satisfies the integrity constraint. After adding a small number of relevant vertices of discarded edges, it is not difficult to meet the integrity constraint.

After graph expansion, the size constraint may not be satisfied, so iteration between graph segmentation and graph expansion must be performed; when both constraints are satisfied, the iteration ends.

3.3.2. Subset Camera Parameter Calculation

After the image set is divided into multiple subsets, incremental SFM is used to recover the camera parameters of each subset. Firstly, initialize, select a pair of pictures as the initial images, calculate the rotation and position relationship between their cameras, triangulate the feature points to obtain the initial model, then register a new image in turn and triangulate to obtain new 3D points. In this process, bundle adjustment is continuously performed to optimize the camera parameters and 3D points until all images are registered.

In the process of registering a new image, the perspective-n-point (PnP) algorithm can effectively calculate the parameters of the newly registered camera. This algorithm is a method to solve the camera parameters from the correspondence between 3D points and 2D points, in which 3D points are the coordinates of the scene model in the world coordinate system, and 2D points are the points projected onto the image by these 3D points. Therefore, it is necessary to obtain the rotation and position relationship of the camera coordinate system relative to the world coordinate system, and align the newly registered camera with the existing scene model.

When there are three pairs of 3D points and 2D points, it is a P3P problem. As shown in

Figure 4, points A, B and C are the 3D points of the scene in the world coordinate system, and points a, b and c are the corresponding 2D points of the image.

Firstly, the coordinates of points A, B and C in the current camera coordinate system are obtained, then the rotation and position parameters of the camera are calculated according to the 3D point coordinates in the world coordinate system and the 3D point coordinates in the current camera coordinate system. According to the mathematical relationship and mathematical formula derivation, we can obtain Formula (4):

The equations have four sets of solutions, of which only one is suitable, so an additional 3D point is required for verification. According to the values of and , , and can be obtained, and then the coordinates of points A, B and C in the camera coordinate system can be calculated from the camera’s internal parameters. Finally, the rotation and position matrix of the camera are calculated according to the coordinates of points A, B and C in the camera coordinate system and their coordinates in the world coordinate system. At the same time, the selection of a set of appropriate camera external parameters is verified by additional 3D points.

After registering the new camera in the coordinate system of the existing model, the new feature points are triangulated to obtain new 3D points, and the camera parameters and scene model are optimized through bundle adjustment to reduce the re-projection error of the 3D points of the existing model. Finally, the external points are filtered, and the 3D points are connected with their corresponding 2D points. If the maximum included angle is less than 2°, the point is eliminated; the 3D points with excessive re-projection error are also eliminated.

Through the above method, the images are added successively and the camera parameters are restored. When all images are added, the work is ended, so as to obtain the camera parameters of each subset.

3.3.3. Global Camera-Parameter Calculation

After obtaining the camera parameters of each subset, all camera parameters need to be unified into the same coordinate system. The global algorithm calculates the global camera parameters in two steps. First, calculate the global rotation relationship of the camera, and then calculate the global translation relationship of the camera.

The algorithm for calculating the global rotation matrix is designed as follows:

- (1)

Set the rotation matrix of the first camera to ;

- (2)

Construct global camera rotation equation;

- (3)

Solve the global camera rotation matrix by least square method;

- (4)

Obtain the rotation matrix satisfying orthogonality by SVD.

The algorithm for calculating the global translation matrix is designed as follows:

- (1)

Set the scale of the first image subset to ;

- (2)

Set the position of the first camera to ;

- (3)

Construct global camera position equation;

- (4)

Perform convex optimization for global camera position;

- (5)

Convert global camera position to global translation matrix.

The detailed design of the algorithm for solving the global rotation matrix and translation matrix is as follows. Firstly, calculate the global rotation matrix of the camera, obtain the accurate relative rotation matrix

through the incremental formula, convert the relative rotation into the global rotation

, set the rotation matrix of the first camera to

; its relationship is shown in Formula (5).

Generally, the rotation relationship between cameras is greater than the number of cameras. Formula (5) is an overdetermined equation. However, due to the influence of noise, there is usually no solution that accurately meets the above equation, so this problem is solved by the least square method.

Convert the above problem into three sub-problems, as shown in Equation (6).

where,

is column

of

,

. The three sub-problems are solved by the least square method to obtain the global rotation matrix

. Since the rotation matrix also needs to meet the orthogonality, the appropriate rotation matrix

is obtained by singular value decomposition (SVD).

Given the global rotation relationship between cameras, their positions are expressed by a linear equation, as shown in Equation (7).

where

is two cameras

and

for the relative translation relationship between both cameras are from the

k-th camera set, and their scale factor is

. Then, Equation (7) is rewritten as

. The scale factor of all camera sets is expressed as

, representing all camera positions as

, and the linear equations are obtained, as shown in Formula (8).

where,

is a

of the matrix, whose appropriate position is

, and the rest are

,

is a

of the matrix, whose appropriate position of matrix is

and

, the rest is

, the linear equations of all camera sets are put into one equation to obtain Equation (9).

Setting , , the positions of all cameras are obtained by solving the following convex optimization problem.

After obtaining the global parameter relationship of all cameras, for a 3D point, if the number of visible cameras is greater than or equal to 3, the corresponding track is triangulated to obtain the sparse model of the scene. Finally, beam adjustment is performed to optimize the camera parameters and 3D model.

3.3.4. Prediction Results of Hybrid SFM

As the global SFM significantly depends on the robust rotation matrix, only one BA optimization is carried out in the beam adjustment, and the reconstruction speed is very fast, but the sparse reconstruction accuracy is not high; incremental SFM continuously adds images for incremental BA optimization, so the calculated rotation matrix and translation matrix are robust, and the accuracy of sparse model is good. However, due to the accumulated error of incremental multiple BA optimization, scene drift will occur. The hybrid SFM divides the image set into multiple subsets, and incremental SFM is carried out in the subsets, which not only ensures the accuracy of the model, but also avoids the cumulative error caused by adding images many times. After completing the incremental SFM in the subsets, the process of global SFM is carried out, and BA optimization is carried out again to allocate the error to each camera to increase the accuracy of the sparse model.

Here, we assume that, in terms of reconstruction accuracy, the hybrid SFM will show an improvement compared with incremental SFM and global SFM; for large data sets, hybrid SFM will require a shorter reconstruction time than incremental SFM.

3.4. Dense Reconstruction

Taking the camera parameters and sparse model obtained by hybrid SFM as input, first, take each image as the reference image, select the neighborhood image to form a stereo image pair, and then calculate the depth map of the reference image to represent a scene. After the depth map is fused, the dense point cloud of the scene is obtained, which is more convenient to observe a scene.

The MVS algorithm based on depth-map fusion is shown in the

Figure 5.

N images are selected as the neighborhood images of each reference image. In order to ensure the consistency and accuracy of dense matching, these images should have sufficient similarity and provide a large enough baseline. The criteria for selecting neighborhood images are the number of matching feature points in the sparse reconstruction process and the angle between the sparse 3D points and the optical center of the two image cameras. It is required that there are as many matching feature points as possible, and the included angle is large. N field images are selected for the reference image to form a stereo image pair, and the depth map of the reference image is calculated.

Calculating the depth map of the reference image includes two parts, a region generation framework and a matching system. The region generation framework maintains a priority queue Q, which stores candidate matching points, including the position, depth and normal vector of features in the reference image. The matching system takes the candidate matching points as the input to optimize the corresponding depth and normal vector.