Accuracy Improvement of Vehicle Recognition by Using Smart Device Sensors †

Abstract

1. Introduction

- A literature review covers each branch of the vehicle recognition literature, focusing on both the theoretical and practical elements of these works. Each reviewed article is examined for how the smart-device sensor model could benefit its practical utility.

- Data collection, data processing, our deep learning model, and the experiment are detailed.

- Results are analyzed and explained.

- A discussion emphasizing implications and future research is presented, including a warning about the danger of driving while holding a handheld smart device.

2. Literature Review

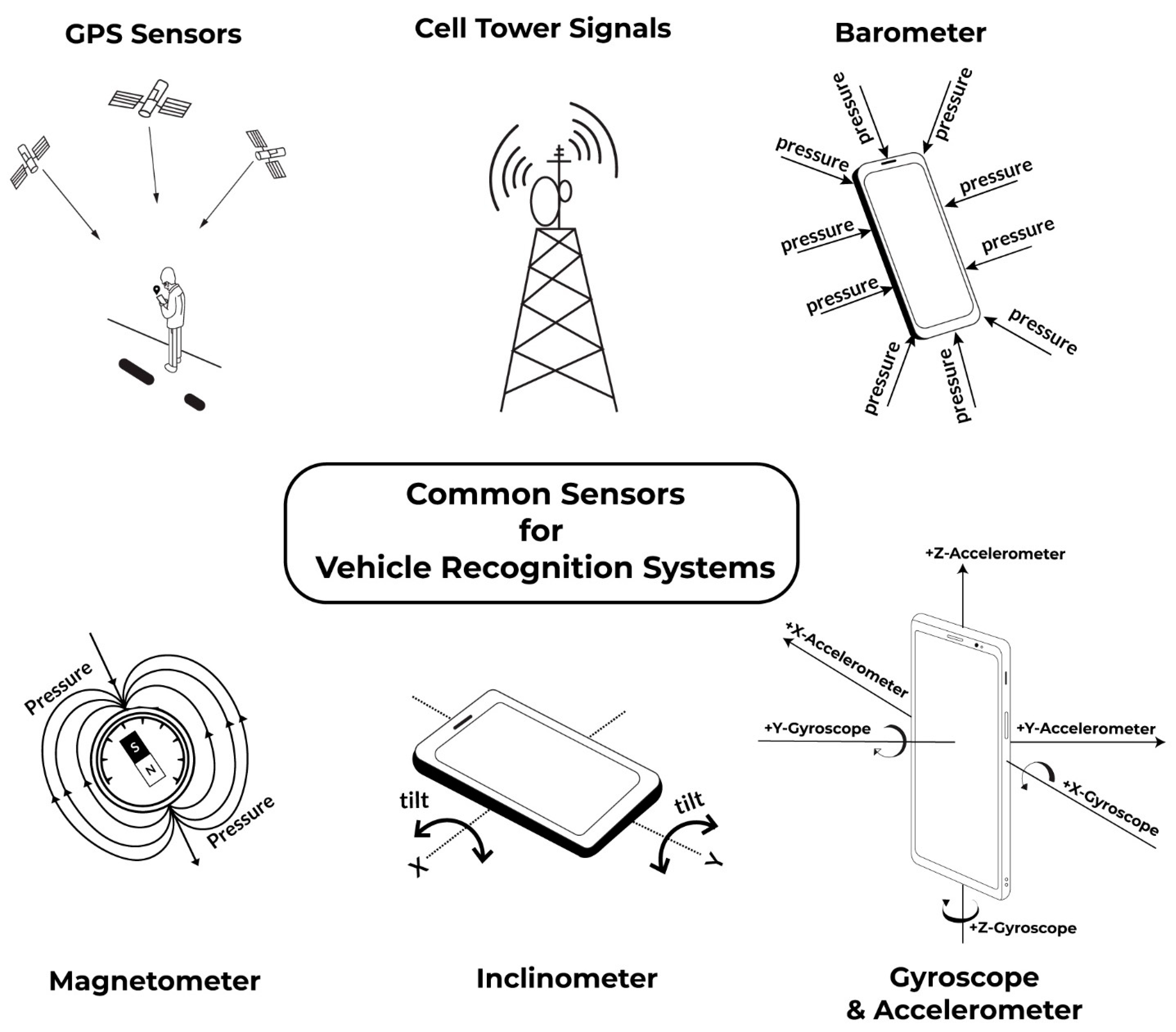

2.1. Vehicle Recognition via Smart Sensors

2.2. Intelligent Transportation Systems (ITS)

3. Methods

3.1. Explanation of Accelerometer and Gyroscope Technologies

3.2. Experimental Setup

3.3. Data Preprocessing

- The raw recordings for the different classes have different lengths, so all of them are trimmed to the same length to reduce bias. The common length is set to 2800 data points, so after trimming each class's data has dimensions (2800, 6), where 6 is the number of data channels.

- The trimmed data are then segmented into snapshots using a sliding window 100 samples long with a stride of 1 sample. After this step, each class's data has dimensions (2700, 100, 6), where 6 is the number of data channels, 100 is the window length (samples per snapshot), and 2700 is the number of snapshots. In other words, each class's data can be viewed as a set of 2700 images, each of dimensions (100, 6). More details about the sliding window are available at https://www.ibm.com/docs/en/mapms/1_cloud?topic=detectors-window-size-scheduling (accessed on 1 April 2022).
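The trimming and windowing steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' code: the names `trim`, `segment`, `TRIM_LENGTH`, `WINDOW`, and `STRIDE` are hypothetical, trimming is assumed to keep the first 2800 samples (the text does not say which part of each recording is kept), and the six channels are assumed to be the three accelerometer axes and three gyroscope axes. A 100-sample window with a stride of 1 over 2800 samples admits 2800 - 100 + 1 = 2701 start positions; the sketch uses `range(0, n - window, stride)`, which drops the last one and reproduces the 2700 snapshots reported above.

```python
import numpy as np

# Values from the text; the constant names themselves are hypothetical.
TRIM_LENGTH = 2800  # samples kept per class
WINDOW = 100        # samples per snapshot
STRIDE = 1          # samples between consecutive window starts


def trim(recording: np.ndarray, length: int = TRIM_LENGTH) -> np.ndarray:
    """Return the first `length` rows of a (samples, channels) array.

    Keeping the *first* samples is an assumption; the text only says the
    recordings are trimmed to a common length.
    """
    if recording.shape[0] < length:
        raise ValueError(f"recording has {recording.shape[0]} samples, need {length}")
    return recording[:length]


def segment(recording: np.ndarray, window: int = WINDOW, stride: int = STRIDE) -> np.ndarray:
    """Cut a (samples, channels) array into overlapping (window, channels) snapshots.

    The exclusive bound `samples - window` omits the final possible window,
    which matches the 2700 snapshots reported for 2800 samples.
    """
    starts = range(0, recording.shape[0] - window, stride)
    return np.stack([recording[s:s + window] for s in starts])


if __name__ == "__main__":
    # Synthetic stand-in for one class: 3000 samples x 6 channels
    # (assumed: 3 accelerometer axes + 3 gyroscope axes).
    rng = np.random.default_rng(0)
    raw = rng.normal(size=(3000, 6))
    snapshots = segment(trim(raw))
    print(snapshots.shape)  # (2700, 100, 6)
```

Because consecutive windows share 99 of their 100 samples, neighbouring snapshots are nearly identical, which matters whenever the snapshots are later divided into training and test sets.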

3.4. Dataset Preparation

4. Results

Hyperparameter values explored:

- Batch size = [16, 32, 64, 512]

- Window size = [50, 100, 150, 200, 250, 500]

- Optimizer = [SGD, RMSprop, Adam, Adadelta, Adagrad, Adamax]

- Learning rate = [0.001, 0.005, 0.01, 0.1]

Training configuration:

- Optimizer = Adam

- Loss function = Categorical cross-entropy

- Batch size = 32

- Epochs = 10
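The training configuration above names categorical cross-entropy as the loss. For N samples and K classes with one-hot targets y and predicted class probabilities ŷ, the standard definition is L = -(1/N) Σ_i Σ_k y_ik log ŷ_ik. The plain-Python sketch below implements that definition for illustration only; the function name and the clipping constant `eps` are illustrative choices rather than details from the paper, and in practice a deep-learning framework's built-in implementation would be used.

```python
import math
from typing import Sequence


def categorical_cross_entropy(
    y_true: Sequence[Sequence[float]],
    y_pred: Sequence[Sequence[float]],
    eps: float = 1e-7,
) -> float:
    """Mean over samples of -sum_k y_true[k] * log(y_pred[k]).

    `y_true` rows are one-hot class labels; `y_pred` rows are predicted
    class probabilities (e.g., softmax outputs).
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        # Clip each probability into [eps, 1 - eps] so log() stays finite.
        total -= sum(t * math.log(min(max(p, eps), 1.0 - eps)) for t, p in zip(t_row, p_row))
    return total / len(y_true)


if __name__ == "__main__":
    labels = [[1, 0, 0], [0, 0, 1]]
    probs = [[0.7, 0.2, 0.1], [0.2, 0.2, 0.6]]
    print(round(categorical_cross_entropy(labels, probs), 4))  # 0.4338
```

With one-hot targets, only the predicted probability of the true class contributes to each sample's term, so the loss reduces to the mean negative log-probability assigned to the correct class.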

Alternative Setup Results

- Cross-weather (data collected with a Samsung S8)
  - Training set: car data collected in clear weather
  - Test set: car data collected in rainy weather
  - Accuracy: 96.32%

- Cross-device and cross-vehicle (data collected in clear weather)
  - Training set: Honda Insight 2013, recorded with a Samsung S8
  - Test set: Toyota Camry 2005, recorded with a Pixel 4 XL
  - Accuracy: 93.2%
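Both alternative setups test on conditions (weather, device, vehicle) that never appear in training. A minimal sketch of such a condition-based split is shown below, assuming each snapshot carries metadata about the recording it came from; the `Snapshot` fields, `cross_domain_split`, and `accuracy` are hypothetical names, not the authors' code. Splitting by recording condition, rather than sampling windows at random, matters here because stride-1 windows overlap by 99 of 100 samples: a random split would place near-duplicate snapshots from the same recording in both sets. The sketch therefore refuses any split in which a recording contributes to both sides.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Snapshot:
    """Metadata for one windowed sample (field names are hypothetical)."""
    recording_id: str  # raw recording the window was cut from
    label: str         # vehicle class to predict
    weather: str
    device: str
    vehicle: str


def cross_domain_split(
    snapshots: Sequence[Snapshot],
    in_train: Callable[[Snapshot], bool],
    in_test: Callable[[Snapshot], bool],
) -> Tuple[List[Snapshot], List[Snapshot]]:
    """Split by recording condition; reject splits that share a recording."""
    train = [s for s in snapshots if in_train(s)]
    test = [s for s in snapshots if in_test(s)]
    shared = {s.recording_id for s in train} & {s.recording_id for s in test}
    if shared:
        raise ValueError(f"recordings in both sets: {sorted(shared)}")
    return train, test


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label sequences must be non-empty and equally long")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    data = [
        Snapshot("r1", "car", "clear", "Samsung S8", "Honda Insight 2013"),
        Snapshot("r2", "car", "rainy", "Samsung S8", "Honda Insight 2013"),
        Snapshot("r3", "car", "clear", "Pixel 4 XL", "Toyota Camry 2005"),
    ]
    # Cross-weather: train on clear-weather S8 data, test on rainy-weather S8 data.
    train, test = cross_domain_split(
        data,
        in_train=lambda s: s.device == "Samsung S8" and s.weather == "clear",
        in_test=lambda s: s.device == "Samsung S8" and s.weather == "rainy",
    )
    print([s.recording_id for s in train], [s.recording_id for s in test])  # ['r1'] ['r2']
```

The cross-device and cross-vehicle setup follows the same pattern, with `in_train` selecting the Honda/Samsung S8 recordings and `in_test` selecting the Toyota/Pixel 4 XL recordings.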

5. Limitations

6. Discussion and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Berger, A. Gizmos Or: The Electronic Imperative: How Digital Devices Have Transformed American Character and Culture; Springer: New York, NY, USA, 2015; Volume 1.

- Gayathri, T. A Survey on Vehicle Health Monitoring and Prediction System. IJCST 2017, 5, 191–193.

- Xia, H.; Qiao, Y.; Jian, J.; Chang, Y. Using smart phone sensors to detect transportation modes. Sensors 2014, 14, 20843–20865.

- Pias, T.S.; Eisenberg, D.; Islam, M.A. Vehicle Recognition Via Sensor Data from Smart Devices. In Proceedings of the 2019 IEEE Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 3–6 October 2019; pp. 96–99.

- Dogan, G.; Sturdivant, J.D.; Ari, S.; Kurpiewski, E. Locomotion-Transportation Recognition via LSTM and GPS Derived Feature Engineering from Cell Phone Data. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 359–362.

- Jeyakumar, J.V.; Lee, E.S.; Xia, Z.; Sandha, S.S.; Tausik, N.; Srivastava, M. Deep Convolutional Bidirectional LSTM Based Transportation Mode Recognition. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, Singapore, 8–12 October 2018; pp. 1606–1615.

- Iabanzhi, L.; Astrakhan, M.; Tyshevskyi, P. Location-based Human Activity Recognition Using Long-term Deep Learning Invariant Mapping. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 363–368.

- Wang, C.; Luo, H.; Zhao, F.; Qin, Y. Combining Residual and LSTM Recurrent Networks for Transportation Mode Detection Using Multimodal Sensors Integrated in Smartphones. IEEE Trans. Intell. Transp. Syst. 2021, 22, 5473–5485.

- Wang, H.; Luo, H.; Zhao, F.; Qin, Y.; Zhao, Z.; Chen, Y. Detecting Transportation Modes with Low-Power-Consumption Sensors Using Recurrent Neural Network. In Proceedings of the 2018 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Guangzhou, China, 8–12 October 2018; pp. 1098–1105.

- Martin, B.D.; Addona, V.; Wolfson, J.; Adomavicius, G.; Fan, Y. Methods for Real-Time Prediction of the Mode of Travel Using Smartphone-Based GPS and Accelerometer Data. Sensors 2017, 17, 2058.

- Shafique, M.A.; Hato, E. Travel Mode Detection with Varying Smartphone Data Collection Frequencies. Sensors 2016, 16, 716.

- Tregel, T.; Gilbert, A.; Konrad, R.; Schäfer, P.; Göbel, S. Examining Approaches for Mobility Detection Through Smartphone Sensors. In Serious Games; Springer: Cham, Switzerland, 2018; pp. 217–228.

- Iskanderov, J.; Guvensan, M.A. Breaking the Limits of Transportation Mode Detection: Applying Deep Learning Approach with Knowledge-Based Features. IEEE Sens. J. 2020, 20, 12871–12884.

- Balabka, D.; Shkliarenko, D. Human activity recognition with AutoML using smartphone radio data. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 346–352.

- Basak, P.; Tasin, S.M.; Sakib, A.H.M.N.; Uddin, S.D.; Ahad, M.A.R. A Windowless Approach to Recognize Various Modes of Locomotion and Transportation. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 353–358.

- Bjerre-Nielsen, A.; Minor, K.; Sapiezynski, P.; Lehmann, S.; Lassen, D.D. Inferring transportation mode from smartphone sensors: Evaluating the potential of Wi-Fi and Bluetooth. PLoS ONE 2020, 15, e0234003.

- Gonzalez-Ortega, D.; Diaz-Pernas, F.J.; Martinez-Zarzuela, M.; Anton-Rodriguez, M. Comparative Analysis of Kinect-Based and Oculus-Based Gaze Region Estimation Methods in a Driving Simulator. Sensors 2020, 21, 26.

- Alotaibi, B. Transportation Mode Detection by Embedded Sensors Based on Ensemble Learning. IEEE Access 2020, 8, 145552–145563.

- Ashwini, N.; Mahalakshmi, S.; Bhagya, G. Study of IoT sensors for vehicle detection. Eur. J. Mol. Clin. Med. 2020, 7, 5349–5356.

- Badii, C.; Difino, A.; Nesi, P.; Paoli, I.; Paolucci, M. Classification of users’ transportation modalities from mobiles in real operating conditions. Multimed. Tools Appl. 2022, 81, 115–140.

- Gjoreski, M.; Janko, V.; Slapničar, G.; Mlakar, M.; Reščič, N.; Bizjak, J.; Drobnič, V.; Marinko, M.; Mlakar, N.; Luštrek, M.; et al. Classical and deep learning methods for recognizing human activities and modes of transportation with smartphone sensors. Inf. Fusion 2020, 62, 47–62.

- Ballı, S.; Sağbaş, E.A. Diagnosis of transportation modes on mobile phone using logistic regression classification. IET Softw. 2018, 12, 142–151.

- Erdelić, M.; Carić, T.; Erdelić, T.; Tišljarić, L. Transition State Matrices Approach for Trajectory Segmentation Based on Transport Mode Change Criteria. Sustainability 2022, 14, 2756.

- Frigo, J.; Rosten, E.; Kulathumani, V.K.; Brennan, S.; Raby, E.Y. Sensor network based vehicle classification and license plate identification system. In Proceedings of the 2009 Sixth International Conference on Networked Sensing Systems (INSS), Pittsburgh, PA, USA, 17–19 June 2009.

- Guvensan, M.A.; Dusun, B.; Can, B.; Turkmen, H.I. A Novel Segment-Based Approach for Improving Classification Performance of Transport Mode Detection. Sensors 2017, 18, 87.

- Kaewunruen, S.; Sresakoolchai, J.; Huang, J.; Harada, S.; Wisetjindawat, W. Human Activity Vibrations. Data 2021, 6, 104.

- Lan, J.; Xiang, Y.; Wang, L.; Shi, Y. Vehicle detection and classification by measuring and processing magnetic signal. Measurement 2011, 44, 174–180.

- Li, C.; Li, S.; Gao, Y.; Guo, J.; Chen, P.; Li, W. Dense CNN and IndRNN for the Sussex-Huawei Locomotion-Transportation Recognition Challenge. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 380–384.

- Lou, L.; Zhang, J.; Xiong, Y.; Jin, Y. A Novel Vehicle Detection Method Based on the Fusion of Radio Received Signal Strength and Geomagnetism. Sensors 2018, 19, 58.

- Lu, D.N.; Nguyen, D.N.; Nguyen, T.H.; Nguyen, H.N. Vehicle Mode and Driving Activity Detection Based on Analyzing Sensor Data of Smartphones. Sensors 2018, 18, 1036.

- Qin, Y.; Luo, H.; Zhao, F.; Zhao, Z.; Jiang, M. A traffic pattern detection algorithm based on multimodal sensing. Int. J. Distrib. Sens. Netw. 2018, 14.

- Ren, Y. Multiple Tree Model Integration for Transportation Mode Recognition. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 385–389.

- Suharjono, A.; Prabowo, Y.; Kadiran, S.A.; Wardihani, E.D. Improving the accuration of train arrival detection based-on vibration signal using accelerometer sensor. AIP Conf. Proc. 2019, 2097, 030087.

- Şengül, G.; Ozcelik, E.; Misra, S.; Damaševičius, R.; Maskeliūnas, R. Fusion of smartphone sensor data for classification of daily user activities. Multimed. Tools Appl. 2021, 80, 33527–33546.

- Fang, S.H.; Liao, H.H.; Fei, Y.X.; Chen, K.H.; Huang, J.W.; Lu, Y.D.; Tsao, Y. Transportation Modes Classification Using Sensors on Smartphones. Sensors 2016, 16, 1324.

- Tan, J.; Lai, J.-H.; Wang, C.-D.; Wang, W.-X.; Zuo, X.-X. A new handwritten character segmentation method based on nonlinear clustering. Neurocomputing 2012, 89, 213–219.

- Thomas, T.; Geurs, K.T.; Koolwaaij, J.; Bijlsma, M. Automatic Trip Detection with the Dutch Mobile Mobility Panel: Towards Reliable Multiple-Week Trip Registration for Large Samples. J. Urban Technol. 2018, 25, 143–161.

- Tian, A.; Zhang, Y.; Chen, H.; Ma, C.; Zhou, S. An Ensemble of ConvTransformer Networks for the Sussex-Huawei Locomotion-Transportation (SHL) Recognition Challenge. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 408–411.

- Wang, H.; Yu, Y.; Cai, Y.; Chen, L.; Chen, X. A Vehicle Recognition Algorithm Based on Deep Transfer Learning with a Multiple Feature Subspace Distribution. Sensors 2018, 18, 4109.

- Wang, L.; Chen, W.; Pan, L.; Gu, T.; Wu, T.; Tao, X.; Lu, J. SpiderWalk: Circumstance-aware Transportation Activity Detection Using a Novel Contact Vibration Sensor. Assoc. Comput. Mach. 2018, 2, 1–30.

- Xiao, G.; Cheng, Q.; Zhang, C. Detecting travel modes from smartphone-based travel surveys with continuous hidden Markov models. Int. J. Distrib. Sens. Netw. 2019, 15.

- Zhu, Y.; Luo, H.; Guo, S.; Zhao, F. Data Mining for Transportation Mode Recognition from Radio-data. In Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and 2021 ACM International Symposium on Wearable Computers, Seattle, WA, USA, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 423–427.

- Vançin, S.; Erdem, E. Implementation of the vehicle recognition systems using wireless magnetic sensors. Sādhanā 2017, 42, 841–854.

- Hodon, M.; Karpis, O.; Sevcik, P.; Kocianova, A. Which Digital-Output MEMS Magnetometer Meets the Requirements of Modern Road Traffic Survey? Sensors 2021, 21, 266.

- Zhao, H.; Tan, C.; Obrien, E.J.; Uddin, N.; Zhang, B. Wavelet-Based Optimum Identification of Vehicle Axles Using Bridge Measurements. Appl. Sci. 2020, 10, 7485.

- Pramanik, P.; Sinhababu, N.; Mukherjee, B.; Padmanaban, S.; Maity, A.; Upadhyaya, B.K.; Holm-Nielsen, J.B.; Choudhury, P. Power Consumption Analysis, Measurement, Management, and Issues: A State-of-the-Art Review of Smartphone Battery and Energy Usage. IEEE Access 2019, 7, 182113–182172.

- Katevas, K.; Haddadi, H.; Tokarchuk, L. SensingKit: Evaluating the Sensor Power Consumption in iOS devices. In Proceedings of the 12th International Conference on Intelligent Environments, London, UK, 14–16 September 2016.

- Koenig, I.; Memon, A.Q.; David, K. Energy consumption of the sensors of Smartphones. In Proceedings of the ISWCS 2013: The Tenth International Symposium on Wireless Communication Systems, Ilmenau, Germany, 27–30 August 2013.

- Khan, I.; Khusro, S.; Ali, S.; Ahmad, J. Sensors are Power Hungry: An investigation of Smartphone Sensors Impact on Battery Power from Lifelogging Perspective. Bahria Univ. J. Inf. Commun. Technol. 2016, 9, 8–19.

- Horvath, Z.; Jenak, I.; Brachmann, F. Battery consumption of smartphone sensors. J. Reliab. Intell. Environ. 2017, 3, 131–136.

- Hakkert, A.S.; Gitelman, V. The effectiveness of red-light cameras: A meta-analysis of the evaluation studies. Road Transp. Res. 2004, 13, 34.

- Juergens, C.; Crespi, M.; Maktav, D.; Goossens, R.; Jacobsen, K.; Nascetti, A. Foreword to the European journal of remote sensing special issue: Urban remote sensing—Challenges and solutions. Eur. J. Remote Sens. 2019, 52 (Suppl. 2), 1.

- Gonzalez-Ortega, D.; Diaz-Pernas, F.J.; Martinez-Zarzuela, M.; Anton-Rodriguez, M. A Physiological Sensor-Based Android Application Synchronized with a Driving Simulator for Driver Monitoring. Sensors 2019, 19, 399.

- Candamo, J.; Shreve, M.; Goldgof, D.B.; Sapper, D.B.; Kasturi, R. Understanding Transit Scenes: A Survey on Human Behavior-Recognition Algorithms. IEEE Trans. Intell. Transp. Syst. 2010, 11, 206–224.

- Tripathi, R.K.; Jalal, A.S.; Agrawal, S.C. Suspicious human activity recognition: A review. Artif. Intell. Rev. 2017, 50, 283–339.

- Chou, J.S.; Liu, C.H. Automated Sensing System for Real-Time Recognition of Trucks in River Dredging Areas Using Computer Vision and Convolutional Deep Learning. Sensors 2021, 21, 555.

- European Parliament and the Council. Directive 2010/40/EU of the European Parliament and of the Council of 7 July 2010. Off. J. Eur. Union 2010, 207, 1–13.

- Gonzalez, B.; Jimenez, F.J.; De Frutos, J. A Virtual Instrument for Road Vehicle Classification Based on Piezoelectric Transducers. Sensors 2020, 20, 4597.

- Chmiel, W.; Dańda, J.; Dziech, A.; Ernst, S.; Kadłuczka, P.; Mikrut, Z.; Pawlik, P.; Szwed, P.; Wojnicki, I. INSIGMA: An intelligent transportation system for urban mobility enhancement. Multimed. Tools Appl. 2016, 75, 10529–10560.

- Lamas-Seco, J.J.; Castro, P.M.; Dapena, A.; Vazquez-Araujo, F.J. SiDIVS: Simple Detection of Inductive Vehicle Signatures with a Multiplex Resonant Sensor. Sensors 2016, 16, 1309.

- Nam, Y.; Nam, Y.-C. Vehicle classification based on images from visible light and thermal cameras. EURASIP J. Image Video Process. 2018, 2018, 5.

- Scheiner, N.; Kraus, F.; Wei, F. Seeing Around Street Corners: Non-Line-of-Sight Detection and Tracking In-the-Wild Using Doppler Radar. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Bedogni, L.; Cabri, G. Identification of Social Aspects by Means of Inertial Sensor Data. Information 2020, 11, 534.

- Chen, Z.; Yu, J.; Zhu, Y.; Chen, Y.; Li, M. D3: Abnormal Driving Behaviors Detection and Identification Using Smartphone Sensors. In Proceedings of the 2015 12th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), Seattle, WA, USA, 22–25 June 2015.

- Ghazal, T.M.; Said, R.A.; Taleb, N. Internet of vehicles and autonomous systems with AI for medical things. Soft Comput. 2021, 1–13.

- Xie, Z.; Li, L.; Xu, X. Real-Time Driving Distraction Recognition Through a Wrist-Mounted Accelerometer. Hum. Factors 2021.

- Fukatsu, R.; Sakaguchi, K. Automated Driving with Cooperative Perception Using Millimeter-Wave V2V Communications for Safe Overtaking. Sensors 2021, 21, 2659.

- Xu, Q.; Wang, B.; Zhang, F.; Regani, D.S.; Wang, F.; Liu, K.J.R. Wireless AI in Smart Car: How Smart a Car Can Be? IEEE Access 2020, 8, 55091–55112.

- Fredianelli, L.; Carpita, S.; Bernardini, M.; Del Pizzo, L.G.; Brocchi, F.; Bianco, F.; Licitra, G. Traffic Flow Detection Using Camera Images and Machine Learning Methods in ITS for Noise Map and Action Plan Optimization. Sensors 2022, 22, 1929.

- Anguita, D.; Ghio, A.; Oneto, L. Energy Efficient Smartphone-Based Activity Recognition using Fixed-Point Arithmetic. J. Univers. Comput. Sci. 2013, 19, 1295–1314.

- Ahmed, N.; Rafiq, J.I.; Islam, M.R. Enhanced Human Activity Recognition Based on Smartphone Sensor Data Using Hybrid Feature Selection Model. Sensors 2020, 20, 317.

- Chang, W.C.; Cho, C.W. Online boosting for vehicle detection. IEEE Trans. Syst. Man Cybern. Part B 2010, 40, 892–902.

- Sun, Z.; Bebis, G.; Miller, R. Monocular precrash vehicle detection: Features and classifiers. IEEE Trans. Image Process. 2006, 15, 2019–2034.

- Arabi, S.; Haghighat, A.; Sharma, A. A deep-learning-based computer vision solution for construction vehicle detection. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 753–767.

- Lowe, S.A.; Olaighin, G. Monitoring human health behaviour in one’s living environment: A technological review. Med. Eng. Phys. 2014, 36, 147–168.

- Heng, X.; Wang, Z.; Wang, J. Human activity recognition based on transformed accelerometer data from a mobile phone. Int. J. Commun. Syst. 2016, 29, 1981–1991.

- Huang, E.J.; Onnela, J.P. Augmented Movelet Method for Activity Classification Using Smartphone Gyroscope and Accelerometer Data. Sensors 2020, 20, 3706.

- Voicu, R.A.; Dobre, C.; Bajenaru, L.; Ciobanu, R.I. Human Physical Activity Recognition Using Smartphone Sensors. Sensors 2019, 19, 458.

- Hasan, M.; Nishat-Anzum, R.; Yasmin, S.; Pias, T. Fine-Grained Emotion Recognition from EEG Signal Using Fast Fourier Transformation and CNN. In Proceedings of the 2021 Joint 10th International Conference on Informatics, Electronics & Vision (ICIEV) and 2021 5th International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 16–20 August 2021.

- Vieyra, R. Vieyra Software. Available online: https://www.vieyrasoftware.net/ (accessed on 16 October 2021).

- Khamparia, A.; Singh, K. A systematic review on deep learning architectures and applications. Expert Syst. 2019, 36, e12400.

- Livieris, I.; Pintelas, E.; Pintelas, P. A CNN–LSTM model for gold price time-series forecasting. Neural Comput. Appl. 2020, 32, 17351–17360.

- Khan, Z.; Hussain, T.; Ullah, A.; Rho, S.; Lee, M.; Baik, S. Towards Efficient Electricity Forecasting in Residential and Commercial Buildings: A Novel Hybrid CNN with a LSTM-AE based Framework. Sensors 2020, 20, 1399.

- Apu, M.; Akter, M.; Lubna, T.; Helaly; Pias, T. ECG Arrhythmia Classification Using 1D CNN Leveraging the Resampling Technique and Gaussian Mixture Model. In Proceedings of the 2021 Joint 10th International Conference on Informatics, Electronics & Vision (ICIEV) and 2021 5th International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Kitakyushu, Japan, 16–20 August 2021.

- Pias, T.; Kabir, R.; Eisenberg, D.; Ahmed, N.; Islam, M. Gender Recognition by Monitoring Walking Patterns via Smartwatch Sensors. In Proceedings of the 2019 IEEE Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 3–6 October 2019; pp. 220–223.

- Bo, C.; Jian, X.; Jung, T.; Han, J.; Li, X.-Y.; Mao, X.; Wang, Y. Detecting Driver’s Smartphone Usage via Nonintrusively Sensing Driving Dynamics. IEEE Internet Things J. 2017, 4, 340–350.

| Sensor | Technical Function | Articles Using Sensor for Vehicle Recognition |
|---|---|---|
| Accelerometer and/or gyroscope only | Equally or more cost-effective than our study, using only accelerometers and/or gyroscopes | Pias et al., 2020 [4]; Suharjono et al., 2019 [33]; Kaewunruen et al., 2021 [26]; Fang et al., 2016 [35]; Şengül et al., 2021 [34] |
| Accelerometer | Measures linear acceleration, directional movement, and three-dimensional object orientation or stationing, as well as changes in the ambient environment | Xia et al., 2014 [3]; Alotaibi, 2020 [18]; Badii et al., 2021 [20]; Gjoreski et al., 2021 [21]; Erdelić et al., 2022 [23]; Guvensan et al., 2017 [25]; Iskanderov and Guvensan, 2020 [13]; Jeyakumar et al., 2018 [6]; Kaewunruen et al., 2021 [26]; Martin et al., 2017 [10]; Şengül et al., 2021 [34]; Shafique and Hato, 2020 [11]; Tregel et al., 2018 [12]; Wang et al., 2018 [40]; Wang et al., 2021 [8]; Xiao et al., 2019 [41]; Lu et al., 2018 [30]; Suharjono et al., 2019 [33]; Thomas et al., 2018 [37]; Qin et al., 2018 [31] |
| Gyroscope | Measures angular velocity (rate of rotation) about three axes, capturing rotational motion and vibration | Alotaibi, 2020 [18]; Gjoreski et al., 2021 [21]; Ballı and Sağbaş, 2017 [22]; Erdelić et al., 2022 [23]; Guvensan et al., 2017 [25]; Iskanderov and Guvensan, 2020 [13]; Jeyakumar et al., 2018 [6]; Şengül et al., 2021 [34]; Wang et al., 2018 [40]; Wang et al., 2021 [8]; Qin et al., 2018 [31]; Lu et al., 2018 [30] |
| GPS | Provides the user's geographic location and a timestamp | Xia et al., 2014 [3]; Badii et al., 2021 [20]; Balabka and Shkliarenko, 2021 [14]; Bjerre-Nielsen et al., 2020 [16]; Dogan et al., 2021 [5]; Iabanzhi et al., 2021 [7]; Li et al., 2021 [28]; Martin et al., 2017 [10]; Ren, 2021 [32]; Tregel et al., 2018 [12]; Tian et al., 2021 [38]; Thomas et al., 2018 [37]; Xiao et al., 2019 [41]; Zhu et al., 2021 [42]; Shafique and Hato, 2020 [11] |
| Magnetometer | Measures magnetic signals resulting from changes in the ambient magnetic field | González et al., 2020 [17]; Gjoreski et al., 2021 [21]; Ballı and Sağbaş, 2017 [22]; Erdelić et al., 2022 [23]; Guvensan et al., 2017 [25]; Iskanderov and Guvensan, 2020 [13]; Jeyakumar et al., 2018 [6]; Lou et al., 2018 [29]; Wang et al., 2018 [40]; Wang et al., 2021 [8]; Lu et al., 2018 [30]; Lan et al., 2010 [27]; Qin et al., 2018 [31] |
| Wi-Fi sensor | Contains the user's identification and Wi-Fi signal strength | Bjerre-Nielsen et al., 2020 [16]; Dogan et al., 2021 [5]; Iabanzhi et al., 2021 [7]; Li et al., 2021 [28]; Tian et al., 2021 [38]; Thomas et al., 2018 [37]; Ren, 2021 [32]; Xiao et al., 2019 [41]; Zhu et al., 2021 [42] |
| Gravity sensor | Measures gravitational force | Erdelić et al., 2022 [23]; Jeyakumar et al., 2018 [6] |
| Barometer | Measures ambient (atmospheric) pressure | Wang et al., 2018 [40]; Qin et al., 2018 [31] |
| Bluetooth sensor | Contains user identification, timestamp, and signal strength | Bjerre-Nielsen et al., 2020 [16] |

| Classifier | Accuracy |
|---|---|
| Logistic Regression | 45.36% |
| Naive Bayes | 58.13% |
| SVM | 76.12% |
| XGBoost | 84.73% |
| CNN | 98% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pias, T.S.; Eisenberg, D.; Fresneda Fernandez, J. Accuracy Improvement of Vehicle Recognition by Using Smart Device Sensors. Sensors 2022, 22, 4397. https://doi.org/10.3390/s22124397

Pias TS, Eisenberg D, Fresneda Fernandez J. Accuracy Improvement of Vehicle Recognition by Using Smart Device Sensors. Sensors. 2022; 22(12):4397. https://doi.org/10.3390/s22124397

Chicago/Turabian Style: Pias, Tanmoy Sarkar, David Eisenberg, and Jorge Fresneda Fernandez. 2022. "Accuracy Improvement of Vehicle Recognition by Using Smart Device Sensors" Sensors 22, no. 12: 4397. https://doi.org/10.3390/s22124397