An Improved MobileNet Network with Wavelet Energy and Global Average Pooling for Rotating Machinery Fault Diagnosis

Abstract

1. Introduction

- The method processes one-dimensional vibration signals directly, with no separate step to convert them into two-dimensional representations. Deep learning is thereby applied to equipment fault diagnosis in an end-to-end manner.

- Introducing a wavelet convolution layer and an energy pooling layer improves the model's adaptive feature extraction and its robustness, giving good noise immunity.

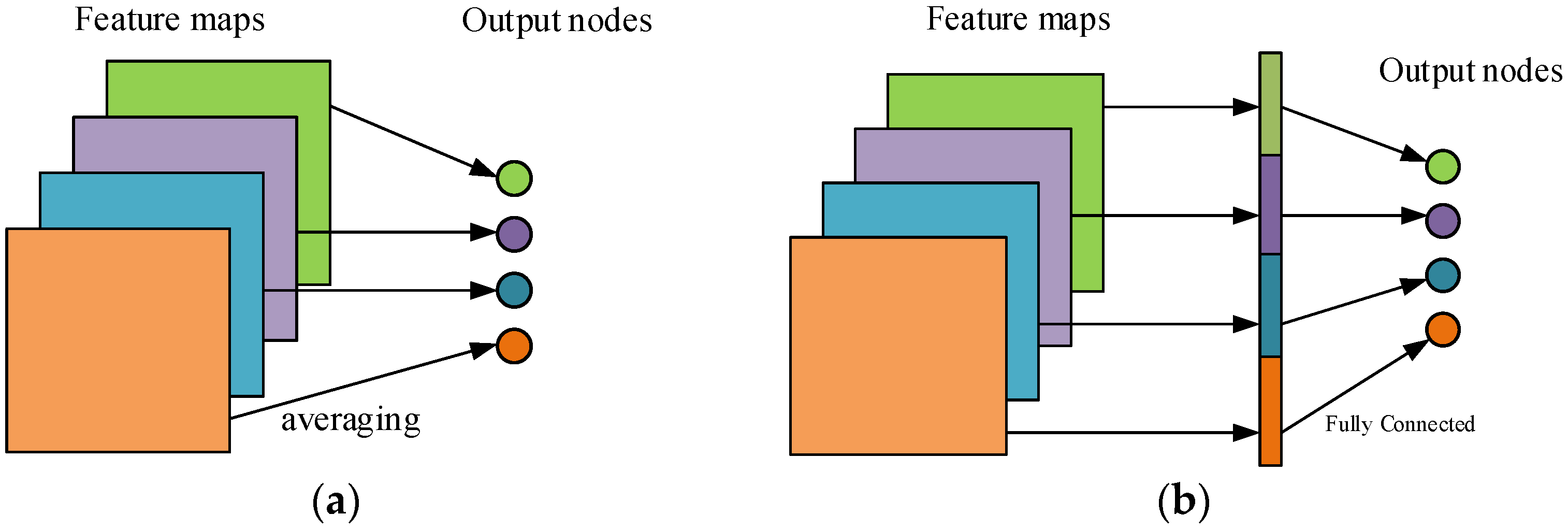

- The effect of using a fully connected layer for fusion and classification on the whole network is analyzed. Replacing the fully connected layer with global average pooling (GAP) reduces the number of model parameters while maintaining accuracy.

- The model is deployed on a Raspberry Pi, a lightweight embedded system, to verify that the method is applicable on resource-constrained embedded platforms. This offers a feasible approach to real-time online condition monitoring of key equipment on such systems.
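The parameter saving claimed for GAP can be illustrated with a minimal NumPy sketch. It uses the final feature-map size listed in the layer table (256 × 6) and compares GAP, which has no trainable parameters, with a flatten-plus-fully-connected head. The helper names (`global_average_pool`, `dense_head_params`) are hypothetical, and the 256 × 10 size for the ten-class bearing model is an assumption, not a value stated in the paper.

```python
import numpy as np

def global_average_pool(features: np.ndarray) -> np.ndarray:
    """Average each channel over the length axis: (length, channels) -> (channels,)."""
    return features.mean(axis=0)

def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D vector."""
    shifted = logits - logits.max()
    exp = np.exp(shifted)
    return exp / exp.sum()

def dense_head_params(length: int, channels: int, classes: int) -> int:
    """Weights plus biases of a flatten -> fully connected classifier (assumed head)."""
    return length * channels * classes + classes

rng = np.random.default_rng(0)
features = rng.standard_normal((256, 6))   # stand-in for the last feature map
probs = softmax(global_average_pool(features))  # GAP adds no trainable parameters

print(probs.shape)                       # (6,)
print(dense_head_params(256, 6, 6))      # 9222
print(dense_head_params(256, 10, 10))    # 25610 (assumes a 256 x 10 final map)
```

Under these assumptions the two counts equal the differences between the fully connected and GAP variants in the results tables (11,219 − 1997 = 9222 and 27,647 − 2037 = 25,610). This is consistent with a flatten-plus-dense head but does not confirm how the authors built it.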

2. Theoretical Background

2.1. Wavelet Convolution

2.2. Energy Pooling

2.3. MobileNet Network

2.4. Global Average Pooling (GAP)

3. The Proposed Fault Diagnosis Method

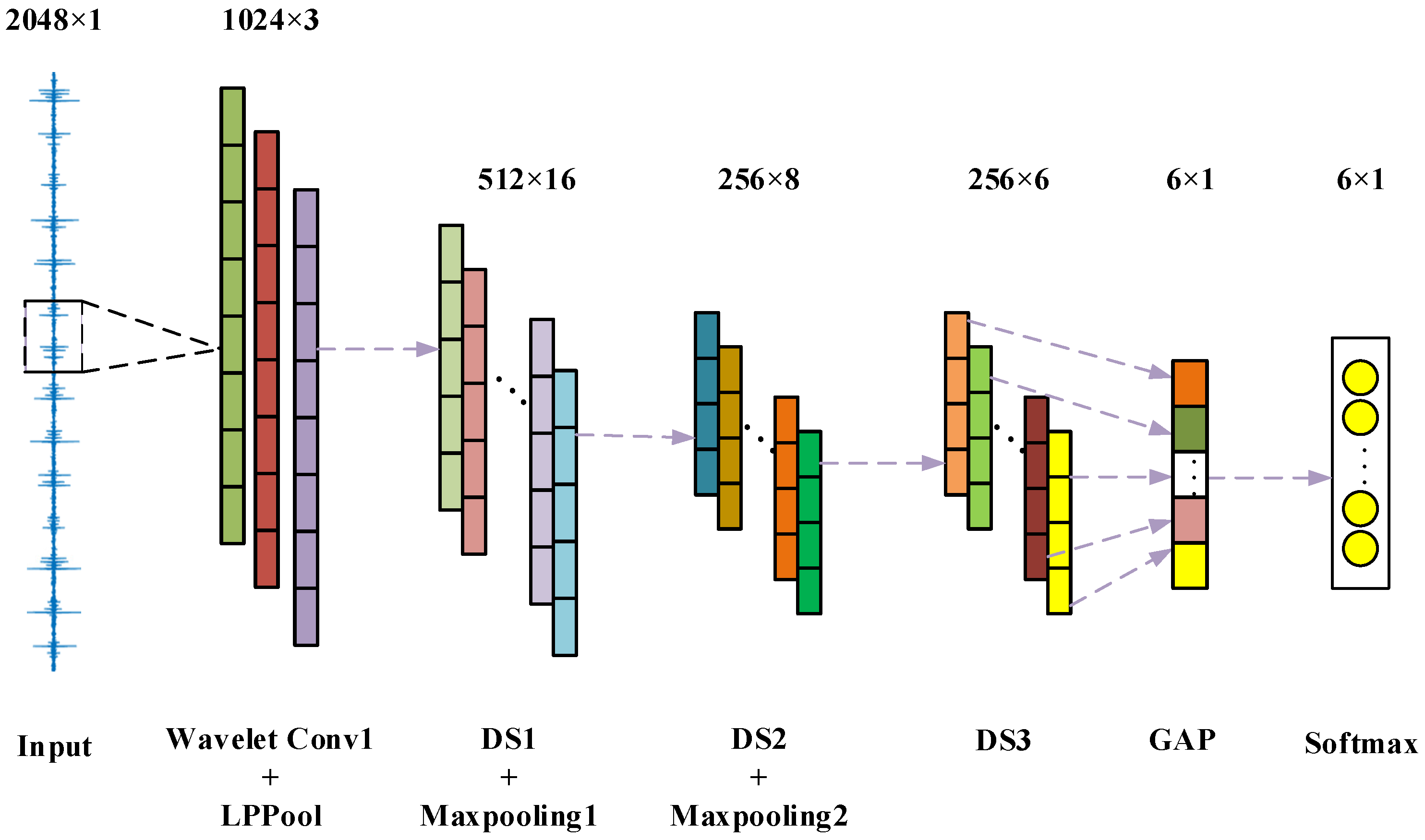

3.1. 1D-WL-G-MN Model Structure

3.2. Data Preprocessing

3.3. Model Training

4. Experimental Analysis and Discussion

4.1. Experimental Environment

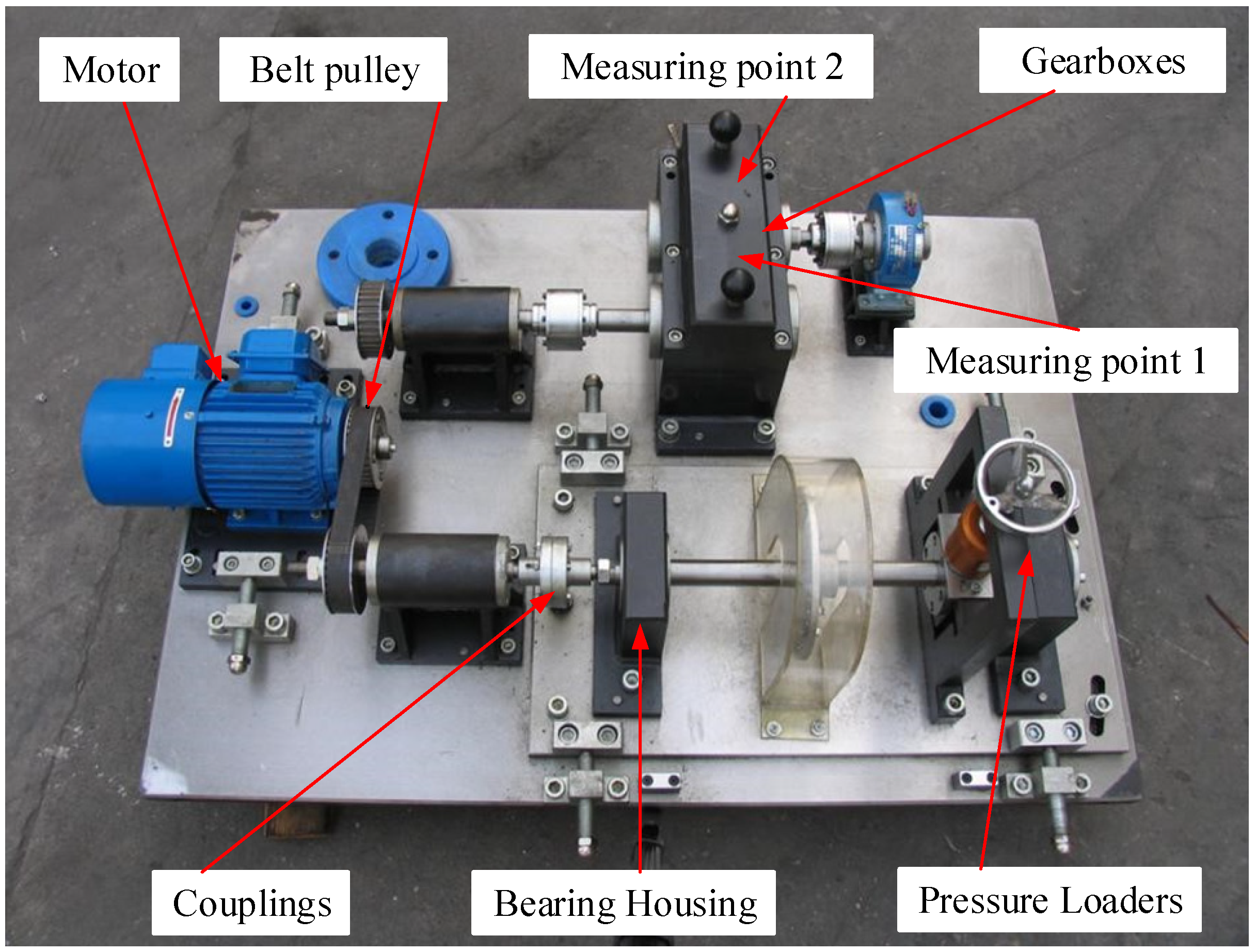

4.2. Gearbox Experiment of QPZZ-II Fault Simulation Test Bench

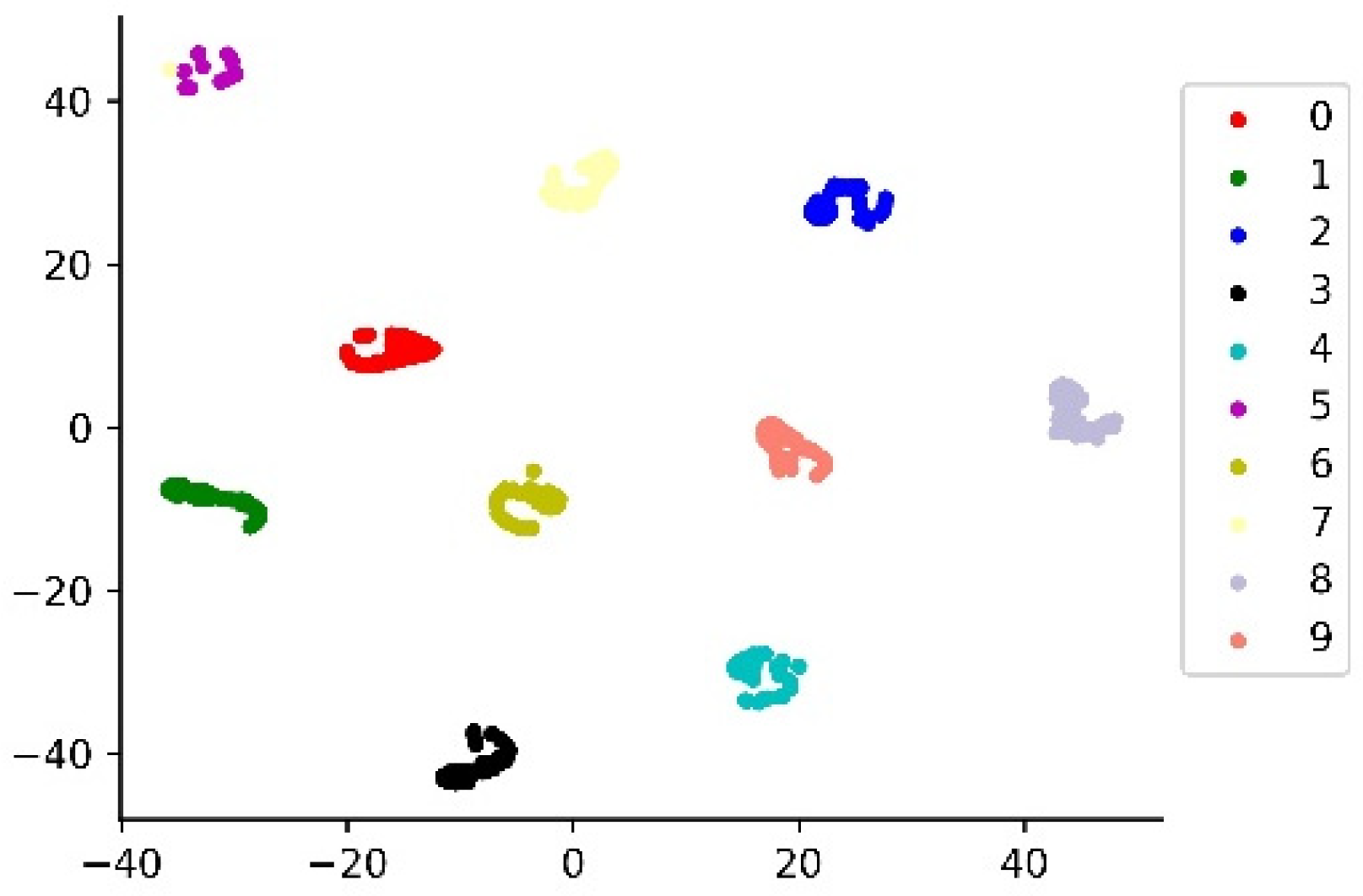

4.3. Case Western Reserve University Bearing Experiment

4.4. End-to-End Fault Diagnosis Application

5. Conclusions, Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Layer | Kernel Size (Length × Width) | Input Size (Length × Width) | Activation Function | Output Size (Length × Width) |
|---|---|---|---|---|
| Wavelet-Con1 | 55 × 1 | 2048 × 1 | ReLU6 | 2048 × 3 |
| LPPool | 2 × 1 | 2048 × 3 | - | 1024 × 3 |
| DW1 | 5 × 1 | 1024 × 3 | ReLU6 | 1024 × 16 |
| Maxpooling2 | 2 × 1 | 1024 × 16 | - | 512 × 16 |
| DW2 | 5 × 1 | 512 × 16 | ReLU6 | 512 × 8 |
| Maxpooling3 | 2 × 1 | 512 × 8 | - | 256 × 8 |
| DW3 | 5 × 1 | 256 × 8 | ReLU6 | 256 × 6 |
| GAP1 | - | 256 × 6 | - | 6 × 1 |
| - | - | 6 × 1 | Softmax | 6 × 1 |
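The first two rows of the table can be traced with a short NumPy sketch: a bank of three wavelet kernels of length 55 applied with same-length padding, a ReLU6 activation, and LP pooling with a window of 2. The wavelet family (a real Morlet-like kernel), the three scale values, and the pooling exponent p = 2 (the value that makes LP pooling an energy-style pooling) are assumptions for illustration; the table does not specify them.

```python
import numpy as np

def morlet_kernel(length: int, scale: float) -> np.ndarray:
    """Real Morlet-like wavelet sampled on `length` points (assumed wavelet family)."""
    t = np.linspace(-1.0, 1.0, length) / scale
    return np.cos(5.0 * t) * np.exp(-0.5 * t ** 2)

def wavelet_conv(signal: np.ndarray, scales, kernel_len: int = 55) -> np.ndarray:
    """One output channel per scale; 'same' mode keeps the input length."""
    channels = [
        np.convolve(signal, morlet_kernel(kernel_len, s), mode="same")
        for s in scales
    ]
    return np.stack(channels, axis=1)  # shape: (length, n_scales)

def relu6(x: np.ndarray) -> np.ndarray:
    """ReLU capped at 6."""
    return np.clip(x, 0.0, 6.0)

def lp_pool(x: np.ndarray, kernel: int = 2, p: float = 2.0) -> np.ndarray:
    """LP pooling over non-overlapping windows along the length axis."""
    length, channels = x.shape
    trimmed = x[: (length // kernel) * kernel]
    windows = trimmed.reshape(length // kernel, kernel, channels)
    return (np.abs(windows) ** p).sum(axis=1) ** (1.0 / p)

rng = np.random.default_rng(0)
signal = rng.standard_normal(2048)          # stand-in for one vibration sample
features = relu6(wavelet_conv(signal, scales=(0.2, 0.5, 1.0)))  # hypothetical scales
pooled = lp_pool(features)

print(features.shape, pooled.shape)  # (2048, 3) (1024, 3)
```

In a trained network the wavelet scales would be learned rather than fixed, so this sketch only reproduces the shapes in the table, not the learned behavior.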

| Parameter | Value |
|---|---|
| Training set : test set ratio | 7:3 |
| Optimizer | Adam |
| Batch size | 70 |
| Learning rate | 0.001 |
| Loss function | Cross-entropy loss |
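As a rough illustration of what these settings imply, the sketch below applies a 7:3 split and a batch size of 70 to the two dataset sizes reported in the sample tables (6 × 1000 gearbox samples and 10 × 200 bearing samples). The random shuffle, the seed, and the helper name `split_indices` are assumptions; the paper does not say how the split was drawn.

```python
import math
import random

def split_indices(n_samples: int, train_ratio: float = 0.7, seed: int = 0):
    """Shuffle sample indices and split them into train and test parts (assumed random split)."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_ratio)
    return indices[:n_train], indices[n_train:]

BATCH_SIZE = 70  # from the training-parameter table

for name, n_samples in (("gearbox", 6 * 1000), ("bearing", 10 * 200)):
    train_idx, test_idx = split_indices(n_samples)
    batches_per_epoch = math.ceil(len(train_idx) / BATCH_SIZE)
    print(name, len(train_idx), len(test_idx), batches_per_epoch)
# gearbox 4200 1800 60
# bearing 1400 600 20
```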

| Fault Type | Number of Samples | Sample Length | Label |
|---|---|---|---|
| Normal | 1000 | 2048 | 0 |
| Local wear | 1000 | 2048 | 1 |
| Tooth profile | 1000 | 2048 | 2 |
| Broken gear teeth | 1000 | 2048 | 3 |
| Tooth root fracture | 1000 | 2048 | 4 |
| Pitting | 1000 | 2048 | 5 |
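Fixed-length samples like these are commonly cut from a long vibration record with a sliding window. The sketch below shows one way to do that in NumPy; the non-overlapping stride, the synthetic record, and the function name `segment` are assumptions, since the table gives only the sample length and count, not how the windows were extracted.

```python
import numpy as np

def segment(signal: np.ndarray, window: int = 2048, stride: int = 2048) -> np.ndarray:
    """Cut a 1-D record into windows of `window` points taken every `stride` points."""
    n_windows = (len(signal) - window) // stride + 1
    starts = stride * np.arange(n_windows)[:, None]   # arange of a value <= 0 is empty
    idx = starts + np.arange(window)[None, :]
    return signal[idx]                                # shape: (n_windows, window)

record = np.random.default_rng(0).standard_normal(1000 * 2048)  # synthetic stand-in
samples = segment(record)
labels = np.full(len(samples), 1)  # e.g. label 1 for a "Local wear" record

print(samples.shape, labels.shape)  # (1000, 2048) (1000,)
```

A stride smaller than the window would yield overlapping samples from a shorter record, which is a frequent choice when raw data are limited.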

| No. | Network | Description | Main Features |
|---|---|---|---|
| 1 | 1D-CNN | One Dimensional-Convolutional Neural Network | 1D standard convolutional neural network |
| 2 | 1D-WL-F-MN | One Dimensional-Wavelet LPPool-FC-MobileNet | Network with wavelet convolution kernel and energy pooling in the first layer and a fully connected layer in the last layer |
| 3 | 1D-C-F-MN | One Dimensional-CNN-FC-MobileNet | Network with standard convolutional kernel and maximum pooling in the first layer and a fully connected layer in the last layer |
| 4 | 1D-C-G-MN | One Dimensional-CNN-GAP-MobileNet | Network with standard convolutional kernel and maximum pooling in the first layer and a global average pooling layer in the last layer |
| 5 | SVM | - | - |

| Model | Accuracy (%) | Number of Parameters | Inference Time (s) |
|---|---|---|---|
| 1D-WL-G-MN | 99.94 | 1997 | 0.898 |
| 1D-WL-F-MN | 99.28 | 11,219 | 1.380 |
| 1D-CNN | 99.57 | 21,776 | 2.341 |
| 1D-C-G-MN | 98.25 | 1197 | 0.910 |
| 1D-C-F-MN | 99.13 | 11,219 | 1.351 |
| SVM | 41.27 | - | - |
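To make the comparison concrete, the relative savings of 1D-WL-G-MN can be worked out directly from the table above. Against its fully connected counterpart 1D-WL-F-MN:

$$
1 - \frac{1997}{11219} \approx 0.822, \qquad 1 - \frac{0.898}{1.380} \approx 0.349
$$

and against the standard 1D-CNN:

$$
1 - \frac{1997}{21776} \approx 0.908, \qquad 1 - \frac{0.898}{2.341} \approx 0.616
$$

That is, roughly 82% fewer parameters and 35% less inference time than the fully connected variant, and roughly 91% fewer parameters and 62% less inference time than the 1D-CNN, for the values reported here.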

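| Test Subject | Load | Speed (r/min) | Number of Samples | Sample Length | Fault Type | Fault Diameter (in) | Label |
|---|---|---|---|---|---|---|---|
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | Normal | 0 | 0 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | IF | 0.007 | 1 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | IF | 0.014 | 2 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | IF | 0.021 | 3 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | OF | 0.007 | 4 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | OF | 0.014 | 5 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | OF | 0.021 | 6 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | BF | 0.007 | 7 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | BF | 0.014 | 8 |
| 6205-2RS JEM SKF | 2 hp | 1750 | 200 | 2048 | BF | 0.021 | 9 |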

| Model | Accuracy (%) | Number of Parameters | Inference Time (s) |
|---|---|---|---|
| 1D-WL-G-MN | 100.00 | 2037 | 0.886 |
| 1D-WL-F-MN | 100.00 | 27,647 | 1.393 |
| 1D-CNN | 99.61 | 39,932 | 2.459 |
| 1D-C-G-MN | 99.52 | 2037 | 0.894 |
| 1D-C-F-MN | 99.33 | 27,647 | 1.350 |
| SVM | 89.00 | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, F.; Liu, C.; Yang, J.; Wang, S. An Improved MobileNet Network with Wavelet Energy and Global Average Pooling for Rotating Machinery Fault Diagnosis. Sensors 2022, 22, 4427. https://doi.org/10.3390/s22124427
