Recognition Method of Knob Gear in Substation Based on YOLOv4 and Darknet53-DUC-DSNT

Abstract

:1. Introduction

- Firstly, the quality of images collected in the actual scene of the substation has different levels. The main reason is that the poor lighting conditions cause some images to have a low signal–noise ratio (SNR), which affects the image extraction features. As shown in Figure 1c,d the image in (c) is evident due to the excellent lighting conditions, and the image features are easier to extract. In contrast, it is already challenging to see the features of the knob in (d) due to poor lighting conditions.

- Secondly, many oblique views are in the images collected from the substation. The knob will be deformed to a certain extent, resulting in inaccurate feature extraction and weak spatial generalization ability. Figure 2 shows the original image collected from the substation. There are three knobs in the same image, of which (a) and (b) are oblique views, and (c) is a front view. Experiments show that if we use the two-stage gear detection model in the article [21] which first locates the knob area and then directly classifies the knob, the model will have a high probability of misidentifying (a) and (b) as the upper left direction, the direction of (a) is up, and the direction of (b) is to the left.

- Thirdly, due to the variety of knobs, the spatial distribution of key points of each kind of knob is different, as shown in Figure 3. This results in a relatively weak spatial generalization ability of the trained model. Even if the regression coordinate error is only a few pixels, it will still cause misjudgment of the knob gear.

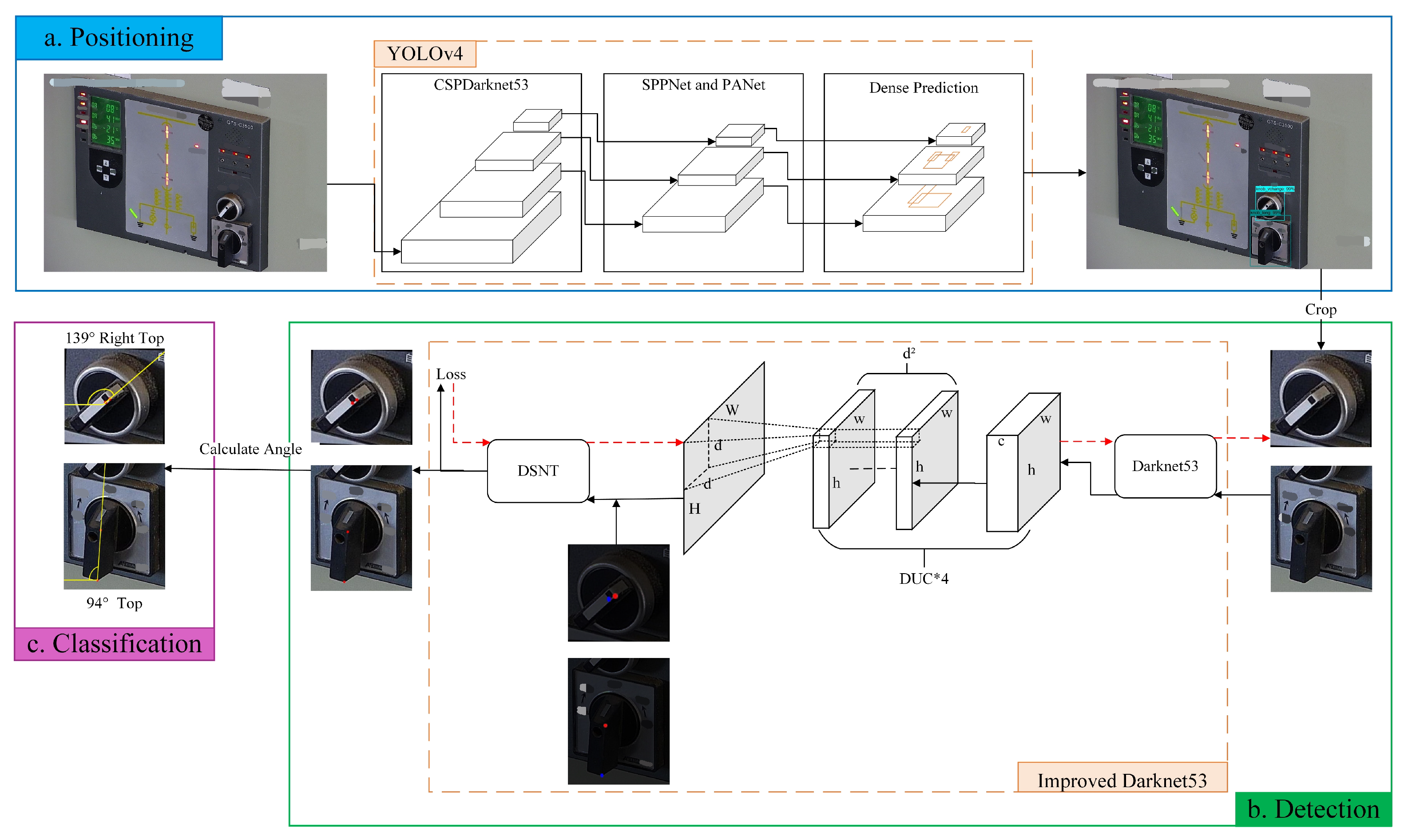

- We propose a three-stage knob gear detection method YOLOv4 and Darknet53-DUC-DSNT model for the first time and apply key point detection of deep learning to the knob gear detection for the first time, and the results are more accurate than the two-stage detection.

- We combine the DUC structure to solve the loss of detail information due to low image SNR.

- We use the DSNT structure to solve the problem of the key point coordinate regression deviation and weak spatial generalization ability caused by image squint and different feature distributions of key points.

2. Related Work

3. Knob Gear Recognition Model

3.1. The Knob Area Positioning Based on YOLOv4

3.2. The Knob Key Point Detection Based on Darknet53-DUC-DSNT

3.2.1. DUC

3.2.2. DSNT

- The fully connected layer is used to return the coordinate points directly. The advantage of this approach is that the training speed is breakneck, and it is an end-to-end full differential training. The disadvantage is that the spatial generalization ability is weak, the weight obtained by the full connection method depends heavily on the distribution of training data, which is very easy to cause overfitting, and the accuracy is not high in the case of high resolution.

- The predictive Gaussian heatmap method. The advantage of this method is that the accuracy is usually higher than that of method 1. The disadvantage is that the method is not a fully differential model from input to output, and the accuracy is lower in low resolution.

3.3. Knob Gear Classification

4. Experiment

4.1. Experimental Environment

4.2. Experimental Dataset and Data Augmentation

4.3. Performance Evaluation Criteria

4.4. YOLOv4 Knob Area Positioning Results

4.5. Darknet53-DUC-DSNT Knob Key Point Detection Results and Comparison

- The knob key point model proposed in our article has the best prediction effect, the predicted points almost coincide with the marked points, and the average RMSE of all knobs is less than 1. All types of knobs achieved good detection results, whether front or oblique.

- Although the accuracy rates of the Darknet53, Darknet53-DUC, and Darknet53-DSNT models are very low, the average RMSE is not high, indicating that the average prediction error of each picture is not high. Still, the error exceeds 1 pixel, leading to low accuracy.

- Compared with the Darknet53 model, Darknet53-DUC improves the detection accuracy by 0.301 pixels on average. For each kind of knob, the average RMSE of the Darknet53-DUC model is smaller than the average RMSE predicted by the Darknet53 model, indicating that DUC effectively enhances the feature extraction ability, which improves the detection accuracy.

- Compared with the Darknet53 model, the average RMSE of Darknet53-DSNT is higher, indicating that using DSNT under the premise of losing detailed features of the image will cause the opposite effect. Hence, DUC is essential, and we must first restore the image feature information.

- The average RMSE of the Darknet53-DUC-DSNT model is 0.549 pixels lower than that of Darknet53-DUC, indicating that the use of DSNT in the case of image detail feature recovery can effectively enhance the spatial generalization ability, so DSNT is also essential.

4.6. Knob Gear Classification Results and Comparison

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Liu, X.; Dong, B.; Li, P.; Yuan, B.; Wang, K. Research and application of image recognition of substation inspection robots based on edge computing and incremental learning. Res. Sq. 2021. [Google Scholar] [CrossRef]

- Wang, C.; Yin, L.; Zhao, Q.; Wang, W.; Li, C.; Luo, B. An intelligent robot for indoor substation inspection. Ind. Robot. Int. J. Robot. Res. Appl. 2020, 47, 705–712. [Google Scholar] [CrossRef]

- Wu, X.; Gao, X.; Gong, J. Intelligent Instrument Recognition Scheme Based on Unattended Substation Inspection. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–29 July 2020; pp. 6550–6555. [Google Scholar]

- Liu, Y.; Liu, J.; Ke, Y. A detection and recognition system of pointer meters in substations based on computer vision. Measurement 2020, 152, 107333. [Google Scholar] [CrossRef]

- Cai, R.; Ren, X.; Deng, J.; Wang, J.; Wang, S.; Huang, L.; Guo, Q. The application of live-operated robot system in the high voltage switch cabinet. Mach. Des. Manuf. Eng. 2020, 34, 15. [Google Scholar]

- Wu, Y.; Zhao, S.; Gao, X.; Zhan, C. Design of Control System of Intelligent Emergency Operation Robot for Transformation and Distribution. Mech. Eng. Autom. 2021, 163–165. [Google Scholar]

- Wu, Y. Research on Image Recognition System of Aircraft Cockpit Knob. Commun. Power Technol. 2020, 37, 192–194. [Google Scholar]

- Siciliano, B.; Khatib, O.; Kröger, T. Springer Handbook of Robotics; Springer: Berlin/Heidelberg, Germany, 2008; Volume 200. [Google Scholar]

- Zuo, L.; He, P.; Zhang, C.; Zhang, Z. A robust approach to reading recognition of pointer meters based on improved mask-RCNN. Neurocomputing 2020, 388, 90–101. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Stateline, NV, USA, 3–8 December 2012; Volume 25. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems Advances in Neural Information Processing Systems, Montreal, QC, Canada, 1 June 2017; Volume 28. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. Scaled-yolov4: Scaling cross stage partial network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13029–13038. [Google Scholar]

- Jiang, Z.; Zhao, L.; Li, S.; Jia, Y. Real-time object detection method based on improved YOLOv4-tiny. arXiv 2020, arXiv:2011.04244. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162. [Google Scholar]

- Shi, M.; Lu, Z. Power station rotary switch status recognition based on YOLO-tiny-RFB model. Comput. Appl. 2020, 40, 3679–3686. [Google Scholar]

- Zhu, Z.; Zhou, Z.; Zhao, Y.; Wang, Y.; Wang, L. Measurement method of knob pose based on edge detection and deep network. Electron. Meas. Technol. 2021, 44, 26–32. [Google Scholar]

- Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1451–1460. [Google Scholar]

- Minaee, S.; Boykov, Y.Y.; Porikli, F.; Plaza, A.J.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3523–3542. [Google Scholar] [CrossRef]

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883. [Google Scholar]

- Nibali, A.; He, Z.; Morgan, S.; Prendergast, L. Numerical coordinate regression with convolutional neural networks. arXiv 2018, arXiv:1801.07372. [Google Scholar]

- Sun, X.; Xiao, B.; Wei, F.; Liang, S.; Wei, Y. Integral human pose regression. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 529–545. [Google Scholar]

- Zhang, F.; Zhu, X.; Dai, H.; Ye, M.; Zhu, C. Distribution-aware coordinate representation for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 14–19 June 2020; pp. 7093–7102. [Google Scholar]

- Chen, Y.; Tian, Y.; He, M. Monocular human pose estimation: A survey of deep learning-based methods. Comput. Vis. Image Underst. 2020, 192, 102897. [Google Scholar] [CrossRef]

- Park, K.; Patten, T.; Prankl, J.; Vincze, M. Multi-task template matching for object detection, segmentation and pose estimation using depth images. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 7207–7213. [Google Scholar]

- Yang, J.; Rahardja, S.; Fränti, P. Mean-shift outlier detection and filtering. Pattern Recognit. 2021, 115, 107874. [Google Scholar] [CrossRef]

- Schneider, V.J. Real time circle detection by simplified Hough transform on smartphones. In Proceedings of the Real-Time Image Processing and Deep Learning 2021; International Society for Optics and Photonics: Bellingham, WA, USA, 2021; Volume 11736, p. 117360F. [Google Scholar]

- Zhao, K.; Han, Q.; Zhang, C.B.; Xu, J.; Cheng, M.M. Deep Hough Transform for Semantic Line Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 1. [Google Scholar] [CrossRef]

- Srinivasu, P.N.; SivaSai, J.G.; Ijaz, M.F.; Bhoi, A.K.; Kim, W.; Kang, J.J. Classification of skin disease using deep learning neural networks with MobileNet V2 and LSTM. Sensors 2021, 21, 2852. [Google Scholar] [CrossRef]

- Wu, F.; Zhu, C.; Xu, J.; Bhatt, M.W.; Sharma, A. Research on image text recognition based on canny edge detection algorithm and k-means algorithm. Int. J. Syst. Assur. Eng. Manag. 2021, 13, 72–80. [Google Scholar] [CrossRef]

- Wang, H.; Zhang, F.; Wang, L. Fruit classification model based on improved Darknet53 convolutional neural network. In Proceedings of the 2020 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS), Vientiane, Laos, 11–12 January 2020; pp. 881–884. [Google Scholar]

- Yi, X.; Song, Y.; Zhang, Y. Enhanced Darknet53 Combine MLFPN Based Real-Time Defect Detection in Steel Surface. In Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Proceedings of the Third Chinese Conference, PRCV 2020, Nanjing, China, 16–18 October 2020; Springer: Cham, Switzerland, 2020; pp. 303–314. [Google Scholar]

- Pathak, D.; Raju, U. Content-based image retrieval using feature-fusion of GroupNormalized-Inception-Darknet-53 features and handcraft features. Optik 2021, 246, 167754. [Google Scholar] [CrossRef]

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391. [Google Scholar]

- Purkait, P.; Zhao, C.; Zach, C. SPP-Net: Deep absolute pose regression with synthetic views. arXiv 2017, arXiv:1712.03452. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Al-Haija, Q.A.; Smadi, M.; Al-Bataineh, O.M. Identifying Phasic dopamine releases using DarkNet-19 Convolutional Neural Network. In Proceedings of the 2021 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Toronto, ON, Canada, 21–24 April 2021; pp. 1–5. [Google Scholar]

- ÖZCAN, A.; DÖNMEZ, E. Bacterial Disease Detection for Pepper Plant by Utilizing Deep Features Acquired from DarkNet-19 CNN Model. Dicle Üniversitesi Mühendislik Fakültesi Mühendislik Dergisi 2021, 12, 573–579. [Google Scholar] [CrossRef]

- Sharif, M.; Amin, J.; Siddiqa, A.; Khan, H.U.; Malik, M.S.A.; Anjum, M.A.; Kadry, S. Recognition of different types of leukocytes using YOLOv2 and optimized bag-of-features. IEEE Access 2020, 8, 167448–167459. [Google Scholar] [CrossRef]

- Zhang, Q. A novel ResNet101 model based on dense dilated convolution for image classification. SN Appl. Sci. 2022, 4, 1–13. [Google Scholar] [CrossRef]

- Xu, Z.; Sun, K.; Mao, J. Research on ResNet101 Network Chemical Reagent Label Image Classification Based on Transfer Learning. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Weihai, China, 14–16 October 2020; pp. 354–358. [Google Scholar]

- Nguyen, L.D.; Lin, D.; Lin, Z.; Cao, J. Deep CNNs for microscopic image classification by exploiting transfer learning and feature concatenation. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5. [Google Scholar]

- Šulc, M.; Mishkin, D.; Matas, J. Very deep residual networks with maxout for plant identification in the wild. Working Notes of CLEF. In Proceedings of the Conference and Labs of the Evaluation Forum, Évora, Portugal, 5–8 September 2016. [Google Scholar]

- Han, S.S.; Park, G.H.; Lim, W.; Kim, M.S.; Na, J.I.; Park, I.; Chang, S.E. Deep neural networks show an equivalent and often superior performance to dermatologists in onychomycosis diagnosis: Automatic construction of onychomycosis datasets by region-based convolutional deep neural network. PLoS ONE 2018, 13, e0191493. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Reenadevi, R.; Sathiya, T.; Sathiyabhama, B. Breast Cancer Histopathological Image Classification Using Augmentation Based on Optimized Deep ResNet-152 Structure. Ann. Rom. Soc. Cell Biol. 2021, 25, 5866–5874. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

| Direction | Angle Range/ |

|---|---|

| Top | (85, 95) |

| Bottom | (−95, −85) |

| Left | (−5, 5) |

| Right | (−180, −175) or (175, 180) |

| Left Top | (40, 50) |

| Left Bottom | (−50, −40) |

| Right Top | (130, 140) |

| Right Bottom | (−140, −130) |

| Dataset | Quantity |

|---|---|

| Initial knob total dataset | 1480 |

| Augmented knob total dataset | 20,766 |

| Augmented knob training set | 16,618 |

| Augmented knob verification set | 2079 |

| Augmented knob test set | 2069 |

| Name | Picture | Before | After | Name | Picture | Before | After |

|---|---|---|---|---|---|---|---|

| Knob1 |  | 479 | 2146 | Knob6 |  | 116 | 2070 |

| Knob2 |  | 539 | 2152 | Knob7 |  | 24 | 2040 |

| Knob3 |  | 140 | 2070 | Knob8 |  | 24 | 2040 |

| Knob4 |  | 66 | 2080 | Knob9 |  | 29 | 2025 |

| Knob5 |  | 60 | 2100 | Knob10 |  | 3 | 2043 |

| Model | Darknet53 | Darknet53-DUC | Darknet53-DSNT | Darknet53-DUC-DSNT | |

|---|---|---|---|---|---|

| Knob | |||||

| Knob1 | Accuracy | 17.76% | 22.90% | 12.62% | 80.84% |

| Avg RMSE | 2.190 | 1.862 | 12.847 | 0.717 | |

| Result |  |  |  |  | |

| Knob2 | Accuracy | 63.80% | 76.47% | 63.80% | 98.19% |

| Avg RMSE | 1.059 | 0.810 | 2.260 | 0.394 | |

| Result |  |  |  |  | |

| Knob3 | Accuracy | 44.77% | 42.26% | 97.91% | 98.74% |

| Avg RMSE | 1.572 | 1.171 | 0.414 | 0.413 | |

| Result |  |  |  |  | |

| Knob4 | Accuracy | 84.44% | 100.00% | 100.00% | 100.00% |

| Avg RMSE | 0.700 | 0.416 | 0.162 | 0.154 | |

| Result |  |  |  |  | |

| Knob5 | Accuracy | 100.00% | 99.03% | 90.82% | 100.00% |

| Avg RMSE | 0.371 | 0.339 | 0.404 | 0.156 | |

| Result |  |  |  |  | |

| Knob6 | Accuracy | 81.69% | 93.90% | 51.64% | 100.00% |

| Avg RMSE | 1.054 | 0.547 | 1.146 | 0.200 | |

| Result |  |  |  |  | |

| Knob7 | Accuracy | 46.60% | 70.68% | 73.30% | 100.00% |

| Avg RMSE | 1.103 | 0.762 | 1.352 | 0.320 | |

| Result |  |  |  |  | |

| Knob8 | Accuracy | 66.67% | 78.51% | 71.49% | 100.00% |

| Avg RMSE | 0.924 | 0.775 | 1.395 | 0.292 | |

| Result |  |  |  |  | |

| Knob9 | Accuracy | 36.36% | 55.08% | 64.17% | 99.47% |

| Avg RMSE | 1.271 | 0.997 | 2.990 | 0.382 | |

| Result |  |  |  |  | |

| Knob10 | Accuracy | 8.99% | 46.03% | 67.20% | 100.00% |

| Avg RMSE | 1.515 | 1.067 | 4.992 | 0.274 | |

| Result |  |  |  |  | |

| All knobs | Accuracy | 54.20% | 66.63% | 67.79% | 96.35% |

| Avg RMSE | 1.185 | 0.884 | 2.795 | 0.335 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qin, R.; Hua, Z.; Sun, Z.; He, R. Recognition Method of Knob Gear in Substation Based on YOLOv4 and Darknet53-DUC-DSNT. Sensors 2022, 22, 4722. https://doi.org/10.3390/s22134722

Qin R, Hua Z, Sun Z, He R. Recognition Method of Knob Gear in Substation Based on YOLOv4 and Darknet53-DUC-DSNT. Sensors. 2022; 22(13):4722. https://doi.org/10.3390/s22134722

Chicago/Turabian StyleQin, Ronglin, Zexi Hua, Ziwei Sun, and Rujiang He. 2022. "Recognition Method of Knob Gear in Substation Based on YOLOv4 and Darknet53-DUC-DSNT" Sensors 22, no. 13: 4722. https://doi.org/10.3390/s22134722