Active 2D-DNA Fingerprinting of WirelessHART Adapters to Ensure Operational Integrity in Industrial Systems

Abstract

1. Introduction

1.1. Operational Motivation

- Operation based on the legacy wired HART protocol: users can take full advantage of prior experience, prior training, prior tool purchases, etc.;

- Considerable reduction in deployment, installation, and maintenance cost: minimal to no additional infrastructure cabling required;

- Network architectural flexibility: expansion easily accommodated by adding connectivity to additional field devices and/or other nearby networks;

- Dramatic reduction in device commissioning times: device benchtop programming and field installation completed in hours versus days.

1.2. Technical Motivation

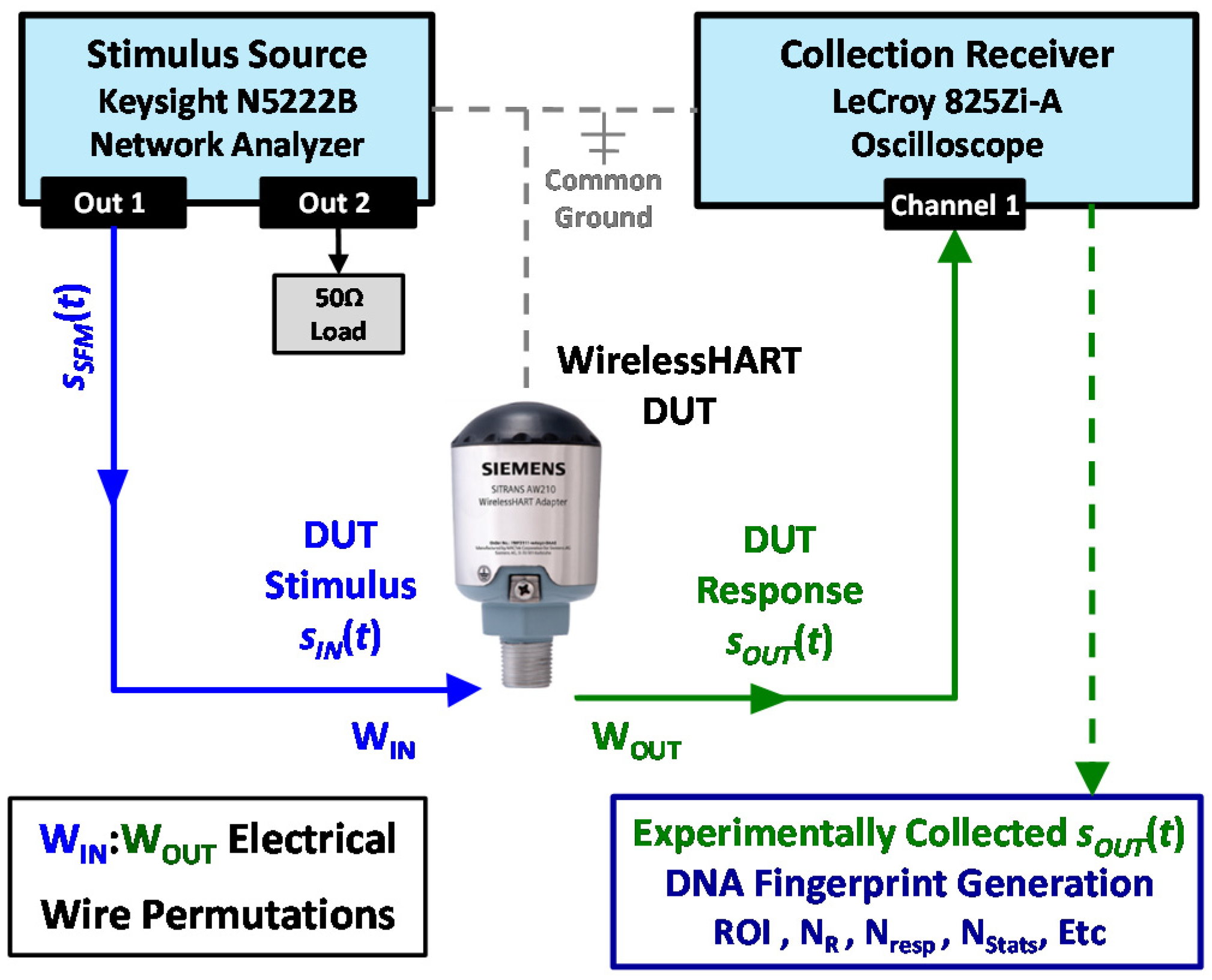

2. Demonstration Methodology

- Response Collection and Processing in Section 2.1: this includes Device Under Test (DUT) emplacement, DUT stimulation, collection of the stimulated DUT output, and pre-fingerprint-generation signal processing (filtering and decimation) to reduce computational complexity and improve discriminability;

- 1D Time Domain DNA (TdDna) Fingerprint Generation in Section 2.2: this includes generation of device TdDna fingerprints, which provide a performance baseline representing the pre-existing 1D-DNA fingerprinting capability;

- 2D Gabor Transform DNA (GtDna) Fingerprint Generation in Section 2.3: this includes generation of device GtDna fingerprints, which are used to demonstrate the performance benefits of the 2D-DNA fingerprinting considered herein;

- Multiple Discriminant Analysis (MDA) in Section 2.4: this includes cross-validated training of the MDA models required for device discrimination assessments;

- Device Discrimination in Section 2.5: this includes implementation of multi-model MDA device classification, a necessary precursor to the device ID verification process used to perform counterfeit detection and estimate %CDR;

- Dimensional Reduction Analysis (DRA) in Section 2.6: this includes the final actions taken to reduce the number of fingerprint features required to achieve a given level of discrimination performance while improving computational efficiency.
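The list above names the TdDna step without giving its details. As a rough, hypothetical illustration only, the sketch below computes a 1D time-domain fingerprint in the statistical-feature style often reported in the RF-DNA literature: instantaneous amplitude, phase, and frequency are taken from the analytic signal, centered, split into subregions, and summarized by standard deviation, variance, skewness, and kurtosis, with whole-response statistics appended. The moving-average filter, decimation factor, subregion count, and function names (`analytic_signal`, `region_stats`, `td_fingerprint`) are assumptions, not the authors' processing chain, and the GtDna step is not shown.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal of a real sequence via the FFT-based Hilbert transform."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0                       # keep DC once
    if n % 2 == 0:
        h[n // 2] = 1.0              # keep the Nyquist bin once
        h[1:n // 2] = 2.0            # double the positive frequencies
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)


def region_stats(v):
    """Standard deviation, variance, skewness, and (non-excess) kurtosis."""
    sigma = v.std()
    if sigma == 0.0:
        return [0.0, 0.0, 0.0, 0.0]
    z = (v - v.mean()) / sigma
    return [float(sigma), float(sigma ** 2), float(np.mean(z ** 3)), float(np.mean(z ** 4))]


def td_fingerprint(x, decimation=4, n_subregions=8):
    """Hypothetical 1D TD fingerprint: stats per subregion plus whole response."""
    # Assumed pre-processing: moving-average low-pass, then keep every k-th sample.
    smoothed = np.convolve(x, np.ones(decimation) / decimation, mode="same")
    y = smoothed[::decimation]
    z = analytic_signal(y)
    amplitude = np.abs(z)
    phase = np.unwrap(np.angle(z))
    frequency = np.diff(phase) / (2.0 * np.pi)   # cycles per sample
    features = []
    for response in (amplitude, phase, frequency):
        centered = response - response.mean()
        for region in np.array_split(centered, n_subregions):
            features.extend(region_stats(region))
        features.extend(region_stats(centered))  # whole-response statistics
    return np.asarray(features)


rng = np.random.default_rng(0)
fp = td_fingerprint(rng.standard_normal(4096))
print(fp.shape)  # (108,)
```

With these assumed settings the feature count is 3 responses × (8 subregions + 1) × 4 statistics = 108, which illustrates why a dimensional reduction step can be worthwhile.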

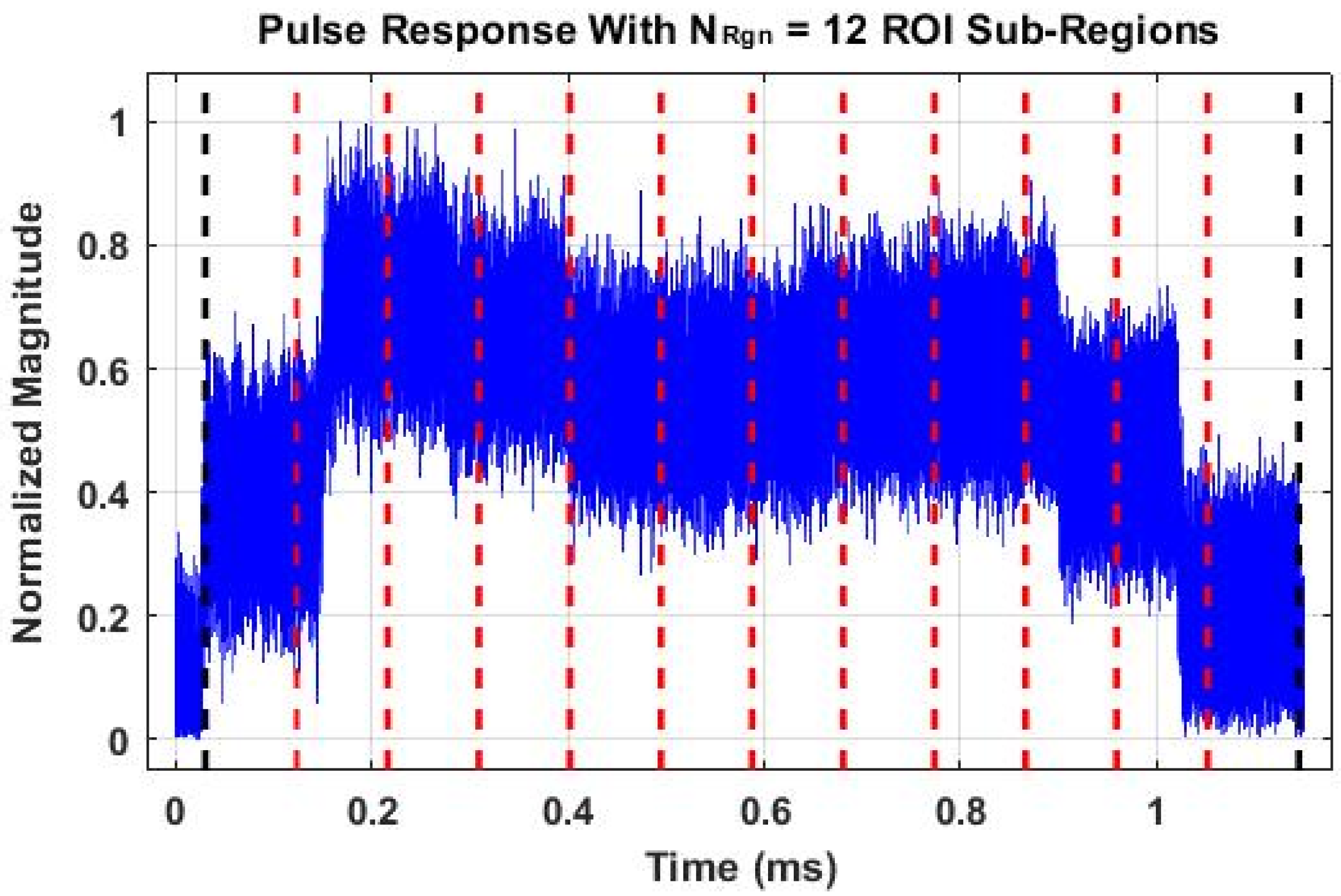

2.1. Response Collection and Processing

2.2. 1D Time Domain DNA (TdDna) Fingerprint Generation

2.3. 2D Gabor Transform DNA (GtDna) Fingerprint Generation

2.4. Multiple Discriminant Analysis (MDA)

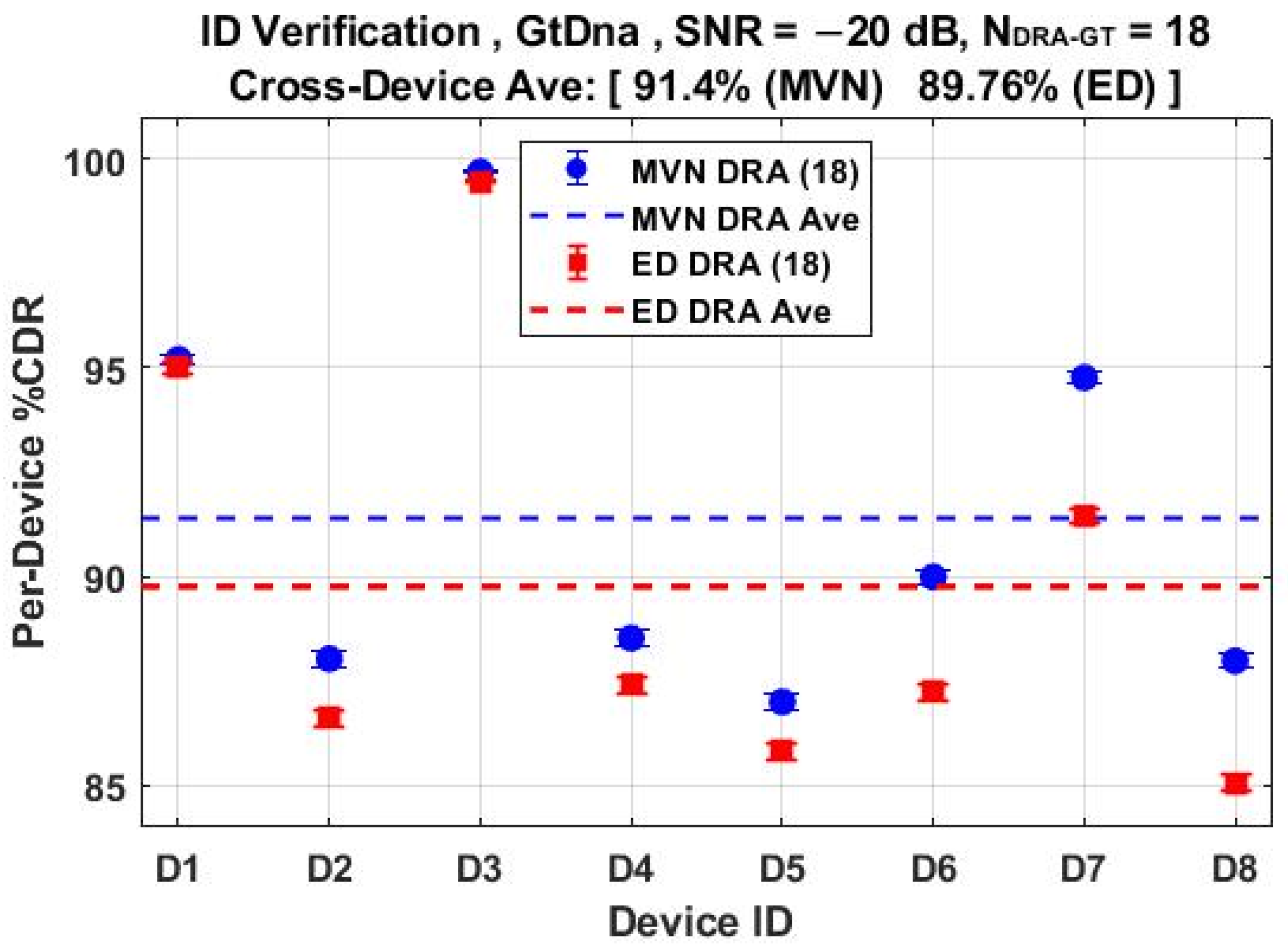

2.5. Device Discrimination

- Generating a fingerprint projection for each of the NTst fingerprints from the counterfeit device;

- Calculating the test statistic associated with the claimed authentic device using each of the counterfeit projections;

- Performing a ⪋ threshold comparison of each test statistic against the device-dependent ID verification threshold of the claimed authentic device, where the ⪋ inequality condition is set as (a) greater than (>) for a higher-is-better match statistic (e.g., MVN probability), or (b) less than (<) for a lower-is-better match statistic (e.g., Euclidean Distance);

- Making a binary accept/reject declaration based on threshold criteria with (a) an accept (false positive) being an undesirable outcome—counterfeit not detected, and (b) a reject (true negative) being a desirable outcome—counterfeit detected;

- Calculating %CDR = (NRej/NTst) × 100 as an estimate of counterfeit detectability, where NRej is the total number of binary reject decisions (i.e., counterfeits detected).
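A minimal sketch of the verification steps above, assuming Euclidean distance to the claimed device's mean in the MDA projection space as the lower-is-better match statistic. The class mean, projections, and threshold are made-up values, and the helper names are hypothetical. Because a reject is the desirable outcome (counterfeit detected), %CDR is computed here as rejects over trials.

```python
import math


def match_statistic(projection, class_mean):
    """Lower-is-better match statistic: Euclidean distance in the MDA projection space."""
    return math.dist(projection, class_mean)


def accept(statistic, threshold, higher_is_better):
    """The ⪋ comparison: '>' for higher-is-better statistics, '<' for lower-is-better ones."""
    return statistic > threshold if higher_is_better else statistic < threshold


def percent_cdr(statistics, threshold, higher_is_better):
    """%CDR: share of counterfeit trials that end in a reject (counterfeit detected)."""
    n_tst = len(statistics)
    n_rej = sum(1 for s in statistics if not accept(s, threshold, higher_is_better))
    return 100.0 * n_rej / n_tst


# Hypothetical example: four counterfeit projections tested against the claimed device.
claimed_mean = (0.0, 0.0)
projections = [(0.2, 0.1), (1.0, 1.2), (2.0, 0.5), (0.3, -1.8)]
distances = [match_statistic(p, claimed_mean) for p in projections]
print(percent_cdr(distances, threshold=1.5, higher_is_better=False))  # 75.0
```

Only the first projection falls inside the assumed threshold, so it is (wrongly) accepted and the other three are rejected, giving 75.0.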

2.6. Dimensional Reduction Analysis (DRA)

3. Device Discrimination Results

3.1. 1D vs. 2D Classification Performance

3.2. Multi-Model Discrimination

4. Summary

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| %C | Average Cross-Class Percent Correct Classification |
| %CDR | Counterfeit Detection Rate Percentage |
| ANN | Artificial Neural Network |
| CI95% | 95% Confidence Interval |
| DNA | Distinct Native Attribute |
| DRA | Dimensional Reduction Analysis |
| ED | Euclidean Distance |
| FD | Full Dimensional |
| FPGA | Field Programmable Gate Array |
| GSps | Giga-Samples Per Second |
| GT | Gabor Transform |
| GtDna | Gabor Transform DNA |
| HART | Highway Addressable Remote Transducer |
| ID | Identity/Identification |
| IIoT | Industrial Internet of Things |
| IoT | Internet of Things |
| IR 4.0 | Industrial Revolution 4.0 |
| LVQ | Learning Vector Quantization |
| MDA | Multiple Discriminant Analysis |
| MHz | Megahertz |
| MSps | Mega-Samples Per Second |
| MVN | Multivariate Normal |
| RFID | Radio Frequency Identification |
| RndF | Random Forest |
| SDR | Software Defined Radio |
| SFM | Stepped Frequency Modulated |
| TD | Time Domain |
| TdDna | Time Domain DNA |


| Device ID | Device Label | Firmware | Serial Number |
|---|---|---|---|
| D1 | Siemens AW210 | 198 | 003095 |
| D2 | Siemens AW210 | 200 | 003159 |
| D3 | Siemens AW210 | 198 | 003097 |
| D4 | Siemens AW210 | 200 | 003150 |
| D5 | Pepperl+Fuchs Bullet | 200 | 1A32DA |
| D6 | Pepperl+Fuchs Bullet | 200 | 1A32B3 |
| D7 | Pepperl+Fuchs Bullet | 200 | 1A3226 |
| D8 | Pepperl+Fuchs Bullet | 200 | 1A32A4 |

| Model ID | D1 | D2 | D3 | D4 | D5 | D6 | D7 | D8 |
|---|---|---|---|---|---|---|---|---|
| M1 | A | A | A | A | A | C | C | C |
| M2 | A | A | A | A | C | A | C | C |
| M3 | A | A | A | A | C | C | A | C |
| ⁝ | ⁝ | ⁝ | ⁝ | ⁝ | ⁝ | ⁝ | ⁝ | ⁝ |
| M54 | C | C | A | A | C | A | A | A |
| M55 | C | C | A | C | A | A | A | A |
| M56 | C | C | C | A | A | A | A | A |
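Each row of the table marks five devices as A (presumably authorized) and three as C (counterfeit). Eight devices taken five at a time gives C(8, 5) = 56 models, consistent with M1 through M56. The sketch below enumerates the authorized sets in lexicographic order with `itertools.combinations`; this ordering reproduces the rows shown, although the source does not state how its models were ordered, and `build_models` is a hypothetical helper.

```python
from itertools import combinations

DEVICES = [f"D{i}" for i in range(1, 9)]


def build_models(devices, n_authorized=5):
    """Hypothetical helper: one model per choice of authorized devices."""
    models = {}
    for k, authorized in enumerate(combinations(devices, n_authorized), start=1):
        # Assumed reading of the table: A = authorized, C = counterfeit.
        models[f"M{k}"] = {d: ("A" if d in authorized else "C") for d in devices}
    return models


models = build_models(DEVICES)
print(len(models))                      # 56
print("".join(models["M1"].values()))   # AAAAACCC
print("".join(models["M56"].values()))  # CCCAAAAA
```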

| Input Class / Called Class | Class 1 | Class 2 | Class 3 | Class 4 | Class 5 |
|---|---|---|---|---|---|
| Class 1 | 526 | 0 | 10 | 29 | 0 |
| Class 2 | 0 | 438 | 27 | 0 | 100 |
| Class 3 | 5 | 17 | 539 | 0 | 4 |
| Class 4 | 32 | 0 | 1 | 532 | 0 |
| Class 5 | 0 | 152 | 10 | 0 | 403 |
| %CCls | 93.1% | 77.5% | 95.4% | 94.2% | 71.3% |
| ±CI95% | 2.1% | 3.4% | 1.7% | 1.9% | 3.7% |
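The per-class and average values can be recomputed from the counts. Each input class has 565 trials (the row sums), %CCls is the diagonal count over that total, and %C pools all correct calls. The source does not say which interval the ±CI95% row uses; the Wald (normal-approximation) binomial interval assumed here reproduces the tabulated half-widths to one decimal place. Function names are illustrative.

```python
import math

# Confusion matrix from the table: rows = input (true) class, columns = called class.
CONFUSION = [
    [526, 0, 10, 29, 0],
    [0, 438, 27, 0, 100],
    [5, 17, 539, 0, 4],
    [32, 0, 1, 532, 0],
    [0, 152, 10, 0, 403],
]


def percent_correct(matrix):
    """Per-class %CCls: diagonal count over the row total."""
    return [100.0 * row[i] / sum(row) for i, row in enumerate(matrix)]


def ci95_halfwidth(matrix, z=1.96):
    """Assumed Wald (normal-approximation) 95% half-width, in percent."""
    widths = []
    for i, row in enumerate(matrix):
        n = sum(row)
        p = row[i] / n
        widths.append(100.0 * z * math.sqrt(p * (1.0 - p) / n))
    return widths


def average_percent_correct(matrix):
    """Cross-class %C: all correct calls over all trials."""
    correct = sum(row[i] for i, row in enumerate(matrix))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total


print([round(v, 1) for v in percent_correct(CONFUSION)])  # [93.1, 77.5, 95.4, 94.2, 71.3]
print([round(v, 1) for v in ci95_halfwidth(CONFUSION)])   # [2.1, 3.4, 1.7, 1.9, 3.7]
print(round(average_percent_correct(CONFUSION), 1))       # 86.3
```

Because every row has the same total, the pooled %C equals the mean of the five %CCls values.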

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mims, W.H.; Temple, M.A.; Mills, R.F. Active 2D-DNA Fingerprinting of WirelessHART Adapters to Ensure Operational Integrity in Industrial Systems. Sensors 2022, 22, 4906. https://doi.org/10.3390/s22134906
