Image Segmentation Using Active Contours with Hessian-Based Gradient Vector Flow External Force

Abstract

1. Introduction

2. Background

2.1. Traditional Model: Active Contours
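
As a reminder of what the traditional model refers to, the block below writes out the classic snake energy of Kass, Witkin, and Terzopoulos and the force-balance equation that follows from it. The symbols are assumptions chosen for this note and may differ from the paper's own notation: $\mathbf{c}(s)$ is the contour, $\alpha$ and $\beta$ weight tension and rigidity, and $E_{\mathrm{ext}}$ is the external (image) energy.

```latex
% Snake energy: internal smoothness terms plus the external image energy
E_{\mathrm{snake}} = \int_0^1 \tfrac{1}{2}\left( \alpha \,\lvert \mathbf{c}'(s) \rvert^2
      + \beta \,\lvert \mathbf{c}''(s) \rvert^2 \right) + E_{\mathrm{ext}}\big(\mathbf{c}(s)\big)\, ds

% Euler-Lagrange (force-balance) equation satisfied by a minimizer
\alpha\, \mathbf{c}''(s) - \beta\, \mathbf{c}''''(s) - \nabla E_{\mathrm{ext}}\big(\mathbf{c}(s)\big) = 0
```

GVF-like models keep the two internal terms and replace the external force $-\nabla E_{\mathrm{ext}}$ with a diffused vector field.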

2.2. Gradient Vector Flow (GVF)
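
For orientation, here is a minimal sketch of the standard GVF iteration of Xu and Prince, not necessarily the numerical scheme used in this paper. It evolves the field $(u, v)$ by $u_t = \mu \nabla^2 u - \lvert\nabla f\rvert^2 (u - f_x)$, and likewise for $v$, starting from the gradient of an edge map $f$. The function names and the defaults for `mu`, `iterations`, and `dt` are illustrative assumptions.

```python
import numpy as np


def laplacian(a):
    """Five-point Laplacian with replicated (Neumann-like) borders."""
    p = np.pad(a, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * a


def gradient_vector_flow(f, mu=0.2, iterations=200, dt=0.5):
    """Explicit-Euler sketch of GVF for a 2-D edge map f.

    mu, iterations and dt are illustrative defaults (assumptions),
    chosen so the explicit scheme stays stable on a unit grid.
    """
    f = np.asarray(f, dtype=float)
    # Rescale the edge map to [0, 1] so mu and dt behave consistently.
    span = f.max() - f.min()
    if span > 0:
        f = (f - f.min()) / span
    # np.gradient returns derivatives along axis 0 (y) first, then axis 1 (x).
    fy, fx = np.gradient(f)
    mag2 = fx ** 2 + fy ** 2
    u, v = fx.copy(), fy.copy()
    for _ in range(iterations):
        # Diffuse where the gradient is weak; stay close to it where it is strong.
        u = u + dt * (mu * laplacian(u) - mag2 * (u - fx))
        v = v + dt * (mu * laplacian(v) - mag2 * (v - fy))
    return u, v
```

On an edge map consisting of a single vertical line, the resulting field points toward the line from both sides, which is the extended capture range that GVF is known for.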

2.3. Virtual Electric Field (VEF)

2.4. Gradient Vector Flow in Normal Direction (NGVF)

2.5. Component-Normalized Generalized Gradient Vector Flow (CN-GGVF)

3. The HBGVF Model

3.1. Gradient Vector Flow Expressed in Matrix Form

3.2. Using the Hessian Matrix to Construct Diffusion Matrix
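
The sketch below covers only the generic first step that any Hessian-based construction needs: computing the per-pixel 2×2 Hessian of an image and its eigen-decomposition. How the eigenvalues and eigenvectors are then combined into a diffusion matrix is specific to the HBGVF model and is not reproduced here. The function name `hessian_eigen` and the use of plain finite differences without pre-smoothing are assumptions.

```python
import numpy as np


def hessian_eigen(image):
    """Per-pixel eigenvalues and eigenvectors of the 2x2 image Hessian.

    Returns (evals, evecs): evals has shape (H, W, 2) in ascending order;
    evecs has shape (H, W, 2, 2), with evecs[..., :, k] the eigenvector
    for evals[..., k], given as (x, y) components.
    """
    img = np.asarray(image, dtype=float)
    # First derivatives: axis 0 is y (rows), axis 1 is x (columns).
    iy, ix = np.gradient(img)
    # Second derivatives from the first derivatives.
    iyy, iyx = np.gradient(iy)
    ixy, ixx = np.gradient(ix)
    # Average the mixed derivatives so the matrix is exactly symmetric.
    ixy = 0.5 * (ixy + iyx)
    hess = np.stack(
        [np.stack([ixx, ixy], axis=-1), np.stack([ixy, iyy], axis=-1)],
        axis=-2,
    )
    # eigh handles stacks of symmetric matrices and sorts eigenvalues ascending.
    evals, evecs = np.linalg.eigh(hess)
    return evals, evecs
```

In practice the image would usually be smoothed (for example with a Gaussian) before differentiation, since second derivatives amplify noise; that step is left out to keep the sketch dependency-free.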

4. Comparative Experiments

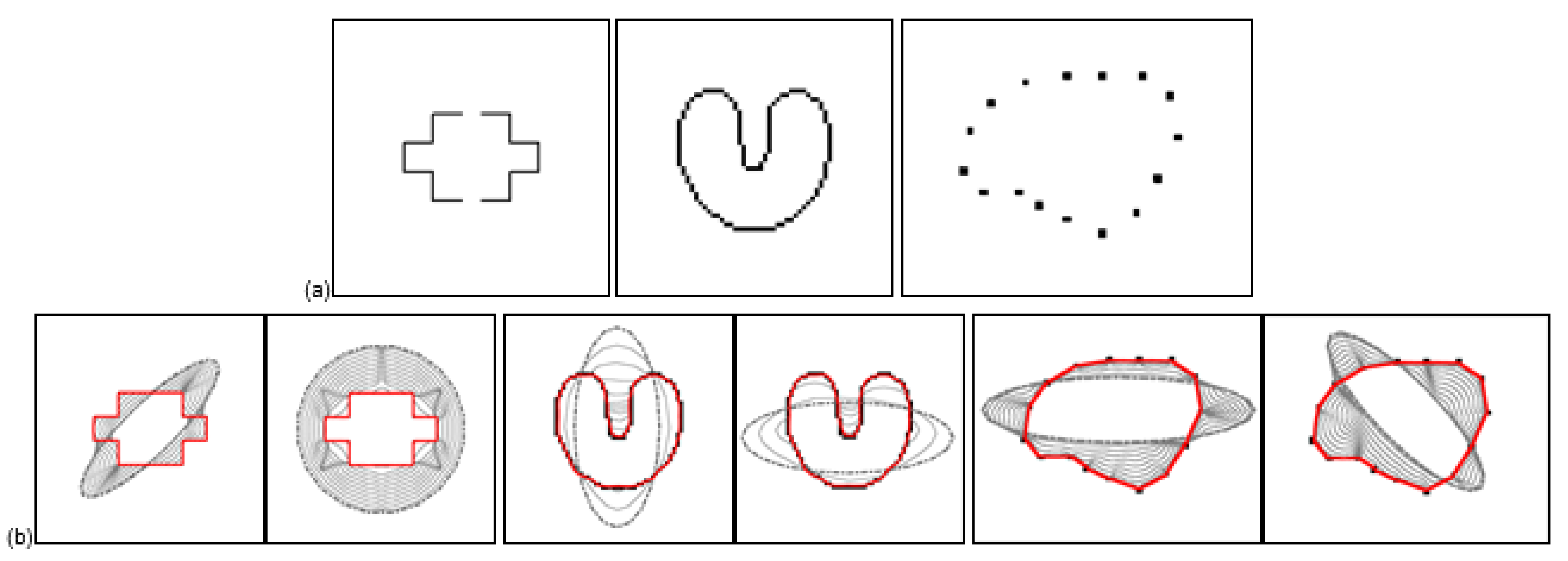

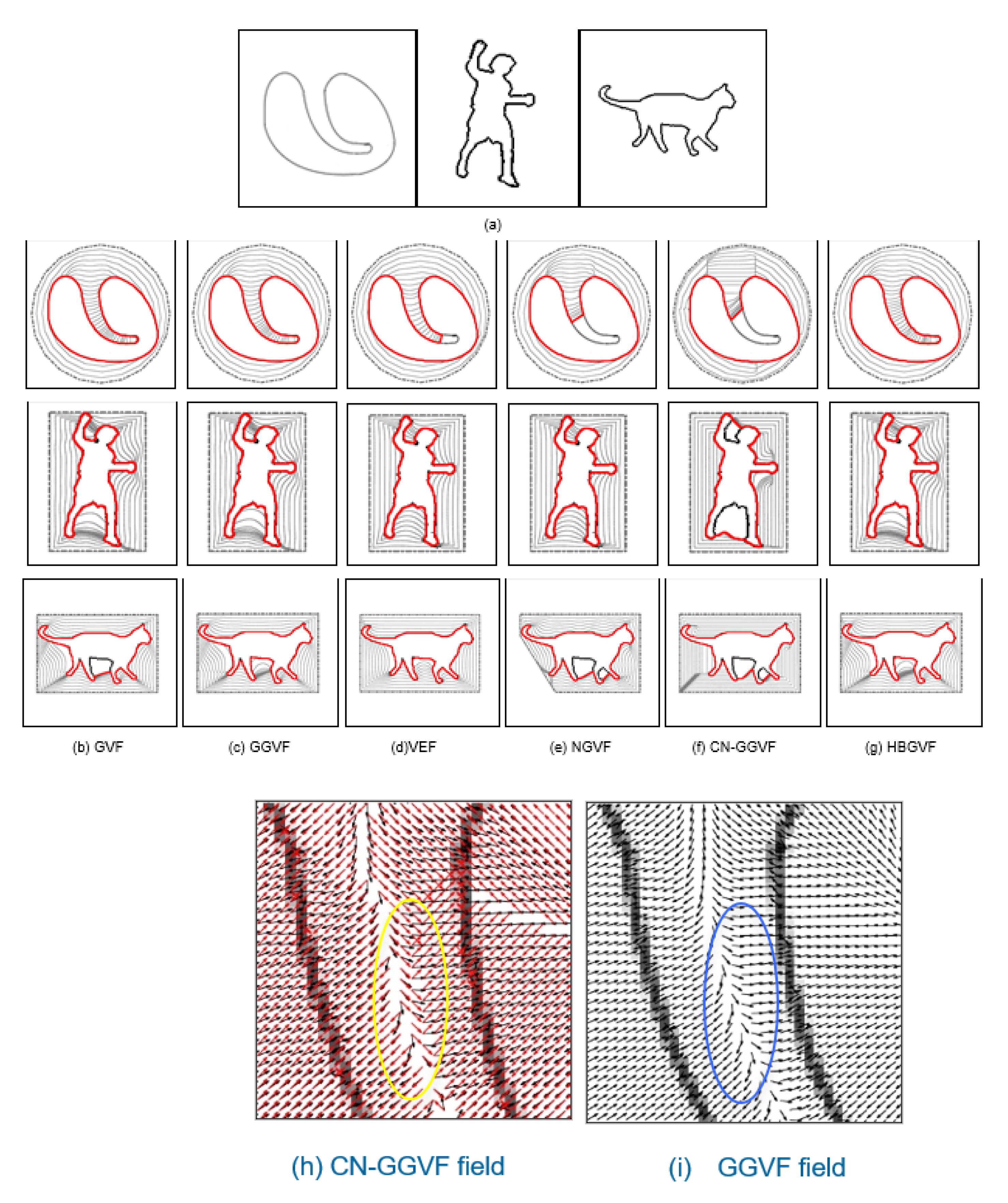

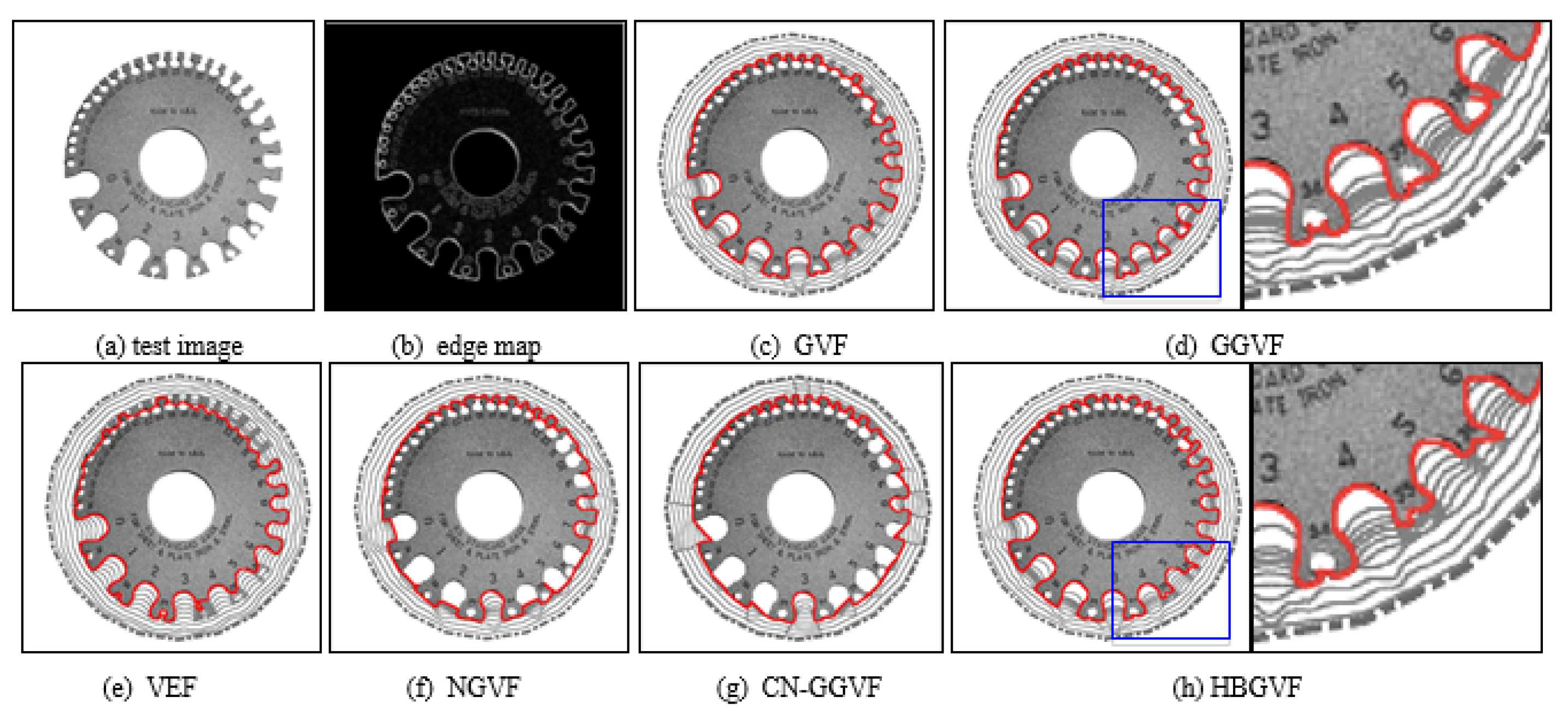

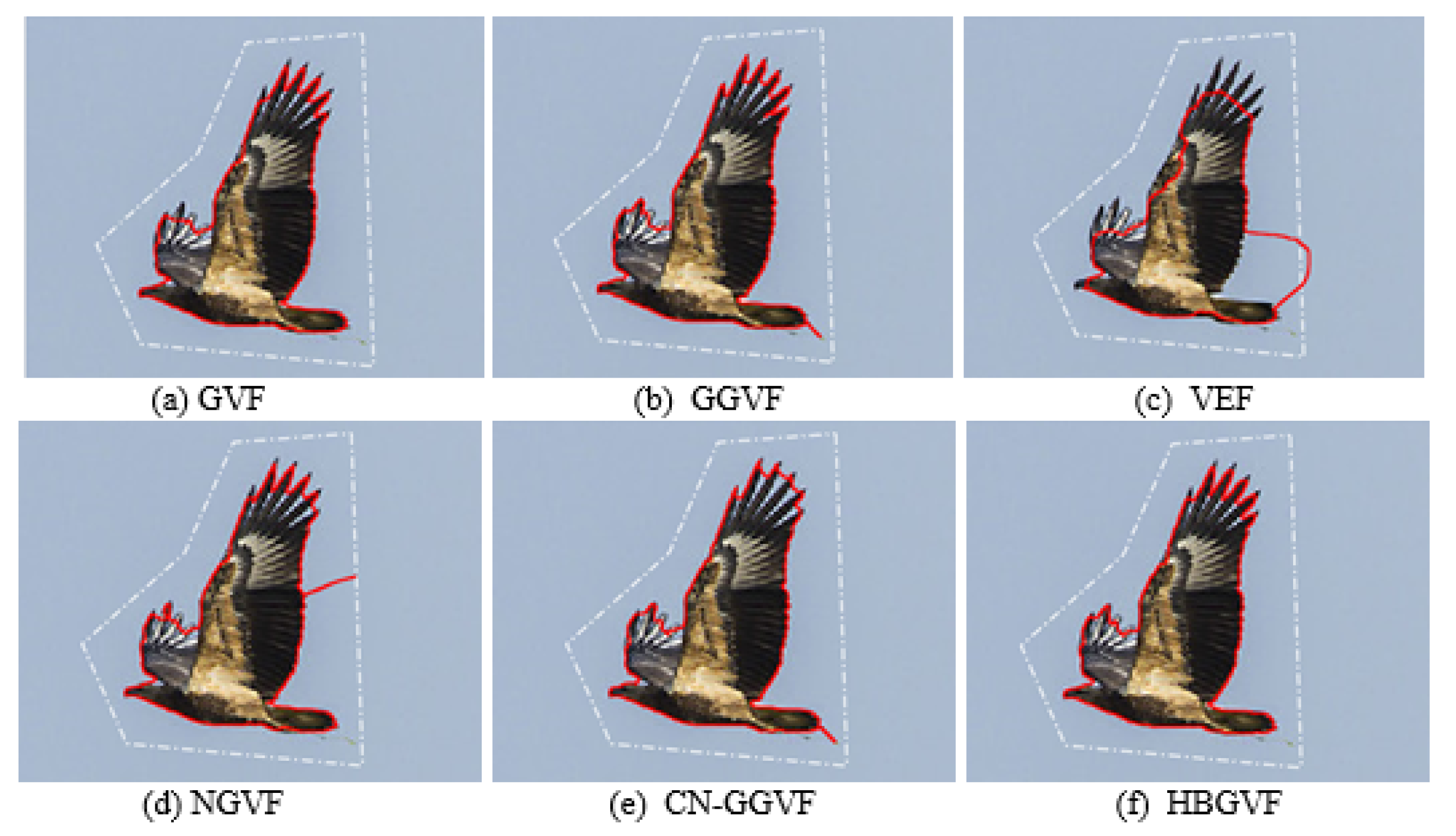

4.1. Common Concerns for the GVF-Like Snakes

4.2. Convergence to Concavities

4.3. Weak Edge Preservation

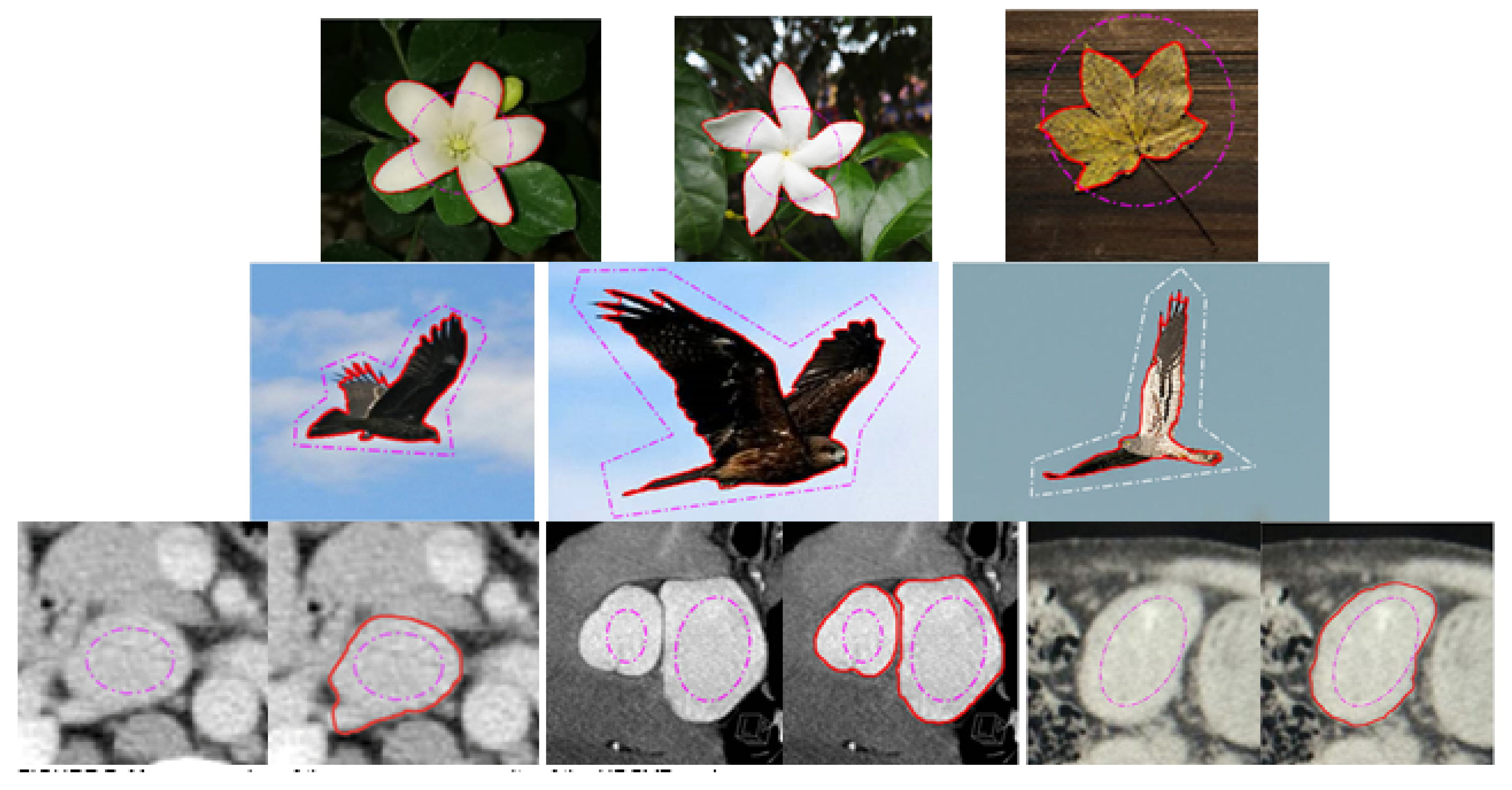

4.4. Test Results of HBGVF Model on Real Images

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qian, Q.; Cheng, K.; Qian, W.; Deng, Q.; Wang, Y. Image Segmentation Using Active Contours with Hessian-Based Gradient Vector Flow External Force. Sensors 2022, 22, 4956. https://doi.org/10.3390/s22134956
