Detection of Partially Structural Collapse Using Long-Term Small Displacement Data from Satellite Images

Abstract

1. Introduction

2. Proposed Methods

2.1. Data Augmentation by Markov Chain Monte Carlo

2.2. Feature Normalization of MCMC-ANN-MSD

2.2.1. Unsupervised Auto-Associative Neural Network

2.2.2. ANN Hyperparameter Selection

| Algorithm 1. The main steps for selecting the number of neurons of the hidden layers of an auto-associative neural network. |
| --- |
| Inputs: Input data X and the maximum numbers of neurons of the mapping/de-mapping and bottleneck layers, where the former is larger than the latter |
| For i = 1 to the maximum number of mapping/de-mapping neurons: Do |
| For j = 1 to the maximum number of bottleneck neurons: Do |
| 1. Learn a network with i neurons in the mapping/de-mapping layers and j neurons in the bottleneck layer. |
| 2. Determine the output of the network, that is, the reconstruction of X. |
| 3. Calculate the residual matrix Ex as the difference between X and the network output. |
| 4. Apply the residual matrix Ex to the MSD equation as a new training dataset. |
| 5. Determine the distance values using all samples in Ex. |
| 6. Calculate the variance of the calculated distance values for the ith and jth numbers. |
| 7. Save the calculated variance in a matrix, where the ith row and jth column hold this value. |
| End |
| End |
| 8. Select the smallest of the variances stored in Step 7. |
| 9. Check for overfitting using Equations (7) and (8). If overfitting occurs, return to Step 8 and choose the next smallest variance, repeating until no overfitting is detected. |
| 10. Select the row and column numbers of the stored matrix associated with the smallest variance; these are the optimal numbers of neurons of the mapping/de-mapping (lm) and bottleneck (lb) layers, respectively. |
| Outputs: The optimal numbers of neurons lm and lb |
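The grid search of Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`fit_aann`, `msd_values`, `select_neurons`), the plain gradient-descent training, the tanh mapping/de-mapping layers with a linear bottleneck and output, and the use of a pseudo-inverse for the covariance matrix are all choices made here for the example. The input X is assumed to be standardized. The overfitting check of Step 9 is omitted because Equations (7) and (8) are not reproduced in this section.

```python
import numpy as np

def forward(X, W, b):
    """Activations of all layers; layers 0 and 2 (mapping/de-mapping) use tanh."""
    A = [X]
    for k in range(4):
        Z = A[-1] @ W[k] + b[k]
        A.append(np.tanh(Z) if k in (0, 2) else Z)
    return A

def fit_aann(X, n_map, n_bott, epochs=200, lr=0.05, seed=0):
    """Train a small auto-associative network (X -> X) and return its output."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    sizes = [p, n_map, n_bott, n_map, p]
    W = [rng.normal(0.0, 0.3, (a, c)) for a, c in zip(sizes[:-1], sizes[1:])]
    b = [np.zeros(s) for s in sizes[1:]]
    for _ in range(epochs):
        A = forward(X, W, b)
        delta = (A[-1] - X) / n              # gradient of the mean squared error
        for k in reversed(range(4)):
            gW, gb = A[k].T @ delta, delta.sum(axis=0)
            if k > 0:
                delta = delta @ W[k].T       # back-propagate with the old weights
                if k in (1, 3):              # A[k] came from a tanh layer
                    delta = delta * (1.0 - A[k] ** 2)
            W[k] -= lr * gW
            b[k] -= lr * gb
    return forward(X, W, b)[-1]

def msd_values(E):
    """Mahalanobis-squared distance of every row of E to the mean of E."""
    D = E - E.mean(axis=0)
    cov_inv = np.linalg.pinv(np.atleast_2d(np.cov(E, rowvar=False)))
    return np.einsum("ij,jk,ik->i", D, cov_inv, D)

def select_neurons(X, max_map, max_bott):
    """Steps 1-8 and 10 of Algorithm 1 (the overfitting check is omitted)."""
    V = np.full((max_map, max_bott), np.inf)  # variance matrix of Step 7
    for i in range(1, max_map + 1):
        for j in range(1, max_bott + 1):
            if j >= i:                        # mapping layer must exceed bottleneck
                continue
            E = X - fit_aann(X, i, j)         # residual matrix (Step 3)
            V[i - 1, j - 1] = np.var(msd_values(E))
    i_best, j_best = np.unravel_index(np.argmin(V), V.shape)
    return int(i_best) + 1, int(j_best) + 1, V
```

Calling `select_neurons(X, max_map, max_bott)` returns the pair (lm, lb) with the smallest variance together with the full variance matrix, so the next smallest entry can be inspected if an overfitting check rejects the first candidate.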

2.3. Feature Normalization of MCMC-TSL-MSD

2.3.1. TSL Hyperparameter Selection

2.4. Novelty Detection by MSD

- If a distance value exceeds the threshold boundary, an alarm should be triggered to signal the emergence of damage.

- If the distance values are below the threshold boundary, the structure is considered to be in a safe condition.
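The decision rule above can be illustrated with a short Python sketch. The function names and the threshold are assumptions made for this example: the threshold is taken as the mean plus 1.96 standard deviations of the training distances purely for illustration, and it does not reproduce the CLT-based or EVT-based estimators used in the paper.

```python
import numpy as np

def fit_msd(train):
    """Estimate the mean vector and inverse covariance from training features."""
    mean = train.mean(axis=0)
    cov_inv = np.linalg.pinv(np.atleast_2d(np.cov(train, rowvar=False)))
    return mean, cov_inv

def msd(samples, mean, cov_inv):
    """Mahalanobis-squared distance of each sample to the training mean."""
    D = samples - mean
    return np.einsum("ij,jk,ik->i", D, cov_inv, D)

def detect(train, test, z=1.96):
    """Flag test samples whose distance exceeds the threshold boundary."""
    mean, cov_inv = fit_msd(train)
    d_train = msd(train, mean, cov_inv)
    # Illustrative threshold only (assumption): mean + z * std of training distances.
    threshold = d_train.mean() + z * d_train.std()
    d_test = msd(test, mean, cov_inv)
    return d_test > threshold, d_test, threshold
```

A returned value of `True` for a sample corresponds to the first rule (damage alarm), and `False` corresponds to the second rule (safe condition).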

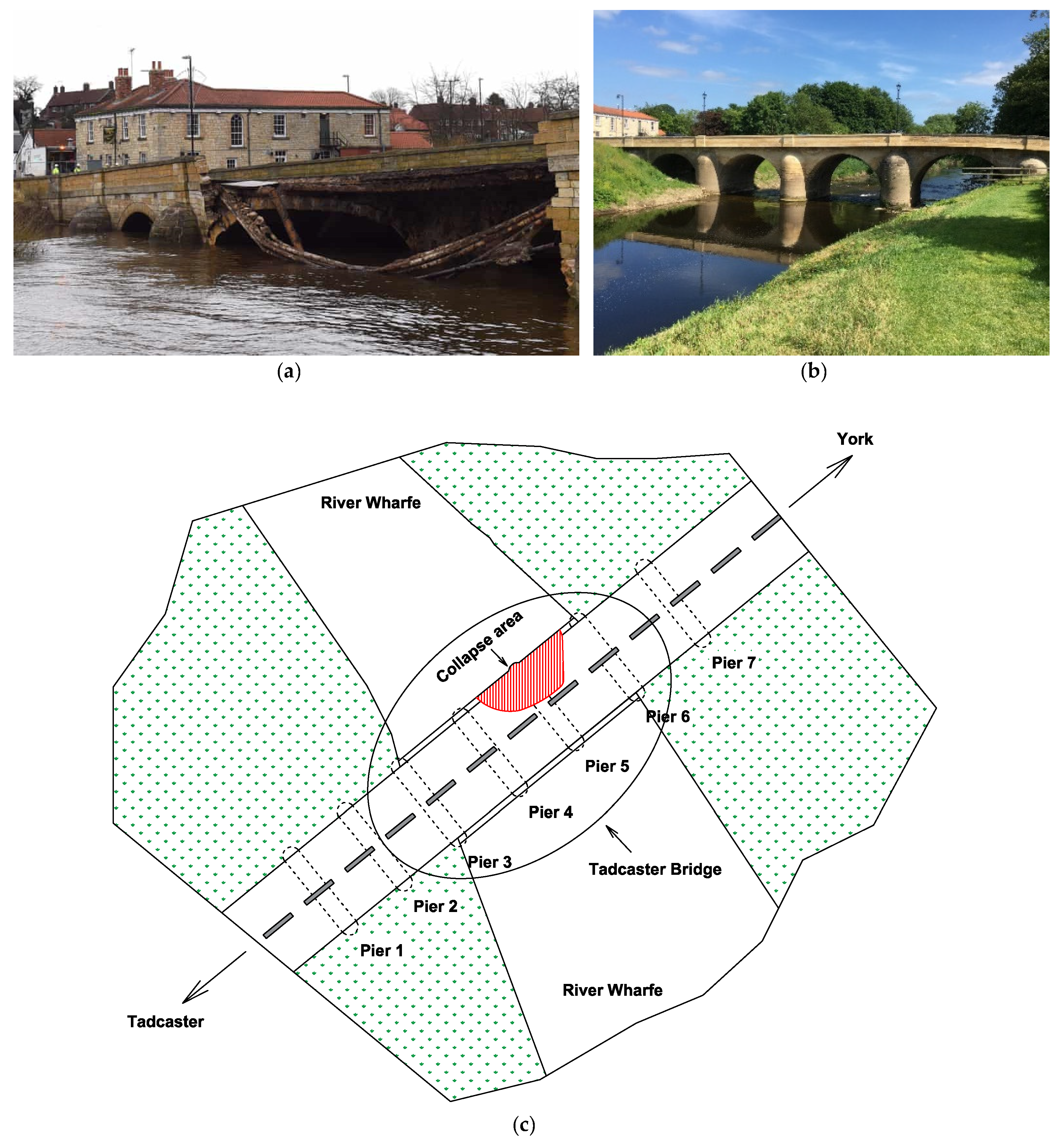

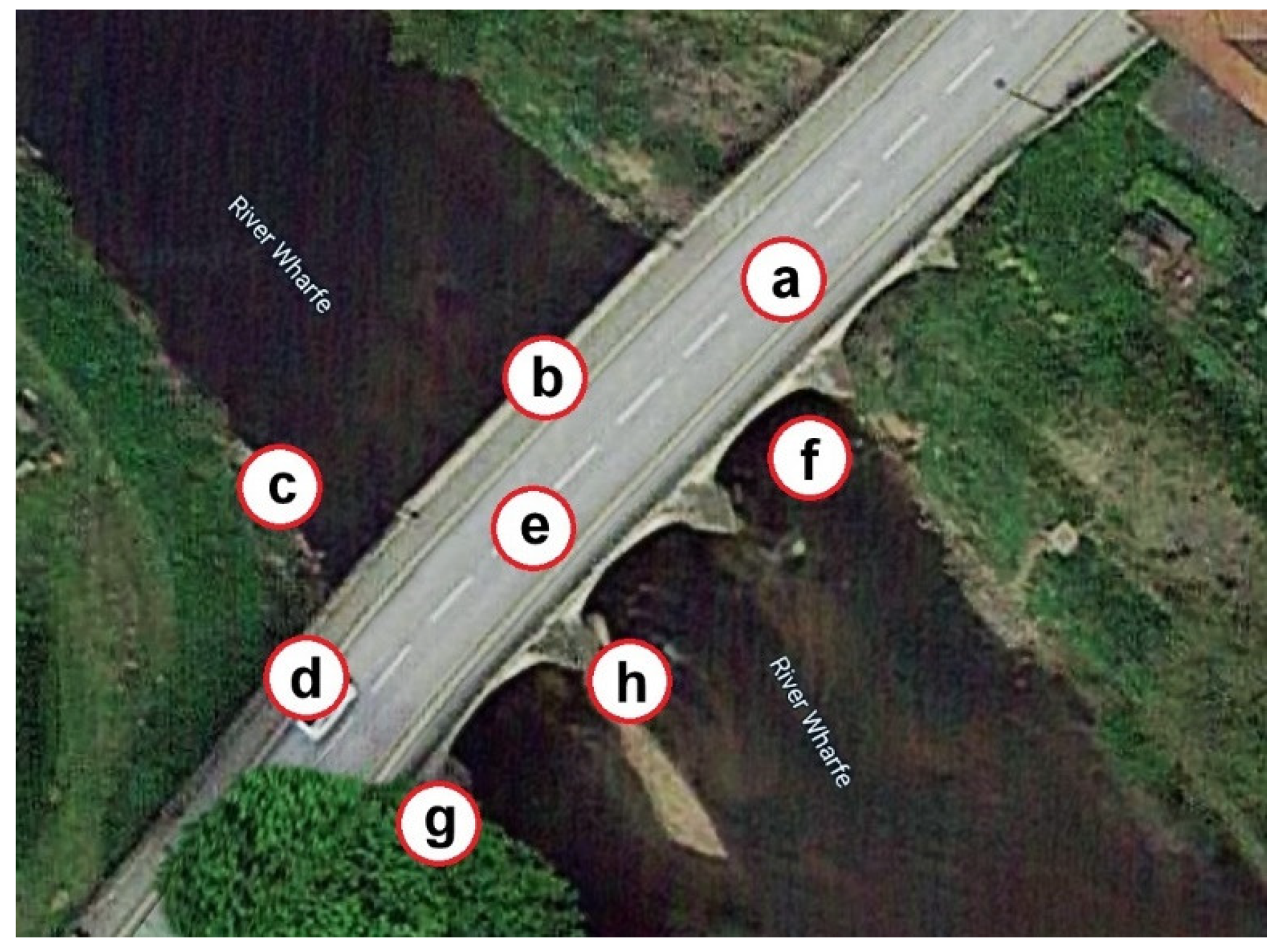

3. Application: The Tadcaster Bridge

3.1. A Brief Description of the Bridge

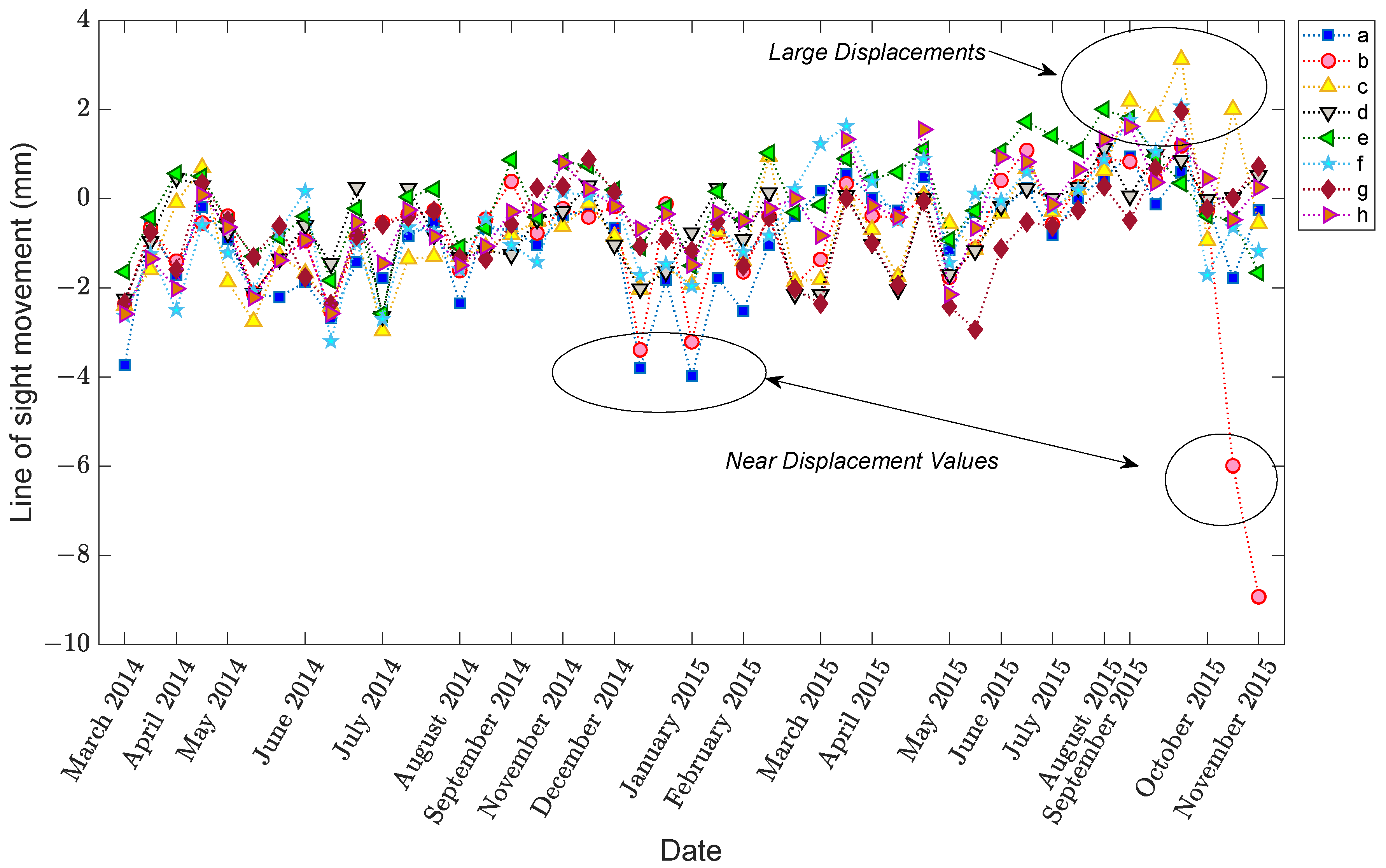

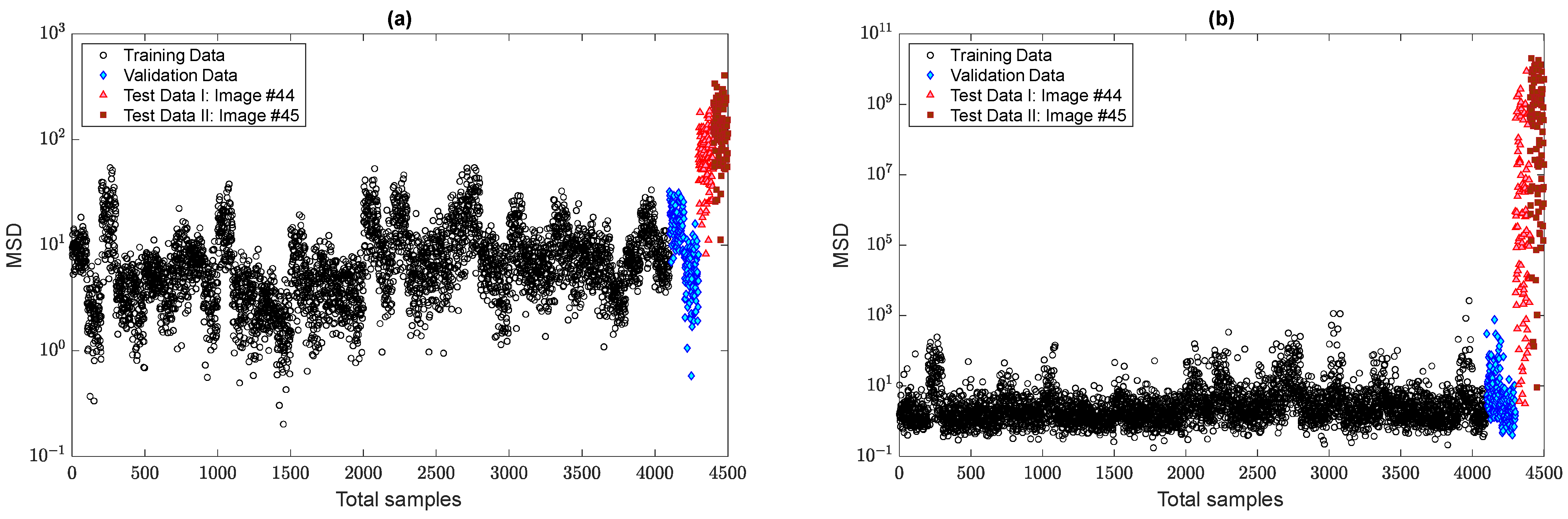

3.2. Data Augmentation and EOV Evaluation

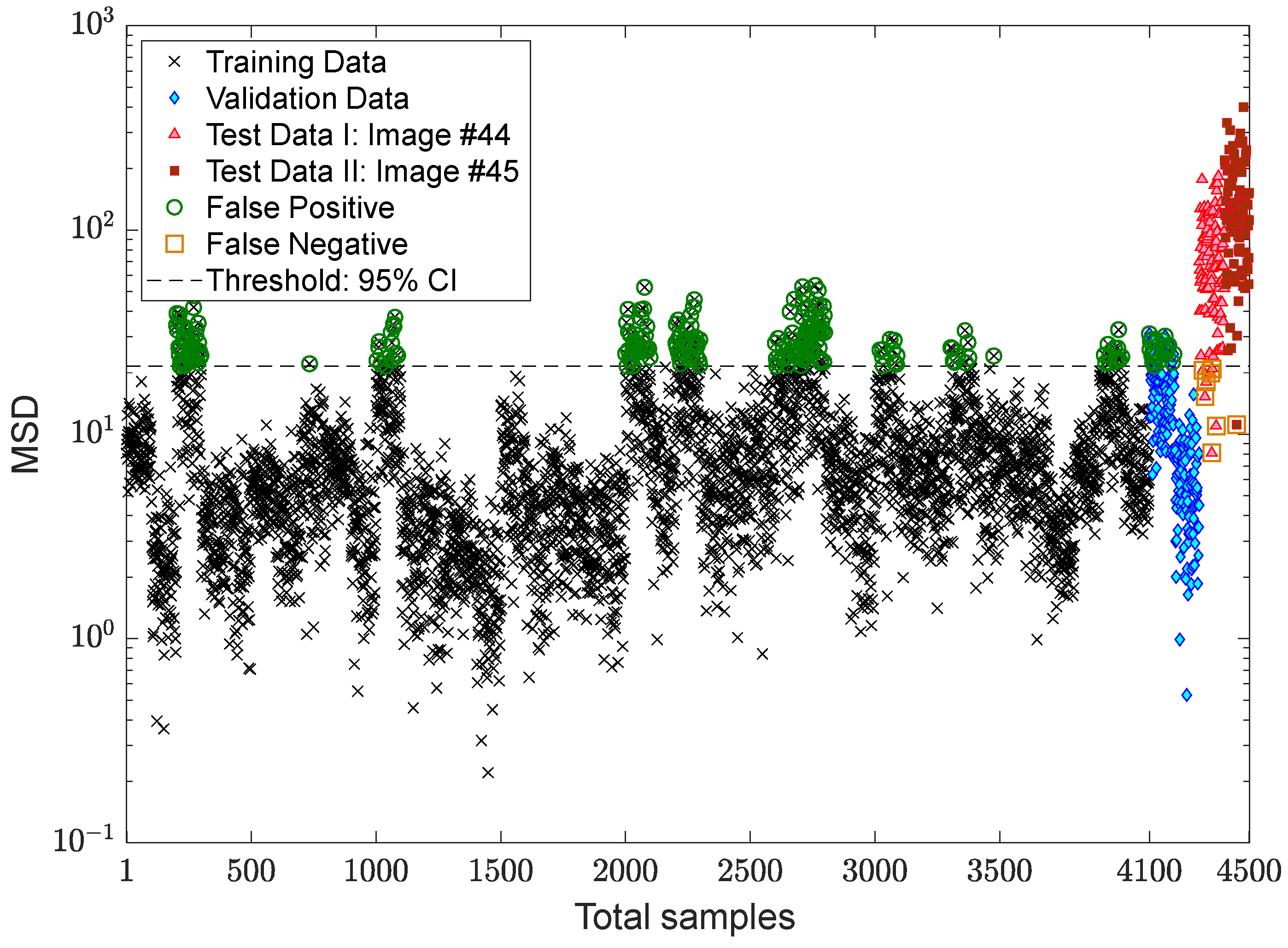

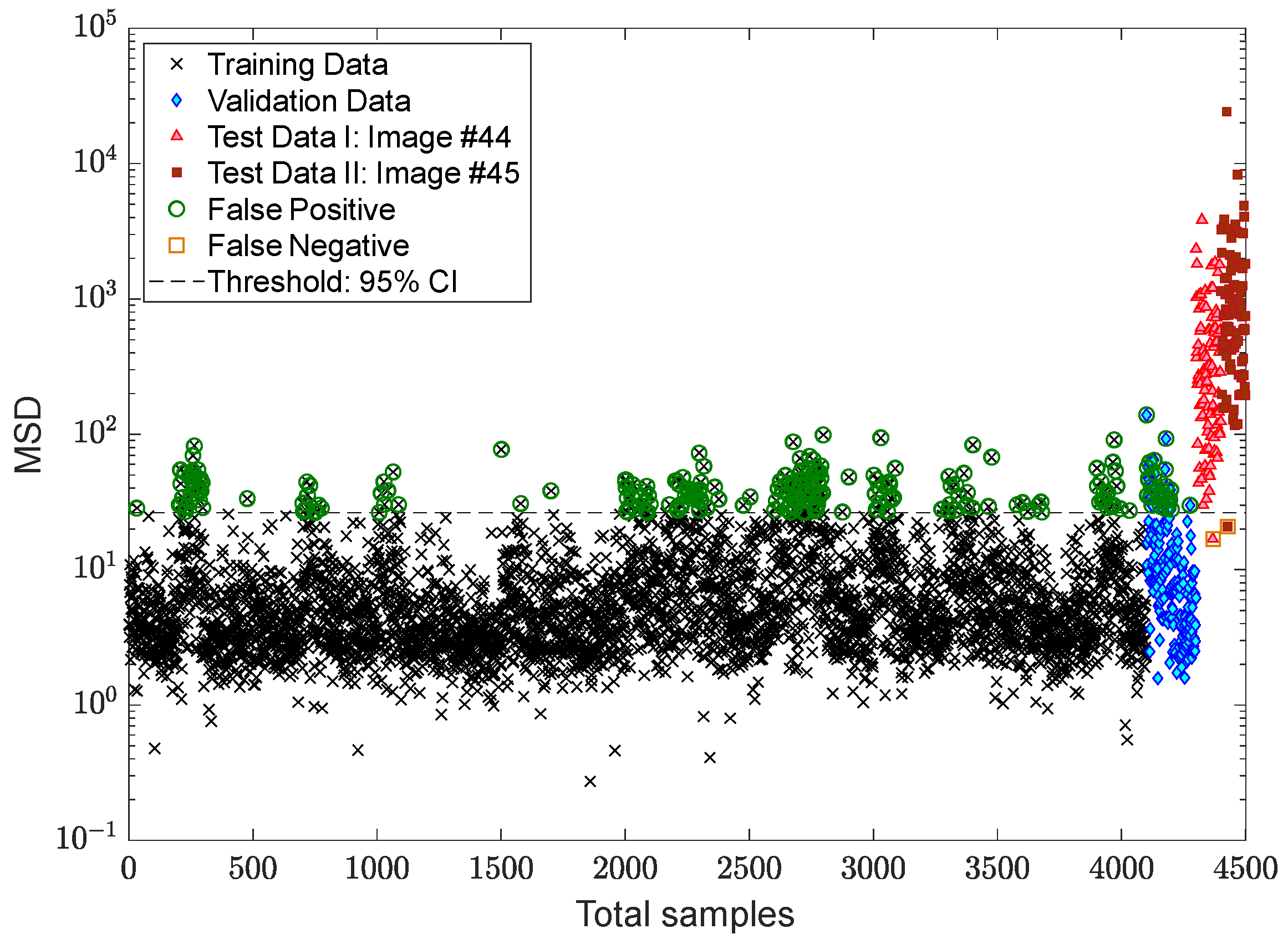

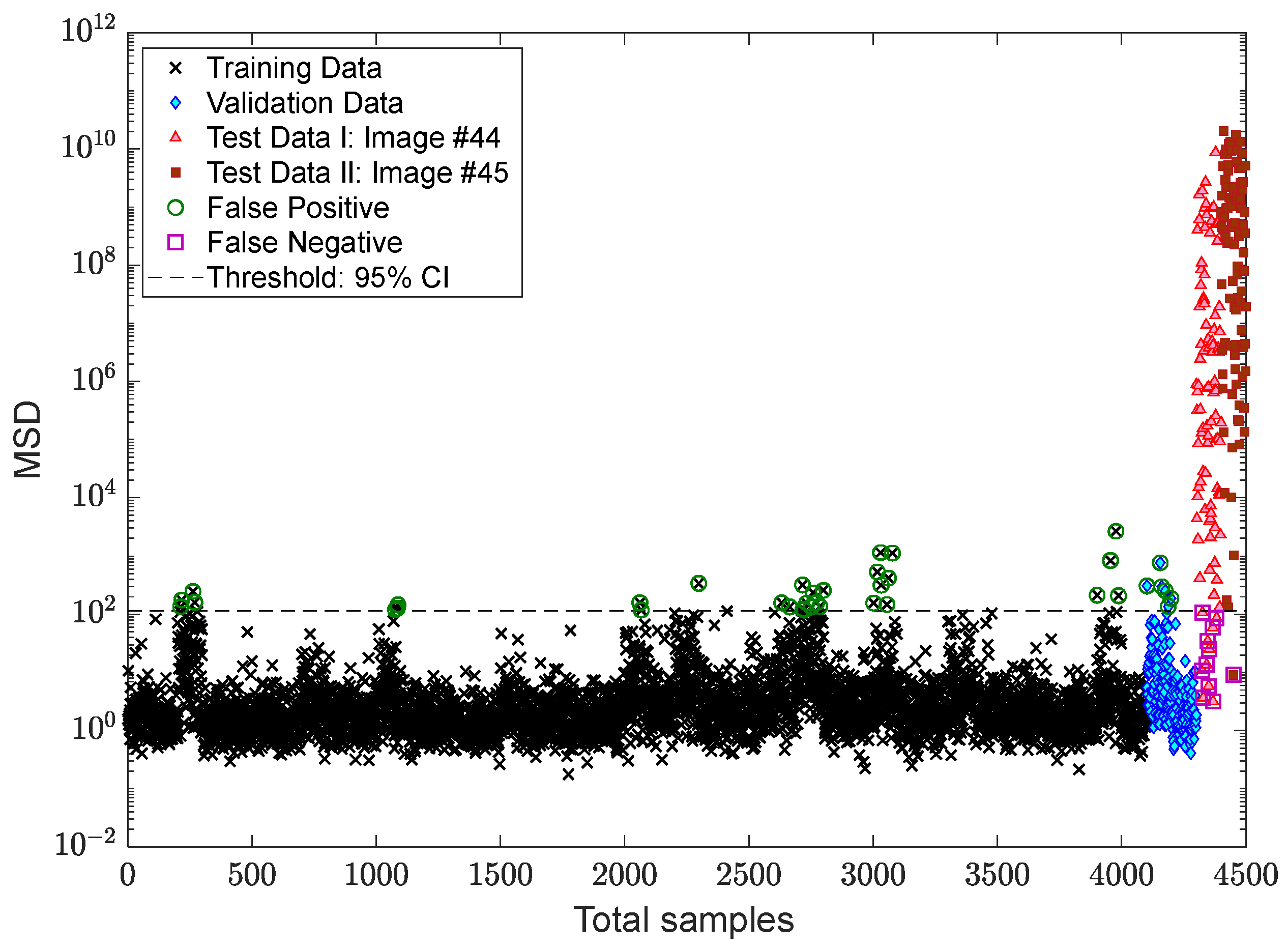

3.3. Verification of the Proposed MCMC-ANN-MSD Method

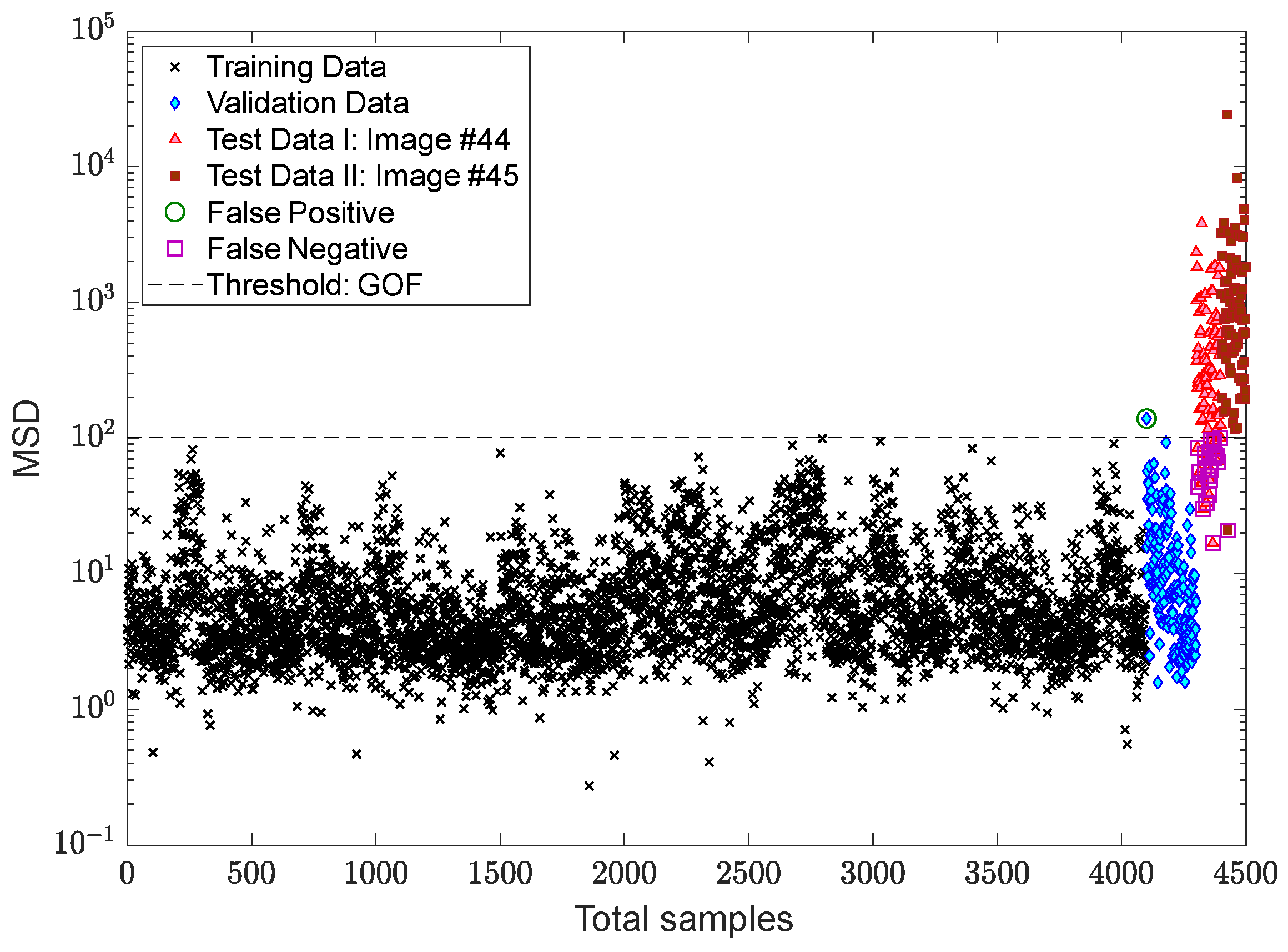

3.4. Verification of the Proposed MCMC-TSL-MSD Method

4. Conclusions

- (1) In general, the measured data or the features (i.e., displacement samples) extracted from raw measurements (i.e., SAR images) in a long-term monitoring strategy contain the effects of the EOV conditions. However, when only a few data/feature samples are available, it is difficult to observe their variations graphically. The proposed strategy for augmenting the small data enabled us to better visualize the variations caused by the EOV conditions and any unknown variability sources.

- (2) The proposed MCMC-ANN-MSD method could handle the variability attributable to the environmental/operational conditions by producing smoother novelty scores. It was observed that the auto-associative neural network significantly reduced the EOV effects, and that the results became unreliable without this tool.

- (3) Because of some false alarms in the novelty scores of the training and validation samples, the MCMC-ANN-MSD method performed better with the EVT-based threshold estimator than with the CLT-based one.

- (4) The proposed MCMC-TSL-MSD method reduced the effects of the EOV conditions more effectively, yielding smaller false positive and false negative rates than the MCMC-ANN-MSD method.

- (5) Owing to its smaller false positive and false negative rates, the MCMC-TSL-MSD method still made accurate decisions with the classical CLT-based threshold estimator.

Author Contributions

Funding

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Entezami, A.; De Michele, C.; Arslan, A.N.; Behkamal, B. Detection of Partially Structural Collapse Using Long-Term Small Displacement Data from Satellite Images. Sensors 2022, 22, 4964. https://doi.org/10.3390/s22134964
