Would Your Clothes Look Good on Me? Towards Transferring Clothing Styles with Adaptive Instance Normalization

Abstract

1. Introduction

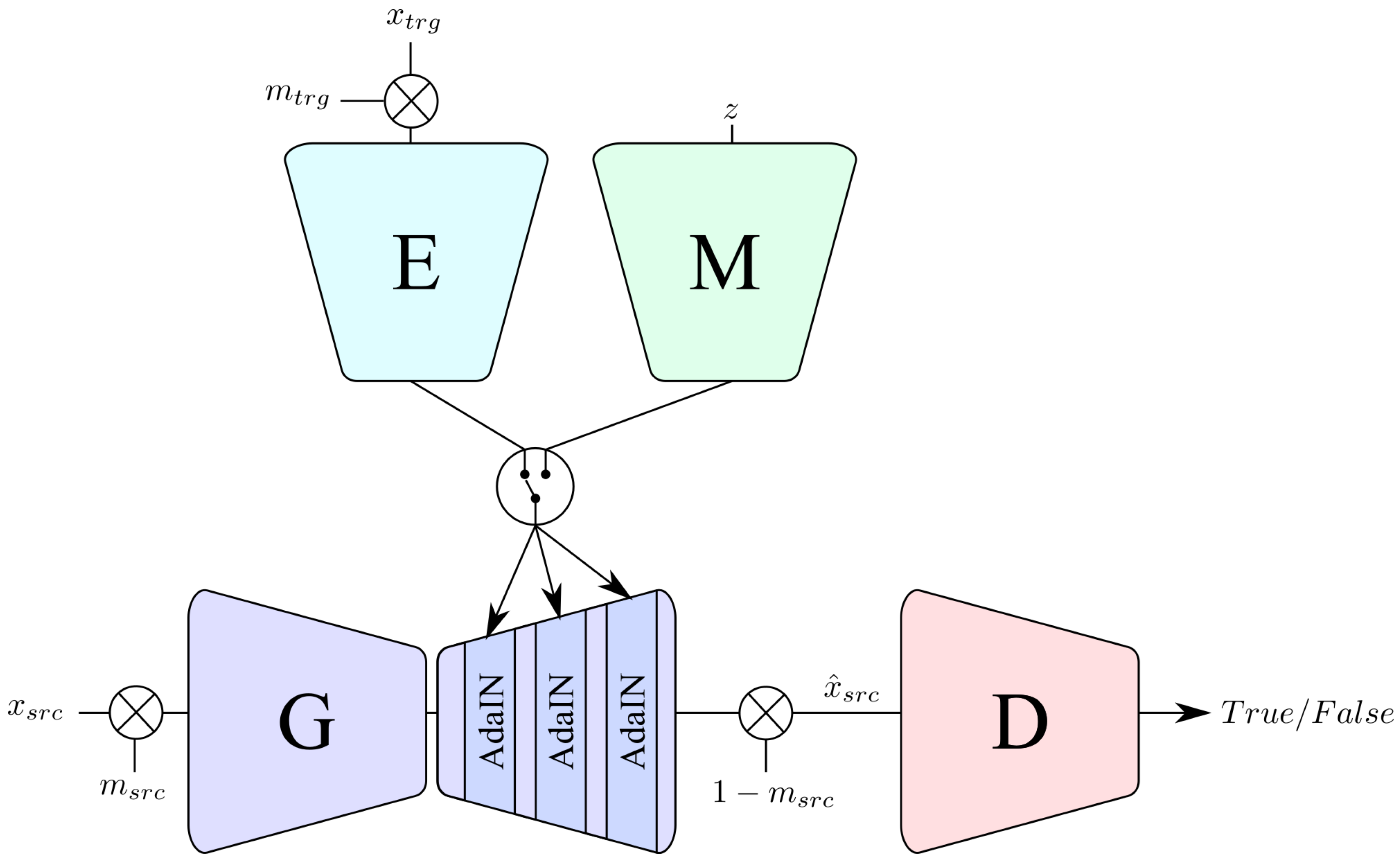

- A system that is able to extract a “clothing style” from a person wearing a set of clothes and transfer it to a source person without additional training steps required during inference;

- The model is trained in an end-to-end manner and employs clothes masks in order to localize the style transfer only to specific areas of the images, leaving the background and the person’s identity untouched;

- Extensive experiments performed on a particularly challenging dataset, i.e., DeepFashion2 [12].
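The mask-based localization named in the second contribution can be illustrated with a simple compositing step. The following is only a minimal NumPy sketch under stated assumptions: the function name `blend_with_mask`, the array layouts, and the idea of blending a stylized image with the source after generation are hypothetical, since this section does not describe how the masks are actually used inside the network.

```python
import numpy as np


def blend_with_mask(source, stylized, mask):
    """Keep stylized pixels inside the clothes mask and source pixels elsewhere.

    source, stylized: float arrays of shape (H, W, 3).
    mask: array of shape (H, W), 1 on clothing pixels and 0 elsewhere.
    All names and shapes are illustrative assumptions, not the paper's code.
    """
    m = mask[..., None].astype(source.dtype)  # broadcast the mask over channels
    return m * stylized + (1.0 - m) * source


# Toy example: a 4x4 image whose central 2x2 block is "clothing".
source = np.zeros((4, 4, 3))
stylized = np.ones((4, 4, 3))
mask = np.zeros((4, 4))
mask[1:3, 1:3] = 1

out = blend_with_mask(source, stylized, mask)
```

In this toy case, only the four masked pixels take the stylized values, while every other pixel (standing in for background and identity) is copied unchanged from the source.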

2. Related Work

- Generative Adversarial Networks. In recent years, Generative Adversarial Networks (GANs) have become the state of the art for generative tasks. They were first introduced by Goodfellow et al. [13] and were quickly adopted for multiple tasks. For instance, in noise-to-image generation, the objective is to generate images starting from a noise vector z sampled from a random distribution. In this field, DCGAN [14] presented the first fully convolutional GAN architecture. In addition, BiGAN [15] introduced an inverse mapping for the generated image, and conditional GANs [16] were introduced in order to control the output of a GAN using additional information. Then, BigGAN [17] proved that GANs could successfully generate high-resolution, diverse samples. Recently, StyleGAN [18] and StyleGANv2 [4] generated style codes starting from the noise distribution and used them to guide the generation process, reaching impressive results. GANs have also been employed to solve several other challenges, such as text-to-image generation [19], sign image generation [20], or removing masks from faces [21]. Another task in which GANs can be used with great success is image-to-image translation, where an image is mapped from a source domain to a target domain. This can be performed in either a paired [22] or an unpaired [23] way. In addition, StarGAN [24] proposed a solution in order to use a single generator even when transferring multiple attributes. However, none of these works can be adapted to perform arbitrary style transfer in fashion, as they do not employ style codes as part of the generation process and focus more on performing manipulation over a small set of domains. Finally, with the introduction of Adaptive Instance Normalization [10], several GAN architectures were proposed to perform domain transfer. Some examples are MUNIT [25], FUNIT [26], and StarGANv2 [11].
In particular, StarGANv2 is able to generate images starting from a style that is either extracted from an image or generated from a latent code, and it represents the main baseline for this work. Nevertheless, these methods are not specifically designed for fashion style transfer, as they also apply changes to the shape of the input image. For this reason, in this paper, we chose to adapt the StarGANv2 architecture to perform this new task.

- Neural Style Transfer. Style transfer has the objective of transferring the style of a target image onto a source image, leaving its content untouched. Gatys et al. [27] were the first to use a convolutional neural network to tackle this task. Then, Refs. [28,29] managed to solve the optimization problem proposed by Gatys et al. in real time. Since then, several other works were proposed [30,31,32,33,34,35]. Then, Chen and Schmidt [36] were able to perform arbitrary style transfer, but their proposed method was very slow. None of these methods were tested with fashion images, and they were conceived for transferring styles typically from a painting to a picture; therefore, they cannot be used in this work. On the other hand, for this paper, the fundamental step is represented by the introduction of Adaptive Instance Normalization (AdaIN) [10], which, for the first time, made it possible to perform fast arbitrary style transfer in real time without being limited to a specific set of styles as in previous works.

- Deep Learning for Fashion. In the fashion industry, deep learning can be used in several applications. Firstly, the task of classifying clothing fashion styles was explored in several works [1,37,38]. Another fundamental topic is clothing retrieval [2,39,40,41], which can be used to find specific clothes in an online shop using a picture taken in the wild. In addition to that, recommendation systems are also of great interest in order to suggest particular clothes based on the user’s preferences [3,42,43,44,45,46,47]. The task that is most relevant to this paper is that of synthesizing clothes images. This can be achieved by selecting a clothes image and generating an image of a person wearing the same clothes [5,48]. In addition, some works generated clothes starting from segmentation maps [49] or text [6]. Finally, virtual try-on systems have the objective of altering the clothes worn by a single person. Firstly, CAGAN [50] performed automatic swapping of clothing on fashion model photos using a cycle-consistency loss. Next, VITON [7] generated a coarse synthesized image with the target clothing item overlaid on the same person in the same pose; the initial blurry clothing area was then enhanced with a refinement network. In addition, Kim et al. [8] performed the try-on by disentangling the geometry and style of the target and source image, respectively. Furthermore, Jiang et al. [9,51] used a spatial mask to restrict different styles to different areas of a garment. Finally, Lewis et al. [52] employed a pose-conditioned StyleGAN2 architecture and a layered latent space interpolation method.
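For reference, the AdaIN operation of [10], cited in the Neural Style Transfer paragraph above, aligns the per-channel mean and standard deviation of content features x with those of style features y: AdaIN(x, y) = σ(y) (x − μ(x)) / σ(x) + μ(y). The following is a minimal NumPy sketch of that formula only. The function name `adain`, the small `eps` term added for numerical stability, and the toy tensor shapes are illustrative assumptions, and the sketch does not reproduce how the proposed system obtains its style statistics.

```python
import numpy as np


def adain(content, style, eps=1e-5):
    """Adaptive Instance Normalization on feature maps of shape (C, H, W).

    Each content channel is normalized with its own spatial statistics,
    then rescaled and shifted with the statistics of the matching style channel.
    """
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = content.std(axis=(1, 2), keepdims=True) + eps  # avoid division by zero
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = style.std(axis=(1, 2), keepdims=True)
    return s_std * (content - c_mean) / c_std + s_mean


# Toy feature maps with clearly different statistics.
rng = np.random.default_rng(0)
content = rng.normal(0.0, 1.0, size=(3, 8, 8))
style = rng.normal(5.0, 2.0, size=(3, 8, 8))

out = adain(content, style)
```

After the call, each channel of `out` has (up to the `eps` term) the mean and standard deviation of the corresponding `style` channel while keeping the spatial layout of `content`. Because the statistics are computed on the fly, no per-style training is needed.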

3. Proposed System

3.1. Network Architecture

3.1.1. Style Encoder Architecture

3.1.2. Transferring a Style with Adaptive Instance Normalization

3.2. Training

4. Results and Discussion

4.1. Dataset

4.2. Network Configuration and Parameters

4.3. Experiments

4.4. Ablation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ma, Y.; Jia, J.; Zhou, S.; Fu, J.; Liu, Y.; Tong, Z. Towards better understanding the clothing fashion styles: A multimodal deep learning approach. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Jiang, S.; Wu, Y.; Fu, Y. Deep bi-directional cross-triplet embedding for cross-domain clothing retrieval. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 52–56.

- Li, X.; Wang, X.; He, X.; Chen, L.; Xiao, J.; Chua, T.S. Hierarchical fashion graph network for personalized outfit recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 25–30 July 2020; pp. 159–168.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8110–8119.

- Liu, Y.; Chen, W.; Liu, L.; Lew, M.S. Swapgan: A multistage generative approach for person-to-person fashion style transfer. IEEE Trans. Multimed. 2019, 21, 2209–2222.

- Zhu, S.; Urtasun, R.; Fidler, S.; Lin, D.; Change Loy, C. Be your own prada: Fashion synthesis with structural coherence. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1680–1688.

- Han, X.; Wu, Z.; Wu, Z.; Yu, R.; Davis, L.S. VITON: An Image-based Virtual Try-on Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Kim, B.K.; Kim, G.; Lee, S.Y. Style-Controlled Synthesis of Clothing Segments for Fashion Image Manipulation. IEEE Trans. Multimed. 2020, 22, 298–310.

- Jiang, S.; Li, J.; Fu, Y. Deep Learning for Fashion Style Generation. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–13.

- Huang, X.; Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1501–1510.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.W. Stargan v2: Diverse image synthesis for multiple domains. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8188–8197.

- Ge, Y.; Zhang, R.; Wang, X.; Tang, X.; Luo, P. Deepfashion2: A versatile benchmark for detection, pose estimation, segmentation and re-identification of clothing images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5337–5345.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv 2015, arXiv:1511.06434.

- Donahue, J.; Krähenbühl, P.; Darrell, T. Adversarial feature learning. arXiv 2016, arXiv:1605.09782.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Brock, A.; Donahue, J.; Simonyan, K. Large scale GAN training for high fidelity natural image synthesis. arXiv 2018, arXiv:1809.11096.

- Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

- Zhang, H.; Xu, T.; Li, H.; Zhang, S.; Wang, X.; Huang, X.; Metaxas, D.N. Stackgan++: Realistic image synthesis with stacked generative adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1947–1962.

- Dewi, C.; Chen, R.C.; Liu, Y.T.; Yu, H. Various generative adversarial networks model for synthetic prohibitory sign image generation. Appl. Sci. 2021, 11, 2913.

- Din, N.U.; Javed, K.; Bae, S.; Yi, J. A novel GAN-based network for unmasking of masked face. IEEE Access 2020, 8, 44276–44287.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Choi, Y.; Choi, M.; Kim, M.; Ha, J.W.; Kim, S.; Choo, J. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8789–8797.

- Huang, X.; Liu, M.Y.; Belongie, S.; Kautz, J. Multimodal unsupervised image-to-image translation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 172–189.

- Liu, M.Y.; Huang, X.; Mallya, A.; Karras, T.; Aila, T.; Lehtinen, J.; Kautz, J. Few-shot unsupervised image-to-image translation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 10551–10560.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 2414–2423.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 694–711.

- Ulyanov, D.; Lebedev, V.; Vedaldi, A.; Lempitsky, V.S. Texture networks: Feed-forward synthesis of textures and stylized images. In Proceedings of the International Conference on Machine Learning (ICML), New York City, NY, USA, 19–24 June 2016; Volume 1, p. 4.

- Li, C.; Wand, M. Combining markov random fields and convolutional neural networks for image synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 2479–2486.

- Dumoulin, V.; Shlens, J.; Kudlur, M. A learned representation for artistic style. arXiv 2016, arXiv:1610.07629.

- Gatys, L.A.; Ecker, A.S.; Bethge, M.; Hertzmann, A.; Shechtman, E. Controlling perceptual factors in neural style transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3985–3993.

- Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; Yang, M.H. Universal style transfer via feature transforms. Adv. Neural Inf. Process. Syst. 2017, 30, 385–395.

- Li, Y.; Wang, N.; Liu, J.; Hou, X. Demystifying neural style transfer. arXiv 2017, arXiv:1701.01036.

- Zhang, Y.; Fang, C.; Wang, Y.; Wang, Z.; Lin, Z.; Fu, Y.; Yang, J. Multimodal style transfer via graph cuts. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5943–5951.

- Chen, T.Q.; Schmidt, M. Fast patch-based style transfer of arbitrary style. arXiv 2016, arXiv:1612.04337.

- Kiapour, M.H.; Yamaguchi, K.; Berg, A.C.; Berg, T.L. Hipster wars: Discovering elements of fashion styles. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 472–488.

- Jiang, S.; Shao, M.; Jia, C.; Fu, Y. Learning consensus representation for weak style classification. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 2906–2919.

- Hadi Kiapour, M.; Han, X.; Lazebnik, S.; Berg, A.C.; Berg, T.L. Where to buy it: Matching street clothing photos in online shops. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3343–3351.

- Huang, J.; Feris, R.S.; Chen, Q.; Yan, S. Cross-domain image retrieval with a dual attribute-aware ranking network. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1062–1070.

- Liu, Z.; Luo, P.; Qiu, S.; Wang, X.; Tang, X. Deepfashion: Powering robust clothes recognition and retrieval with rich annotations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 1096–1104.

- Fu, J.; Liu, Y.; Jia, J.; Ma, Y.; Meng, F.; Huang, H. A virtual personal fashion consultant: Learning from the personal preference of fashion. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Jiang, S.; Wu, Y.; Fu, Y. Deep bidirectional cross-triplet embedding for online clothing shopping. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2018, 14, 1–22.

- Yang, X.; Ma, Y.; Liao, L.; Wang, M.; Chua, T.S. Transnfcm: Translation-based neural fashion compatibility modeling. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 403–410.

- Becattini, F.; Song, X.; Baecchi, C.; Fang, S.T.; Ferrari, C.; Nie, L.; Del Bimbo, A. PLM-IPE: A Pixel-Landmark Mutual Enhanced Framework for Implicit Preference Estimation. In ACM Multimedia Asia; Association for Computing Machinery: New York, NY, USA, 2021; Article 42; pp. 1–5.

- De Divitiis, L.; Becattini, F.; Baecchi, C.; Bimbo, A.D. Disentangling Features for Fashion Recommendation. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2022.

- Divitiis, L.D.; Becattini, F.; Baecchi, C.; Bimbo, A.D. Garment recommendation with memory augmented neural networks. In Proceedings of the International Conference on Pattern Recognition, Virtual, 10–15 January 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 282–295.

- Yoo, D.; Kim, N.; Park, S.; Paek, A.S.; Kweon, I.S. Pixel-level domain transfer. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 517–532.

- Lassner, C.; Pons-Moll, G.; Gehler, P.V. A generative model of people in clothing. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 853–862.

- Jetchev, N.; Bergmann, U. The conditional analogy gan: Swapping fashion articles on people images. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2287–2292.

- Raffiee, A.H.; Sollami, M. Garmentgan: Photo-realistic adversarial fashion transfer. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 3923–3930.

- Lewis, K.M.; Varadharajan, S.; Kemelmacher-Shlizerman, I. Tryongan: Body-aware try-on via layered interpolation. ACM Trans. Graph. (TOG) 2021, 40, 1–10.

- Li, P.; Xu, Y.; Wei, Y.; Yang, Y. Self-correction for human parsing. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 3260–3271.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. Gans trained by a two time-scale update rule converge to a local nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 6629–6640.

| Training Parameters | |
|---|---|
| Style dimension | 512 |
| | 1 |
| | 1 |
| | 1 |
| | 10 |

| Area | Method | LPIPS ↑ |
|---|---|---|
| All Image | StarGANv2 [11] | 0.524 |
| All Image | Ours | 0.551 |
| Clothes Area | StarGANv2 [11] | 0.162 |
| Clothes Area | Ours | 0.267 |
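To put the LPIPS values above in perspective, the gap between the two methods can be expressed as a relative gain over the StarGANv2 baseline. The short sketch below only does arithmetic on the four reported numbers; the helper name `relative_gain` and the dictionary layout are illustrative assumptions.

```python
# LPIPS scores copied from the comparison table (higher is better).
lpips_scores = {
    "All Image": {"StarGANv2": 0.524, "Ours": 0.551},
    "Clothes Area": {"StarGANv2": 0.162, "Ours": 0.267},
}


def relative_gain(baseline, ours):
    """Percentage improvement of `ours` over `baseline`."""
    return (ours - baseline) / baseline * 100.0


gains = {
    area: relative_gain(scores["StarGANv2"], scores["Ours"])
    for area, scores in lpips_scores.items()
}

for area, gain in gains.items():
    print(f"{area}: {gain:+.1f}%")
```

Under this reading, the gain is about 5% on the whole image and about 65% when LPIPS is restricted to the clothes area.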

| Configuration | LPIPS ↑ |
|---|---|
| Ours | 0.551 |
| StarGANv2 Encoder | 0.540 |
| no | 0.542 |
| no | 0.539 |
| = 1 | 0.545 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fontanini, T.; Ferrari, C. Would Your Clothes Look Good on Me? Towards Transferring Clothing Styles with Adaptive Instance Normalization. Sensors 2022, 22, 5002. https://doi.org/10.3390/s22135002
