A Novel Method for Improved Network Traffic Prediction Using Enhanced Deep Reinforcement Learning Algorithm

Abstract

1. Introduction

2. Related Works

The Applications of Network Traffic Analysis

3. Materials and Methods

3.1. The EDRL Algorithm

3.2. Dataset Pre-Processing

3.3. Feature Engineering

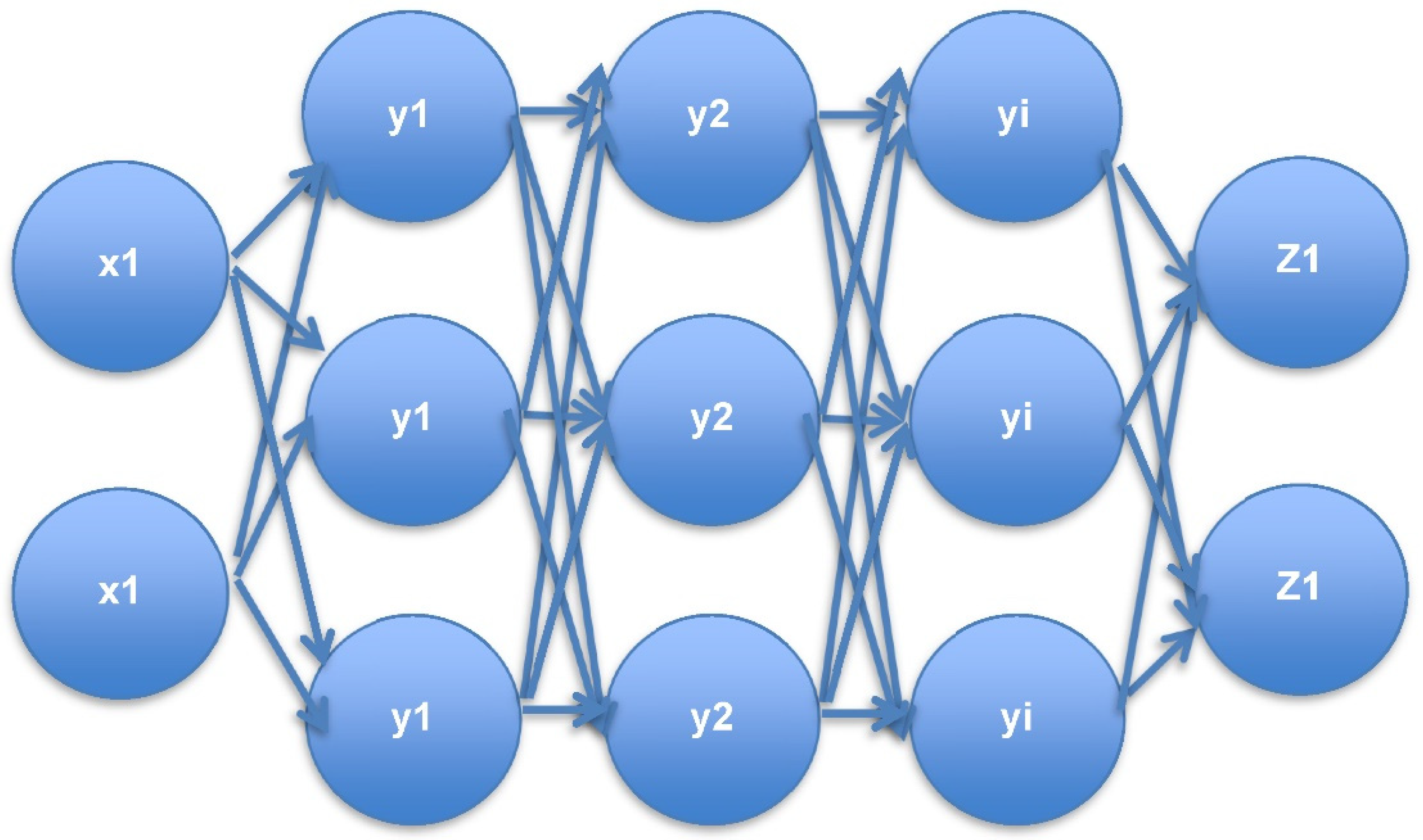

3.4. The DNN Multi-Layer Perceptron Method

3.5. Monte Carlo Learning for Network Traffic Analysis

| Algorithm 1: Monte Carlo Learning for Network Traffic Analysis |
|---|
| **Pre-requisite:** pre-processed dataset $X$; number of iterations |
| **Assure:** $\max R(X, A_i)$ |
| $X = (X - X_{\min}) / (X_{\max} - X_{\min})$ |
| QL = Xmax − Xmin*Xmax+1 − Xmin+1 |
| $R_i(S_i, S_{i+1}) = \max(1, R_i(S_i, S_{i+1}))$ |
| $F = \{f_1, f_2, f_3, \ldots, f_7\}$ |
| $C = \{c_1, c_2, c_3, \ldots, c_5\}$ |
| **For each** $S_i \in X$ **do** |
| $Q(s_i, a_i) = RF(\max Q(s_{i+1}, a_{i+1})) + R(s_i, a_i)$ |
| $Q(s_i, a_i) = (\Delta - 1)\,Q(s_{i+1}, a_{i+1}) + \Delta\,(R(s_i, a_i) + RF(\max Q(s_{i+1}, a_{i+1})))$ |
| $R_{i+1}(S_i, S_{i+1}) = R_{i+1}(S_i, S_{i+1}) + 1$ |
| **End for** |
| $\max R(X, A_i)$ |
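Algorithm 1 combines min–max normalization of the dataset with a Q-value update inside the loop. The Python sketch below shows both steps. The listing does not define Δ or RF, so the sketch assumes that Δ is a learning rate and RF is a discount factor. The update uses the textbook tabular Q-learning form, which weights the *current* estimate Q(s_i, a_i) by (1 − Δ). The listing instead writes (Δ − 1)Q(s_{i+1}, a_{i+1}). Read this as an illustration of the standard technique, not a reproduction of the authors' code. The names `min_max_normalize`, `q_update` and `QTable` are hypothetical.

```python
from typing import Dict, Hashable, List, Sequence, Tuple

# Hypothetical Q-table type: maps (state, action) pairs to estimated values.
QTable = Dict[Tuple[Hashable, Hashable], float]


def min_max_normalize(values: Sequence[float]) -> List[float]:
    """Scale values into [0, 1] with X = (X - Xmin) / (Xmax - Xmin)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: there is no spread to scale, so map to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def q_update(
    q: QTable,
    state: Hashable,
    action: Hashable,
    reward: float,
    next_state: Hashable,
    actions: Sequence[Hashable],
    delta: float = 0.1,  # assumed: learning rate (the listing's Δ)
    rf: float = 0.9,     # assumed: discount factor (the listing's RF)
) -> float:
    """One textbook tabular Q-learning step (not necessarily the authors' rule):

    Q(s, a) <- (1 - delta) * Q(s, a) + delta * (reward + rf * max_a' Q(s', a'))

    State-action pairs not yet in the table default to 0.0.
    """
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    new = (1 - delta) * old + delta * (reward + rf * best_next)
    q[(state, action)] = new
    return new
```

For example, `min_max_normalize([10, 20, 30])` returns `[0.0, 0.5, 1.0]`. Starting from an empty table, `q_update(q, "s0", "c1", 1.0, "s1", ["c1", "c2"], delta=0.5)` stores and returns `0.5`. Here the traffic classes c1–c5 serve as the action set, which is also an assumption.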

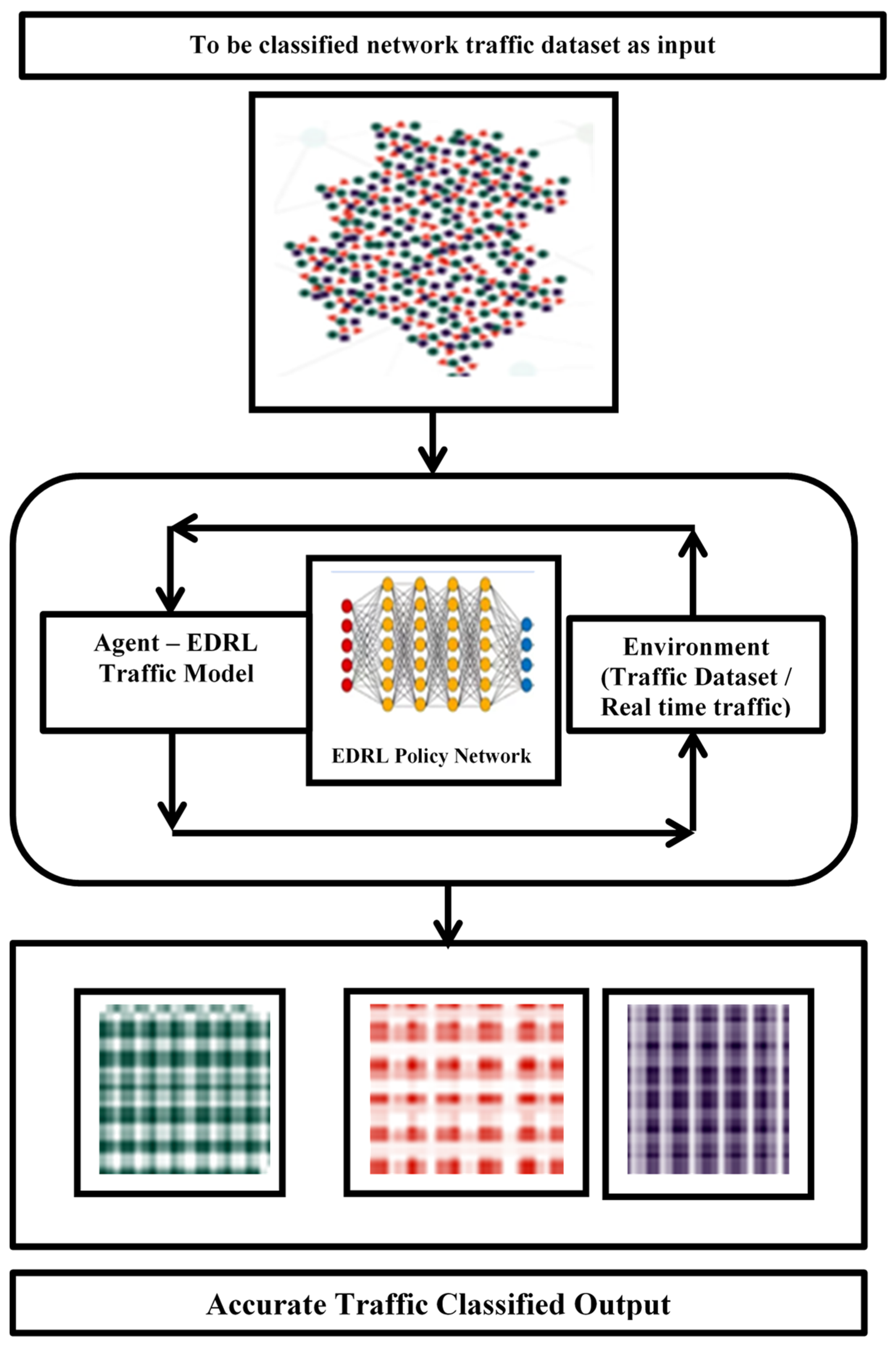

3.6. Agent—EDRL Traffic Model

| Algorithm 2: EDRL Algorithm for Network Traffic Prediction |
|---|
| **Pre-requisite:** pre-processed network traffic data from the dataset |
| **Assure:** max(precision), max(accuracy), min(false positives), min(false negatives) |
| QL = Xmax − Xmin*Xmax+1 − Xmin+1 |
| Call the feature engineering function |
| Call the DNN multilayer perceptron method |
| Call Algorithm 1 (Monte Carlo learning for network traffic analysis) |
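Algorithm 2 optimizes four quantities: accuracy, precision, false positives and false negatives. The body of Section 3.7 is not available here, so the sketch below uses the standard definitions:

- accuracy = (TP + TN) / total
- precision = TP / (TP + FP)
- false-positive rate = FP / (FP + TN)
- false-negative rate = FN / (FN + TP)

With five traffic classes (c1–c5), the sketch assumes the counts are taken one-vs-rest for each class. `ConfusionCounts` and `counts_for_class` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Hashable, Sequence


def _safe_div(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a denominator is empty
    # (e.g. a class that is never predicted).
    return num / den if den else 0.0


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # true positives
    tn: int  # true negatives
    fp: int  # false positives
    fn: int  # false negatives

    def accuracy(self) -> float:
        return _safe_div(self.tp + self.tn, self.tp + self.tn + self.fp + self.fn)

    def precision(self) -> float:
        return _safe_div(self.tp, self.tp + self.fp)

    def false_positive_rate(self) -> float:
        return _safe_div(self.fp, self.fp + self.tn)

    def false_negative_rate(self) -> float:
        return _safe_div(self.fn, self.fn + self.tp)


def counts_for_class(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable], positive: Hashable
) -> ConfusionCounts:
    """One-vs-rest confusion counts for a single class label such as "c1"."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return ConfusionCounts(tp, tn, fp, fn)
```

For example, `counts_for_class(["c1", "c1", "c2", "c3"], ["c1", "c2", "c2", "c1"], "c1")` yields one count in each cell, so every metric equals 0.5. Multiply by 100 to express these values as percentages, the form used in the result tables.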

3.7. Accuracy and Precision for Network Traffic Analysis

3.8. Statistical Analysis

4. Numerical Results

4.1. Accuracy Comparison

4.2. Precision Comparison

4.3. False Positive Comparison

4.4. False Negative Comparison

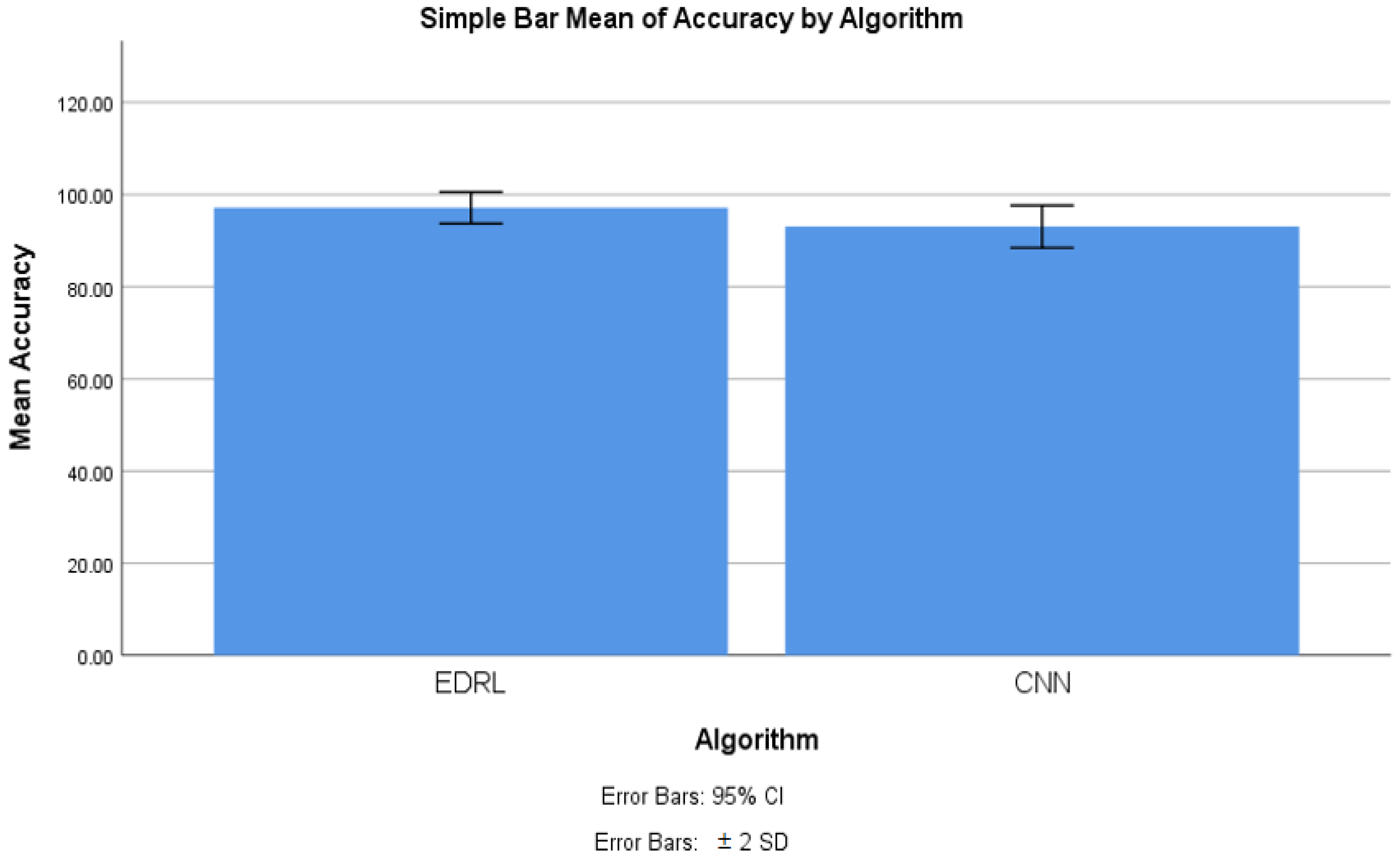

4.5. Accuracy Comparison for EDRL and CNN Algorithms

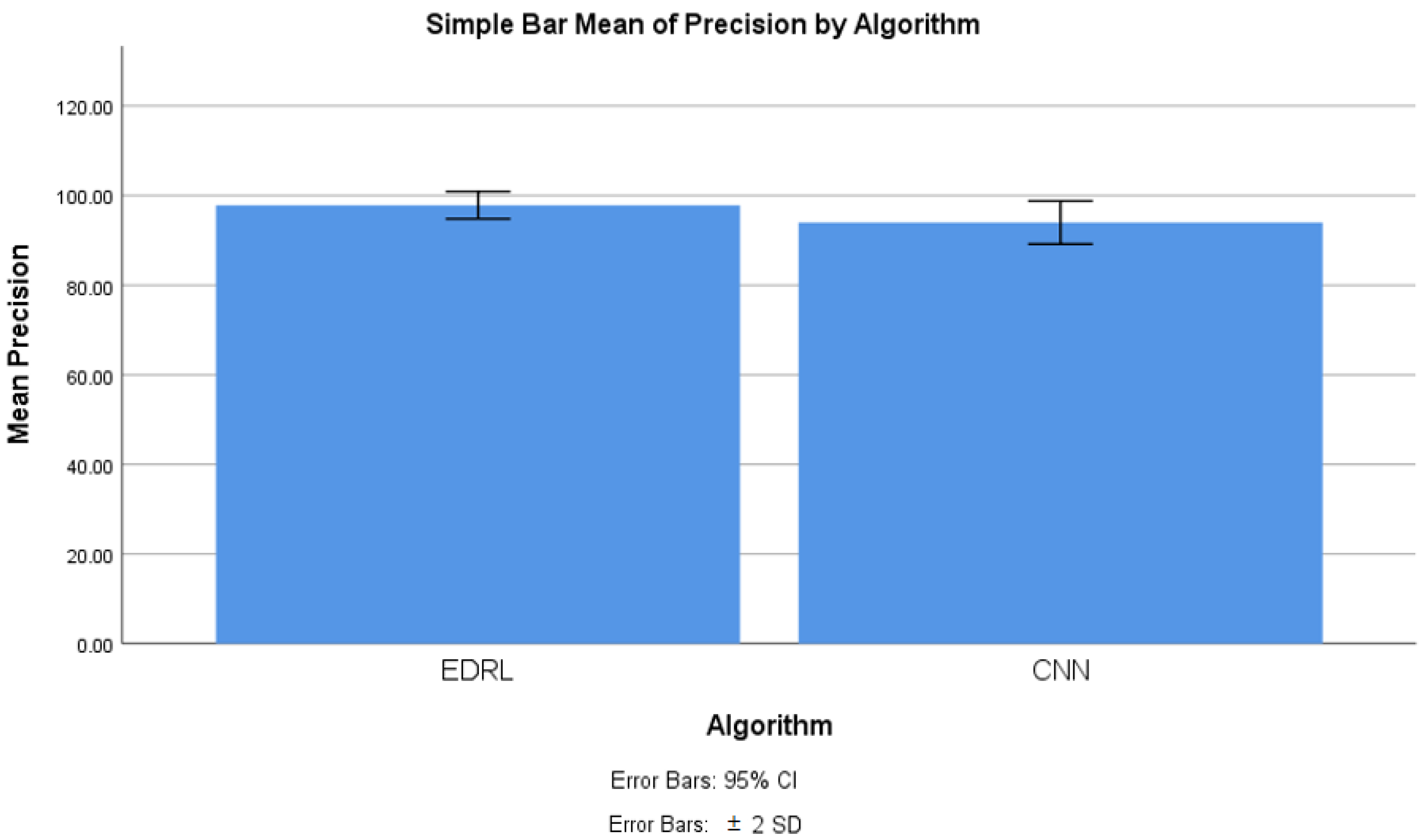

4.6. Precision Comparison for EDRL and CNN Algorithms

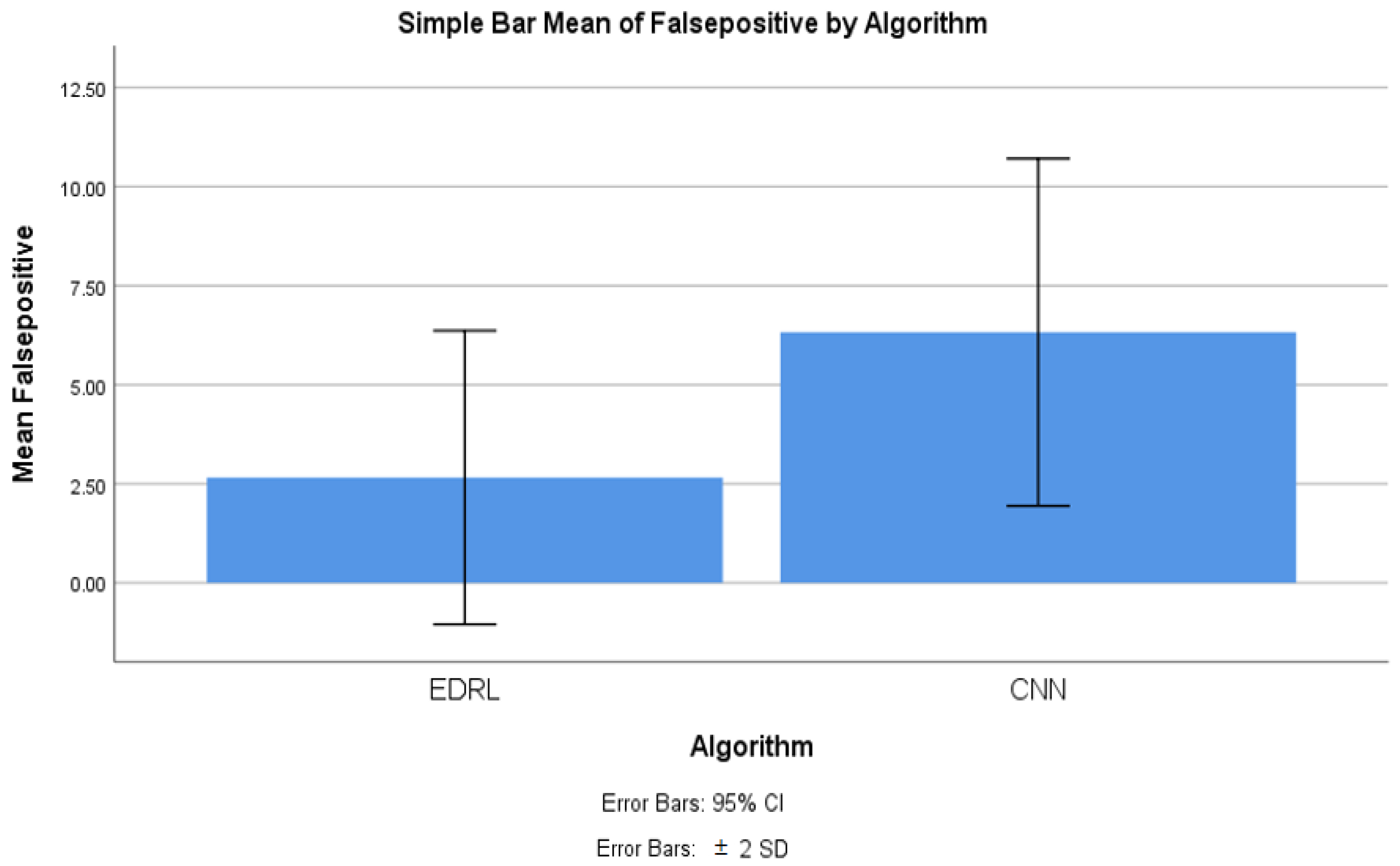

4.7. False Positive Comparison for EDRL and CNN Algorithms

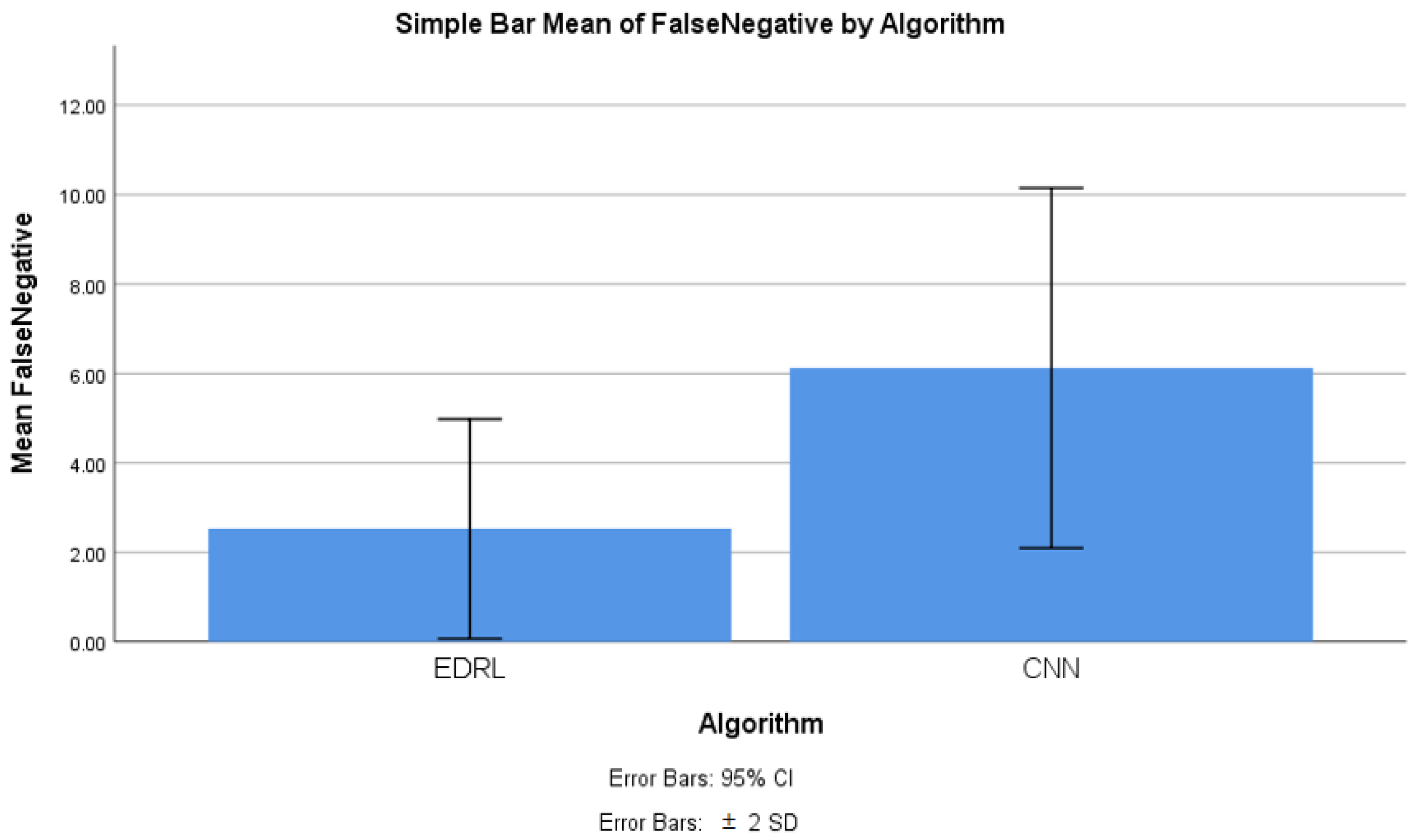

4.8. False Negative Comparison for EDRL and CNN Algorithms

5. Discussion of Work

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Adekitan, A.I.; Abolade, J.; Shobayo, O. Data mining approach for predicting the daily Internet data traffic of a smart university. J. Big Data 2019, 6, 11.
2. Alsharif, M.H.; Younes, M.K.; Kim, J. Time Series ARIMA Model for Prediction of Daily and Monthly Average Global Solar Radiation: The Case Study of Seoul, South Korea. Symmetry 2019, 11, 240.
3. Alsharif, M.H.; Kelechi, A.H.; Yahya, K.; Chaudhry, S.A. Machine Learning Algorithms for Smart Data Analysis in Internet of Things Environment: Taxonomies and Research Trends. Symmetry 2020, 12, 88.
4. Wang, W.; Bai, Y.; Yu, C.; Gu, Y.; Feng, P.; Wang, X.; Wang, R. A network traffic flow prediction with deep learning approach for large-scale metropolitan area network. In Proceedings of the 2018 IEEE/IFIP Network Operations and Management Symposium (NOMS 2018), Taipei, Taiwan, 23–27 April 2018; pp. 1–9.
5. Cortez, P.; Rio, M.; Rocha, M.; Sousa, P. Internet Traffic Forecasting using Neural Networks. In Proceedings of the 2006 IEEE International Joint Conference on Neural Network Proceedings, Vancouver, BC, Canada, 16–21 July 2006; pp. 2635–2642.
6. Narejo, S.; Pasero, E. An Application of Internet Traffic Prediction with Deep Neural Network. In Multidisciplinary Approaches to Neural Computing; Esposito, A., Faundez-Zanuy, M., Morabito, F., Pasero, E., Eds.; Smart Innovation, Systems and Technologies; Springer: Cham, Switzerland, 2018; Volume 69.
7. Vinayakumar, R.; Soman, K.P.; Poornachandran, P. Applying deep learning approaches for network traffic prediction. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Udupi, India, 13–16 September 2017; pp. 2353–2358.
8. Park, J.; Yi, D.; Ji, S. Analysis of Recurrent Neural Network and Predictions. Symmetry 2020, 12, 615.
9. Oliveira, T.P.; Barbar, J.S.; Soares, A.S. Computer network traffic prediction: A comparison between traditional and deep learning neural networks. Int. J. Big Data Intell. 2016, 3, 28.
10. Yoo, W.; Sim, A. Network bandwidth utilization forecast model on high bandwidth networks. In Proceedings of the 2015 International Conference on Computing, Networking and Communications (ICNC), Garden Grove, CA, USA, 16–19 February 2015; pp. 494–498.
11. Chen, A.; Law, J.; Aibin, M. A Survey on Traffic Prediction Techniques Using Artificial Intelligence for Communication Networks. Telecom 2021, 2, 518–535.
12. Rezaei, S.; Liu, X. Multitask learning for network traffic classification. In Proceedings of the International Conference on Computer Communications and Networks (ICCCN), Honolulu, HI, USA, 3–6 August 2020; pp. 1–9.
13. Lotfollahi, M.; Zade, R.S.H.; Siavoshani, M.J.; Saberian, M. Deep packet: A novel approach for encrypted traffic classification using deep learning. Soft Comput. 2020, 24, 1999–2012.
14. Lopez-Martin, M.; Carro, B.; Sanchez-Esguevillas, A.; Lloret, J. Network traffic classifier with convolutional and recurrent neural networks for internet of things. IEEE Access 2017, 5, 42–50.
15. Zeng, Y.; Gu, H.; Wei, W.; Guo, Y. Deep-Full-Range: A deep learning based network encrypted traffic classification and intrusion detection framework. IEEE Access 2019, 7, 182–190.
16. Labayen, V.; Magana, E.; Morato, D.; Izal, M. Online classification of user activities using machine learning on network traffic. Comput. Netw. 2020, 181, 557–569.
17. Chang, L.-H.; Lee, T.-H.; Chu, H.-C.; Su, C.-W. Application-based online traffic classification with deep learning models on SDN networks. Adv. Technol. Innov. 2020, 5, 216–229.
18. Gil, G.D.; Lashkari, A.H.; Mamun, M.; Ghorbani, A.A. Characterization of Encrypted and VPN Traffic Using Time-Related Features. In Proceedings of the 2nd International Conference on Information Systems Security and Privacy (ICISSP 2016), Rome, Italy, 19–21 February 2016; pp. 407–414.
19. Browne, C.B.; Powley, E.; Whitehouse, D.; Lucas, S.M.; Cowling, P.I.; Rohlfshagen, P.; Tavener, S.; Perez, D.; Samothrakis, S.; Colton, S. A survey of Monte Carlo tree search methods. IEEE Trans. Comput. Intell. AI Games 2012, 4, 1–43.
20. Ahmed, A.A.; Agunsoye, G. A Real-Time Network Traffic Classifier for Online Applications Using Machine Learning. Algorithms 2021, 14, 250.
21. Troia, S.; Alvizu, R.; Zhou, Y.; Maier, G.; Pattavina, A. Deep Learning-Based Traffic Prediction for Network Optimization. In Proceedings of the 2018 20th International Conference on Transparent Optical Networks (ICTON), Bucharest, Romania, 1–5 July 2018; pp. 1–4.
22. Pacheco, F.; Exposito, E.; Gineste, M.; Baudoin, C.; Aguilar, J. Towards the deployment of Machine Learning solutions in network traffic classification: A systematic survey. IEEE Commun. Surv. Tutor. 2018, 21, 1988–2014.
23. Mohammed, A.R.; Mohammed, S.A.; Shirmohammadi, S. Machine Learning and Deep Learning Based Traffic Classification and Prediction in Software Defined Networking. In Proceedings of the 2019 IEEE International Symposium on Measurements & Networking (M&N), Catania, Italy, 8–10 July 2019; pp. 1–6.
24. Dong, S. Multi class SVM algorithm with active learning for network traffic classification. Expert Syst. Appl. 2021, 176, 114885.
25. Balamurugan, N.M.; Kannadasan, R.; Alsharif, M.H.; Uthansakul, P. A Novel Forward-Propagation Workflow Assessment Method for Malicious Packet Detection. Sensors 2022, 22, 4167.

| Class | Method | Learning Technique |
|---|---|---|
| LSTM | Discriminative | Supervised |
| CNN | Discriminative | Supervised |
| RNN | Discriminative | Supervised |
| MLP | Discriminative | Supervised |

| Method | Data Input: Known Answer | Data Input: Policy/Problems |
|---|---|---|
| Supervised learning | Learned output with supervision | Learning reward-based output with supervision |
| Reinforcement learning method | Maximized reward-based output | Feedback-trained, maximized reward-based output |
| Deep learning method | Deep learning-based output | Deep learning and feedback-trained output |
| Deep reinforcement learning method | Deep learning-based, maximized reward-based output | Deep and feedback-trained, maximized reward-based output |
| Enhanced deep reinforcement learning method | Accurate deep learning-based, maximized reward-based output | Accurate output based on deep and feedback-trained reward maximization |

| Feature Index | Notation | Feature Description |
|---|---|---|
| f1 | avg_seg_sz | Average segment size |
| f2 | win_sz | Window size |
| f3 | r_t_t | Round-trip delay time |
| f4 | var_pack | Variance in packets |
| f5 | Act_dt_pkt | Actual data packet |
| f6 | clt_pn | Client port number |
| f7 | svr_pn | Server port number |

| Class Index | Notation | Class Description | Applications |
|---|---|---|---|
| c1 | www_pkt | WWW packet | General browsing data |
| c2 | p2p_pkt | P2P network packet | Torrent streaming |
| c3 | ml_pkt | Mail service packet | SMTP, POP, MIME, IMAP |
| c4 | db_pkt | Database packet | SQL net |
| c5 | mul_pkt | Multimedia packet | Video storage servers (e.g., YouTube) |
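The feature table (f1–f7) and the class table (c1–c5) define the input and output of the model. The sketch below shows one way to hold a single record and turn it into model inputs. It stores the seven features in table order and encodes the label one-hot. The field types, the `TrafficClass` enum, `FlowFeatures` and `one_hot` are hypothetical, not taken from the authors' implementation.

```python
from dataclasses import astuple, dataclass
from enum import Enum
from typing import List


class TrafficClass(Enum):
    """Class labels c1-c5, keeping the table's notation as the enum values."""
    WWW = "www_pkt"         # c1: general browsing data
    P2P = "p2p_pkt"         # c2: torrent streaming
    MAIL = "ml_pkt"         # c3: SMTP, POP, MIME, IMAP
    DATABASE = "db_pkt"     # c4: SQL net
    MULTIMEDIA = "mul_pkt"  # c5: video storage servers


@dataclass(frozen=True)
class FlowFeatures:
    """One traffic record with features f1-f7 (field types are assumptions)."""
    avg_seg_sz: float  # f1: average segment size
    win_sz: float      # f2: window size
    r_t_t: float       # f3: round-trip delay time
    var_pack: float    # f4: variance in packets
    act_dt_pkt: float  # f5: actual data packet (source notation: Act_dt_pkt)
    clt_pn: int        # f6: client port number
    svr_pn: int        # f7: server port number

    def as_vector(self) -> List[float]:
        # Flatten in f1..f7 order so positions line up with F = {f1, ..., f7}.
        return [float(v) for v in astuple(self)]


def one_hot(label: TrafficClass) -> List[float]:
    # Enum iteration follows definition order, i.e. c1..c5.
    return [1.0 if member is label else 0.0 for member in TrafficClass]
```

For example, `one_hot(TrafficClass.MAIL)` gives `[0.0, 0.0, 1.0, 0.0, 0.0]`. Treating port numbers (f6, f7) as plain numbers is a simplification. Ports are categorical in nature, so a real pipeline might bucket or embed them before normalization.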

| Group Statistics | Algorithm | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|---|
| Accuracy | EDRL | 10 | 97.200 | 1.71156 | 0.538 |
| Accuracy | CNN | 10 | 93.055 | 2.29835 | 0.727 |

| Independent Samples Test | Variances | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference (Lower) | 95% CI of the Difference (Upper) |
|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | Equal variances assumed | 1.111 | 0.306 | 4.519 | 18 | <0.001 | 4.095 | 0.90619 | 2.191 | 5.999 |
| Accuracy | Equal variances not assumed | | | 4.519 | 16.634 | <0.001 | 4.095 | 0.90619 | 2.180 | 6.010 |

| Group Statistics | Algorithm | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|---|
| Precision | EDRL | 10 | 97.343 | 1.519 | 0.480 |
| Precision | CNN | 10 | 93.972 | 2.403 | 0.760 |

| Independent Samples Test | Variances | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference (Lower) | 95% CI of the Difference (Upper) |
|---|---|---|---|---|---|---|---|---|---|---|
| Precision | Equal variances assumed | 2.351 | 0.143 | 4.295 | 18 | <0.001 | 3.861 | 0.899 | 1.972 | 5.750 |
| Precision | Equal variances not assumed | | | 4.295 | 15.20 | 0.001 | 3.861 | 0.899 | 1.947 | 5.780 |

| Group Statistics | Algorithm | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|---|
| False positive | EDRL | 10 | 2.657 | 1.85335 | 0.586 |
| False positive | CNN | 10 | 6.325 | 2.19063 | 0.693 |

| Independent Samples Test | Variances | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference (Lower) | 95% CI of the Difference (Upper) |
|---|---|---|---|---|---|---|---|---|---|---|
| False positive | Equal variances assumed | 0.372 | 0.550 | −4.042 | 18 | 0.001 | −3.668 | 0.907 | −5.574 | −1.762 |
| False positive | Equal variances not assumed | | | −4.042 | 17.51 | 0.001 | −3.668 | 0.907 | −5.578 | −1.758 |
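Each independent-samples table reports two t statistics computed from the group statistics. The "equal variances assumed" row uses a pooled-variance Student t with n1 + n2 − 2 degrees of freedom. The "not assumed" row uses Welch's t with Welch–Satterthwaite degrees of freedom. The minimal stdlib-only sketch below (`t_test_from_summary` is a hypothetical name) recomputes both from means, standard deviations and group sizes.

```python
import math
from typing import NamedTuple


class TTestResult(NamedTuple):
    mean_difference: float
    std_error_difference: float
    t: float
    df: float


def t_test_from_summary(
    mean1: float, sd1: float, n1: int,
    mean2: float, sd2: float, n2: int,
    equal_var: bool = True,
) -> TTestResult:
    """Independent-samples t statistic from summary statistics.

    equal_var=True  -> pooled-variance Student t ("equal variances assumed")
    equal_var=False -> Welch t with Welch-Satterthwaite df ("not assumed")
    """
    diff = mean1 - mean2
    if equal_var:
        # Pooled variance weights each group's variance by its degrees of freedom.
        pooled = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
        se = math.sqrt(pooled * (1 / n1 + 1 / n2))
        df = float(n1 + n2 - 2)
    else:
        # Squared standard errors of each group mean.
        v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
        se = math.sqrt(v1 + v2)
        df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return TTestResult(diff, se, diff / se, df)
```

The false-positive group statistics are EDRL (2.657, 1.85335, n = 10) and CNN (6.325, 2.19063, n = 10). With these inputs the function returns a mean difference of −3.668, a standard error of about 0.907 and t ≈ −4.042 for both rows. It also returns df = 18 for the pooled row and df ≈ 17.5 for the Welch row, which agrees with the table to within rounding. The sketch does not compute p-values or confidence intervals, because those need t-distribution quantiles. SciPy's `scipy.stats.t` provides the quantiles, and `scipy.stats.ttest_ind_from_stats` performs the same test directly from summary statistics.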

| Group Statistics | Algorithm | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|---|
| False negative | EDRL | 10 | 2.5270 | 1.22734 | 0.38812 |
| False negative | CNN | 10 | 5.6750 | 1.9920 | 0.61643 |

| Independent Samples Test | Variances | Levene’s F | Levene’s Sig. | t | df | Sig. (2-Tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference (Lower) | 95% CI of the Difference (Upper) |
|---|---|---|---|---|---|---|---|---|---|---|
| False negative | Equal variances assumed | 3.113 | 0.095 | −4.826 | 18 | <0.001 | −3.598 | 0.746 | −5.164 | −2.032 |
| False negative | Equal variances not assumed | | | −4.826 | 14.87 | <0.001 | −3.598 | 0.746 | −5.188 | −2.008 |

| Work Name | Algorithm Used | Accuracy |
|---|---|---|
| EDONKEY application network traffic [20] | KNN and RF | 72.08% and 90.53% |
| FTP_CONTROL [20,22] | ANN | 78.00% |
| Network traffic of FTP and P2P [13,23] | KNN | 94% |
| CNN-based application identification task [21] | CNN | 94% |
| Traffic classification with the UNB ISCX VPN-Non-VPN dataset [24] | SVM | 94.2% |
| Orange platform of a Nigerian university [1] | KNN, RF, NN, and NB | 79.6%, 84.8%, 84.6%, and 87.6% |
| Internet traffic of different applications | Proposed EDRL algorithm | 97.20% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Balamurugan, N.M.; Adimoolam, M.; Alsharif, M.H.; Uthansakul, P. A Novel Method for Improved Network Traffic Prediction Using Enhanced Deep Reinforcement Learning Algorithm. Sensors 2022, 22, 5006. https://doi.org/10.3390/s22135006

Balamurugan NM, Adimoolam M, Alsharif MH, Uthansakul P. A Novel Method for Improved Network Traffic Prediction Using Enhanced Deep Reinforcement Learning Algorithm. Sensors. 2022; 22(13):5006. https://doi.org/10.3390/s22135006

Chicago/Turabian style: Balamurugan, Nagaiah Mohanan, Malaiyalathan Adimoolam, Mohammed H. Alsharif, and Peerapong Uthansakul. 2022. "A Novel Method for Improved Network Traffic Prediction Using Enhanced Deep Reinforcement Learning Algorithm" Sensors 22, no. 13: 5006. https://doi.org/10.3390/s22135006

APA style: Balamurugan, N. M., Adimoolam, M., Alsharif, M. H., & Uthansakul, P. (2022). A Novel Method for Improved Network Traffic Prediction Using Enhanced Deep Reinforcement Learning Algorithm. Sensors, 22(13), 5006. https://doi.org/10.3390/s22135006