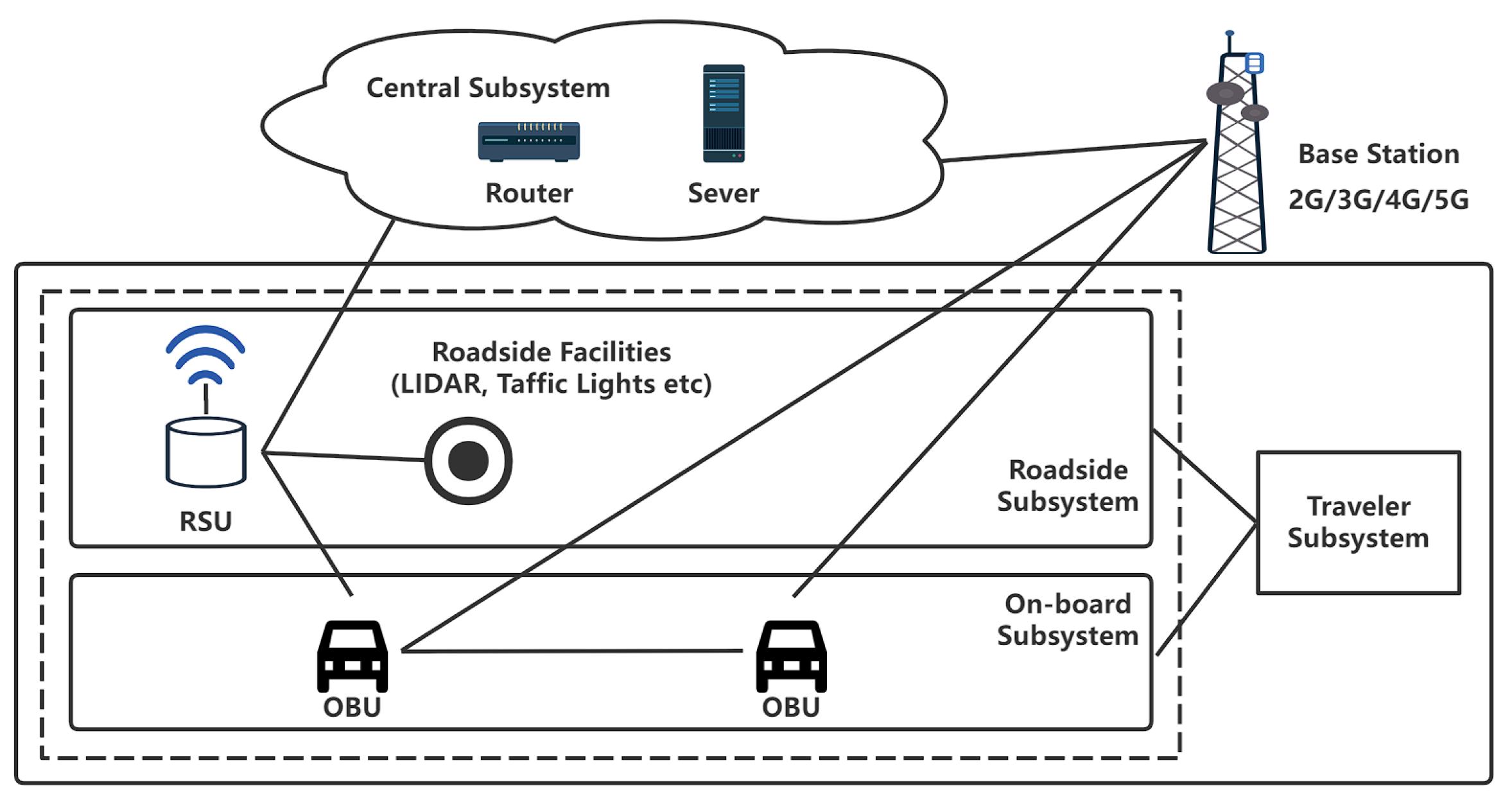

Figure 1.

ITS system model; vehicles can obtain more accurate information.

Figure 2.

Basic components of the OBU.

Figure 3.

CarTest system model.

Figure 4.

CarTest data flow chart.

Figure 5.

CarTest test flow chart.

Figure 6.

GNSS simulation hardware connection schematic.

Figure 7.

Coordinate transformation; the star represents a point on the map.

Figure 8.

GNSS simulation in VTD simulator.

Figure 9.

Hardware architecture diagram of V2X simulation test: (1) V2X signal generator, (2) OBU as signal generator.

Figure 10.

ICW test cases in VTD simulator.

Figure 11.

Indoor testing setup.

Figure 12.

Indoor test data flow and software components.

Figure 13.

Design of outdoor test trolley.

Figure 14.

(a) Test case administration interface, (b) signal generators and server, (c) client display in safe driving situations, (d) client-side display of forward collision warning and sensor effect.

Figure 15.

FCW test: (a) safe at 3.3 s, (b) warning at 6.4 s.

Figure 16.

Comparison of ICW test pass rates with 1160 cases.

Figure 17.

ICW test: (a) Top view, (b) Main view with unsuitable warning in VTD simulator.

Figure 18.

CACC test: (a) Top view of a CACC case, (b) Main view of a CACC case in VTD simulator.

Figure 19.

Speed variation curves of the 5 vehicles in the convoy under different PID control models (no acceleration control): (a) P1, (b) P2.

Figure 20.

Speed variation curves of the 5 vehicles in the convoy under different PID control models (with acceleration control): (a) P1, (b) P2.

Figure 21.

Outdoor large-scale test with HV and BOBUs.

Figure 22.

(a) 80 OBUs for indoor testing, (b) background vehicle, (c) 8 background vehicles, (d) box of the vehicle and 8 OBUs.

Figure 23.

Large-scale test result (PER with 10, 40, and 160 BOBUs).

Figure 24.

Large-scale test result (CBR with 10, 40, and 160 BOBUs).

Figure 25.

Comparison of CBR and PER with 10 to 160 BOBUs.

Figure 26.

GNSS test: (a) Cold start test, (b) Hot start test.

Figure 27.

GNSS test hardware and test situation.

Figure 28.

GNSS cold and hot start test result.

Figure 29.

OBU “cold start” caused by test case switching, and the solution in the VTD simulator.

Figure 30.

Road scenarios for continuity testing in VTD simulator.

Table 1.

CAN .dbc example.

| Message | Unit | Minimum | Maximum |
|---|---|---|---|
| TransmissionState | - | 0 | 15 |
| ABS Active | - | 0 | 1 |
| Traction Control Active | - | 0 | 1 |
| Brakes Active | - | 0 | 1 |
| Panic Brake Active / Hard Braking | - | 0 | 1 |
| Longitudinal Acceleration | m/s² | | 15.33 |
| Steering Wheel Angle | degree | | 2047.88 |
| Vehicle Speed | km/h | 0 | 511.984 |
| LF Wheel Speed | km/h | 0 | 511.969 |
| RF Wheel Speed | km/h | 0 | 511.969 |
| LR Wheel Speed | km/h | 0 | 511.969 |
| RR Wheel Speed | km/h | 0 | 511.969 |
| Left Turn Signal | - | 0 | 3 |
| Right Turn Signal | - | 0 | 3 |
| Hazard Lights On | - | 0 | 1 |
| Fog Lights On | - | 0 | 1 |
| LF Wheel RPM | - | | 32,767 |
| RF Wheel RPM | - | | 32,767 |
| LR Wheel RPM | - | | 32,767 |
| RR Wheel RPM | - | | 32,767 |
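
The bounds in Table 1 lend themselves to a simple plausibility check on decoded CAN signals. The following is a minimal sketch, not part of the CarTest implementation: the `SIGNAL_RANGES` dictionary and the `out_of_range` helper are hypothetical names, and only a few signals for which Table 1 lists both a minimum and a maximum are included.

```python
# Hypothetical lookup: (minimum, maximum) pairs copied from Table 1.
# Only signals with both bounds listed are included here.
SIGNAL_RANGES = {
    "TransmissionState": (0, 15),
    "ABS Active": (0, 1),
    "Vehicle Speed": (0, 511.984),   # km/h
    "LF Wheel Speed": (0, 511.969),  # km/h
    "Left Turn Signal": (0, 3),
}


def out_of_range(frame):
    """Return the names of signals in `frame` that violate their bounds."""
    bad = []
    for name, value in frame.items():
        bounds = SIGNAL_RANGES.get(name)
        if bounds is None:
            continue  # no complete range known for this signal; skip it
        low, high = bounds
        if not low <= value <= high:
            bad.append(name)
    return bad


# A decoded frame with one implausible value.
print(out_of_range({"Vehicle Speed": 72.5, "Left Turn Signal": 4}))
# prints ['Left Turn Signal']
```

Signals without a listed range are skipped rather than rejected, so the check stays conservative.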

Table 2.

V2X applications.

| Category | Full Name | Abbreviation |
|---|---|---|
| V2V | Forward Collision Warning | FCW |
| V2V/V2I | Intersection Collision Warning | ICW |
| V2V/V2I | Left Turn Assist | LTA |
| V2V | Blind Spot Warning-Lane Change Warning | BSW-LCW |
| V2V | Do Not Pass Warning | DNPW |
| V2V-Event | Emergency Brake Warning | EBW |
| V2V-Event | Abnormal Vehicle Warning | AVW |
| V2V-Event | Control Loss Warning | CLW |
| V2I | Hazardous Location Warning | HLW |
| V2I | Speed Limit Warning | SLW |
| V2I | Red Light Violation Warning | RLVW |
| V2P/V2I | Vulnerable Road User Collision Warning | VRUCW |
| V2I | Green Light Optimal Speed Advisory | GLOSA |
| V2I | In-Vehicle Signage | IVS |
| V2I | Traffic Jam Warning | TJW |
| V2V | Emergency Vehicle Warning | EVW |
| V2I | Vehicle Near-Field Payment | VNFP |

Table 3.

Notations of CACC evaluation (1).

| Symbol | Description |
|---|---|
| | Timestamp of the m-th frame |
| | Flag of steady gap |
| | Flag of steady speed |
| | Flag of steady acceleration |
| F | Flag of steady status |
| | In frame m, the gap of the i-th car |
| | In frame m, the speed of the i-th car |
| | In frame m, the acceleration of the i-th car |
| | In frame m, average gap |
| | In frame m, average speed |
| | In frame m, average acceleration |
| N | Total number of vehicles |
| M | End frame ID |

Table 4.

Notations of CACC evaluation (2).

| Symbol | Description |
|---|---|
| | Average speed of the i-th vehicle |
| | Average acceleration of the i-th vehicle |
| | Average gap of the i-th vehicle |
| | Standard deviation of the speed of the i-th vehicle |
| | Standard deviation of the acceleration of the i-th vehicle |
| | Standard deviation of the gap of the i-th vehicle |
| | Overall speed standard deviation |
| | Overall acceleration standard deviation |
| | Overall gap standard deviation |

Table 5.

Notations of CACC evaluation (3).

| Symbol | Description |
|---|---|
| | Time for speed to reach steady state |
| | Time for acceleration to reach steady state |
| | Time for gap to reach steady state |
| | Overall time to reach steady state |

Table 6.

Notations of PID-based CACC algorithm.

| Symbol | Description |
|---|---|
| | Acceleration of the car in front |
| a | Acceleration of HV |
| | Acceleration strategies of HV |
| | Speed of the car in front |
| v | Speed of HV |
| g | Gap with the front car |
| | Minimum safety gap |
| | Expected time gap |
| | Scale factor of acceleration |
| | Scale factor of speed |
| | Scale factor of gap |
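
To show how the quantities in Table 6 might combine, the following is a sketch of a common linear gap-keeping control law. It is an assumption for illustration only and is not confirmed as the algorithm evaluated in the CACC tests. The function name, all argument names (`a_front`, `v_front`, `g_min`, `t_gap`, `k_a`, `k_v`, `k_g`), and the numeric values are hypothetical.

```python
def cacc_acceleration(a_front, v_front, v, g, g_min, t_gap, k_a, k_v, k_g):
    """Commanded HV acceleration from a generic linear CACC law (assumed form).

    a_front, v_front: acceleration and speed of the car in front
    v, g:             speed of the HV and its gap to the front car
    g_min, t_gap:     minimum safety gap and expected time gap
    k_a, k_v, k_g:    scale factors of acceleration, speed, and gap
    """
    # Desired gap grows with HV speed (constant time-gap policy).
    gap_error = g - (g_min + t_gap * v)
    return k_a * a_front + k_v * (v_front - v) + k_g * gap_error


# Illustrative values only: equal speeds, but the gap is 2 m too short,
# so the sketch commands a deceleration.
print(cacc_acceleration(a_front=0.0, v_front=15.0, v=15.0, g=18.0,
                        g_min=5.0, t_gap=1.0, k_a=0.8, k_v=1.0, k_g=4.0))
# prints -8.0
```

Setting `k_a` to zero in this sketch drops the feed-forward acceleration term, which is one way to read the "no acceleration control" configurations.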

Table 7.

Parameter settings for the CACC test.

| | P1 | P2 | P3 | P4 |
|---|---|---|---|---|
| | 0.200 | 0.200 | 1.000 | 0.750 |
| | - | - | 0.800 | 0.700 |
| | 0.100 | 1.000 | 4.000 | 4.125 |

Table 8.

CACC test results and evaluation indicators.

| | P1 | P2 | P3 | P4 |
|---|---|---|---|---|
| | 2.127 | 1.951 | 1.891 | 1.894 |
| | 0.747 | 0.710 | 0.671 | 0.674 |
| | 4.109 | 3.465 | 3.410 | 3.411 |
| | 14.889 | 15.889 | 15.889 | 15.889 |
| | 13.889 | 13.889 | 13.889 | 13.889 |
| | 74.911 | 46.011 | 43.494 | 26.250 |
| | 70.150 | 44.500 | 26.833 | 25.967 |
| | 81.433 | 49.783 | 77.167 | 27.233 |
| | 73.150 | 43.750 | 26.483 | 25.550 |
| | 150.221 | 184.952 | 188.180 | 205.402 |

Table 9.

CACC test result.

| Moving Track | Latitude | Longitude |
|---|---|---|
| Barcelona | 41.40908 N | 2.23817 E |
| Melbourne | 37.80819 S | 144.96783 E |
| Tokyo | 35.66667 N | 139.77492 E |
| Munich | 48.14550 N | 11.57856 E |
| New York | 40.75957 N | 73.98498 W |
| Nuerburgring | 50.33275 N | 6.93630 E |
| Shanghai | 31.230416 N | 121.473701 E |
| Beijing | 39.904211 N | 116.407395 E |
| Nepal | 28.394857 N | 84.124008 E |
| Stockholm | 59.329323 N | 18.068581 E |
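
The coordinates above carry a hemisphere suffix (N, S, E, W), whereas most simulation and GNSS tooling expects signed decimal degrees. The conversion can be sketched as follows; `to_signed_degrees` is a hypothetical helper written for this illustration, not a function of the test system.

```python
def to_signed_degrees(text):
    """Convert a value such as '37.80819 S' to signed decimal degrees.

    South and West become negative; North and East stay positive.
    """
    value, hemisphere = text.split()
    degrees = float(value)
    if hemisphere in ("S", "W"):
        return -degrees
    if hemisphere in ("N", "E"):
        return degrees
    raise ValueError(f"unknown hemisphere: {hemisphere!r}")


# Melbourne, as listed in the table.
print(to_signed_degrees("37.80819 S"))   # prints -37.80819
print(to_signed_degrees("144.96783 E"))  # prints 144.96783
```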