Innovative Image Processing Method to Improve Autofocusing Accuracy

Abstract

1. Introduction

2. Adopted Autofocusing Structure and Experimental Setup

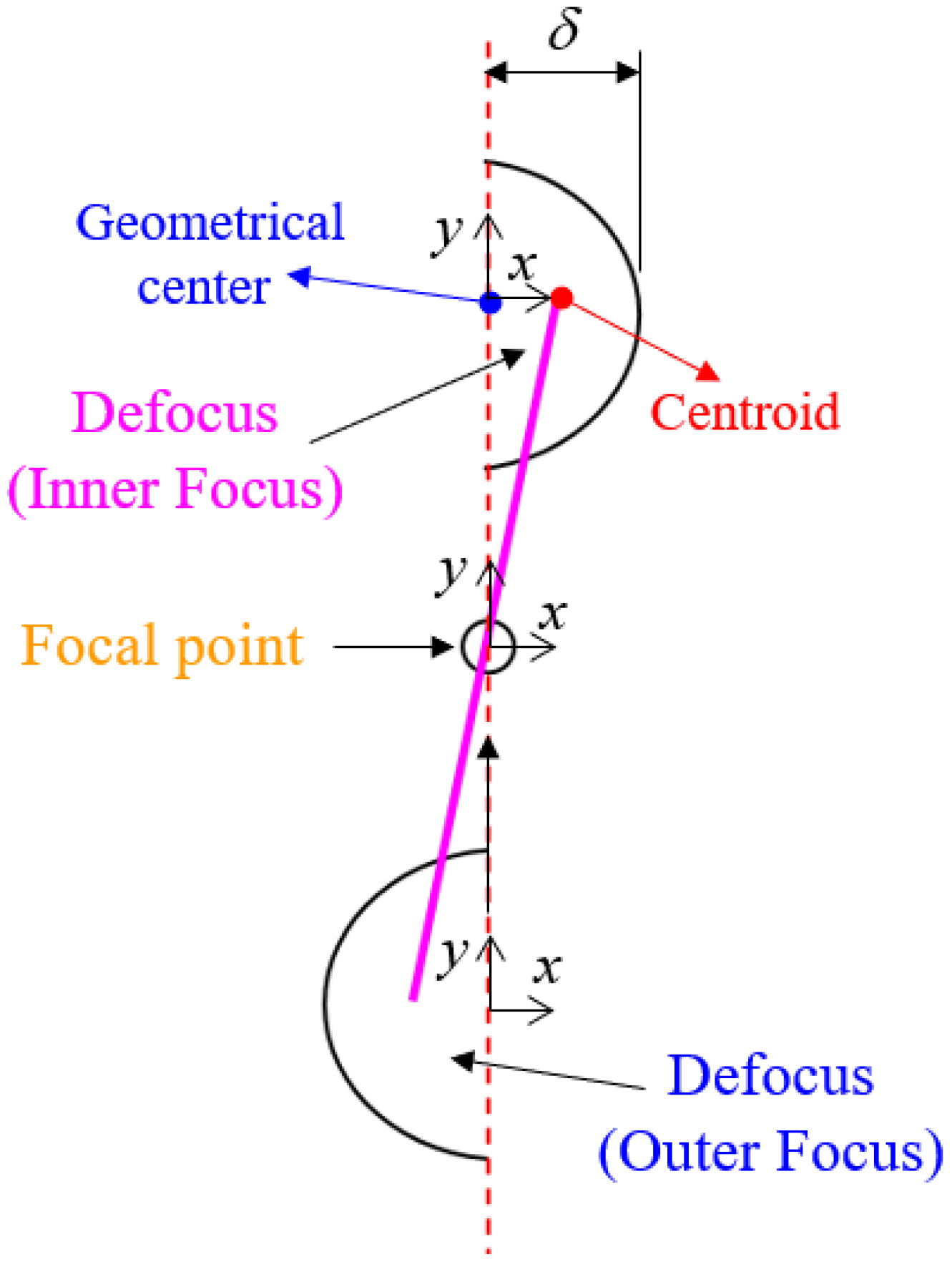

2.1. Adopted Autofocusing Structure

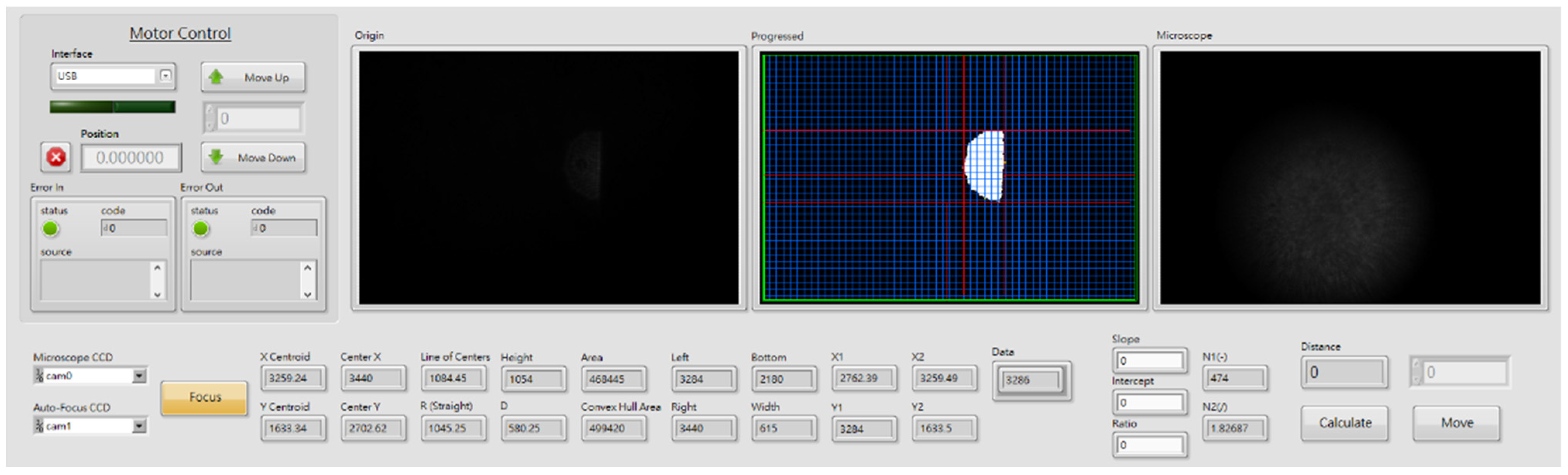

2.2. Experimental Setup of Prototype Autofocusing Microscope

3. Proposed Algorithm

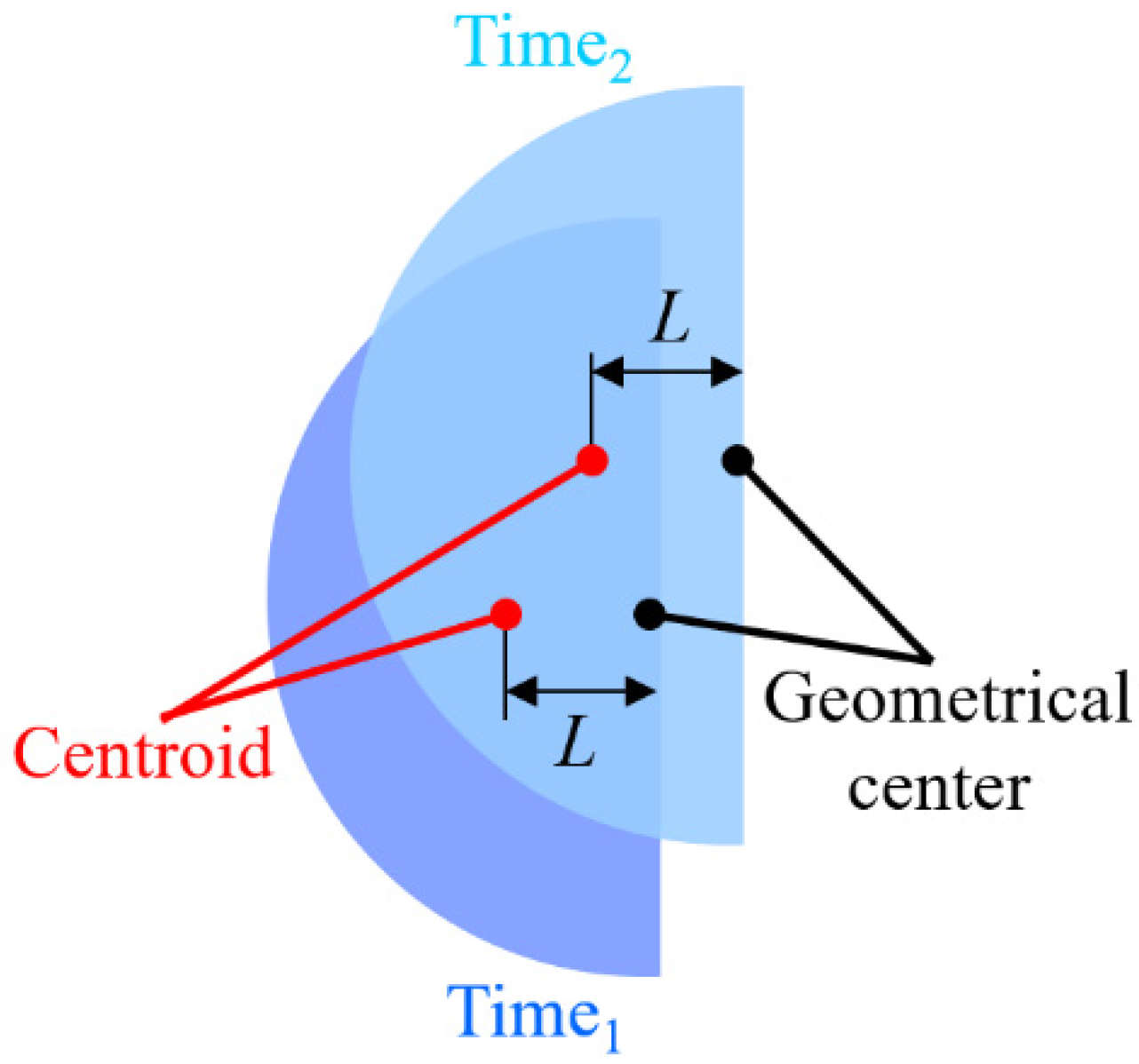

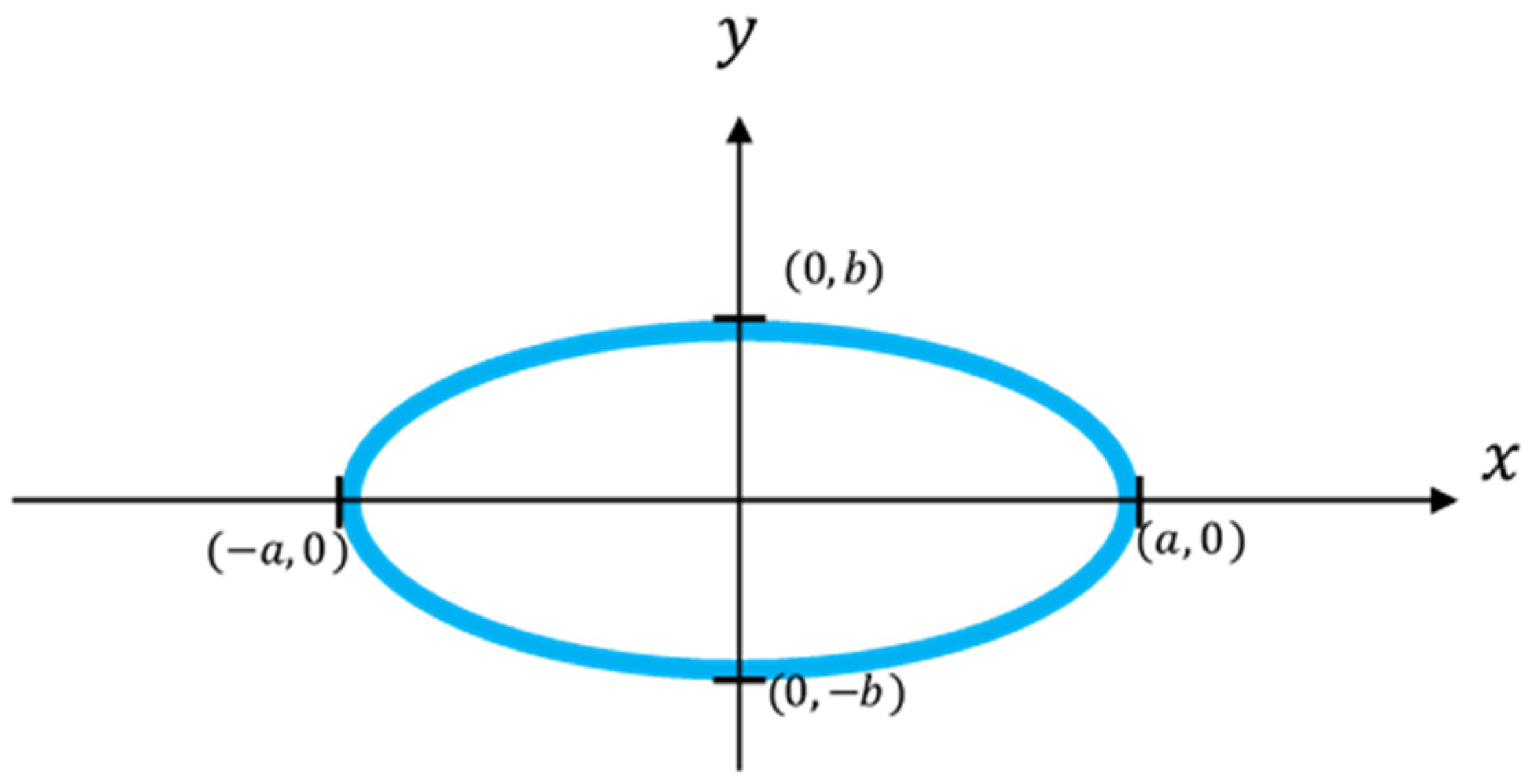

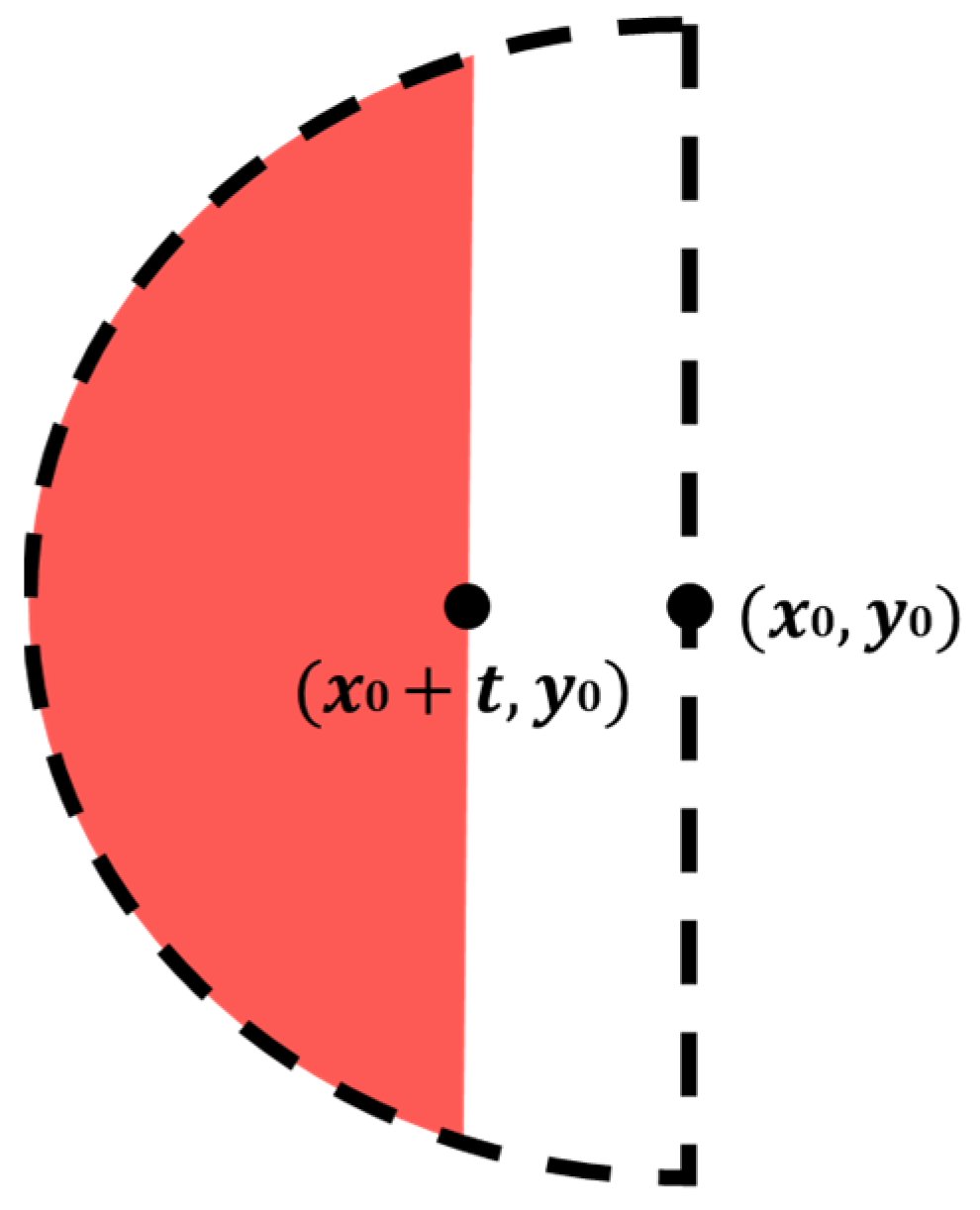

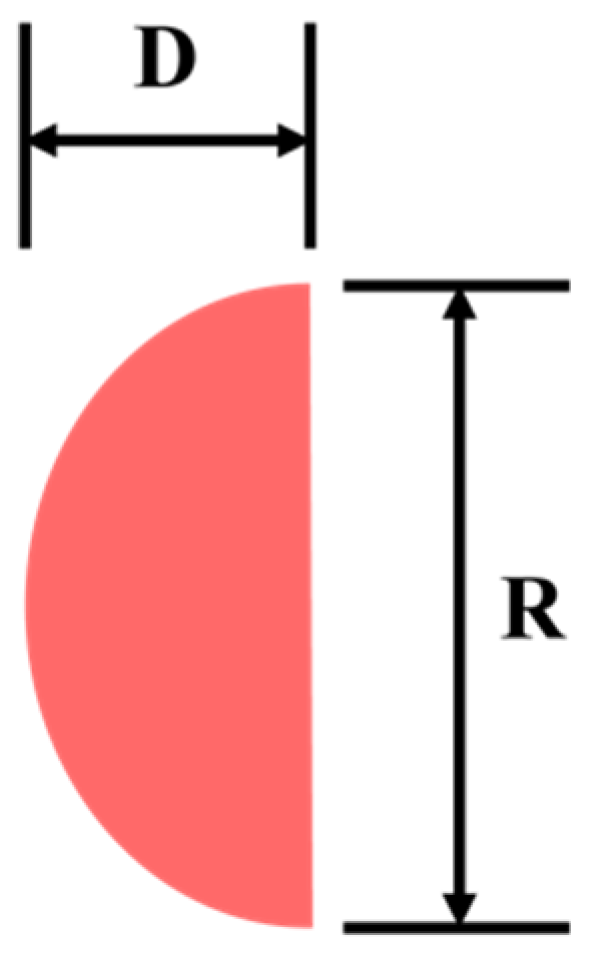

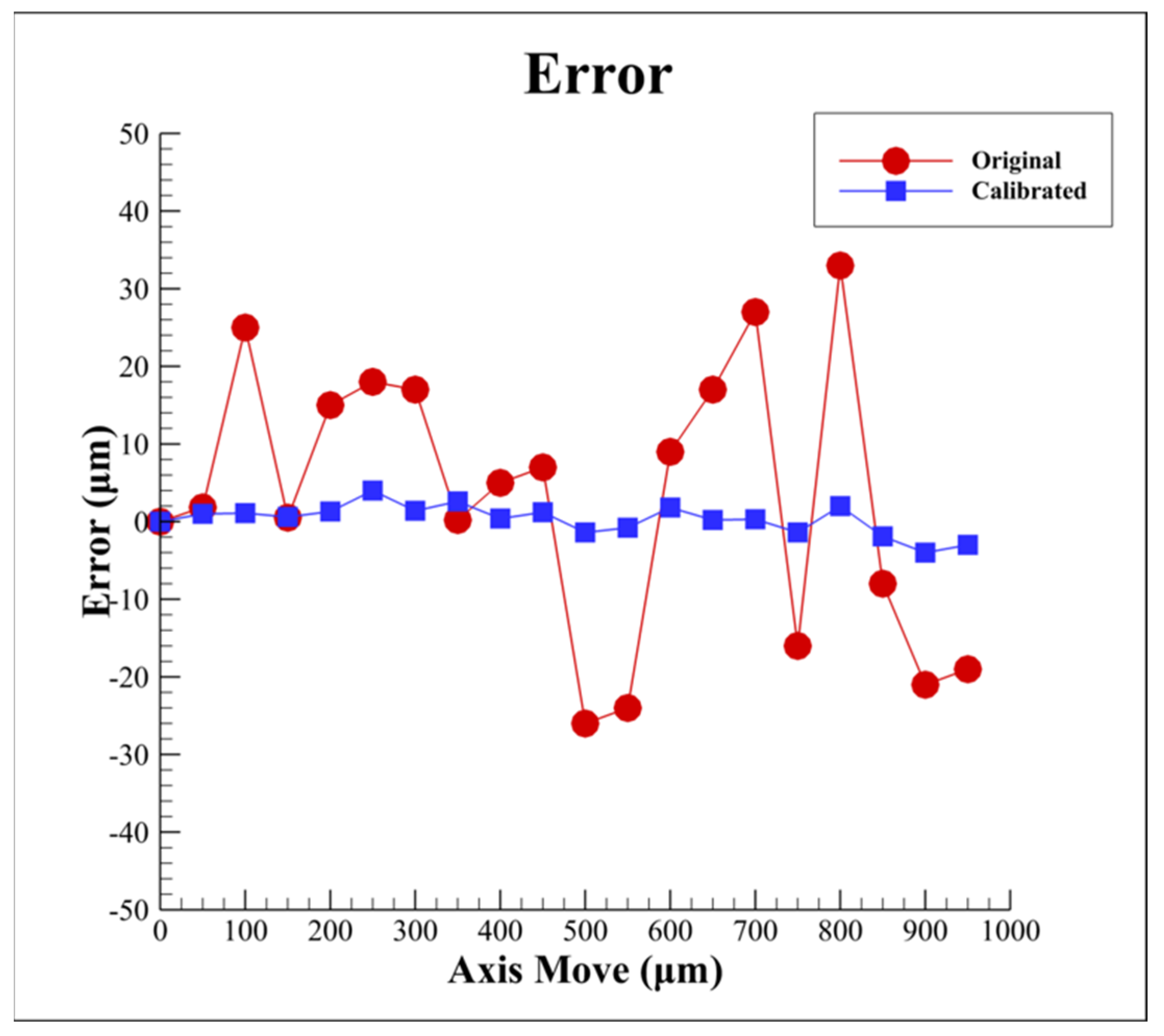

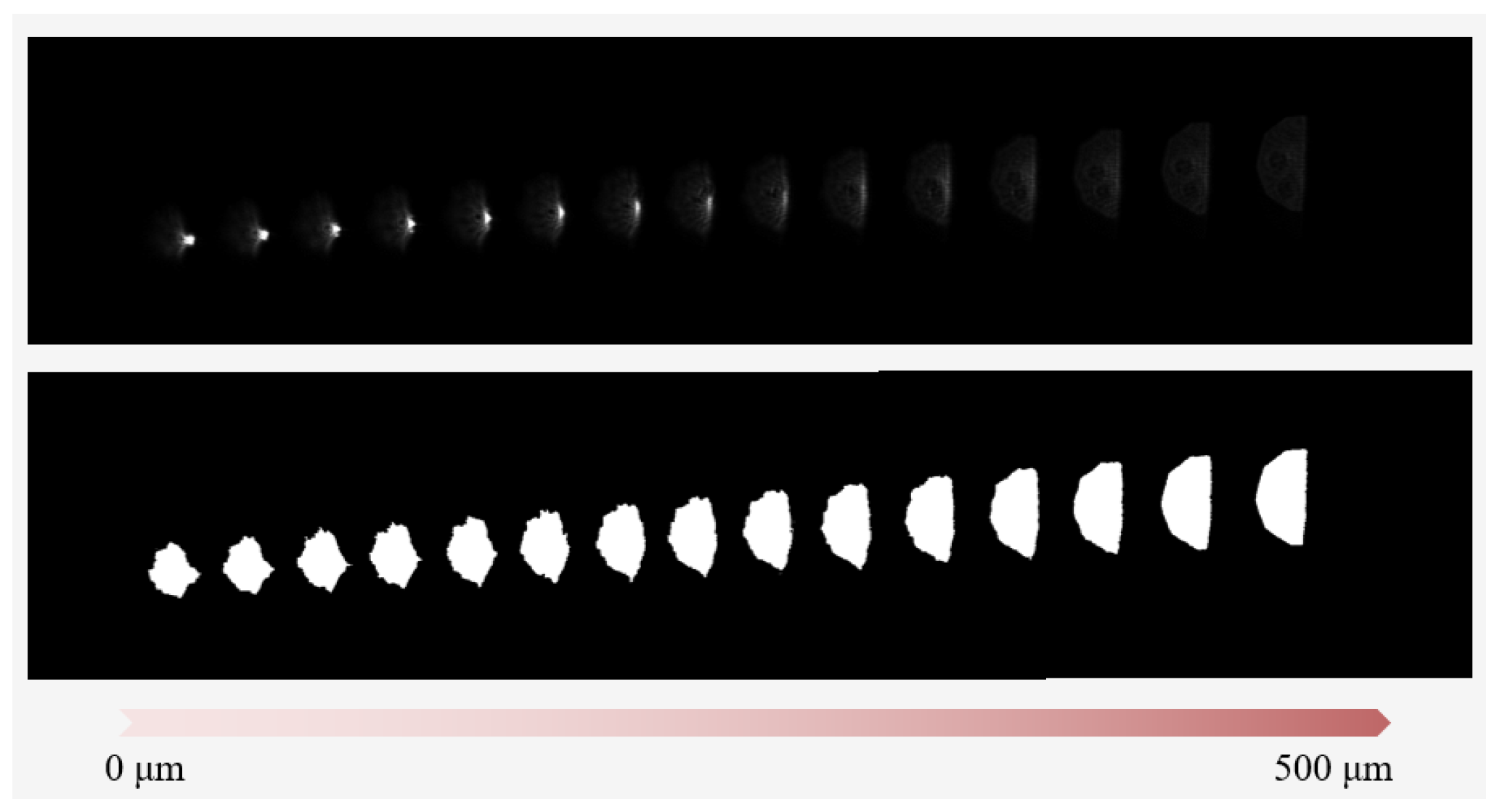

3.1. Proposed Ellipse Spot Compensation Algorithm

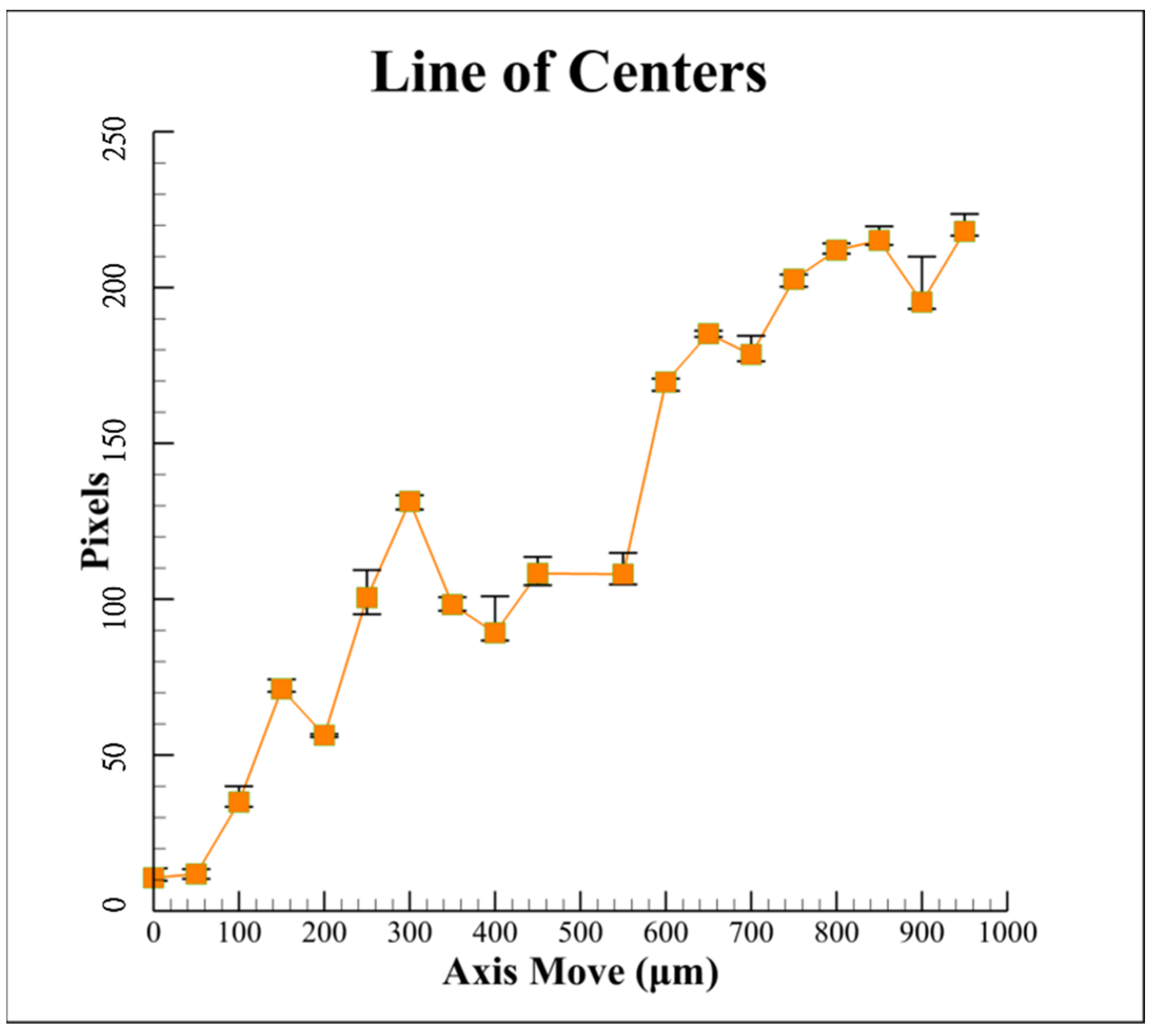

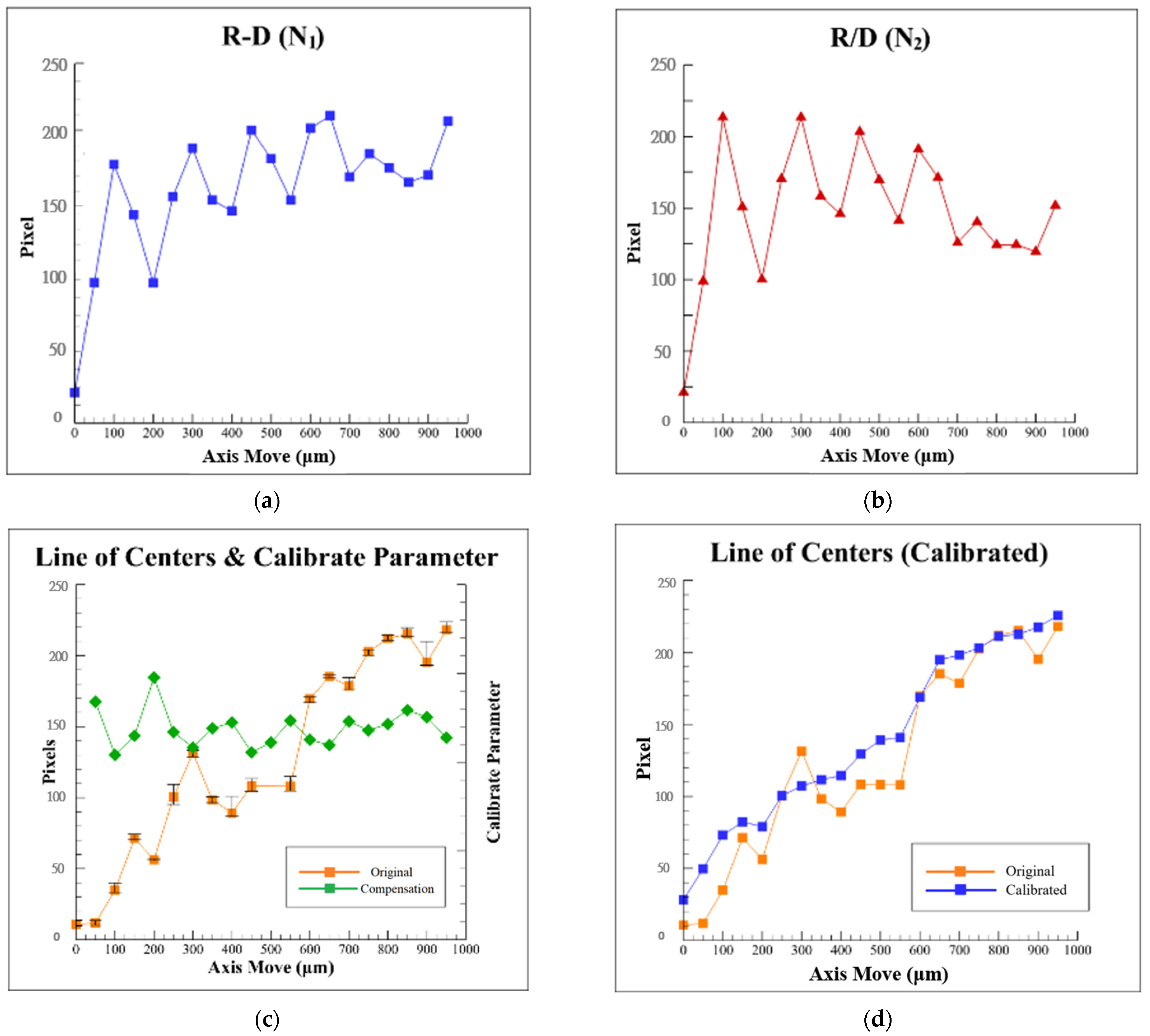

3.2. Proposed Data Compensation Algorithm

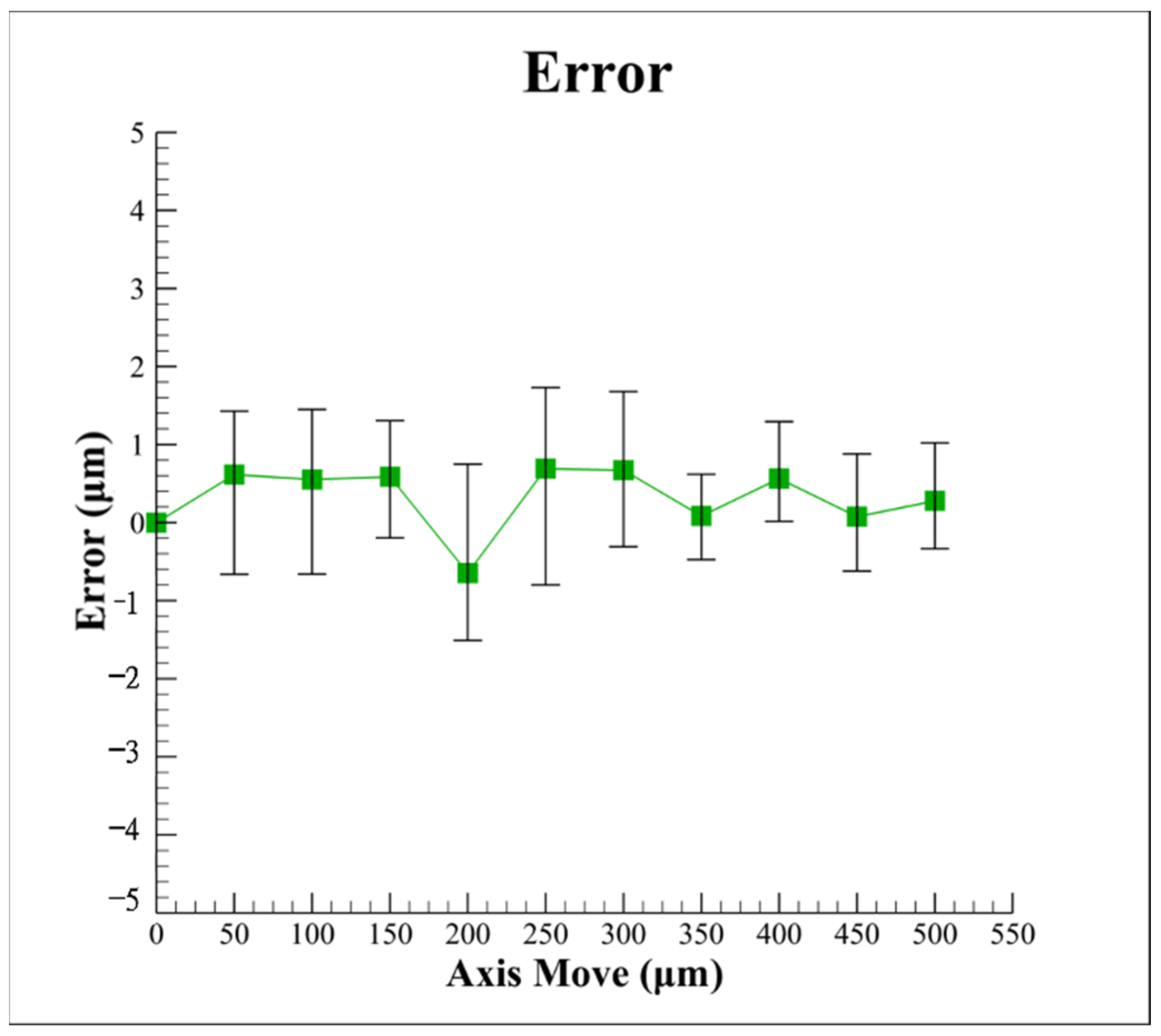

4. Experimental Results of Proposed Algorithm

5. Conclusions

- Calculating the center of the ideal ellipse from the boundary of the light spot improves the accuracy of the subsequent defocus-distance calculation in the autofocus system.

- The proposed compensation algorithm effectively improves the linearity of the focusing system's characteristic curve, and thereby the accuracy of the optics-based autofocusing microscope.

- The proposed algorithm compensates the measurement data according to the current spot shape, which effectively removes noise interference caused by environmental factors such as laser disturbance, instruments, and air temperature. This not only reduces the equipment cost but also improves the system efficiency.
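The first conclusion, recovering the ellipse center from the spot boundary, can be illustrated with a minimal sketch. It is not the authors' implementation: it uses a generic algebraic least-squares conic fit, and the function name `fit_ellipse_center`, the synthetic boundary points, and all numeric values are assumptions chosen only for illustration.

```python
import numpy as np


def fit_ellipse_center(x, y):
    """Estimate the center of an ellipse from boundary points.

    Hypothetical helper: fits the conic a*u^2 + b*u*v + c*v^2 + d*u + e*v + f = 0
    by algebraic least squares (smallest right singular vector), then solves
    for the point where the conic's gradient vanishes, i.e. its center.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.size < 5:
        raise ValueError("at least five boundary points are needed")

    # Shift and scale the points to improve numerical conditioning.
    mx, my = x.mean(), y.mean()
    scale = max(x.std(), y.std())
    if scale == 0.0:
        raise ValueError("boundary points are degenerate")
    u, v = (x - mx) / scale, (y - my) / scale

    # Each row is one boundary point evaluated on the conic monomials.
    design = np.column_stack([u * u, u * v, v * v, u, v, np.ones_like(u)])
    _, _, vt = np.linalg.svd(design)
    a, b, c, d, e, _ = vt[-1]

    # A negative discriminant is required for the conic to be an ellipse.
    if b * b - 4.0 * a * c >= 0.0:
        raise ValueError("fitted conic is not an ellipse")

    # Center: 2a*u + b*v + d = 0 and b*u + 2c*v + e = 0.
    uc, vc = np.linalg.solve([[2.0 * a, b], [b, 2.0 * c]], [-d, -e])
    return mx + scale * uc, my + scale * vc


# Synthetic example (assumed values): only three quarters of the boundary
# is visible, as might happen with a partially clipped spot.
t = np.linspace(0.0, 1.5 * np.pi, 200)
phi = 0.4  # rotation of the ellipse in radians
bx = 320.0 + 50.0 * np.cos(t) * np.cos(phi) - 30.0 * np.sin(t) * np.sin(phi)
by = 240.0 + 50.0 * np.cos(t) * np.sin(phi) + 30.0 * np.sin(t) * np.cos(phi)

print("fitted center:", fit_ellipse_center(bx, by))
print("mean of boundary points:", (bx.mean(), by.mean()))
```

With an incomplete boundary, the plain mean of the boundary points is pulled toward the visible arc, whereas the fitted conic still describes the full ellipse. Real spot images would also need boundary extraction and noise handling, which this sketch omits.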

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Component | Brand | Model / Specification |
|---|---|---|
| Laser | Thorlabs | HL6501MG |
| Beam Splitter | Thorlabs | BSW29 (50:50) |
| Biconvex Lens | Thorlabs | UB1945K |
| 45° Red Dichroic Filter | Edmund | PBSW-633R |
| Sample | Thorlabs | Mirror |
| Objective Lens | Olympus | f0 = 18 mm |
| CCD1, CCD2 | Basler | 5472 px × 3648 px, 17 fps |
| Infinity-Corrected Optics System | Navita | 1-60255 |
| Motor | PI | UPL120, 13 mm stroke |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, C.-S.; Tu, H.-D. Innovative Image Processing Method to Improve Autofocusing Accuracy. Sensors 2022, 22, 5058. https://doi.org/10.3390/s22135058
