Lightweight Single Image Super-Resolution with Selective Channel Processing Network

Abstract

1. Introduction

The main contributions of this paper are as follows:

- (1) We propose a convolutional neural network with a selective channel processing strategy (SCPN). Extensive experiments show that our model achieves higher performance than previous SR methods on remote sensing images;

- (2) We propose the selective channel processing module (SCPM), which contains a channel selection matrix with trainable parameters that decides, for each channel of a feature map, whether it is processed by the next convolutional layer. This strategy markedly reduces the computational cost and the model size;

- (3) We propose the differential channel attention (DCA) block, which is better suited to SR tasks because it helps restore more high-frequency details, and which further improves the representational ability of the network.
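The contributions above state that the channel selection matrix has trainable parameters yet still yields a binary keep-or-process decision per channel. How it is trained is not described in this section. The reference list does include the Gumbel-Softmax paper of Jang et al., so one plausible reading, offered here only as an assumption, is two learnable logits per channel sampled with a hard Gumbel-Softmax. The NumPy sketch below shows the forward pass of that idea. The names `gumbel_softmax_hard`, `channel_logits`, and `tau` are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def gumbel_softmax_hard(logits, tau, rng):
    """Hard Gumbel-Softmax sample for each column of `logits` (shape (2, C)).

    Assumed convention: row 0 marks channels sent to the next convolution,
    row 1 marks channels that are retained. Forward pass only, no gradients.
    """
    u = rng.uniform(low=1e-10, high=1.0, size=logits.shape)
    gumbel = -np.log(-np.log(u))                  # Gumbel(0, 1) noise
    z = (logits + gumbel) / tau
    z = z - z.max(axis=0, keepdims=True)          # numerical stability
    soft = np.exp(z) / np.exp(z).sum(axis=0, keepdims=True)
    hard = np.zeros_like(soft)
    hard[soft.argmax(axis=0), np.arange(soft.shape[1])] = 1.0  # one-hot per channel
    return hard

rng = np.random.default_rng(0)
C = 8
channel_logits = rng.standard_normal((2, C))      # hypothetical trainable parameters

# Training: a stochastic hard sample; the two rows form complementary masks.
matrix = gumbel_softmax_hard(channel_logits, tau=1.0, rng=rng)
print(matrix[0] + matrix[1])                      # each channel goes to exactly one branch

# Inference: a deterministic choice that is fixed once training ends.
matrix_eval = np.zeros_like(channel_logits)
matrix_eval[channel_logits.argmax(axis=0), np.arange(C)] = 1.0
print("channels processed:", np.flatnonzero(matrix_eval[0]))
```

In an autograd framework the hard one-hot sample is usually paired with the soft probabilities through a straight-through estimator so that the logits still receive gradients; this sketch omits that step and only illustrates how complementary masks such as `matrix1[0]` and `matrix1[1]` can arise.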

2. Related Work

2.1. Deep-Learning SR Methods

2.2. Attention Mechanisms for SR

2.3. Adaptive Inference

3. Visualization of Feature Maps in Classic Deep-Learning-Based SR Methods

4. Selective Channel Processing Network (SCPN)

4.1. Network Architecture

4.2. Selective Channel Processing Module (SCPM)

4.2.1. SCPM in the Training Phase

4.2.2. SCPM in the Inference Phase

4.2.3. Differential Channel Attention Block

4.2.4. Implementation Details

4.2.5. Pseudocode of the Proposed Network

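Algorithm 1. The PyTorch-like pseudocode of SCPN.

```python
import torch
import torch.nn.functional as F

def mask(v):
    """Reshape a (C,) selection vector to broadcast over an (N, C, H, W) feature map."""
    return v.view(1, -1, 1, 1)

def position(v):
    """Indices of the channels whose selection entry equals 1."""
    return torch.nonzero(v == 1).flatten()

### The basic module SCPM of SCPN.
def SCPM(input):
    if model.training:
        # matrixK[0] marks channels passed to the next convolution,
        # matrixK[1] marks channels retained for the final fusion.
        c1 = F.relu(conv1(input))
        c1_0 = c1 * mask(matrix1[0]); c1_1 = c1 * mask(matrix1[1])
        c2 = F.relu(conv2(c1_0))
        c2_0 = c2 * mask(matrix2[0]); c2_1 = c2 * mask(matrix2[1])
        c3 = F.relu(conv3(c2_0))
        c3_0 = c3 * mask(matrix3[0]); c3_1 = c3 * mask(matrix3[1])
        c4 = F.relu(conv4(c3_0))  # only the selected channels of c3 are processed
        c_out = c1_1 + c2_1 + c3_1 + c4
        out = conv5(CCA(c_out)) + input
        return out
    else:
        # Inference: drop the unselected channels instead of zeroing them.
        pos1_0 = position(matrix1[0]); pos1_1 = position(matrix1[1])
        pos2_0 = position(matrix2[0]); pos2_1 = position(matrix2[1])
        pos3_0 = position(matrix3[0]); pos3_1 = position(matrix3[1])
        c1 = F.relu(F.conv2d(input, conv1.weight, conv1.bias, padding=conv1.padding))
        c1_0 = c1[:, pos1_0]; c1_1 = c1[:, pos1_1]
        # slice the weights along the input-channel dimension (dim 1)
        c2 = F.relu(F.conv2d(c1_0, conv2.weight[:, pos1_0], conv2.bias, padding=conv2.padding))
        c2_0 = c2[:, pos2_0]; c2_1 = c2[:, pos2_1]
        c3 = F.relu(F.conv2d(c2_0, conv3.weight[:, pos2_0], conv3.bias, padding=conv3.padding))
        c3_0 = c3[:, pos3_0]; c3_1 = c3[:, pos3_1]
        c4 = F.relu(F.conv2d(c3_0, conv4.weight[:, pos3_0], conv4.bias, padding=conv4.padding))
        # add the retained channels back at their original channel positions
        c_out = c4.clone()
        c_out[:, pos1_1] += c1_1
        c_out[:, pos2_1] += c2_1
        c_out[:, pos3_1] += c3_1
        out = conv5(CCA(c_out)) + input
        return out

### The deep feature extraction part, which contains 6 SCPMs.
def P_DFE(input):
    out1 = SCPM1(input)
    out2 = SCPM2(out1)
    out3 = SCPM3(out2)
    out4 = SCPM4(out3)
    out5 = SCPM5(out4)
    out6 = SCPM6(out5)
    return out6

### The whole SCPN model.
if model.training:
    F0 = P_SFE(LR)        # shallow feature extraction
    F1 = P_DFE(F0)        # deep feature extraction
    SR = P_UP(F0 + F1)    # upsampling reconstruction
    loss = torch.abs(HR - SR).sum() / (h * w)  # L1 loss
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
else:
    F0 = P_SFE(LR)
    F1 = P_DFE(F0)
    SR = P_UP(F0 + F1)
    imshow(SR)
```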
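The training and inference branches of Algorithm 1 are meant to compute the same function, with the inference branch skipping the work on unselected channels. The reduced NumPy sketch below checks that equivalence under simplifying assumptions that are not from the paper: 1×1 convolutions written with `np.einsum`, two stages instead of four, a hand-picked selection vector, and no CCA, `conv5`, or residual connection. All names are illustrative.

```python
import numpy as np

def conv1x1(x, w, b):
    """1x1 convolution on a (C_in, H, W) map with weights (C_out, C_in) and bias (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

rng = np.random.default_rng(0)
C, H, W = 8, 4, 4
x = rng.standard_normal((C, H, W))
w1, b1 = rng.standard_normal((C, C)), rng.standard_normal(C)
w2, b2 = rng.standard_normal((C, C)), rng.standard_normal(C)

# Hypothetical learned selection: row 0 = process next, row 1 = retain.
process = np.array([1, 0, 1, 1, 0, 0, 1, 0], dtype=float)
matrix = np.stack([process, 1.0 - process])

# Training-style branch: zero channels with masks, keep full-width tensors.
c1 = relu(conv1x1(x, w1, b1))
c1_0 = c1 * matrix[0][:, None, None]
c1_1 = c1 * matrix[1][:, None, None]
c2 = relu(conv1x1(c1_0, w2, b2))
out_train = c1_1 + c2

# Inference-style branch: physically drop the unselected channels.
pos0 = np.flatnonzero(matrix[0] == 1)
pos1 = np.flatnonzero(matrix[1] == 1)
c1_inf = relu(conv1x1(x, w1, b1))
c2_inf = relu(conv1x1(c1_inf[pos0], w2[:, pos0], b2))  # only len(pos0) input channels
out_infer = c2_inf.copy()
out_infer[pos1] += c1_inf[pos1]                         # add retained channels back in place

print(np.allclose(out_train, out_infer))
print("conv2 MACs per pixel:", C * C, "->", C * len(pos0))
```

The same reasoning carries over to k×k kernels with zero padding: a convolution sums contributions over input channels, so an all-zero input channel adds nothing, and dropping it together with its weight slice leaves the output unchanged.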

5. Experiments on General Images

5.1. Datasets and Evaluation Metrics

5.2. Training Details

5.3. Effectiveness of Selective Channel Processing Strategy

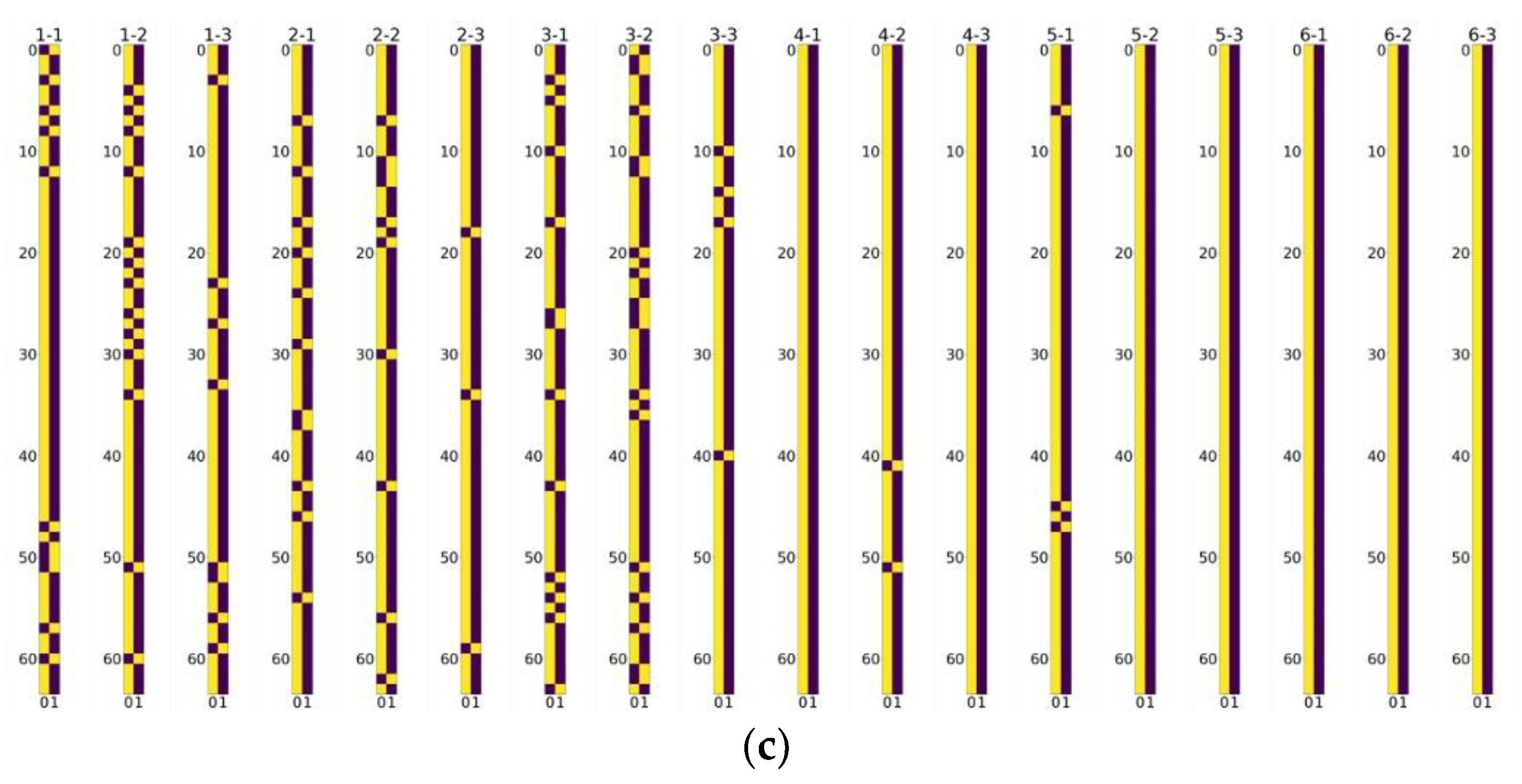

5.4. Visualization of Channel Selection Matrices

5.5. Quantitative Evaluation and Visual Comparison

5.5.1. Quantitative Results

5.5.2. Qualitative Results

6. Remote Sensing Image Super-Resolution

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Anwar, S.; Khan, S.; Barnes, N. A Deep Journey into Super-resolution: A Survey. ACM Comput. Surv. 2020, 53, 34.

- Xie, C.; Zeng, W.L.; Lu, X.B. Fast Single-Image Super-Resolution via Deep Network With Component Learning. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3473–3486.

- Dong, X.; Xi, Z.; Sun, X.; Gao, L. Transferred Multi-Perception Attention Networks for Remote Sensing Image Super-Resolution. Remote Sens. 2019, 11, 2857.

- Gu, J.; Sun, X.; Zhang, Y.; Fu, K.; Wang, L. Deep Residual Squeeze and Excitation Network for Remote Sensing Image Super-Resolution. Remote Sens. 2019, 11, 1817.

- Li, L.; Zhang, S.; Jiao, L.; Liu, F.; Yang, S.; Tang, X. Semi-Coupled Convolutional Sparse Learning for Image Super-Resolution. Remote Sens. 2019, 11, 2593.

- Li, X.; Zhang, L.; You, J. Domain Transfer Learning for Hyperspectral Image Super-Resolution. Remote Sens. 2019, 11, 694.

- Wang, Y.; Zhao, L.; Liu, L.; Hu, H.; Tao, W. URNet: A U-Shaped Residual Network for Lightweight Image Super-Resolution. Remote Sens. 2021, 13, 3848.

- Xie, C.; Zeng, W.L.; Jiang, S.Q.; Lu, X.B. Multiscale self-similarity and sparse representation based single image super-resolution. Neurocomputing 2017, 260, 92–103.

- Keys, R. Cubic convolution interpolation for digital image processing. IEEE Trans. Acoust. Speech Signal Process. 1981, 29, 1153–1160.

- Lin, Z.C.; Shum, H.Y. Fundamental limits of reconstruction-based superresolution algorithms under local translation. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 83–97.

- Freeman, W.T.; Jones, T.R.; Pasztor, E.C. Example-based super-resolution. IEEE Comput. Graph. Appl. 2002, 22, 56–65.

- Freeman, W.T.; Pasztor, E.C.; Carmichael, O.T. Learning low-level vision. Int. J. Comput. Vis. 2000, 40, 25–47.

- Park, S.C.; Park, M.K.; Kang, M.G. Super-resolution image reconstruction: A technical overview. IEEE Signal Process. Mag. 2003, 20, 21–36.

- Irani, M.; Peleg, S. Super Resolution from Image Sequences. In Proceedings of the 10th International Conference on Pattern Recognition, Atlantic City, NJ, USA, 16–21 June 1990; pp. 115–120.

- Irani, M.; Peleg, S. Improving resolution by image registration. CVGIP Graph. Models Image Process. 1991, 53, 231–239.

- Irani, M.; Peleg, S. Image Sequence Enhancement Using Multiple Motions Analysis; Hebrew University of Jerusalem, Leibniz Center for Research in Computer: Jerusalem, Israel, 1991.

- Irani, M.; Peleg, S. Motion analysis for image enhancement: Resolution, occlusion, and transparency. J. Vis. Commun. Image Represent. 1993, 4, 324–335.

- Yan, X.A.; Liu, Y.; Xu, Y.D.; Jia, M.P. Multistep forecasting for diurnal wind speed based on hybrid deep learning model with improved singular spectrum decomposition. Energy Convers. Manag. 2020, 225, 113456.

- Yan, X.A.; Liu, Y.; Xu, Y.D.; Jia, M.P. Multichannel fault diagnosis of wind turbine driving system using multivariate singular spectrum decomposition and improved Kolmogorov complexity. Renew. Energy 2021, 170, 724–748.

- Chang, H.; Yeung, D.-Y.; Xiong, Y. Super-Resolution through Neighbor Embedding. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2004, Washington, DC, USA, 27 June–2 July 2004.

- Xie, C.; Zeng, W.L.; Jiang, S.Q.; Lu, X.B. Bidirectionally Aligned Sparse Representation for Single Image Super-Resolution. Multimed. Tools Appl. 2018, 77, 7883–7907.

- Timofte, R.; De Smet, V.; Van Gool, L. A+: Adjusted Anchored Neighborhood Regression for Fast Super-Resolution. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 111–126.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual Dense Network for Image Super-Resolution. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision ECCV, Munich, Germany, 8–14 September 2018.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Ahn, N.; Kang, B.; Sohn, K.A. Fast, Accurate, and Lightweight Super-Resolution with Cascading Residual Network. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 256–272.

- Hui, Z.; Wang, X.M.; Gao, X.B. Fast and Accurate Single Image Super-Resolution via Information Distillation Network. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 723–731.

- Hui, Z.; Gao, X.B.; Yang, Y.C.; Wang, X.M. Lightweight Image Super-Resolution with Information Multi-distillation Network. In Proceedings of the 27th ACM International Conference on Multimedia (MM), Nice, France, 21–25 October 2019; pp. 2024–2032.

- Wang, L.G.; Dong, X.Y.; Wang, Y.Q.; Ying, X.Y.; Lin, Z.P.; An, W.; Guo, Y.L. Exploring Sparsity in Image Super-Resolution for Efficient Inference. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 4915–4924.

- Si, W.; Xiong, J.; Huang, Y.P.; Jiang, X.S.; Hu, D. Quality Assessment of Fruits and Vegetables Based on Spatially Resolved Spectroscopy: A Review. Foods 2022, 11, 1198.

- Yan, X.A.; Liu, Y.; Jia, M.P. Multiscale cascading deep belief network for fault identification of rotating machinery under various working conditions. Knowl.-Based Syst. 2020, 193, 105484.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Dai, T.; Cai, J.; Zhang, Y.-B.; Xia, S.; Zhang, L. Second-Order Attention Network for Single Image Super-Resolution. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11057–11066.

- Liu, J.; Zhang, W.; Tang, Y.; Tang, J.; Wu, G. Residual feature aggregation network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2359–2368.

- Zhang, Y.; Li, K.; Li, K.; Zhong, B.; Fu, Y. Residual Non-local Attention Networks for Image Restoration. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

- Mei, Y.; Fan, Y.; Zhou, Y.; Huang, L.; Huang, T.; Shi, H. Image Super-Resolution With Cross-Scale Non-Local Attention and Exhaustive Self-Exemplars Mining. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5689–5698.

- Liang, J.Y.; Cao, J.Z.; Sun, G.L.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Virtual, 11–17 October 2021; pp. 1833–1844.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Wu, Z.X.; Nagarajan, T.; Kumar, A.; Rennie, S.; Davis, L.S.; Grauman, K.; Feris, R. BlockDrop: Dynamic Inference Paths in Residual Networks. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8817–8826.

- Mullapudi, R.T.; Mark, W.R.; Shazeer, N.; Fatahalian, K. HydraNets: Specialized Dynamic Architectures for Efficient Inference. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8080–8089.

- Figurnov, M.; Collins, M.D.; Zhu, Y.K.; Zhang, L.; Huang, J.; Vetrov, D.; Salakhutdinov, R. Spatially Adaptive Computation Time for Residual Networks. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1790–1799.

- Liu, M.; Zhang, Z.; Hou, L.; Zuo, W.; Zhang, L. Deep adaptive inference networks for single image super-resolution. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 131–148.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the 23rd British Machine Vision Conference, University of Surrey, Guildford, UK, 3–7 September 2012.

- Jang, E.; Gu, S.; Poole, B. Categorical reparameterization with Gumbel-Softmax. arXiv 2016, arXiv:1611.01144.

- Agustsson, E.; Timofte, R. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

- Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In Proceedings of the International Conference on Curves and Surfaces, Avignon, France, 24–30 June 2010; pp. 711–730.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; pp. 416–423.

- Huang, J.-B.; Singh, A.; Ahuja, N. Single Image Super-Resolution from Transformed Self-Exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

- Fujimoto, A.; Ogawa, T.; Yamamoto, K.; Matsui, Y.; Yamasaki, T.; Aizawa, K. Manga109 dataset and creation of metadata. In Proceedings of the 1st International Workshop on coMics ANalysis, Processing and Understanding, Cancun, Mexico, 4 December 2016.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the Super-Resolution Convolutional Neural Network. In Proceedings of the European Conference on Computer Vision ECCV, Amsterdam, The Netherlands, 11–14 October 2016.

- Kim, J.; Lee, J.K.; Lee, K.M. Deeply-Recursive Convolutional Network for Image Super-Resolution. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1637–1645.

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5835–5843.

- Li, Z.; Yang, J.L.; Liu, Z.; Yang, X.M.; Jeon, G.; Wu, W. Feedback Network for Image Super-Resolution. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3862–3871.

- Yang, Y.; Newsam, S. Bag-of-Visual-Words and Spatial Extensions for Land-Use Classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- Ma, Y.C.A.; Lv, P.Y.; Liu, H.; Sun, X.H.; Zhong, Y.F. Remote Sensing Image Super-Resolution Based on Dense Channel Attention Network. Remote Sens. 2021, 13, 2966.

- Cheng, G.; Han, J.W.; Lu, X.Q. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

- Xie, C.; Zhu, H.Y.; Fei, Y.Q. Deep coordinate attention network for single image super-resolution. IET Image Process. 2022, 16, 273–284.

- Wu, Q.; Zhang, H.R.; Zhao, W.; Zhao, X.L. Shape Optimum Design by Basis Vector Method Considering Partial Shape Dependence. Appl. Sci. 2020, 10, 7848.

- Xiong, T.Y.; Gu, Z. Observer-Based Fixed-Time Consensus Control for Nonlinear Multi-Agent Systems Subjected to Measurement Noises. IEEE Access 2020, 8, 174191–174199.

- Zhang, Y.Y.; Jiang, L.; Yang, W.X.; Ma, C.B.; Yu, Q.P. Investigations of Adhesion under Different Slider-Lube/Disk Contact States at the Head-Disk Interface. Appl. Sci. 2020, 10, 5899.

| Model | Parameters | FLOPs | PSNR (dB) |
|---|---|---|---|
| Network with Module-A | 1.01 M | 115.5 G | 28.56 |
| Network with Module-B | 0.72 M | 82.0 G | 28.56 |
| SCPN | 0.95 M | 115.3 G | 28.60 |

| Method | Scale | #Params | Set5 (PSNR/SSIM) | Set14 (PSNR/SSIM) | B100 (PSNR/SSIM) | Urban100 (PSNR/SSIM) | Manga109 (PSNR/SSIM) |
|---|---|---|---|---|---|---|---|
| Bicubic [9] | ×2 | - | 33.66/0.9299 | 30.24/0.8688 | 29.56/0.8431 | 26.88/0.8403 | 30.80/0.9339 |
| SRCNN [23] | ×2 | 8 K | 36.66/0.9542 | 32.45/0.9067 | 31.36/0.8879 | 29.50/0.8946 | 35.60/0.9663 |
| FSRCNN [57] | ×2 | 13 K | 37.05/0.9560 | 32.66/0.9090 | 31.53/0.8920 | 29.88/0.9020 | 36.67/0.9710 |
| VDSR [24] | ×2 | 665 K | 37.53/0.9590 | 33.05/0.9130 | 31.90/0.8960 | 30.77/0.9140 | 37.22/0.9750 |
| DRCN [58] | ×2 | 1774 K | 37.63/0.9588 | 33.04/0.9118 | 31.85/0.8942 | 30.75/0.9133 | 37.55/0.9732 |
| LapSRN [59] | ×2 | 813 K | 37.52/0.9591 | 33.08/0.9130 | 31.08/0.8950 | 30.41/0.9101 | 37.27/0.9740 |
| SRFBN-S [60] | ×2 | 282 K | 37.78/0.9597 | 33.35/0.9156 | 32.00/0.8970 | 31.41/0.9207 | 38.06/0.9757 |
| CARN [30] | ×2 | 1592 K | 37.76/0.9590 | 33.52/0.9166 | 32.09/0.8978 | 31.92/0.9256 | 38.36/0.9765 |
| IDN [31] | ×2 | 553 K | 37.83/0.9600 | 33.30/0.9148 | 32.08/0.8985 | 31.27/0.9196 | 38.01/0.9749 |
| IMDN [32] | ×2 | 694 K | 38.00/0.9605 | 33.54/0.9172 | 32.16/0.8994 | 32.09/0.9279 | 38.73/0.9771 |
| SCPN (ours) | ×2 | 938 K | 38.08/0.9607 | 33.65/0.9177 | 32.19/0.89967 | 32.23/0.9288 | 38.89/0.9774 |
| Bicubic [9] | ×3 | - | 30.39/0.8682 | 27.55/0.7742 | 27.21/0.7385 | 24.46/0.7349 | 26.95/0.8556 |
| SRCNN [23] | ×3 | 8 K | 32.75/0.9090 | 29.30/0.8215 | 28.41/0.7863 | 26.24/0.7989 | 30.48/0.9117 |
| FSRCNN [57] | ×3 | 13 K | 33.18/0.9140 | 29.37/0.8240 | 28.53/0.7910 | 26.43/0.8080 | 31.10/0.9210 |
| VDSR [24] | ×3 | 665 K | 33.66/0.9213 | 29.77/0.8314 | 28.82/0.7976 | 27.14/0.8279 | 32.01/0.9340 |
| DRCN [58] | ×3 | 1774 K | 33.82/0.9226 | 29.76/0.8311 | 28.80/0.7963 | 27.15/0.8276 | 32.24/0.9343 |
| LapSRN [59] | ×3 | 502 K | 33.81/0.9220 | 29.79/0.8325 | 28.82/0.7980 | 27.07/0.8275 | 32.21/0.9350 |
| SRFBN-S [60] | ×3 | 375 K | 34.20/0.9255 | 30.10/0.8372 | 28.96/0.8010 | 27.66/0.8415 | 33.02/0.9404 |
| CARN [30] | ×3 | 1592 K | 34.29/0.9255 | 30.29/0.8407 | 29.06/0.8034 | 28.06/0.8493 | 33.50/0.9440 |
| IDN [31] | ×3 | 553 K | 34.11/0.9253 | 29.99/0.8354 | 28.95/0.8013 | 27.42/0.8359 | 32.71/0.9381 |
| IMDN [32] | ×3 | 703 K | 34.42/0.9275 | 30.25/0.8401 | 29.06/0.8041 | 28.12/0.8507 | 33.49/0.9440 |
| SCPN (ours) | ×3 | 934 K | 34.44/0.9275 | 30.33/0.8420 | 29.09/0.8046 | 28.18/0.8522 | 33.62/0.9445 |
| Bicubic [9] | ×4 | - | 28.42/0.8104 | 26.00/0.7027 | 25.96/0.6675 | 23.14/0.6577 | 24.89/0.7866 |
| SRCNN [23] | ×4 | 8 K | 30.48/0.8628 | 27.50/0.7513 | 26.90/0.7101 | 24.52/0.7221 | 27.58/0.8555 |
| FSRCNN [57] | ×4 | 13 K | 30.72/0.8660 | 27.61/0.7550 | 26.98/0.7150 | 24.62/0.7280 | 27.90/0.8610 |
| VDSR [24] | ×4 | 665 K | 31.35/0.8838 | 28.01/0.7674 | 27.29/0.7251 | 25.18/0.7524 | 28.83/0.8870 |
| DRCN [58] | ×4 | 1774 K | 31.53/0.8854 | 28.02/0.7670 | 27.23/0.7233 | 25.14/0.7510 | 28.93/0.8854 |
| LapSRN [59] | ×4 | 502 K | 31.54/0.8850 | 28.19/0.7720 | 27.32/0.7270 | 25.21/0.7560 | 29.09/0.8900 |
| SRFBN-S [60] | ×4 | 483 K | 31.98/0.8923 | 28.45/0.7779 | 27.44/0.7313 | 25.71/0.7719 | 29.91/0.9008 |
| CARN [30] | ×4 | 1592 K | 32.13/0.8937 | 28.60/0.7806 | 27.58/0.7349 | 26.07/0.7837 | 30.47/0.9084 |
| IDN [31] | ×4 | 553 K | 31.82/0.8903 | 28.25/0.7730 | 27.41/0.7297 | 25.41/0.7632 | 29.41/0.8942 |
| IMDN [32] | ×4 | 715 K | 32.19/0.8943 | 28.56/0.7807 | 27.53/0.7345 | 26.02/0.7825 | 30.32/0.9057 |
| SCPN (ours) | ×4 | 952 K | 32.20/0.8948 | 28.60/0.7819 | 27.55/0.7354 | 26.10/0.7857 | 30.49/0.9080 |
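The table reports PSNR (dB) and SSIM, but the evaluation protocol is not spelled out here. A convention widely used in SR benchmarks of this kind is to compare images on the luminance (Y) channel of YCbCr after cropping a border of `scale` pixels. The NumPy sketch below implements PSNR under that assumption; it is not necessarily the script behind these numbers, the function names are hypothetical, and SSIM is omitted.

```python
import numpy as np

def rgb_to_y(img):
    """Luma of an RGB image in [0, 255] using BT.601 coefficients
    (as in MATLAB's rgb2ycbcr, without its final rounding)."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0

def psnr(a, b, shave=0, peak=255.0):
    """PSNR in dB between two single-channel images, ignoring a `shave`-pixel border."""
    if shave > 0:
        a = a[shave:-shave, shave:-shave]
        b = b[shave:-shave, shave:-shave]
    mse = np.mean((a.astype(np.float64) - b.astype(np.float64)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

def psnr_y(sr_rgb, hr_rgb, scale):
    """PSNR on the Y channel with a border of `scale` pixels removed."""
    return psnr(rgb_to_y(sr_rgb), rgb_to_y(hr_rgb), shave=scale)

rng = np.random.default_rng(0)
hr = rng.integers(0, 256, size=(32, 32, 3)).astype(np.float64)
sr = np.clip(hr + rng.normal(0.0, 2.0, size=hr.shape), 0, 255)  # synthetic "SR" output
print(f"PSNR-Y (x2): {psnr_y(sr, hr, scale=2):.2f} dB")
```

Cropping the border matters because the outermost pixels of an upscaled image are poorly constrained by the LR input, so including them would penalize methods for boundary effects rather than reconstruction quality.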

| Method | UCTest PSNR (dB) | UCTest SSIM | RESISCTest PSNR (dB) | RESISCTest SSIM |
|---|---|---|---|---|
| Bicubic | 26.77 | 0.6968 | 26.43 | 0.6300 |
| IMDN-T | 29.19 | 0.7920 | 26.44 | 0.6369 |
| SCPN-T | 29.32 | 0.7961 | 26.53 | 0.6406 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, H.; Tang, H.; Hu, Y.; Tao, H.; Xie, C. Lightweight Single Image Super-Resolution with Selective Channel Processing Network. Sensors 2022, 22, 5586. https://doi.org/10.3390/s22155586


Chicago/Turabian style: Zhu, Hongyu, Hao Tang, Yaocong Hu, Huanjie Tao, and Chao Xie. 2022. "Lightweight Single Image Super-Resolution with Selective Channel Processing Network." Sensors 22, no. 15: 5586. https://doi.org/10.3390/s22155586