LLDNet: A Lightweight Lane Detection Approach for Autonomous Cars Using Deep Learning

Abstract

1. Introduction

- Developing a lightweight, accurate CNN model for lane detection.

- Assessing the numerical results of the proposed model and comparing them with other state-of-the-art methods.

- Testing the model’s performance in real-life scenarios on both structured and defective roads in Bangladesh.

2. Literature Review

2.1. Traditional Methods

2.2. Deep Learning-Based Methods

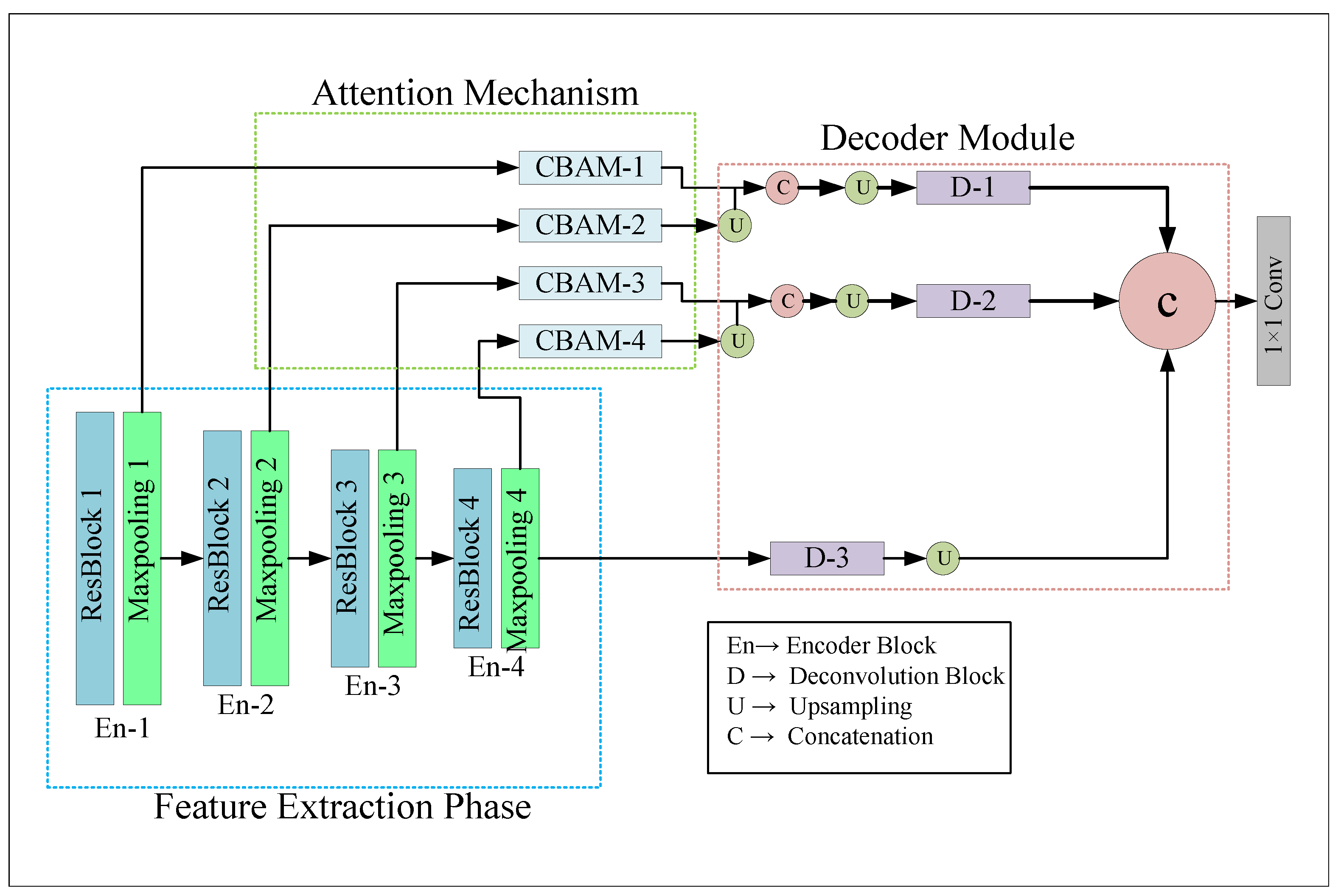

3. Proposed Model

3.1. Encoder Module

3.2. Attention Mechanism

3.2.1. Channel Attention Module
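The extracted text names this module but gives no details. As an illustration only, the sketch below assumes the channel attention of the Convolutional Block Attention Module (CBAM) of Woo et al.: the feature map is squeezed by global average pooling and global max pooling, both descriptors pass through a shared two-layer MLP, and the sigmoid of their sum rescales each channel. The reduction ratio `r`, the random weights and the function name `channel_attention` are hypothetical; the actual layer sizes used in LLDNet are not stated here.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w0, w1):
    """CBAM-style channel attention (assumed formulation) on one feature map.

    feat: (H, W, C) feature map
    w0:   (C, C // r) weights of the shared MLP's hidden layer
    w1:   (C // r, C) weights of the shared MLP's output layer
    Returns the refined map (H, W, C) and the per-channel weights (C,).
    """
    avg_desc = feat.mean(axis=(0, 1))  # (C,) global average pooling over H, W
    max_desc = feat.max(axis=(0, 1))   # (C,) global max pooling over H, W

    def shared_mlp(v):
        # The same two layers are applied to both descriptors, ReLU in between.
        return np.maximum(v @ w0, 0.0) @ w1

    weights = sigmoid(shared_mlp(avg_desc) + shared_mlp(max_desc))  # (C,)
    return feat * weights, weights  # weights broadcast over H and W


# Usage with hypothetical sizes: an 8 x 8 map with 16 channels, r = 4.
rng = np.random.default_rng(0)
H, W, C, r = 8, 8, 16, 4
feat = rng.standard_normal((H, W, C))
w0 = rng.standard_normal((C, C // r)) * 0.1
w1 = rng.standard_normal((C // r, C)) * 0.1
refined, weights = channel_attention(feat, w0, w1)
print(refined.shape, weights.shape)  # (8, 8, 16) (16,)
```

Sharing one MLP between the two pooled descriptors keeps the parameter cost at roughly 2C²/r weights, which fits the lightweight goal of the model.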

3.2.2. Spatial Attention Module
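Assuming, again for illustration only, the spatial attention of CBAM (Woo et al.), the module pools the feature map along the channel axis with both average and max pooling, stacks the two maps, filters them with a single k × k kernel (k = 7 in CBAM) and applies a sigmoid to obtain one weight per pixel. In CBAM this module is applied to the output of the channel attention module. The explicit loop is a deliberately plain NumPy sketch that computes cross-correlation, as deep-learning "convolution" layers do; the function name and kernel values are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def spatial_attention(feat, kernel):
    """CBAM-style spatial attention (assumed formulation).

    feat:   (H, W, C) feature map
    kernel: (k, k, 2) filter weights, k odd (CBAM uses k = 7)
    Returns the refined map (H, W, C) and the per-pixel weights (H, W).
    """
    avg_map = feat.mean(axis=2)                    # (H, W) average over channels
    max_map = feat.max(axis=2)                     # (H, W) max over channels
    desc = np.stack([avg_map, max_map], axis=-1)   # (H, W, 2)

    k = kernel.shape[0]
    pad = k // 2
    # Zero padding keeps the output the same size as the input ("same" padding).
    padded = np.pad(desc, ((pad, pad), (pad, pad), (0, 0)))
    H, W = avg_map.shape
    logits = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            logits[i, j] = np.sum(padded[i:i + k, j:j + k, :] * kernel)

    weights = sigmoid(logits)                      # (H, W), values in (0, 1)
    return feat * weights[..., None], weights      # broadcast over channels


# Usage with a hypothetical random 7 x 7 kernel.
rng = np.random.default_rng(1)
feat = rng.standard_normal((8, 8, 16))
kernel = rng.standard_normal((7, 7, 2)) * 0.1
refined, weights = spatial_attention(feat, kernel)
print(refined.shape, weights.shape)  # (8, 8, 16) (8, 8)
```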

3.3. Decoder Module
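Assuming, as is common in encoder-decoder segmentation networks, that the decoder upsamples feature maps with transposed convolutions (the operation behind TensorFlow's `tf.keras.layers.Conv2DTranspose`), the sketch below shows the core scatter-add computation for one input channel and one output channel with "valid" padding. Each input pixel adds a scaled copy of the kernel to the output, so each output side has length (H - 1) · stride + k. The function name and the single-channel restriction are simplifications for illustration, not the paper's decoder.

```python
import numpy as np


def conv2d_transpose(x, kernel, stride):
    """Single-channel 2-D transposed convolution, "valid" padding (illustrative).

    x:      (H, W) input map
    kernel: (k, k) filter weights
    Output shape: ((H - 1) * stride + k, (W - 1) * stride + k)
    """
    H, W = x.shape
    k = kernel.shape[0]
    out = np.zeros(((H - 1) * stride + k, (W - 1) * stride + k))
    for i in range(H):
        for j in range(W):
            # Scatter a copy of the kernel, scaled by the input pixel;
            # overlapping copies are summed.
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out


# Usage: stride 2 with a 2 x 2 kernel exactly doubles the resolution.
up = conv2d_transpose(np.ones((2, 2)), np.ones((2, 2)), stride=2)
print(up.shape)  # (4, 4)
```

With a kernel larger than the stride (for example k = 3, stride 2) neighbouring copies overlap and are summed; Keras' `padding="same"` option instead crops the result to exactly H · stride.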

4. Experiments and Results

4.1. Dataset

4.2. Implementation Details

4.3. Performance & Robustness of the Model

4.3.1. Quantitative Results

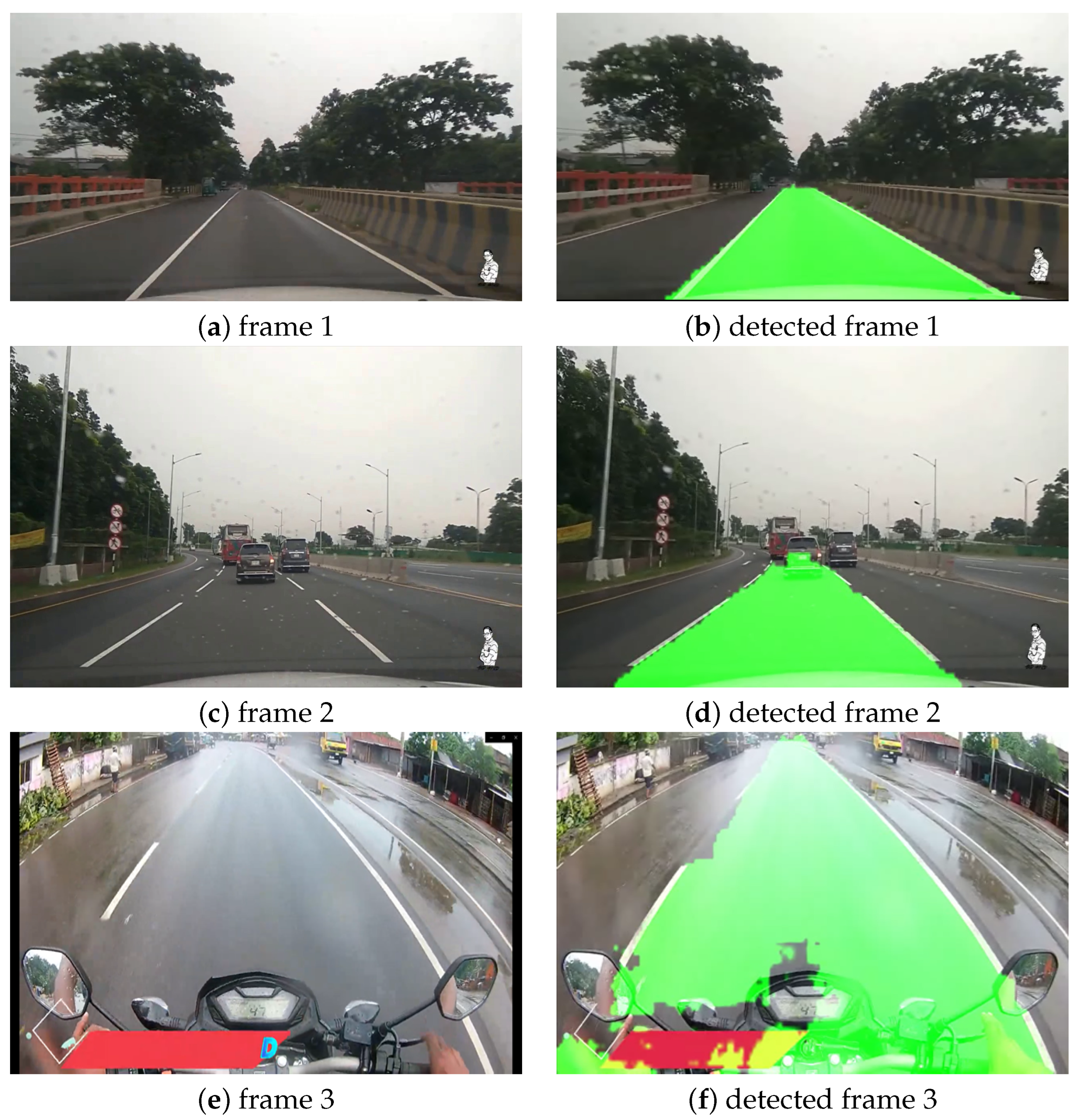

4.3.2. Qualitative Results

- Perfect road with normal weather condition;

- Curvy road condition;

- Rainy condition;

- Night condition;

- Defective pavement and occluded lane line condition.

Perfect Road with Normal Weather Condition

Curvy Road Condition

Rainy Weather Condition

Night Condition

Defective Pavement and Occluded Lane Line Condition

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Accuracy (%) | Dice Coefficient (%) | IoU (%) | Dice Loss (%) | Number of Parameters (Millions) | File Size (MB) |
|---|---|---|---|---|---|---|
| PSPNet | 95.89 | 95.46 | 94.82 | 5.01 | 0.33 | 4.08 |
| U-net | 96.27 | 98.02 | 96.98 | 1.98 | 1.94 | 22.97 |
| FCN | 96.30 | 98.13 | 97.19 | 1.87 | 1.37 | 16.35 |
| Ours | 96.31 | 98.18 | 97.33 | 1.82 | 0.26 | 1.88 |
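The four quality columns are standard pixel-wise segmentation metrics. The sketch below uses the textbook definitions on flattened binary lane masks: accuracy = (TP + TN) / N, Dice = 2TP / (2TP + FP + FN), IoU = TP / (TP + FP + FN), and Dice loss = 1 − Dice. That last relation is consistent with the U-net, FCN and "Ours" rows, where Dice loss equals 100 minus the Dice coefficient. Whether the authors averaged per image or over the whole test set, or added a smoothing term, is not stated here, so those details (and the convention of scoring two empty masks as a perfect match) are assumptions.

```python
def segmentation_metrics(pred, target):
    """Pixel-wise metrics for binary masks given as equal-length sequences of 0/1."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    tn = len(pred) - tp - fp - fn  # valid because values are only 0 or 1

    accuracy = (tp + tn) / len(pred)
    # Assumed convention: if neither mask contains lane pixels, score a perfect match.
    union_terms = tp + fp + fn
    dice = 2 * tp / (2 * tp + fp + fn) if union_terms else 1.0
    iou = tp / union_terms if union_terms else 1.0
    return {"accuracy": accuracy, "dice": dice, "iou": iou, "dice_loss": 1.0 - dice}


# One true positive, one false positive, two true negatives.
m = segmentation_metrics([1, 1, 0, 0], [1, 0, 0, 0])
print({name: round(value, 3) for name, value in m.items()})
# {'accuracy': 0.75, 'dice': 0.667, 'iou': 0.5, 'dice_loss': 0.333}
```

Note that accuracy is dominated by the many background pixels in a lane image, which is why Dice and IoU are the more discriminating columns.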

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khan, M.A.-M.; Haque, M.F.; Hasan, K.R.; Alajmani, S.H.; Baz, M.; Masud, M.; Nahid, A.-A. LLDNet: A Lightweight Lane Detection Approach for Autonomous Cars Using Deep Learning. Sensors 2022, 22, 5595. https://doi.org/10.3390/s22155595

Khan MA-M, Haque MF, Hasan KR, Alajmani SH, Baz M, Masud M, Nahid A-A. LLDNet: A Lightweight Lane Detection Approach for Autonomous Cars Using Deep Learning. Sensors. 2022; 22(15):5595. https://doi.org/10.3390/s22155595

Chicago/Turabian Style: Khan, Md. Al-Masrur, Md Foysal Haque, Kazi Rakib Hasan, Samah H. Alajmani, Mohammed Baz, Mehedi Masud, and Abdullah-Al Nahid. 2022. "LLDNet: A Lightweight Lane Detection Approach for Autonomous Cars Using Deep Learning" Sensors 22, no. 15: 5595. https://doi.org/10.3390/s22155595

APA Style: Khan, M. A.-M., Haque, M. F., Hasan, K. R., Alajmani, S. H., Baz, M., Masud, M., & Nahid, A.-A. (2022). LLDNet: A Lightweight Lane Detection Approach for Autonomous Cars Using Deep Learning. Sensors, 22(15), 5595. https://doi.org/10.3390/s22155595