Research on Positioning Method in Underground Complex Environments Based on Fusion of Binocular Vision and IMU

Abstract

1. Introduction

- (1) The Harris algorithm is combined with pyramidal optical flow tracking to make the visual positioning system more robust. This addresses the front end's difficulty in extracting feature points from weakly textured scenes and its tendency to lose tracking.

- (2) A non-uniform rational B-spline interpolation algorithm converts the discrete IMU data into a continuous, twice-differentiable trajectory so that the visual and inertial information can be aligned. Fusing the visual and inertial data improves resistance to dynamic interference.

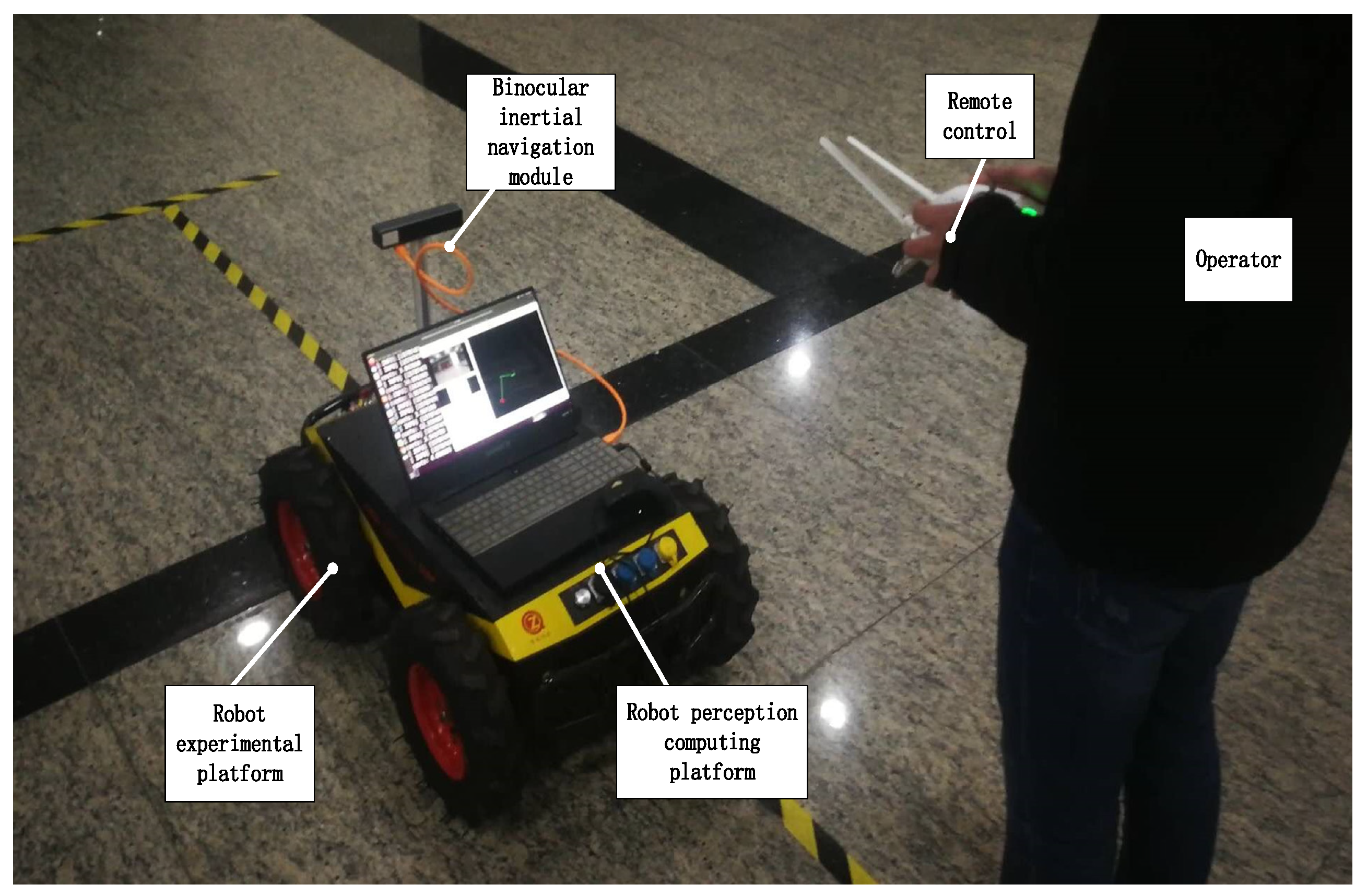

2. Hardware Framework

3. Core Algorithm Design of Binocular Vision and Inertial Navigation Fusion

- (1) A study of traditional visual odometry shows that a front end based on ORB feature points is not suitable for weakly textured environments. The improved Harris algorithm, which is better at extracting corner points, lets the front end extract more feature points from weak textures.

- (2) Because the inertial navigation system samples at a high frequency, its data are difficult to align and fuse with the low-frequency visual data. To address this, we first analyzed the factors that contribute to inertial navigation error and simplified the inertial navigation model to reduce the computational cost. Second, a non-uniform rational B-spline algorithm was used to convert the high-frequency discrete IMU data into a continuous, twice-differentiable trajectory that is convenient to fuse with visual information.

- (3) For the fusion of vision and inertial navigation, four measures were taken. First, because every back-end optimization update would otherwise require recalculating the inertial data in the world coordinate system, the raw inertial data are preprocessed into pre-integrated terms, which reduces repeated calculation; the corresponding Jacobian matrix and covariance are then derived. Second, visual and inertial information are fused in a tightly coupled way to construct the optimization objective function and the state variables. Third, the Levenberg–Marquardt method is introduced to handle ill-conditioned problems in the fusion optimization. Fourth, sliding windows and marginalization are adopted to delete old states and free computing power as the scale of the back-end problem grows.
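The motivation for pre-integration in item (3) can be illustrated with a small planar sketch. It is not the paper's derivation: it assumes a 2D body with a single heading angle, simple Euler integration, no bias terms, and no Jacobian or covariance propagation. The function names (`preintegrate`, `predict`, `integrate_directly`) are hypothetical. The point it shows is that the accumulated deltas are expressed in the frame of the first keyframe, so they can be reused unchanged when the optimizer changes that keyframe's world-frame state.

```python
import math

def rotate(theta, v):
    """Rotate the 2D vector v by the angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def preintegrate(samples, dt):
    """Accumulate relative motion between keyframes i and j.

    samples: list of (gyro_rate, (ax, ay)) measured in the body frame.
    Returns (dtheta, dv, dp, T), expressed in the body frame at keyframe i,
    so the result does not depend on the world-frame state of keyframe i.
    """
    dtheta, dv, dp = 0.0, (0.0, 0.0), (0.0, 0.0)
    for gyro, accel in samples:
        a = rotate(dtheta, accel)  # acceleration seen from frame i
        dp = (dp[0] + dv[0] * dt + 0.5 * a[0] * dt * dt,
              dp[1] + dv[1] * dt + 0.5 * a[1] * dt * dt)
        dv = (dv[0] + a[0] * dt, dv[1] + a[1] * dt)
        dtheta += gyro * dt
    return dtheta, dv, dp, len(samples) * dt

def predict(p_i, v_i, theta_i, preint, gravity):
    """Recover the state at keyframe j from the state at i and the deltas."""
    dtheta, dv, dp, T = preint
    rdv, rdp = rotate(theta_i, dv), rotate(theta_i, dp)
    p_j = tuple(p_i[k] + v_i[k] * T + 0.5 * gravity[k] * T * T + rdp[k]
                for k in range(2))
    v_j = tuple(v_i[k] + gravity[k] * T + rdv[k] for k in range(2))
    return p_j, v_j, theta_i + dtheta

def integrate_directly(p, v, theta, samples, dt, gravity):
    """Reference: integrate every sample in the world frame from scratch."""
    for gyro, accel in samples:
        a = rotate(theta, accel)
        a = (a[0] + gravity[0], a[1] + gravity[1])
        p = (p[0] + v[0] * dt + 0.5 * a[0] * dt * dt,
             p[1] + v[1] * dt + 0.5 * a[1] * dt * dt)
        v = (v[0] + a[0] * dt, v[1] + a[1] * dt)
        theta += gyro * dt
    return p, v, theta
```

Under these assumptions, `predict` reproduces `integrate_directly` for any initial state while touching the raw samples only once, which is the saving the back end relies on when it re-linearizes.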

3.1. Binocular Vision Algorithm

- (1) Harris feature points are extracted from the first frame [13].

- (2) Multilayer (pyramidal) LK optical flow is used to find the corresponding feature points in the second frame [14].

- (3) DLT (direct linear transformation) is used to calculate the pose transformation of the camera from the matched feature point pairs [15].
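Step (1) above can be sketched with the standard Harris corner response, R = det(M) − k·trace(M)², where M is the structure tensor summed over a small window. This is the textbook detector, not the improved variant the paper refers to; the window radius, the constant `k = 0.04`, and the helper `make_square_image` are assumptions chosen for illustration.

```python
def harris_response(img, k=0.04, radius=1):
    """Return the Harris response R = det(M) - k * trace(M)^2 per pixel.

    img: 2D list of grayscale intensities. Border pixels keep a response of 0.
    """
    h, w = len(img), len(img[0])
    # Central-difference image gradients (left at zero on the border).
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    resp = [[0.0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            # Structure tensor M summed over a (2*radius+1)^2 box window.
            sxx = sxy = syy = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    sxy += gx * gy
                    syy += gy * gy
            det = sxx * syy - sxy * sxy
            trace = sxx + syy
            resp[y][x] = det - k * trace * trace
    return resp

def make_square_image(size=12, lo=3, hi=9):
    """Synthetic test image: a bright square on a dark background."""
    return [[1.0 if lo <= y < hi and lo <= x < hi else 0.0
             for x in range(size)] for y in range(size)]

response = harris_response(make_square_image())
```

On the synthetic square, the response is positive at the corners, negative along straight edges, and zero in flat regions, which is why thresholding R selects corner-like points that remain trackable by optical flow.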

- (1) A number of images of the field scene were collected in advance. FAST feature points were extracted from each image, and BRIEF descriptors of the feature points were computed to construct the visual words of the image [17]. DBoW3 was used as the dictionary to store the words [18,19], and the TF-IDF algorithm was used to assign a weight to each word [20].

- (2) Each newly acquired image was then described by words in the BoW dictionary: the frequency of each word was counted, so that the image was converted into a group of words.
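The two steps above can be sketched in a few lines. The example assumes that descriptors have already been quantized into integer word IDs (the FAST/BRIEF extraction and the DBoW3 vocabulary tree are not reproduced), and it compares images with an L1 score of the kind used in the bag-of-binary-words literature. The function names are hypothetical.

```python
import math
from collections import Counter

def idf_weights(training_images):
    """IDF per word: log(N / n_i), where n_i counts training images containing word i."""
    n_images = len(training_images)
    doc_freq = Counter()
    for words in training_images:
        doc_freq.update(set(words))
    return {w: math.log(n_images / n) for w, n in doc_freq.items()}

def bow_vector(words, idf):
    """Convert an image (list of word IDs) into an L1-normalized TF-IDF vector."""
    counts = Counter(w for w in words if w in idf)
    total = sum(counts.values())
    if total == 0:
        return {}
    vec = {w: (c / total) * idf[w] for w, c in counts.items()}
    norm = sum(abs(v) for v in vec.values())
    return {w: v / norm for w, v in vec.items()} if norm > 0 else vec

def similarity(v1, v2):
    """L1 score: 1 for identical normalized vectors, 0 for disjoint ones."""
    keys = set(v1) | set(v2)
    return 1.0 - 0.5 * sum(abs(v1.get(k, 0.0) - v2.get(k, 0.0)) for k in keys)

# Hypothetical word IDs for three pre-collected images.
training = [[0, 1, 1], [1, 2], [2, 3, 3]]
idf = idf_weights(training)
query = bow_vector([0, 1, 1], idf)
```

Words that occur in few training images receive a large IDF weight, so they dominate the score; a word present in every image has weight zero and does not help distinguish places.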

3.2. Inertial Navigation Algorithm

3.2.1. Inertial Error Model

3.2.2. Inertial Derivatives Calculated

3.2.3. Non-Uniform Rational B-Spline
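As a hedged illustration of why a spline gives a twice-differentiable trajectory, the sketch below evaluates a uniform cubic B-spline, which is the special case of a NURBS curve with unit weights and uniformly spaced knots. It is a simplification, not the paper's formulation: it handles one scalar axis, and the control points are approximated rather than interpolated (the step that fits control points to the IMU samples is omitted). The function name `cubic_bspline` is hypothetical.

```python
def cubic_bspline(control, dt, t):
    """Evaluate a uniform cubic B-spline and its second time derivative.

    control: scalar control points spaced dt apart in time.
    t: query time in [0, (len(control) - 3) * dt].
    Returns (value, second_derivative).
    """
    n = len(control)
    if n < 4:
        raise ValueError("need at least four control points")
    i = min(int(t / dt), n - 4)   # index of the active segment
    u = t / dt - i                # local parameter in [0, 1]
    c0, c1, c2, c3 = control[i:i + 4]
    # Cubic B-spline basis functions on one segment.
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u**3 - 6 * u**2 + 4) / 6
    b2 = (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6
    b3 = u**3 / 6
    value = b0 * c0 + b1 * c1 + b2 * c2 + b3 * c3
    # Second derivatives of the basis functions, scaled from u to time.
    second = ((1 - u) * c0 + (3 * u - 2) * c1
              + (1 - 3 * u) * c2 + u * c3) / dt**2
    return value, second
```

Because the second derivative is available at any time `t`, a continuous-time trajectory of this kind can be queried at the camera timestamps, which is what makes alignment with the lower-rate visual data convenient.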

3.3. A Tightly Coupled Visual and Inertial Fusion Algorithm

3.3.1. IMU Data Preprocessing

3.3.2. Construction of Residual Equation

- (a) The state vector

- (b) Inertial constraint

- (c) Visual constraints

- (d) Global objective function

3.3.3. Nonlinear Optimization Solution
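The following is a minimal sketch of Levenberg–Marquardt damping on a toy two-parameter curve fit, standing in for the much larger visual-inertial objective. It is not the paper's solver: the Marquardt diagonal scaling, the factor-of-ten damping schedule, and the exponential model are all assumptions made for illustration.

```python
import math

def levenberg_marquardt(residuals, jacobian, x0, iters=100, lam=1e-3):
    """Minimize sum(r_i^2) over two parameters with a damped Gauss-Newton step."""
    x = list(x0)
    cost = sum(r * r for r in residuals(x))
    for _ in range(iters):
        r, J = residuals(x), jacobian(x)
        # Gauss-Newton normal equations: H = J^T J, g = J^T r.
        h00 = sum(j[0] * j[0] for j in J)
        h01 = sum(j[0] * j[1] for j in J)
        h11 = sum(j[1] * j[1] for j in J)
        g0 = sum(j[0] * ri for j, ri in zip(J, r))
        g1 = sum(j[1] * ri for j, ri in zip(J, r))
        # Damping inflates the diagonal, which keeps the system well conditioned.
        a00, a11 = h00 * (1.0 + lam), h11 * (1.0 + lam)
        det = a00 * a11 - h01 * h01
        if det <= 0.0:
            lam *= 10.0
            continue
        # Solve the damped 2x2 system for the step (d0, d1).
        d0 = (-g0 * a11 + g1 * h01) / det
        d1 = (-g1 * a00 + g0 * h01) / det
        candidate = [x[0] + d0, x[1] + d1]
        new_cost = sum(ri * ri for ri in residuals(candidate))
        if new_cost < cost:
            # Accept the step and trust the quadratic model more.
            x, cost = candidate, new_cost
            lam = max(lam / 10.0, 1e-12)
        else:
            # Reject the step and move toward gradient descent.
            lam *= 10.0
    return x

# Toy problem: fit y = a * exp(b * x) to noise-free samples of a = 2, b = 0.5.
XS = [0.5 * i for i in range(9)]
YS = [2.0 * math.exp(0.5 * x) for x in XS]

def exp_residuals(p):
    return [p[0] * math.exp(p[1] * x) - y for x, y in zip(XS, YS)]

def exp_jacobian(p):
    return [(math.exp(p[1] * x), p[0] * x * math.exp(p[1] * x)) for x in XS]

estimate = levenberg_marquardt(exp_residuals, exp_jacobian, [1.0, 0.3])
```

When a step increases the cost, the damping grows and the update shrinks toward a short gradient-descent step; when steps succeed, the damping decays and the method approaches Gauss-Newton. This adaptive behaviour is what makes the method usable when the undamped normal equations are poorly conditioned.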

3.3.4. Sliding Windows and Marginalization

4. Experimental Results and Analysis

4.1. Experiments on the Dataset

4.2. Experiments in a Simulated Real Scene

4.2.1. Positioning Accuracy Test

4.2.2. Robustness Experiments under Dynamic Disturbances

4.2.3. Robustness Experiments with Missing Textures

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xu, X.Z.; Wang, J.C.; Zhang, L.X. Physics of Frozen Soils; Science Press: Beijing, China, 2001.

2. Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. Robot. Sci. Syst. 2014, 2, 1–9.

3. Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

4. Shan, T.; Englot, B. Lego-loam: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

5. Kim, G.; Kim, A. Scan context: Egocentric spatial descriptor for place recognition within 3d point cloud map. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4802–4809.

6. Shan, T.; Englot, B.; Meyers, D.; Wang, W.; Ratti, C.; Rus, D. Lio-sam: Tightly-coupled lidar inertial odometry via smoothing and mapping. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5135–5142.

7. Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-scale direct monocular SLAM. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 834–849.

8. Wang, R.; Schworer, M.; Cremers, D. Stereo DSO: Large-scale direct sparse visual odometry with stereo cameras. arXiv 2017, arXiv:1708.07878.

9. Forster, C.; Zhang, Z.; Gassner, M.; Werlberger, M.; Scaramuzza, D. SVO: Semidirect visual odometry for monocular and multicamera systems. IEEE Trans. Robot. 2016, 33, 249–265.

10. Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163.

11. Mur-Artal, R.; Tardós, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

12. Campos, C.; Elvira, R.; Rodríguez, J.J.G.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM3: An Accurate OpenSource Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 2021, 9440682.

13. Sikka, P.; Asati, A.R.; Shekhar, C. Real time FPGA implementation of a high speed and area optimized Harris corner detection algorithm. Microprocess. Microsyst. 2021, 80, 103514.

14. Jaiseeli, C.; Raajan, N.R. SLKOF: Subsampled Lucas-Kanade Optical Flow for Opto Kinetic Nystagmus detection. J. Intell. Fuzzy Syst. 2021, 41, 5265–5274.

15. Přibyl, B.; Zemčík, P.; Čadík, M. Absolute pose estimation from line correspondences using direct linear transformation. Comput. Vis. Image Underst. 2017, 161, 130–144.

16. Zheng, M.; Zhang, F.; Zhu, J.; Zuo, Z. A fast and accurate bundle adjustment method for very large-scale data. Comput. Geosci. 2020, 142, 104539.

17. Tsintotas, K.A.; Bampis, L.; Gasteratos, A. Assigning visual words to places for loop closure detection. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 5979–5985.

18. Gálvez-López, D.; Tardos, J.D. Bags of binary words for fast place recognition in image sequences. IEEE Trans. Robot. 2012, 28, 1188–1197.

19. Galvez-Lopez, D.; Tardos, J.D. Real-time loop detection with bags of binary words. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 51–58.

20. Sivic, J.; Zisserman, A. Video Google: A text retrieval approach to object matching in videos. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; p. 1470.

21. Kourabbaslou, S.S.; Zhang, A.; Atia, M.M. A Novel Design Framework for Tightly Coupled IMU/GNSS Sensor Fusion Using Inverse-Kinematics, Symbolic Engines, and Genetic Algorithms. IEEE Sens. J. 2019, 19, 11424–11436.

22. Ling, Y.; Bao, L.; Jie, Z.; Zhu, F.; Li, Z.; Tang, S.; Liu, Y.; Liu, W.; Zhang, T. Modeling varying camera-imu time offset in optimization-based visual-inertial odometry. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 484–500.

23. Lovegrove, S.; Patron-Perez, A.; Sibley, G. Spline Fusion: A continuous-time representation for visual-inertial fusion with application to rolling shutter cameras. BMVC 2013, 2, 8.

24. Yang, Z.; Shen, S. Monocular visual–inertial state estimation with online initialization and camera–IMU extrinsic calibration. IEEE Trans. Autom. Sci. Eng. 2016, 14, 39–51.

25. Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. On-manifold preintegration for real-time visual-inertial odometry. IEEE Trans. Robot. 2016, 33, 1–21.

26. Mur-Artal, R.; Tardós, J.D. Visual-inertial monocular SLAM with map reuse. IEEE Robot. Autom. Lett. 2017, 2, 796–803.

27. Kaiser, J.; Martinelli, A.; Fontana, F.; Scaramuzza, D. Simultaneous state initialization and gyroscope bias calibration in visual inertial aided navigation. IEEE Robot. Autom. Lett. 2016, 2, 18–25.

28. Nasiri, S.M.; Hosseini, R.; Moradi, H. A recursive least square method for 3D pose graph optimization problem. arXiv 2018, arXiv:1806.00281.

29. Wu, Z.; Zhou, T.; Li, L.; Chen, L.; Ma, Y. A New Modified Efficient Levenberg–Marquardt Method for Solving Systems of Nonlinear Equations. Math. Probl. Eng. 2021, 2021, 5608195.

30. Wang, H.; Li, H.; Zhang, W.; Zuo, J.; Wang, H. Derivative-free Huber–Kalman smoothing based on alternating minimization. Signal Process. 2019, 163, 115–122.

31. Carlone, L.; Calafiore, G.C. Convex relaxations for pose graph optimization with outliers. IEEE Robot. Autom. Lett. 2018, 3, 1160–1167.

| Coefficient | Mathematical Formula |

|---|---|

| Number | Pure Inertial Odometer: Origin | Pure Inertial Odometer: Destination | Pure Inertial Odometer: Error | Fusion Algorithm Odometer: Origin | Fusion Algorithm Odometer: Destination | Fusion Algorithm Odometer: Error |
|---|---|---|---|---|---|---|
| 1 | X: 0.01, Y: 0.04 | X: −0.03, Y: −0.05 | 0.041 | X: 0.03, Y: 0.04 | X: 0.01, Y: 0.05 | 0.022 |
| 2 | X: 0.02, Y: 0.01 | X: −0.10, Y: −0.12 | 0.177 | X: 0.02, Y: 0.04 | X: 0.04, Y: 0.06 | 0.028 |
| 3 | X: 0.05, Y: 0.03 | X: −0.13, Y: −0.04 | 0.193 | X: 0.07, Y: 0.02 | X: 0.03, Y: 0.05 | 0.05 |
| 4 | X: 0.03, Y: 0.02 | X: −0.03, Y: −0.04 | 0.085 | X: 0.06, Y: 0.02 | X: 0.05, Y: 0.04 | 0.022 |
| 5 | X: 0.04, Y: 0.01 | X: −0.09, Y: −0.05 | 0.143 | X: 0.02, Y: 0.04 | X: 0.03, Y: 0.07 | 0.032 |
| 6 | X: 0.02, Y: 0.05 | X: −0.03, Y: −0.08 | 0.139 | X: 0.05, Y: 0.04 | X: 0.07, Y: 0.03 | 0.022 |
| Average error | | | 0.130 | | | 0.029 |
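The fusion-odometer Error column above is consistent with the Euclidean distance between the origin and destination coordinates. That reading is inferred from the numbers, since the accompanying definition did not survive, so the sketch below should be taken as an assumption rather than the authors' stated metric. It recomputes the per-run errors and their mean from the tabulated fusion values.

```python
import math

def closure_error(origin, destination):
    """Euclidean distance between the start and end positions of one run."""
    return math.hypot(destination[0] - origin[0], destination[1] - origin[1])

# (origin, destination) pairs copied from the fusion-odometer columns of the table.
fusion_runs = [
    ((0.03, 0.04), (0.01, 0.05)),
    ((0.02, 0.04), (0.04, 0.06)),
    ((0.07, 0.02), (0.03, 0.05)),
    ((0.06, 0.02), (0.05, 0.04)),
    ((0.02, 0.04), (0.03, 0.07)),
    ((0.05, 0.04), (0.07, 0.03)),
]

errors = [closure_error(o, d) for o, d in fusion_runs]
mean_error = sum(errors) / len(errors)
print([round(e, 3) for e in errors], round(mean_error, 3))
```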


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cheng, J.; Jin, Y.; Zhai, Z.; Liu, X.; Zhou, K. Research on Positioning Method in Underground Complex Environments Based on Fusion of Binocular Vision and IMU. Sensors 2022, 22, 5711. https://doi.org/10.3390/s22155711
