Feasibility of Hyperspectral Single Photon Lidar for Robust Autonomous Vehicle Perception

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

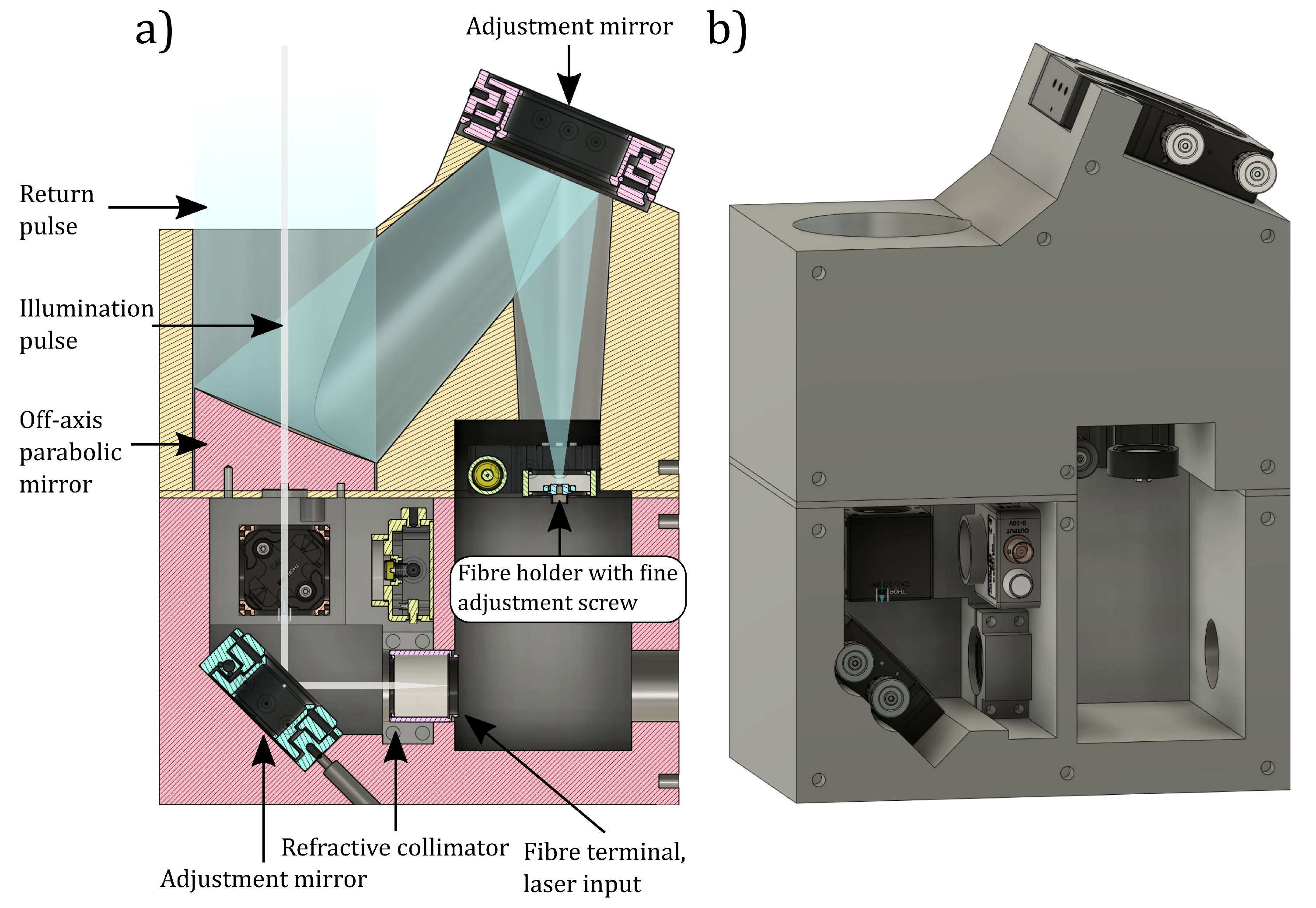

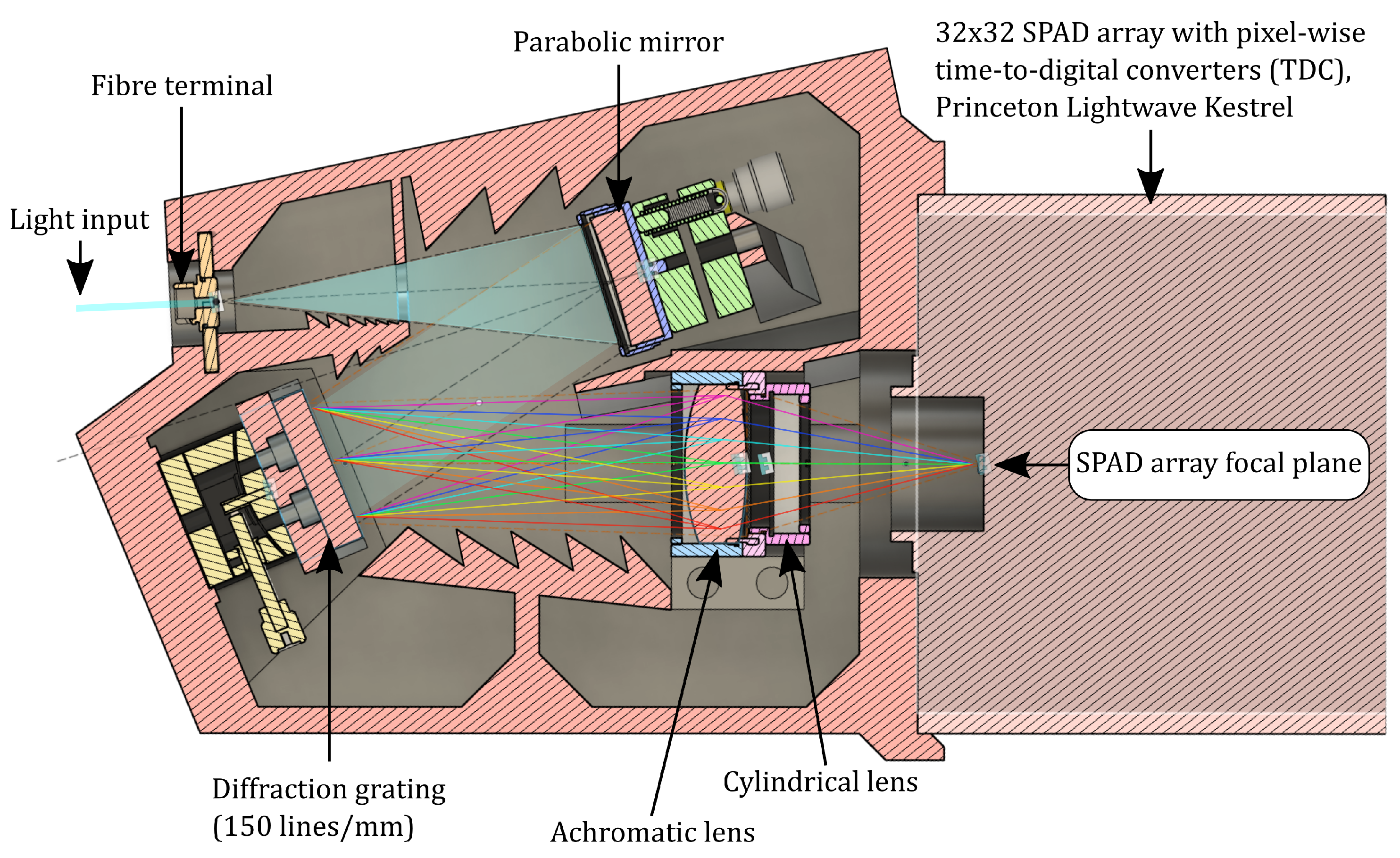

3.1. The Prototype Hyperspectral Single Photon Lidar and Its Operating Principle

3.2. Our Statistical Model for Spectral Reflectance Measurement Accuracy in the Low-Photon Flux Regime

3.3. Experiments

3.3.1. The Dataset and System Calibration

3.3.2. Spectral Reflectance Measurement Accuracy in the Low-Photon Flux Regime

3.3.3. Separability of the Hyperspectral Single Photon Data

3.3.4. Classification with Random Forest Classifier

3.4. Data Processing

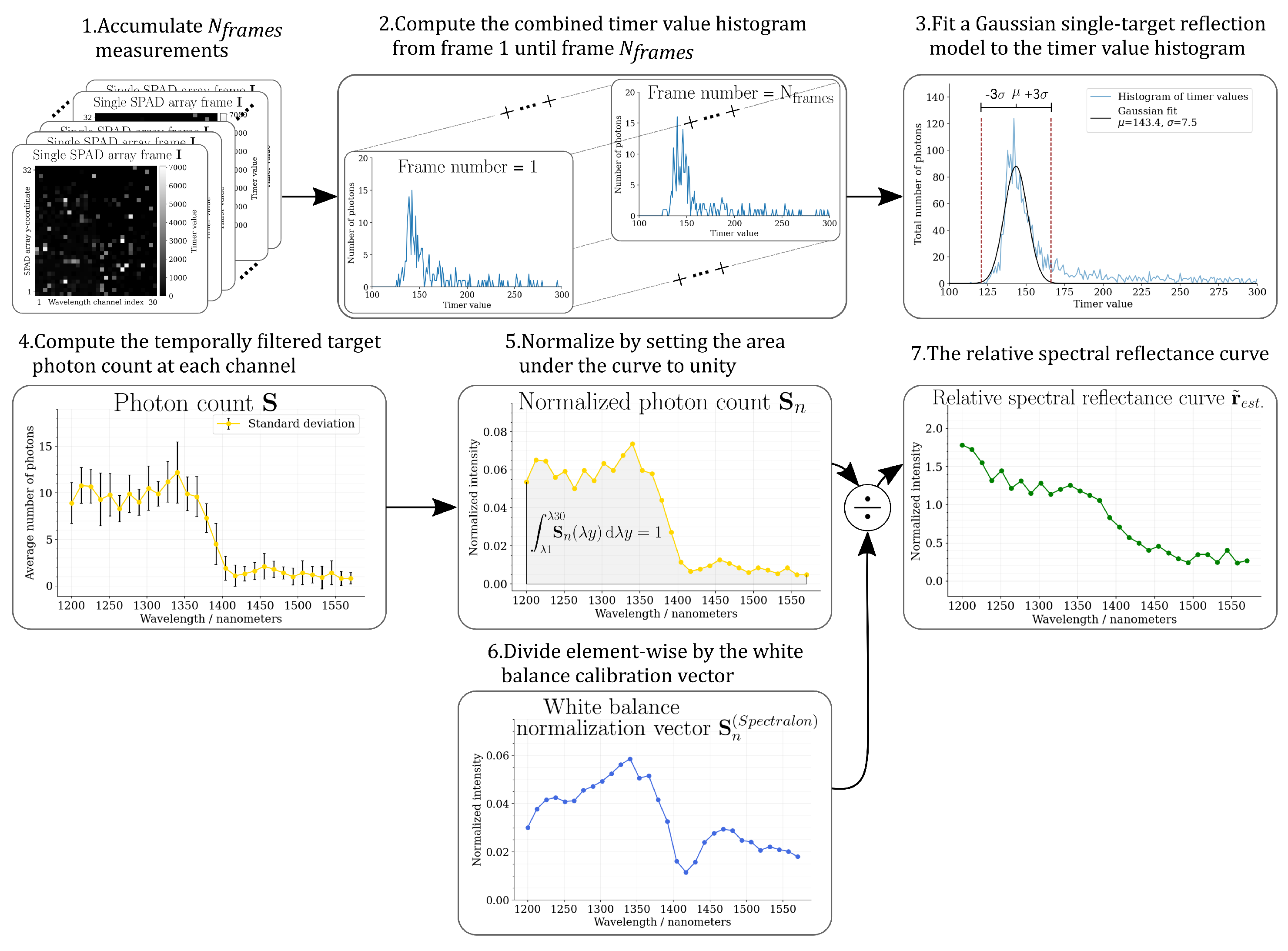

3.4.1. Spectrum Measurement from a Single Frame

3.4.2. Signal Acquisition over Consecutive Frames

3.4.3. Channel Binning

4. Results

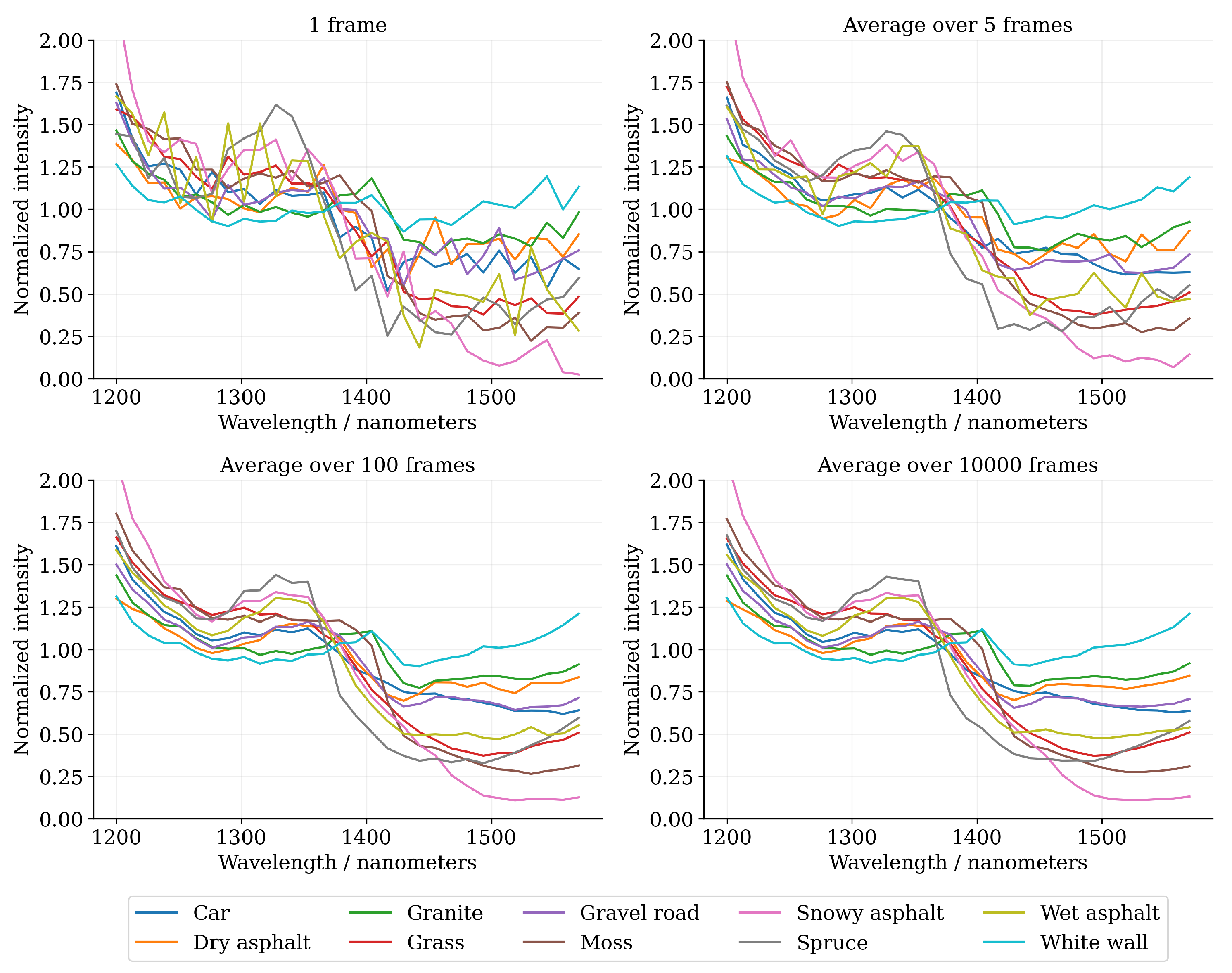

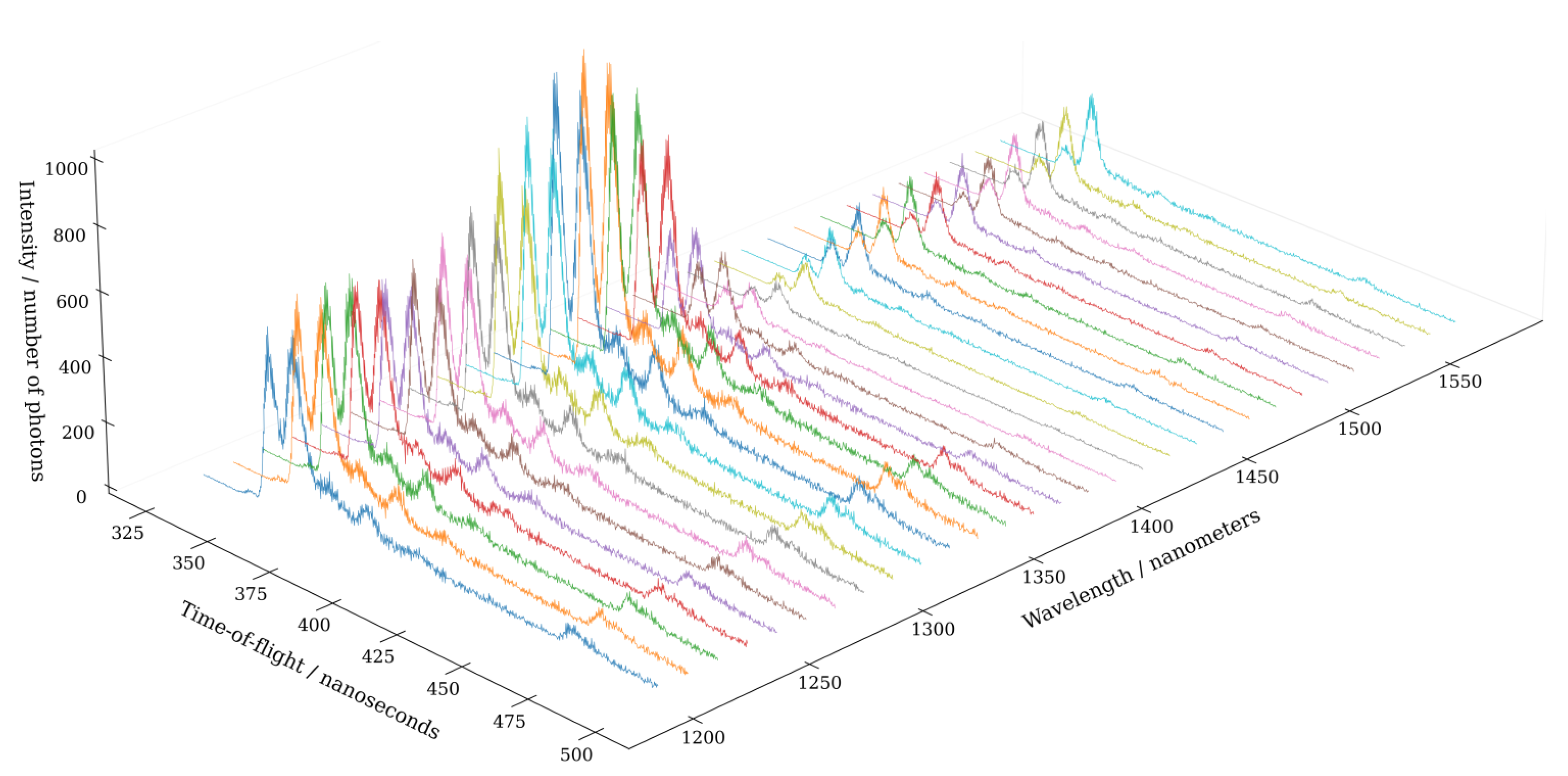

4.1. The Dataset and Calibration Measurements

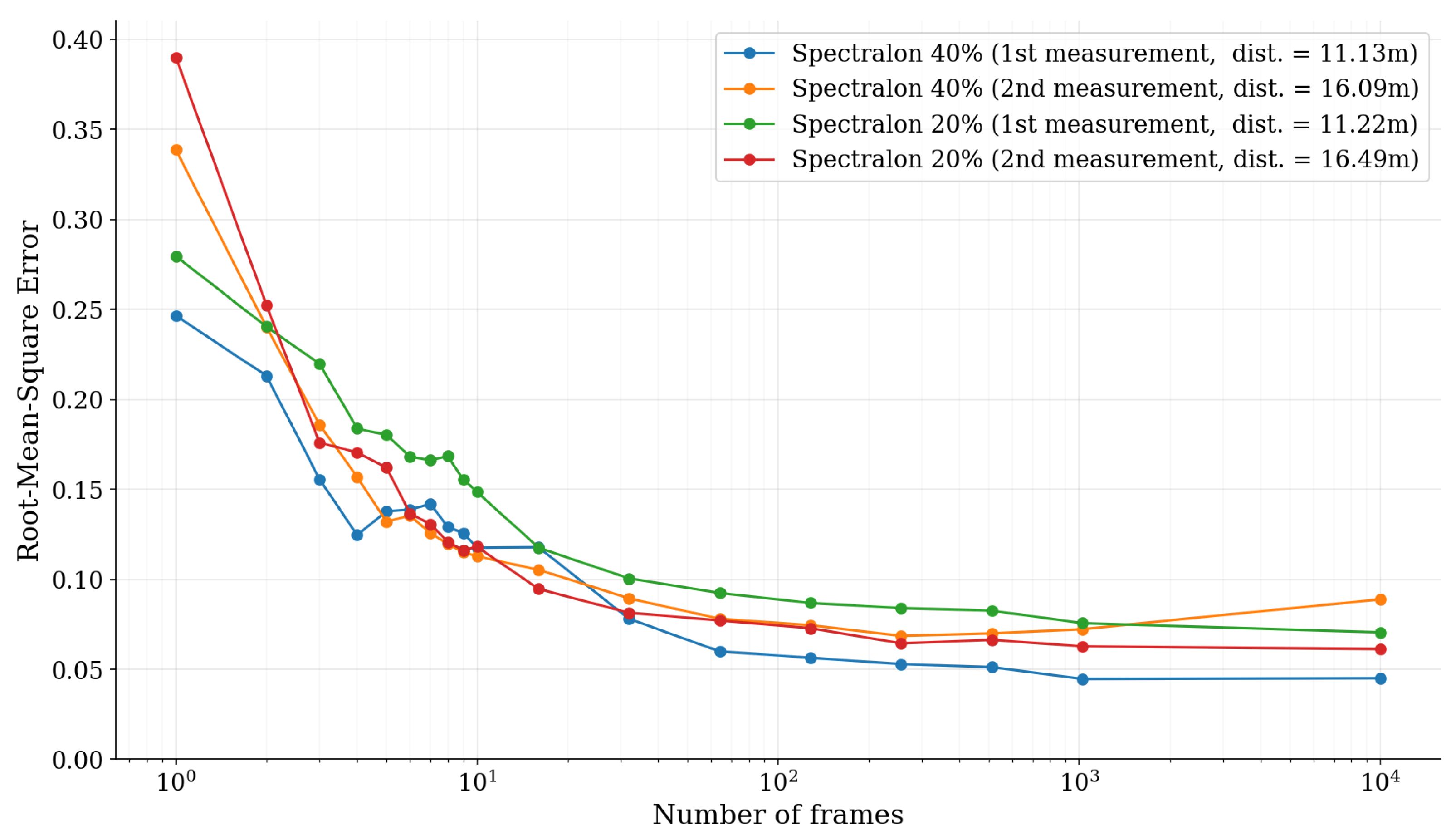

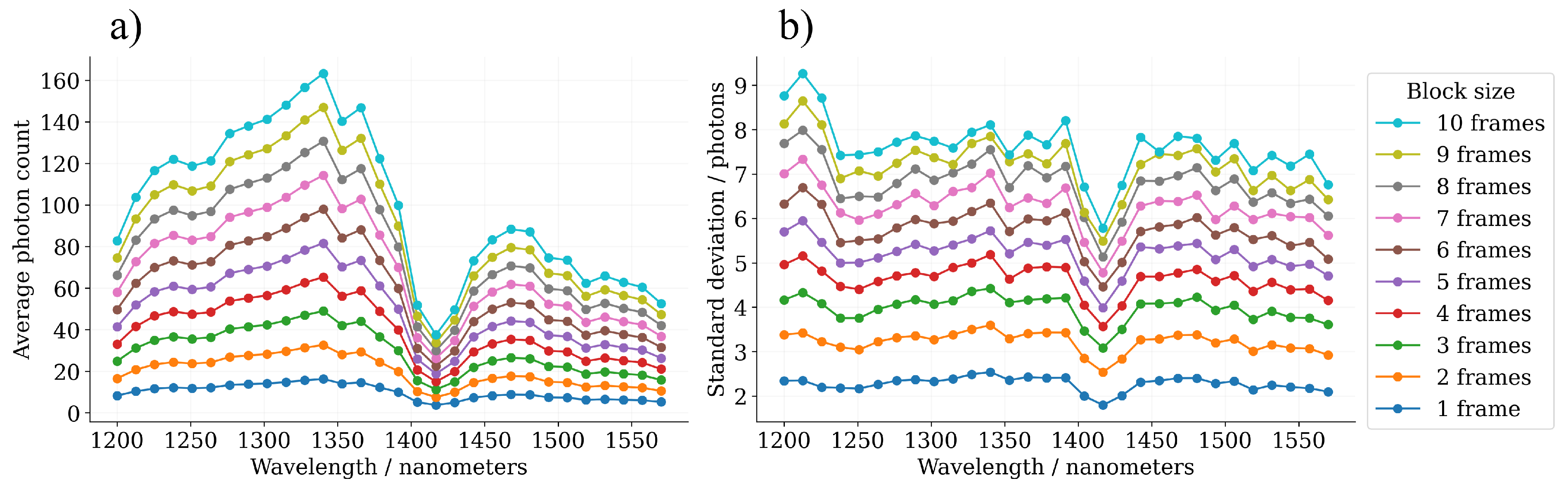

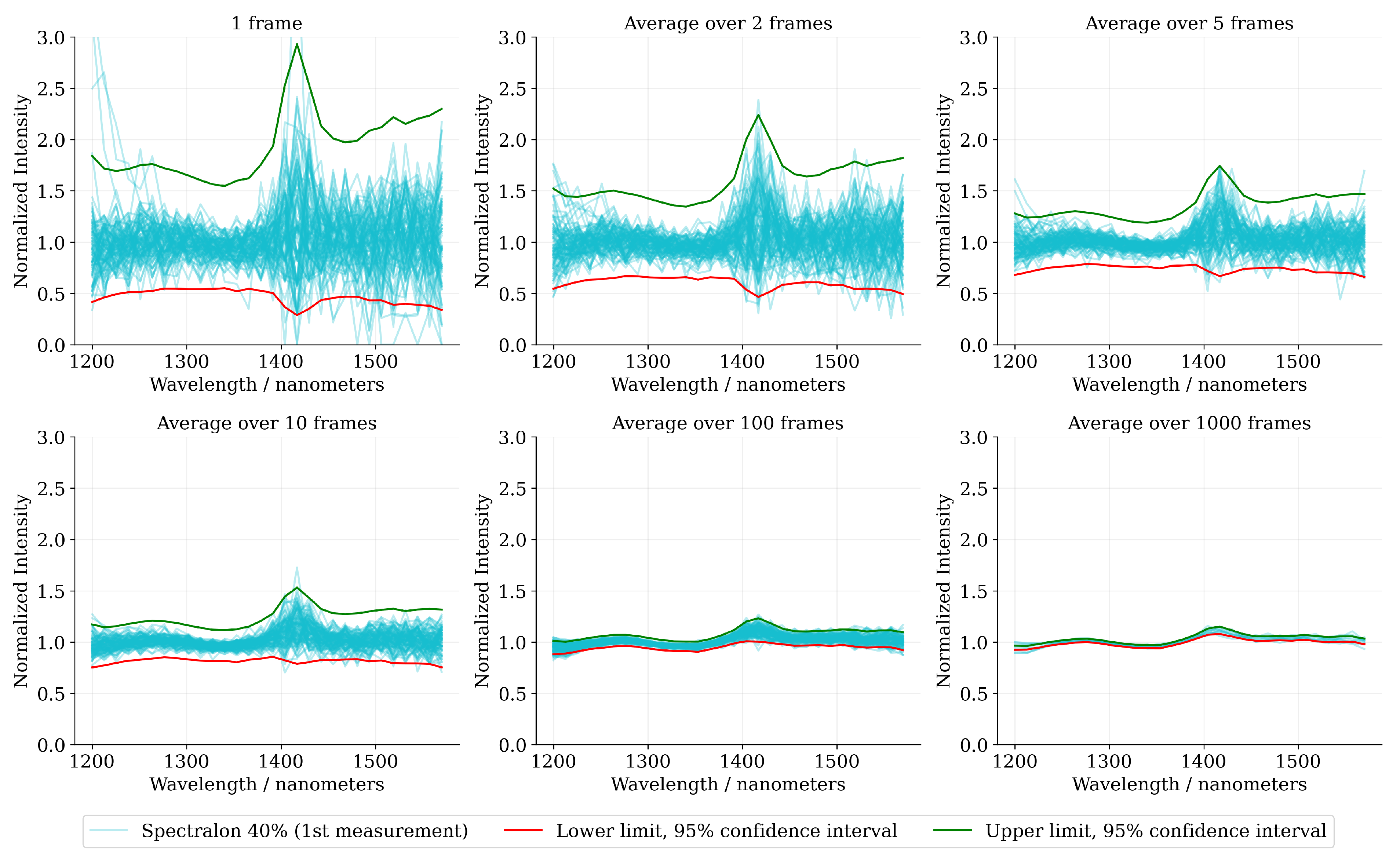

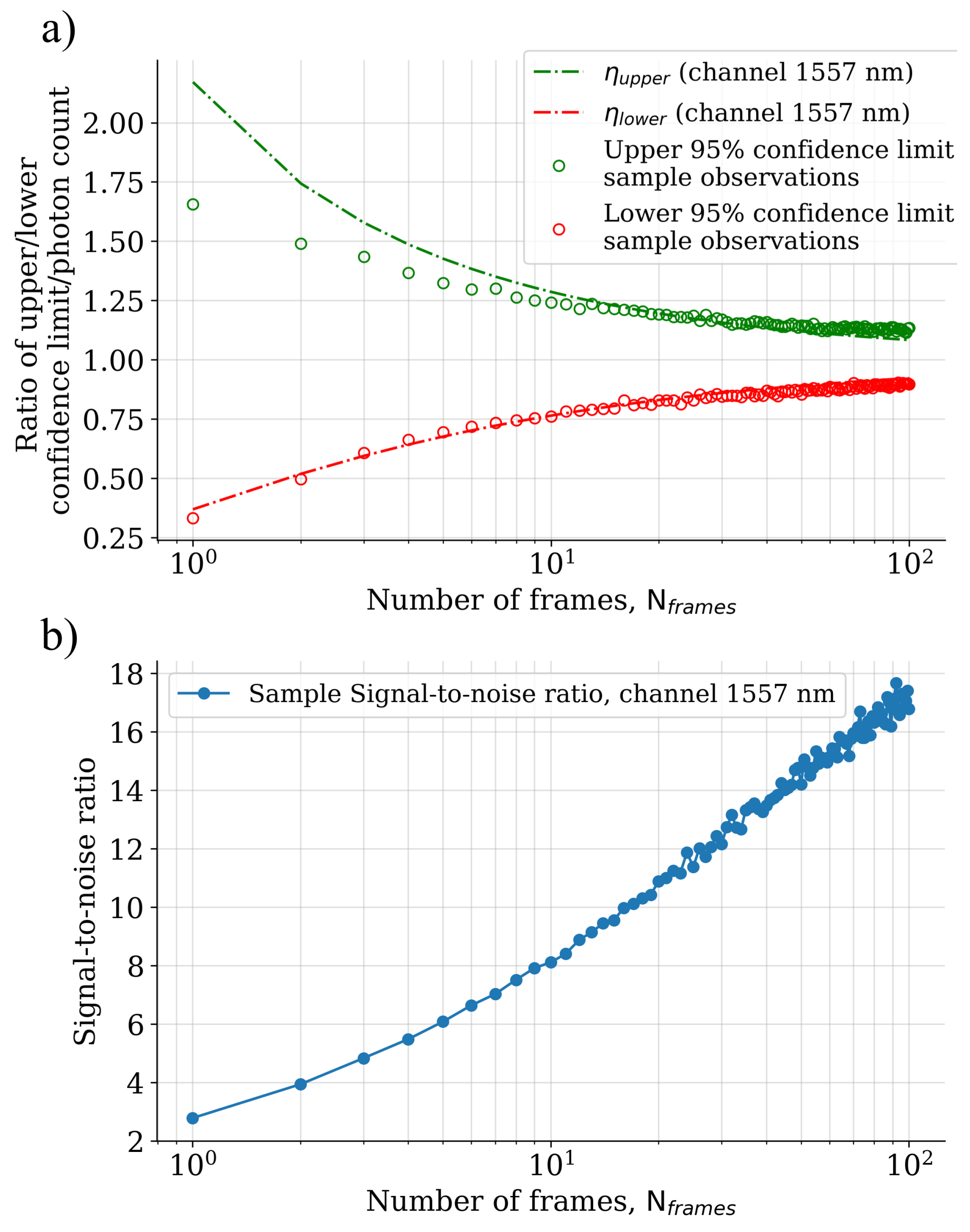

4.2. Spectral Reflectance Measurement Accuracy in the Low-Photon Flux Regime

4.3. Separability of the Hyperspectral Single Photon Data

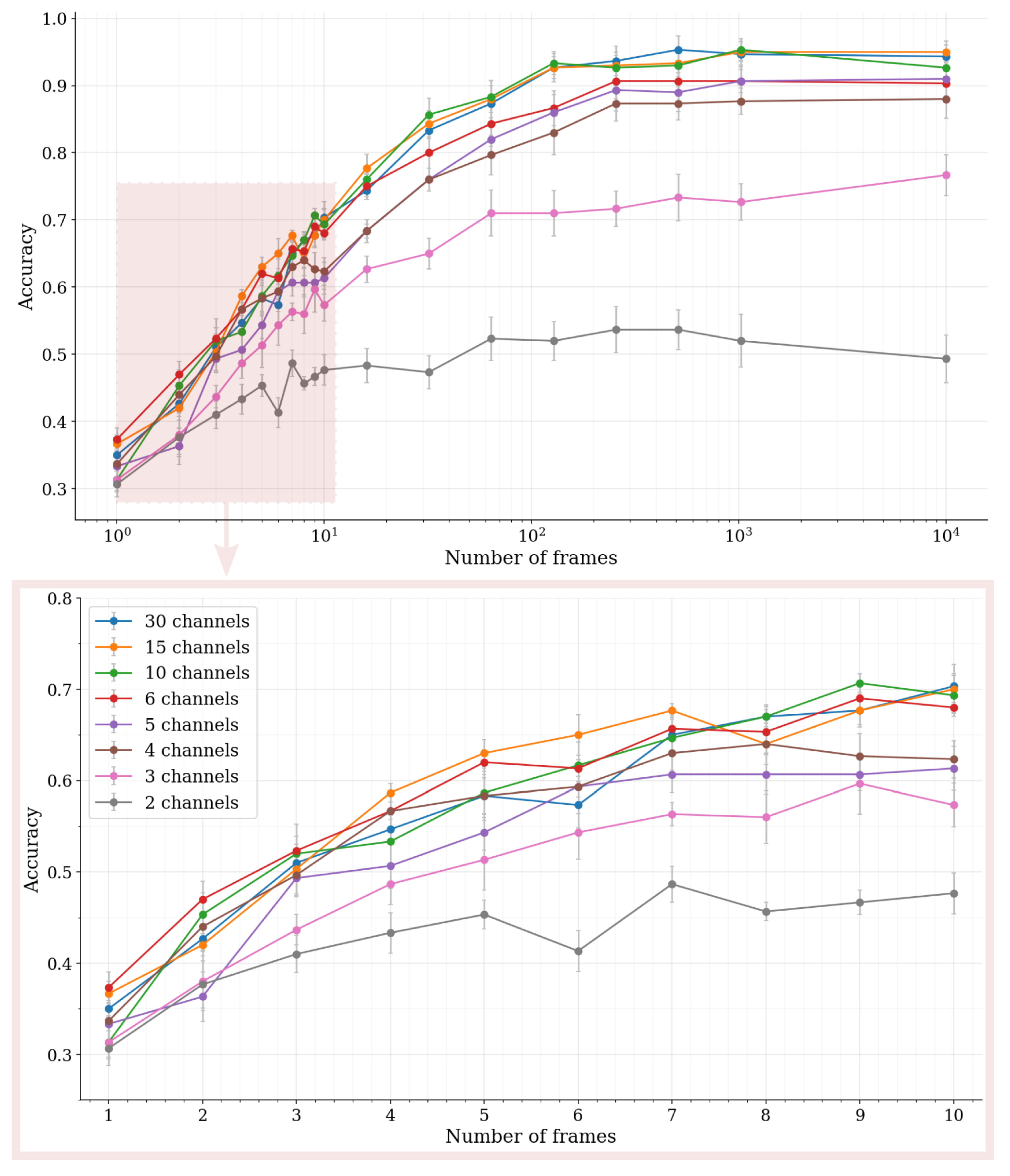

4.4. Classification with Random Forest Classifier

5. Discussion

5.1. Spectral Reflectance Measurement Accuracy in the Low-Photon Flux Regime

5.2. Hyperspectral Single Photon Data Separability and Feasibility for Classification Purposes

5.3. Principal Implications for Autonomous Vehicle Perception Systems

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Taher, J.; Hakala, T.; Jaakkola, A.; Hyyti, H.; Kukko, A.; Manninen, P.; Maanpää, J.; Hyyppä, J. Feasibility of Hyperspectral Single Photon Lidar for Robust Autonomous Vehicle Perception. Sensors 2022, 22, 5759. https://doi.org/10.3390/s22155759
