1. Introduction

As cities develop, energy-saving and environmentally friendly neon lights have become a meaningful way to enhance the image of a city [1,2] and an essential part of the urban night scene [3]. Although the patterns vary in style, the vital elements are all irregular curves. Measuring the length of the distinctive curves that make up the pattern from the neon design renderings is necessary before production. It significantly improves efficiency, saves raw materials, and guides production. In addition, in the field of construction work, measuring and analyzing the number and length of cracks on building surfaces based on captured pictures of bridges, tunnels, roads, and other facilities is a significant way to assess their risk and quality [4,5]. Since there are various background interferences in addition to the target curve in the design drawings and captured pictures, it is of great significance in engineering practice to measure the length of the curves in the images with background interference.

Since separating the background noise from the binarized image is difficult, it is necessary to remove the noise interference before the length measurement. The traditional segmentation method is manual tracing, which has low measurement efficiency and significant error in the results. In addition, the blue light and ultraviolet rays from the computer screen can damage the staff’s eyes [6,7]. With the development of computer technology, using image segmentation to extract target curves from design drawings has gradually become a better alternative to manual tracing. Threshold segmentation has the most straightforward principle and the widest range of application among image segmentation techniques. Two classical threshold segmentation algorithms, the bimodal method [8] and OTSU [9], directly perform image segmentation according to the grayscale difference between the target and the background. The principle is simple, but these algorithms only consider target segmentation under a single background, and their segmentation effect on images with complex backgrounds is poor. AL-Smadi et al. proposed a foreground bimodal segmentation algorithm based on the bimodal algorithm. The algorithm can accurately segment images in urban traffic scenes, but the operation steps are cumbersome, and the algorithm’s complexity is very high [10]. Researchers combined the classical OTSU algorithm with the grayscale histogram and proposed a new unsupervised segmentation algorithm. The algorithm runs quickly, but its main application scenario is the rough estimation of the target, and the segmentation accuracy is low [11]. In addition, some researchers improved the OTSU algorithm by modifying the weight factor [12,13]. Compared with the foreground bimodal segmentation algorithm, these improved methods maintain a lower time complexity and improve the segmentation accuracy to a certain extent. However, they are only suitable for images with less background interference, and the accuracy of target segmentation is low in images with more complex backgrounds. The algorithms in [11,12,13] are all improved OTSU segmentation algorithms, which have relatively low complexity compared to the algorithm in [10]. Still, none of them solves the problem of low accuracy when extracting targets from complex backgrounds.
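To make the classical baseline concrete, the following is a minimal pure-Python sketch of OTSU thresholding: it picks the gray level that maximizes the between-class variance of the histogram. The function names `otsu_threshold` and `binarize`, the flat pixel list, and the 256-level assumption are illustrative choices, not taken from the cited works.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form one class, all other pixels the second class.
    `pixels` is a flat list of integer gray levels in [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    total_sum = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the low class
    sum0 = 0  # sum of gray levels in the low class
    for t in range(levels):
        w0 += hist[t]
        if w0 == 0:
            continue          # low class still empty
        w1 = total - w0
        if w1 == 0:
            break             # high class empty from here on
        sum0 += t * hist[t]
        mu0 = sum0 / w0
        mu1 = (total_sum - sum0) / w1
        # Between-class variance, up to a constant factor of 1 / total**2.
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(pixels, t):
    """Map pixels brighter than t to 1 and all others to 0."""
    return [1 if p > t else 0 for p in pixels]
```

Because a single global threshold splits the histogram into only two classes, a background with several distinct gray levels tends to leak into the foreground, which is the limitation discussed above.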

After getting the target curve, the next step is to measure the length. There are two ways to measure the length of irregular curves: direct measurement and indirect measurement. Direct measurements generally begin with the refinement of the curve to obtain a single-pixel skeleton. Then the curve length is obtained by measuring the length of the single-pixel skeleton. Many researchers have investigated direct measurement methods. Kim et al. proposed using the coordinate difference between the two endpoints of the crack skeleton in the X or Y direction as the measured value when measuring the length of the concrete fracture area. This method is suitable for a rough estimate of extent, but cannot accurately measure fracture length [14]. Other researchers, after obtaining the single-pixel skeleton of the target curve, first obtained the total number of pixels on the skeleton by counting or integrating, and then multiplied it by the length of a single pixel to calculate the length of the curve [15,16,17]. This method is simple in logic and easy to implement, but oversimplifies the problem, resulting in low calculation accuracy. To further improve the accuracy, researchers improved the method used in [15,16,17] by enhancing the integrand [18], introducing the idea of displacement [19,20], and classifying the pixels [21,22]. Although the angle of improvement is different, these methods essentially use “$1$” and “$\sqrt{2}$” to replace the length represented by a pixel and calculate the total length by accumulating the lengths of all pixels on the skeleton. These methods embody the idea of classification and improve measurement accuracy to a certain extent. However, the measurement accuracy still needs further improvement. In addition to directly measuring the length of the curve through the single-pixel skeleton, researchers also use indirect measurement. Some researchers extract the edges of the original curve and use half of the edge length as the measurement [23,24]. Due to the cumbersome steps in this method, the algorithm is complex and time-consuming. The measurement methods proposed in the above literature therefore suffer from either low accuracy or long running time. A new measurement method with low complexity and high accuracy is urgently needed.
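As an illustration of the pixel-classification idea used by the direct methods, the sketch below measures a single-pixel skeleton by weighting orthogonal links between neighboring pixels by 1 and diagonal links by √2, a common convention for 8-connected curves. It is a hedged sketch of that general idea, not the SPSLM algorithm or any specific cited method; the name `skeleton_length`, the nested-list image format, and the `pixel_size` parameter are assumptions made for the example.

```python
import math


def skeleton_length(skel, pixel_size=1.0):
    """Estimate the length of a one-pixel-wide skeleton.

    `skel` is a list of rows containing 0/1 values. Each pair of adjacent
    skeleton pixels is visited once: orthogonal links count as 1 pixel width,
    diagonal links as sqrt(2) pixel widths.
    """
    rows, cols = len(skel), len(skel[0])

    def on(r, c):
        return 0 <= r < rows and 0 <= c < cols and skel[r][c] == 1

    length = 0.0
    for r in range(rows):
        for c in range(cols):
            if not on(r, c):
                continue
            # Orthogonal links to the right and downward neighbors.
            length += on(r, c + 1) + on(r + 1, c)
            # Diagonal links; skipped when an orthogonal path already joins
            # the two pixels, so that corners are not counted twice.
            if on(r + 1, c + 1) and not (on(r, c + 1) or on(r + 1, c)):
                length += math.sqrt(2)
            if on(r + 1, c - 1) and not (on(r, c - 1) or on(r + 1, c)):
                length += math.sqrt(2)
    return length * pixel_size
```

For a purely diagonal skeleton of three pixels this gives 2√2 pixel widths, whereas plain pixel counting would report 3, which shows why counting alone loses accuracy on slanted segments.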

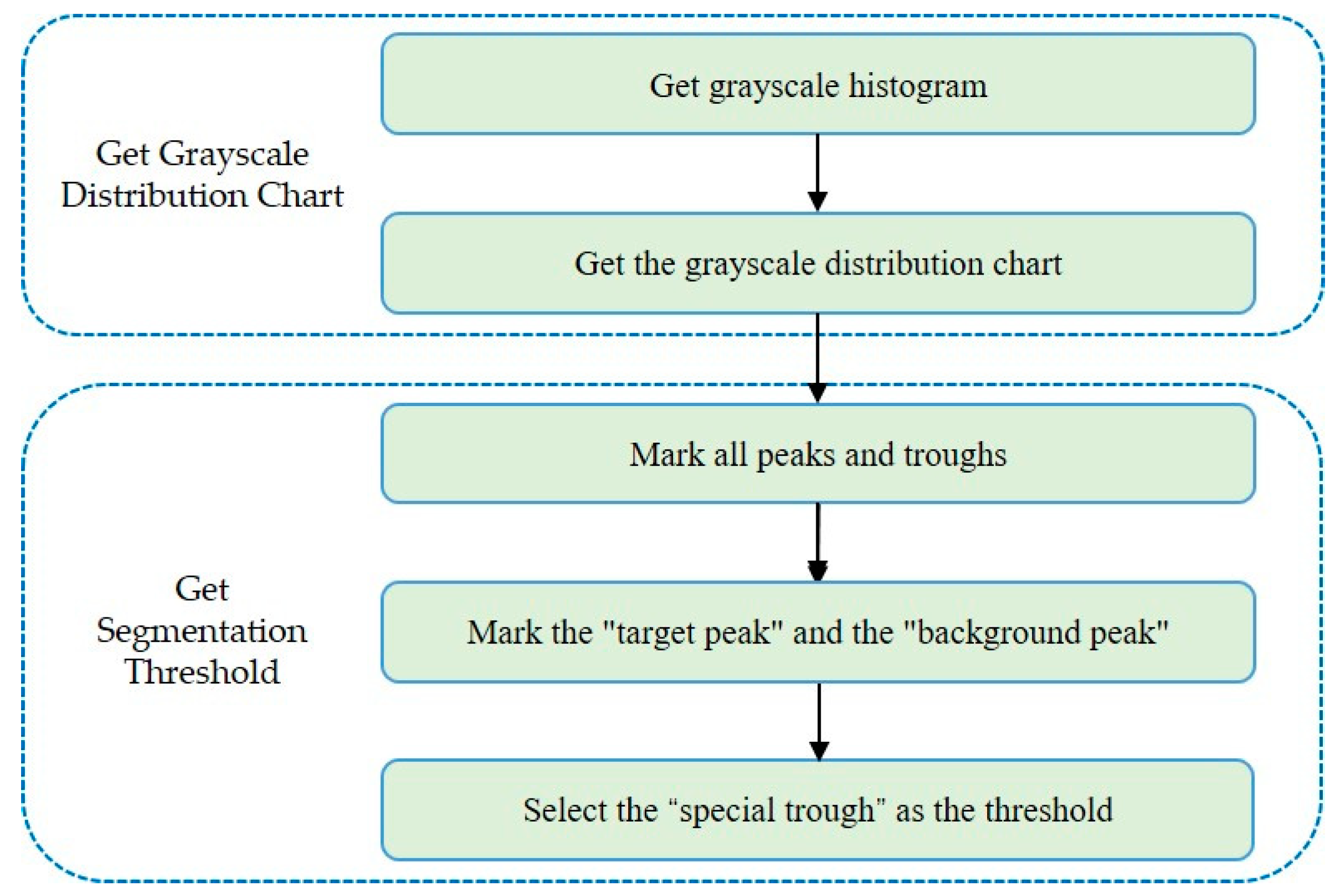

To improve the speed and accuracy of curve segmentation and length measurement, we first convert the “multimodal histogram” into a “quasi-bimodal histogram” to quickly determine the threshold and segment the target curve. Then we refine the curve and accurately calculate the length of the skeleton through the 8-neighborhood features of the pixels on the skeleton. Finally, we calculate the length of the target curve through the size transformation. The main contributions of this paper are as follows:

- (1) We propose the QBTS algorithm based on a grayscale histogram, which can quickly and accurately segment the target curve from the neon light design renderings with background interference.

- (2) We propose the SPSLM algorithm based on the 8-neighborhood model, which improves the accuracy of irregular curve length measurement.

- (3) We constructed three new image datasets for performance testing of the two proposed algorithms.

The rest of the paper is organized as follows. The steps of the proposed method are described in Section 2. Section 3 discusses experiments on the three original datasets of this paper and analyzes the experimental results. Section 4 summarizes the work of this paper.

3. Experiments and Results

This section shows the experimental results of the proposed QBTS algorithm and SPSLM algorithm on the original datasets and compares them with the results of other algorithms. All experiments were performed on an Intel Core i5-9400 2.9 GHz desktop with 8 GB of RAM.

3.1. Performance Metrics

To evaluate the proposed method, we choose accuracy and running speed as evaluation metrics. The segmentation accuracy of the QBTS algorithm is defined as $A_s = N_s / N$, where $N_s$ represents the number of pixels that have the same value in the binary image obtained by the QBTS algorithm and in the binary image of the standard segmented image, and $N$ represents the total number of pixels. According to this expression, the range of $A_s$ is [0, 1]. The measurement accuracy of the SPSLM algorithm is defined as $A_m = L_m / L_r$, where $L_m$ represents the length measured by the SPSLM algorithm, and $L_r$ represents the reference value obtained by manual measurement. Since $L_r$ is a manual measurement, it also contains some error, so in some cases the value of $A_m$ may be greater than 1. For the entire dataset, the closer $A_m$ is to 1, the higher the measurement accuracy of the SPSLM algorithm.
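The two accuracy metrics described above can be sketched in a few lines. The function names and the nested-list image format are assumptions for illustration; the definitions follow the text, namely the fraction of pixels that agree with the reference mask and the ratio of the measured length to the manually measured reference length.

```python
def segmentation_accuracy(predicted, reference):
    """Fraction of pixels whose binary value matches the reference image."""
    matches = 0
    total = 0
    for row_p, row_r in zip(predicted, reference):
        for p, r in zip(row_p, row_r):
            matches += (p == r)
            total += 1
    return matches / total


def measurement_accuracy(measured_length, reference_length):
    """Ratio of measured to reference length; can exceed 1 when the
    algorithm reports a longer curve than the manual reference."""
    return measured_length / reference_length
```

For example, a 2 × 2 result that differs from the reference in one pixel has a segmentation accuracy of 0.75, and a measured length of 102 against a reference of 100 gives a ratio slightly above 1.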

In addition, the running speed of an algorithm is usually measured in terms of running time. The shorter the time it takes for the algorithm to complete the segmentation or length measurement, the faster it is.

3.2. Dataset

We conduct experiments on three original datasets to analyze the accuracy and running speed of the two algorithms. All images are smaller than 2000 × 2000 pixels and have different pixel dimensions.

Mini. This dataset contains 12 original neon design renderings, as shown in Figure 13. In addition, the dataset also includes 12 standard target curves that were segmented manually by multiple researchers. This dataset is used to test the segmentation accuracy of the QBTS algorithm.

Neon Rendering. This dataset contains 198 images, all sourced from the Internet. These images are actual neon design renderings with patterned curves and backgrounds with many types of noise inside. This dataset is used to test the running speed of the QBTS algorithm.

Neon Curve. This dataset contains 139 images of neon pattern curves without noise interference and 139 corresponding single-pixel skeleton images. Hunan Kangxuan Technology Co., Ltd. provides the original images and the corresponding curve length value. The single-pixel skeleton image is obtained by refining the original picture through the improved Zhang-Suen algorithm. This dataset is used to test the measurement accuracy and running speed of the SPSLM algorithm.
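For readers unfamiliar with the refinement step, the following is a sketch of the classic Zhang-Suen thinning iteration in pure Python. It is the textbook version, not the improved variant used to build this dataset, whose details are not given here; the function name and the nested-list image format are assumptions.

```python
def zhang_suen_thin(image):
    """Thin a binary image (list of 0/1 rows) with classic Zhang-Suen.

    Returns a new image; the input is left unchanged.
    """
    img = [row[:] for row in image]
    rows, cols = len(img), len(img[0])

    def neighbours(r, c):
        # P2..P9, clockwise, starting from the pixel directly above.
        offsets = [(-1, 0), (-1, 1), (0, 1), (1, 1),
                   (1, 0), (1, -1), (0, -1), (-1, -1)]
        return [img[r + dr][c + dc]
                if 0 <= r + dr < rows and 0 <= c + dc < cols else 0
                for dr, dc in offsets]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for r in range(rows):
                for c in range(cols):
                    if img[r][c] != 1:
                        continue
                    p = neighbours(r, c)
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    b = sum(p)  # number of foreground neighbours
                    # Number of 0 -> 1 transitions around the pixel.
                    a = sum(p[i] == 0 and p[(i + 1) % 8] == 1
                            for i in range(8))
                    if step == 0:
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and ok:
                        to_clear.append((r, c))
            # Pixels are removed only after the whole sub-iteration is scanned.
            for r, c in to_clear:
                img[r][c] = 0
            changed = changed or bool(to_clear)
    return img
```

The classic iteration only ever removes foreground pixels and leaves a line that is already one pixel wide untouched. It is also known to erase some small shapes completely, such as an isolated 2 × 2 block.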

3.3. Experimental Results

3.3.1. Performance Analysis of the QBTS Algorithm

To test the segmentation accuracy of the QBTS algorithm, we compared it with the OTSU algorithm and the bimodal method. We used the three threshold segmentation algorithms to segment the images of the Mini dataset, and the experimental results are shown in Figure 14. Each image segmentation result consists of five images. From left to right are the original image, the binary image of the standard target curve, and the binary images obtained by the QBTS algorithm, the OTSU algorithm, and the bimodal method, respectively. It can be seen intuitively from Figure 14 that the similarity between the binary image segmented by the QBTS algorithm and the standard binary image is the highest. The images segmented by the other two algorithms still have varying degrees of noise interference, and even the target curve cannot be seen in some segmented images. The results show that the segmentation result of the QBTS algorithm is better, and it can accurately remove noise interference and segment the target curve.

To conduct a more accurate quantitative analysis of the segmentation accuracy of the QBTS algorithm, we calculated the segmentation accuracy of the three algorithms according to the definition in Section 3.1, as shown in Figure 15. The ordinate in the figure is the segmentation accuracy of the three algorithms.

The results show that the average segmentation accuracy of the QBTS algorithm is 97.9%, much higher than the 89.6% and 64.4% of the other two algorithms. The distribution of the segmentation accuracy of the QBTS algorithm is also more concentrated, indicating that it has higher robustness. However, we also noticed that the segmented images obtained using the QBTS algorithm are not entirely accurate. There are two main reasons for this. On the one hand, the QBTS algorithm is a global threshold segmentation algorithm and cannot segment all the boundaries of the target curve very finely and accurately. On the other hand, researchers manually segment the reference images of the dataset, and in this process, errors will inevitably occur and affect the experimental results.

In addition to testing segmentation accuracy, this paper also conducts experiments on the running speed of the QBTS algorithm on the Neon Rendering dataset. Figure 16 shows the time required for each of the three algorithms to segment images in the Neon Rendering dataset. To compare the segmentation speed more intuitively, we calculated the ratio of the time required by the QBTS algorithm and the OTSU algorithm for segmentation to the time needed for the Bimodal algorithm, as shown in Figure 17.

We can see from Figure 16 that the time consumed by the QBTS algorithm to segment images is generally shorter than that of the other two algorithms. In addition, the figure has some discrete points outside the 1.5 IQR range. The main reason is that the time complexity of the QBTS algorithm depends on the size of the image. The running time is therefore closely related to the image size, so larger images take longer to process. We can see from Figure 17 that the average ratios of the segmentation time of the OTSU algorithm and the QBTS algorithm to that of the Bimodal algorithm are 0.98 and 0.47, respectively. This shows that, for the same image, the QBTS algorithm segments faster, reducing the segmentation time by about 50%.
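The two quantities discussed above, the points outside the 1.5 IQR range and the average per-image time ratio, can be computed as in the sketch below. The function names and sample values are hypothetical. Quartiles come from `statistics.quantiles` with its default method, so the fences may differ slightly from those drawn by a particular plotting library.

```python
import statistics


def iqr_outliers(values):
    """Return the values lying outside the 1.5 * IQR fences of a box plot."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]


def mean_time_ratio(times, baseline_times):
    """Average of the per-image ratios time / baseline_time."""
    ratios = [t / b for t, b in zip(times, baseline_times)]
    return statistics.mean(ratios)
```

A mean ratio of 0.47 against the baseline corresponds to a reduction in segmentation time of 1 − 0.47 = 0.53, which is the basis for the "about 50%" figure.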

To compare the performance of these three image segmentation algorithms more intuitively and clearly, we summarize and extract the key data from Figure 15, Figure 16 and Figure 17, as shown in Table 1. It can be seen from Table 1 that the performance of the QBTS algorithm is much better than that of the OTSU algorithm and the Bimodal algorithm in terms of average segmentation accuracy and segmentation speed.

According to the above experimental results, the QBTS algorithm proposed in this paper performs better when segmenting the target curve from the neon sign design drawing with complex background noise interference. Compared with the OTSU and Bimodal algorithms, it has better segmentation accuracy, robustness, and faster segmentation speed.

3.3.2. Performance Analysis of the SPSLM Algorithm

Figure 18 shows the length measurement accuracy of the SPSLM algorithm and the other four methods. The SPSLM algorithm in the figure is the single-pixel skeleton length measurement algorithm based on the 8-neighborhood model proposed in this paper. Method 1, Method 2, Method 3, and Method 4 are the methods for measuring curve lengths used in [15,16,17], [18,19,20,21,22], [14], and [23,24], respectively.

We can see from Figure 18 that the average measurement accuracy of the SPSLM algorithm proposed in this paper is 99.1%, while the average measurement accuracy of the other four methods is 88.3%, 92.5%, 19.9%, and 88.1%, respectively. This shows that the accuracy of the SPSLM algorithm is higher. We can also see that some values are greater than 100%. This phenomenon is consistent with our analysis of the measurement accuracy in Section 3.1.

After the accuracy test, we ran the SPSLM algorithm’s speed test on the Neon Curve dataset. Figure 19 shows the running speed of the length measurement algorithms. The results show that the average measurement time of Method 3 is 0.001 s, which is much shorter than that of the other methods. Because Method 3 mainly estimates the length through the area range, it has the advantage of low time complexity, but its measurement accuracy is also very low. The average measurement time of Method 4 is 6.90 s, much longer than that of the other four methods. The method includes Canny edge detection, image refinement, and skeleton length measurement, so the processing is cumbersome and time-consuming.

Figure 20 shows the running speeds of SPSLM, Method 1, and Method 2 separately. Their average measurement times were 0.615 s, 0.565 s, and 0.577 s, respectively, which are nearly the same and have similar distributions. Because all three methods first perform image refinement to obtain a single-pixel skeleton and then measure the length of the skeleton, their time complexity is the same, so their measurement speeds are similar.

To compare the performance of these five length measurement methods more intuitively and clearly, we summarize and extract the pivotal data from Figure 18, Figure 19 and Figure 20, as shown in Table 2. As can be seen from Table 2, in terms of measurement accuracy, the average accuracy of the SPSLM algorithm is much higher than that of other algorithms. In terms of measurement speed, on the premise of ensuring the necessary accuracy, the average running speed of the SPSLM algorithm is comparable to Method 1 and Method 2, and both are much faster than Method 4.

Based on the above analysis of the running speed and the measurement accuracy, the SPSLM algorithm dramatically improves the measurement accuracy while maintaining a low algorithm complexity, and its overall performance is better than the other length measurement methods.

4. Conclusions

This paper used digital image processing techniques to measure the length of irregular curves in neon design renderings. Firstly, a new QBTS algorithm was proposed to segment and extract the target curve. Then, a single-pixel skeleton length measurement algorithm based on the 8-neighborhood model was proposed to measure the length of the skeleton of the target curve. Finally, we conducted tests on the three original datasets of this paper. The results showed that the average segmentation accuracy of the QBTS algorithm was 97.9%, and its segmentation time was more than 50% shorter than that of the other two algorithms. The average measurement accuracy of the length measurement algorithm was 99.1%, higher than that of the four existing length measurement algorithms, and the measurement speed was comparable.

The above results demonstrate that the two algorithms proposed in this paper can be used for the problem of “accurately segmenting irregular target curves from images with background interference and measuring their length accurately”, and can be applied in engineering practice. Subsequent research will further improve the segmentation accuracy and applicability of the threshold segmentation algorithm, laying a solid foundation for future applications in more areas of target curve segmentation.