Image Classification of Wheat Rust Based on Ensemble Learning

Abstract

1. Introduction

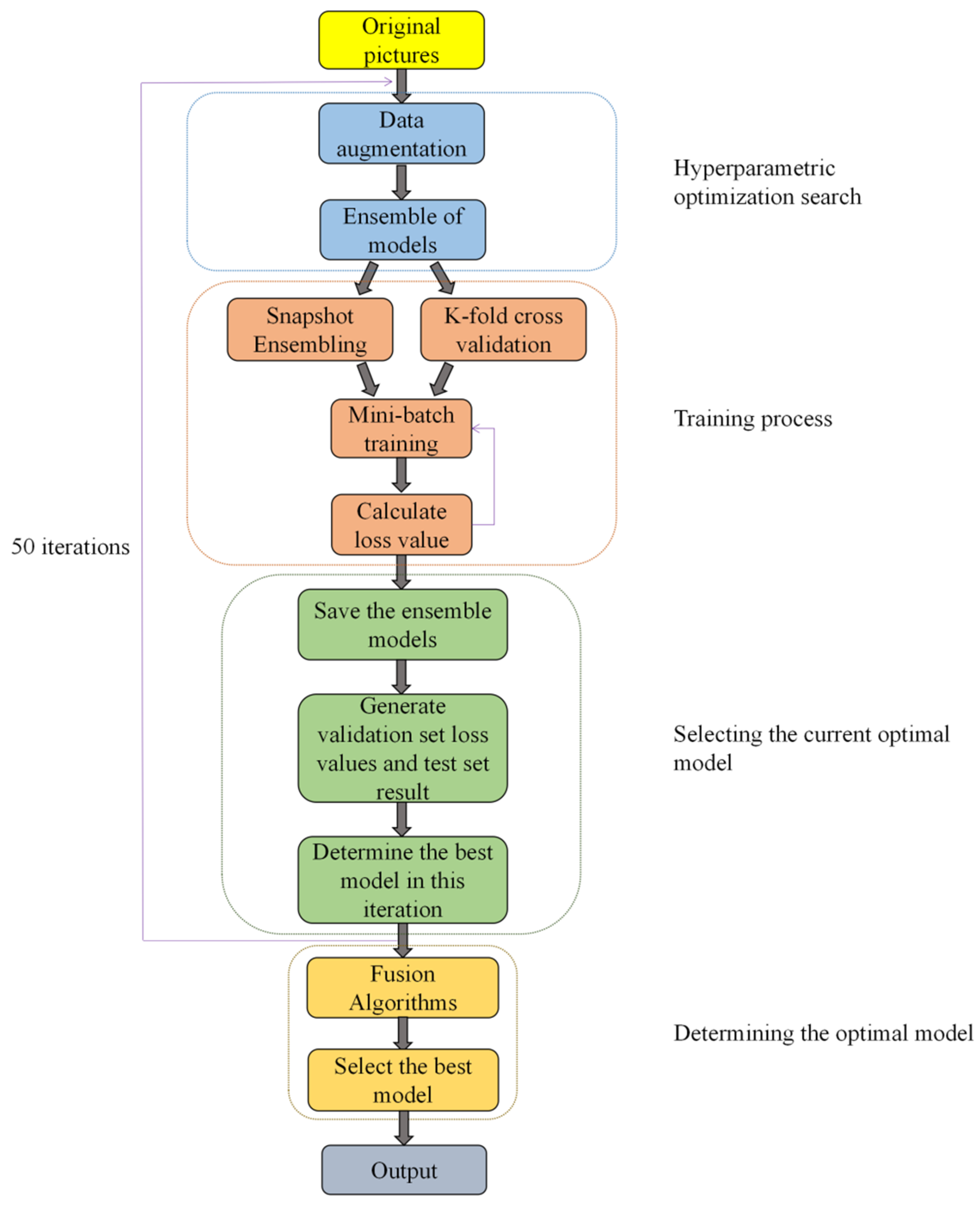

2. Material

3. Methods

3.1. Data Augmentation

3.2. Multiple Convolutional Neural Networks for Ensemble

3.3. SGDR Algorithm
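
As a point of reference for this section, the learning-rate schedule of SGDR (stochastic gradient descent with warm restarts, Loshchilov and Hutter) can be sketched as below. This is only a minimal illustration of the published cosine-annealing rule; the function name and the values of `eta_min`, `eta_max`, `t_0` and `t_mult` are assumptions chosen for the example, not settings reported in this article, and the SGDR-S variant evaluated later is not modeled here.

```python
import math


def sgdr_learning_rate(epoch, eta_min=1e-5, eta_max=1e-2, t_0=10, t_mult=2):
    """Cosine-annealed learning rate with warm restarts.

    All default values are illustrative assumptions, not the article's settings.
    epoch   -- zero-based epoch index (may be fractional)
    t_0     -- length of the first cycle, in epochs
    t_mult  -- factor by which each cycle is longer than the previous one
    """
    t_i = t_0        # length of the current cycle
    t_cur = epoch    # epochs elapsed since the last restart
    # Skip over every completed cycle to find the position in the current one.
    while t_cur >= t_i:
        t_cur -= t_i
        t_i *= t_mult
    # Anneal from eta_max down towards eta_min along half a cosine period.
    cosine = math.cos(math.pi * t_cur / t_i)
    return eta_min + 0.5 * (eta_max - eta_min) * (1 + cosine)


if __name__ == "__main__":
    for epoch in (0, 5, 9, 10, 20, 29, 30):
        print(epoch, round(sgdr_learning_rate(epoch), 6))
```

With these assumed defaults the rate restarts at `eta_max` at epochs 0, 10 and 30, because the cycle lengths are 10, 20, 40 and so on.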

3.4. WCE Loss Function

3.5. Fusion Algorithm

3.6. Performance Metrics

4. Results and Analysis

4.1. The Performance of the SGDR-S

4.2. Advantages of WCE Loss Function

4.3. Performance Comparison of Individual Models and Wheat Rust Based on Ensemble Learning (WR-EL)

5. Discussion

5.1. Comparison of WR-EL and Single Model

5.2. The Superiority of SGDR-S Algorithm

5.3. Contribution of WCE Loss Function

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | Class | Precision | Recall | F1 Score | MCC |
|---|---|---|---|---|---|
| Adam | Health | 0.80 | 0.77 | 0.78 | 0.74 |
| Adam | Stem | 0.51 | 0.68 | 0.58 | 0.22 |
| Adam | Leaf | 0.56 | 0.39 | 0.46 | 0.17 |
| SGDR | Health | 0.92 | 0.88 | 0.90 | 0.87 |
| SGDR | Stem | 0.92 | 0.86 | 0.89 | 0.82 |
| SGDR | Leaf | 0.85 | 0.91 | 0.88 | 0.79 |
| SGDR-S | Health | 0.96 | 0.89 | 0.93 | 0.91 |
| SGDR-S | Stem | 0.95 | 0.90 | 0.93 | 0.87 |
| SGDR-S | Leaf | 0.87 | 0.95 | 0.91 | 0.85 |
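
The F1 column in the per-class table above can be cross-checked against the precision and recall columns, since F1 is their harmonic mean. The sketch below does this with the table values copied in by hand. The function names and the 0.01 tolerance are assumptions made for the example: the published values are rounded to two decimals, so a recomputed F1 can differ from the reported one in the last digit. MCC is not recomputed, because it needs the full confusion matrix, which the table does not give.

```python
# (method, class) -> (precision, recall, reported F1), copied from the table above.
REPORTED = {
    ("Adam", "Health"): (0.80, 0.77, 0.78),
    ("Adam", "Stem"): (0.51, 0.68, 0.58),
    ("Adam", "Leaf"): (0.56, 0.39, 0.46),
    ("SGDR", "Health"): (0.92, 0.88, 0.90),
    ("SGDR", "Stem"): (0.92, 0.86, 0.89),
    ("SGDR", "Leaf"): (0.85, 0.91, 0.88),
    ("SGDR-S", "Health"): (0.96, 0.89, 0.93),
    ("SGDR-S", "Stem"): (0.95, 0.90, 0.93),
    ("SGDR-S", "Leaf"): (0.87, 0.95, 0.91),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def find_mismatches(rows, tolerance=0.01):
    """Return rows whose reported F1 differs from the recomputed one by more
    than `tolerance` (an assumed allowance for two-decimal rounding)."""
    mismatches = []
    for key, (precision, recall, reported_f1) in rows.items():
        recomputed = f1_score(precision, recall)
        if abs(recomputed - reported_f1) > tolerance:
            mismatches.append((key, recomputed, reported_f1))
    return mismatches


if __name__ == "__main__":
    for (method, cls), (p, r, f1) in REPORTED.items():
        print(f"{method:7s} {cls:7s} reported={f1:.2f} recomputed={f1_score(p, r):.3f}")
    print("rows outside tolerance:", find_mismatches(REPORTED))
```

Under this tolerance every row is consistent; the two SGDR-S rows reported as 0.93 recompute to roughly 0.92, which is within rounding of the two-decimal inputs.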

| Methods | Accuracy | Loss | Training Time | Params |
|---|---|---|---|---|
| VGG 16 | 0.60 | 2.29 | 547 min | 138 M |
| ResNet 101 | 0.73 | 0.56 | 559 min | 45 M |
| ResNet 152 | 0.77 | 0.49 | 575 min | 60 M |
| DenseNet 169 | 0.81 | 0.45 | 570 min | 14 M |
| DenseNet 201 | 0.84 | 0.32 | 595 min | 20 M |
| WR-EL | 0.92 | 0.29 | 589 min | 14 M |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pan, Q.; Gao, M.; Wu, P.; Yan, J.; AbdelRahman, M.A.E. Image Classification of Wheat Rust Based on Ensemble Learning. Sensors 2022, 22, 6047. https://doi.org/10.3390/s22166047