Multimodal Hybrid Deep Learning Approach to Detect Tomato Leaf Disease Using Attention Based Dilated Convolution Feature Extractor with Logistic Regression Classification

Abstract

1. Introduction

- In this study, we have introduced sequential image pre-processing steps. The tomato leaf images are pre-processed using color conversion and a filtering method to denoise the images. To handle larger features, we use the bilateral filter, which smooths the images while preserving fine spatial detail. Remaining noise is then removed from the filtered data with the fast and simple Otsu segmentation method. Finally, we use a CGAN model to generate synthetic images from the pre-processed images, which helps handle class imbalance and noisy or wrongly labeled data and improves prediction results.

- To extract the most informative features in a short time, we have designed a lightweight dilated CNN architecture with an attention mechanism, whose multiple hidden layers allow it to learn hierarchical representations from the images. The extracted features are then classified with the fast and simple logistic regression model.

- To check the validity and robustness of the proposed hybrid architecture, we have also implemented eleven popular deep learning models on the same dataset and compared their performance with the proposed ADCLR model. The experimental analysis shows that the proposed hybrid ADCLR model provides superior performance for detecting tomato leaf disease.
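The three pre-processing steps named above (color conversion, bilateral filtering, and Otsu segmentation) can be illustrated with a minimal NumPy sketch. It is not the authors' code: the grayscale weights are the common ITU-R BT.601 luma coefficients, and the filter radius and the two sigma values are illustrative assumptions, since the paper's parameter values are not given here. Optimized library equivalents exist, for example OpenCV's `cv2.cvtColor`, `cv2.bilateralFilter`, and `cv2.threshold` with the `cv2.THRESH_OTSU` flag.

```python
import numpy as np


def rgb_to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) RGB uint8 image to grayscale with BT.601 luma weights."""
    gray = rgb.astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def bilateral_filter(img: np.ndarray, radius: int = 2,
                     sigma_space: float = 2.0, sigma_color: float = 25.0) -> np.ndarray:
    """Brute-force bilateral filter for a 2-D grayscale image (illustrative parameters)."""
    img = img.astype(np.float64)
    h, w = img.shape
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="reflect")
    offsets = np.arange(-radius, radius + 1)
    yy, xx = np.meshgrid(offsets, offsets, indexing="ij")
    # Spatial kernel: closer neighbours get larger weights (identical for every pixel).
    spatial = np.exp(-(xx ** 2 + yy ** 2) / (2 * sigma_space ** 2))
    out = np.empty_like(img)
    for i in range(h):
        for j in range(w):
            window = padded[i:i + size, j:j + size]
            # Range kernel: neighbours with very different intensity get tiny weights,
            # so edges stay sharp while flat regions are smoothed.
            range_w = np.exp(-((window - img[i, j]) ** 2) / (2 * sigma_color ** 2))
            weights = spatial * range_w
            out[i, j] = np.sum(weights * window) / np.sum(weights)
    return out


def otsu_threshold(gray: np.ndarray) -> int:
    """Threshold t that maximizes between-class variance; foreground is gray > t."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # weight of the class {0..t}
    mu = np.cumsum(prob * np.arange(256))      # cumulative mean up to t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_total * omega - mu) ** 2 / denom
    sigma_b2[denom <= 0] = 0.0                 # one class empty: not a valid split
    return int(np.argmax(sigma_b2))


def preprocess(rgb: np.ndarray) -> np.ndarray:
    """Grayscale -> bilateral smoothing -> Otsu foreground mask (True = foreground)."""
    gray = rgb_to_gray(rgb)
    smooth = np.clip(np.rint(bilateral_filter(gray)), 0, 255).astype(np.uint8)
    return smooth > otsu_threshold(smooth)
```

The brute-force double loop keeps the filter easy to read. For 256 × 256 leaf images a vectorized or library implementation would be preferred.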

2. Related Study

2.1. Machine Learning Methods

2.2. Deep Learning Methods

2.3. Deep Learning with Machine Learning

3. Materials and Methods

3.1. Data Description

3.2. Data Preprocessing

Algorithm 1. Preprocessing Algorithm for ADCLR Model

3.3. Image Filtering

3.4. Image Segmentation

3.5. Synthetic Image Generation

3.6. Proposed Hybrid Classification Model

Algorithm 2. General Algorithm of our ADCLR Model

- First, the input images are preprocessed by color conversion, filtering, and denoising. Bilateral filtering is used because it can handle larger features, smoothing the image while preserving edges and fine spatial detail. Noise remaining after filtering is then removed with the fast and simple Otsu segmentation method.

- Next, a Conditional Generative Adversarial Network (CGAN) generates synthetic images from the images preprocessed in the previous stage. The synthetic images are generated to handle class imbalance and noisy or wrongly labeled data and to improve prediction results.

- The synthetic images are then passed to the proposed ADCLR model, in which an attention-based dilated CNN performs informative feature extraction. Dilated convolution enlarges the region covered by the convolution kernel (its receptive field) without increasing the number of model parameters. Rather than attending to the entire feature space, the attention layer concentrates on selected regions; this reduces the number of parameters and shares weights across different spatial regions.

- After that, the ADCLR model is trained on the training dataset, and its robustness is tested on the validation dataset. A Logistic Regression classifier then classifies the images based on the extracted features. Logistic regression is simple, fast to train, and performs well in multiclass prediction.

- Finally, the proposed model is evaluated with several performance metrics and compared with other methods on the disease images.

- To test the validity and effectiveness of the proposed approach, we also implemented eleven popular deep learning methods on the same dataset, and the proposed method outperformed them.
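The attention step described in the pipeline above can be illustrated with a simple spatial attention pooling: every position of a convolutional feature map receives a score, the scores are normalized with a softmax, and the feature vectors are averaged with those weights so that informative regions dominate the pooled descriptor. This NumPy sketch assumes a single learned scoring vector `w` (a hypothetical parameter, random here); the paper's hierarchical attention layer may differ in detail. Note that `w` has one weight per channel, shared by every spatial position, regardless of the map's height and width.

```python
from typing import Tuple

import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    z = x - x.max()                 # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def attention_pool(features: np.ndarray, w: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Attention-weighted pooling of an (H, W, C) feature map.

    features: output of the convolutional feature extractor, shape (H, W, C)
    w:        hypothetical learned scoring vector, shape (C,), shared by all positions
    Returns the pooled (C,) descriptor and the (H, W) attention map.
    """
    h, wd, c = features.shape
    flat = features.reshape(h * wd, c)      # one C-dim vector per spatial position
    scores = np.tanh(flat) @ w              # one scalar relevance score per position
    alpha = softmax(scores)                 # attention weights, sum to 1
    pooled = alpha @ flat                   # weighted average over positions
    return pooled, alpha.reshape(h, wd)
```

For example, `attention_pool(fmap, w)` on an 8 × 8 × 64 map returns a 64-dimensional vector and an 8 × 8 attention map that can be visualized to see which leaf regions the model weighted most.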

3.6.1. Dilated CNN Layer for Feature Extraction

Algorithm 3. Feature Extraction Algorithm of DCLR Model
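To make the dilated-convolution idea concrete: a k × k kernel with dilation d samples the input on a grid spaced d pixels apart, so it covers an extent of k + (k − 1)(d − 1) pixels while still having only k² weights. The NumPy sketch below implements a single-channel "valid" dilated convolution (cross-correlation, as in deep learning libraries) together with the effective-size and parameter-count formulas. The function names are hypothetical and the code is an illustration of the technique, not the authors' layer.

```python
import numpy as np


def effective_kernel_size(k: int, d: int) -> int:
    """Spatial extent covered by a k x k kernel with dilation d."""
    return k + (k - 1) * (d - 1)


def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights plus biases of a k x k conv layer; note that dilation does not appear."""
    return k * k * c_in * c_out + c_out


def dilated_conv2d(image: np.ndarray, kernel: np.ndarray, d: int = 1) -> np.ndarray:
    """Single-channel 'valid' dilated cross-correlation (no padding, stride 1)."""
    k = kernel.shape[0]
    ext = effective_kernel_size(k, d)
    out_h = image.shape[0] - ext + 1
    out_w = image.shape[1] - ext + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Every d-th pixel inside the ext x ext window: exactly k x k samples.
            patch = image[i:i + ext:d, j:j + ext:d]
            out[i, j] = np.sum(patch * kernel)
    return out
```

With k = 3, raising d from 1 to 2 widens the covered extent from 3 to 5 pixels while `conv_params` stays the same, which is the trade-off the ADCLR feature extractor relies on.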

3.6.2. Hierarchical Attention Layer

3.6.3. Classification Layer

Algorithm 4. Main Classification Algorithm of ADCLR Model Using Logistic Regression
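The classification stage feeds the extracted feature vectors to a multinomial logistic regression classifier. The architecture table lists a Logistic Regression classifier with a random state of 100, which suggests a library implementation such as scikit-learn's `LogisticRegression`. As a dependency-free illustration only, the sketch below trains a softmax (multinomial) logistic regression by batch gradient descent in NumPy; the learning rate, number of epochs, L2 strength, and the helper names are assumptions, and `seed=100` merely mirrors the table's random state.

```python
import numpy as np


def one_hot(y: np.ndarray, n_classes: int) -> np.ndarray:
    return np.eye(n_classes)[y]


def softmax_rows(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)       # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fit_logreg(X, y, n_classes, lr=0.1, epochs=500, l2=1e-4, seed=100):
    """Multinomial logistic regression trained with batch gradient descent.

    X: (n_samples, n_features) extracted features; y: integer class labels.
    Returns weights W of shape (n_features, n_classes) and bias b of shape (n_classes,).
    """
    rng = np.random.default_rng(seed)
    n, f = X.shape
    W = 0.01 * rng.standard_normal((f, n_classes))
    b = np.zeros(n_classes)
    Y = one_hot(y, n_classes)
    for _ in range(epochs):
        P = softmax_rows(X @ W + b)            # predicted class probabilities
        G = (P - Y) / n                        # gradient of mean cross-entropy w.r.t. logits
        W -= lr * (X.T @ G + l2 * W)
        b -= lr * G.sum(axis=0)
    return W, b


def predict(X, W, b):
    return np.argmax(X @ W + b, axis=1)
```

In the ADCLR pipeline, `X` would hold the flattened attention features of each image and `y` the ten disease labels. Standardizing the features before training keeps a fixed learning rate stable.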

3.7. Evaluation Metrics

- A. Accuracy: Accuracy is the proportion of all predictions that are correct, counting both true positives and true negatives. It is calculated as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives.

- B. Precision: Precision is the number of true positives divided by the total number of positive predictions:

$$\text{Precision} = \frac{TP}{TP + FP}$$

- C. Recall: Recall measures how well the model detects true positives. Here, it tells us how many of the tomato plants that actually have a leaf disease were correctly identified as diseased:

$$\text{Recall} = \frac{TP}{TP + FN}$$

- D. F1 Score: The F1 score summarizes a model's predictive performance in a single value by combining two often competing metrics, precision and recall, through their harmonic mean:

$$F_1 = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
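For a ten-class problem, these metrics are computed per class by treating each class one-vs-rest against a multiclass confusion matrix. A minimal NumPy sketch follows; it assumes rows are true classes and columns are predicted classes (a common convention, not one the paper states), and the helper name `per_class_metrics` is hypothetical. Overall accuracy is the trace of the matrix divided by the total count.

```python
import numpy as np


def per_class_metrics(cm: np.ndarray) -> dict:
    """One-vs-rest accuracy, precision, recall and F1 per class, plus overall accuracy.

    cm[i, j] = number of samples whose true class is i and predicted class is j.
    """
    cm = cm.astype(np.float64)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp      # predicted as class c but belonging to another class
    fn = cm.sum(axis=1) - tp      # belonging to class c but predicted as another class
    tn = total - tp - fp - fn
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "overall_accuracy": np.trace(cm) / total,
    }
```

For example, `per_class_metrics(np.array([[8, 2, 0], [1, 9, 0], [0, 0, 10]]))` on this made-up matrix gives class 0 a precision of 8/9 and a recall of 0.8.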

Experimental Setup

4. Result Analysis

4.1. Qualitative Analysis

4.2. Confusion Matrix

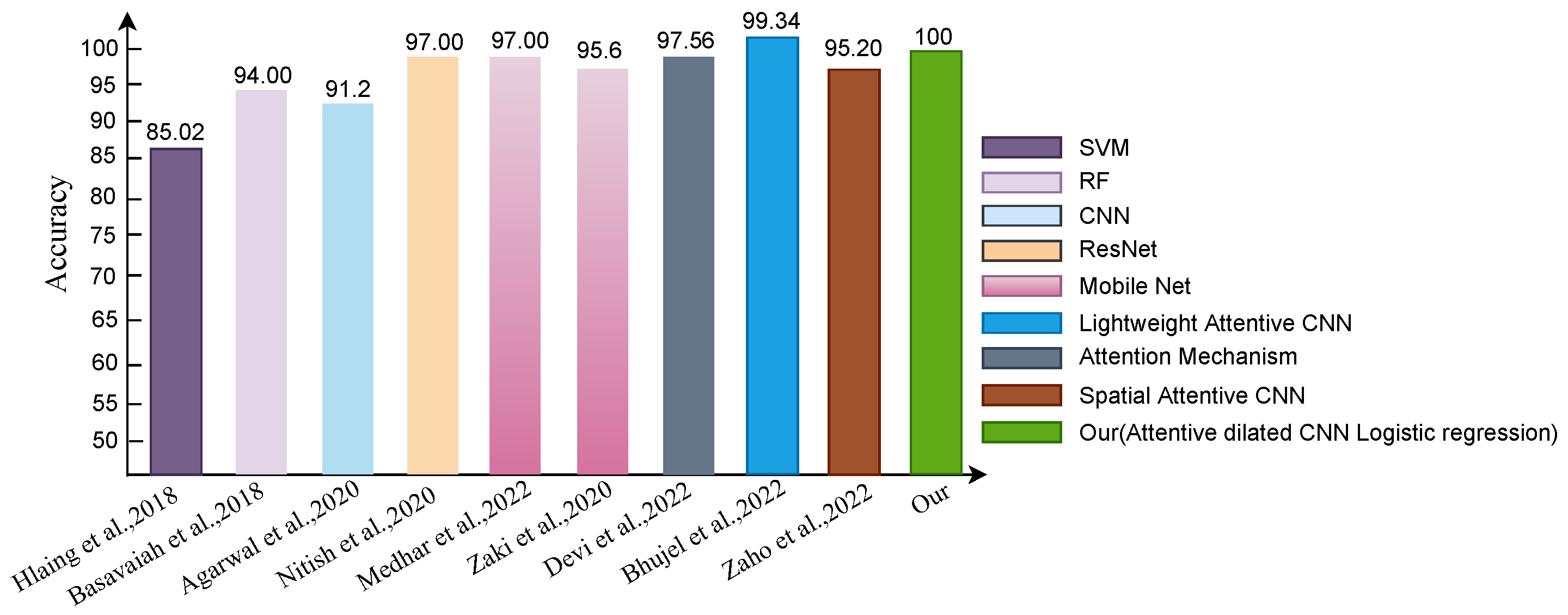

4.3. Comparisons with State-of-the-Art Methods

Comparison of Pre-Network Recognition Accuracy

4.4. Discussion

4.5. Real Time Test Result on New Image

4.6. Complexity Analysis

4.7. Limitation and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gangadevi, G.; Jayakumar, C. Review of Deep Learning Architectures Used for Identification and Classification of Plant Leaf Diseases. In Artificial Intelligent Techniques for Wireless Communication and Networking; Wiley: Hoboken, NJ, USA, 2022; pp. 75–90.

2. Khandokar, I.; Hasan, M.; Ernawan, F.; Islam, S.; Kabir, M. Handwritten character recognition using convolutional neural network. J. Phys. Conf. Ser. 2021, 1918, 042152.

3. Dora, S.; Terrett, O.M.; Sánchez-Rodríguez, C. Plant–microbe interactions in the apoplast: Communication at the plant cell wall. Plant Cell 2022, 34, 1532–1550.

4. Scharr, H.; Pridmore, T.P.; Tsaftaris, S.A. Computer Vision Problems in Plant Phenotyping, CVPPP 2017–Introduction to the CVPPP 2017 Workshop Papers. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2020–2021.

5. Al Farid, F.; Hashim, N.; Abdullah, J.; Bhuiyan, M.R.; Shahida Mohd Isa, W.N.; Uddin, J.; Haque, M.A.; Husen, M.N. A Structured and Methodological Review on Vision-Based Hand Gesture Recognition System. J. Imaging 2022, 8, 153.

6. Islam, M.S.; Sultana, S.; Kumar Roy, U.; Al Mahmud, J. A review on video classification with methods, findings, performance, challenges, limitations and future work. J. Ilm. Tek. Elektro Komput. Dan Inform. (JITEKI) 2020, 6, 47–57.

7. Islam, M.S.; Sultana, S.; Roy, U.K.; Al Mahmud, J.; Jahidul, S. HARC-New Hybrid Method with Hierarchical Attention Based Bidirectional Recurrent Neural Network with Dilated Convolutional Neural Network to Recognize Multilabel Emotions from Text. J. Ilm. Tek. Elektro Komput. Dan Inform. (JITEKI) 2021, 7, 142–153.

8. Shofiqul, M.S.I.; Ab Ghani, N.; Ahmed, M.M. A review on recent advances in Deep learning for Sentiment Analysis: Performances, Challenges and Limitations. J. Ilm. Tek. Elektro Komput. Dan Inform. (JITEKI) 2020, 9, 3775–3783.

9. Islam, M.; Ghani, N.A. A Novel BiGRUBiLSTM Model for Multilevel Sentiment Analysis Using Deep Neural Network with BiGRU-BiLSTM. In Recent Trends in Mechatronics Towards Industry 4.0; Springer: Berlin/Heidelberg, Germany, 2022; pp. 403–414.

10. Islam, M.S.; Sultana, S.; Islam, M.J. New Hybrid Deep Learning Method to Recognize Human Action from Video. J. Ilm. Tek. Elektro Komput. Dan Inform. 2021, 7, 306–313.

11. Islam, M.N.; Ahmed, F.; Ahammed, M.T.; Rashid, M.; Bari, B.S. Rice Disease Identification Through Leaf Image and IoT Based Smart Rice Field Monitoring System. In Enabling Industry 4.0 through Advances in Mechatronics; Springer: Berlin/Heidelberg, Germany, 2022; pp. 529–539.

12. Bari, B.S.; Islam, M.N.; Rashid, M.; Hasan, M.J.; Razman, M.A.M.; Musa, R.M.; Ab Nasir, A.F.; Majeed, A.P.A. A real-time approach of diagnosing rice leaf disease using deep learning-based faster R-CNN framework. PeerJ Comput. Sci. 2021, 7, e432.

13. Wijesinha-Bettoni, R.; Mouillé, B. The contribution of potatoes to global food security, nutrition and healthy diets. Am. J. Potato Res. 2019, 96, 139–149.

14. Grieneisen, M.L.; Aegerter, B.J.; Scott Stoddard, C.; Zhang, M. Yield and fruit quality of grafted tomatoes, and their potential for soil fumigant use reduction. A meta-analysis. Agron. Sustain. Dev. 2018, 38, 29.

15. Meng, F.; Li, Y.; Li, S.; Chen, H.; Shao, Z.; Jian, Y.; Mao, Y.; Liu, L.; Wang, Q. Carotenoid biofortification in tomato products along whole agro-food chain from field to fork. Trends Food Sci. Technol. 2022, 124, 296–308.

16. Savary, S.; Ficke, A.; Aubertot, J.N.; Hollier, C. Crop losses due to diseases and their implications for global food production losses and food security. Food Secur. 2012, 4, 519–537.

17. Islam, M.; Dinh, A.; Wahid, K.; Bhowmik, P. Detection of potato diseases using image segmentation and multiclass support vector machine. In Proceedings of the 2017 IEEE 30th Canadian Conference on Electrical and Computer Engineering (CCECE), Windsor, ON, Canada, 30 April–3 May 2017; pp. 1–4.

18. Azlah, M.A.F.; Chua, L.S.; Rahmad, F.R.; Abdullah, F.I.; Wan Alwi, S.R. Review on techniques for plant leaf classification and recognition. Computers 2019, 8, 77.

19. Ramya, V.; Lydia, M.A. Leaf disease detection and classification using neural networks. Int. J. Adv. Res. Comput. Commun. Eng. 2016, 5, 207–210.

20. Kantale, P.; Thakare, S. Pomegranate disease classification using Ada-Boost ensemble algorithm. Int. J. Eng. Res. Technol. 2020, 9, 612–620.

21. Johannes, A.; Picon, A.; Alvarez-Gila, A.; Echazarra, J.; Rodriguez-Vaamonde, S.; Navajas, A.D.; Ortiz-Barredo, A. Automatic plant disease diagnosis using mobile capture devices, applied on a wheat use case. Comput. Electron. Agric. 2017, 138, 200–209.

22. Mohanty, R.; Wankhede, P.; Singh, D.; Vakhare, P. Tomato Plant Leaves Disease Detection using Machine Learning. In Proceedings of the 2022 IEEE International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 9–11 May 2022; pp. 544–549.

23. Sujatha, R.; Chatterjee, J.M.; Jhanjhi, N.; Brohi, S.N. Performance of deep learning vs machine learning in plant leaf disease detection. Microprocess. Microsyst. 2021, 80, 103615.

24. Rumpf, T.; Mahlein, A.K.; Steiner, U.; Oerke, E.C.; Dehne, H.W.; Plümer, L. Early detection and classification of plant diseases with support vector machines based on hyperspectral reflectance. Comput. Electron. Agric. 2010, 74, 91–99.

25. Hasan, R.I.; Yusuf, S.M.; Alzubaidi, L. Review of the state of the art of deep learning for plant diseases: A broad analysis and discussion. Plants 2020, 9, 1302.

26. Hong, H.; Lin, J.; Huang, F. Tomato disease detection and classification by deep learning. In Proceedings of the 2020 IEEE International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Fuzhou, China, 12–14 June 2020; pp. 25–29.

27. Chen, Z.; Wu, R.; Lin, Y.; Li, C.; Chen, S.; Yuan, Z.; Chen, S.; Zou, X. Plant disease recognition model based on improved YOLOv5. Agronomy 2022, 12, 365.

28. Al-gaashani, M.S.; Shang, F.; Muthanna, M.S.; Khayyat, M.; Abd El-Latif, A.A. Tomato leaf disease classification by exploiting transfer learning and feature concatenation. IET Image Process. 2022, 16, 913–925.

29. Hlaing, C.S.; Zaw, S.M.M. Tomato plant diseases classification using statistical texture feature and color feature. In Proceedings of the 2018 IEEE/ACIS 17th International Conference on Computer and Information Science (ICIS), Singapore, 6–8 June 2018; pp. 439–444.

30. Basavaiah, J.; Arlene Anthony, A. Tomato leaf disease classification using multiple feature extraction techniques. Wirel. Pers. Commun. 2020, 115, 633–651.

31. Kalyoncu, C.; Toygar, Ö. GTCLC: Leaf classification method using multiple descriptors. IET Comput. Vis. 2016, 10, 700–708.

32. Kaur, S.; Pandey, S.; Goel, S. Semi-automatic leaf disease detection and classification system for soybean culture. IET Image Process. 2018, 12, 1038–1048.

33. Batool, A.; Hyder, S.B.; Rahim, A.; Waheed, N.; Asghar, M.A.; Fawad. Classification and identification of tomato leaf disease using deep neural network. In Proceedings of the 2020 IEEE International Conference on Engineering and Emerging Technologies (ICEET), Lahore, Pakistan, 22–23 February 2020; pp. 1–6.

34. Zaki, S.Z.M.; Zulkifley, M.A.; Stofa, M.M.; Kamari, N.A.M.; Mohamed, N.A. Classification of tomato leaf diseases using MobileNet v2. IAES Int. J. Artif. Intell. 2020, 9, 290.

35. Agarwal, M.; Singh, A.; Arjaria, S.; Sinha, A.; Gupta, S. ToLeD: Tomato leaf disease detection using convolution neural network. Procedia Comput. Sci. 2020, 167, 293–301.

36. Wang, L.; Sun, J.; Wu, X.; Shen, J.; Lu, B.; Tan, W. Identification of crop diseases using improved convolutional neural networks. IET Comput. Vis. 2020, 14, 538–545.

37. Agarwal, M.; Gupta, S.K.; Biswas, K. Development of Efficient CNN model for Tomato crop disease identification. Sustain. Comput. Inform. Syst. 2020, 28, 100407.

38. Geetharamani, G.; Pandian, A. Identification of plant leaf diseases using a nine-layer deep convolutional neural network. Comput. Electr. Eng. 2019, 76, 323–338.

39. Nithish Kannan, E.; Kaushik, M.; Prakash, P.; Ajay, R.; Veni, S. Tomato leaf disease detection using convolutional neural network with data augmentation. In Proceedings of the 2020 5th IEEE International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 10–12 June 2020; pp. 1125–1132.

40. Hussain, N.; Khan, M.A.; Tariq, U.; Kadry, S.; Yar, M.A.E.; Mostafa, A.M.; Alnuaim, A.A.; Ahmad, S. Multiclass Cucumber Leaf Diseases Recognition Using Best Feature Selection. Comput. Mater. Contin. 2022, 70, 3281–3294.

41. Fisher, M.S.; Arab, N. Apparatus, System, and Method for Image Normalization Using a Gaussian Residual of Fit Selection Criteria. U.S. Patent 9,245,169, 22 January 2016.

42. Ahmad, I.; Hamid, M.; Yousaf, S.; Shah, S.T.; Ahmad, M.O. Optimizing pretrained convolutional neural networks for tomato leaf disease detection. Complexity 2020, 2020, 8812019.

43. Aversano, L.; Bernardi, M.L.; Cimitile, M.; Iammarino, M.; Rondinella, S. Tomato diseases Classification Based on VGG and Transfer Learning. In Proceedings of the 2020 IEEE International Workshop on Metrology for Agriculture and Forestry (MetroAgriFor), Virtual, 4–6 November 2020; pp. 129–133.

44. Andrychowicz, M.; Denil, M.; Gomez, S.; Hoffman, M.W.; Pfau, D.; Schaul, T.; Shillingford, B.; De Freitas, N. Learning to learn by gradient descent by gradient descent. In Advances in Neural Information Processing Systems 29 (NIPS 2016); Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Neural Information Processing Systems (NeurIPS): San Diego, CA, USA, 2016; pp. 3981–3989.

45. Wu, Y.; Jiang, B.; Lu, N. A descriptor system approach for estimation of incipient faults with application to high-speed railway traction devices. IEEE Trans. Syst. Man Cybern. Syst. 2017, 49, 2108–2118.

46. Wu, Y.; Jiang, B.; Wang, Y. Incipient winding fault detection and diagnosis for squirrel-cage induction motors equipped on CRH trains. ISA Trans. 2020, 99, 488–495.

47. Lei, X.; Pan, H.; Huang, X. A dilated CNN model for image classification. IEEE Access 2019, 7, 124087–124095.

48. Devi, S.N.; Muthukumaravel, A. A Novel Salp Swarm Algorithm With Attention-Densenet Enabled Plant Leaf Disease Detection And Classification In Precision Agriculture. In Proceedings of the 2022 IEEE International Conference on Advanced Computing Technologies and Applications (ICACTA), Coimbatore, India, 4–5 March 2022; pp. 1–7.

49. Bhujel, A.; Kim, N.E.; Arulmozhi, E.; Basak, J.K.; Kim, H.T. A lightweight Attention-based convolutional neural networks for tomato leaf disease classification. Agriculture 2022, 12, 228.

50. Zhao, Y.; Sun, C.; Xu, X.; Chen, J. RIC-Net: A plant disease classification model based on the fusion of Inception and residual structure and embedded attention mechanism. Comput. Electron. Agric. 2022, 193, 106644.

51. Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

52. Hughes, D.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2015, arXiv:1511.08060.

53. Chen, B.H.; Cheng, H.Y.; Tseng, Y.S.; Yin, J.L. Two-pass bilateral smooth filtering for remote sensing imagery. IEEE Geosci. Remote. Sens. Lett. 2021, 19, 1–5.

54. Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

55. Bird, J.J.; Barnes, C.M.; Manso, L.J.; Ekárt, A.; Faria, D.R. Fruit quality and defect image classification with conditional GAN data augmentation. Sci. Hortic. 2022, 293, 110684.

56. Trivedi, V.K.; Shukla, P.K.; Pandey, A. Automatic segmentation of plant leaves disease using min-max hue histogram and k-mean clustering. Multimed. Tools Appl. 2022, 81, 20201–20228.

| Leaf Disease Class | Amount | Percentage (%) |
|---|---|---|
| Bacterial spot | 2126 | 13.29 |
| Early blight | 1000 | 6.25 |
| Target Spot | 1403 | 8.77 |
| Yellow Leaf Curl Virus | 3107 | 19.43 |
| Mosaic virus | 372 | 2.32 |
| Late blight | 2005 | 12.54 |
| Leaf Mold | 951 | 5.94 |
| Septoria leaf spot | 1760 | 11 |
| Two spotted spider mite | 1675 | 10.48 |
| Healthy | 1590 | 9.94 |
| Total | 15,989 | 100 |
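The class counts above show why imbalance handling matters: the largest class (Yellow Leaf Curl Virus, 3107 images) is about 8.35 times the size of the smallest (Mosaic virus, 372 images). The short sketch below computes the class shares and imbalance ratio from the table. The "synthetic images needed" column is a hypothetical balancing target (top every class up to the largest one); the paper does not state how many CGAN images were generated per class.

```python
# Class counts copied from the dataset table above.
counts = {
    "Bacterial spot": 2126,
    "Early blight": 1000,
    "Target Spot": 1403,
    "Yellow Leaf Curl Virus": 3107,
    "Mosaic virus": 372,
    "Late blight": 2005,
    "Leaf Mold": 951,
    "Septoria leaf spot": 1760,
    "Two spotted spider mite": 1675,
    "Healthy": 1590,
}

total = sum(counts.values())
shares = {name: 100 * n / total for name, n in counts.items()}
largest = max(counts, key=counts.get)
smallest = min(counts, key=counts.get)
imbalance_ratio = counts[largest] / counts[smallest]

# Hypothetical balancing target: bring every class up to the size of the largest class.
needed = {name: counts[largest] - n for name, n in counts.items()}

if __name__ == "__main__":
    print(f"total images: {total}")
    print(f"largest: {largest}, smallest: {smallest}, ratio: {imbalance_ratio:.2f}")
    for name in counts:
        print(f"{name:25s} {shares[name]:6.2f}%  synthetic needed: {needed[name]}")
```

Under this target, the minority Mosaic virus class would need the most synthetic images, which is the kind of gap conditional generation is meant to close.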

| Layer No | Layer Name | Layer Information | Image Size | Output Size |
|---|---|---|---|---|
| 01 | 2D Convolution | Dilated Convolution | 256 × 256 × 3 | (None, 256, 256, 64) |
| 02 | Activation | ReLU | 256 × 253 × 3 | (256, 64) |
| 03 | Normalization | Batch Normalization (256) | 256 × 253 × 3 | (256, 256, 64) |
| 04 | Pooling | MaxPool2D (Kernel 2, Stride 2, Dilation 1) | 256 × 253 × 3 | (256, 256, 64) |
| 05 | 2D Convolution | Dilated Convolution | 256 × 253 × 3 | (128, 128, 64) |
| 06 | Activation | ReLU | 256 × 253 × 3 | (128, 64) |
| 07 | Normalization | Batch Normalization (128) | 256 × 253 × 3 | (128, 128, 64) |
| 08 | Pooling | MaxPool2D (Kernel 2, Stride 2, Dilation 1) | 256 × 253 × 3 | (128, 128, 128) |
| 09 | 2D Convolution | Dilated Convolution | 256 × 253 × 3 | (64, 64, 64) |
| 10 | Activation | ReLU | 256 × 253 × 3 | (64, 64) |
| 11 | Normalization | Batch Normalization (64) | 256 × 253 × 3 | (64, 64, 64) |
| 12 | Pooling | MaxPool2D (Kernel 2, Stride 2, Dilation 1) | 256 × 253 × 3 | (64, 64, 256) |
| 13 | 2D Convolution | Dilated Convolution | 256 × 253 × 3 | (32, 32, 64) |
| 14 | Activation | ReLU | 256 × 253 × 3 | (32, 64) |
| 15 | Normalization | Batch Normalization (32) | 256 × 253 × 3 | (32, 32, 64) |
| 16 | Pooling | MaxPool2D (Kernel 2, Stride 2, Dilation 1) | 256 × 253 × 3 | (32, 32, 512) |
| 17 | 2D Convolution | Dilated Convolution | 256 × 253 × 3 | (16, 16, 64) |
| 18 | Activation | ReLU | 256 × 253 × 3 | (16, 64) |
| 19 | Normalization | Batch Normalization (16) | 256 × 253 × 3 | (16, 16, 64) |
| 20 | Pooling | MaxPool2D (Kernel 2, Stride 2, Dilation 1) | 256 × 253 × 3 | (16, 16, 1024) |
| 21 | 2D Convolution | Dilated Convolution | 256 × 253 × 3 | (8, 8, 64) |
| 22 | Activation | ReLU | 256 × 253 × 3 | (8, 64) |
| 23 | Normalization | Batch Normalization (16) | 256 × 253 × 3 | (8, 8, 64) |
| 24 | Pooling | MaxPool2D (Kernel 2, Stride 2, Dilation 1) | 256 × 253 × 3 | (8, 8, 2048) |
| 25 | Activation | ReLU | 256 × 253 × 3 | (256, 64) |
| 26 | Pooling | AdaptiveMaxPool2d | 256 × 253 × 3 | (8, 512) |
| 27 | Dropout | Dropout (0.5) | 256 × 253 × 3 | |
| 28 | Attention | Attention | 256 × 253 × 3 | (100, 10) |
| 29 | Flatten | Flatten Layer | 256 × 253 × 3 | (64 × 10) |
| 30 | Logistic Regression | Logistic Regression (LR) Classifier (N = 10, Number of features, Random State = 100) | 256 × 253 × 3 | 10 |
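The spatial sizes in the Output Size column for the convolution layers follow from the standard size formula for convolution and pooling layers, out = ⌊(in + 2p − d(k − 1) − 1)/s⌋ + 1, the same formula given in the PyTorch `Conv2d` and `MaxPool2d` documentation. The table does not state kernel size, dilation rate, or padding, so the sketch below assumes 3 × 3 dilated convolutions with padding equal to the dilation (which preserves the size) and 2 × 2 max pooling with stride 2. Under those assumptions, it reproduces the 256, 128, 64, 32, 16, and 8 sizes listed for the six convolution layers (rows 01, 05, 09, 13, 17, and 21).

```python
def out_size(n: int, k: int, s: int = 1, p: int = 0, d: int = 1) -> int:
    """Output length along one spatial axis for a convolution or pooling layer."""
    return (n + 2 * p - d * (k - 1) - 1) // s + 1


def trace_sizes(n: int = 256, blocks: int = 6, dilation: int = 2) -> list:
    """Spatial size produced by each dilated conv in conv -> ReLU -> BN -> pool blocks.

    Assumed (not stated in the table): 3x3 kernels, padding = dilation,
    2x2 max pooling with stride 2. ReLU and batch norm keep the spatial size.
    """
    conv_sizes = []
    for _ in range(blocks):
        n = out_size(n, k=3, s=1, p=dilation, d=dilation)   # "same"-size dilated conv
        conv_sizes.append(n)
        n = out_size(n, k=2, s=2)                           # halve with max pooling
    return conv_sizes
```

Without padding, a 3 × 3 convolution with dilation 2 would shrink a 256-pixel side to 252, so some form of "same" padding is needed to match the sizes in the table.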

Training Performance

| Model | Metric | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LR | Accuracy | 0.96 | 0.95 | 0.96 | 0.97 | 0.98 | 0.96 | 0.97 | 0.95 | 0.97 | 0.96 |
| | Precision | 0.98 | 0.95 | 0.94 | 0.98 | 1.00 | 0.96 | 0.98 | 0.93 | 0.96 | 0.99 |
| | Recall | 0.96 | 0.95 | 0.96 | 0.97 | 0.98 | 0.96 | 0.92 | 0.98 | 0.98 | 0.98 |
| | F1 | 0.96 | 0.98 | 0.93 | 0.96 | 0.99 | 0.96 | 0.95 | 0.96 | 0.97 | 0.98 |
| CNN-LR | Accuracy | 0.97 | 0.97 | 0.96 | 0.97 | 0.97 | 0.97 | 0.98 | 0.98 | 0.97 | 0.96 |
| | Precision | 0.99 | 0.95 | 0.94 | 0.98 | 1.00 | 0.96 | 0.98 | 0.93 | 0.96 | 0.99 |
| | Recall | 0.99 | 0.93 | 0.98 | 1.00 | 0.97 | 0.96 | 0.94 | 0.98 | 0.99 | 0.98 |
| | F1 | 0.98 | 0.95 | 0.96 | 0.99 | 0.98 | 0.96 | 0.94 | 0.96 | 0.97 | 0.98 |
| Attention-Dilated CNN-LR | Accuracy | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | Precision | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | Recall | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | F1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Training support (80% of data) | | 1701 | 800 | 1123 | 2486 | 298 | 1604 | 761 | 1416 | 1340 | 1272 |

Validation Performance

| Model | Metric | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LR | Accuracy | 0.97 | 0.96 | 0.97 | 0.97 | 0.98 | 0.96 | 0.97 | 0.95 | 0.97 | 0.96 |
| | Precision | 0.99 | 0.96 | 0.95 | 0.99 | 1.00 | 0.96 | 0.98 | 0.94 | 0.96 | 0.99 |
| | Recall | 0.97 | 0.98 | 0.96 | 0.97 | 0.99 | 0.96 | 0.92 | 0.98 | 0.98 | 0.98 |
| | F1 | 0.96 | 0.98 | 0.94 | 0.98 | 0.99 | 0.97 | 0.98 | 0.97 | 0.97 | 0.98 |
| CNN-LR | Accuracy | 0.98 | 0.97 | 0.97 | 0.98 | 0.98 | 0.98 | 0.98 | 0.97 | 0.97 | 0.96 |
| | Precision | 0.99 | 0.96 | 0.98 | 0.99 | 1.00 | 0.97 | 0.97 | 0.97 | 0.98 | 0.99 |
| | Recall | 0.99 | 0.94 | 0.99 | 1.00 | 0.97 | 0.99 | 0.98 | 0.98 | 0.99 | 0.98 |
| | F1 | 0.98 | 0.95 | 0.96 | 0.99 | 0.99 | 0.98 | 0.99 | 0.99 | 0.98 | 0.98 |
| Attention-Dilated CNN-LR | Accuracy | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | Precision | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | Recall | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| | F1 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Validation support (20% of data) | | 425 | 200 | 280 | 621 | 74 | 401 | 190 | 354 | 335 | 318 |

Testing Performance

| Model | Metric | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LR | Accuracy | 0.96 | 0.94 | 0.94 | 0.95 | 0.97 | 0.96 | 0.97 | 0.93 | 0.95 | 0.94 |
| | Precision | 0.98 | 0.91 | 0.90 | 0.96 | 0.98 | 0.96 | 0.98 | 0.95 | 0.96 | 0.97 |
| | Recall | 0.97 | 0.89 | 0.94 | 0.98 | 0.98 | 0.93 | 0.90 | 0.92 | 0.94 | 0.96 |
| | F1 | 0.95 | 0.92 | 0.95 | 0.98 | 0.95 | 0.94 | 0.95 | 0.99 | 0.96 | 0.94 |
| CNN-LR | Accuracy | 0.98 | 0.95 | 0.95 | 0.96 | 0.98 | 0.97 | 0.96 | 0.94 | 0.96 | 0.95 |
| | Precision | 0.99 | 0.94 | 0.93 | 0.97 | 0.99 | 0.95 | 0.97 | 0.94 | 0.95 | 0.98 |
| | Recall | 0.97 | 0.92 | 0.95 | 0.99 | 0.99 | 0.94 | 0.91 | 0.94 | 0.96 | 0.97 |
| | F1 | 0.96 | 0.93 | 0.95 | 0.97 | 0.97 | 0.95 | 0.94 | 0.98 | 0.98 | 0.95 |
| Attention-Dilated CNN-LR | Accuracy | 0.99 | 0.97 | 0.96 | 0.97 | 0.97 | 0.96 | 0.97 | 0.95 | 0.97 | 0.96 |
| | Precision | 0.98 | 0.95 | 0.94 | 0.98 | 1.00 | 0.96 | 0.98 | 0.93 | 0.96 | 0.99 |
| | Recall | 0.98 | 0.93 | 0.97 | 1.00 | 0.97 | 0.96 | 0.92 | 0.98 | 0.98 | 0.98 |
| | F1 | 0.99 | 0.95 | 0.96 | 0.99 | 0.98 | 0.96 | 0.95 | 0.96 | 0.97 | 0.98 |
| Support (100 new images per class) | | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |

Training Performance

| Model | Input Size | Samples | Accuracy |
|---|---|---|---|
| LR | 256 × 256 × 3 | 12,801 | 96.30% |
| CNN-LR | 256 × 256 × 3 | 12,801 | 97.00% |
| Attention-Dilated CNN-LR (ADCLR) | 256 × 253 × 3 | 12,801 | 100.0% |

Validation Performance

| Model | Input Size | Samples | Accuracy |
|---|---|---|---|
| LR | 256 × 256 × 3 | 3198 | 96.6% |
| CNN-LR | 256 × 253 × 3 | 3198 | 97.4% |
| Attention-Dilated CNN-LR (ADCLR) | 256 × 253 × 3 | 3198 | 100.00% |

Testing Performance

| Model | Input Size | Samples | Accuracy |
|---|---|---|---|
| LR | 256 × 256 × 3 | 1000 | 95.20% |
| CNN-LR | 256 × 253 × 3 | 1000 | 96.00% |
| Attention-Dilated CNN-LR (ADCLR) | 256 × 256 × 3 | 1000 | 96.60% |

| Type | Author | Method | Features | Classes | Samples | Data | Performance |
|---|---|---|---|---|---|---|---|
| ML | Hlaing et al. [29] | SVM | SIFT and color conversion features | 7 | 3535 | Plant Village (Tomato) | Accuracy 85.02% |
| | Basavaiah et al. [30] | Random forest and decision tree | Hu moments, pattern and color histograms | 5 | 300 | Plant Village (Tomato) | Accuracy 94% (RF), Accuracy 90% (DT) |
| DL | Agarwal et al. [37] | CNN | CNN model | 10 | 10,100 | Plant Village (Tomato) | Accuracy 91.2% |
| | Nithish et al. [39] | ResNet | ResNet-50 model | 6 | 12,206 | Plant Village (Tomato) | Accuracy 97% |
| MLDL | Al-gaashani et al. [28] | MobileNetV2 or NASNetMobile and Logistic Regression | MobileNetV2 or NASNetMobile feature extractor | 6 | 1152 | Plant Village (Tomato) | Accuracy 97% (MobileNetV2), Accuracy 97% (NASNetMobile) |
| | Zaki et al. [34] | MobileNetV2 | Fine-tuned MobileNetV2 | 4 | 3471 | Plant Village (Tomato) | Accuracy 95.6% |
| | Devi et al. [48] | Attention mechanism | DenseNet with attention | 5 | 9281 | Plant Village (Tomato) | Accuracy 97.56% |
| | Bhujel et al. [49] | Lightweight attention-based CNN | Attentive CNN | 10 | 19,510 | Plant Village (Tomato) | Accuracy 99.34% |
| | Zhao et al. [50] | Spatial attention with CNN | Fully connected layer | 10 | 18,160 | Plant Village (Tomato) | Accuracy 95.20% |
| | Proposed ADCLR (ours) | Attention-dilated CNN and logistic regression with synthetic images | Attention-based dilated CNN | 10 | 15,989 | Plant Village (Tomato) | Accuracy 100.00%, F1 100.00%, Precision 100.00%, Recall 100.00% |

| Model | Input Size | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| CNN | 256, 256, 3 | 88.70% | 86.76% | 88.71% | 87.30% |
| AlexNet | 256, 256, 3 | 91.87% | 89.93% | 91.88% | 90.47% |
| EfficientNet | 256, 256, 3 | 92.25% | 90.31% | 92.26% | 90.75% |
| Xception | 256, 256, 3 | 97.61% | 95.67% | 88.70% | 96.21% |
| Inception-ResNet-V2 | 256, 256, 3 | 97.80% | 95.86% | 95.87% | 96.41% |
| MLP | 256, 256, 3 | 97.99% | 96.05% | 97.99% | 96.59% |
| LSTM | 256, 256, 3 | 98.50% | 96.56% | 98.50% | 97.11% |
| GRU | 256, 256, 3 | 98.74% | 96.80% | 98.75% | 97.34% |
| DenseNet | 256, 256, 3 | 98.88% | 96.94% | 98.89% | 97.48% |
| VGG | 256, 256, 3 | 99.00% | 97.06% | 99.01% | 97.61% |
| Dilated CNN-RNN | 256, 256, 3 | 99.15% | 97.21% | 99.15% | 97.75% |
| ADCLR (ours) | 256, 256, 3 | 100.00% | 100.00% | 100.00% | 100.00% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Islam, M.S.; Sultana, S.; Farid, F.A.; Islam, M.N.; Rashid, M.; Bari, B.S.; Hashim, N.; Husen, M.N. Multimodal Hybrid Deep Learning Approach to Detect Tomato Leaf Disease Using Attention Based Dilated Convolution Feature Extractor with Logistic Regression Classification. Sensors 2022, 22, 6079. https://doi.org/10.3390/s22166079
