Author Contributions

Conceptualization, S.K. and S.J.; methodology, S.K.; software, S.K. and S.J.; validation, S.K. and S.J.; formal analysis, S.K. and S.J.; investigation, S.K.; writing—original draft preparation, S.K.; writing—review and editing, S.J. and F.S.; visualization, S.K.; supervision, F.S. All authors have read and agreed to the published version of the manuscript.

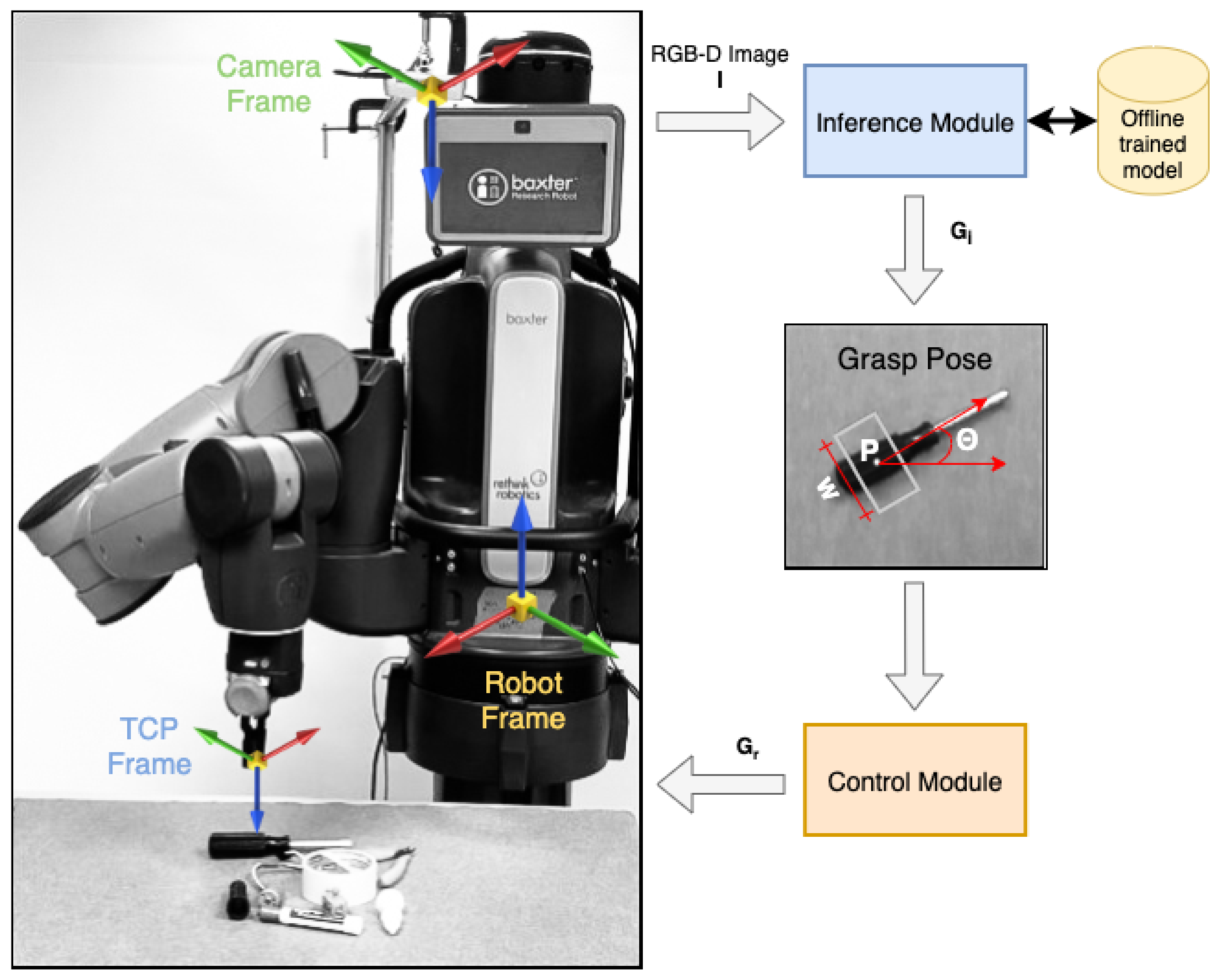

Figure 1.

Overview. A real-time multi-grasp detection framework to predict, plan and perform robust antipodal grasps for the objects in the camera’s field of view using an offline trained GR-ConvNet model. Our system can grasp novel objects in isolation as well as in clutter. Video (accessed on 20 July 2022): https://youtu.be/cwlEhdoxY4U.

Figure 2.

Performance comparison of GR-ConvNet with prior work on the Cornell Grasping Dataset.

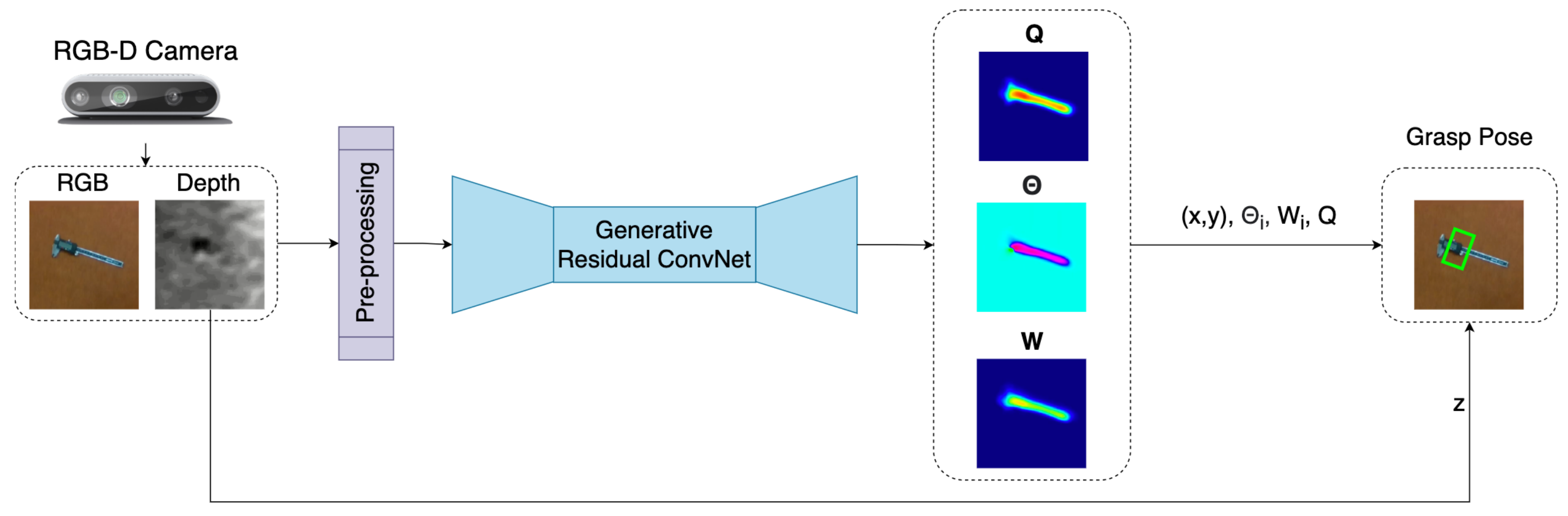

Figure 3.

Inference module predicts suitable grasp poses for the objects in the camera’s field of view.

Figure 4.

Control module uses the grasp poses generated by the inference module to plan and execute robot trajectories to perform antipodal grasps.

Figure 5.

Network architecture of the Generative Residual Convolutional Neural Network v2, where n is the number of input channels, k is the number of filters, and d is the dropout rate. The network takes in an n-channel input image of size and generates pixel-wise grasps in the form of grasp quality, grasp angle and grasp width.
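
To illustrate how the three pixel-wise outputs named in this caption (grasp quality, grasp angle and grasp width) could be reduced to a single grasp, here is a minimal Python sketch. It assumes the grasp is read off at the pixel with the highest quality score; that selection rule, the function name `best_grasp` and the tiny 2×3 maps are illustrative assumptions, not details taken from the caption.

```python
def best_grasp(quality, angle, width):
    """Return (row, col, angle, width, quality) at the highest-quality pixel.

    All three maps are lists of rows with identical shapes.
    Assumption: the best grasp is the arg-max of the quality map.
    """
    best = None
    for r, row in enumerate(quality):
        for c, q in enumerate(row):
            if best is None or q > best[4]:
                best = (r, c, angle[r][c], width[r][c], q)
    return best


# Made-up 2x3 output maps, for illustration only.
quality_map = [[0.1, 0.4, 0.2],
               [0.3, 0.9, 0.5]]
angle_map = [[0.0, 0.5, -0.5],
             [1.0, -0.3, 0.2]]      # radians
width_map = [[10.0, 20.0, 30.0],
             [40.0, 50.0, 60.0]]    # pixels

grasp = best_grasp(quality_map, angle_map, width_map)
print(grasp)
```

In a multi-grasp setting one would presumably keep several local maxima of the quality map rather than a single global one; the sketch only shows the simplest case.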

Figure 6.

Flat + Cosine anneal learning rate curve used for training.
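
The shape of a flat + cosine anneal schedule can be sketched in a few lines of Python: the learning rate stays constant for an initial fraction of training and then follows half a cosine wave down to a minimum. The function name `flat_cosine_lr` and the values of `base_lr`, `flat_fraction` and `min_lr` are hypothetical; the caption does not state the settings used for training.

```python
import math


def flat_cosine_lr(step, total_steps, base_lr=1e-3, flat_fraction=0.5, min_lr=0.0):
    """Learning rate at `step`: flat at `base_lr`, then cosine-annealed to `min_lr`.

    `flat_fraction` is the share of training spent in the flat phase
    (a hypothetical default, not a value from the paper).
    """
    flat_steps = int(total_steps * flat_fraction)
    if step < flat_steps:
        return base_lr
    # Progress through the annealing phase, clamped to [0, 1].
    progress = (step - flat_steps) / max(1, total_steps - flat_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Sample the schedule over 100 steps.
schedule = [flat_cosine_lr(s, total_steps=100) for s in range(101)]
print(schedule[0], schedule[75], schedule[100])
```

With these assumed defaults the rate is constant for the first 50 steps and then decays smoothly to zero at step 100.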

Figure 7.

Qualitative results on the Cornell Grasping Dataset. The top three rows (quality, angle and width) are the output of GR-ConvNet. The bottom two rows are the predicted and ground truth grasps in rectangle grasp representation.

Figure 8.

Qualitative results on the Jacquard Grasping Dataset. The top three rows (quality, angle and width) are the output of GR-ConvNet. The bottom two rows are the predicted and ground truth grasps in rectangle grasp representation.

Figure 9.

Qualitative results on the Graspnet 1-billion Dataset. The top three rows (quality, angle and width) are the output of GR-ConvNet. The bottom two rows are the predicted and ground truth grasps in rectangle grasp representation.

Figure 10.

Ablation study results for GR-ConvNet trained with different numbers of filters (k), input channels (n), dropout rates (d), optimizers and learning rates. The model is evaluated using the 25% IoU metric on the Cornell dataset. Green indicates the selected parameter. (a–c) Number of parameters, execution time and accuracy for different numbers of filters. (d) Grasp prediction accuracy for different optimizers. (e,f) Execution time and prediction accuracy of the network for different modalities. (g) Grasp prediction accuracy for different dropout rates. (h) Grasp prediction accuracy for different learning rates.

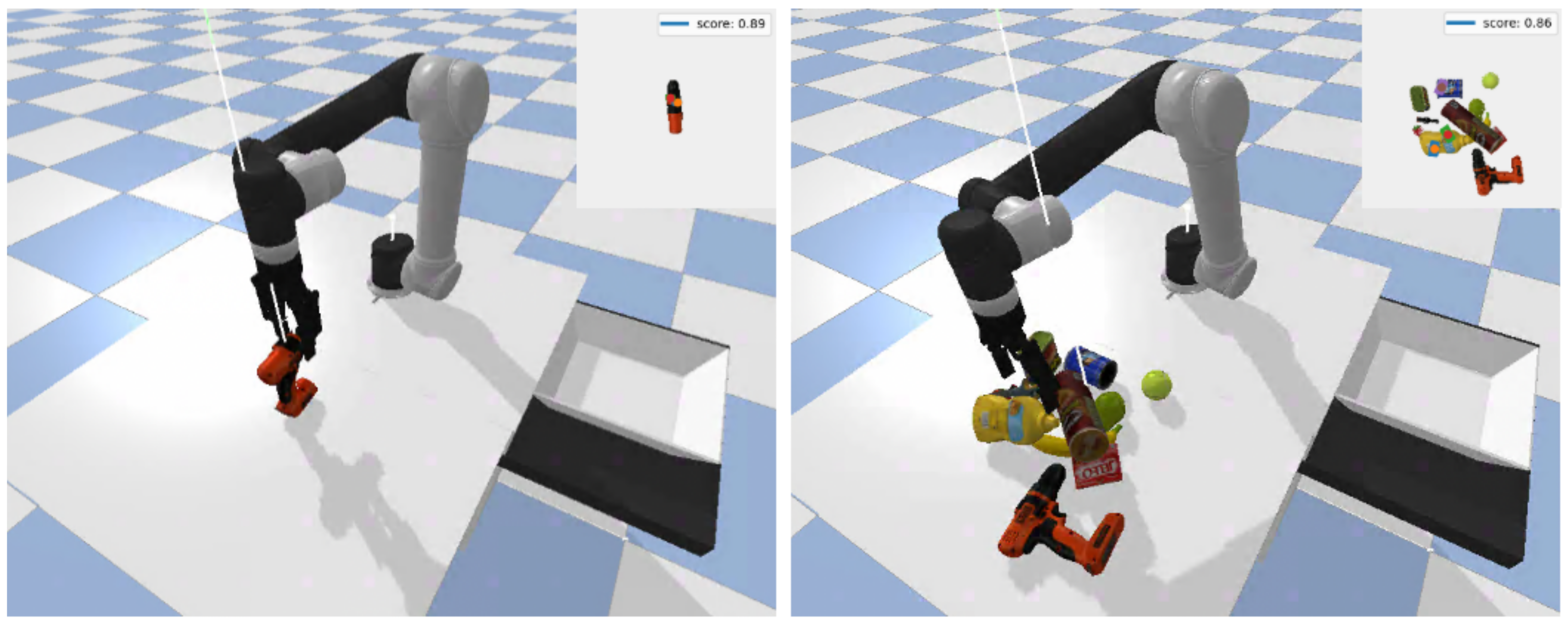

Figure 11.

Examples of antipodal grasping in simulation. (Left) Grasping YCB objects in isolation. (Right) Grasping YCB objects in clutter.

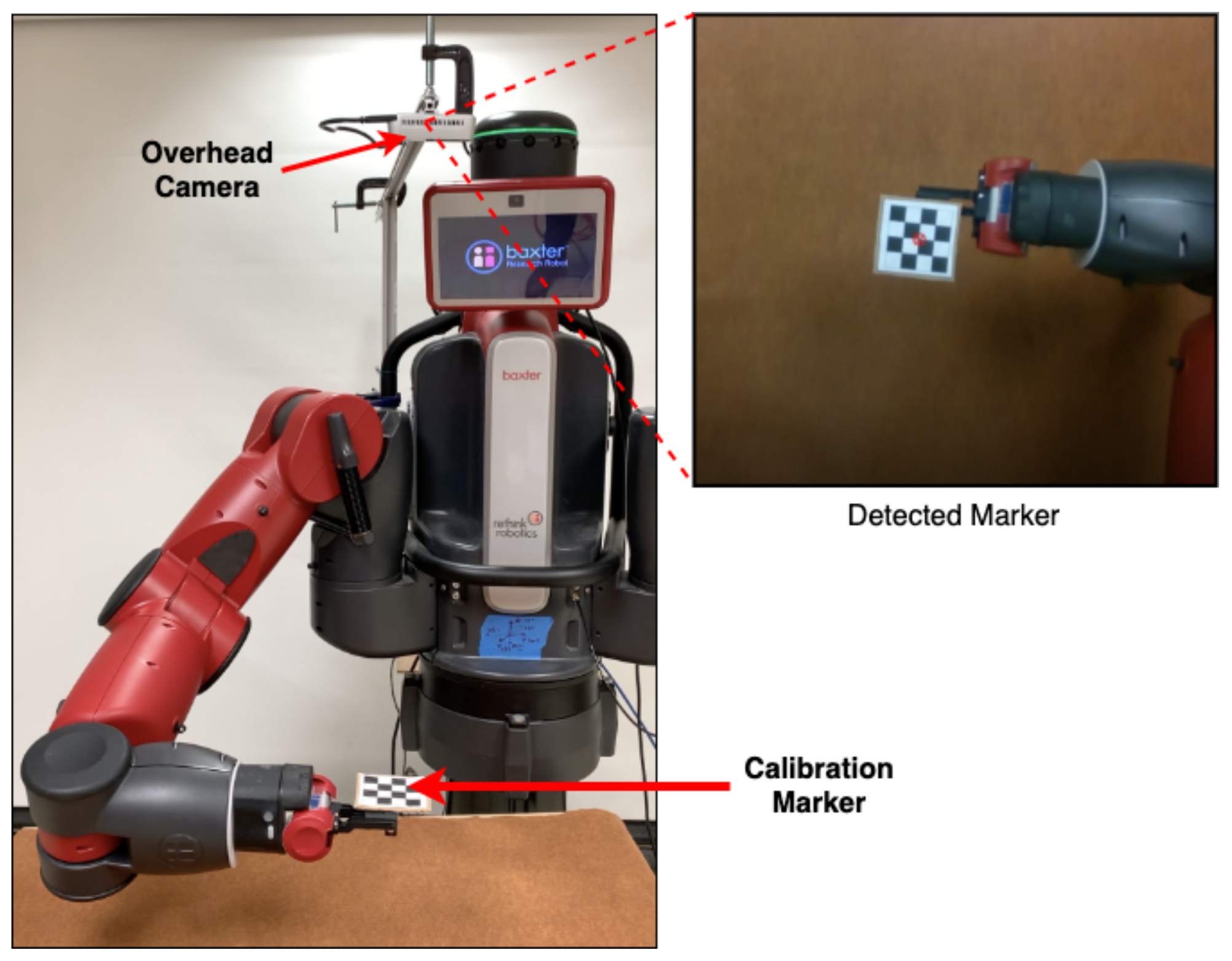

Figure 12.

Setup for hand-eye calibration procedure.

Figure 13.

Objects used for robotic grasping experiments. (a) Household test objects. (b) Adversarial test objects.

Figure 14.

Visualization of the household-object clutter scene removal task in the real world using our GR-ConvNet model trained on the Cornell dataset. See the attached video for a complete run. (a–l) show the grasp pose generated by the inference module (top), the robot grasping the object (bottom left), and the robot retracting after a successful grasp (bottom right) for each object.

Figure 15.

Visualization of the adversarial-object clutter scene removal task in the real world using our GR-ConvNet model trained on the Cornell dataset. See the attached video for a complete run. (a–f) show the grasp pose generated by the inference module (top), the robot grasping the object (bottom left), and the robot retracting after a successful grasp (bottom right) for each object.

Figure 16.

Examples of Ravens-10 benchmark tasks. Left to right: block insertion, palletizing boxes, place red in green, assembling kits.

Table 1.

A comparison of related work.

| Author | Cornell Results | Jacquard Results | Graspnet Results | Real Robot | Household Objects | Adversarial Objects | Cluttered Objects | Code Available |
|---|---|---|---|---|---|---|---|---|
| Lenz et al. [1] | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✗ | ✓ |
| Redmon et al. [2] | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Wang et al. [12] | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Kumra et al. [4] | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Depierre et al. [6] | ✓ | ✓ | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ |
| Chu et al. [13] | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✗ | ✓ |
| Zhou et al. [14] | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✗ |
| Asif et al. [15] | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ | ✓ | ✗ |
| Morrison et al. [9] | ✓ | ✗ | ✗ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Zhang et al. [16] | ✓ | ✓ | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ |
| Ours | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

Table 2.

Summary of antipodal robotic grasping datasets.

| Dataset | Modality | Type | Objects | Images | Grasps |
|---|---|---|---|---|---|
| Cornell [5] | RGB-D | Real | 240 | 1035 | 8k |
| Multi-Object [13] | RGB-D | Real | - | 96 | 2.9k |
| Jacquard [6] | RGB-D | Sim | 11k | 54k | 1.1M |
| Dexnet [33] | Depth | Sim | 1500 | 6.7M | 6.7M |
| VR-Grasping [43] | RGB-D | Sim | 101 | 10k | 4.8M |
| VMRD [16] | RGB | Real | 100 | 4.6k | 100k |
| Graspnet [7] | RGB-D | Real | 88 | 97k | 1.2B |

Table 3.

Comparative results on the Cornell Grasping Dataset.

| Authors | Algorithm | Accuracy (%) with IoU > 25%, IW | Accuracy (%) with IoU > 25%, OW | Speed (ms) |
|---|---|---|---|---|
| Jiang et al. [5] | Fast Search | 60.5 | 58.3 | 5000 |
| Lenz et al. [1] | SAE, struct. reg. | 73.9 | 75.6 | 1350 |
| Redmon et al. [2] | AlexNet, MultiGrasp | 88.0 | 87.1 | 76 |
| Wang et al. [12] | Two-stage closed-loop | 85.3 | - | 140 |
| Asif et al. [52] | STEM-CaRFs | 88.2 | 87.5 | - |
| Kumra et al. [4] | ResNet-50x2 | 89.2 | 88.9 | 103 |
| Guo et al. [32] | ZF-net | 93.2 | 89.1 | - |
| Zhou et al. [14] | FCGN, ResNet-101 | 97.7 | 96.6 | 117 |
| Asif et al. [15] | GraspNet | 90.2 | 90.6 | 24 |
| Chu et al. [13] | Multi-grasp Res-50 | 96.0 | 96.1 | 120 |
| Morrison et al. [53] | GG-CNN | 73.0 | 69.0 | 19 |
| Morrison et al. [9] | GG-CNN2 | 84.0 | 82.0 | 20 |
| Karaoguz et al. [54] | GRPN | 88.7 | - | 200 |
| Zhang et al. [16] | ROI-GD, ResNet-101 | 93.6 | 93.5 | 39 |
| Wang et al. [55] | DD-Net, Hourglass | 97.2 | 96.1 | - |
| Shi et al. [56] | EDINet-RGBD | 97.7 | 96.6 | 25 |
| Yu et al. [57] | SE-ResUNet | 98.2 | 97.1 | 25 |
| | GR-ConvNet | 97.7 | 96.6 | 20 |
| Ours | GR-ConvNet v2 | 98.8 | 97.7 | 20 |

Table 4.

Grasp prediction accuracy (%) on the Cornell dataset at different Jaccard thresholds.

| Approach | IoU > 25% | IoU > 30% | IoU > 35% | IoU > 40% |
|---|---|---|---|---|
| Guo et al. [32] | 93.2 | 91.0 | 85.3 | - |
| Chu et al. [13] | 96.0 | 92.7 | 87.6 | 82.6 |
| Wang et al. [58] | 94.4 | 92.8 | 90.2 | 85.7 |
| Shi et al. [56] | 97.7 | 97.6 | 97.1 | 96.5 |
| Ours | 98.8 | 98.8 | 98.8 | 96.6 |
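
The thresholds in Table 4 refer to the Jaccard index (intersection over union, IoU) between a predicted and a ground-truth grasp rectangle. The Python sketch below shows how accuracy at several thresholds could be tallied from per-image IoU values. It is a simplification under stated assumptions: `jaccard_index` handles only axis-aligned boxes, whereas grasp rectangles are generally rotated, and the function names and sample IoU values are made up for illustration.

```python
def jaccard_index(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max).

    Simplifying assumption: rotation of the grasp rectangle is ignored.
    """
    inter_w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    inter_h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # boxes do not overlap
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def accuracy_at_thresholds(best_ious, thresholds=(0.25, 0.30, 0.35, 0.40)):
    """Percentage of samples whose best IoU exceeds each threshold."""
    if not best_ious:
        raise ValueError("best_ious must not be empty")
    return {
        t: 100.0 * sum(1 for iou in best_ious if iou > t) / len(best_ious)
        for t in thresholds
    }


# Made-up per-image IoU values, for illustration only.
sample_ious = [0.10, 0.28, 0.33, 0.45, 0.60]
accuracy = accuracy_at_thresholds(sample_ious)
print(accuracy)
```

Raising the threshold can only keep or lower the accuracy, which matches the left-to-right trend in every row of the table.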

Table 5.

Comparative results on the Jacquard dataset.

| Authors | Algorithm | Accuracy (%), IoU | Accuracy (%), SGT |
|---|---|---|---|
| Depierre et al. [6] | AlexNet | 74.2 | 72.4 |
| Morrison et al. [9] | GG-CNN2 | 84.0 | 85.0 |
| Zhou et al. [14] | FCGN, ResNet-101 | 92.8 | 81.9 |
| Zhang et al. [16] | ROI-GD, ResNet-101 | 93.6 | - |
| Wang [55] | DD-Net, Hourglass | 97.0 | 89.4 |
| Yu et al. [57] | SE-ResUNet | 95.7 | - |
| | GR-ConvNet (b = 8, d = 0.0) | 94.6 | 89.5 |
| Ours | GR-ConvNet v2 (b = 16, d = 0.1) | 95.1 | 91.4 |

Table 6.

Comparative results for grasp prediction accuracy (%) on the Graspnet 1-billion dataset for different validation splits.

| Approach | 5-Fold | Seen | Similar | Novel |
|---|---|---|---|---|
| GGCNN [9] | 82.3 | 83.0 | 79.4 | 76.3 |
| Multi-grasp [13] | 86.0 | 82.7 | 77.8 | 72.7 |
| GR-ConvNet (k = 32, d = 0.0) | 96.1 | 96.2 | 94.8 | 87.9 |
| GR-ConvNet v2 (k = 32, d = 0.1) | 98.7 | 97.9 | 96.0 | 90.5 |

Table 7.

Pick success rate (%) on YCB objects in simulation.

| Approach | Training Dataset | Isolated | Cluttered |
|---|---|---|---|
| GGCNN | Cornell | 79.0 * | 74.5 * |
| GGCNN | Jacquard | 85.5 * | 82.0 * |
| GR-ConvNet v2 | Cornell | 98.0 | 92.0 |
| GR-ConvNet v2 | Jacquard | 97.5 | 96.5 |

Table 8.

Comparative results for grasp success rate (%) in the real world for different scenarios.

| Approach | Training Dataset | Household Isolated | Adversarial Isolated | Household Cluttered | Adversarial Cluttered |
|---|---|---|---|---|---|
| SAE, struct. reg. [1] | Cornell | 89.0 (89/100) | - | - | - |
| Alexnet based CNN [3] | Custom | 66.0 (99/150) | - | 38.4 (50/130) | - |
| Robust Best Grasp [36] | ModelNet in Sim | 80.0 (80/100) | - | - | - |
| Multi-grasp Res-50 [13] | Cornell | 89.0 (89/100) | - | - | - |
| DexNet 2.0 [33] | DexNet in Sim | 80.0 (40/50) | 92.5 (74/80) | - | - |
| GPD [61] | CAD models | - | - | 77.3 (116/138) | - |
| CTR [60] | Custom in OpenRAVE | 97.5 (39/40) | - | 88.8 (66/74) | - |
| GGCNN [9] | Cornell | 91.6 (110/120) | 83.7 (67/80) | 86.4 (83/96) | - |
| GR-ConvNet | Cornell | 95.4 (334/350) | 93.0 (93/100) | 93.5 (187/200) | 91.0 (91/100) |
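
Each cell in Table 8 pairs a percentage with the underlying counts (successful grasps / attempts). As a small worked example, the Python sketch below recomputes the GR-ConvNet row from its counts. The helper name `success_rate` and the rounding to one decimal place are assumptions made for illustration.

```python
def success_rate(successes, attempts):
    """Grasp success rate in percent, rounded to one decimal place."""
    return round(100.0 * successes / attempts, 1)


# Counts taken from the GR-ConvNet row of Table 8.
gr_convnet_counts = {
    "Household Isolated": (334, 350),
    "Adversarial Isolated": (93, 100),
    "Household Cluttered": (187, 200),
    "Adversarial Cluttered": (91, 100),
}

rates = {name: success_rate(s, n) for name, (s, n) in gr_convnet_counts.items()}
print(rates)
```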

Table 9.

GR-ConvNet performance on Ravens-10 benchmark tasks. Task success rate (mean %) vs. number of demonstrations used in training.

| Approach | align-box-corner | | | | assembling-kits | | | | block-insertion | | | | manipulating-rope | | | | packing-boxes | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Demonstrations | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 |
| GR-ConvNet | 62.0 | 91.0 | 100 | 100 | 56.8 | 83.2 | 99.4 | 99.6 | 100 | 100 | 100 | 100 | 25.7 | 87.0 | 99.9 | 100 | 96.1 | 99.9 | 99.9 | 100 |
| Transporter [10] | 35.0 | 85.0 | 97.0 | 98.3 | 28.4 | 78.6 | 90.4 | 94.6 | 100 | 100 | 100 | 100 | 21.9 | 73.2 | 85.4 | 92.1 | 56.8 | 58.3 | 72.1 | 81.3 |
| Form2Fit [62] | 7.0 | 2.0 | 5.0 | 16.0 | 3.4 | 7.6 | 24.2 | 37.6 | 17.0 | 19.0 | 23.0 | 29.0 | 11.9 | 38.8 | 36.7 | 47.7 | 29.9 | 52.5 | 62.3 | 66.8 |

| Approach | palletizing-boxes | | | | place-red-in-green | | | | stack-block-pyramid | | | | sweeping-piles | | | | towers-of-hanoi | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Demonstrations | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 | 1 | 10 | 100 | 1000 |
| GR-ConvNet | 84.2 | 98.2 | 100 | 100 | 92.3 | 100 | 100 | 100 | 23.5 | 79.3 | 94.6 | 97.1 | 98.2 | 99.1 | 99.2 | 98.9 | 98.2 | 99.9 | 99.9 | 99.9 |
| Transporter [10] | 63.2 | 77.4 | 91.7 | 97.9 | 84.5 | 100 | 100 | 100 | 13.3 | 42.6 | 56.2 | 78.2 | 52.4 | 74.4 | 71.5 | 96.1 | 73.1 | 83.9 | 97.3 | 98.1 |
| Form2Fit [62] | 21.6 | 42.0 | 52.1 | 65.3 | 83.4 | 100 | 100 | 100 | 19.7 | 17.5 | 18.5 | 32.5 | 13.2 | 15.6 | 26.7 | 38.4 | 3.6 | 4.4 | 3.7 | 7.0 |