Automatic Extraction of Power Lines from Aerial Images of Unmanned Aerial Vehicles

Abstract

1. Introduction

- (1) A block processing strategy reduces the anchor frame size globally. This increases the proportion of power lines in the feature map and reduces the accuracy loss caused by negative anchor frames being misclassified as positive anchor frames.

- (2) The initially extracted power lines are post-processed with the connected domain group fitting algorithm, which addresses power line breakage and mis-extraction.

- (3) Compared with the traditional Mask RCNN method, the algorithm in this paper improves extraction accuracy, precision, and anti-interference performance; in the method comparison, precision rises from 43.05% to 69.28% and accuracy from 96.81% to 99.15%.

2. Related Work

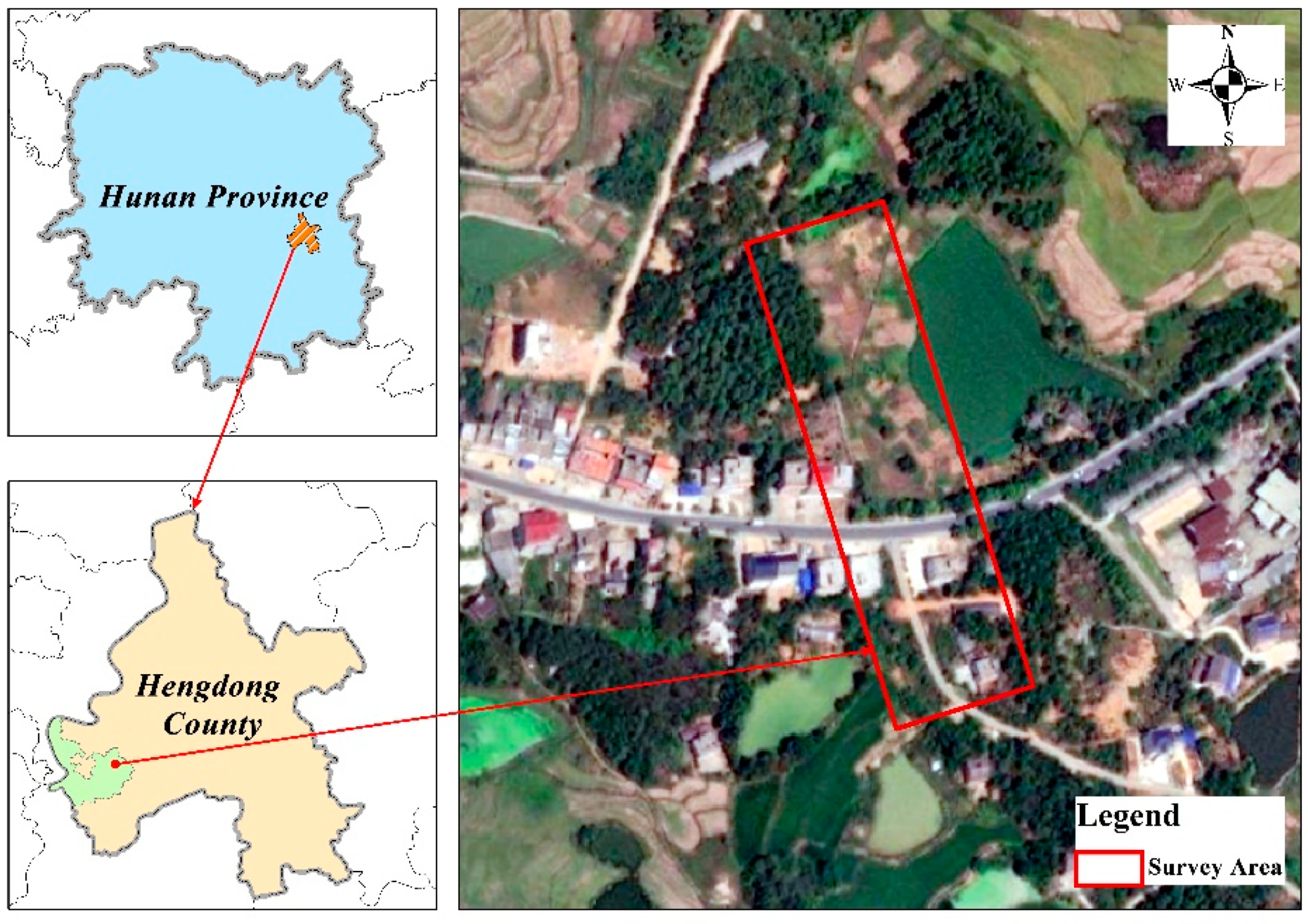

2.1. Data Acquisition

2.2. Operation Equipment

2.3. Dataset Production

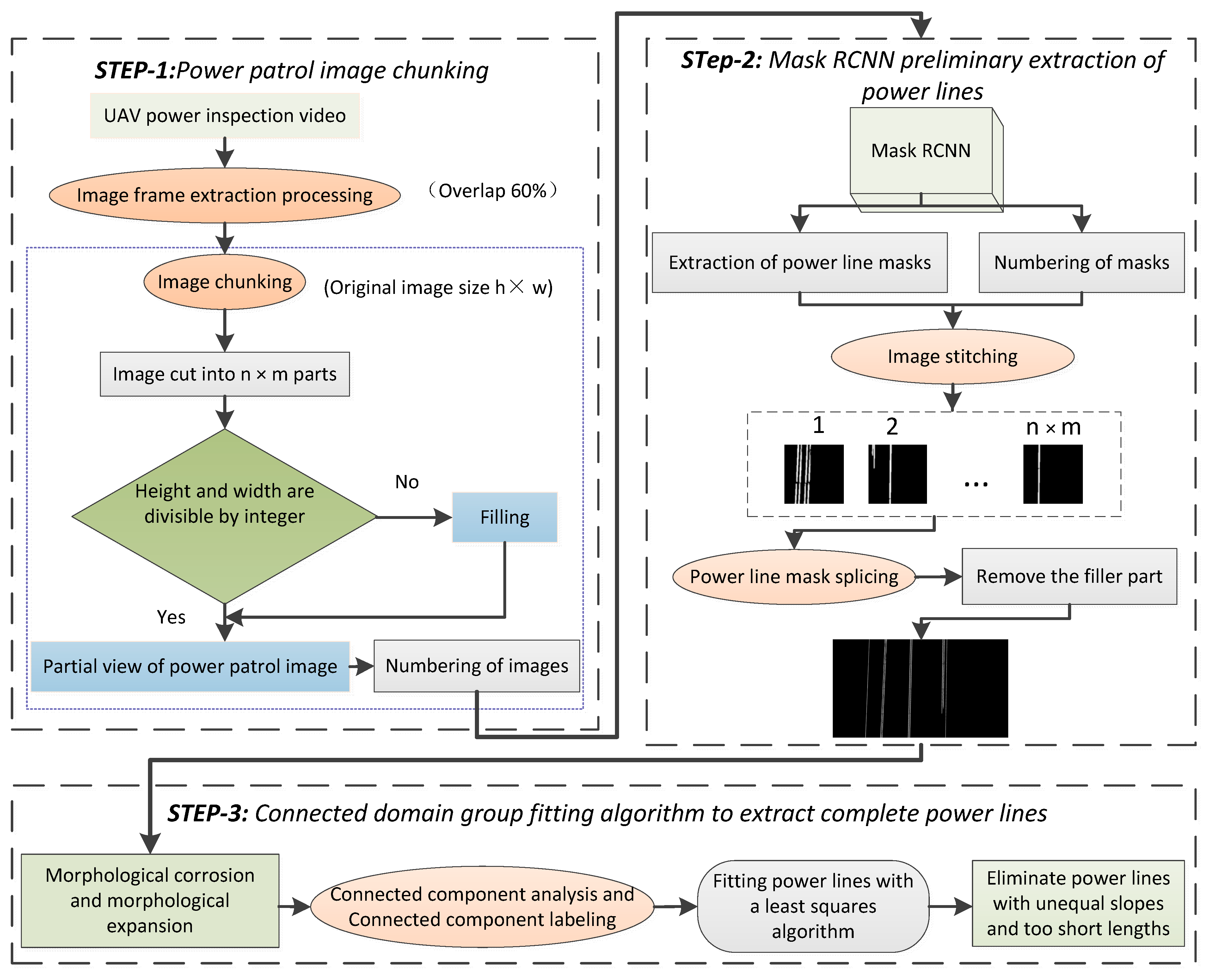

3. Methodology

3.1. Power Patrol Image Chunking
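
The chunking step can be illustrated with a minimal sketch, assuming non-overlapping chunks and an image size that is an exact multiple of the chunk size. The 3840 × 2160 frame and 960 × 540 chunk are taken from the chunk-size comparison table; the function names `split_into_chunks` and `merge_masks` are hypothetical, and the sketch does not cover the anchor frame configuration itself.

```python
import numpy as np

def split_into_chunks(image, chunk_h, chunk_w):
    """Split an H x W (x C) image into non-overlapping chunks.

    Returns a list of (top, left, chunk) tuples. Assumes H and W are exact
    multiples of the chunk size (an assumption of this sketch).
    """
    h, w = image.shape[:2]
    if h % chunk_h or w % chunk_w:
        raise ValueError("image size must be a multiple of the chunk size")
    chunks = []
    for top in range(0, h, chunk_h):
        for left in range(0, w, chunk_w):
            chunks.append((top, left, image[top:top + chunk_h, left:left + chunk_w]))
    return chunks

def merge_masks(chunk_masks, full_h, full_w):
    """Paste per-chunk binary masks back into one full-size mask."""
    full = np.zeros((full_h, full_w), dtype=bool)
    for top, left, mask in chunk_masks:
        full[top:top + mask.shape[0], left:left + mask.shape[1]] = mask
    return full

# One 3840 x 2160 frame stored as height x width x channels.
frame = np.zeros((2160, 3840, 3), dtype=np.uint8)
chunks = split_into_chunks(frame, 540, 960)
print(len(chunks))  # 4 rows x 4 columns = 16 chunks
```

Each chunk would be passed to the segmentation network separately, and `merge_masks` reassembles the per-chunk results at their original offsets.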

3.2. Mask RCNN Preliminary Extraction of Power Line

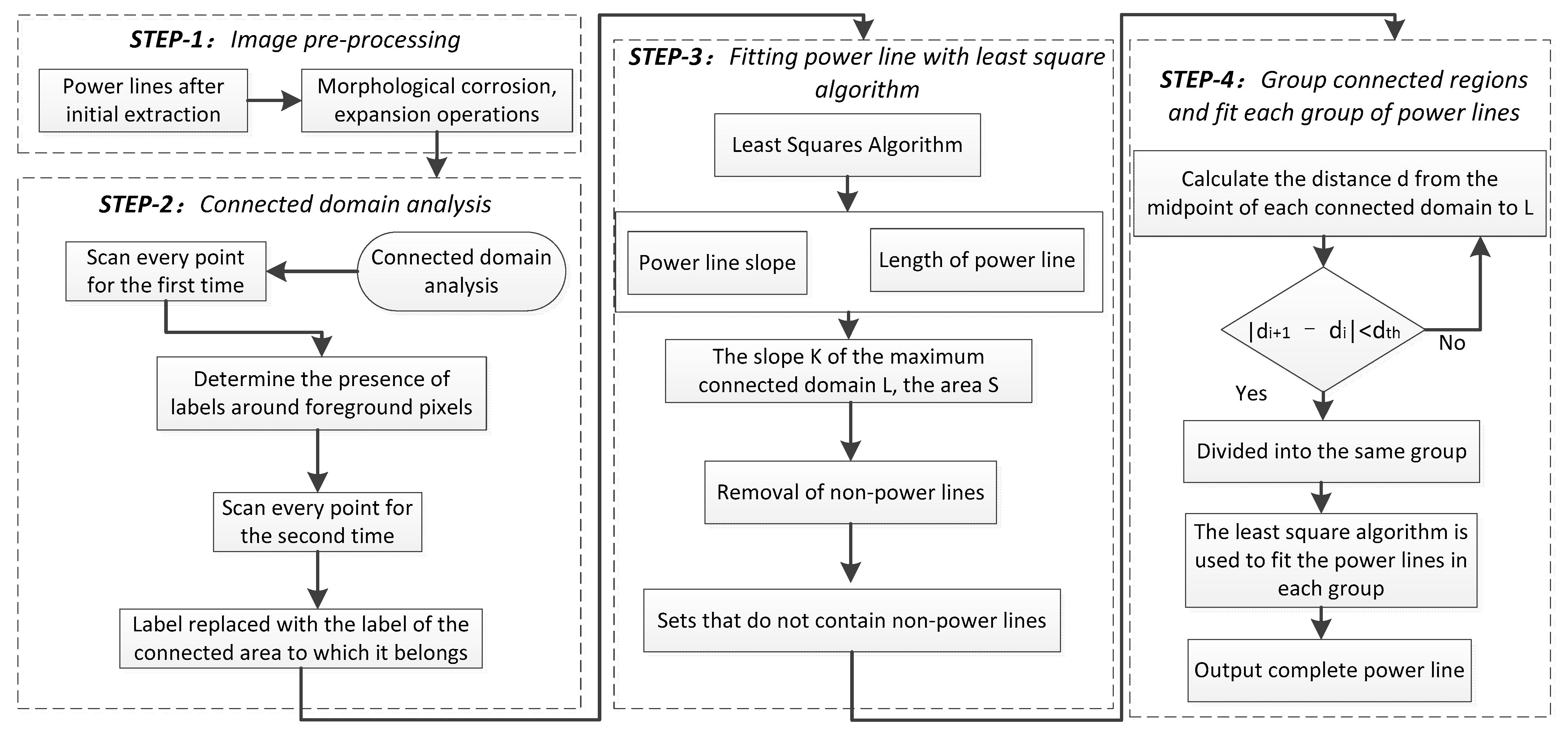

3.3. Connected Domain Group Fitting Algorithm
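
The following is a rough sketch of one way a connected-domain grouping and line-fitting post-process could work, not the authors' implementation. It assumes a binary mask, lines that are closer to horizontal than vertical, and hypothetical thresholds (`slope_tol`, `dist_tol`, `min_pixels`). Connected regions are labelled, a straight line is fitted to each, regions with similar lines are pooled and refitted, small groups are discarded as mis-extractions, and each remaining group is redrawn as one continuous line so that gaps are bridged.

```python
import numpy as np

def label_components(mask):
    """Label 8-connected foreground regions with an iterative flood fill."""
    labels = np.zeros(mask.shape, dtype=int)
    h, w = mask.shape
    count = 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        count += 1
        labels[r, c] = count
        stack = [(r, c)]
        while stack:
            y, x = stack.pop()
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = count
                        stack.append((ny, nx))
    return labels, count

def fit_groups(mask, slope_tol=0.05, dist_tol=3.0, min_pixels=20):
    """Group collinear components, refit one line per group, and redraw it."""
    labels, count = label_components(mask)
    comps = []
    for k in range(1, count + 1):
        ys, xs = np.nonzero(labels == k)
        if np.unique(xs).size >= 2:  # need two distinct x values to fit y = a*x + b
            comps.append((xs, ys))
    comps.sort(key=lambda p: p[0].size, reverse=True)  # largest regions seed the groups

    groups = []  # each group pools pixel coordinates and keeps its current line (a, b)
    for xs, ys in comps:
        a, b = np.polyfit(xs, ys, 1)
        for g in groups:
            ga, gb = g["line"]
            offset = abs(ga * xs.mean() + gb - ys.mean())  # centroid distance along y
            if abs(a - ga) < slope_tol and offset < dist_tol:
                g["xs"] = np.concatenate([g["xs"], xs])
                g["ys"] = np.concatenate([g["ys"], ys])
                g["line"] = tuple(np.polyfit(g["xs"], g["ys"], 1))
                break
        else:
            groups.append({"xs": xs, "ys": ys, "line": (a, b)})

    out = np.zeros_like(mask, dtype=bool)
    for g in groups:
        if g["xs"].size < min_pixels:  # small isolated groups are treated as mis-extractions
            continue
        a, b = g["line"]
        x = np.arange(g["xs"].min(), g["xs"].max() + 1)
        y = np.rint(a * x + b).astype(int)
        ok = (y >= 0) & (y < mask.shape[0])
        out[y[ok], x[ok]] = True  # continuous line bridges the gaps between fragments
    return out

# Toy mask: one horizontal line broken into two fragments, plus a small noise blob.
demo = np.zeros((50, 200), dtype=bool)
demo[20, 10:81] = True
demo[20, 100:181] = True
demo[40, 5] = demo[40, 6] = demo[41, 6] = True
repaired = fit_groups(demo)
print(repaired[20, 81:100].all(), repaired[40:42].any())
```

In the toy mask the two fragments on row 20 are merged into one line and the three-pixel blob is dropped. For steep lines the same idea applies with the roles of x and y swapped.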

4. Experimental Results

4.1. Model Training

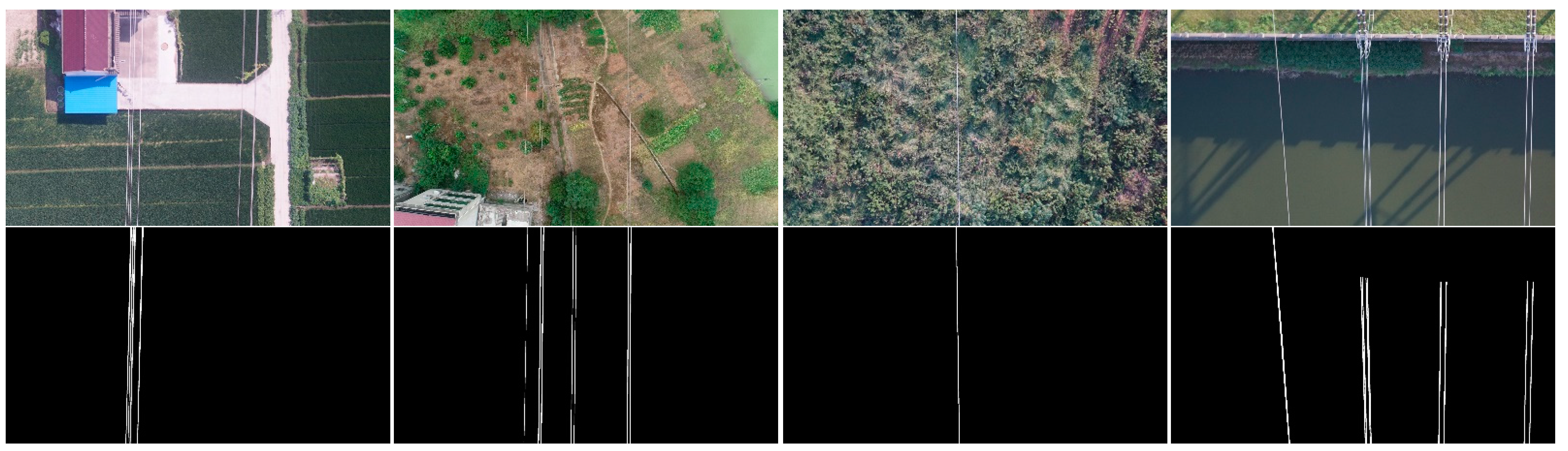

4.2. Experimental Data

4.3. Evaluation Parameters

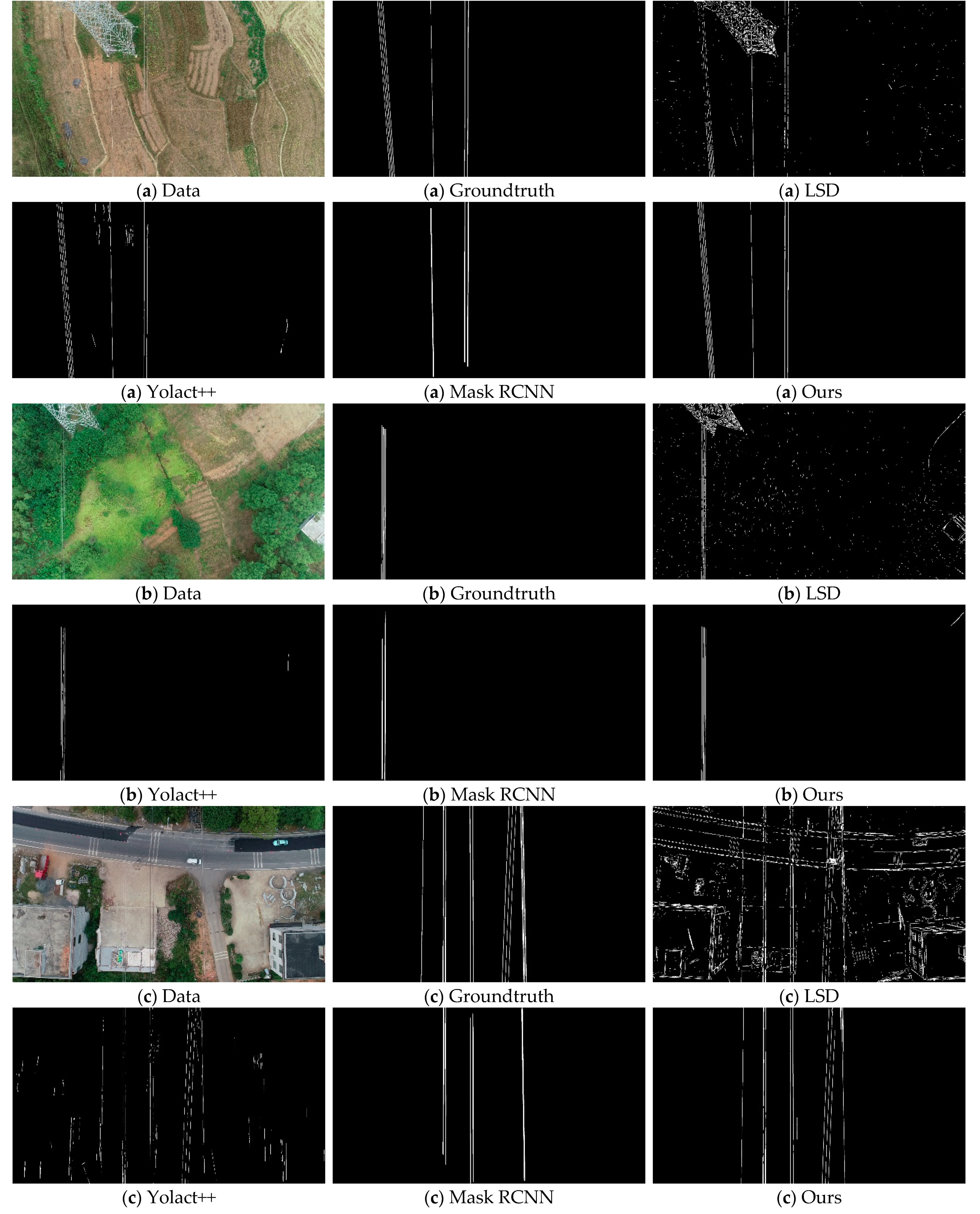

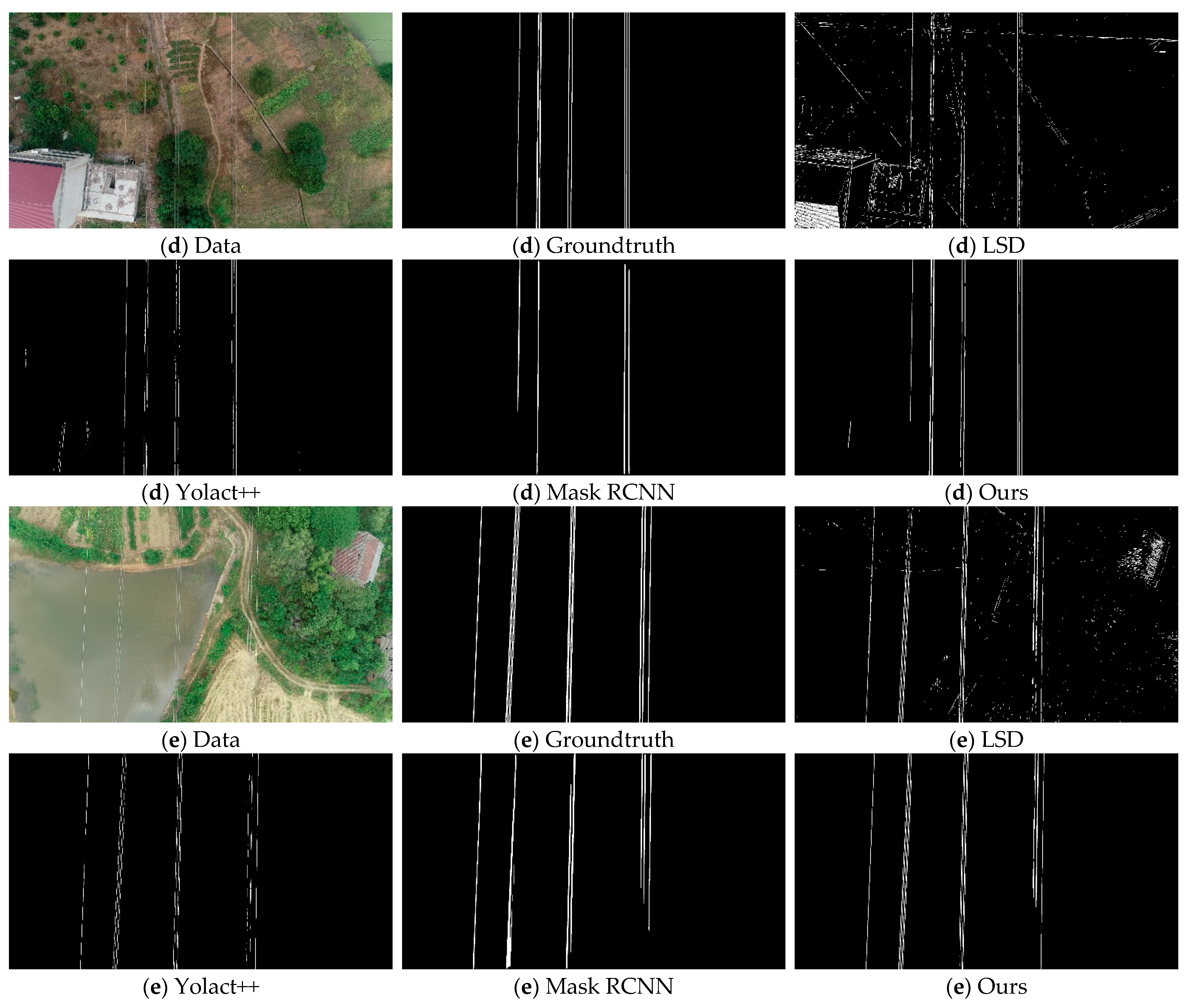

4.4. Comparison with Other Methods

5. Discussions

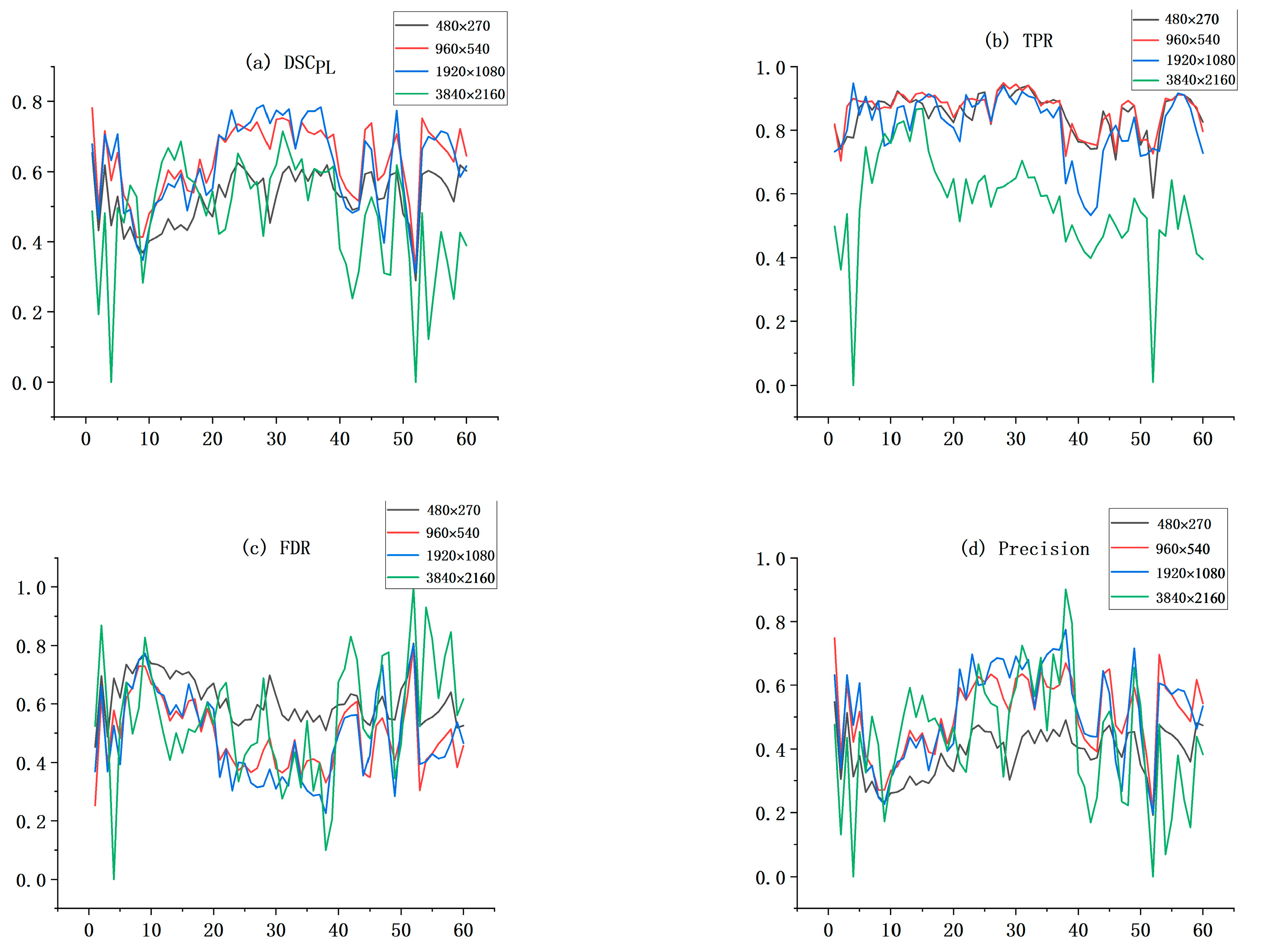

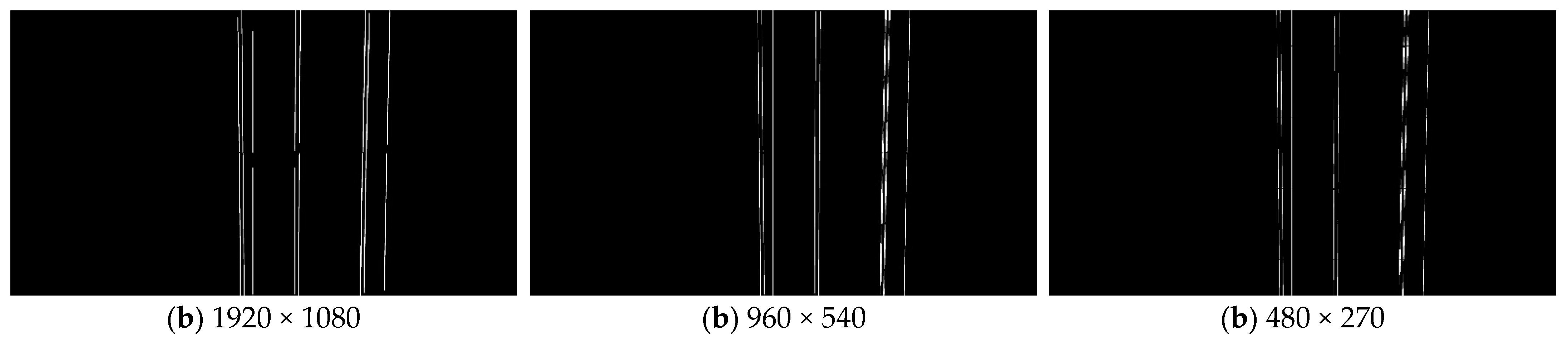

5.1. Effectiveness of Chunking Strategies and the Impact of Chunk Size on Performance

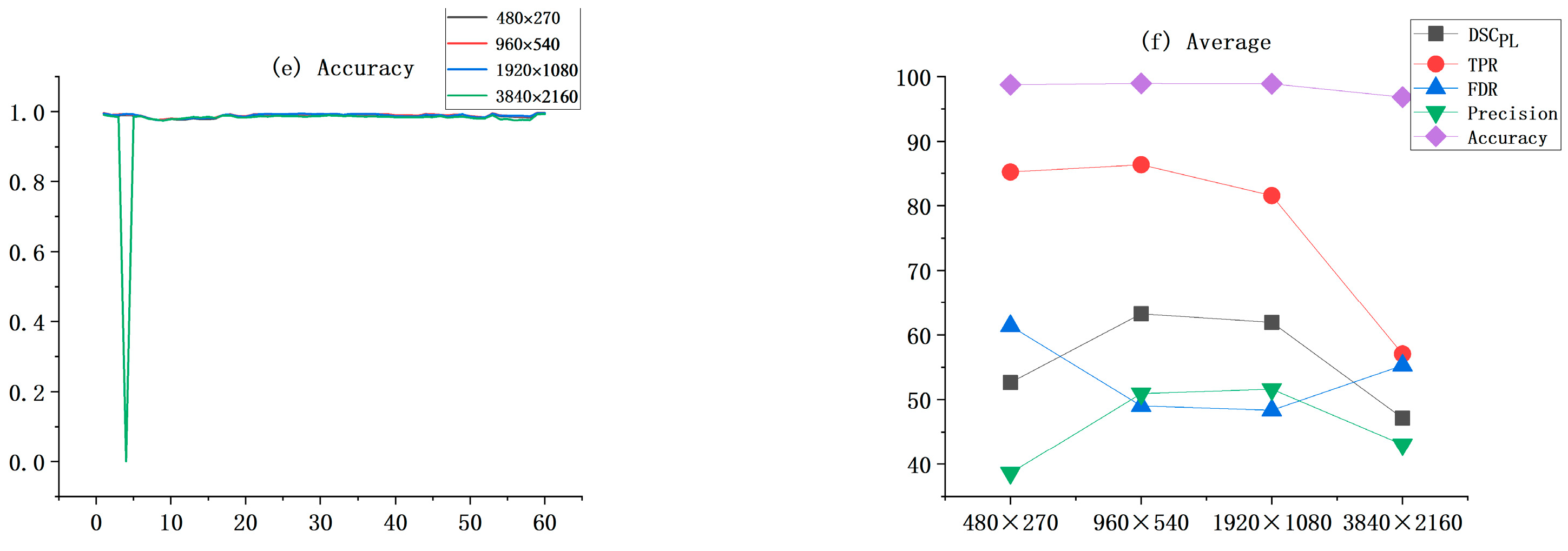

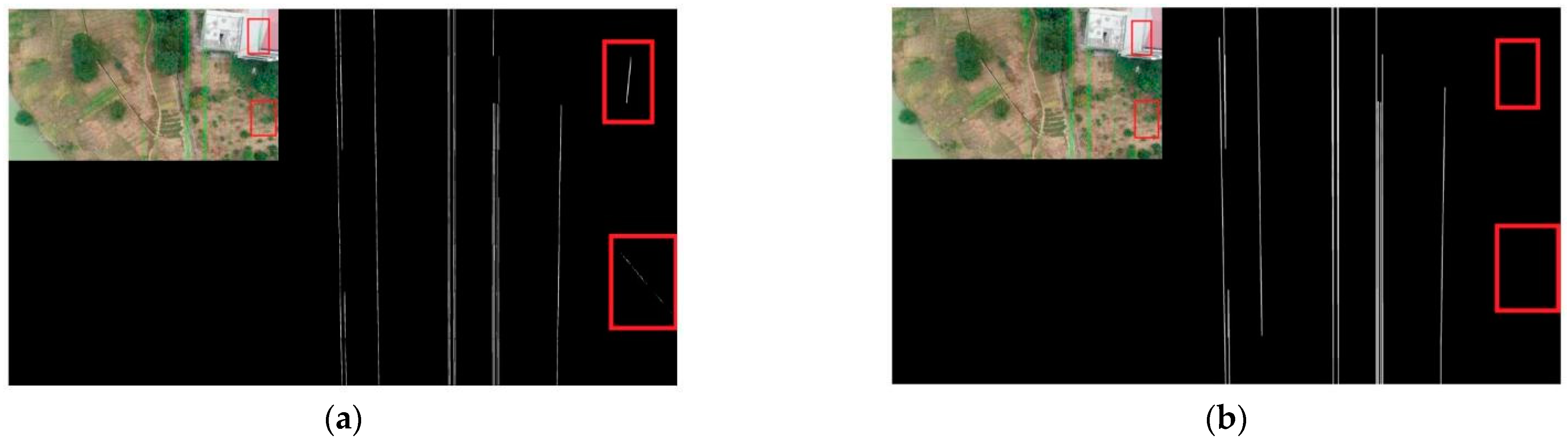

5.2. Performance of the Connected Domain Group Fitting Algorithm

6. Conclusions

- (1) Power lines run through the whole image and are faint targets, which makes them difficult to extract. To address this, the paper adopts a chunking extraction strategy that globally reduces the anchor frame size and increases the proportion of power lines in the feature map. The strategy also reduces the accuracy degradation caused by negative anchor frames being misclassified as positive anchor frames.

- (2) The proposed connected domain group fitting algorithm effectively addresses the breakage and mis-extraction that remain after the initial extraction of power lines.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Camera and GNSS item | Parameters |
|---|---|
| Image sensor | inch CMOS; 20.0 million effective pixels (204.8 million total pixels) |
| Video resolution | H.264, 4K: 3840 × 2160 30p |
| Maximum photo resolution | 4864 × 3648 (4:3) |
| Frequencies used | GPS: L1/L2; GLONASS: L1/L2; BeiDou: B1/B2; Galileo: E1/E5 |
| Positioning accuracy | Vertical: 1.5 cm + 1 ppm (RMS); Horizontal: 1 cm + 1 ppm (RMS) |

| Parameter | Value |
|---|---|
| weight decay | 0.0001 |
| learning rate | 0.001 |
| maximum iteration | 36,000 |
| ims_per_batch | 4 |
| batch_size_per_image | 128 |
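
As a small worked example, the training parameters above can be written as a configuration mapping. The dotted key names follow the Detectron2 configuration layout, which is an assumption: the table only gives the short parameter names and does not name the framework. The derived quantities are simple arithmetic on the table values.

```python
# Values come from the training parameter table; the Detectron2-style key
# names are an assumption made for illustration.
train_config = {
    "SOLVER.WEIGHT_DECAY": 0.0001,
    "SOLVER.BASE_LR": 0.001,
    "SOLVER.MAX_ITER": 36000,
    "SOLVER.IMS_PER_BATCH": 4,
    "MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE": 128,
}

# Total number of image chunks processed over the whole training run.
images_seen = train_config["SOLVER.MAX_ITER"] * train_config["SOLVER.IMS_PER_BATCH"]

# Number of sampled regions of interest per iteration (per-image sample x images per batch).
rois_per_iteration = (
    train_config["MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE"]
    * train_config["SOLVER.IMS_PER_BATCH"]
)

print(images_seen, rois_per_iteration)  # 144000 512
```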

| Method | DSCPL (%) | TPR (%) | FDR (%) | Precision (%) | Accuracy (%) |
|---|---|---|---|---|---|
| LSD | 49.74 | 52.90 | 49.26 | 50.74 | 98.20 |
| YOLACT++ | 48.56 | 82.10 | 64.79 | 35.21 | 98.66 |
| Mask RCNN | 47.16 | 57.05 | 55.28 | 43.05 | 96.81 |
| Ours | 73.95 | 81.75 | 30.72 | 69.28 | 99.15 |
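
The column headers above correspond to common pixel-level segmentation metrics. The sketch below uses the standard textbook definitions, which are assumed here because the paper's own formulas are not reproduced in this text; in particular, reading DSCPL as the Dice similarity coefficient of the power line class is an assumption, and the paper may average values per image rather than pooling pixels. The helper names `pixel_metrics` and `_ratio` are hypothetical.

```python
import numpy as np

def _ratio(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0

def pixel_metrics(pred, truth):
    """Compute pixel-level metrics for a predicted and a ground-truth binary mask."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # power line pixels correctly extracted
    fp = int(np.sum(pred & ~truth))   # background pixels extracted as power line
    fn = int(np.sum(~pred & truth))   # power line pixels that were missed
    tn = int(np.sum(~pred & ~truth))  # background pixels correctly rejected
    return {
        "DSC": _ratio(2 * tp, 2 * tp + fp + fn),
        "TPR": _ratio(tp, tp + fn),
        "FDR": _ratio(fp, tp + fp),
        "Precision": _ratio(tp, tp + fp),
        "Accuracy": _ratio(tp + tn, tp + fp + fn + tn),
    }

# Toy example: a 4-pixel line, of which 3 pixels are found, plus 1 false detection.
truth = np.zeros((4, 4), dtype=bool)
truth[1, :] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1, :3] = True
pred[2, 0] = True
metrics = pixel_metrics(pred, truth)
print(metrics)
```

Under these definitions FDR and Precision always sum to 1. Accuracy counts background pixels as well, so it stays high whenever the background dominates the image, which makes DSC, TPR, and Precision the more informative columns for thin targets.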

| Chunk Size | DSCPL (%) | TPR (%) | FDR (%) | Precision (%) | Accuracy (%) |
|---|---|---|---|---|---|
| 480 × 270 | 52.63 | 85.19 | 61.38 | 38.62 | 98.74 |
| 960 × 540 | 63.26 | 86.31 | 49.05 | 50.95 | 98.94 |
| 1920 × 1080 | 61.92 | 81.56 | 48.37 | 51.63 | 98.88 |
| 3840 × 2160 | 47.16 | 57.05 | 55.28 | 43.05 | 96.81 |

| Method | DSCPL (%) | TPR (%) | FDR (%) | Precision (%) | Accuracy (%) |
|---|---|---|---|---|---|
| Mask RCNN | 63.26 | 86.31 | 49.05 | 50.95 | 98.94 |
| Mask RCNN + CDGFA | 73.95 | 81.75 | 30.72 | 69.28 | 99.15 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, J.; Qian, J.; Li, Y.; Liu, Z.; Chen, Y.; Chen, J. Automatic Extraction of Power Lines from Aerial Images of Unmanned Aerial Vehicles. Sensors 2022, 22, 6431. https://doi.org/10.3390/s22176431
