Images Enhancement of Ancient Mural Painting of Bey’s Palace Constantine, Algeria and Lacuna Extraction Using Mahalanobis Distance Classification Approach

Abstract

1. Introduction

- In the literature, most studies have focused on detecting and removing cracks from old paintings, whereas lacuna extraction has received far less attention and remains underexplored. Furthermore, most recent studies apply deep learning to mural protection and restoration, but deep learning requires large amounts of data and computational resources, which are not always available in heritage institutions;

- The proposed Mahalanobis Distance classification approach is simple and robust. It does not require a large dataset, and it reduces both the computational complexity and the time-consuming manual classification and feature extraction of lacuna regions in the ancient mural paintings of Bey’s Palace. Automatic extraction of large degraded portions of these murals is a very challenging task. This is the first study to use image processing techniques to automatically extract and map deterioration regions of various colors, sizes, and shapes in Algerian mural paintings, and to enhance the quality and visibility of the murals in terms of brightness and illumination using Dark Channel Prior (DCP).

2. Related Works

3. Materials and Methods

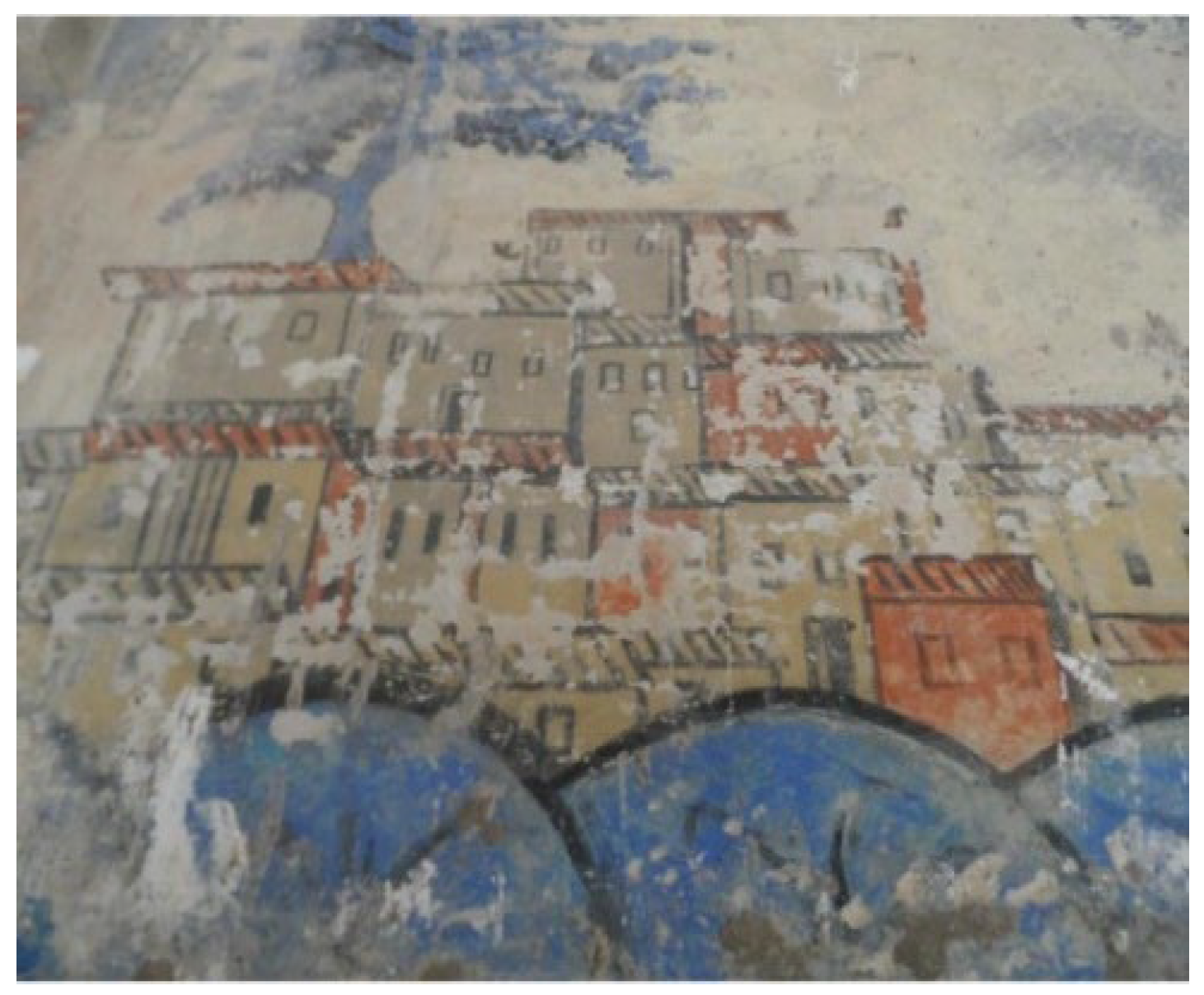

3.1. The Case Study

3.2. Methods

- (1) Data collection and preprocessing using haze removal techniques [27] and Dark Channel Prior, which involves estimating the atmospheric light and the transmission, soft matting, and scene radiance recovery;

- (2) We first determined the training samples by visually observing the difference between the original colors of the mural painting and the colors of the degraded areas, and by manually examining the pixel intensity values of damaged regions. Pixels were then grouped by measuring the closeness of each image pixel to each of the samples selected from the image: every pixel in the RGB image was assigned to its closest sample based on Mahalanobis Distance, and that measure was used to determine the class of non-classified regions. Finally, we evaluated the obtained results. The details of each step are discussed in the following subsections.
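The classification step described above can be illustrated with a minimal sketch. It assumes each class is summarized by the mean vector and covariance matrix of its RGB training pixels, and that a pixel is assigned to the class with the smallest Mahalanobis distance. The function names (`class_statistics`, `mahalanobis_classify`) and the use of NumPy are illustrative choices, not the authors' implementation.

```python
import numpy as np


def class_statistics(samples):
    """Return the mean vector and inverse covariance of (N, 3) RGB training pixels."""
    samples = np.asarray(samples, dtype=np.float64)
    mean = samples.mean(axis=0)
    cov = np.cov(samples, rowvar=False)
    # The pseudo-inverse tolerates a near-singular covariance matrix.
    return mean, np.linalg.pinv(cov)


def mahalanobis_classify(image, class_samples):
    """Assign every pixel of an (H, W, 3) image to its closest class.

    class_samples is a list with one (N, 3) array of training pixels per class.
    Returns an (H, W) array of class indices.
    """
    image = np.asarray(image, dtype=np.float64)
    pixels = image.reshape(-1, 3)
    distances = []
    for samples in class_samples:
        mean, inv_cov = class_statistics(samples)
        diff = pixels - mean
        # Squared Mahalanobis distance (x - m)^T S^-1 (x - m) for each pixel.
        distances.append(np.einsum("ij,jk,ik->i", diff, inv_cov, diff))
    labels = np.argmin(np.stack(distances, axis=0), axis=0)
    return labels.reshape(image.shape[:2])
```

As a usage illustration, synthetic training pixels can be drawn around the class means reported for Mural one (Class 01: 158.31, 118.02, 112.58; Class 02: 201.16, 208.94, 209.24). The spread of 10 intensity levels is an assumption made only for this example:

```python
rng = np.random.default_rng(0)
class_01 = rng.normal([158.31, 118.02, 112.58], 10.0, size=(500, 3))
class_02 = rng.normal([201.16, 208.94, 209.24], 10.0, size=(500, 3))
image = rng.integers(0, 256, size=(4, 4, 3))
labels = mahalanobis_classify(image, [class_01, class_02])  # 0 = Class 01, 1 = Class 02
```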

3.3. Data Collection and Preprocessing

3.4. Low-Light Image Enhancement

3.4.1. Dark Channel Prior

3.4.2. Estimating the Atmospheric Light and the Transmission

3.4.3. Soft Matting and Scene Radiance Recovering
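The enhancement steps named in Section 3.4 (dark channel, atmospheric light, transmission, and scene radiance recovery) can be sketched as follows. This is a simplified illustration in the spirit of the Dark Channel Prior method of He et al., not the authors' code. It assumes a floating-point RGB image scaled to [0, 1], omits soft matting, and averages the brightest dark-channel pixels to estimate the atmospheric light. The parameter values (`patch=15`, `omega=0.95`, `t_min=0.1`, `top_fraction=0.001`) are common defaults assumed here, and any additional handling specific to low-light images is not stated in the text and is left out.

```python
import numpy as np


def dark_channel(image, patch=15):
    """Minimum over the colour channels and a patch x patch neighbourhood."""
    min_rgb = image.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    h, w = min_rgb.shape
    dark = np.full((h, w), np.inf)
    # Sliding-window minimum built from shifted views of the padded image.
    for dy in range(patch):
        for dx in range(patch):
            dark = np.minimum(dark, padded[dy:dy + h, dx:dx + w])
    return dark


def estimate_atmospheric_light(image, dark, top_fraction=0.001):
    """Mean colour of the pixels with the largest dark-channel values."""
    n = max(1, int(dark.size * top_fraction))
    idx = np.argsort(dark.ravel())[-n:]
    return image.reshape(-1, 3)[idx].mean(axis=0)


def estimate_transmission(image, atmosphere, omega=0.95, patch=15):
    """Transmission map t = 1 - omega * dark_channel(I / A)."""
    normalized = image / np.maximum(atmosphere, 1e-6)
    return 1.0 - omega * dark_channel(normalized, patch)


def recover_radiance(image, atmosphere, transmission, t_min=0.1):
    """Scene radiance J = (I - A) / max(t, t_min) + A, clipped to [0, 1]."""
    t = np.maximum(transmission, t_min)[..., None]
    return np.clip((image - atmosphere) / t + atmosphere, 0.0, 1.0)


def dehaze(image, patch=15):
    """Run the four steps on an (H, W, 3) float image in [0, 1]."""
    image = np.asarray(image, dtype=np.float64)
    dark = dark_channel(image, patch)
    atmosphere = estimate_atmospheric_light(image, dark)
    transmission = estimate_transmission(image, atmosphere, patch=patch)
    return recover_radiance(image, atmosphere, transmission)
```

The lower bound `t_min` keeps the division stable where the estimated transmission approaches zero. A refinement step such as soft matting would normally be applied to the transmission map before recovery.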

3.5. Classification and Training Samples Selection

3.5.1. Lacuna’s Extraction Based on Discriminant Analysis

3.5.2. Mahalanobis Distance

3.6. Accuracy Evaluation

4. Results and Discussion

4.1. Image Enhancement

4.2. Lacuna Extraction Based on Mahalanobis Distance

Method Evaluation on Ancient Mural Paintings of Mogao Caves

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Colta, I.P. The conservation of a painting. case study: Cornel minişan, “landscape from caransebeş” (oil on canvas, 20th century). Procedia Chem. 2013, 8, 72–77.

- Sun, M.; Zhang, D.; Wang, Z.; Ren, J.; Chai, B.; Sun, J. What’s wrong with the murals at the Mogao Grottoes: A near-infrared hyperspectral imaging method. Sci. Rep. 2015, 5, 14371.

- Mol, V.R.; Maheswari, P.U. The digital reconstruction of degraded ancient temple murals using dynamic mask generation and an extended exemplar-based region-filling algorithm. Herit. Sci. 2021, 9, 137.

- Borg, B.; Dunn, M.; Ang, A.; Villis, C.J. The application of state-of-the-art technologies to support artwork conservation: Literature review. J. Cult. Herit. 2020, 44, 239–259.

- Daffara, C.; Parisotto, S.; Mariotti, P.I.; Ambrosini, D. Dual mode imaging in mid infrared with thermal signal reconstruction for innovative diagnostics of the “Monocromo” by Leonardo da Vinci. Sci. Rep. 2021, 11, 22482.

- Otero, J.J. Heritage Conservation Future: Where We Stand, Challenges Ahead, and a Paradigm Shift. Glob. Chall. 2022, 6, 2100084.

- Li, F.-F.; Fergus, R.; Perona, P. Learning generative visual models from few training examples: An incremental bayesian approach tested on 101 object categories. In Proceedings of the 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; p. 178.

- Griffin, G.; Holub, A.; Perona, P. Caltech-256 Object Category Dataset. In Technical Report 7694; California Institute of Technology: Pasadena, CA, USA, 2007.

- Huang, R.; Zhang, G.; Chen, J. Semi-supervised discriminant Isomap with application to visualization, image retrieval and classification. Int. J. Mach. Learn. Cybern. 2019, 10, 1269–1278.

- Santosh, K.C.; Roy, P.P. Arrow detection in biomedical images using sequential classifier. Int. J. Mach. Learn. Cybern. 2018, 9, 993–1006.

- Abry, P.; Klein, A.G.; Sethares, W.A.; Johnson, C.R. Signal Processing for Art Investigation [From the Guest Editors]. IEEE Signal Process. Mag. 2015, 32, 14–16.

- Johnson, C.R.; Hendriks, E.; Berezhnoy, I.J.; Brevdo, E.; Hughes, S.M.; Daubechies, I.; Li, J.; Postma, E.; Wang, J.Z. Image processing for artist identification. IEEE Signal Process. Mag. 2008, 25, 37–48.

- Platiša, L.; Cornelis, B.; Ružić, T.; Pižurica, A.; Dooms, A.; Martens, M.; De Mey, M.; Daubechies, I. Spatiogram features to characterize pearls in paintings. In Proceedings of the 2011 18th IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 801–804.

- Anwar, H.; Zambanini, S.; Kampel, M.; Vondrovec, K. Ancient coin classification using reverse motif recognition: Image-based classification of roman republican coins. IEEE Signal Process. Mag. 2015, 32, 64–74.

- Giakoumis, I.; Nikolaidis, N.; Pitas, I. Digital image processing techniques for the detection and removal of cracks in digitized paintings. IEEE Trans. Image Process. 2005, 15, 178–188.

- Kaur, S.; Kaur, A. Restoration of historical wall paintings using improved nearest neighbour algorithm. Int. J. Eng. Comput. Sci. 2014, 3, 9581–9586.

- Picard, D.; Gosselin, P.-H.; Gaspard, M.-C. Challenges in content-based image indexing of cultural heritage collections. IEEE Signal Process. Mag. 2015, 32, 95–102.

- Nasri, A.; Huang, X. A missing color area extraction approach from high-resolution statue images for cultural heritage documentation. Sci. Rep. 2020, 10, 21939.

- Pei, S.-C.; Zeng, Y.-C.; Chang, C.-H. Virtual restoration of ancient Chinese paintings using color contrast enhancement and lacuna texture synthesis. IEEE Trans. Image Process. 2004, 13, 416–429.

- Liu, J.; Lu, D. Knowledge based lacunas detection and segmentation for ancient paintings. In Proceedings of the International Conference on Virtual Systems and Multimedia, Brisbane, Australia, 23–26 September 2007; pp. 121–131.

- Cornelis, B.; Ružić, T.; Gezels, E.; Dooms, A.; Pižurica, A.; Platiša, L.; Cornelis, J.; Martens, M.; De Mey, M.; Daubechies, I. Crack detection and inpainting for virtual restoration of paintings: The case of the Ghent Altarpiece. Signal Process. 2013, 93, 605–619.

- Karianakis, N.; Maragos, P. An integrated system for digital restoration of prehistoric theran wall paintings. In Proceedings of the 2013 18th International Conference on Digital Signal Processing (DSP), Fira, Greece, 1–3 July 2013; pp. 1–6.

- Sinduja, R. Watermarking Based on the Classification of Cracks in Paintings. In Proceedings of the 2009 International Conference on Advanced Computer Control, Singapore, 22–24 January 2009; pp. 594–598.

- Huang, S.; Cornelis, B.; Devolder, B.; Martens, M.; Pizurica, A. Multimodal target detection by sparse coding: Application to paint loss detection in paintings. IEEE Trans. Image Process. 2020, 29, 7681–7696.

- Cao, J.; Jia, Y.; Chen, H.; Yan, M.; Chen, Z. Ancient mural classification methods based on a multichannel separable network. Herit. Sci. 2021, 9, 1–17.

- Masilamani, G.K.; Valli, R. Art Classification with Pytorch Using Transfer Learning. In Proceedings of the 2021 International Conference on System, Computation, Automation and Networking (ICSCAN), Puducherry, India, 30–31 July 2021; pp. 1–5.

- Sanda Mahama, A.T.; Dossa, A.S.; Gouton, P. Choice of distance metrics for RGB color image analysis. Electron. Imaging 2016, 2016, 1–4.

- Dong, X.; Wang, G.; Pang, Y.; Li, W.; Wen, J.; Meng, W.; Lu, Y. Fast efficient algorithm for enhancement of low lighting video. In Proceedings of the 2011 IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011; pp. 1–6.

- Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1780–1789.

- Sandoub, G.; Atta, R.; Ali, H.A.; Abdel-Kader, R.F. A low-light image enhancement method based on bright channel prior and maximum colour channel. Inst. Eng. Technol. 2021, 15, 1759–1772.

- Ranota, H.K.; Kaur, P. Review and analysis of image enhancement techniques. J. Inf. Comput. Technol. 2014, 4, 583–590.

- Chavez, P.S., Jr. An improved dark-object subtraction technique for atmospheric scattering correction of multispectral data. Remote Sens. Environ. 1988, 24, 459–479.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

- Baxi, A.; Pandya, M.; Kalubarme, M.; Potdar, M. Supervised classification of satellite imagery using Enhanced seeded region growing technique. In Proceedings of the 2011 Nirma University International Conference on Engineering, Ahmedabad, India, 8–10 December 2011; pp. 1–4.

- Al-Otum, H.M. Morphological operators for color image processing based on Mahalanobis distance measure. Opt. Eng. 2003, 42, 2595–2606.

- Khongkraphan, K. An efficient color edge detection using the mahalanobis distance. J. Inf. Process. Syst. 2014, 10, 589–601.

- Mahalanobis, P.C. On the Generalized Distance in Statistics. Proc. Natl. Inst. Sci. 1936, 2, 49–55.

- Wuhan Dashi Smart Technology Company. Available online: https://www.daspatial.com/ (accessed on 20 July 2022).

| Image | Classes | Number of Registered Pixels | Red Mean | Green Mean | Blue Mean |
|---|---|---|---|---|---|
| Mural one | Class 01 | 86,240 | 158.31 | 118.02 | 112.58 |
| | Class 02 | 4112 | 201.16 | 208.94 | 209.24 |
| Mural two | Class 01 | 124,788 | 150.30 | 59.81 | 44.08 |
| | Class 02 | 28,002 | 245.58 | 236.36 | 214.84 |
| Mural three | Class 01 | 115,604 | 166.97 | 135.04 | 113.84 |
| | Class 02 | 4358 | 226.96 | 214.30 | 205.86 |
| Mural four | Class 01 | 55,869 | 165.42 | 108.41 | 96.95 |
| | Class 02 | 4937 | 223.17 | 211.20 | 189.72 |
| Mural five | Class 01 | 26,538 | 146.69 | 94.61 | 87.84 |
| | Class 02 | 3479 | 229.46 | 221.52 | 202.06 |
| Mural six | Class 01 | 65,723 | 141.31 | 129.68 | 127.86 |
| | Class 02 | 2730 | 228.75 | 219.51 | 203.16 |

| Image | Classes | Number of Registered Pixels | Total Number of Registered Pixels | Number of Extracted Pixels | % Correct | Misclassification Rate (% Error) |
|---|---|---|---|---|---|---|
| Mural one | Class 01 | 86,240 | 90,352 | 182,828 | 97.88 | 2.12 |
| | Class 02 | 4112 | | 8717 | | |
| Mural two | Class 01 | 124,788 | 152,790 | 41,180 | 99.67 | 0.33 |
| | Class 02 | 28,002 | | 9240 | | |
| Mural three | Class 01 | 115,604 | 119,962 | 895,931 | 92.25 | 7.75 |
| | Class 02 | 4358 | | 33,774 | | |
| Mural four | Class 01 | 55,869 | 60,806 | 507,849 | 90.91 | 9.09 |
| | Class 02 | 4937 | | 44,877 | | |
| Mural five | Class 01 | 26,538 | 30,017 | 148,082 | 94.42 | 5.58 |
| | Class 02 | 3479 | | 19,412 | | |
| Mural six | Class 01 | 65,723 | 68,453 | 599,393 | 90.88 | 9.12 |
| | Class 02 | 2730 | | 24,897 | | |

| Image | Classes | Number of Registered Pixels | Total Number of Registered Pixels | Number of Extracted Pixels | % Correct | Misclassification Rate (% Error) |
|---|---|---|---|---|---|---|
| Mural one | Class 01 | 787,347 | 818,038 | 4,007,596 | 94.91 | 5.09 |
| | Class 02 | 30,691 | | 156,217 | | |
| Mural two | Class 01 | 639,917 | 654,041 | 10,353,857 | 83.82 | 16.18 |
| | Class 02 | 14,124 | | 228,526 | | |
| Mural three | Class 01 | 318,799 | 342,280 | 321,986 | 98.99 | 1.01 |
| | Class 02 | 23,481 | | 23,715 | | |
| Mural four | Class 01 | 314,031 | 333,978 | 813,340 | 97.41 | 2.59 |
| | Class 02 | 19,947 | | 51,662 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nasri, A.; Huang, X. Images Enhancement of Ancient Mural Painting of Bey’s Palace Constantine, Algeria and Lacuna Extraction Using Mahalanobis Distance Classification Approach. Sensors 2022, 22, 6643. https://doi.org/10.3390/s22176643
