Multi-scale Fusion of Stretched Infrared and Visible Images

Abstract

1. Introduction

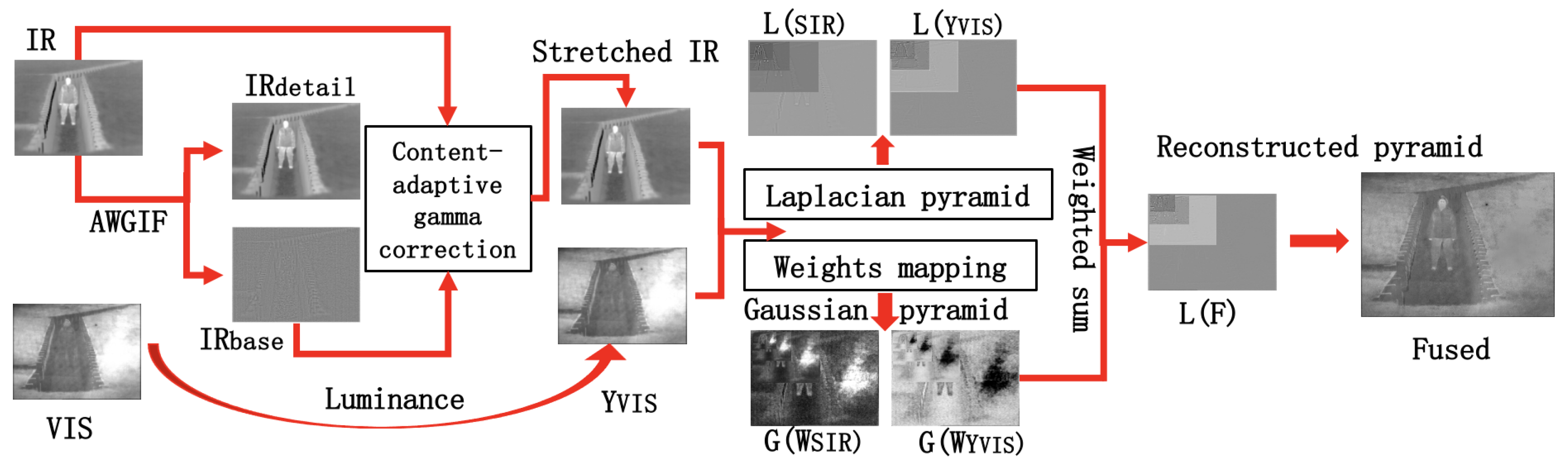

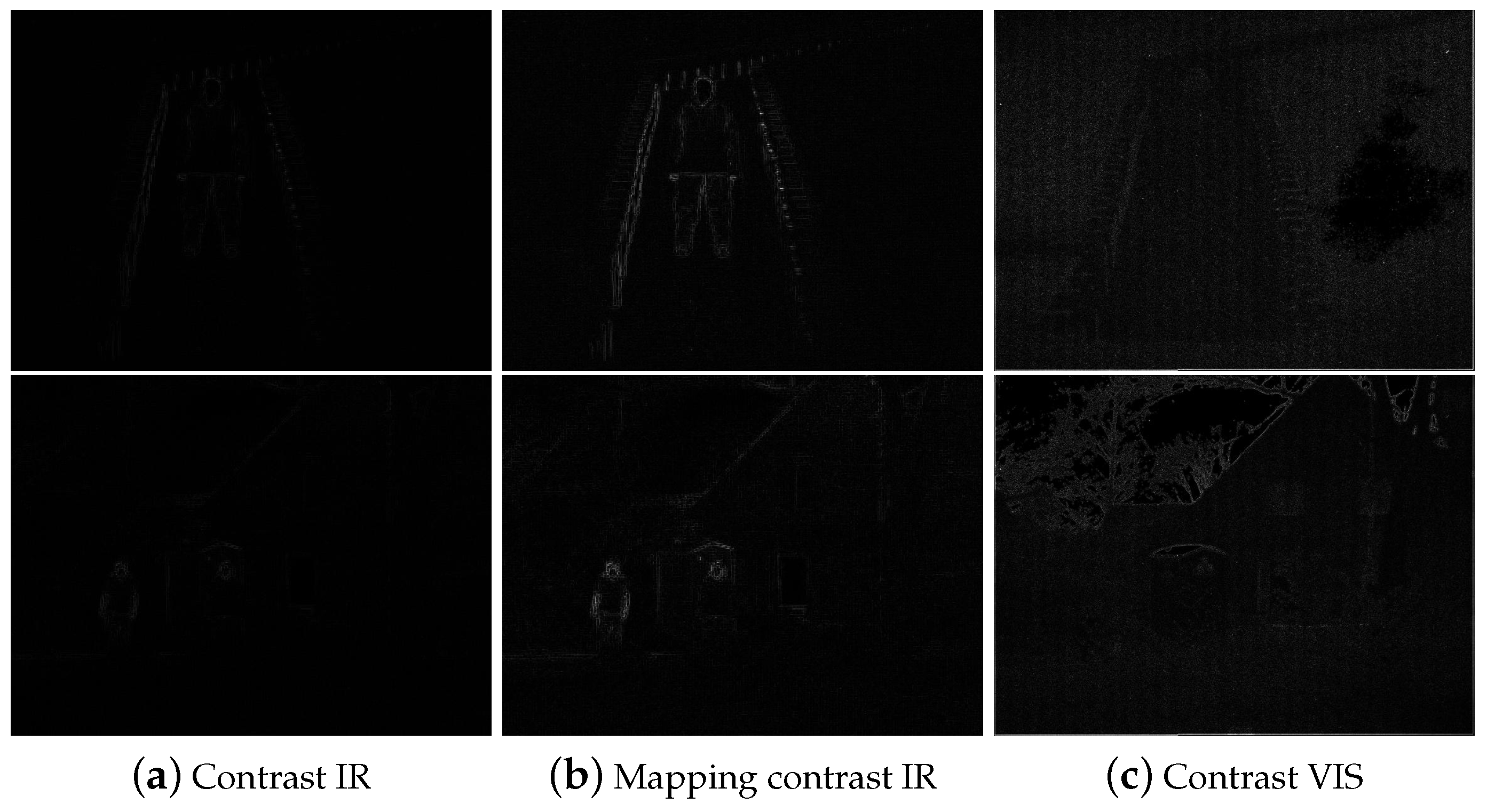

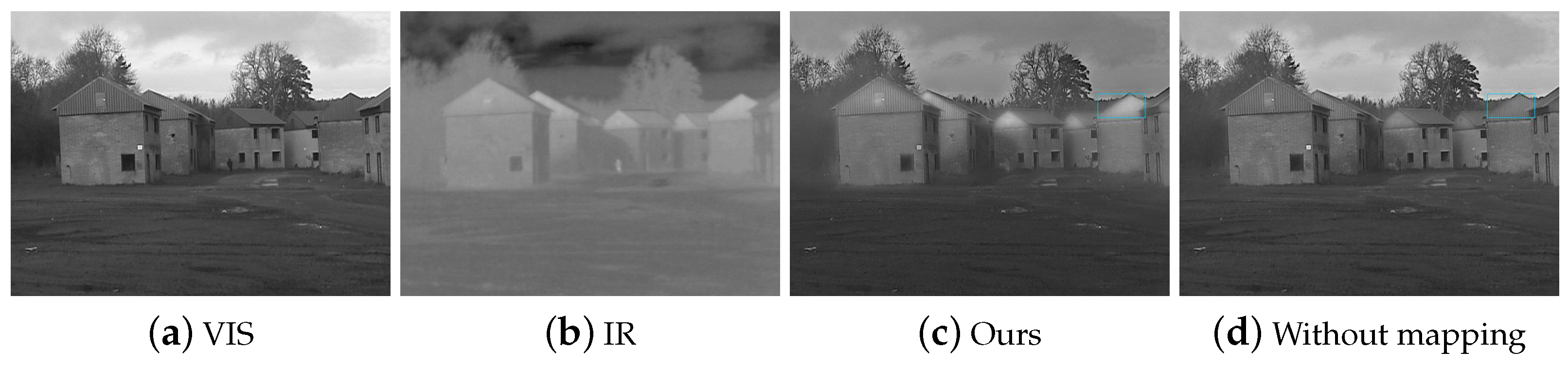

2. Edge-Preserving Stretch of the IR Images

3. Multi-Scale Fusion of the IR and VIS Images

3.1. Weights of the Stretched IR and VIS Images

3.2. Fusion of the Stretched IR and VIS Images

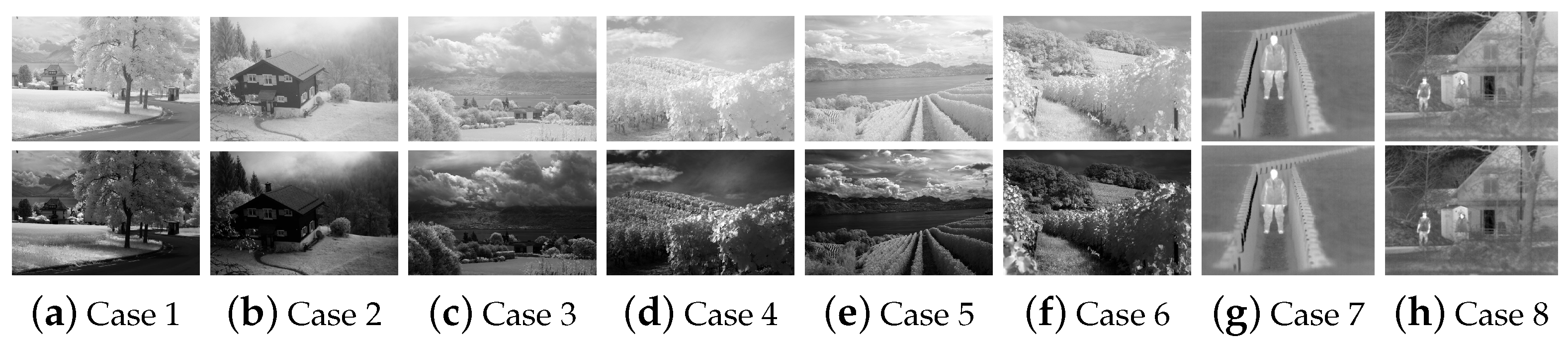

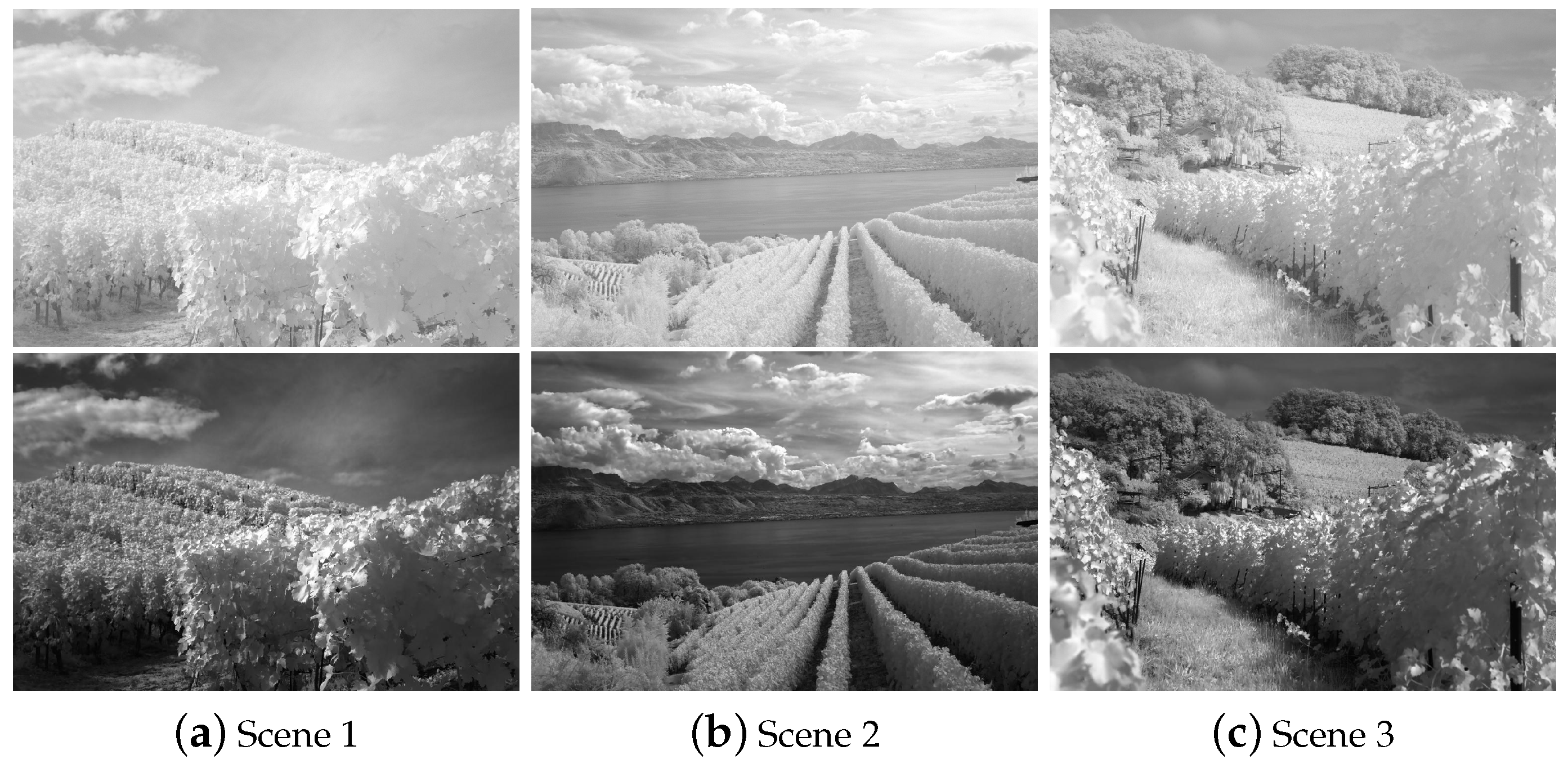

4. Experimental Results

4.1. Performance Evaluation Indices

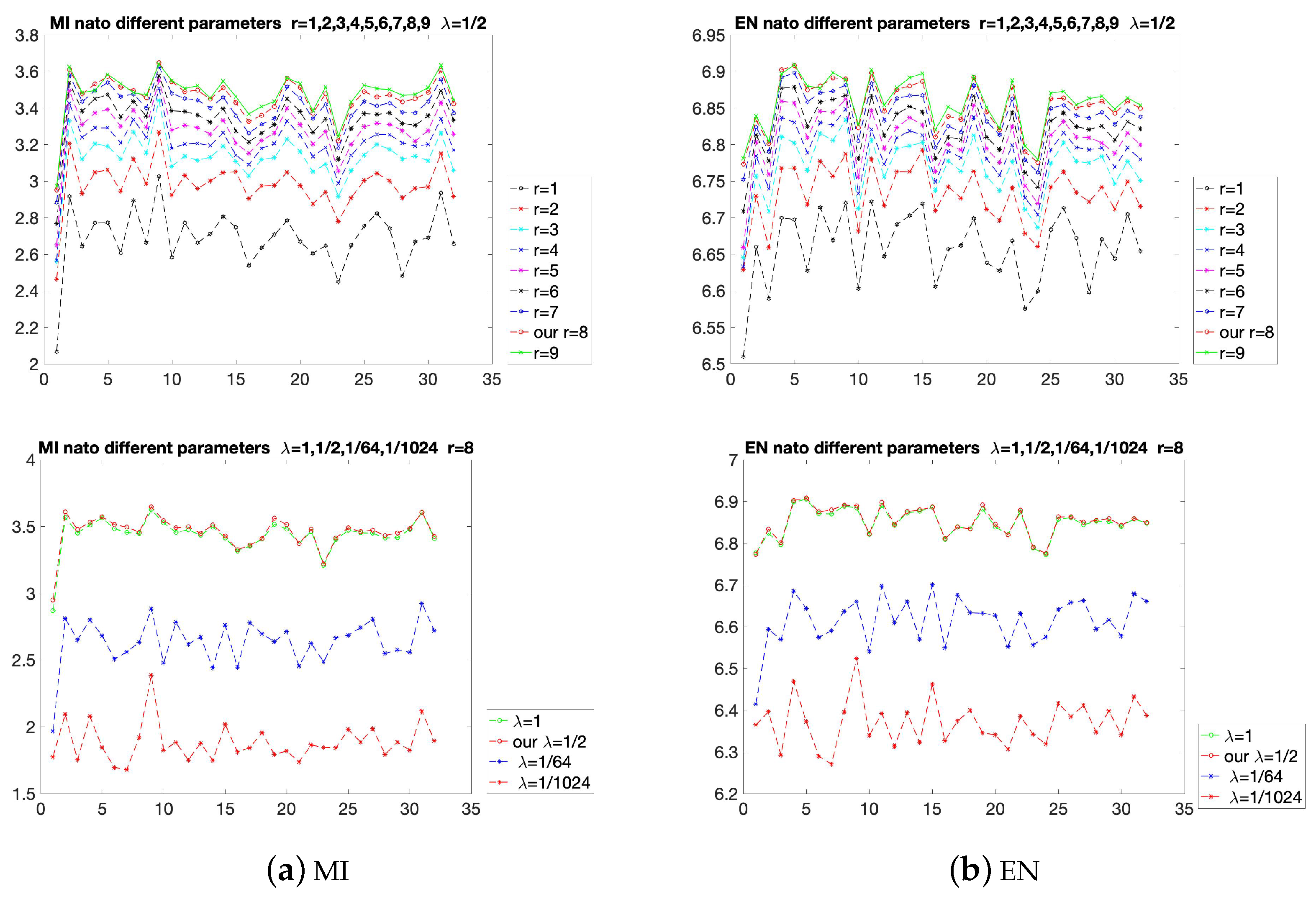

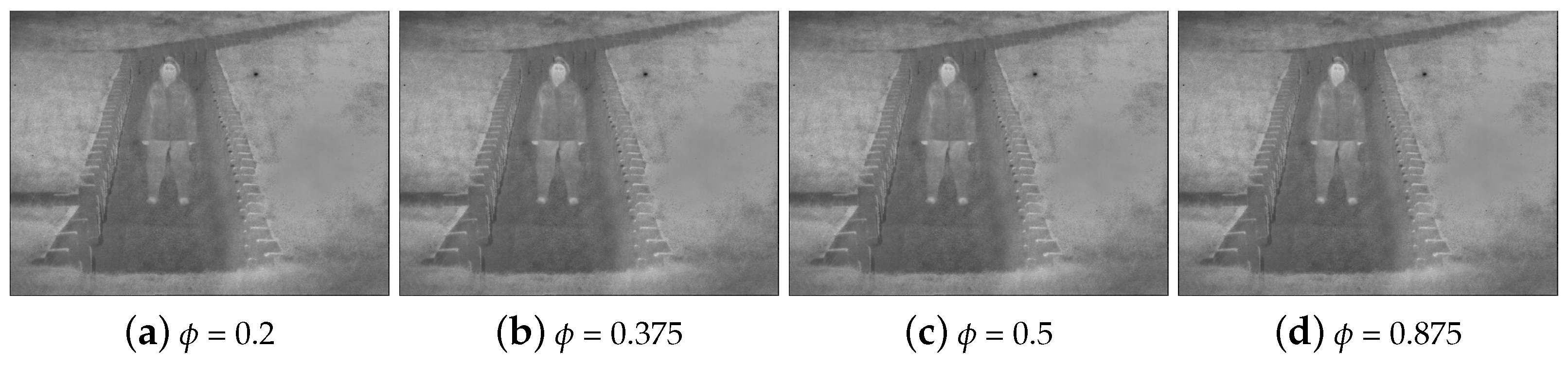
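The result tables in this article report MI, CE and EN scores for each fusion method. This section does not state the definitions, so the sketch below makes some assumptions: EN is taken to be the Shannon entropy of an image's intensity histogram, and MI the mutual information between two images, with the fusion score formed by summing the MI of the fused image against each source (a common convention, not confirmed here). The function names and the 8-bit grayscale input format are illustrative choices rather than the authors' evaluation code, and CE is not covered.

```python
import numpy as np


def entropy(img, bins=256):
    """Shannon entropy (in bits) of the intensity histogram of an 8-bit image."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute nothing (0 * log 0 is taken as 0)
    return float(-np.sum(p * np.log2(p)))


def mutual_information(a, b, bins=256):
    """Mutual information (in bits) between two equally sized 8-bit images."""
    joint, _, _ = np.histogram2d(
        a.ravel(), b.ravel(), bins=bins, range=[[0, 256], [0, 256]]
    )
    p_ab = joint / joint.sum()
    p_a = p_ab.sum(axis=1, keepdims=True)  # marginal of a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)  # marginal of b, shape (1, bins)
    nz = p_ab > 0
    return float(np.sum(p_ab[nz] * np.log2(p_ab[nz] / (p_a @ p_b)[nz])))


def fusion_mi(fused, ir, vis):
    """Assumed fusion score: MI(fused, IR) + MI(fused, VIS)."""
    return mutual_information(fused, ir) + mutual_information(fused, vis)


if __name__ == "__main__":
    # Synthetic stand-ins for registered IR, VIS and fused images.
    rng = np.random.default_rng(0)
    ir = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
    vis = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
    fused = ((ir.astype(np.uint16) + vis.astype(np.uint16)) // 2).astype(np.uint8)
    print("EN:", entropy(fused))
    print("MI:", fusion_mi(fused, ir, vis))
```

Under these assumed definitions, a higher EN means a more spread-out intensity distribution in the fused image, and a higher MI means the fused image shares more information with the source images.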

4.2. Selection of Parameters

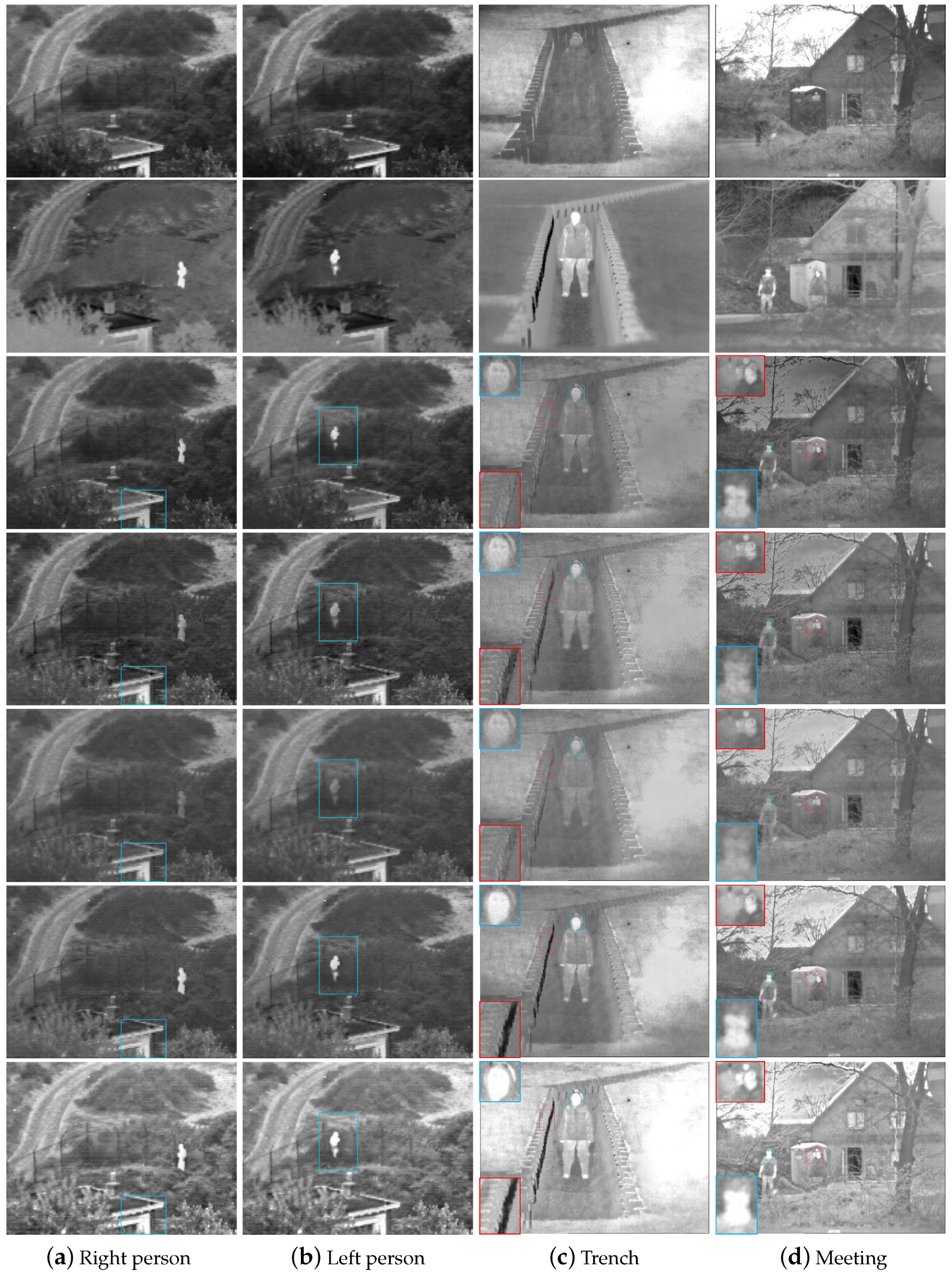

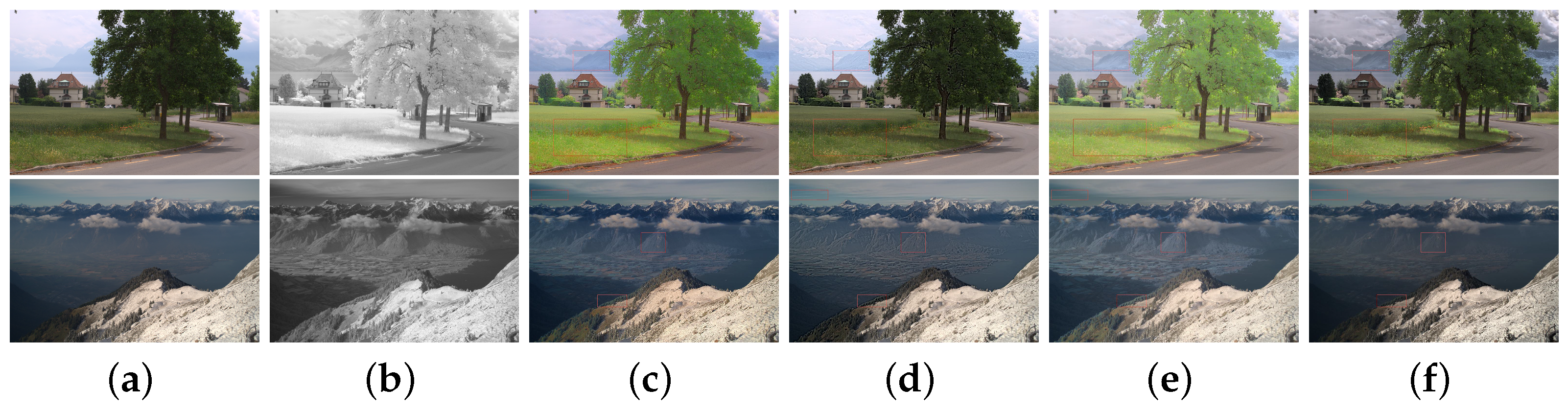

4.3. Comparison with State-of-the-Art Methods

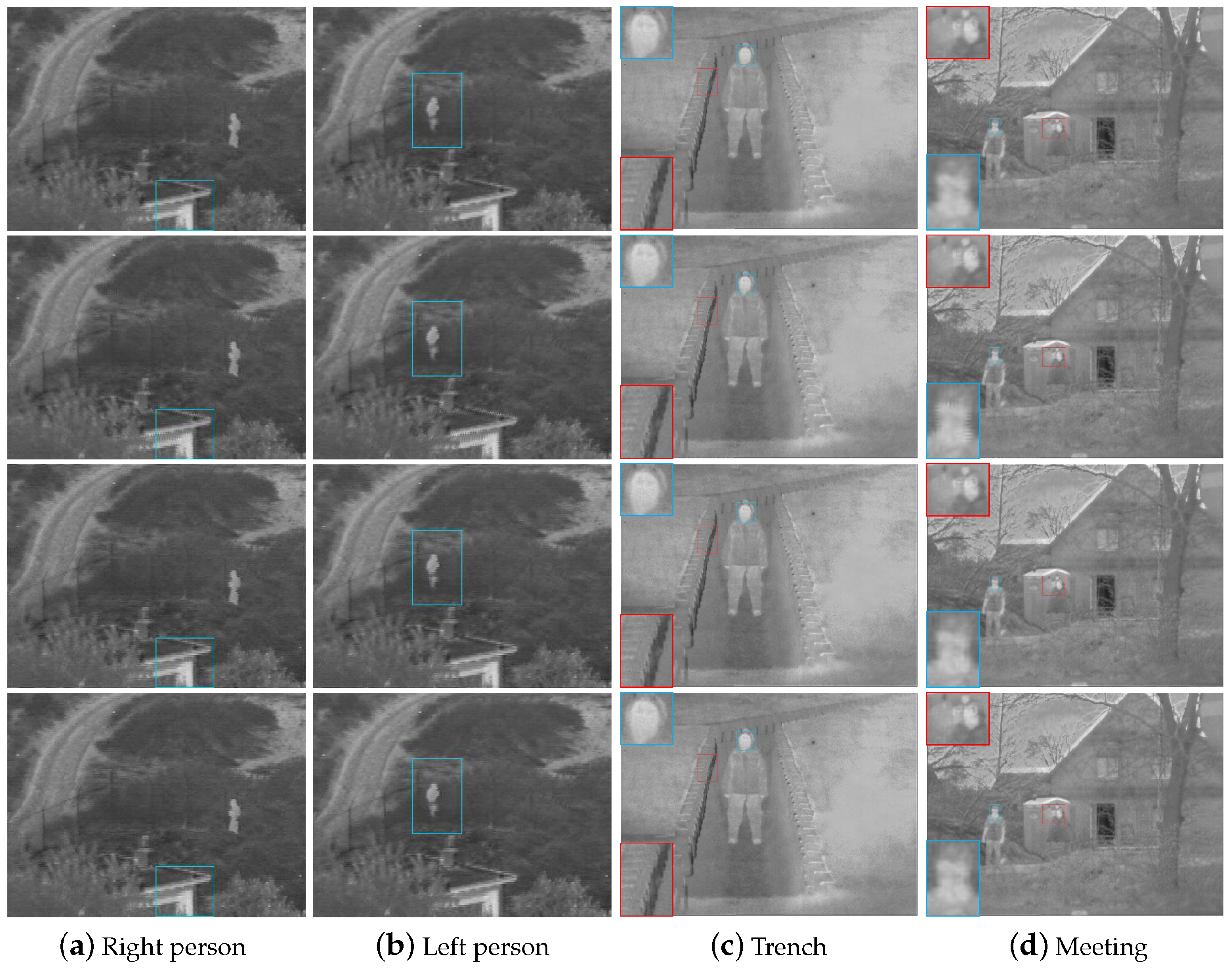

4.4. Fusion of RGB VIS and IR Images

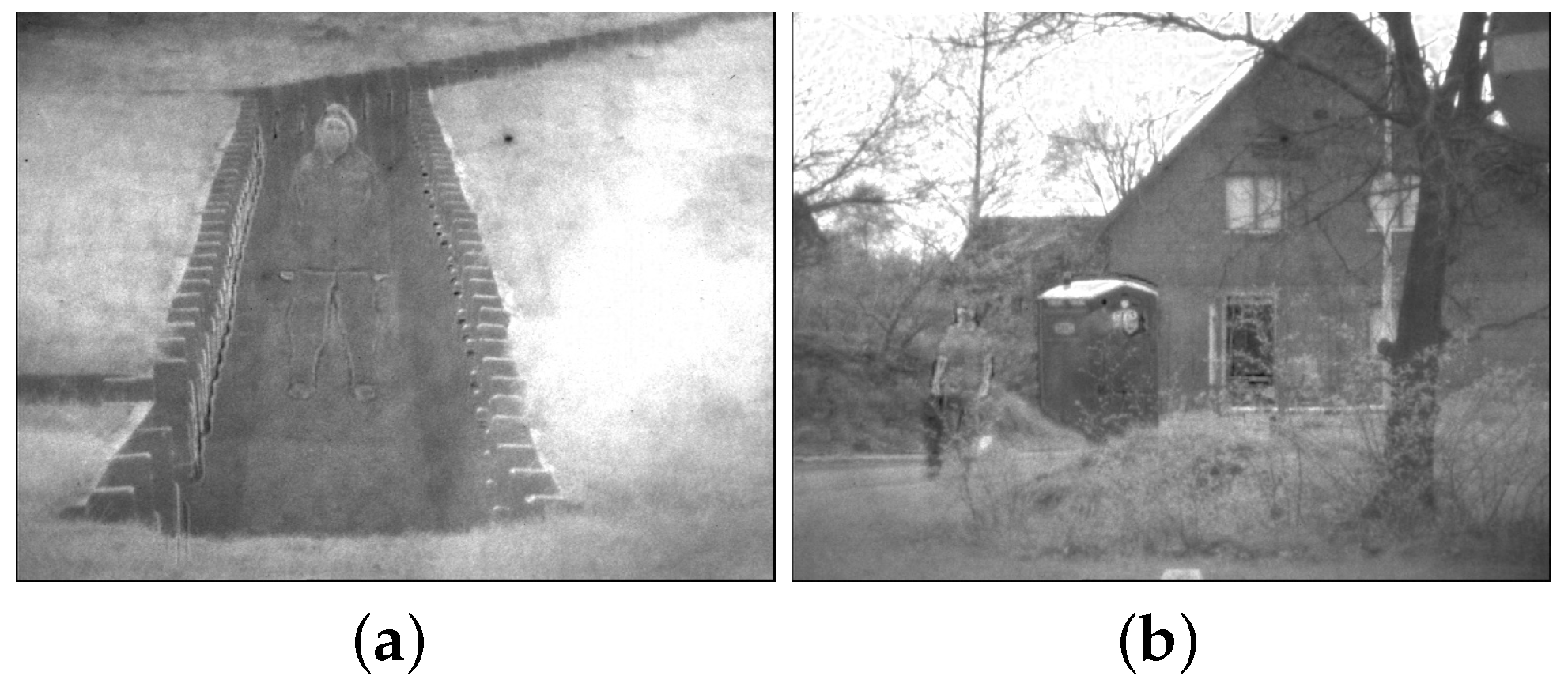

4.5. Limitation of the Proposed Algorithm

5. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Metric | Ours | Wavelets | GFCE | ADF | MSVD | DTCWT | CVT | Bayesian | MLGCF |
|---|---|---|---|---|---|---|---|---|---|
| Time | 2.3639 | 4.3822 | 5.6738 | 4.2315 | 3.2449 | 1.3854 | 6.7786 | 5.2462 | 14.2767 |

| Metric | Ours | Wavelets | GFCE | ADF | MSVD | DTCWT | CVT | Bayesian | MLGCF |
|---|---|---|---|---|---|---|---|---|---|
| MI | 3.4579 | 1.5512 | 1.7342 | 1.5782 | 1.5292 | 1.5213 | 1.4947 | 2.0398 | 1.7019 |
| CE | 0.4657 | 0.8837 | 1.5930 | 0.5453 | 0.9521 | 0.9413 | 0.9119 | 0.6647 | 0.6290 |
| EN | 6.8528 | 6.2181 | 7.1797 | 6.6929 | 6.2390 | 6.2535 | 6.2611 | 6.4260 | 6.6009 |

| Metric | Ours | Wavelets | GFCE | ADF | MSVD | DTCWT | CVT | Bayesian | MLGCF |
|---|---|---|---|---|---|---|---|---|---|
| Time | 3.2151 | 3.2838 | 10.8168 | 5.8897 | 5.7947 | 2.1401 | 9.5064 | 9.9251 | 37.1023 |

| Metric | Ours | Wavelets | GFCE | ADF | MSVD | DTCWT | CVT | Bayesian | MLGCF |
|---|---|---|---|---|---|---|---|---|---|
| MI | 3.7220 | 1.8467 | 1.9845 | 1.7093 | 1.6970 | 1.7371 | 1.7068 | 2.5319 | 2.0197 |
| CE | 0.6601 | 1.0117 | 1.9040 | 0.9279 | 1.0725 | 1.0751 | 1.1186 | 0.7838 | 0.9088 |
| EN | 6.8365 | 6.2451 | 7.1315 | 6.7550 | 6.2801 | 6.2893 | 6.2979 | 6.5153 | 6.6976 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jia, W.; Song, Z.; Li, Z. Multi-scale Fusion of Stretched Infrared and Visible Images. Sensors 2022, 22, 6660. https://doi.org/10.3390/s22176660
