Investigation of Measurement Accuracy of Bridge Deformation Using UAV-Based Oblique Photography Technique

Abstract

1. Introduction

2. Aerotriangulation Method Based on Oblique Photography

3. Experimental Work

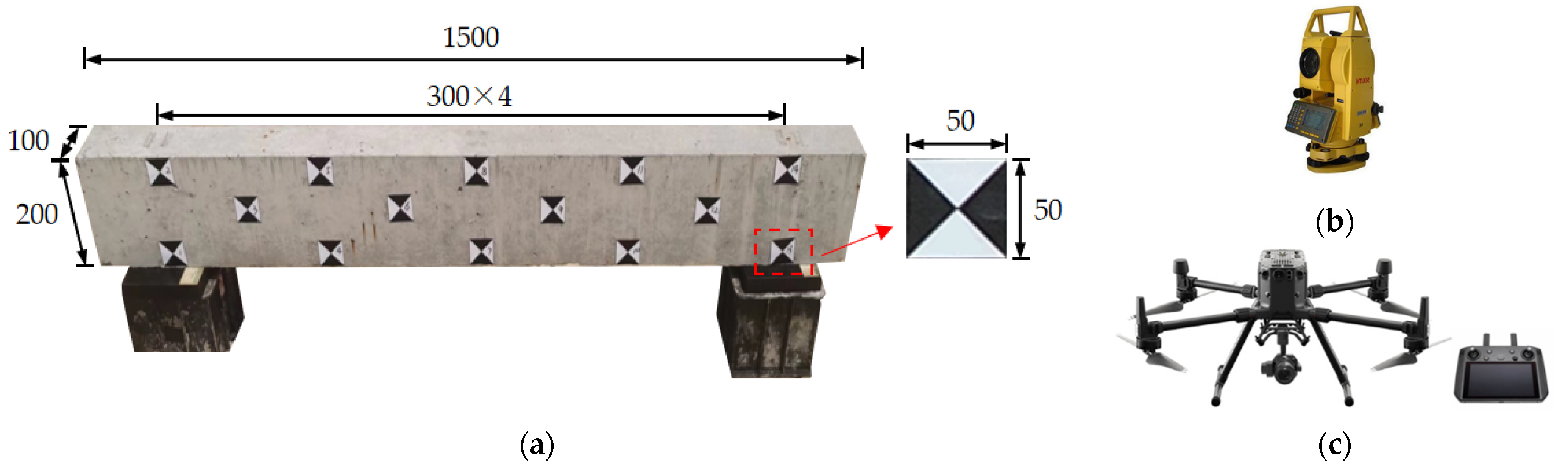

3.1. Monitored Object and Instrumentations

3.2. Test Parameters

3.2.1. UAV Flight Route Planning

3.2.2. Arrangement of Reference Points

3.3. Discussions on the Reconstructed 3D Model Based on UAVOP

3.3.1. Effect of Fusing Overall-Partial Flight Route

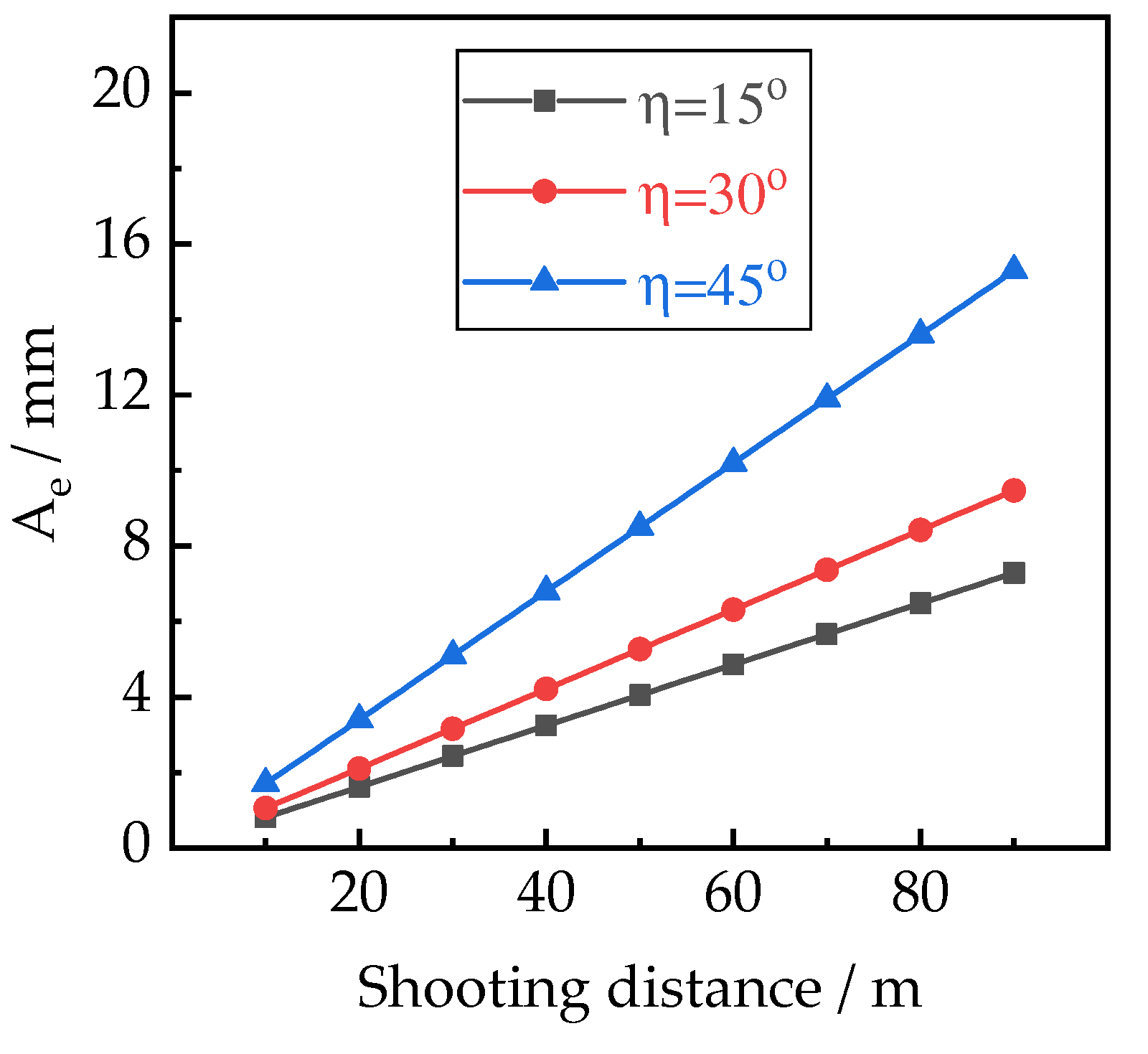

3.3.2. Effect of Local Shooting Distance

3.3.3. Effect of Partial Image Overlap

3.3.4. Effect of Control Point Arrangement

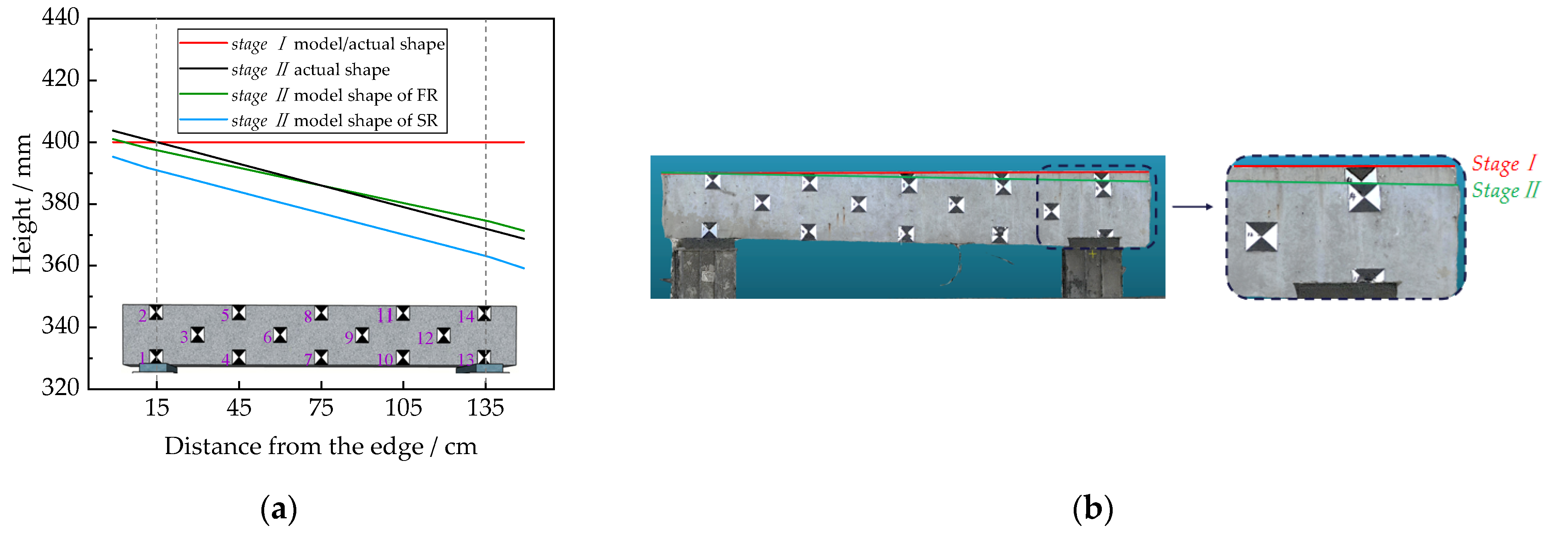

4. Bridge Deformation Measurement Using a UAVOP Technique

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Number of Control Points | Layout of Control Points | Overall Flight Altitude (h)/m | Partial Flight Route: Local Shooting Distance (d)/m | Partial Flight Route: Partial Image Overlap (λ) |
|---|---|---|---|---|---|
| Control point | 3, 6, 8 | See Table 2 | 50 | 4 | 80% |
| Overall flight altitude (h) | 3 | GP3 | 10, 30, 50 | 4 | 80% |
| Local shooting distance (d) | 3 | GP3 | 50 | 4, 8, 12 | 70%, 80%, 90% |
| Partial image overlap (λ) | 3 | GP3 | 50 | 4, 8, 12 | 70%, 80%, 90% |

| Code | Layout Type | Number of Control Points |
|---|---|---|
| RP3 | Regional and planar | 3 |
| GL3 | Global and linear | 3 |
| GP3 | Global and planar | 3 |
| GP6 | Global and planar | 6 |
| GP8 | Global and planar | 8 |

| h/m | RMSExyz of FR/cm | RMSExyz of SR/cm | Enhancing Effect of FR/% |
|---|---|---|---|
| 10 | 1.02 | 1.39 | 26.6 |
| 30 | 0.90 | 1.62 | 44.4 |
| 50 | 0.93 | 3.00 | 69.0 |
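
The last column of the table above appears to be the relative reduction in RMSExyz obtained with FR compared with SR, that is, (SR − FR)/SR × 100. This formula is an assumption inferred from the tabulated values rather than a definition given in the text, and the names `rmse_by_altitude` and `enhancing_effect` are illustrative only. A minimal Python sketch that recomputes the column under that assumption:

```python
# Tabulated RMSExyz values in cm, keyed by overall flight altitude h in m.
# Each entry is (FR, SR), copied from the table above.
rmse_by_altitude = {
    10: (1.02, 1.39),
    30: (0.90, 1.62),
    50: (0.93, 3.00),
}


def enhancing_effect(rmse_fr, rmse_sr):
    """Relative RMSE reduction of FR over SR, in percent.

    Assumed formula: (SR - FR) / SR * 100, inferred from the table.
    """
    return (rmse_sr - rmse_fr) / rmse_sr * 100.0


# Recompute the last column, rounded to one decimal place like the table.
effects = {
    h: round(enhancing_effect(fr, sr), 1)
    for h, (fr, sr) in rmse_by_altitude.items()
}

for h, effect in effects.items():
    print(f"h = {h} m: enhancing effect of FR = {effect}%")
```

Under this reading, the recomputed percentages agree with the tabulated ones, and the benefit of FR grows with altitude mainly because the SR error grows while the FR error stays near 1 cm.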

| Stage | RMSExy of FR/cm | RMSExy of SR/cm | RMSEz of FR/cm | RMSEz of SR/cm | RMSExyz of FR/cm | RMSExyz of SR/cm |
|---|---|---|---|---|---|---|
| Stage I | 0.75 | 2.31 | 0.53 | 1.99 | 0.92 | 3.05 |
| Stage II | 0.65 | 2.71 | 0.59 | 1.83 | 0.88 | 3.27 |
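
The RMSExyz values in the table above are consistent with combining the planar and vertical components in quadrature, RMSExyz = sqrt(RMSExy² + RMSEz²). That relation is an assumption checked against the tabulated numbers, not a formula quoted from the text, and the names `stage_rmse` and `combine_rmse` are illustrative. A short Python sketch of the check:

```python
import math

# Tabulated values in cm: (RMSExy, RMSEz, reported RMSExyz),
# keyed by (stage, route), copied from the table above.
stage_rmse = {
    ("Stage I", "FR"): (0.75, 0.53, 0.92),
    ("Stage I", "SR"): (2.31, 1.99, 3.05),
    ("Stage II", "FR"): (0.65, 0.59, 0.88),
    ("Stage II", "SR"): (2.71, 1.83, 3.27),
}


def combine_rmse(rmse_xy, rmse_z):
    """Combine planar and vertical RMSE in quadrature (assumed relation)."""
    return math.hypot(rmse_xy, rmse_z)


# Recompute the 3D RMSE, rounded to two decimals like the table.
combined = {
    key: round(combine_rmse(xy, z), 2)
    for key, (xy, z, _reported) in stage_rmse.items()
}

for key, (_xy, _z, reported) in stage_rmse.items():
    stage, route = key
    print(f"{stage} {route}: computed {combined[key]} cm, reported {reported} cm")
```

If the assumption holds, every computed value should match the reported one to within rounding, which also serves as a quick consistency check on the reconstructed table.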

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

He, S.; Guo, X.; He, J.; Guo, B.; Zheng, C. Investigation of Measurement Accuracy of Bridge Deformation Using UAV-Based Oblique Photography Technique. Sensors 2022, 22, 6822. https://doi.org/10.3390/s22186822