Towards Parallel Selective Attention Using Psychophysiological States as the Basis for Functional Cognition

Abstract

1. Introduction

- Identifying the significant audio-visual features for parallel selective attention.

- Performing a comprehensive analysis of existing systems that use a selective attention mechanism.

- Analyzing the role of psychophysical states in the parallel selective attention mechanism.

- Designing a human-inspired parallel selective attention mechanism for an artificial agent.

- Testing and verifying the behavior of the proposed attention mechanism for a cognitive architecture in a virtual or real environment.

2. Related Work

2.1. Quantum and Bioinspired Intelligent and Consciousness Architecture (QuBIC)

2.2. LIDA

2.3. Vector LIDA

2.4. Limitations of Existing Cognitive Architectures

3. Materials and Methods

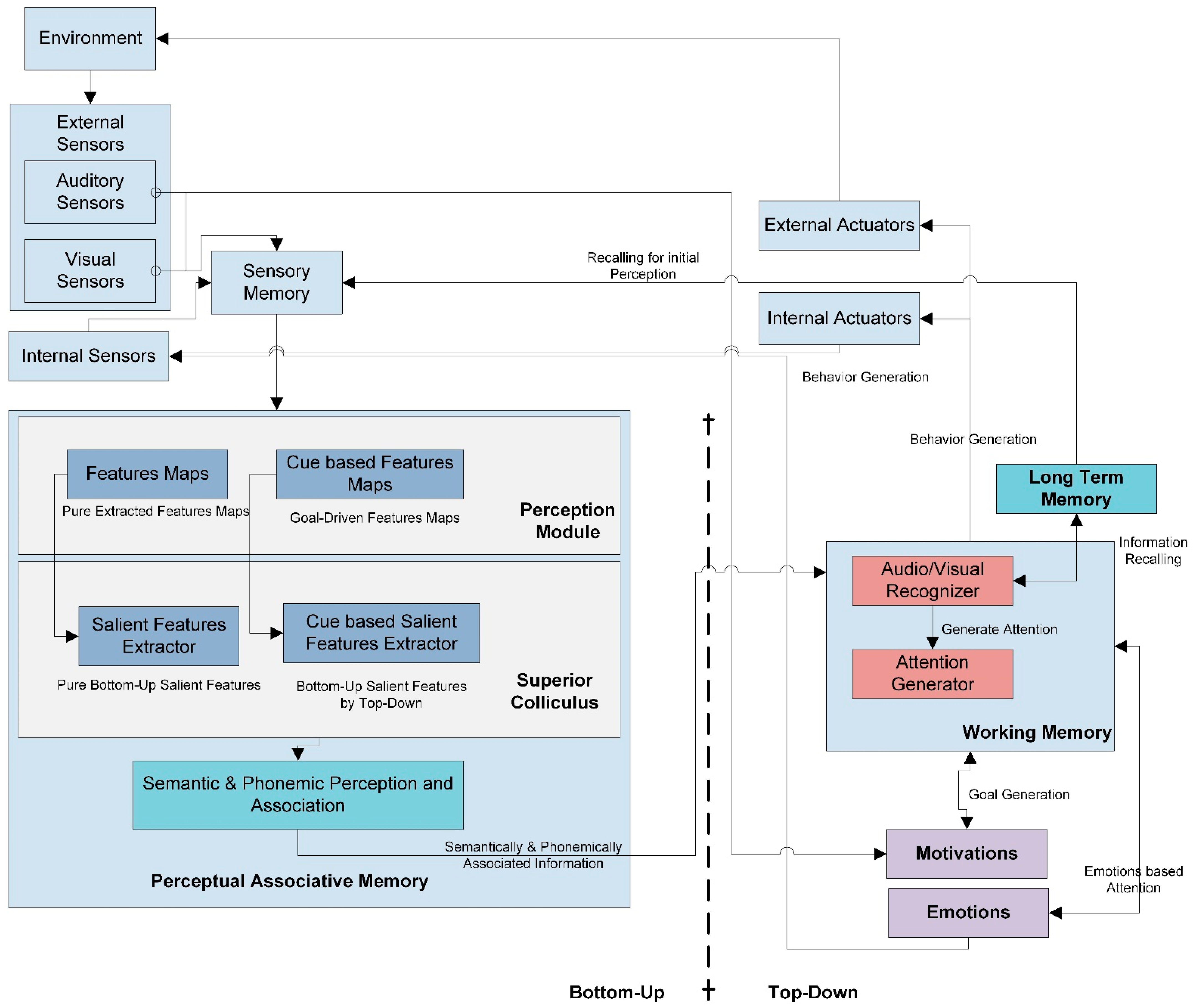

3.1. Parallel Selective Attention (PSA) Model

3.1.1. Sensors

3.1.2. Top-Down and Bottom-Up Attention

Perceptual Module

Superior Colliculus

Perceptual Associative Memory

Working Memory

1. Audio-Visual Recognizer

2. Attention Generator

Long-Term Memory

Psychophysical States

External and Internal Actuators

3.2. Functional Flow of PSA

3.3. Dataset

3.4. Experiment Setup

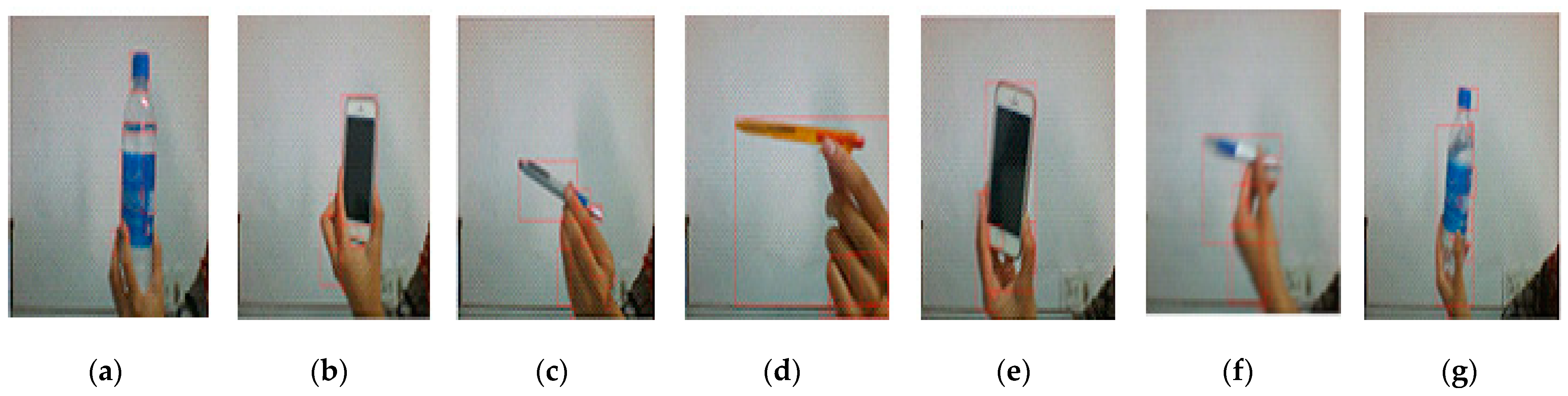

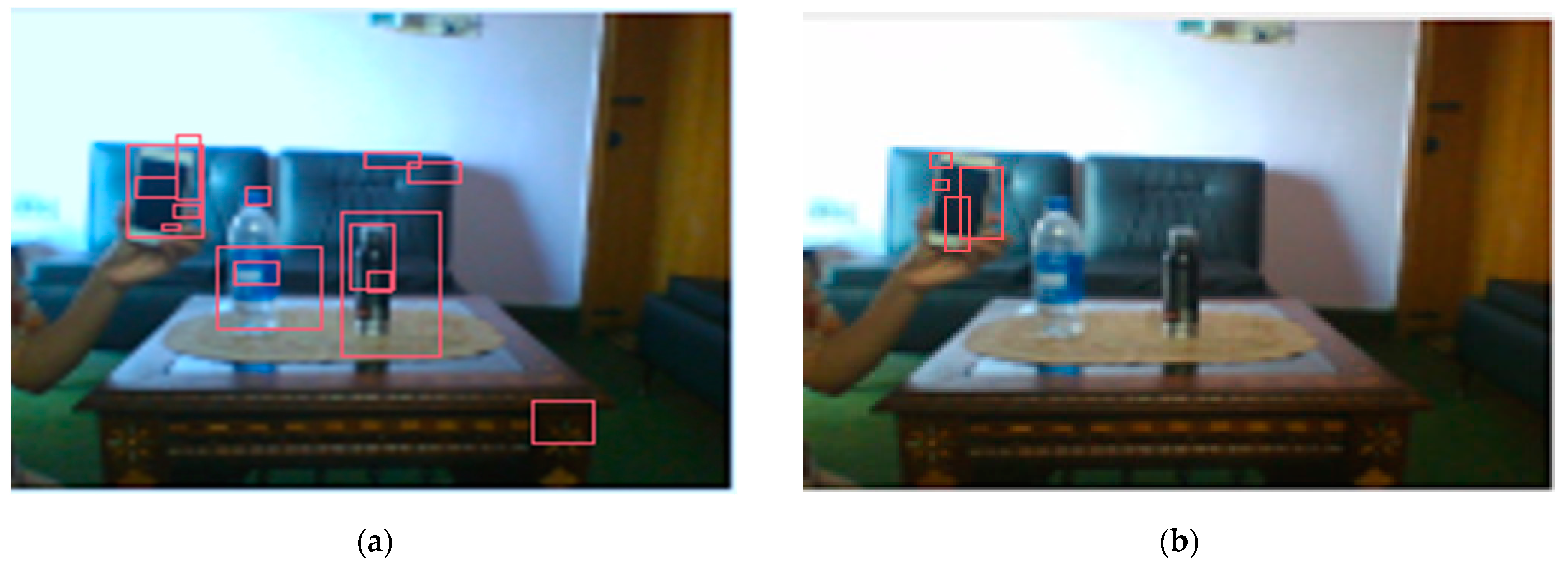

3.4.1. Bottom-Up and Top-Down Attention Cycle of PSA

Scenario

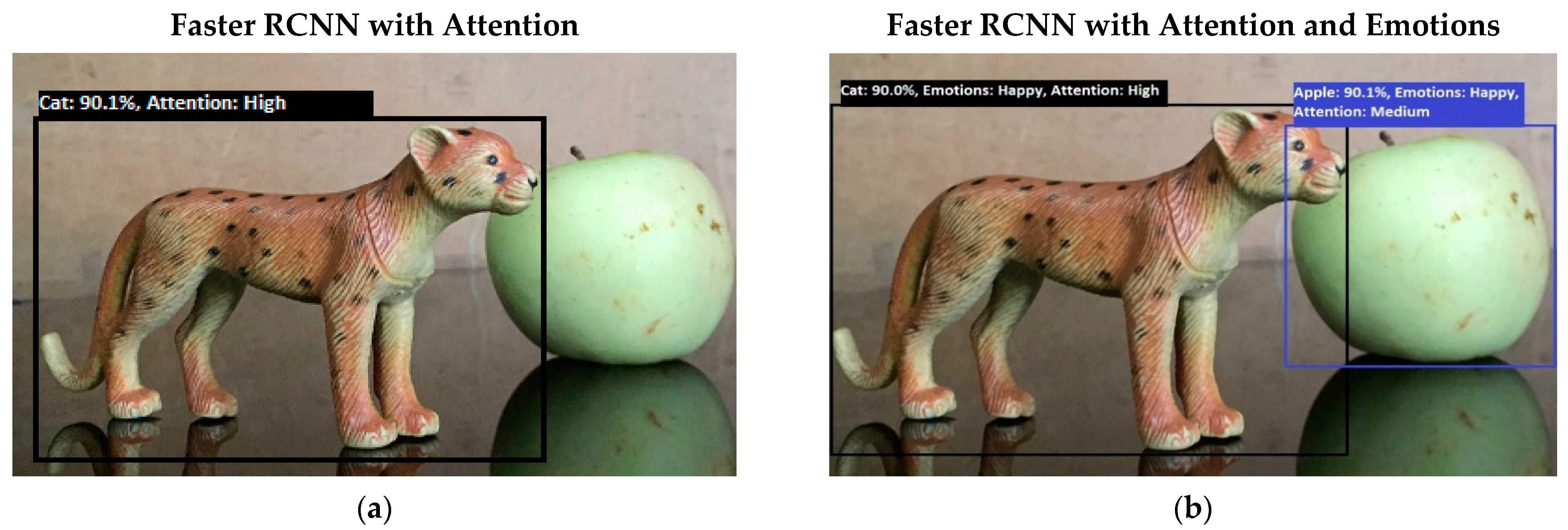

4. Results and Discussion

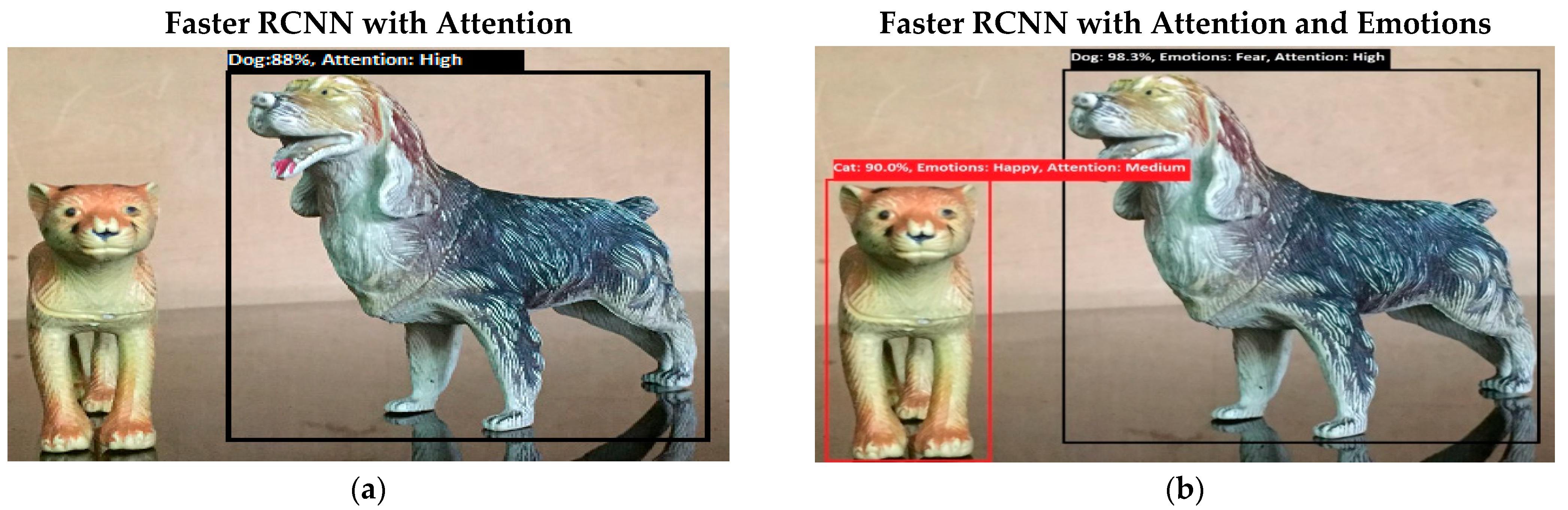

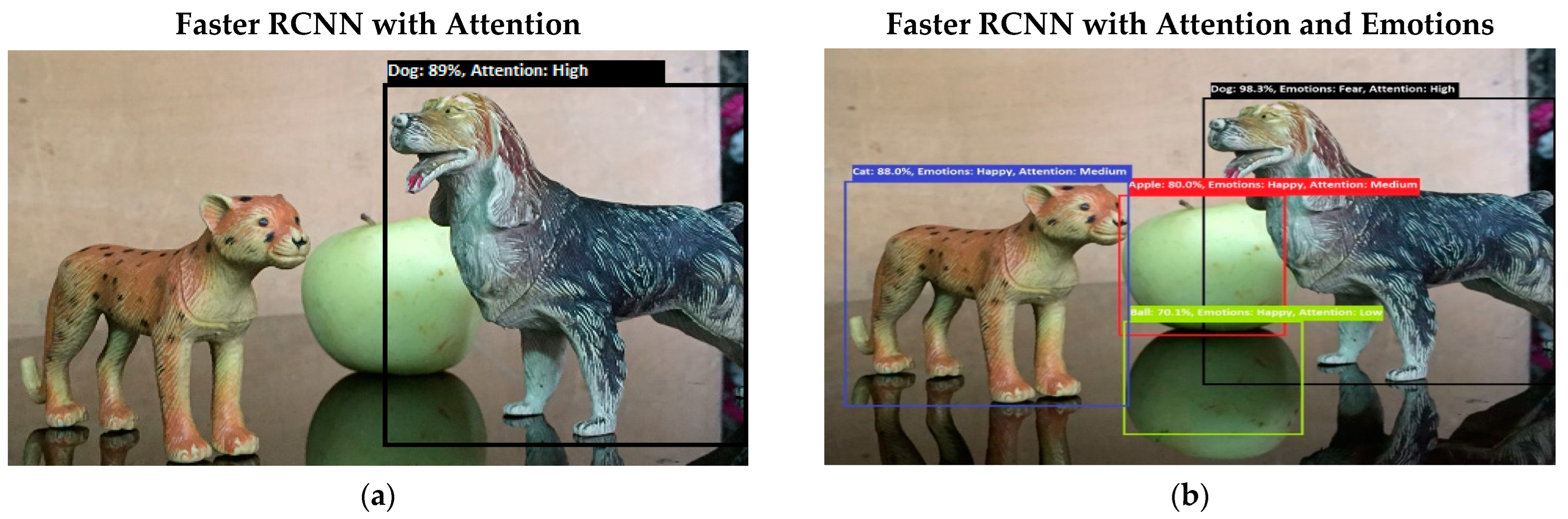

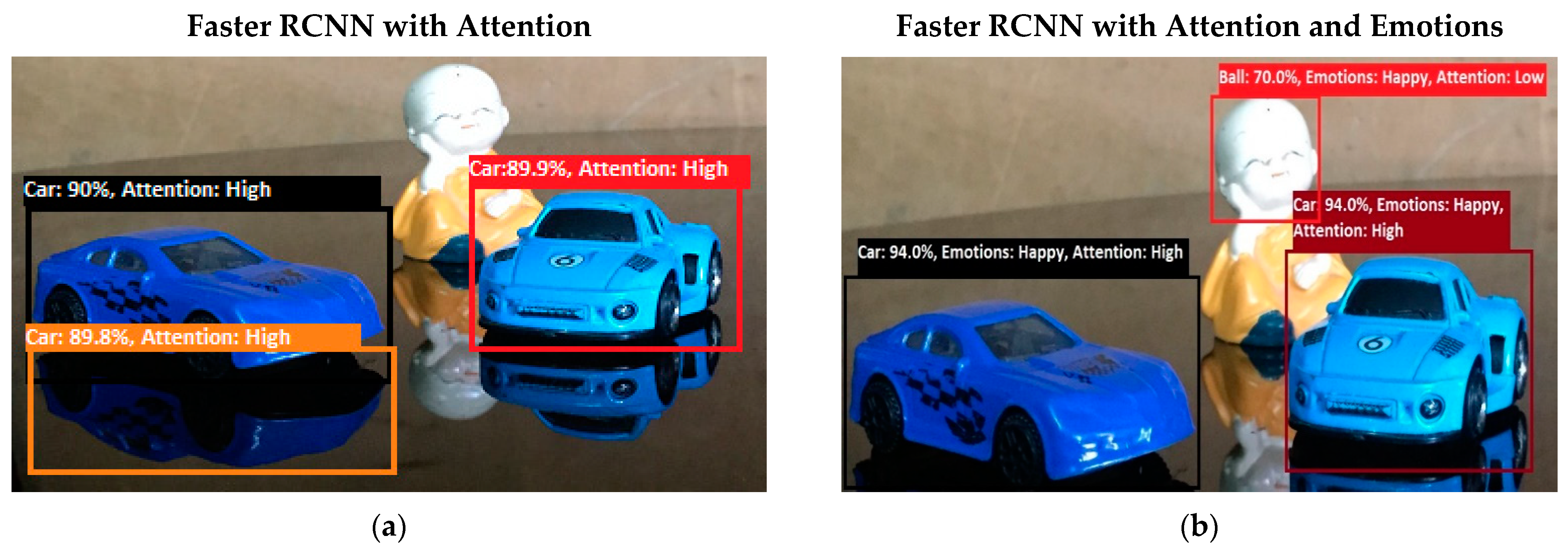

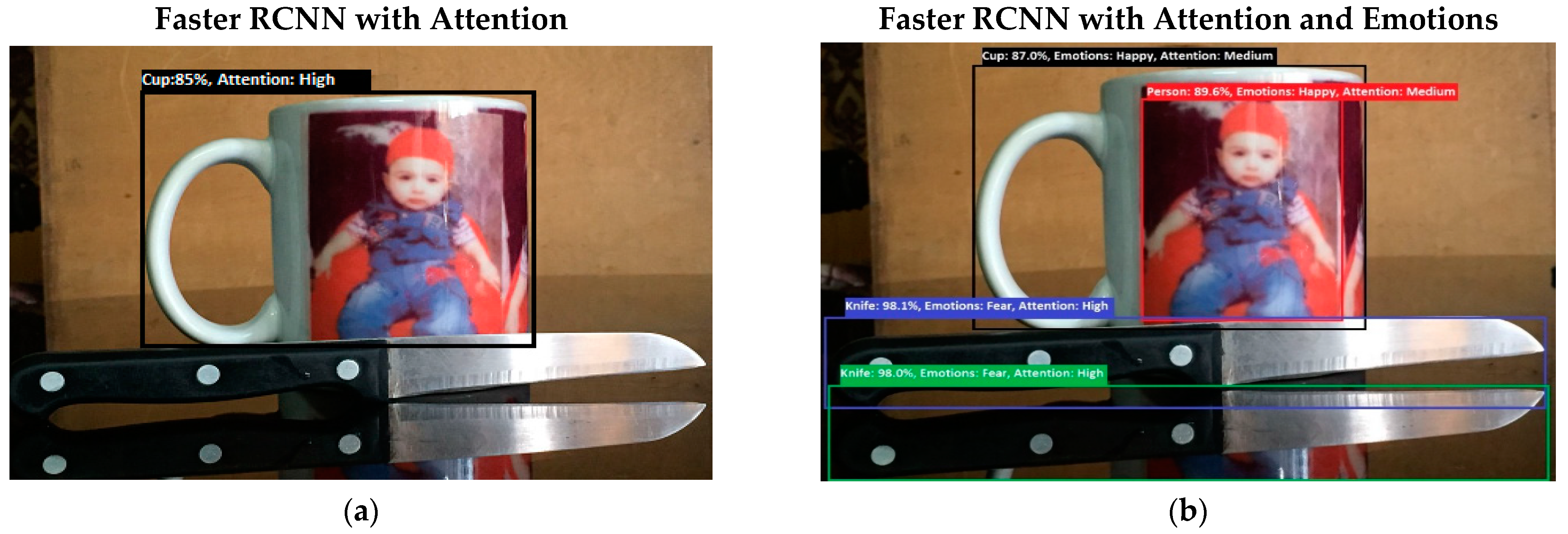

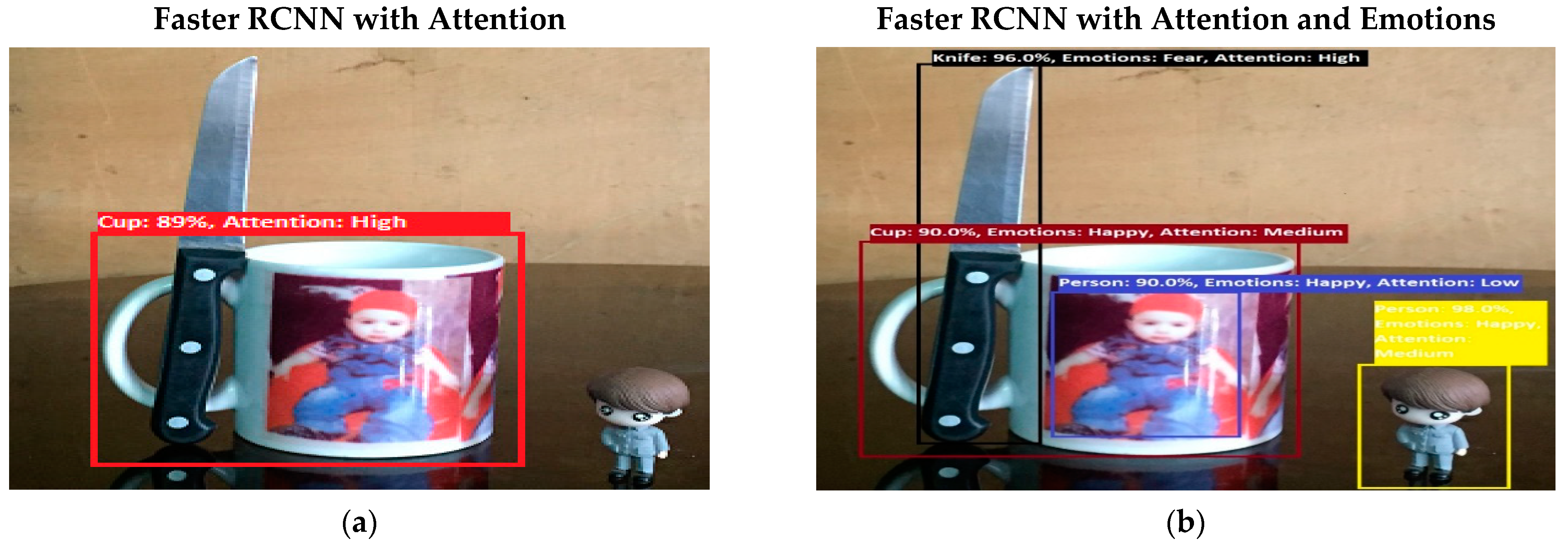

4.1. Comparative Experiment

4.2. Discussion

5. Conclusions

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Keizer, S.; Foster, M.E.; Wang, Z.; Lemon, O. Machine learning for social multiparty human-robot interaction. ACM Trans. Interact. Intell. Syst. 2014, 4, 1–32.

2. Carterette, E.C.; Friedman, M.P. Psychophysical Judgment and Measurement; Elsevier: Los Angeles, CA, USA, 1974; ISBN 978-0-12-161902-2.

3. Samsonovich, A.V. On a roadmap for the BICA challenge. Biol. Inspired Cogn. Archit. 2012, 1, 100–107.

4. Qazi, W.M.; Bukhari, S.T.S.; Ware, J.A.; Athar, A. NiHA: A conscious agent. In Proceedings of the 10th International Conference on Advanced Cognitive Technologies and Applications, Barcelona, Spain, 18–22 February 2018; ThinkMind: Barcelona, Spain, 2018; pp. 78–87.

5. Helgason, H.P. General Attention Mechanism for Artificial Intelligence Systems. Ph.D. Thesis, School of Computer Science, Reykjavík University, Reykjavík, Iceland, May 2013.

6. Itti, L.; Koch, C. Computational modelling of visual attention. Nat. Rev. Neurosci. 2001, 2, 194–203.

7. Begum, M. Visual Attention for Robotic Cognition: A Biologically Inspired Probabilistic Architecture. Ph.D. Thesis, University of Waterloo, Waterloo, ON, Canada, 2010.

8. Nobre, A.C.; Kastner, S. The Oxford Handbook of Attention; Oxford University Press: Oxford, UK, 2014; ISBN 9780199675111.

9. Madl, T.; Franklin, S.; Chen, K.; Trappl, R. Spatial working memory in the LIDA cognitive architecture. In Proceedings of the International Conference on Cognitive Modelling, Ottawa, ON, Canada, 11–14 January 2013.

10. Babiloni, C.; Miniussi, C.; Babiloni, F.; Carducci, F.; Cincotti, F.; Percio, C.D.; Sirello, G.; Fracassi, C.; Nobre, S.C.; Rossini, P.M. Sub-second temporal attention modulates alpha rhythms. A high-resolution EEG study. Cogn. Brain Res. 2004, 19, 259–268.

11. Hinshaw, S.P.; Arnold, L.E. Attention-Deficit hyperactivity disorder, multimodal treatment, and longitudinal outcome: Evidence, paradox, and challenge. Wiley Interdiscip. Rev. Cogn. Sci. 2015, 6, 39–52.

12. Calcott, R.D.; Berkman, E.T. Attentional flexibility during approach and avoidance motivational states: The role of context in shifts of attentional breadth. J. Exp. Psychol. Gen. 2014, 143, 1393–1408.

13. Shanahan, M. Consciousness, emotion, and imagination: A brain-inspired architecture for cognitive robotics. In Proceedings of the AISB 2005 Symposium on Next-Generation Approaches to Machine Consciousness, Hatfield, UK, 12–15 April 2005; pp. 26–35.

14. Baars, B.J.; Gage, N.M. Cognition, Brain, and Consciousness: Introduction to Cognitive Neuroscience, 2nd ed.; Elsevier: Beijing, China, 2010; ISBN 978-0-12-375070-9.

15. Vuilleumier, P. How brains beware: Neural mechanisms of emotional attention. Trends Cogn. Sci. 2005, 9, 585–594.

16. Brosch, T.; Scherer, K.R.; Grandjean, D.; Sander, D. The impact of emotion on perception, attention, memory, and decision-making. Swiss Med. Wkly. 2013, 143, w13786.

17. Merrick, K. Value systems for developmental cognitive robotics: A survey. Cogn. Syst. Res. 2017, 41, 38–55.

18. Ryan, R.M.; Deci, E.L. Intrinsic and extrinsic motivations: Classic definitions and new directions. Contemp. Educ. Psychol. 2000, 25, 54–67.

19. Robinson, L.J.; Stevens, L.H.; Threapleton, C.J.D.; Vainiute, J.; McAllister-Williams, R.H.; Gallagher, P. Effects of intrinsic and extrinsic motivation on attention and memory. Acta Psychol. 2012, 141, 243–249.

20. IIT. Available online: http://www.iit.it/ (accessed on 20 December 2021).

21. Qazi, W.M. Modeling Cognitive Cybernetics from Unified Theory of Mind Using Quantum Neuro-Computing for Machine Consciousness. Ph.D. Thesis, Department of Computer Science, NCBA&E, Lahore, Pakistan, 2011.

22. Gobet, F.; Lane, P. The CHREST Architecture of Cognition: The Role of Perception in General Intelligence; Advances in Intelligent Systems Research Series; Atlantis Press: Lugano, Switzerland, 2010.

23. Thórisson, K.R.; Helgason, H.P. Cognitive architectures and autonomy: A comparative review. J. Artif. Gen. Intell. 2012, 3, 1–30.

24. Franklin, S.; Madl, T.; D’Mello, S.; Snaider, J. LIDA: A systems-level architecture for cognition, emotion, and learning. IEEE Trans. Auton. Ment. Dev. 2014, 6, 19–41.

25. Goertzel, B. Opencog Prime: A cognitive synergy based architecture for artificial general intelligence. In Proceedings of the 2009 8th IEEE International Conference on Cognitive Informatics, Hong Kong, China, 15–17 June 2009; IEEE: Hong Kong, China, 2009; pp. 60–68.

26. Sun, R. The CLARION cognitive architecture: Extending cognitive modeling to social simulation. In Cognition and Multi-Agent Interaction; Cambridge University Press: Cambridge, UK, 2006; pp. 79–100.

27. Chong, H.; Tan, A.; Ng, G. Integrated cognitive architectures: A survey. In Artificial Intelligence Review; Springer: Berlin/Heidelberg, Germany, 2007; Volume 28, pp. 103–130.

28. Raza, S.A.; Kanwal, A.; Rehan, M.; Khan, K.A.; Aslam, M.; Asif, H.M.S. ASIA: Attention driven pre-conscious perception for socially interactive agents. In Proceedings of the 2015 International Conference on Information and Communication Technologies (ICICT), Karachi, Pakistan, 12–13 December 2015; IEEE: Karachi, Pakistan, 2015.

29. Snaider, J.; Franklin, S. Vector LIDA. Procedia Comput. Sci. 2014, 41, 188–203.

30. Chai, W.J.; Hamid, A.I.A.; Abdullah, J.M. Working memory from the psychological and neurosciences perspectives: A review. Front. Psychol. 2018, 9, 401.

31. Bach, M.P. Editorial: Usage of Social Neuroscience in E-Commerce Research—Current Research and Future Opportunities. J. Theor. Appl. Electron. Commer. Res. 2018, 13, 1–9.

32. Lamberz, J.; Litfin, T.; Teckert, O.; Meeh-Bunse, G. Still Searching or Have You Found It Already?—Usability and Web Design of an Educational Website. Bus. Syst. Res. 2018, 9, 19–30.

33. Lamberz, J.; Litfin, T.; Teckert, O.; Meeh-Bunse, G. How Does the Attitude to Sustainable Food Influence the Perception of Customers at the Point of Sale?—An Eye-Tracking Study. ENTRENOVA 2019, 5, 402–409.

34. Thorisson, K.R. Communicative Humanoids: A Computational Model of Psycho-Social Dialogue Skills. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1996.

35. Treisman, A. Perceptual grouping and attention in visual search for features and for objects. J. Exp. Psychol. Hum. Percept. Perform. 1982, 8, 194–214.

36. Miller, L.M. Neural mechanisms of attention to speech. In Neurobiology of Language; Hickok, G., Small, S.L., Eds.; Academic Press: Cambridge, MA, USA, 2016; pp. 503–514.

37. A Comprehensive Guide to Convolutional Neural Networks—The ELI5 Way. Available online: https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53 (accessed on 15 January 2022).

38. The Dummy’s Guide to MFCC. Available online: https://medium.com/prathena/the-dummys-guide-to-mfcc-aceab2450fd (accessed on 9 December 2021).

39. Muda, L.; Begam, M.; Elamvazuthi, I. Voice recognition algorithms using mel frequency cepstral coefficient (MFCC) and dynamic time warping (DTW) techniques. arXiv 2010, arXiv:1003.4083.

40. Batch Norm Explained Visually—How It Works, and Why Neural Networks Need It. Available online: https://towardsdatascience.com/batch-norm-explained-visually-how-it-works-and-why-neural-networks-need-it-b18919692739 (accessed on 29 November 2021).

41. Prasad, N.V.; Umesh, S. Improved cepstral mean and variance normalization using Bayesian framework. In Proceedings of the 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, Olomouc, Czech Republic, 8–13 December 2013; IEEE: Olomouc, Czech Republic, 2013; pp. 156–161.

42. Region Proposal Network (RPN)—Backbone of Faster R-CNN. Available online: https://medium.com/egen/region-proposal-network-rpn-backbone-of-faster-r-cnn-4a744a38d7f9 (accessed on 2 December 2021).

43. Kumar, A.A. Semantic memory: A review of methods, models, and current challenges. Psychon. Bull. Rev. 2021, 28, 40–80.

44. Li, Y.; Schofield, E.; Gonen, M. A Tutorial on Dirichlet Process Mixture Modeling. J. Math. Psychol. 2019, 91, 128–144.

45. Fan, W.; Bouguila, N. Online learning of a Dirichlet process mixture of generalized Dirichlet distributions for simultaneous clustering and localized feature selection. In Proceedings of the JMLR: Workshop and Conference Proceedings, Asian Conference on Machine Learning, Singapore, 4–6 November 2012; Volume 25, pp. 113–128.

46. Monte Carlo Markov Chain (MCMC), Explained, Understanding the Magic behind Estimating Complex Entities Using Randomness. Available online: https://towardsdatascience.com/monte-carlo-markov-chain-mcmc-explained-94e3a6c8de11 (accessed on 13 November 2021).

47. Bardenet, R.; Doucet, A.; Holmes, C. Towards scaling up Markov chain Monte Carlo: An adaptive subsampling approach. In Proceedings of the 31st International Conference on Machine Learning, PMLR, Beijing, China, 21–26 June 2014; Volume 32, pp. 405–413.

48. Hidden Markov Models Simplified. Available online: https://medium.com/@postsanjay/hidden-markov-models-simplified-c3f58728caab (accessed on 29 November 2021).

49. Chafik, S.; Cherki, D. Some algorithms for large hidden Markov models. World J. Control Sci. Eng. 2013, 1, 9–14.

50. Rudas, I.J.; Fodor, J.; Kacprzyk, J. Towards Intelligent Engineering and Information Technology; Springer: Berlin/Heidelberg, Germany, 2009.

51. Recurrent Neural Network Tutorial (RNN). Available online: https://www.datacamp.com/tutorial/tutorial-for-recurrent-neural-network (accessed on 22 December 2021).

52. Redden, R.; MacInnes, W.J.; Klein, R.M. Inhibition of return: An information processing theory of its natures and significance. Cortex 2021, 135, 30–48.

53. Meer, D.V.R.; Hoekstra, P.J.; Rooij, D.V.; Winkler, A.M.; Ewijk, H.V.; Heslenfeld, D.J.; Oosterlaan, J.; Faraone, S.V.; Franke, B.; Buitelaar, J.K.; et al. Anxiety modulates the relation between attention-deficit/hyperactivity disorder severity and working memory-related brain activity. World J. Biol. Psychiatry 2017, 19, 450–460.

54. The James-Lange Theory of Emotion. Available online: https://www.verywellmind.com/what-is-the-james-lange-theory-of-emotion-2795305 (accessed on 7 January 2022).

55. Fredrickson, B.L. The broaden-and-build theory of positive emotions. Philos. Trans. R. Soc. Lond. B Biol. Sci. 2004, 359, 1367–1378.

56. Li, X.; Li, Y.; Wang, X.; Fan, X.; Tong, W.; Hu, W. The effects of emotional valence on insight problem solving in global-local processing: An ERP study. Int. J. Psychophysiol. 2020, 155, 194–203.

57. COCO. Available online: https://cocodataset.org/#download (accessed on 8 September 2020).

58. Summerfield, C.; Egner, T. Feature-based attention and feature-based expectation. Trends Cogn. Sci. 2016, 20, 401–404.

59. Pasquali, D.; Gonzalez-Billandon, J.; Rea, F.; Sandini, G.; Sciutti, A. Magic iCub: A humanoid robot autonomously catching your lies in a card game. In Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 8–11 March 2021; pp. 293–302.

60. Grgič, R.G.; Calore, E.; de’Sperati, C. Covert enaction at work: Recording the continuous movements of visuospatial attention to visible or imagined targets by means of steady-state visual evoked potentials (SSVEPs). Cortex 2016, 74, 31–52.

61. Barikani, A.; Javadai, M.; Mohammad, A.; Firooze, B.; Shahnazi, M. Satisfaction and motivation of general physicians toward their career. Glob. J. Health Sci. 2013, 5, 166–173.

62. Kumar, S.; Singh, T. Role of executive attention in learning, working memory and performance: A brief overview. Int. J. Res. Soc. Sci. 2020, 10, 251–261.

| Cognitive Architecture | Sensor Type: Unimodal | Sensor Type: Multimodal | Attention Cycle: Top-Down | Attention Cycle: Bottom-Up | Attention Type: Feature-Based | Attention Type: Object-Based | Attention Type: Other | Attention Features: Significant Feature | Attention Features: Predefined Feature | Attention Features: Other | Cognitive Memories |
|---|---|---|---|---|---|---|---|---|---|---|---|
| iCub [20] | No | Yes | No | Yes | Yes | Yes | Spatial | Yes (Ordinary) | No | Object detection | Yes |
| AKIRA [23] | Yes | No | Yes | No | Yes | No | Spatial | No | Yes | No | No |
| Ymir [5] | No | Yes | Yes | Yes | Yes | No | Spatial | Yes (Ordinary) | No | No | Yes, in frames form |
| OPENCOG PRIME [25] | Yes | No | Yes | No | No | Yes | Spatial | Economic attention network (attentional knowledge) | | | Yes |
| CHREST [22] | Yes | No | No | Yes | Yes | | Spatial | Yes (Ordinary) | No | No | Yes, in schematic and chunks form |
| QuBIC_Johi [4,21] | No | Yes | Yes | No | Yes | Yes | No | No | Yes | Visual and auditory | Yes |
| LIDA [24] | Yes | No | Yes | Yes | Yes | No | Temporal | Yes | No | Textual information | Yes |
| Vector LIDA [29] | Yes | No | Yes | Yes | Yes | No | Temporal | Yes | No | Content-based working | Yes |
| Proposed Methodology | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Context-based object recognition | Yes |

| Notation | Description |
|---|---|
| EWS(s) | External world state for s |
| IWS | Internal world state |
| SS(s) | Sensor state for s |
| SRS | Sensory representation state |
| PS | Perception state |
| SCS | Superior colliculus state |
| PAS | Perceptual associative memory state |
| WMS | Working memory state |
| LTMS | Long-term memory state |
| MS | Motivation state |
| ES | Emotion state |
| EES | External effector state |

| From State | To State | Weights | Process | LP |
|---|---|---|---|---|
| EWS(s) | SS(s1) | w0 | Sensing external world state | LP1 |
| IWS | SS(s3) | w1 | Sensing internal world state | LP2 |
| SS(s1), SS(s3) | SRS | w2, w3 | Sensory memory representation | LP3 |
| SRS/WMS | PS | w4/w10 | Perception (feature extraction/goal-driven feature regularization) | LP4 |
| PS/WMS | SCS | w5/w9 | Perception refinement/salient feature assessment | LP5 |
| SCS/WMS | PAS | w6/w8 | Semantic top-down based association | LP6 |
| PAS/LTMS/ES/MS | WMS | w7/w12/w14/w16 | Preparing action (visual recognition and attention generation)/information recalling/emotion-based attention regularization/goal-based decision generation | LP7 |
| WMS | LTMS | w11 | Information storage | LP8 |
| WMS | EES | w17 | Action execution to the external world | LP9 |
| WMS | ES | w13 | Emotion-based attention | LP10 |
| WMS | MS | w15 | Goal generation | LP11 |
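The state transitions tabulated above can be read as a directed graph. The following is a minimal sketch, not the authors' implementation, that encodes each row as an edge and recovers the bottom-up route from the external world to the external effector. The node names `SS1` and `SS3` (for SS(s1) and SS(s3)), the helper functions, and the pairing of slash-separated source states with slash-separated weights in the order listed are all assumptions made for illustration.

```python
from collections import defaultdict, deque

# Each edge: (from_state, to_state, weight_label, process_label).
# Assumption: "A/B -> C" with weights "wx/wy" pairs A with wx and B with wy.
TRANSITIONS = [
    ("EWS", "SS1", "w0", "LP1"),
    ("IWS", "SS3", "w1", "LP2"),
    ("SS1", "SRS", "w2", "LP3"),
    ("SS3", "SRS", "w3", "LP3"),
    ("SRS", "PS", "w4", "LP4"),
    ("WMS", "PS", "w10", "LP4"),
    ("PS", "SCS", "w5", "LP5"),
    ("WMS", "SCS", "w9", "LP5"),
    ("SCS", "PAS", "w6", "LP6"),
    ("WMS", "PAS", "w8", "LP6"),
    ("PAS", "WMS", "w7", "LP7"),
    ("LTMS", "WMS", "w12", "LP7"),
    ("ES", "WMS", "w14", "LP7"),
    ("MS", "WMS", "w16", "LP7"),
    ("WMS", "LTMS", "w11", "LP8"),
    ("WMS", "EES", "w17", "LP9"),
    ("WMS", "ES", "w13", "LP10"),
    ("WMS", "MS", "w15", "LP11"),
]


def build_graph(transitions):
    """Group outgoing edges by source state."""
    graph = defaultdict(list)
    for src, dst, weight, process in transitions:
        graph[src].append((dst, weight, process))
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search; returns the list of states or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for dst, _weight, _process in graph.get(path[-1], []):
            if dst not in visited:
                visited.add(dst)
                queue.append(path + [dst])
    return None


GRAPH = build_graph(TRANSITIONS)

# Bottom-up route from sensing the external world to acting on it.
BOTTOM_UP_PATH = shortest_path(GRAPH, "EWS", "EES")

# Top-down feedback: perceptual stages that working memory writes back to.
TOP_DOWN_TARGETS = sorted(
    dst for dst, _w, _p in GRAPH["WMS"] if dst in {"PS", "SCS", "PAS"}
)

print(" -> ".join(BOTTOM_UP_PATH))
print("Top-down feedback targets:", TOP_DOWN_TARGETS)
```

Under these assumptions, working memory (WMS) is the hub: it receives from PAS, LTMS, ES and MS, and it is the only state with edges back into the perceptual stages, which is where the top-down cycle enters the graph.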

| Computed Feature | Figure 3a | Figure 3b | Figure 3c | Figure 3d | Figure 3e | Figure 3f | Figure 3g |
|---|---|---|---|---|---|---|---|
| Frames per Second | 16.0667 | 16.0667 | 16 | 16 | 16 | 16 | 15.9333 |
| No. of Objects | 9 | 7 | 5 | 3 | 4 | 3 | 14 |
| Motion Level | 0.04341 | 0.09435 | 0.14763 | 0.12900 | 0.05761 | 0.09788 | 0.06630 |
| Red | 0.16079 | 0.18431 | 0.17647 | 0.17647 | 0.18431 | 0.18431 | 0.21961 |
| Green | 0.10196 | 0.11765 | 0.12156 | 0.10196 | 0.10980 | 0.10588 | 0.10980 |
| Blue | 0.04706 | 0.06667 | 0.08235 | 0.05098 | 0.05882 | 0.07451 | 0.10961 |
| ASpam (Activation Signals for PAM) | 1.22234 | 1.32193 | 1.23095 | 1.16507 | 1.33456 | 1.42375 | 2.89953 |
| Wpam (Weight for PAM) | 0.03831 | 0.02949 | 0.0364 | 0.03012 | 0.01656 | 0.01489 | 0.0 |
| Potential Deficit | 0.04683 | 0.03893 | 0.04484 | 0.03509 | 0.0221 | 0.0212 | 0.0 |
| ASStm (Activation Signals for STM) | 0.95317 | 0.96102 | 0.95516 | 0.96491 | 0.9779 | 0.9788 | 1.0 |
| New Potential Deficit | 0.04683 | 0.03893 | 0.04484 | 0.03509 | 0.0221 | 0.0212 | 0.0 |
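The tabulated values appear consistent with ASStm being the complement of the potential deficit, that is, ASStm ≈ 1 − Potential Deficit. This relationship is inferred from the numbers alone and is not stated in this section, so the sketch below should be read as a consistency check rather than as the authors' formula. The function name `stm_activation` is hypothetical.

```python
# Values copied from the "Potential Deficit" and "ASStm" rows (Figure 3a to 3g).
POTENTIAL_DEFICIT = [0.04683, 0.03893, 0.04484, 0.03509, 0.0221, 0.0212, 0.0]
AS_STM_REPORTED = [0.95317, 0.96102, 0.95516, 0.96491, 0.9779, 0.9788, 1.0]


def stm_activation(deficit):
    """Assumed relation: STM activation is the complement of the deficit."""
    return 1.0 - deficit


# Absolute difference between the assumed relation and each reported value.
RESIDUALS = [
    abs(stm_activation(d) - reported)
    for d, reported in zip(POTENTIAL_DEFICIT, AS_STM_REPORTED)
]
MAX_RESIDUAL = max(RESIDUALS)
WORST_COLUMN = RESIDUALS.index(MAX_RESIDUAL)  # 0 = Figure 3a, 1 = Figure 3b, ...

print(f"largest residual: {MAX_RESIDUAL:.5f} (column index {WORST_COLUMN})")
```

Six of the seven columns match the assumed relation to the printed precision. The Figure 3b column differs by about 0.00005, which may be a rounding or transcription difference in the table.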

| Super Class | Class Title | No. of Images | Super Class | Class Title | No. of Images |
|---|---|---|---|---|---|
| Person | Human | 400 | Sports | Sports ball | 200 |
| Vehicle | Car | 100 | Sports | Tennis racket | 100 |
| Vehicle | Bus | 50 | Kitchen | Bottle | 200 |
| Vehicle | Train | 50 | Kitchen | Cup | 200 |
| Animal | Bird | 100 | Kitchen | Knife | 100 |
| Animal | Cat | 200 | Food | Apple | 200 |
| Animal | Dog | 100 | Food | Banana | 200 |
| Animal | Lion | 100 | Food | Sandwich | 100 |
| Accessory | Handbag | 200 | Electronics | Mobile | 200 |
| Accessory | Umbrella | 100 | Furniture | Chair | 100 |
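To make the class balance of the dataset easier to inspect, the table above can be expressed as a nested mapping and summarized per super class. This is only an illustrative sketch: the variable and function names are hypothetical, and the counts are copied directly from the table.

```python
# Image counts per class, grouped by super class (copied from the table).
DATASET = {
    "Person": {"Human": 400},
    "Vehicle": {"Car": 100, "Bus": 50, "Train": 50},
    "Animal": {"Bird": 100, "Cat": 200, "Dog": 100, "Lion": 100},
    "Accessory": {"Handbag": 200, "Umbrella": 100},
    "Sports": {"Sports ball": 200, "Tennis racket": 100},
    "Kitchen": {"Bottle": 200, "Cup": 200, "Knife": 100},
    "Food": {"Apple": 200, "Banana": 200, "Sandwich": 100},
    "Electronics": {"Mobile": 200},
    "Furniture": {"Chair": 100},
}


def super_class_totals(dataset):
    """Sum image counts within each super class."""
    return {name: sum(classes.values()) for name, classes in dataset.items()}


TOTALS = super_class_totals(DATASET)
TOTAL_IMAGES = sum(TOTALS.values())
NUM_CLASSES = sum(len(classes) for classes in DATASET.values())

for name, count in TOTALS.items():
    share = 100.0 * count / TOTAL_IMAGES
    print(f"{name:<12} {count:>5} images ({share:.1f}%)")
print(f"{NUM_CLASSES} classes, {TOTAL_IMAGES} images in total")
```

The summary shows that the 20 classes sum to 3000 images and that the counts are uneven across classes (from 50 to 400 images), which is worth keeping in mind when interpreting per-class recognition results.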

| Experiment No. | Attention Status on the Goal Object | Goal Object | Object(s) Found | Goal Status |
|---|---|---|---|---|
| 1 | High | Null | Lion | - |
| 2 | High | Null | Cat | - |
| 3 | High | Null | Lion, cat | - |
| 4 | High | Cat | Cat | Yes |
| 5 | High | Dog | Dog | Yes |
| 6 | High | Dog | Dog | Yes |
| 7 | High | Car | Cars | Yes |
| 8 | Medium | Cup | Cup | Yes |
| 9 | Medium | Cup | Cup | Yes |

| Experiment No. | Attention Status on the Goal Object | Goal Object | Object(s) Found | Previous Emotion State | Current Emotion State | Goal Status |
|---|---|---|---|---|---|---|
| 1 | High | Null | Lion | Normal | Fear | - |
| 2 | High | Null | Cat | Fear | Happy | - |
| 3 | Medium | Null | Lion, cat | Happy | Fear | - |
| 4 | High | Cat | Cat, apple | Fear | Happy | Yes |
| 5 | High | Dog | Dog, cat | Fear | Fear | Yes |
| 6 | High | Dog | Dog, cat, apple, ball (misperceived) | Fear | Fear | Yes |
| 7 | High | Car | Cars, ball (misperceived) | Fear | Happy | Yes |
| 8 | Medium | Cup | Knives, cups, person | Happy | Fear | No |
| 9 | Medium | Cup | Knife, cup, persons | Fear | Fear | No |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kanwal, A.; Abbas, S.; Ghazal, T.M.; Ditta, A.; Alquhayz, H.; Khan, M.A. Towards Parallel Selective Attention Using Psychophysiological States as the Basis for Functional Cognition. Sensors 2022, 22, 7002. https://doi.org/10.3390/s22187002
