Fast Inline Microscopic Computational Imaging

Abstract

1. Introduction

1.1. State of the Art in 3D, Microscopic Inline Imaging

1.2. Aim of This Paper

2. Microscopic Inline Light-Field Acquisition System

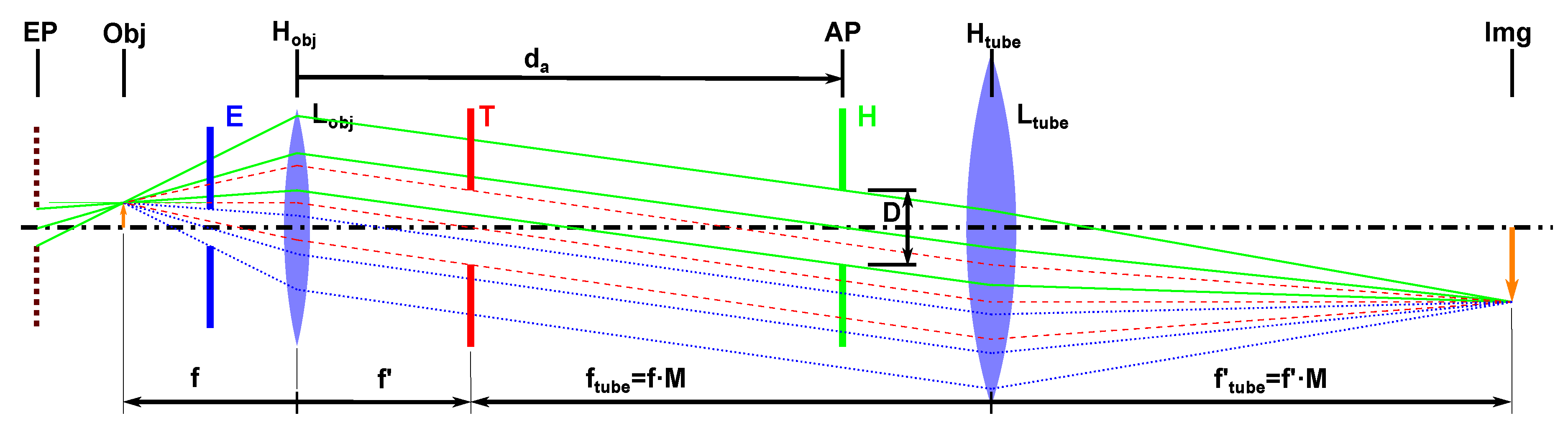

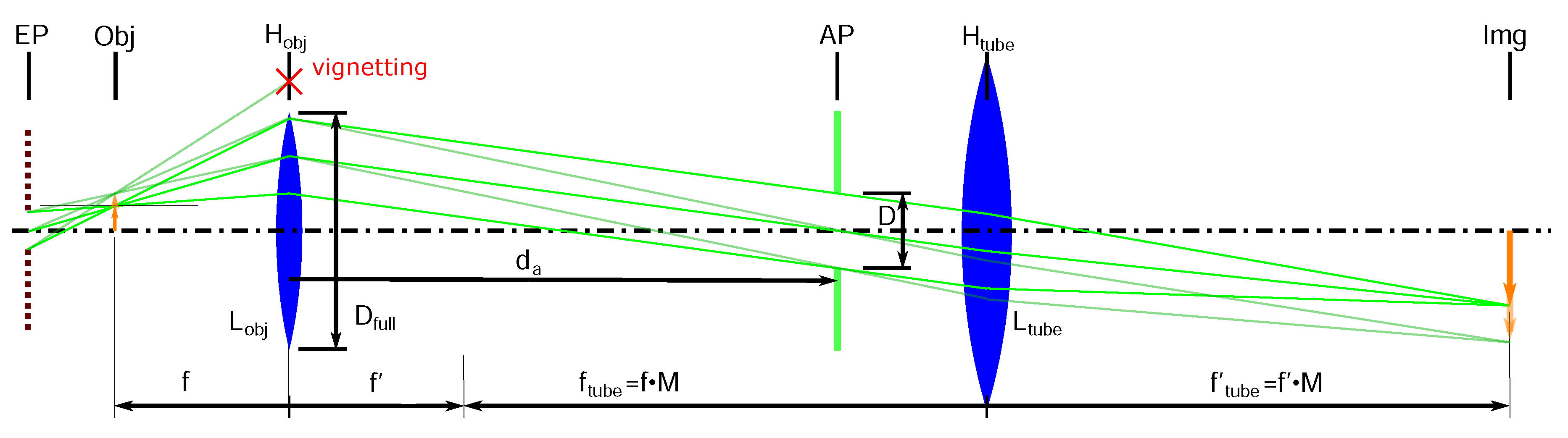

2.1. Optical Configuration for Inline Light-Field Acquisition

- A large aperture position decreases the distance between an object and the virtual location of the entrance pupil. This increases the parallax effect and thus improves the theoretical z-resolution limit (see Equation (3)).

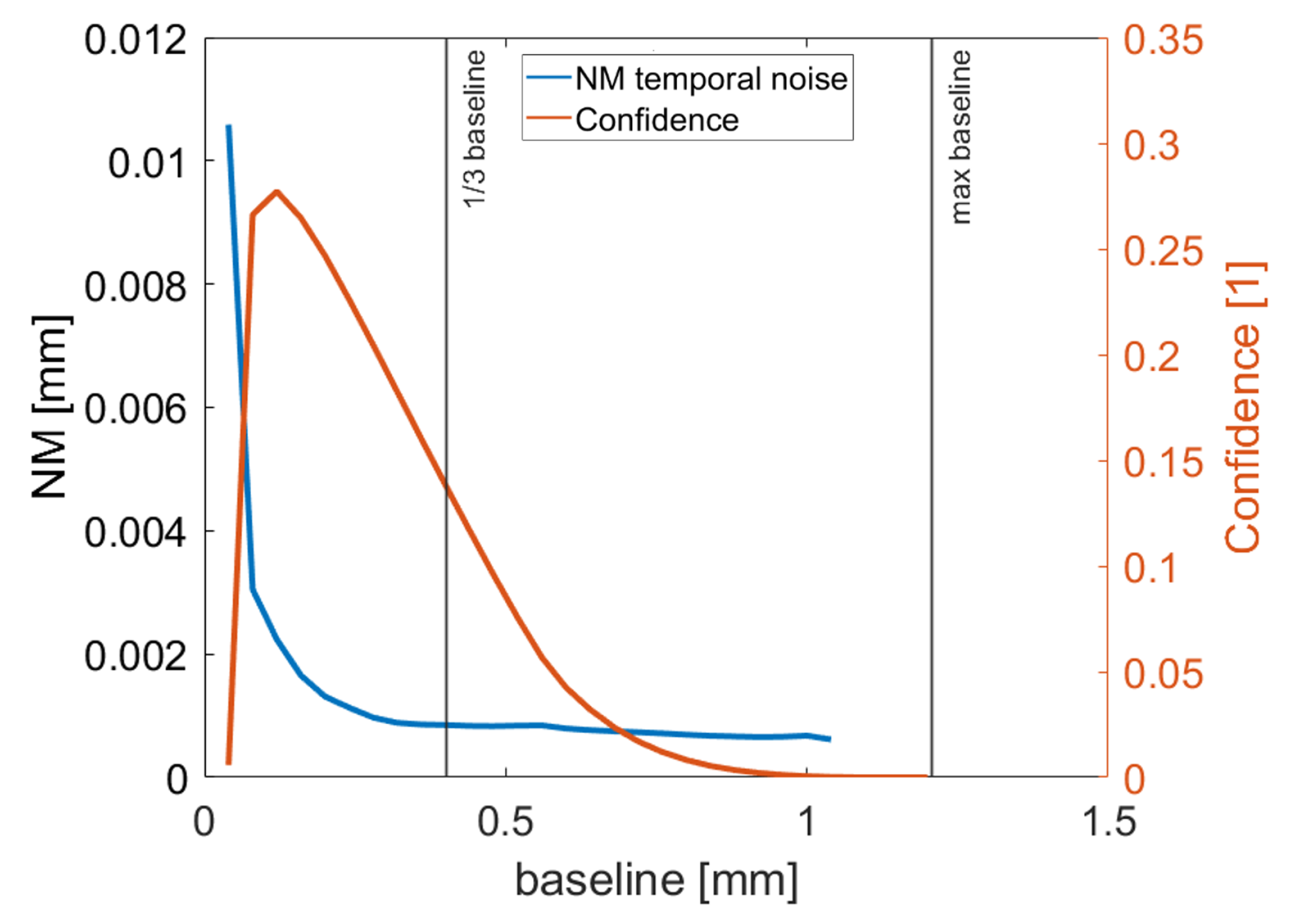

2.2. Algorithm for 3D Reconstruction

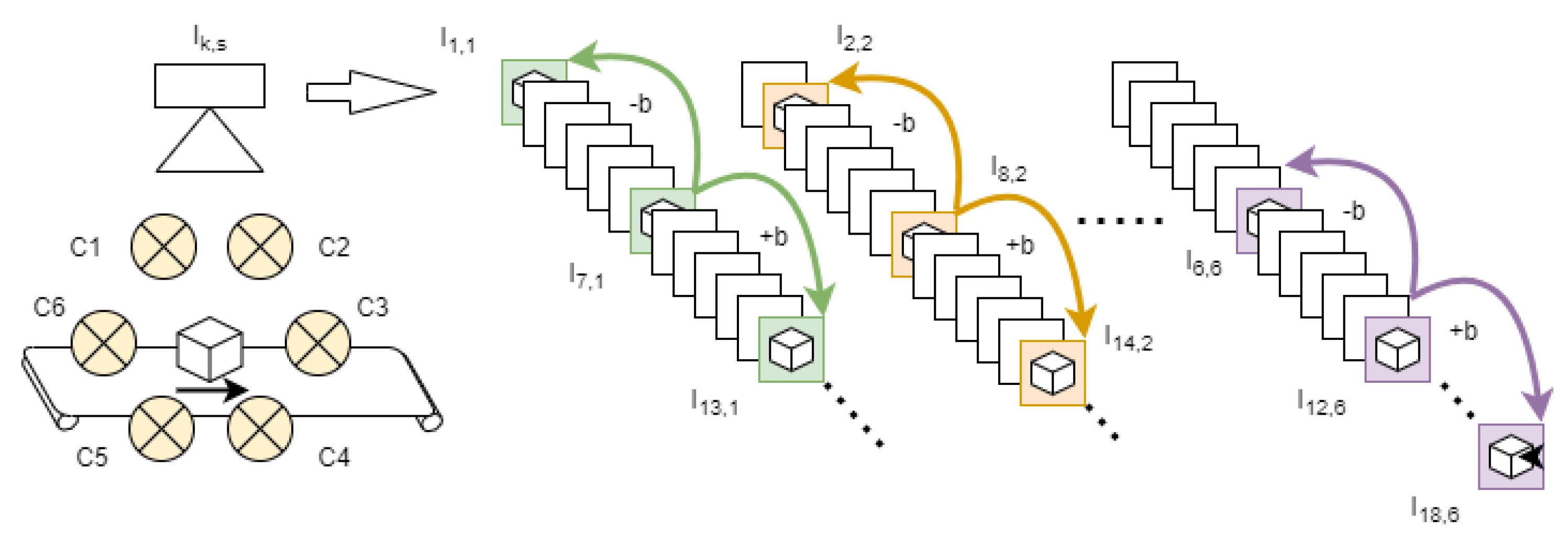

- Feature calculation and multi-view matching: For each pixel in every image, m features are calculated (e.g., m = 1 … 3 for three different kernel sizes). Multi-view matching is done between each image and the image b positions ahead of it (forward projection) as well as the image b positions behind it (backward projection). Each image represents the object from a slightly different observation angle, and b is the baseline distance chosen for the multi-view matching; see Figure 5. This results in m confidence and disparity maps for every image. The appropriate choice of the baseline b is investigated later. A baseline of b = 1 would mean that matching is done with the neighboring images, i.e., a very small baseline but high matching confidence, since high similarity between adjacent observations can be expected. If one increases the baseline b, the depth estimates become more accurate, but the individual accuracy of single features drops due to increased matching ambiguities (also visible as a lower confidence metric) (see also Traxler et al. [1] for definitions of accuracy and precision in this context).

- Fusion of the disparity maps: To fuse the disparity maps, the mean of the m confidence maps and the confidence-weighted mean of the m disparity maps are calculated. In the next step, the deviation of the m disparities is used as an additional consistency check: the confidence value is reduced wherever the disparities disagree. This yields exactly one disparity map and one confidence map per image and baseline b.

- Generation of the 3D model: Because a rectification method [14] is employed for the inline system, different images can easily be integrated into a scene illuminated from s different light directions, and the texture, disparity, and confidence can be calculated for each pixel of the scene. Owing to this calibration, the image stacks stemming from the s different illuminations can be integrated, and thus a disparity/confidence tensor can be generated that is as wide and long as the acquired scene and has “depth” s (one layer per light source). Unlike other methods that construct a depth volume, where “depth” is the number of tested disparity steps, this yields a lean, memory-friendly data structure that actually contains more information, namely depth and confidence from s different illumination and (possibly) view directions, while using less memory.
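The matching and fusion steps above can be illustrated with a minimal sketch on one-dimensional scanlines. It is not the authors' implementation: the sum-of-absolute-differences (SAD) cost, the use of window half-widths as the m "features", the best-versus-second-best confidence measure, and the `1 / (1 + std / tau)` confidence penalty in the hypothetical helper `fuse` are all assumptions chosen for illustration. The views are synthetic copies of one base signal, each shifted by one pixel, so forward matching with baseline b should return a disparity of +b and backward matching -b.

```python
import math


def match_1d(ref, other, half_win, max_disp):
    """SAD block matching of `ref` against `other` along one scanline.

    Returns one disparity and one confidence per pixel. The confidence is a
    hypothetical choice: the relative gap between the best and the
    second-best cost, which lies in [0, 1].
    """
    n = len(ref)

    def clamp(x):
        return min(max(x, 0), n - 1)

    disps, confs = [], []
    for x in range(n):
        costs = []
        for d in range(-max_disp, max_disp + 1):
            cost = sum(abs(ref[clamp(x + k)] - other[clamp(x + k + d)])
                       for k in range(-half_win, half_win + 1))
            costs.append((cost, d))
        costs.sort()
        (best, d_best), (second, _) = costs[0], costs[1]
        disps.append(float(d_best))
        confs.append((second - best) / (second + 1e-9))
    return disps, confs


def fuse(disp_maps, conf_maps, tau=1.0):
    """Fuse m per-pixel disparity/confidence maps into one map of each.

    Disparity: confidence-weighted mean. Confidence: mean of the m
    confidences, reduced by the (population) standard deviation of the m
    disparities; the 1 / (1 + std / tau) penalty is a hypothetical choice.
    """
    m = len(disp_maps)
    fused_d, fused_c = [], []
    for ds, cs in zip(zip(*disp_maps), zip(*conf_maps)):
        w = sum(cs)
        mean_d = sum(ds) / m
        d = sum(c * di for c, di in zip(cs, ds)) / w if w > 0 else mean_d
        std = math.sqrt(sum((di - mean_d) ** 2 for di in ds) / m)
        fused_d.append(d)
        fused_c.append((w / m) / (1.0 + std / tau))
    return fused_d, fused_c


def match_view(views, i, b, half_wins, max_disp):
    """Forward (i vs. i+b) and backward (i vs. i-b) matching of view i.

    Each window half-width in `half_wins` plays the role of one of the m
    features; the m results are fused separately for each direction.
    """
    out = {}
    for name, j in (("forward", i + b), ("backward", i - b)):
        maps = [match_1d(views[i], views[j], hw, max_disp) for hw in half_wins]
        out[name] = fuse([d for d, _ in maps], [c for _, c in maps])
    return out


if __name__ == "__main__":
    # Synthetic scanline views: view j is the base signal shifted by j pixels.
    base = [float((x * 37) % 101) for x in range(60)]
    views = [[base[min(max(x - j, 0), 59)] for x in range(60)] for j in range(9)]
    res = match_view(views, i=4, b=2, half_wins=[1, 2, 3], max_disp=4)
    print(res["forward"][0][30], res["backward"][0][30])
```

In this toy setup the disparity grows linearly with b, so a larger baseline spreads the same depth change over more pixels; with real images the price is more ambiguous matches and thus lower confidence values, which is the trade-off the text describes.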

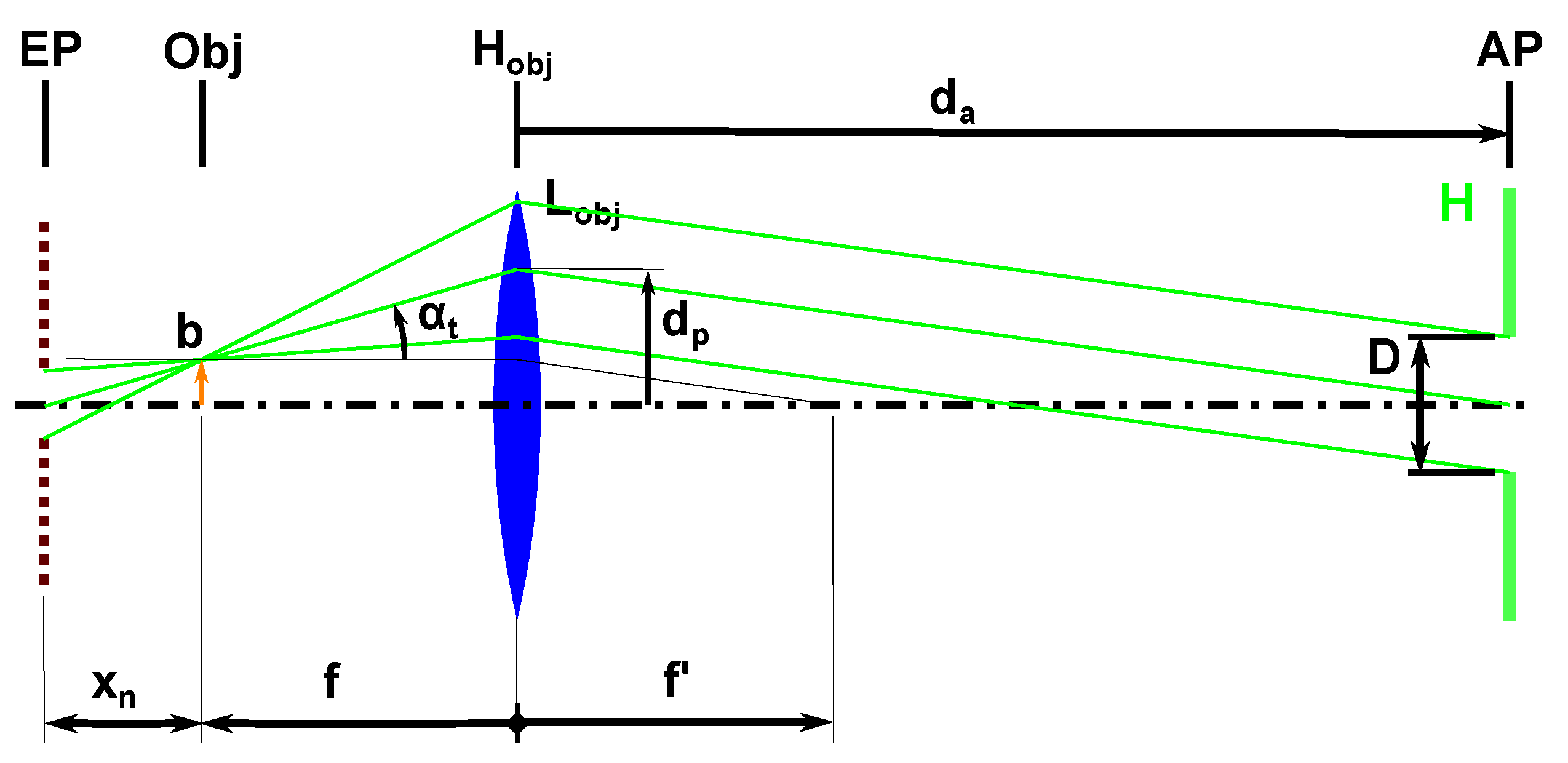
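To make the memory argument for the disparity/confidence tensor concrete, the following NumPy sketch stacks s per-illumination disparity and confidence maps into two H × W × s arrays and compares their size with a classical H × W × D cost volume. The float32 storage, the image size, s = 4, and D = 128 tested disparity steps are illustrative assumptions, the map contents are random placeholders, and the helper names are hypothetical.

```python
import numpy as np


def build_tensor(disp_maps, conf_maps):
    """Stack s per-illumination maps (each H x W) into two H x W x s arrays."""
    disp = np.stack(disp_maps, axis=-1)
    conf = np.stack(conf_maps, axis=-1)
    return disp, conf


def cost_volume_bytes(h, w, n_disp, itemsize=4):
    """Size of a classical H x W x D volume, D = number of tested disparities."""
    return h * w * n_disp * itemsize


H, W, S = 480, 640, 4
rng = np.random.default_rng(0)
# Placeholder maps; in practice these come from the fusion step per light source.
disp_maps = [rng.normal(size=(H, W)).astype(np.float32) for _ in range(S)]
conf_maps = [rng.uniform(size=(H, W)).astype(np.float32) for _ in range(S)]

disp, conf = build_tensor(disp_maps, conf_maps)
tensor_bytes = disp.nbytes + conf.nbytes
volume_bytes = cost_volume_bytes(H, W, n_disp=128)
print(disp.shape, tensor_bytes, volume_bytes)  # (480, 640, 4) 9830400 157286400
```

Under these assumptions the two tensors need 2s/D = 1/16 of the cost volume's memory while still holding one disparity and one confidence value per pixel and illumination.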

- Stereo baseline: In classical stereo imaging, the achievable depth resolution is determined by a disparity shift of one pixel and the stereo baseline b used for imaging (see Equation (3)). In the hypercentric case, the depth resolution is defined by the focal length f and by the distance D between the projection center of the chosen aperture and the maximal FOV in the scanning direction (Equation (5)).

- Oversampling: Our sensor acquires not only the frames at the maximal angle of perspective but also n frames from the perspectives in between. Based on this oversampling, we assume that the additional depth estimates, which are calculated and summed up, reduce the depth-measurement noise, and thus improve the accuracy, by a factor that depends on n. As described above, matching with the chosen baseline b is performed in both the forward and the backward direction, and the disparity is calculated for each. Hence, the achievable depth resolution depends on the baseline b used and on the oversampling, i.e., on the number n of images acquired in between. The final expression for the depth resolution is then given by Equation (7), in which the object distance is measured to the entrance pupil (see Figure 4).
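For orientation, the classical pinhole-stereo relation z = f·b/d gives a depth step of Δz ≈ z²·Δd/(f·b) for a disparity step Δd on the sensor. The sketch below evaluates that textbook relation and then applies the 1/√n noise reduction that would hold if n independent, equally noisy depth estimates were averaged, together with a small Monte Carlo check of that factor. These are assumptions for illustration only: the code reproduces neither the hypercentric Equation (5) nor the final Equation (7), b is treated here as a physical baseline length rather than the image-index baseline of the matching step, and the example values (z = 100 mm, f = 50 mm, b = 10 mm, 5 µm pixels) are hypothetical.

```python
import math
import random
import statistics


def stereo_depth_resolution(z, f, b, pixel_pitch, disp_step=1.0):
    """Textbook pinhole-stereo depth step: dz = z**2 * dd / (f * b).

    All lengths share one unit (here mm); dd = disp_step * pixel_pitch is the
    disparity step on the sensor.
    """
    return z * z * disp_step * pixel_pitch / (f * b)


def oversampled_resolution(dz, n):
    """Assumes n independent, equally noisy estimates are averaged."""
    return dz / math.sqrt(n)


def noise_reduction_factor(n, sigma=1.0, trials=20000, seed=0):
    """Monte Carlo check: std of single estimates / std of n-sample means."""
    rng = random.Random(seed)
    single = [rng.gauss(0.0, sigma) for _ in range(trials)]
    averaged = [statistics.fmean([rng.gauss(0.0, sigma) for _ in range(n)])
                for _ in range(trials)]
    return statistics.pstdev(single) / statistics.pstdev(averaged)


dz = stereo_depth_resolution(z=100.0, f=50.0, b=10.0, pixel_pitch=0.005)
print(f"single estimate: {dz:.3f} mm")  # 0.100 mm
print(f"n = 4 averaged:  {oversampled_resolution(dz, 4):.3f} mm")  # 0.050 mm
print(f"simulated factor for n = 4: {noise_reduction_factor(4):.2f}")
```

The simulated factor comes out close to √4 = 2 because the synthetic samples are independent; if the estimates from neighboring views share correlated errors, the actual gain will differ from √n.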

3. Experimental Results

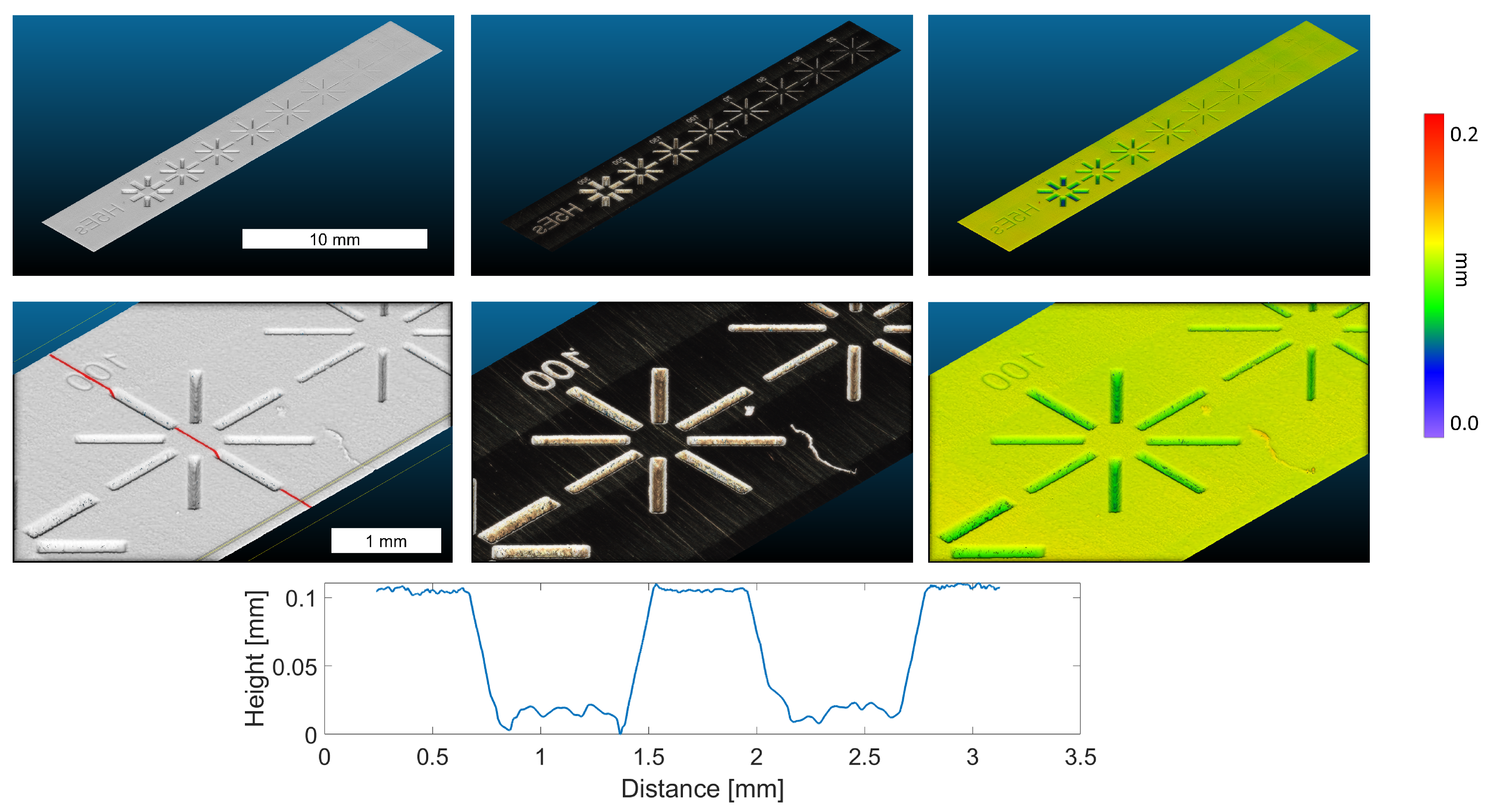

4. Use Cases

4.1. Laser Cut

4.2. Klimt Banknote

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Traxler, L.; Ginner, L.; Breuss, S.; Blaschitz, B. Experimental Comparison of Optical Inline 3D Measurement and Inspection Systems. IEEE Access 2021, 9, 53952–53963.

- Leach, R.; Sherlock, B. Applications of super-resolution imaging in the field of surface topography measurement. Surf. Topogr. Metrol. Prop. 2014, 2, 023001.

- Dorsch, R.G.; Häusler, G.; Herrmann, J.M. Laser triangulation: Fundamental uncertainty in distance measurement. Appl. Opt. 1994, 33, 1306–1314.

- Haessig, G.; Berthelon, X.; Ieng, S.-H.; Benosman, R. A Spiking Neural Network Model of Depth from Defocus for Event-based Neuromorphic Vision. Sci. Rep. 2019, 9, 3744.

- Available online: https://www.heliotis.com/sensoren/ (accessed on 1 June 2022).

- Ito, S.; Poik, M.; Csencsics, E.; Schlarp, J.; Schitter, G. High-speed scanning chromatic confocal sensor for 3-D imaging with modeling-free learning control. Appl. Opt. 2020, 59, 9234–9242.

- Seppä, J.; Niemelä, K.; Lassila, A. Metrological characterization methods for confocal chromatic line sensors and optical topography sensors. Meas. Sci. Technol. 2018, 29, 054008.

- Niemelä, K. Chromatic Line Confocal Technology in High-Speed 3D Surface-Imaging Applications. In Proceedings of the Photonic Instrumentation Engineering VI, San Francisco, CA, USA, 22 April 2019; Volume 10925.

- LMI Technologies. FocalSpec 3D Line Confocal Sensors and Line Confocal Imaging Technology; Product Brochure; LMI Technologies: Burnaby, BC, Canada, 2020.

- Traxler, L.; Stolc, S. 3D microscopic imaging using Structure-from-Motion. In IS&T International Symposium on Electronic Imaging: 3D Measurement and Data Processing; Society for Imaging Science and Technology: Springfield, VA, USA, 2019; pp. 3-1–3-6.

- Blaschitz, B.; Breuss, S.; Traxler, L.; Ginner, L.; Stolc, S. High-speed Inline Computational Imaging for Area Scan Cameras. In IS&T International Symposium on Electronic Imaging: Intelligent Robotics and Industrial Applications Using Computer Vision; Society for Imaging Science and Technology: Springfield, VA, USA, 2021; pp. 301-1–301-6.

- Wiora, G.; Bauer, W.; Seewig, J.; Krüger-Sehm, R. Definition of a Comparable Data Sheet for Optical Surface Measurement Devices. Initiative Fair Datasheet, version 1.2; Messe Stuttgart: Stuttgart, Germany, 2016.

- Haider, A.; Traxler, L.; Brosch, N.; Kapeller, C. Modular ring light for photometric analysis of micro-structured surfaces. In Forum Bildverarbeitung; KIT Scientific Publishing: Karlsruhe, Germany, 2020; pp. 39–50.

- Blaschitz, B.; Štolc, S.; Antensteiner, D. Geometric calibration and image rectification of a multi-line scan camera for accurate 3D reconstruction. Electron. Imaging 2018, 9, 240-1–240-6.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ginner, L.; Breuss, S.; Traxler, L. Fast Inline Microscopic Computational Imaging. Sensors 2022, 22, 7038. https://doi.org/10.3390/s22187038

Ginner L, Breuss S, Traxler L. Fast Inline Microscopic Computational Imaging. Sensors. 2022; 22(18):7038. https://doi.org/10.3390/s22187038

Chicago/Turabian Style: Ginner, Laurin, Simon Breuss, and Lukas Traxler. 2022. "Fast Inline Microscopic Computational Imaging" Sensors 22, no. 18: 7038. https://doi.org/10.3390/s22187038