Automatic Meter Reading from UAV Inspection Photos in the Substation by Combining YOLOv5s and DeeplabV3+

Abstract

1. Introduction

- Combining UAVs with deep-learning vision technology addresses the low efficiency and high cost of traditional manual or robot inspection;

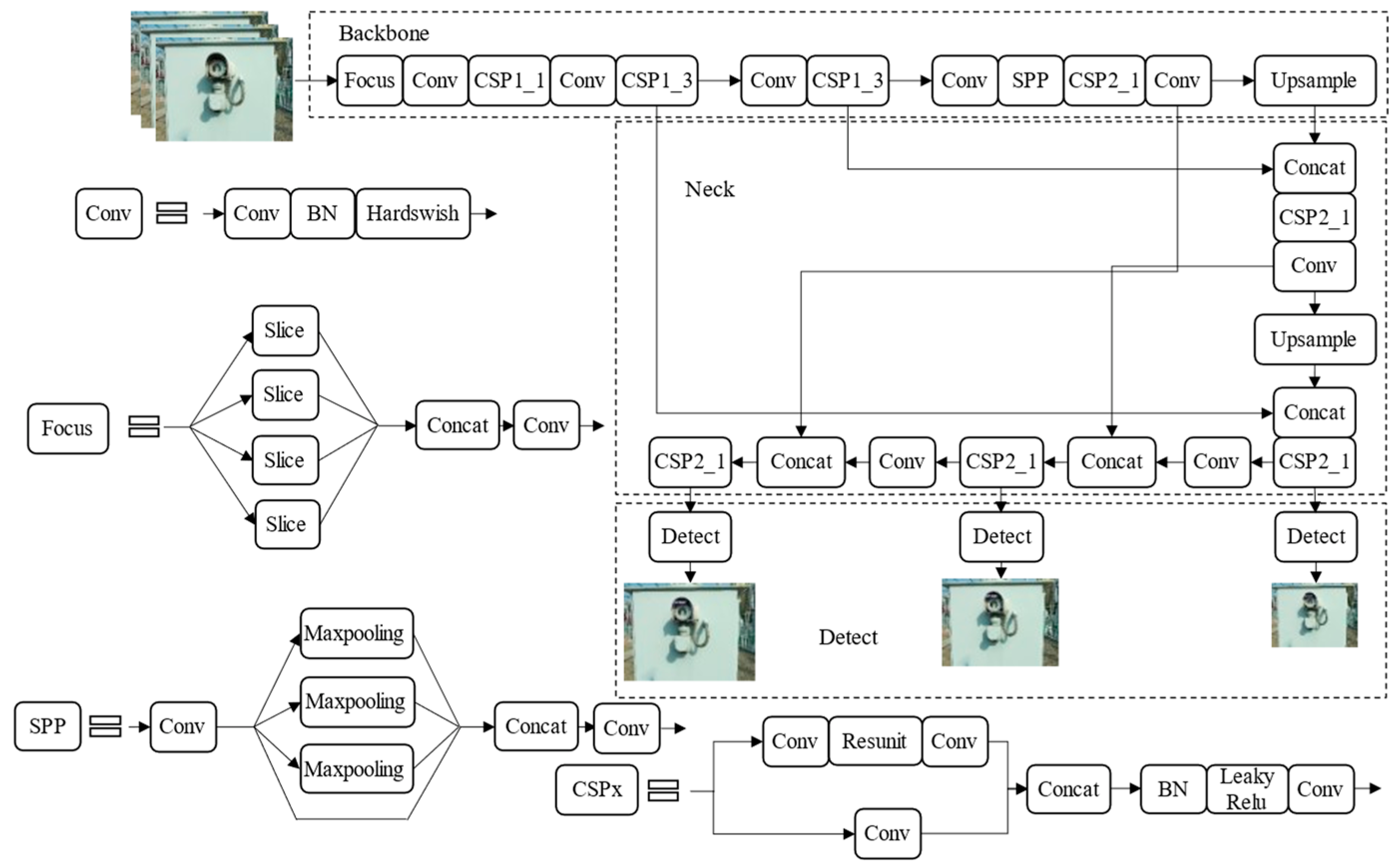

- The YOLOv5s object detection algorithm is introduced to improve the accuracy of meter dial detection and classification;

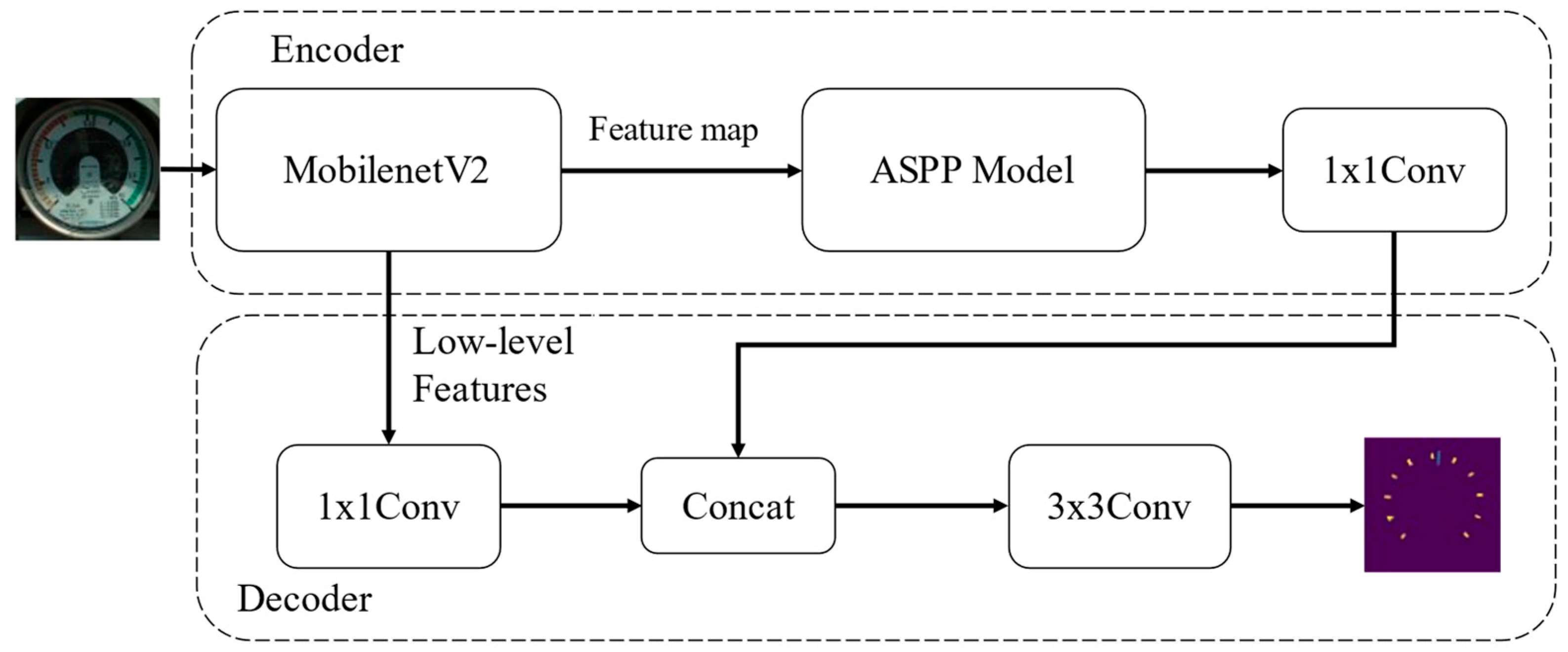

- Deeplabv3+ is used for image segmentation, which improves the detection accuracy of the pointer and the scale lines;

- Based on the image segmentation results, a concentric circle sampling method is proposed to flatten the dial so that the dial image can be read.
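
The concentric circle sampling idea in the last contribution can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the dial center and the inner and outer radii are already known, it uses nearest-neighbor sampling, and the function name `flatten_dial` and its parameters are hypothetical. Each output row holds the samples of one circle, so a radial pointer or tick mark becomes a roughly vertical stripe.

```python
import numpy as np

def flatten_dial(mask, center, r_inner, r_outer, n_angles=360):
    """Unroll the annulus r_inner <= r < r_outer of `mask` into a rectangle.

    Row i samples the circle of radius r_inner + i; column j is the angle
    2*pi*j/n_angles, measured from the +x axis toward +y (image coordinates).
    `center` is (row, col). All names here are illustrative assumptions.
    """
    cy, cx = center
    radii = np.arange(r_inner, r_outer)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    rr, tt = np.meshgrid(radii, thetas, indexing="ij")

    # Nearest-neighbor sample positions on each concentric circle.
    ys = np.rint(cy + rr * np.sin(tt)).astype(int)
    xs = np.rint(cx + rr * np.cos(tt)).astype(int)

    # Samples falling outside the image are left as zero.
    h, w = mask.shape
    inside = (ys >= 0) & (ys < h) & (xs >= 0) & (xs < w)
    flat = np.zeros(rr.shape, dtype=mask.dtype)
    flat[inside] = mask[ys[inside], xs[inside]]
    return flat
```

Applied to a segmentation mask, the column with the most pointer pixels gives the pointer angle, and the tick marks appear as separate stripes along the same axis.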

2. Methods

2.1. Meter Reading Recognition Based on Object Detection and Image Segmentation

2.2. The YOLO Model

2.3. Deeplabv3+ Segmentation of Tick Marks and Pointers

2.4. Post-Processing Methods

2.4.1. Erosion

2.4.2. The Flattening Method and Meter Readings

2.5. Evaluation Indicators

3. Experiment and Results

3.1. Experimental Conditions

3.1.1. Data Acquisition and Transmission

3.1.2. Experiment Platform

3.2. Experimental Results

3.2.1. YOLOv5s Detection Results

3.2.2. Deeplabv3+ Image Segmentation Results

3.2.3. Flattening Results

3.3. Comparative Requirements

- Compared with the Faster R-CNN algorithm, the YOLOv5 algorithm used in this paper was significantly faster at detection;

- The Deeplabv3+ image segmentation algorithm is mainly used in industrial applications, whereas U-Net is mainly used for medical image segmentation, so Deeplabv3+ is the better fit for meter reading in industrial applications;

- The post-processing methods in this paper, such as concentric circle sampling, were more robust than those used for industrial applications in [22].

3.4. Meter Reading Interface Display

3.5. Comparing Readings

4. Conclusions

- UAVs flew designated routes at different times and under different weather conditions to collect 1632 images, covering five different types of meters, for training the object detection model;

- The backbone network of the Deeplabv3+ semantic segmentation network was improved, so that single-image inference ran at twice the speed of the original model and the model weight file was smaller;

- Erosion and concentric circle sampling were used to flatten the images and read the meter panel.

As a result, the meter area is detected quickly and the meter is read accurately. In this paper, the inspection of substation instruments was combined with deep learning visual algorithms and mobile flying equipment. We hope that this work can provide some help for intelligent substation inspection.
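
The erosion and reading steps named above can be sketched as follows. This is a hedged illustration under stated assumptions, not the paper's code: it assumes a 3x3 square structuring element, a linear scale whose first and last tick marks correspond to known minimum and maximum values, and 1-D column profiles taken from an already flattened dial. The names `erode`, `column_centers` and `read_meter` are hypothetical.

```python
import numpy as np

def erode(binary, iterations=1):
    """Binary erosion with a 3x3 square structuring element (numpy only)."""
    out = np.asarray(binary).astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, constant_values=False)
        acc = np.ones_like(out)
        # A pixel survives only if all nine neighbors (itself included) are set.
        for dy in range(3):
            for dx in range(3):
                acc &= padded[dy:dy + h, dx:dx + w]
        out = acc
    return out

def column_centers(profile):
    """Centers of consecutive runs of non-zero entries in a 1-D profile."""
    cols = np.flatnonzero(profile)
    if cols.size == 0:
        return np.array([])
    runs = np.split(cols, np.flatnonzero(np.diff(cols) > 1) + 1)
    return np.array([run.mean() for run in runs])

def read_meter(tick_profile, pointer_profile, value_min, value_max):
    """Linear interpolation of the pointer between the first and last tick.

    Assumes a linear scale; value_min and value_max are the values printed
    at the first and last tick mark (an assumption of this sketch).
    """
    ticks = column_centers(tick_profile)
    pointer_cols = np.flatnonzero(pointer_profile)
    if ticks.size < 2 or pointer_cols.size == 0:
        raise ValueError("need at least two ticks and one pointer column")
    frac = (pointer_cols.mean() - ticks[0]) / (ticks[-1] - ticks[0])
    return value_min + frac * (value_max - value_min)
```

In this sketch, erosion thins the segmented tick marks and pointer so that neighboring ticks separate into distinct runs before their centers are measured.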

5. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, C.; Su, Y.; Yuan, R.; Chu, D.; Zhu, J. Light-weight spliced convolution network-based automatic water meter reading in smart city. IEEE Access 2019, 7, 174359–174367.

- Wu, X.; Shi, X.; Jiang, Y.C.; Gong, J. A high-precision automatic pointer meter reading system in low-light environment. Sensors 2021, 21, 4891.

- Hong, Q.Q.; Ding, Y.W.; Lin, J.P.; Wang, M.H.; Wei, Q.Y.; Wang, X.W.; Zeng, M. Image-Based Automatic Watermeter Reading under Challenging Environments. Sensors 2021, 21, 434.

- Li, Z.; Zhou, Y.S.; Sheng, Q.H.; Chen, K.J.; Huang, J. A high-robust automatic reading algorithm of pointer meters based on text detection. Sensors 2020, 20, 5946.

- Fang, H.; Ming, Z.Q.; Zhou, Y.F.; Li, H.Y.; Li, J. Meter recognition algorithm for equipment inspection robot. Autom. Instrum. 2013, 28, 10–14.

- Shi, J.; Zhang, D.; He, J.; Kang, C.; Yao, J.; Ma, X. Design of remote meter reading method for pointer type chemical instruments. Process Autom. Instrum. 2014, 35, 77–79.

- Huang, Y.L.; Ye, Y.T.; Chen, Z.L.; Qiao, N. New method of fast Hough transform for circle detection. J. Electron. Meas. Instrum. 2010, 24, 837–841.

- Zhou, F.; Yang, C.; Wang, C.G.; Wang, B.; Liu, J. Circle detection and its number identification in complex condition based on random Hough transform. Chin. J. Sci. Instrum. 2013, 34, 622–628.

- Zhang, W.J. Pointer Meter Recognition via Image Registration and Visual Saliency Detection. Ph.D. Thesis, Chongqing University, Chongqing, China, 2016.

- Gao, J.W. Intelligent Recognition Method of Meter Reading for Substation Inspection Robot. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2018.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Tuytelaars, T.; Gool, L.V. SURF: Speeded up robust features. In Proceedings of the Ninth European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Nanni, L.; Lumini, A.; Loreggia, A.; Formaggio, A.; Cuza, D. An Empirical Study on Ensemble of Segmentation Approaches. Signals 2022, 3, 22.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv 2014.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Wang, W.H.; Xie, E.; Li, X.; Fan, D.P.; Song, K.T.; Liang, D.; Lu, T.; Luo, P.; Shao, L. PVT v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

- Xing, H.Q.; Du, Z.Q.; Su, B. Detection and recognition method for pointer-type meter in transformer substation. Chin. J. Sci. Instrum. 2017, 38, 2813–2821.

- Wan, J.L.; Wang, H.F.; Guan, M.Y.; Shen, J.L.; Wu, G.Q.; Gao, A.; Yang, B. An automatic identification for reading of substation pointer-type meters using faster R-CNN and U-Net. Power Syst. Technol. 2020, 44, 3097–3105.

- Ni, T.; Miao, H.F.; Wang, L.L.; Ni, S.; Huang, L.T. Multi-meter intelligent detection and recognition method under complex background. In Proceedings of the 2020 39th Chinese Control Conference (CCC), Shenyang, China, 27–30 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 7135–7141.

- Huang, H.Q.; Huang, T.B.; Li, Z.; Lyu, S.L.; Hong, T. Design of Citrus Fruit Detection System Based on Mobile Platform and Edge Computer Device. Sensors 2021, 22, 59.

- Chen, L.C.; Zhu, Y.K.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Lv, Y.W.; Ai, Z.Q.; Chen, M.F.; Gong, X.R.; Wang, Y.X.; Lu, Z.H. High-Resolution Drone Detection Based on Background Difference and SAG-YOLOv5s. Sensors 2022, 22, 5825.

- Lyu, S.L.; Li, R.Y.; Zhao, Y.W.; Li, Z.; Fan, R.J.; Liu, S.Y. Green Citrus Detection and Counting in Orchards Based on YOLOv5-CS and AI Edge System. Sensors 2022, 22, 576.

- YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 10 June 2020).

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1571–1580.

- He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1920.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE Computer Society, Honolulu, HI, USA, 21–26 July 2017.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Neubeck, A.; Gool, L. Efficient Non-Maximum Suppression. In Proceedings of the International Conference on Pattern Recognition, IEEE Computer Society, Hong Kong, China, 20–24 August 2006.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

| Dataset | bj | bjA | bjB | bjH | bjL |
|---|---|---|---|---|---|
| Training set | 75 | 329 | 301 | 126 | 146 |
| Test set | 58 | 213 | 179 | 106 | 97 |

| Model | Speed/ms | FPS | Params | FLOPS |
|---|---|---|---|---|
| YOLOv5s | 22.2 | 45.0 | 7.5M | 13.2B |
| YOLOv5m | 27.0 | 37.0 | 21.8M | 39.4B |
| YOLOv5l | 29.2 | 34.2 | 47.8M | 88.1B |
| YOLOv5x | 30.8 | 32.5 | 89.0M | 166.4B |
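
The FPS column in the table above is consistent with taking the reciprocal of the per-image latency. The short check below reproduces it; the dictionary name `latency_ms` is introduced here only for illustration, and the relation FPS = 1000 / latency is an assumption that happens to match the reported numbers.

```python
# Per-image inference time in milliseconds, copied from the table above.
latency_ms = {"YOLOv5s": 22.2, "YOLOv5m": 27.0, "YOLOv5l": 29.2, "YOLOv5x": 30.8}

# Frames per second, assuming one image is processed at a time.
fps = {name: round(1000.0 / ms, 1) for name, ms in latency_ms.items()}

for name, value in fps.items():
    print(f"{name}: {value} FPS")
```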

| Model | bj/% | bjA/% | bjB/% | bjH/% | bjL/% | mAP50/% | Speed/ms | Model Size/MB |
|---|---|---|---|---|---|---|---|---|
| YOLOv3 | 99.536 | 99.623 | 99.592 | 99.575 | 99.567 | 99.579 | 27.4 | 123.4 |
| YOLOv4 | 99.538 | 99.610 | 99.571 | 99.561 | 99.566 | 99.569 | 34.0 | 256.3 |
| YOLOv5x | 99.540 | 99.626 | 99.593 | 99.576 | 99.571 | 99.581 | 30.8 | 177.5 |
| YOLOv5l | 99.539 | 99.613 | 99.584 | 99.575 | 99.570 | 99.576 | 29.2 | 90.8 |
| YOLOv5m | 99.542 | 99.630 | 99.600 | 99.579 | 99.573 | 99.585 | 27.0 | 41.3 |
| YOLOv5s | 99.542 | 99.628 | 99.599 | 99.579 | 99.571 | 99.584 | 22.2 | 14.1 |
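
The mAP50 column above appears to be the unweighted mean of the five per-class values. The check below recomputes it for three of the rows; treating mAP50 as a plain average over the classes bj, bjA, bjB, bjH and bjL is an assumption of this sketch, and the variable names are illustrative.

```python
# Per-class values (bj, bjA, bjB, bjH, bjL) in percent, copied from the table.
per_class = {
    "YOLOv3": [99.536, 99.623, 99.592, 99.575, 99.567],
    "YOLOv5m": [99.542, 99.630, 99.600, 99.579, 99.573],
    "YOLOv5s": [99.542, 99.628, 99.599, 99.579, 99.571],
}

# Unweighted mean over the five meter classes, rounded like the table.
map50 = {model: round(sum(vals) / len(vals), 3) for model, vals in per_class.items()}

for model, value in map50.items():
    print(f"{model}: mAP50 = {value}%")
```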

| Model | Backbone | bj mIoU/% | bjA mIoU/% | bjB mIoU/% | bjH mIoU/% | bjL mIoU/% | Speed/ms | Model Size/MB |
|---|---|---|---|---|---|---|---|---|
| Deeplabv1 | VGG16 | 61.69 | 52.35 | 44.54 | 67.01 | 37.33 | 15.8 | 82.0 |
| Deeplabv2 | Resnet101 | 57.74 | 47.12 | 33.55 | 77.09 | 45.06 | 56.0 | 176.9 |
| Deeplabv3+ | Xception65 | 85.62 | 76.95 | 82.14 | 82.93 | 80.03 | 66.8 | 165.1 |
| Deeplabv3+ | MobileNetV2 | 78.92 | 76.15 | 79.12 | 81.17 | 75.73 | 35.1 | 11.1 |
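
For readers unfamiliar with the mIoU metric reported above, the sketch below computes it from a confusion matrix in the standard way. It is not taken from the paper: the class layout (for example background, pointer, scale) and the choice to skip classes absent from both prediction and ground truth are assumptions, and `mean_iou` is a hypothetical name.

```python
import numpy as np

def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over classes, from integer label maps."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()

    # Confusion matrix: rows are ground-truth classes, columns are predictions.
    cm = np.bincount(target * num_classes + pred,
                     minlength=num_classes ** 2).reshape(num_classes, num_classes)

    intersection = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - intersection

    # Ignore classes that appear in neither map to avoid division by zero.
    valid = union > 0
    return float((intersection[valid] / union[valid]).mean())
```

For example, with ground truth `[0, 0, 1, 1, 2, 2]` and prediction `[0, 1, 1, 1, 2, 0]`, the per-class IoUs are 1/3, 2/3 and 1/2.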

| Meter | Manually Measured Values | Recognized Values | Error |
|---|---|---|---|
| bj | 3.8500 | 3.8288 | 0.0212 |
| bjA | 0.4385 | 0.4383 | 0.0002 |
| bjB | 0.3900 | 0.3716 | 0.0184 |
| bjH | 0.0000 | 0.0330 | 0.0330 |
| bjL | 0.4055 | 0.4072 | 0.0017 |
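
The Error column above matches the absolute difference between the manually measured and recognized values. The check below recomputes it; the variable names are illustrative, and reading Error as an absolute difference is an inference from the numbers rather than a definition quoted from the paper.

```python
# (manually measured, recognized) values per meter type, copied from the table.
readings = {
    "bj": (3.8500, 3.8288),
    "bjA": (0.4385, 0.4383),
    "bjB": (0.3900, 0.3716),
    "bjH": (0.0000, 0.0330),
    "bjL": (0.4055, 0.4072),
}

# Absolute reading error, rounded to the four decimals used in the table.
errors = {meter: round(abs(manual - recognized), 4)
          for meter, (manual, recognized) in readings.items()}

# Meter type with the largest absolute error.
worst = max(errors, key=errors.get)
print(errors, "largest error:", worst)
```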

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Deng, G.; Huang, T.; Lin, B.; Liu, H.; Yang, R.; Jing, W. Automatic Meter Reading from UAV Inspection Photos in the Substation by Combining YOLOv5s and DeeplabV3+. Sensors 2022, 22, 7090. https://doi.org/10.3390/s22187090