A Review on Locomotion Mode Recognition and Prediction When Using Active Orthoses and Exoskeletons

Abstract

1. Introduction

2. Methods

2.1. Search Strategy

2.2. Eligibility Criteria

2.3. Data Extraction

2.4. Quality Assessment

3. Results

3.1. Studies Selection

3.2. Quality of the Included Studies

3.3. Information Extracted from the Included Studies

3.3.1. Locomotion Modes and Speed

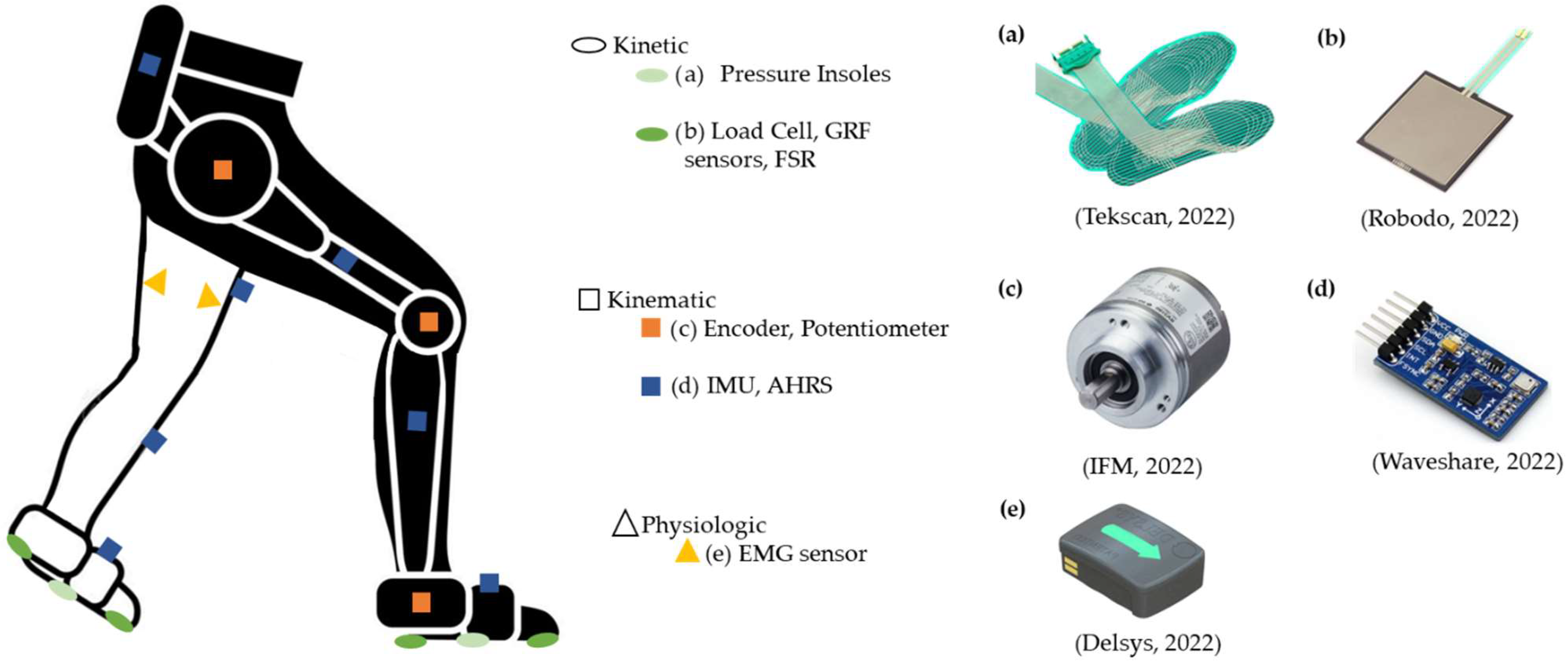

3.3.2. Sensor Systems, Features, and Analysis Windows

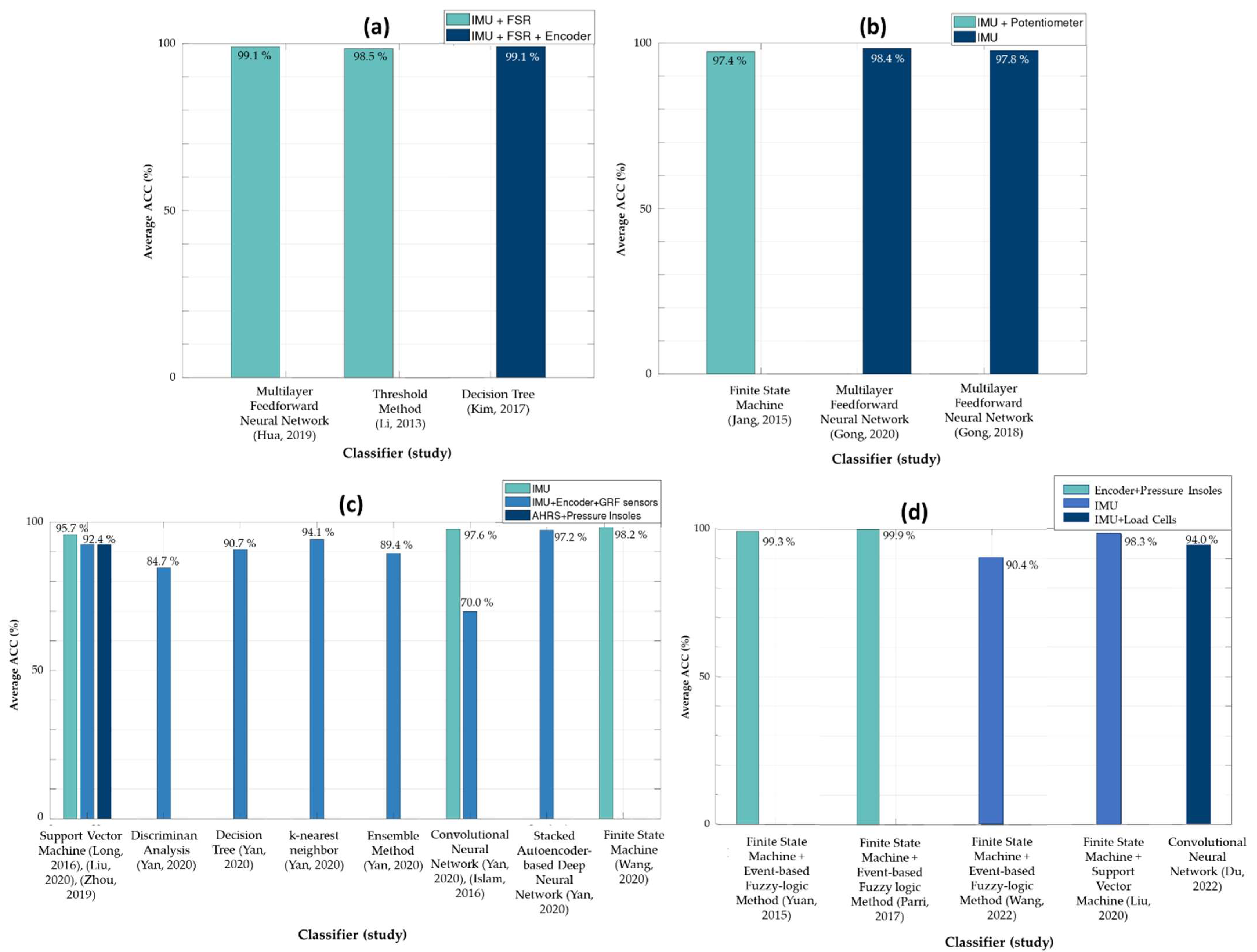

3.3.3. Classifiers

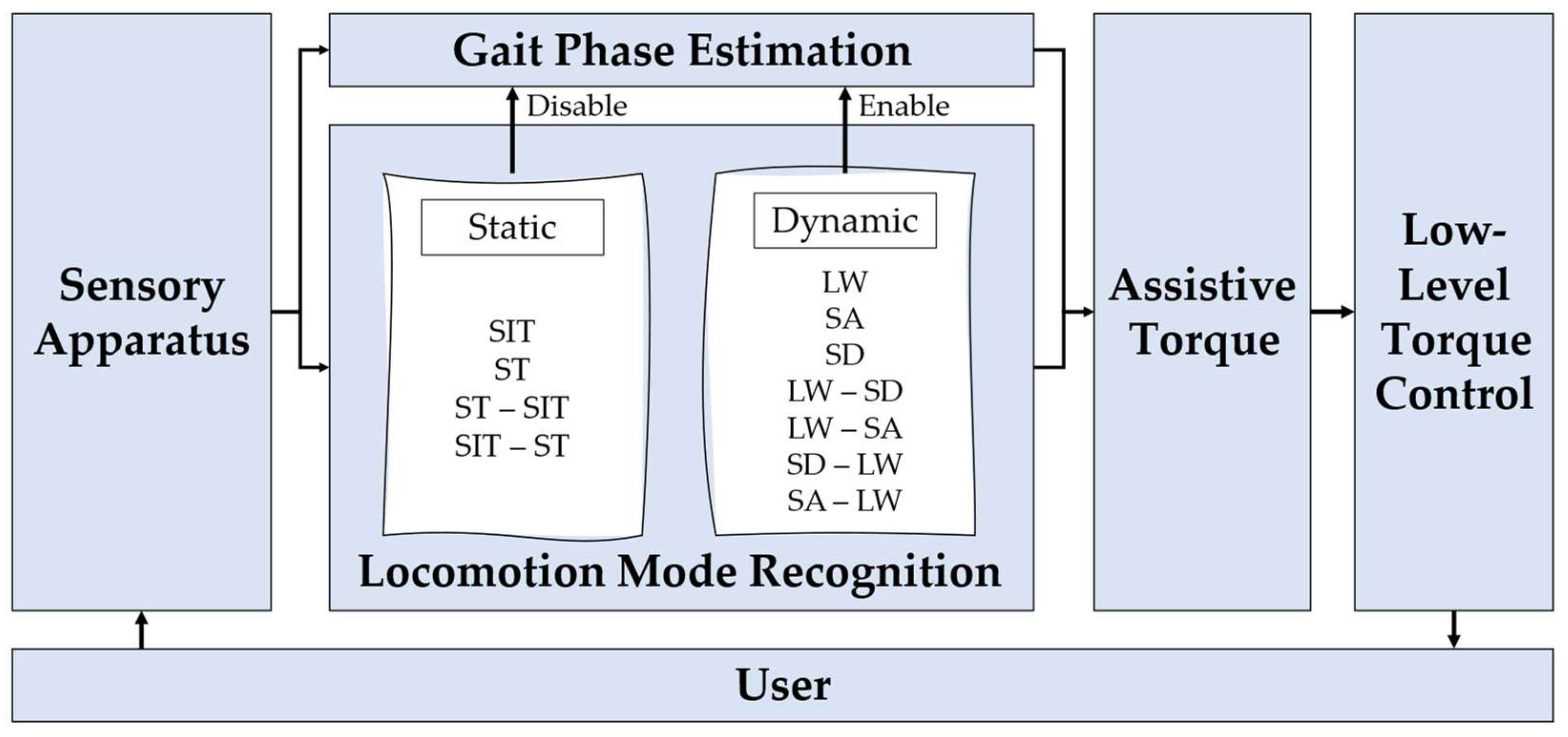

3.3.4. Control Type of the Wearable Assistive Device

3.3.5. Participants

4. Discussion

4.1. Which Are the Typical LMs and the Target Population Addressed?

4.2. Which Type of Wearable Sensors and Features Are Commonly Used for LM Recognition and Prediction?

4.3. Which Set of Algorithms Should Be Employed to Recognize/Predict Different LMs Attending to Accuracy and Time-Effectiveness?

4.4. How to Adapt the Exoskeleton/Orthosis Assistance According to the Decoded User’s LM

4.5. Review Limitations

4.6. Suggestions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- Criterion 1 (C1): Question/Objective sufficiently described?

- Criterion 2 (C2): Study design evident and appropriate?

- Criterion 3 (C3): Subject characteristics sufficiently described and representative?

- Criterion 4 (C4): Experimental protocol sufficiently described?

- Criterion 5 (C5): Sensors used and data collected clearly mentioned?

- Criterion 6 (C6): Input features clearly mentioned?

- Criterion 7 (C7): Window length clearly mentioned?

- Criterion 8 (C8): Classification algorithm clearly mentioned?

- Criterion 9 (C9): Evaluation method of the classification algorithm clearly mentioned?

- Criterion 10 (C10): Control strategy clearly mentioned?

- Criterion 11 (C11): Results reported with enough detail?

- Criterion 12 (C12): Conclusions supported by the results?

| Criterion | “Yes” = 2 | “Partial” = 1 | “No” = 0 |
|---|---|---|---|
| C1: Question/Objective | The question and objective of the study are clearly mentioned. They are easily identified in the introductory section (or first paragraph of the Methods section). Specifies all of the following: purpose, target population, and the specific intervention(s)/association(s)/descriptive parameter(s) under investigation. | The question and the objective of the study are not clearly mentioned. Some information has to be gathered from parts of the paper other than the introduction/background/objective section. | The question and the objective of the study are not reported. |
| C2: Study Design | Design is easily identified and is appropriate to address the study question/objective. | >Design and study question not clearly identified; >Design is easily identified but only partially addresses the study question. | >Design used does not answer study question; >Design cannot be identified. |
| C3: Subjects Characteristics | >Inclusion and exclusion criteria; >Health condition; >Number of volunteers; >Gender; >Age (mean and std); >Height (mean and std); >Weight (mean and std). | If at least one of these factors is not specified: >Inclusion and exclusion; >Health condition; >Number of volunteers; >Mean and std of age, height, or weight. | If none of the topics in the “Partial” section are provided. |
| C4: Experimental Protocol | >Locomotion tasks addressed; >Speed information (when required); >Number of trials. | If at least one of these factors is not specified: >Locomotion tasks addressed; >Speed information (when required. Slow, natural, and fast speed counted for the “1” score); >Number of trials. | If none of the topics in the “Partial” section are provided. |
| C5: Sensors and Data | >Sensors used; >Information on the sensors’ positioning; >Data collected. | If at least one of these factors is not specified: >Sensors used; >Information on the sensors’ positioning; >Data collected. | If none of the topics in the “Partial” section are provided. |
| C6: Input Features | The features used are clearly presented, even after the application of feature reduction techniques (such as PCA). | The features used are clearly presented, but when feature reduction techniques are applied, the feature set is not specified. | The extracted features are not mentioned. Note that if the raw data of the sensors were fed into the classification algorithm, the criterion was rated 2 out of 2. |
| C7: Window Length | >Window length; >Overlap/Window increment is provided in the case of multiple/sliding windows. | If at least one of these factors is not specified: >Window length; >Window increment/overlap. | If none of the topics in the “Partial” section are provided. |
| C8: Classification Algorithm | The classification algorithms are clearly mentioned. | The classification algorithms are not clearly mentioned. | |
| C9: Evaluation Method | The evaluation process of each algorithm (such as the cross-validation, only when used) as well as the evaluation metrics (such as Normalized Root Mean Square Error (NRMSE)) used are clearly mentioned. | >The evaluation process is presented, but the parameters are not given (such as the percentage split between the train and test sets); >Visual comparisons without presenting evaluation metrics are presented; If at least one of these factors is not specified: >Accuracy or NRMSE; >Recognition delay. | If none of the topics in the “Partial” section are provided. |
| C10: Control Strategy | >The control strategy implemented in each wearable assistive device is clearly mentioned and explained; >The control parameters of the wearable assistive device are clearly mentioned. | If at least one of these factors is not specified: >The control strategy implemented in each wearable assistive device is clearly mentioned and explained; >The control parameters of the wearable assistive device are clearly mentioned. | No information regarding the control strategy is provided. |
| C11: Results | The results for each algorithm are given (mean and standard deviation). | The results for each algorithm are given without the standard deviation. | The mean and the standard deviation are not given. |
| C12: Conclusion | Conclusions are based on all results relevant to the study question: the negative as well as positive ones. | The conclusion is not supported by the results. | |

References

1. Labarrière, F.; Thomas, E.; Calistri, L.; Optasanu, V.; Gueugnon, M.; Ornetti, P.; Laroche, D. Machine Learning Approaches for Activity Recognition and / or Activity Prediction in Locomotion Assistive Devices—A Systematic Review. Sensors 2020, 20, 6345.
2. Kimura, M.; Pham, H.; Kawanishi, M.; Narikiyo, T. EMG-force-sensorless power assist system control based on Multi-Class Support Vector Machine. In Proceedings of the 11th IEEE International Conference on Control & Automation (ICCA), Taichung, Taiwan, 18–20 June 2014; pp. 284–289.
3. Jang, J.; Kim, K.; Lee, J.; Lim, B.; Shim, Y. Online gait task recognition algorithm for hip exoskeleton. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5327–5332.
4. Li, Y.D.; Hsiao-Wecksler, E.T. Gait mode recognition and control for a portable-powered ankle-foot orthosis. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–8.
5. Wang, W.; Zhang, L.; Liu, J.; Zhang, B.; Huang, Q. A real-time walking pattern recognition method for soft knee power assist wear. Int. J. Adv. Robot. Syst. 2020, 17, 1–14.
6. Yuan, K.; Parri, A.; Yan, T.; Wang, L.; Munih, M.; Wang, Q.; Vitiello, N. A realtime locomotion mode recognition method for an active pelvis orthosis. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 6196–6201.
7. Parri, A.; Yuan, K.; Marconi, D.; Yan, T.; Crea, S.; Munih, M.; Lova, R.M.; Vitiello, N.; Wang, Q. Real-Time Hybrid Locomotion Mode Recognition for Lower Limb Wearable Robots. IEEE/ASME Trans. Mechatron. 2017, 22, 2480–2491.
8. Long, Y.; Du, Z.-J.; Wang, W.-D.; Zhao, G.-Y.; Xu, G.-Q.; He, L.; Mao, X.-W.; Dong, W. PSO-SVM-Based Online Locomotion Mode Identification for Rehabilitation Robotic Exoskeletons. Sensors 2016, 16, 1408.
9. Liu, X.; Wang, Q. Real-Time Locomotion Mode Recognition and Assistive Torque Control for Unilateral Knee Exoskeleton on Different Terrains. IEEE/ASME Trans. Mechatron. 2020, 25, 2722–2732.
10. Zhou, Z.; Liu, X.; Jiang, Y.; Mai, J.; Wang, Q. Real-time onboard SVM-based human locomotion recognition for a bionic knee exoskeleton on different terrains. In Proceedings of the 2019 Wearable Robotics Association Conference (WearRAcon), Scottsdale, AZ, USA, 25–27 March 2019; pp. 34–39.
11. Gong, C.; Xu, D.; Zhou, Z.; Vitiello, N.; Wang, Q. BPNN-Based Real-Time Recognition of Locomotion Modes for an Active Pelvis Orthosis with Different Assistive Strategies. Int. J. Humanoid Robot. 2020, 17, 2050004.
12. Gong, C.; Xu, D.; Zhou, Z.; Vitiello, N.; Wang, Q. Real-Time On-Board Recognition of Locomotion Modes for an Active Pelvis Orthosis. In Proceedings of the 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids), Beijing, China, 6–9 November 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 346–350.
13. Kim, H.; Shin, Y.J.; Kim, J. Kinematic-based locomotion mode recognition for power augmentation exoskeleton. Int. J. Adv. Robot. Syst. 2017, 14, 172988141773032.
14. Novak, D.; Riener, R. A survey of sensor fusion methods in wearable robotics. Robot. Auton. Syst. 2015, 73, 155–170.
15. Yan, T.; Cempini, M.; Oddo, C.M.; Vitiello, N. Review of assistive strategies in powered lower-limb orthoses and exoskeletons. Robot. Auton. Syst. 2015, 64, 120–136.
16. Xu, D.; Wang, Q. On-board Training Strategy for IMU-Based Real-Time Locomotion Recognition of Transtibial Amputees with Robotic Prostheses. Front. Neurorobot. 2020, 14, 47.
17. Zheng, J.; Cao, H.; Chen, D.; Ansari, R.; Chu, K.; Huang, M. Designing Deep Reinforcement Learning Systems for Musculoskeletal Modeling and Locomotion Analysis Using Wearable Sensor Feedback. IEEE Sens. J. 2020, 20, 9274–9282.
18. Sahoo, S.; Maheshwari, M.; Pratihar, D.K.; Mukhopadhyay, S. A Geometry Recognition-Based Strategy for Locomotion Transitions Early Prediction of Prosthetic Devices. IEEE Trans. Instrum. Meas. 2019, 69, 1259–1267.
19. Xu, D.; Wang, Q. BP Neural Network Based On-board Training for Real-time Locomotion Mode Recognition in Robotic Transtibial Prostheses. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 8158–8163.
20. Billah, Q.M.; Rahman, L.; Adan, J.; Kamal, A.M.; Islam, K.; Shahnaz, C.; Subhana, A. Design of Intent Recognition System in a Prosthetic Leg for Automatic Switching of Locomotion Modes. In Proceedings of the TENCON 2019—2019 IEEE Region 10 Conference (TENCON), Kochi, India, 17–20 October 2019; pp. 1638–1642.
21. Laschowski, B.; McNally, W.; Wong, A.; McPhee, J. Preliminary Design of an Environment Recognition System for Controlling Robotic Lower-Limb Prostheses and Exoskeletons. In Proceedings of the 2019 IEEE 16th International Conference on Rehabilitation Robotics (ICORR), Toronto, ON, Canada, 24–28 June 2019; pp. 868–873.
22. Khademi, G.; Simon, D. Convolutional Neural Networks for Environmentally Aware Locomotion Mode Recognition of Lower-Limb Amputees. In Proceedings of the ASME 2019 Dynamic Systems and Control Conference, Park City, UT, USA, 8–11 October 2019.
23. Carvalho, S.; Figueiredo, J.; Santos, C.P. Environment-Aware Locomotion Mode Transition Prediction System. In Proceedings of the 2019 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Porto, Portugal, 24–26 April 2019; pp. 1–6.
24. Figueiredo, J.; Carvalho, S.P.; Gonçalves, D.; Moreno, J.C.; Santos, C.P. Daily Locomotion Recognition and Prediction: A Kinematic Data-Based Machine Learning Approach. IEEE Access 2020, 8, 33250–33262.
25. Ryu, J.; Lee, B.-H.; Kim, D.-H. sEMG Signal-Based Lower Limb Human Motion Detection Using a Top and Slope Feature Extraction Algorithm. IEEE Signal Process. Lett. 2016, 24, 929–932.
26. Martinez-Hernandez, U.; Mahmood, I.; Dehghani-Sanij, A.A. Simultaneous Bayesian Recognition of Locomotion and Gait Phases with Wearable Sensors. IEEE Sens. J. 2017, 18, 1282–1290.
27. Kim, D.-H.; Cho, C.-Y.; Ryu, J. Real-Time Locomotion Mode Recognition Employing Correlation Feature Analysis Using EMG Pattern. ETRI J. 2014, 36, 99–105.
28. Mohebbi, A. Human-Robot Interaction in Rehabilitation and Assistance: A Review. Curr. Robot. Rep. 2020, 1, 131–144.
29. Yan, L.; Mir, M.; Sanchez, P.; Beg, M.; Peters, J.; Enriquez, O.; Gilbert, A. COVID-19 in a Hispanic Woman. Arch. Pathol. Lab. Med. 2020, 144, 1041–1047.
30. Hua, Y.; Fan, J.; Liu, G.; Zhang, X.; Lai, M.; Li, M.; Zheng, T.; Zhang, G.; Zhao, J.; Zhu, Y. A Novel Weight-Bearing Lower Limb Exoskeleton Based on Motion Intention Prediction and Locomotion State Identification. IEEE Access 2019, 7, 37620–37638.
31. Islam, M.; Hsiao-Wecksler, E.T. Detection of Gait Modes Using an Artificial Neural Network during Walking with a Powered Ankle-Foot Orthosis. J. Biophys. 2016, 2016, 7984157.
32. Zhu, L.; Wang, Z.; Ning, Z.; Zhang, Y.; Liu, Y.; Cao, W.; Wu, X.; Chen, C. A Novel Motion Intention Recognition Approach for Soft Exoskeleton via IMU. Electronics 2020, 9, 2176.
33. Fernandes, P.N.; Figueiredo, J.; Moreira, L.; Felix, P.; Correia, A.; Moreno, J.C.; Santos, C.P. EMG-based Motion Intention Recognition for Controlling a Powered Knee Orthosis. In Proceedings of the 2019 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Porto, Portugal, 24–26 April 2019; pp. 1–6.
34. Du, G.; Zeng, J.; Gong, C.; Zheng, E. Locomotion Mode Recognition with Inertial Signals for Hip Joint Exoskeleton. Appl. Bionics Biomech. 2021, 2021, 6673018.
35. Wang, J.; Wu, D.; Gao, Y.; Wang, X.; Li, X.; Xu, G.; Dong, W. Integral Real-time Locomotion Mode Recognition Based on GA-CNN for Lower Limb Exoskeleton. J. Bionic Eng. 2022, 19, 1359–1373.
36. Tekscan. Tekscan: Pressure Mapping, Force Measurement & Tactile Sensors. Available online: https://www.tekscan.com/products-solutions/systems/f-scan-system (accessed on 9 September 2022).
37. Robodo. Robodo: Pressure Sensor. Available online: https://robodo.in/products/force-sensor-resistor-square-38-1mm-pressure-sensor (accessed on 9 September 2022).
38. IFM. IFM: Encoder. Available online: https://www.ifm.com/pt/pt/product/RV3100#/ (accessed on 9 September 2022).
39. Waveshare. Waveshare: 10 DOF IMU Sensor. Available online: https://www.waveshare.com/wiki/10_DOF_IMU_Sensor_(B.) (accessed on 9 September 2022).
40. Delsys. Trigno EMG & Additional Sensors. Available online: https://delsys.com/trigno/sensors/ (accessed on 9 September 2022).
41. Tucker, M.R.; Olivier, L.; Pagel, A.; Bleuler, H.; Bouri, M.; Lambercy, O.; Millán, J.D.R.; Riener, R.; Vallery, H.; Gassert, R. Control strategies for active lower extremity prosthetics and orthotics: A review. J. Neuroeng. Rehabil. 2015, 12, 1.
42. Endo, K.; Herr, H. Human walking model predicts joint mechanics, electromyography and mechanical economy. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 4663–4668.
43. Protopapadaki, A.; Drechsler, W.I.; Cramp, M.C.; Coutts, F.J.; Scott, O.M. Hip, knee, ankle kinematics and kinetics during stair ascent and descent in healthy young individuals. Clin. Biomech. 2007, 22, 203–210.
44. Riener, R.; Rabuffetti, M.; Frigo, C. Stair ascent and descent at different inclinations. Gait Posture 2002, 15, 32–44.
45. Winter, D.A. Chapter 9: Kinesiological Electromyography. In Biomechanics and Motor Control of Human Movement, 3rd ed.; Winter, D.A., Ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2005.
46. Chen, C.; Zhang, Y.; Li, Y.; Wang, Z.; Liu, Y.; Cao, W.; Wu, X. Iterative Learning Control for a Soft Exoskeleton with Hip and Knee Joint Assistance. Sensors 2020, 20, 4333.
47. McIntosh, A.S.; Beatty, K.T.; Dwan, L.N.; Vickers, D.R. Gait dynamics on an inclined walkway. J. Biomech. 2006, 39, 2491–2502.
48. Kawamoto, H.; Sankai, Y. Comfortable power assist control method for walking aid by HAL-3. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Yasmine Hammamet, Tunisia, 6–9 October 2002; Volume 4.
49. Beaman, C.; Peterson, C.; Neptune, R.; Kautz, S. Differences in self-selected and fastest-comfortable walking in post-stroke hemiparetic persons. Gait Posture 2010, 31, 311–316.
50. Moreira, L.; Figueiredo, J.; Fonseca, P.; Vilas-Boas, J.P.; Santos, C.P. Lower limb kinematic, kinetic, and EMG data from young healthy humans during walking at controlled speeds. Sci. Data 2021, 8, 103.
51. Moreira, L.; Figueiredo, J.; Vilas-Boas, J.; Santos, C. Kinematics, Speed, and Anthropometry-Based Ankle Joint Torque Estimation: A Deep Learning Regression Approach. Machines 2021, 9, 154.
52. Koopman, B.; van Asseldonk, E.; van der Kooij, H. Speed-dependent reference joint trajectory generation for robotic gait support. J. Biomech. 2014, 47, 1447–1458.
53. Stoquart, G.; Detrembleur, C.; Lejeune, T. Effect of speed on kinematic, kinetic, electromyographic and energetic reference values during treadmill walking. Neurophysiol. Clin. Neurophysiol. 2008, 38, 105–116.
54. Schwartz, M.H.; Rozumalski, A.; Trost, J.P. The effect of walking speed on the gait of typically developing children. J. Biomech. 2008, 41, 1639–1650.
55. Hassani, W.; Mohammed, S.; Rifai, H.; Amirat, Y. EMG based approach for wearer-centered control of a knee joint actuated orthosis. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 990–995.
56. Hassani, W.; Mohammed, S.; Amirat, Y. Real-Time EMG Driven Lower Limb Actuated Orthosis for Assistance as Needed Movement Strategy. In Proceedings of the Robotics: Science and Systems IX, Berlin, Germany, 24–28 June 2013.
57. Yu, C.-J.; Chen, J.-S.; Li, Y.-J. Motion recognition for paraplegic patients wearing a powered lower limb orthosis in ascending and descending. In Proceedings of the 2015 10th Asian Control Conference (ASCC), Kota Kinabalu, Malaysia, 31 May–3 June 2015; pp. 1–5.
58. Chen, G.; Ye, J.; Liu, Q.; Duan, L.; Li, W.; Wu, Z.; Wang, C. Adaptive Control Strategy for Gait Rehabilitation Robot to Assist-When-Needed. In Proceedings of the 2018 IEEE International Conference on Real-time Computing and Robotics (RCAR), Malé, Maldives, 1–5 August 2018; pp. 538–543.
59. Eguren, D.; Cestari, M.; Luu, T.P.; Kilicarslan, A.; Steele, A.; Contreras-Vidal, J.L. Design of a customizable, modular pediatric exoskeleton for rehabilitation and mobility. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019.
60. Lyu, M.; Chen, W.-H.; Ding, X.; Wang, J. Knee exoskeleton enhanced with artificial intelligence to provide assistance-as-needed. Rev. Sci. Instrum. 2019, 90, 094101.

| Study | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | Score (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [7] | 2 | 2 | 1 | 1 | 2 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 87.5 |
| [13] | 2 | 2 | 2 | 2 | 2 | 2 | 0 | 2 | 2 | 2 | 2 | 2 | 91.7 |
| [6] | 2 | 2 | 1 | 1 | 2 | 2 | 0 | 2 | 2 | 2 | 2 | 2 | 83.3 |
| [10] | 1 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 87.5 |
| [30] | 1 | 2 | 0 | 1 | 2 | 1 | 0 | 2 | 2 | 0 | 2 | 2 | 62.5 |
| [8] | 1 | 2 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 91.7 |
| [31] | 1 | 2 | 1 | 1 | 2 | 2 | 0 | 2 | 1 | 2 | 1 | 2 | 70.8 |
| [3] | 2 | 2 | 1 | 1 | 2 | 2 | 0 | 2 | 1 | 2 | 2 | 2 | 79.2 |
| [32] | 2 | 2 | 1 | 1 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 91.7 |
| [11] | 2 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 1 | 2 | 87.5 |
| [12] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 2 | 95.8 |
| [4] | 2 | 2 | 1 | 1 | 2 | 2 | 0 | 2 | 2 | 2 | 2 | 2 | 83.3 |
| [9] | 2 | 2 | 1 | 1 | 2 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 87.5 |
| [33] | 1 | 2 | 2 | 2 | 2 | 2 | 0 | 2 | 2 | 2 | 1 | 2 | 83.3 |
| [5] | 2 | 2 | 2 | 1 | 2 | 2 | 0 | 2 | 2 | 2 | 2 | 2 | 87.5 |
| [2] | 2 | 2 | 1 | 1 | 2 | 2 | 0 | 2 | 2 | 2 | 1 | 0 | 70.8 |
| [34] | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 1 | 2 | 2 | 95.8 |
| [35] | 2 | 2 | 1 | 2 | 2 | 2 | 2 | 2 | 0 | 2 | 2 | 2 | 87.5 |
| Mean Score ± standard deviation | | | | | | | | | | | | | 84.7 ± 8.7 |
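
The Score (%) column in the quality table appears to be the sum of the twelve criterion ratings divided by the maximum attainable total (12 criteria × 2 points). The sketch below checks that reading against the tabulated ratings. The normalization is inferred from the table values rather than stated in the text shown here, and the use of the population standard deviation is likewise an assumption (it is the variant that matches the reported 84.7 ± 8.7). The names `quality_score` and `ratings_by_study` are illustrative only.

```python
from statistics import mean, pstdev

MAX_PER_CRITERION = 2  # "Yes" = 2, "Partial" = 1, "No" = 0
N_CRITERIA = 12        # C1..C12


def quality_score(ratings):
    """Return the quality score (%) of one study from its C1..C12 ratings."""
    if len(ratings) != N_CRITERIA:
        raise ValueError("expected one rating per criterion (C1..C12)")
    if any(r not in (0, 1, 2) for r in ratings):
        raise ValueError("each rating must be 0, 1, or 2")
    return 100.0 * sum(ratings) / (MAX_PER_CRITERION * N_CRITERIA)


# Ratings copied from the quality table, keyed by reference number.
ratings_by_study = {
    7:  [2, 2, 1, 1, 2, 2, 1, 2, 2, 2, 2, 2],
    13: [2, 2, 2, 2, 2, 2, 0, 2, 2, 2, 2, 2],
    6:  [2, 2, 1, 1, 2, 2, 0, 2, 2, 2, 2, 2],
    10: [1, 2, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2],
    30: [1, 2, 0, 1, 2, 1, 0, 2, 2, 0, 2, 2],
    8:  [1, 2, 2, 1, 2, 2, 2, 2, 2, 2, 2, 2],
    31: [1, 2, 1, 1, 2, 2, 0, 2, 1, 2, 1, 2],
    3:  [2, 2, 1, 1, 2, 2, 0, 2, 1, 2, 2, 2],
    32: [2, 2, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2],
    11: [2, 2, 1, 2, 2, 2, 2, 2, 1, 2, 1, 2],
    12: [2, 2, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2],
    4:  [2, 2, 1, 1, 2, 2, 0, 2, 2, 2, 2, 2],
    9:  [2, 2, 1, 1, 2, 2, 1, 2, 2, 2, 2, 2],
    33: [1, 2, 2, 2, 2, 2, 0, 2, 2, 2, 1, 2],
    5:  [2, 2, 2, 1, 2, 2, 0, 2, 2, 2, 2, 2],
    2:  [2, 2, 1, 1, 2, 2, 0, 2, 2, 2, 1, 0],
    34: [2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 2, 2],
    35: [2, 2, 1, 2, 2, 2, 2, 2, 0, 2, 2, 2],
}

scores = {ref: quality_score(r) for ref, r in ratings_by_study.items()}
mean_score = mean(scores.values())
sd_score = pstdev(scores.values())  # population SD (assumption, see lead-in)

print(f"{mean_score:.1f} ± {sd_score:.1f}")  # prints "84.7 ± 8.7"
```

With the sample standard deviation (`statistics.stdev`) the spread would round to 8.9 instead, which is why the population variant is assumed here.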

| Study | R/P ¹ | Locomotion Tasks | Speed | Sensors (Location) | Features | Windows | Classifier | Performance (ACC ², Delay) | Control Type | Participants (Status) |
|---|---|---|---|---|---|---|---|---|---|---|
| Parri et al. [7] | R | Static Tasks: SIT ³, ST; Dynamic Tasks: Continuous (LW, SA, and SD) and Transitions (LW→ST, ST→LW, SIT→ST, ST→SIT, LW→SA, LW→SD, SA→LW, SD→LW) | Slow, natural, and fast | -Encoder (hip exoskeleton); -Pressure insoles (feet) | -Hip joint angles and Center of Pressure (CoP) at specific gait events | 200 ms | -Static and Discrete Tasks: Finite State Machine (FSM) | -ACC > 97.4%; -Dynamic Motion: Delay about one step; -Transitions: Delay between 15.2% and 63.8% | -Zero-torque mode; -Assistive mode without considering the motion intention | 6 (healthy) |
| Kim et al. [13] | R | Dynamic Tasks: Continuous (LW, SA, SD, RA, and RD) | Fixed speed (4 km/h) | -Encoder (hip and knee exoskeleton); -5 IMU (exoskeleton back, thigh, and foot); -Load cells (exoskeleton insole) | -Vertical foot position; -Thigh, shank, and foot inclination | NI ⁴ | Decision Tree (DT) | -Average ACC = 99.1%; -Delay between 0.0% and 5.13% | Zero-torque mode | 8 (healthy) |
| Yuan et al. [6] | Both | Static Tasks: SIT, ST; Dynamic Tasks: Continuous (LW, SA, SD) and Transitions (SIT→ST, ST→SIT, ST→LW, LW→ST, ST→SA, SA→ST, ST→SD, SD→ST, LW→SA, SA→LW, LW→SD, SD→LW) | Natural | -Encoder (hip exoskeleton); -Pressure insoles (feet) | -Hip joint angles and Center of Pressure (CoP) at specific gait events | NI | -Static Tasks and Transitions: FSM; -Continuous Tasks: Event-based fuzzy-logic method | -ACC > 90.1%; -Delay between −30.9% and 100% | Zero-torque mode | 3 (healthy) |
| Zhou et al. [10] | Both | Dynamic Tasks: Continuous (LW, SA, SD) and Transitions (LW→SA, SA→LW, LW→SD, SD→LW) | NI | 2 IMU (exoskeleton thigh and shank) | Maximum (MAX), minimum (MIN), mean, standard deviation, and root mean square (RMS) of the thigh inclination angles, angular velocities, and angular accelerations | 150 ms with an increment of 10 ms | Support Vector Machine (SVM) | -ACC between 93.0% and 96.2%; -Delay between −40.0 ms and 185 ms | Zero-torque mode | 3 (healthy) |
| Hua et al. [30] | R | Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) and Transitions (LW→SA, SA→LW, LW→SD, SD→LW, LW→RA, RA→LW, LW→RD, RD→LW) | NI | -Encoder (exoskeleton); -1 IMU (exoskeleton back); -Ground Reaction Force (GRF) sensors (exoskeleton) | NI | NI | -DT; -Discriminant Analysis (DA); -SVM; -k-Nearest Neighbor (KNN); -Ensemble Method (EM); -Convolutional Neural Network (CNN); -Stacked Autoencoder-based Deep Neural Network | -ACC = 99.7%; -Delay between 11.8% and 17.4% | NI | NI |
| Long et al. [8] | Both | Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) and Transitions (LW→SA, SA→LW, LW→SD, SD→LW, LW→RA, RA→LW, LW→RD, RD→LW) | Natural | -2 Attitude and Heading Reference System (AHRS) sensors (shank and foot); -6 GRF sensors (pressure insoles/feet) | Wavelet coefficients from (i) GRF during the swing phase; and (ii) thigh and foot inclination angles | 200 ms with an increment of 10 ms | SVM | -ACC between 97.3% and 99.5%; -Delay between −10.4% and 48% | Zero-torque mode | 3 (healthy) |
| Islam et al. [31] | R | Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) | NI | -1 IMU (orthosis foot); -Force Sensor Resistor (FSR) (orthosis insole) | -Vertical foot position; -Foot orientation; -FSR-based foot contact information | NI | Multilayer Feedforward Neural Network (MFNN) | -ACC > 98.3%; -Delay between 16% and 28% | Zero-torque mode | 5 (healthy) |
| Jang et al. [3] | R | Static Tasks: ST; Dynamic Tasks: Continuous (LW, SA, SD) | Natural | -Potentiometers (hip exoskeleton); -1 IMU (exoskeleton back) | -Hip joint angles; -Vertical acceleration-based foot contact | NI | FSM | -ACC between 95% and 99%; -Delay of one step | Zero-torque mode | 3 (healthy) |
| Zhu et al. [32] | R | Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) and Transitions (LW→SA, SA→LW, LW→SD, SD→LW, LW→RA, RA→LW, LW→RD, RD→LW) | Natural | 4 IMU (thigh and shank) | Hip and knee joint angle, angular velocity, and angular acceleration | 100 ms with an increment of 50 ms | CNN | -ACC between 96.6% and 99.0%; -Delay between 3.96% and 24.0% | Assistive mode considering the motion intention | 7 (healthy) |
| Gong et al. [12] | R | Static Tasks: ST; Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) | Fixed speed (2.7 km/h) | 2 IMU (thigh) | MAX, MIN, mean, standard deviation, and RMS of the thigh inclination angles, angular velocities, and angular accelerations | 250 ms with an increment of 10 ms | MFNN | -Average ACC = 97.8%; -Delay between 50 and 300 ms | Zero-torque mode | 1 (healthy) |
| Gong et al. [11] | R | Static Tasks: ST; Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) | Fixed speed (2.7 km/h) | 2 IMU (thigh) | MAX, MIN, mean, standard deviation, and RMS of the thigh inclination angles, angular velocities, and angular accelerations | 250 ms with an increment of 10 ms | MFNN | -Zero-torque mode: Average ACC = 98.4%; -Assistive mode: ACC between 97.6% and 98.4% | -Zero-torque mode; -Assistive mode without considering the motion intention | 3 (healthy) |
| Li et al. [4] | R | Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) | NI | -1 IMU (orthosis foot); -FSRs (orthosis insole) | -Orthosis orientation; -Orthosis position | NI | FSM | -ACC between 97.2% and 99.5%; -Delay of one step | Assistive mode considering the motion intention | 5 (healthy) |
| Liu et al. [9] | Both | Static Tasks: ST; Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) and Transitions (LW→SA, SA→LW, LW→SD, SD→LW, LW→RA, RA→LW, LW→RD, RD→LW) | NI | 2 IMU (exoskeleton thigh and shank) | MAX, MIN, mean, standard deviation, and RMS of the thigh and shank inclination angles, angular velocities, and angular accelerations | 15 samples | -Static Tasks and Transitions: FSM; -Continuous Tasks: SVM | -Healthy participants: average ACC between 97.6% and 98.3% and delay between −78.5 ms and 38.7 ms; -Stroke participant: average ACC = 97.4% | Assistive mode considering the motion intention | -5 (healthy); -1 (stroke) |
| Fernandes et al. [33] | R | Dynamic Task: Continuous (LW) | Fixed speed (1 km/h and 1.5 km/h) | Electromyography (EMG) (Vastus Lateralis, Vastus Medialis, Semitendinosus, and Semimembranosus) | EMG data from Vastus Lateralis, Vastus Medialis, Semitendinosus, and Semimembranosus | NI | Proportional Gain Method | -NRMSE = 12%; -Delay = 22 ms | Assistive mode considering the motion intention | 2 (healthy) |
| Wang et al. [5] | R | Dynamic Tasks: Continuous (LW, SA, SD) and Transitions (LW→SA, SA→LW, LW→SD, SD→LW) | Natural | 2 IMU (thigh and shank) | -MAX and MIN thigh and shank angles; -MAX and MIN knee angles | NI | FSM | -ACC between 98.1% and 98.3%; -Delay between 41.1% and 58.2% | Zero-torque mode | 18 (healthy) |
| Kimura et al. [2] | R | Dynamic Tasks: Transitions (SIT→ST, ST→SIT) | NI | Potentiometer (hip and knee exoskeleton) | -Hip and knee joint angle; -Upper body pitch angle | NI | SVM | -F-Measure between 0.882 and 0.997 | Zero-torque mode | 6 (healthy) |
| Du et al. [34] | R | Static Tasks: ST; Dynamic Tasks: Continuous (LW, SA, SD) and Transitions (ST→LW, LW→ST) | Natural | 2 IMU (thigh) | Pitch and roll angles | 100 ms with an increment of 10 ms | -Static Tasks and Transitions: FSM; -Continuous Tasks: Event-based fuzzy-logic method | -ACC of 91.9% between static tasks and ACC higher than 89.0% between dynamic tasks; -Delay = 554.4 ms | Zero-torque and Assistive mode considering motion intention | 3 (healthy) |
| Wang et al. [35] | R | Static Tasks: ST and SIT; Dynamic Tasks: Continuous (LW, SA, SD, RA, RD) and Transitions between Static Tasks | Natural | -6 IMU (thigh, shank, and shoes); -4 Load cells (insole) | NI | 100 ms with an increment of 10 ms | CNN | -ACC = 94.0%; -Delay between 18.1 and 53.3 ms | Zero-torque mode | 9 (healthy) |
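
Several rows of the table above report the same recipe: a fixed-length analysis window advanced by a fixed increment (for example, 150 ms with an increment of 10 ms), from which MAX, MIN, mean, standard deviation, and RMS are computed. The sketch below illustrates that recipe in general terms. It does not reproduce any reviewed study's implementation: the 100 Hz sampling rate, the synthetic thigh-angle signal, the choice of population standard deviation, and the function names `sliding_windows` and `window_features` are all assumptions made for illustration.

```python
import math


def window_features(samples):
    """Return MAX, MIN, mean, standard deviation, and RMS of one window."""
    n = len(samples)
    mean = sum(samples) / n
    variance = sum((x - mean) ** 2 for x in samples) / n  # population variance
    return {
        "max": max(samples),
        "min": min(samples),
        "mean": mean,
        "std": math.sqrt(variance),
        "rms": math.sqrt(sum(x * x for x in samples) / n),
    }


def sliding_windows(signal, fs_hz, window_ms, increment_ms):
    """Yield windows of `window_ms`, each advanced by `increment_ms`."""
    win = round(window_ms * fs_hz / 1000)      # window length in samples
    step = round(increment_ms * fs_hz / 1000)  # increment in samples
    if win < 1 or step < 1:
        raise ValueError("window and increment must span at least one sample")
    for start in range(0, len(signal) - win + 1, step):
        yield signal[start:start + win]


FS_HZ = 100  # assumed sampling rate; the table does not report one

# Synthetic thigh inclination angle (degrees): a 1 Hz sine, 2 s long.
thigh_angle = [10.0 * math.sin(2 * math.pi * i / FS_HZ) for i in range(200)]

# One feature dictionary per 150 ms window, advanced in 10 ms increments.
features = [
    window_features(w)
    for w in sliding_windows(thigh_angle, FS_HZ, window_ms=150, increment_ms=10)
]
```

At the assumed 100 Hz, a 150 ms window spans 15 samples and a 10 ms increment spans one sample, so consecutive windows overlap by 14 samples. In practice the same features would be computed per channel (angle, angular velocity, angular acceleration) and concatenated into the classifier's input vector.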

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moreira, L.; Figueiredo, J.; Cerqueira, J.; Santos, C.P. A Review on Locomotion Mode Recognition and Prediction When Using Active Orthoses and Exoskeletons. Sensors 2022, 22, 7109. https://doi.org/10.3390/s22197109
