Power Tower Inspection Simultaneous Localization and Mapping: A Monocular Semantic Positioning Approach for UAV Transmission Tower Inspection

Abstract

1. Introduction

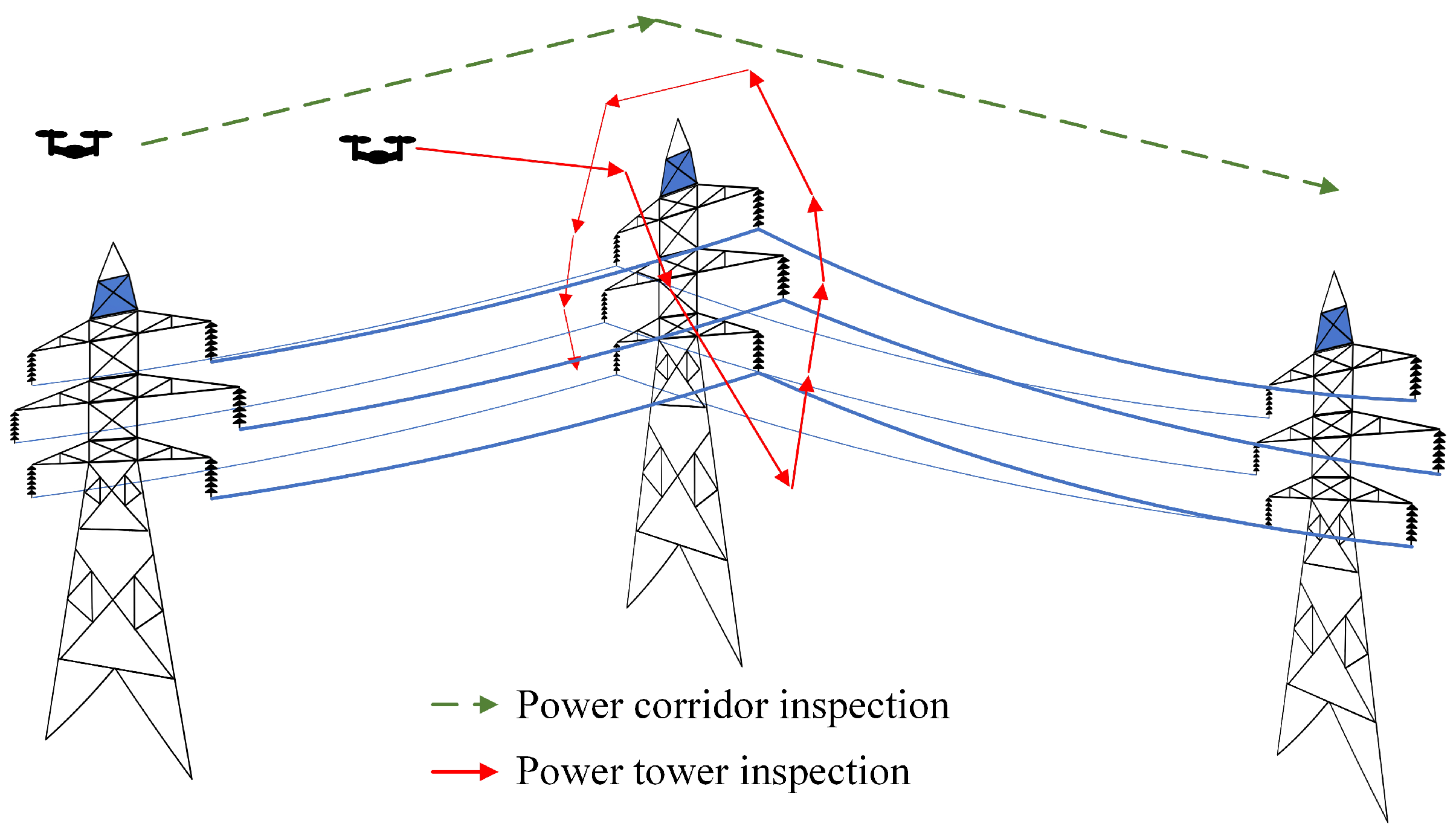

1.1. Automatic UAV Power Line Inspection

1.2. Scene Challenges for Monocular Object SLAM

1.3. The Objective of the Paper

2. Semantics Selection and Geographical UAV Positioning Scheme

2.1. Semantic Object Selection

2.1.1. Structure Semantics for Inspection Flight

2.1.2. Component Detectability

2.2. Geographical UAV Positioning Scheme

3. Methodology

3.1. Framework of PTI-SLAM

3.2. Semantic Positioning

- Although illumination often changes, image brightness is usually consistent within a small area. By restricting pixel tracking to a small region in both space and time, our method makes the brightness-constancy assumption more likely to hold.

- A few extreme cases, such as strong but transient reflections from the target surface, can destabilize the direct method and cause a sharp drop in the number of generated points. We exploit this property to reduce the contribution of such unreliable observations to object positioning.
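The second point can be expressed as a weighting rule. The Symbols list names an existing position and fusion weight for each object J, plus a position and weight for the matching object in the new batch, which suggests a weighted running average. The following is a minimal sketch under one assumption that the text does not state: a batch's weight is simply its number of semi-dense points. Under that assumption, a batch whose point cloud collapses after a transient reflection barely moves the fused estimate. The function name `fuse_object_position` is hypothetical.

```python
import numpy as np


def fuse_object_position(p_existing, w_existing, p_new, n_points_new):
    """Weighted running-average update of one object's position.

    Assumption (not from the paper): the weight of a new batch observation
    is its number of semi-dense points, so a batch whose point cloud has
    collapsed contributes little to the fused position.
    """
    p_existing = np.asarray(p_existing, dtype=float)
    p_new = np.asarray(p_new, dtype=float)
    w_new = float(n_points_new)
    w_updated = float(w_existing) + w_new
    if w_updated <= 0.0:
        raise ValueError("total fusion weight must be positive")
    # Weighted mean of the old estimate and the new observation.
    p_updated = (w_existing * p_existing + w_new * p_new) / w_updated
    return p_updated, w_updated


# Hypothetical numbers: a well-populated estimate, then a 50-point batch.
p, w = fuse_object_position([1.0, -1.9, 0.85], 4000, [1.3, -1.8, 0.90], 50)
print(p, w)
```

With these numbers, the 50-point batch shifts the estimate by only about 1.2% (50/4050) of the gap between the two observations.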

3.2.1. Semi-Dense Mapping for RoIs

3.2.2. Object Positioning within Batch

3.2.3. Object Association

3.3. Object-Based Geographical UAV Positioning

Algorithm 1: Object-based geographical UAV positioning

Output: UAV position and pose of the current frame in ENU coordinates

- When objects are observed, compute the transformation matrix from the object associations between the SLAM and GPS coordinates, and recalculate the UAV position.

- When no objects are observed, transform the UAV position from SLAM coordinates into GPS coordinates using the existing transformation matrix.
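Both cases rely on a similarity transform (rotation R, translation t and scale s in the Symbols list) between SLAM and geographic coordinates. One standard way to estimate this transform from associated object positions is Umeyama's closed-form least-squares method [24]. Whether PTI-SLAM uses exactly this formulation is an assumption here. The sketch treats the SLAM object positions as `src` and their ENU positions as `dst`, and it requires at least three non-collinear associations. Both function names are hypothetical.

```python
import numpy as np


def umeyama_similarity(src, dst):
    """Least-squares similarity transform with dst ≈ s * R @ src + t.

    src, dst: (N, 3) arrays of associated positions (e.g. SLAM and ENU),
    N >= 3 and not all collinear. Follows Umeyama (1991).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n, dim = src.shape
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    # Cross-covariance between the centred point sets.
    cov = dst_c.T @ src_c / n
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(dim)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[-1, -1] = -1.0  # force a proper rotation (no reflection)
    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / n
    s = float(np.trace(np.diag(D) @ S) / var_src)
    t = mu_dst - s * R @ mu_src
    return s, R, t


def slam_to_enu(p_slam, s, R, t):
    """Map a SLAM-frame position into ENU with an existing (s, R, t)."""
    return s * R @ np.asarray(p_slam, dtype=float) + t


# Synthetic check: rotate 30 degrees about the up axis, scale by 2.5, shift.
rng = np.random.default_rng(0)
slam_pts = rng.normal(size=(6, 3))
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
enu_pts = 2.5 * slam_pts @ R_true.T + np.array([10.0, -5.0, 3.0])
s, R, t = umeyama_similarity(slam_pts, enu_pts)
print(s, t)
```

When no objects are visible, `slam_to_enu` is reapplied with the most recent (s, R, t), which corresponds to the second case above.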

4. Experimental Results

4.1. Environment Setup

4.2. Trajectory Consistency Evaluation

4.3. Evaluation of Object Positioning

4.4. Evaluation of Object-Based Geographical Positioning

4.5. Study on Object Positioning Performance

4.5.1. Sliding Window Size

4.5.2. Influence Factor

- Visual measurement accuracy decreases with distance, and objects near the edge of the field of view are usually measured less accurately than those in the centre. The latter is partly due to residual lens distortion that remains even after distortion correction.

- The fusion-based direct method recovers depth from the relative position and orientation between frames rather than from the absolute pose of each frame. Over short, gradual motions, SLAM typically tracks movement well, and the errors in the estimated relative orientation and position between frames tend to stay within a consistent range. These relative errors are largely independent of the absolute error of the current frame, unless the batch happens to fall in an extremely unfavourable situation. Therefore, under normal conditions, the camera positioning error is not directly correlated with the depth estimation error.
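The first point has a simple first-order explanation. Consider an idealized rectified two-view geometry with baseline b (here, the camera movement between keyframes) and focal length f in pixels. Depth is then Z = f·b/d for disparity d, so a matching error σ_d maps to a depth error of roughly σ_Z ≈ Z²·σ_d/(f·b). This is a textbook approximation rather than the paper's error model. The camera numbers below are hypothetical.

```python
def depth_std_first_order(depth_m, baseline_m, focal_px, disparity_std_px=1.0):
    """First-order depth standard deviation for rectified two-view triangulation.

    Z = f * b / d, so |dZ/dd| = Z**2 / (f * b) and sigma_Z ≈ Z**2 * sigma_d / (f * b).
    Idealized model: ignores rotation error, lens distortion and off-centre geometry.
    """
    if depth_m <= 0 or baseline_m <= 0 or focal_px <= 0:
        raise ValueError("depth, baseline and focal length must be positive")
    return depth_m ** 2 * disparity_std_px / (focal_px * baseline_m)


# Hypothetical camera: f = 1000 px, 0.5 m baseline, 1 px matching error.
for z in (2.0, 4.0):
    print(z, depth_std_first_order(z, baseline_m=0.5, focal_px=1000.0))
```

Under this model, doubling the object distance at a fixed baseline quadruples the predicted depth error, while doubling the keyframe baseline halves it.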

4.6. Comparison of Methods

4.6.1. Robustness of Algorithms

4.6.2. Time Consumption

4.6.3. Geographical Positioning Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| SLAM | Simultaneous Localization And Mapping |
| PTI-SLAM | Power Tower Inspection SLAM |
| RTK GPS | Real-Time Kinematic fixed Global Positioning System |
| LiDAR | Light Detection And Ranging |
| RGB-D | RGB-Depth |
| RMSE | Root Mean Squared Error |
| ATE | Absolute Trajectory Error |
| RoI | Region of Interest |
| AP | Average Precision |
| CEPRI | China Electric Power Research Institute |
| ZNCC | Zero-mean Normalized Cross-Correlation |
| ROS | Robot Operating System |
| SDK | Software Development Kit |
| MAP | Mean Pixel Accuracy |
| MIoU | Mean Intersection over Union |

Symbols

| Symbol | Description |
|---|---|
| | The i-th keyframe |
| | The set of object coordinates of the reference frame, in the reference-frame coordinate system |
| | The coordinate of the j-th object of keyframe M, in the reference-frame coordinate system |
| | Object positioning using the inverse depth filter |
| | The set of object coordinates of the reference frame, in the SLAM coordinate system |
| | The transformation from SLAM coordinates to the reference frame |
| | The existing coordinate of object J in the SLAM coordinate system |
| | The coordinate of the j-th object of the reference frame, in the SLAM coordinate system |
| | The updated coordinate of object J in the SLAM coordinate system |
| | The existing fusion weight of object J |
| | The fusion weight of the j-th object of the reference frame |
| | The updated fusion weight of object J |
| | The image of the reference frame |
| | The image of the current frame |
| | The camera optical centre of the reference frame |
| | The camera optical centre of the current frame |
| P | The spatial point |
| | The projection points of P in |
| | The projection points of P in |
| | The epipolar lines of P in |
| | The matching score obtained by ZNCC |
| | The pixel blocks in and respectively |
| | The values of the pixels in A and B |
| | The mean values of A and B |
| | The hypothetical maximum depth of the spatial point P to |
| | The hypothetical minimum depth of the spatial point P to |
| | The initial value of the depth estimate |
| | The expected value of the inverse depth of P in the Gaussian distribution |
| | The error variance of the Gaussian distribution, which can be used as the depth uncertainty |
| | The coordinate of the projection of P in |
| | The camera intrinsics |
| | The updated inverse depth estimate |
| | The updated error variance |
| | The inverse depth estimate of the new incoming |
| | The error variance of the new incoming |
| | The true depth of P in |
| | The false depth of P in |
| | UAV position and pose of the reference frame in the SLAM coordinate system |
| | UAV position and pose of the current frame in the SLAM coordinate system |
| | Object position of the reference frame in the SLAM coordinate system |
| | Object position of the reference frame in the SLAM coordinate system |
| | UAV position and pose of the current frame in the ENU coordinate system |
| R | The rotation matrix |
| t | The translation vector |
| s | The scale factor |
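The symbol list describes two building blocks of the semi-dense RoI mapping. The first is a ZNCC matching score between pixel blocks A and B. The second is a Gaussian estimate of inverse depth (mean and error variance) that is updated with each new observation.

The following is a minimal sketch of both, under these assumptions:
- ZNCC uses the standard definition [21].
- The depth update is the product-of-Gaussians rule used in inverse-depth filters [22,23].
- The true/false-depth (inlier/outlier) mixture listed among the symbols is deliberately omitted.
- The function names are hypothetical.

```python
import numpy as np


def zncc(block_a, block_b, eps=1e-12):
    """Zero-mean normalized cross-correlation of two equally sized pixel blocks."""
    a = np.asarray(block_a, dtype=float).ravel()
    b = np.asarray(block_b, dtype=float).ravel()
    if a.shape != b.shape:
        raise ValueError("blocks must have the same number of pixels")
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    if denom < eps:
        return 0.0  # a flat block carries no texture to match
    return float(np.sum(a * b) / denom)


def fuse_inverse_depth(mu, var, mu_obs, var_obs):
    """Fuse a Gaussian inverse-depth estimate with a new Gaussian observation.

    Product of two Gaussians: the result is a variance-weighted mean whose
    variance is smaller than either input variance.
    """
    var_new = var * var_obs / (var + var_obs)
    mu_new = (var_obs * mu + var * mu_obs) / (var + var_obs)
    return mu_new, var_new


patch = np.array([[10.0, 20.0], [30.0, 40.0]])
print(zncc(patch, 2 * patch + 5))  # same block under an affine brightness change
print(fuse_inverse_depth(0.5, 0.01, 0.6, 0.01))
```

ZNCC subtracts each block's mean and normalizes by its spread. As a result, the score is unchanged when one block undergoes an affine brightness change, which complements the small-region brightness-constancy argument in Section 3.2.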

References

- [1] Nguyen, V.N.; Jenssen, R.; Roverso, D. Automatic autonomous vision-based power line inspection: A review of current status and the potential role of deep learning. Int. J. Electr. Power Energy Syst. 2018, 99, 107–120.

- [2] Luo, Y.; Yu, X.; Yang, D.; Zhou, B. A survey of intelligent transmission line inspection based on unmanned aerial vehicle. Artif. Intell. Rev. 2022. Early Access.

- [3] Liu, C.; Shao, Y.; Cai, Z.; Li, Y. Unmanned Aerial Vehicle Positioning Algorithm Based on the Secant Slope Characteristics of Transmission Lines. IEEE Access 2020, 8, 43229–43242.

- [4] Xu, C.; Li, Q.; Zhou, Q.; Zhang, S.; Yu, D.; Ma, Y. Power Line-Guided Automatic Electric Transmission Line Inspection System. IEEE Trans. Instrum. Meas. 2022, 71, 1–18.

- [5] Zhang, Y.; Liao, R.; Wang, Y.; Liao, J.; Yuan, X.; Kang, T. Transmission Line Wire Following System Based on Unmanned Aerial Vehicle 3D Lidar. J. Phys. Conf. Ser. 2020, 1575, 012108.

- [6] Li, Y.C.; Zhang, W.B.; Li, P.; Ning, Y.H.; Suo, C.G. A Method for Autonomous Navigation and Positioning of UAV Based on Electric Field Array Detection. Sensors 2021, 21, 1146.

- [7] Bian, J.; Hui, X.; Zhao, X.; Tan, M. A Point-Line-Based SLAM Framework for UAV Close Proximity Transmission Tower Inspection. In Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO), Kuala Lumpur, Malaysia, 12–15 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1016–1021.

- [8] Cantieri, A.; Ferraz, M.; Szekir, G.; Antônio Teixeira, M.; Lima, J.; Schneider Oliveira, A.; Aurélio Wehrmeister, M. Cooperative UAV–UGV Autonomous Power Pylon Inspection: An Investigation of Cooperative Outdoor Vehicle Positioning Architecture. Sensors 2020, 20, 6384.

- [9] Garg, S.; Sünderhauf, N.; Dayoub, F.; Morrison, D.; Cosgun, A.; Carneiro, G.; Wu, Q.; Chin, T.J.; Reid, I.; Gould, S.; et al. Semantics for Robotic Mapping, Perception and Interaction: A Survey. Found. Trends® Robot. 2020, 8, 1–224.

- [10] Wang, J.; Rünz, M.; Agapito, L. DSP-SLAM: Object Oriented SLAM with Deep Shape Priors. arXiv 2021, arXiv:2108.09481v2.

- [11] Li, X.; Ao, H.; Belaroussi, R.; Gruyer, D. Fast semi-dense 3D semantic mapping with monocular visual SLAM. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 385–390.

- [12] Nicholson, L.; Milford, M.; Sünderhauf, N. QuadricSLAM: Dual Quadrics From Object Detections as Landmarks in Object-Oriented SLAM. IEEE Robot. Autom. Lett. 2019, 4, 1–8.

- [13] Yang, S.; Scherer, S. CubeSLAM: Monocular 3-D Object SLAM. IEEE Trans. Robot. 2019, 35, 925–938.

- [14] Fedor, C.L. Adding Features To Direct Real Time Semi-dense Monocular SLAM. Master’s Thesis, Penn State University, State College, PA, USA, 2016.

- [15] Zhai, Y.; Yang, X.; Wang, Q.; Zhao, Z.; Zhao, W. Hybrid Knowledge R-CNN for Transmission Line Multifitting Detection. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.

- [16] Kong, L.F.; Zhu, X.H.; Wang, G.Y. Context Semantics for Small Target Detection in Large-Field Images with Two Cascaded Faster R-CNNs. In Proceedings of the 3rd Annual International Conference on Information System and Artificial Intelligence (ISAI), Suzhou, China, 22–24 June 2018; IOP Publishing Ltd: Bristol, UK, 2018; Volume 1069.

- [17] Dai, Z.; Yi, J.; Zhang, H.; Wang, D.; Huang, X.; Ma, C. CODNet: A Center and Orientation Detection Network for Power Line Following Navigation. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- [18] Chen, W.; Li, Y.; Zhao, Z. InsulatorGAN: A Transmission Line Insulator Detection Model Using Multi-Granularity Conditional Generative Adversarial Nets for UAV Inspection. Remote Sens. 2021, 13, 3971.

- [19] Ji, Z.; Liao, Y.; Zheng, L.; Wu, L.; Yu, M.; Feng, Y. An Assembled Detector Based on Geometrical Constraint for Power Component Recognition. Sensors 2019, 19, 3517.

- [20] Yu, C.; Liu, Z.; Liu, X.J.; Xie, F.; Yang, Y.; Wei, Q.; Fei, Q. DS-SLAM: A Semantic Visual SLAM towards Dynamic Environments. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1168–1174.

- [21] Lewis, J.P. Fast Normalized Cross-correlation. Vis. Interface 1995, 10, 120–133.

- [22] Civera, J.; Davison, A.J.; Montiel, J.M.M. Inverse Depth Parametrization for Monocular SLAM. IEEE Trans. Robot. 2008, 24, 932–945.

- [23] Vogiatzis, G.; Hernandez, C. Video-based, Real-time Multi-view Stereo. Image Vis. Comput. 2011, 29, 434–441.

- [24] Umeyama, S. Least-squares estimation of transformation parameters between two point patterns. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 376–380.

- [25] Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

- [26] Engel, J.; Schöps, T.; Cremers, D. LSD-SLAM: Large-Scale Direct Monocular SLAM. In Proceedings of the Computer Vision – ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 834–849.

- [27] Mur-Artal, R.; Tardos, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- [28] He, T.; Zeng, Y.H.; Hu, Z.L. Research of Multi-Rotor UAVs Detailed Autonomous Inspection Technology of Transmission Lines Based on Route Planning. IEEE Access 2019, 7, 114955–114965.

- [29] Guan, H.C.; Sun, X.L.; Su, Y.J.; Hu, T.Y.; Wang, H.T.; Wang, H.P.; Peng, C.G.; Guo, Q.H. UAV-lidar aids automatic intelligent powerline inspection. Int. J. Electr. Power Energy Syst. 2021, 130, 106987.

- [30] Gui, J.J.; Gu, D.B.; Wang, S.; Hu, H.S. A review of visual inertial odometry from filtering and optimisation perspectives. Adv. Robot. 2015, 29, 1289–1301.

| Objects | Object Detection (Literature) | Aerial Image Fault Detection mAP (UAV) | Aerial Image Fault Detection mAP (Copter) |
|---|---|---|---|
| Foundation | / | 44% | 56% |
| Cable | Precision: 94% [17] | 66% | 23% |
| Insulator | AP: 96.4% [18] | 74% | 89% |
| Damper | AP: 95.2% [19] | / | 87% |
| Large-size fittings | mAP: 78.6% [15] | 77% | / |
| Small-size fittings | / | 87% | 67% |
| Tower | AP: 95% [16] | 63% | / |
| Ancillary facilities | AP: 73.2% [16] | 52% | 82% |

| Method | Component | RMSE | Mean | Median | Max | Min | S.D. |
|---|---|---|---|---|---|---|---|
| GPS | Resultant | 7.7874 | 7.7847 | 7.8375 | 8.1800 | 7.2905 | 0.2065 |
| | x | 1.5370 | 1.5254 | 1.5160 | 1.9826 | 1.1034 | 0.1885 |
| | y | 5.4175 | 5.4095 | 5.4410 | 5.9644 | 4.7497 | 0.2936 |
| | z | 5.3788 | 5.3784 | 5.3839 | 5.5642 | 5.1226 | 0.0696 |
| GPS-alignment | Resultant | 0.3790 | 0.3523 | 0.3790 | 0.6457 | 0.0003 | 0.1399 |
| | x | 0.2010 | 0.1629 | 0.1594 | 0.5271 | 0.0001 | 0.1177 |
| | y | 0.3053 | 0.2602 | 0.2711 | 0.6383 | 0.0001 | 0.1597 |
| | z | 0.1004 | 0.0855 | 0.0820 | 0.2581 | 0.0003 | 0.0526 |
| PTI-SLAM | Resultant | 0.1447 | 0.1219 | 0.1058 | 0.2693 | 0.0085 | 0.0779 |
| | x | 0.1192 | 0.0921 | 0.0798 | 0.2119 | 0.0009 | 0.0757 |
| | y | 0.0787 | 0.0598 | 0.0448 | 0.1803 | 0.0005 | 0.0512 |
| | z | 0.0230 | 0.0176 | 0.0129 | 0.0515 | 0.0003 | 0.0148 |

| | Centroid x (m) | Centroid y (m) | Centroid z (m) | Number of Points |
|---|---|---|---|---|
| Unfiltered | 1.4361 | −1.8895 | 0.8450 | 7295 |
| Statistical filtered | 1.1414 | −1.8834 | 0.8489 | 5514 |
| Ground truth | 0.7759 | −2.0697 | 0.8904 | / |
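The "Statistical filtered" row is consistent with a common statistical outlier filter. Such a filter drops points whose mean distance to their k nearest neighbours is unusually large, which pulls the centroid back toward the true object. The paper's exact filter and parameters are not given here. This sketch assumes the widely used mean + α·σ rule (as in PCL's StatisticalOutlierRemoval). The values of k and α, the function name and the synthetic cloud are all hypothetical.

```python
import numpy as np


def statistical_outlier_filter(points, k=8, alpha=1.0):
    """Drop points whose mean distance to their k nearest neighbours is large.

    A point is kept if its mean k-NN distance is at most mean + alpha * std of
    that quantity over the whole cloud. Uses brute-force O(n^2) distances, which
    suits a few hundred points; a KD-tree is preferable for thousands of points.
    """
    pts = np.asarray(points, dtype=float)
    if len(pts) <= k:
        return pts
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=2)
    # Column 0 of each sorted row is the zero distance from a point to itself.
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + alpha * mean_knn.std()
    return pts[mean_knn <= threshold]


# Synthetic RoI cloud: a tight cluster plus a sparse streak of stray points.
rng = np.random.default_rng(1)
center = np.array([0.78, -2.07, 0.89])
inliers = center + rng.normal(scale=0.01, size=(200, 3))
strays = np.array([[3.0 + i, 0.0, 0.0] for i in range(10)])
cloud = np.vstack([inliers, strays])
kept = statistical_outlier_filter(cloud)
print(cloud.mean(axis=0), kept.mean(axis=0), len(kept))
```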

| | Centroid x (m) | Centroid y (m) | Centroid z (m) | Euclidean Distance Error (m) |
|---|---|---|---|---|
| Ground truth | 0.7759 | −2.0697 | 0.8904 | / |
| 1st batch | 0.9977 | −1.9386 | 0.8331 | 0.2639 |
| 2 batches fusion | 0.9765 | −1.8928 | 0.8471 | 0.2709 |
| 4 batches fusion | 0.9827 | −1.9155 | 0.8457 | 0.2618 |
| 6 batches fusion | 1.0182 | −1.9001 | 0.8418 | 0.2997 |
| 8 batches fusion | 1.0627 | −1.8784 | 0.8445 | 0.3478 |
| 10 batches fusion | 1.0552 | −1.8942 | 0.8447 | 0.3319 |
| 12 batches fusion | 1.0538 | −1.9164 | 0.8542 | 0.3194 |
| RMSE | 0.2472 | 0.1656 | 0.0463 | 0.3011 |
| Mean | 0.2449 | 0.1645 | 0.0460 | 0.2993 |
| Median | 0.2423 | 0.1695 | 0.0457 | 0.2997 |
| S.D. | 0.0336 | 0.0184 | 0.0059 | 0.0322 |
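The summary rows can be reproduced from the centroids above. The short script below recomputes each batch's Euclidean error against the ground truth, then the RMSE, mean, median and standard deviation. It makes two assumptions. First, the per-axis summary rows describe absolute coordinate errors. Second, S.D. is the population standard deviation (NumPy's default `ddof=0`). Because the inputs are rounded to four decimals, the results should match the table only to within about 0.002 m.

```python
import numpy as np

# Values copied from the table above (metres).
ground_truth = np.array([0.7759, -2.0697, 0.8904])
batch_labels = ["1", "2", "4", "6", "8", "10", "12"]  # number of fused batches
centroids = np.array([
    [0.9977, -1.9386, 0.8331],
    [0.9765, -1.8928, 0.8471],
    [0.9827, -1.9155, 0.8457],
    [1.0182, -1.9001, 0.8418],
    [1.0627, -1.8784, 0.8445],
    [1.0552, -1.8942, 0.8447],
    [1.0538, -1.9164, 0.8542],
])


def summarize(errors):
    """RMSE, mean, median and population standard deviation of an error list."""
    errors = np.asarray(errors, dtype=float)
    return {
        "rmse": float(np.sqrt(np.mean(errors ** 2))),
        "mean": float(np.mean(errors)),
        "median": float(np.median(errors)),
        "sd": float(np.std(errors)),  # ddof=0, i.e. population S.D.
    }


euclidean = np.linalg.norm(centroids - ground_truth, axis=1)
axis_errors = np.abs(centroids - ground_truth)  # assumed meaning of the per-axis rows
axis_mean = axis_errors.mean(axis=0)
stats = summarize(euclidean)

for label, err in zip(batch_labels, euclidean):
    print(f"{label:>2} batch(es): {err:.4f} m")
print({name: round(value, 4) for name, value in stats.items()})
```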

| Testing Scene | Window Coefficient N | Object Location Error (m) | Total Delay (ms) | Depth Estimation (ms) | Positioning (ms) | Number of Points |
|---|---|---|---|---|---|---|
| Normal | 6 | 0.2855 | 521 | 488 | 33 | 4270 |
| | 8 | 0.2842 | 601 | 567 | 34 | 4437 |
| | 10 | 0.2845 | 671 | 637 | 34 | 4537 |
| | 12 | 0.2834 | 740 | 706 | 34 | 4626 |
| | 15 | 0.2856 | 829 | 795 | 34 | 4687 |
| Extreme disadvantage | 6 | failed | 402 | 401 | 1 | / |
| | 8 | 0.5479 | 579 | 575 | 4 | 388 |
| | 10 | 0.5514 | 702 | 696 | 6 | 801 |
| | 12 | 0.5625 | 849 | 842 | 7 | 948 |
| | 15 | 0.6049 | 958 | 950 | 8 | 1114 |

| Observation | Object Depth Error | Object Location Error | SLAM Location Error | SLAM Orientation Error | Object Distance (m) | Deviation from Center (pixel) | Mean Movement of Keyframes (m) |
|---|---|---|---|---|---|---|---|
| obs-1 | 0.0786 | 0.0791 | 0.0176 | 1.9693 | 2.224 | 49.6688 | 0.8462 |
| obs-2 | 0.1009 | 0.1213 | 0.0009 | 3.2653 | 1.8644 | 73.8296 | 0.845 |
| obs-3 | 0.1515 | 0.1118 | 0.0662 | 2.3923 | 3.443 | 109.0948 | 1.8682 |
| obs-4 | 0.1878 | 0.143 | 0.0724 | 3.0785 | 3.0254 | 145.2951 | 1.2238 |
| obs-5 | 0.1977 | 0.1108 | 0.0107 | 1.9836 | 4.1482 | 79.1419 | 1.0855 |

| Method | Attributes | Testing Scene | Success Ratio | RMSE (m) |
|---|---|---|---|---|
| LSD-SLAM [26] | direct method, dense mapping | normal | 6/10 | 0.3731 |
| | | rapid rotation | 4/10 | 0.4126 |
| | | light change | 3/10 | 0.4335 |
| Direct ORB-SLAM2 | direct method, semi-dense mapping | normal | 7/10 | 0.0911 |
| | | rapid rotation | 3/10 | 0.2232 |
| | | light change | 3/10 | 0.2159 |
| ORB-SLAM2 [27] | feature-based, sparse mapping | normal | 10/10 | 0.1083 |
| | | rapid rotation | 6/10 | 0.2332 |
| | | light change | 10/10 | 0.197 |
| PTI-SLAM | hybrid method, sparse environment and semi-dense object mapping | normal | 10/10 | 0.1279 |
| | | rapid rotation | 6/10 | 0.2802 |
| | | light change | 10/10 | 0.2150 |

| Method | Attributes | Tracking Runtime (ms) | Semantic Segmentation Runtime (ms) | Semantic Positioning Runtime (ms) |
|---|---|---|---|---|
| LSD-SLAM [26] | monocular direct method | 42 | / | / |
| Direct ORB-SLAM2 | monocular direct method | 49 | / | / |
| ORB-SLAM2 [27] | monocular feature-based | 81 | / | / |
| DS-SLAM [20] | RGB-Depth/semantic feature-based | 506 | 298 | / |
| Cube-SLAM [13] | monocular/semantic feature-based | 130 | / | / |
| PTI-SLAM | monocular/semantic hybrid method | 81 | 291 | 703/0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Z.; Miao, X.; Xie, Z.; Jiang, H.; Chen, J. Power Tower Inspection Simultaneous Localization and Mapping: A Monocular Semantic Positioning Approach for UAV Transmission Tower Inspection. Sensors 2022, 22, 7360. https://doi.org/10.3390/s22197360