Development of High-Fidelity Automotive LiDAR Sensor Model with Standardized Interfaces

Abstract

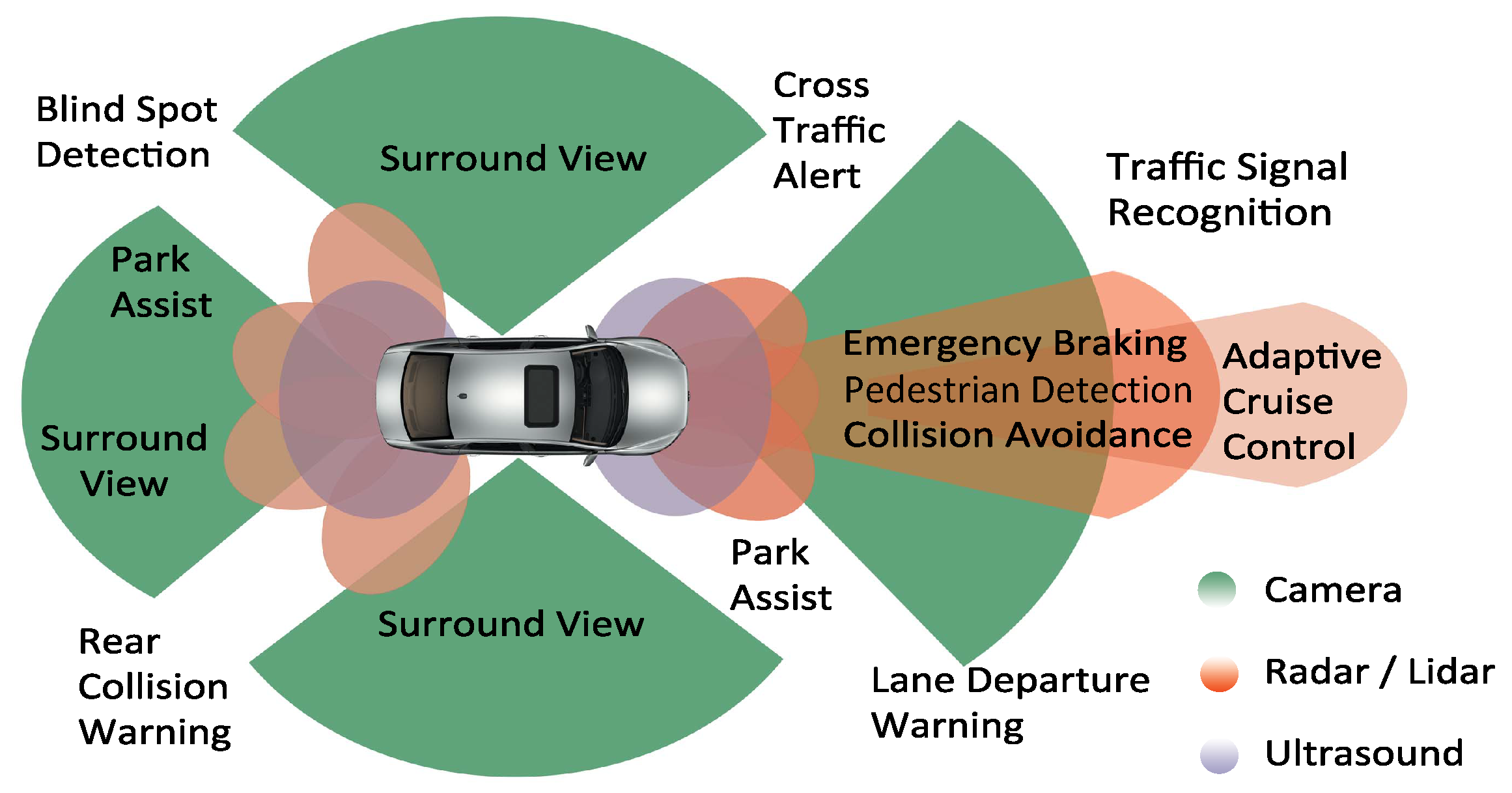

1. Introduction

2. Background

LiDAR Working Principle

3. State of the Art

4. LiDAR Modeling Building Blocks

4.1. Open Standards

4.2. Functional Mock-Up Interface

4.3. Open Simulation Interface

5. LiDAR Sensor Model

5.1. Scan Pattern

5.2. Link Budget Module

5.3. SiPM Detector Module

5.4. Circuit Module

5.5. Ranging Module

6. Results

6.1. Validation of the Model on Time Domain

6.2. Validation of the Model on Point Cloud Level

6.2.1. Lab Test

- (1) The number of received points from the surfaces of the simulated and real objects of interest.

- (2) The comparison of the mean intensity values of the reflections received from the surfaces of the simulated and real targets.

- (3) The distance error between the point clouds obtained from the real and virtual objects, which should not exceed the range accuracy of the real sensor (2 cm in this case).
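The three lab-test criteria above can be checked per target with a short script. This is a minimal sketch under stated assumptions, not the authors' implementation. Each point is assumed to be an `(x, y, z, intensity)` tuple in metres. The text does not say how simulated and real points are paired, so each simulated point is assumed to be matched to its nearest real point (brute-force nearest neighbour), and the largest such Euclidean distance is taken as the distance error. The names `compare_clouds` and `RANGE_ACCURACY_M` are hypothetical; only the 2 cm threshold comes from the text.

```python
import math
from typing import Dict, List, Tuple

# Assumed point layout: (x, y, z, intensity), coordinates in metres.
Point = Tuple[float, float, float, float]

# Range accuracy of the real sensor quoted in the text (2 cm).
RANGE_ACCURACY_M = 0.02


def mean_intensity(cloud: List[Point]) -> float:
    """Mean intensity of all reflections received from one target."""
    return sum(p[3] for p in cloud) / len(cloud)


def nearest_distance(p: Point, cloud: List[Point]) -> float:
    """Euclidean distance from p to its nearest neighbour in cloud (brute force)."""
    return min(math.dist(p[:3], q[:3]) for q in cloud)


def compare_clouds(sim: List[Point], real: List[Point]) -> Dict[str, object]:
    """Compute the three comparison quantities for one target (hypothetical helper)."""
    # Assumption: every simulated point is paired with its closest real point.
    errors = [nearest_distance(p, real) for p in sim]
    max_error = max(errors)
    return {
        "points_sim": len(sim),              # criterion (1)
        "points_real": len(real),
        "intensity_sim": mean_intensity(sim),  # criterion (2)
        "intensity_real": mean_intensity(real),
        "max_distance_error_m": max_error,   # criterion (3)
        "within_accuracy": max_error <= RANGE_ACCURACY_M,
    }
```

Nearest-neighbour matching is one-directional and costs O(N·M) distance evaluations; for dense clouds a spatial index such as a k-d tree, or a symmetric metric such as the Chamfer distance, would likely be the more practical choice.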

6.2.2. Proving Ground Tests

7. Conclusions

8. Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADAS | Advanced Driver-Assistance System |
| LiDAR | Light Detection And Ranging |
| RADAR | Radio Detection And Ranging |
| FMU | Functional Mock-Up Unit |
| OSI | Open Simulation Interface |
| FMI | Functional Mock-Up Interface |
| MAPE | Mean Absolute Percentage Error |
| ABS | Anti-Lock Braking System |
| ACC | Adaptive Cruise Control |
| ESC | Electronic Stability Control |
| LDW | Lane Departure Warning |
| PA | Parking Assistant |
| TSR | Traffic-Sign Recognition |
| MiL | Model-in-the-Loop |
| HiL | Hardware-in-the-Loop |
| SiL | Software-in-the-Loop |
| IP | Intellectual Property |
| KPIs | Key Performance Indicators |
| OEMs | Original Equipment Manufacturers |
| FoV | Field of View |
| RTDT | Round-Trip Delay Time |
| ToF | Time of Flight |
| HAD | Highly Automated Driving |
| RSI | Raw Signal Interface |
| OSMP | OSI Sensor Model Packaging |
| SNR | Signal-to-Noise Ratio |
| FSPL | Free-Space Path Loss |
| BRDF | Bidirectional Reflectance Distribution Function |
| SiPM | Silicon Photomultiplier |
| APD | Avalanche Photodiode |
| SPAD | Single-Photon Avalanche Diode |
| FX Engine | Effect Engine |
| MEMS | Microelectromechanical Systems |
| IDFT | Inverse Discrete Fourier Transform |
| DFT | Discrete Fourier Transform |
| TDS | Time Domain Signals |

References

- [1] KBA. Bestand nach Fahrzeugklassen und Aufbauarten. Available online: https://www.kba.de/DE/Statistik/Fahrzeuge/Bestand/FahrzeugklassenAufbauarten/2021/b_fzkl_zeitreihen.html?nn=3524712&fromStatistic=3524712&yearFilter=2021&fromStatistic=3524712&yearFilter=2021 (accessed on 15 April 2022).

- [2] Synopsys. What is ADAS? Available online: https://www.synopsys.com/automotive/what-is-adas.html (accessed on 26 August 2021).

- [3] Winkle, T. Safety benefits of automated vehicles: Extended findings from accident research for development, validation and testing. In Autonomous Driving; Springer: Berlin/Heidelberg, Germany, 2016; pp. 335–364.

- [4] Kalra, N.; Paddock, S.M. Driving to safety: How many miles of driving would it take to demonstrate autonomous vehicle reliability? Transp. Res. Part A Policy Pract. 2016, 94, 182–193.

- [5] Winner, H.; Hakuli, S.; Lotz, F.; Singer, C. Handbook of Driver Assistance Systems; Springer International Publishing: Cham, Switzerland, 2016; pp. 405–430.

- [6] VIVID Virtual Validation Methodology for Intelligent Driving Systems. Available online: https://www.safecad-vivid.net/ (accessed on 1 June 2022).

- [7] DIVP Driving Intelligence Validation Platform. Available online: https://divp.net/ (accessed on 24 May 2022).

- [8] VVM Verification Validation Methods. Available online: https://www.vvm-projekt.de/en/project (accessed on 24 May 2022).

- [9] SET Level. Available online: https://setlevel.de/en (accessed on 24 May 2022).

- [10] Kochhar, N. A Digital Twin for Holistic Autonomous Vehicle Development. ATZelectron. Worldw. 2021, 16, 8–13.

- [11] Blochwitz, T. Functional Mock-Up Interface for Model Exchange and Co-Simulation. 2016. Available online: https://fmi-standard.org/downloads/ (accessed on 20 March 2021).

- [12] ASAM e.V. ASAM OSI. Available online: https://www.asam.net/standards/detail/osi/ (accessed on 13 September 2022).

- [13] Schneider, S.-A.; Saad, K. Camera behavioral model and testbed setups for image-based ADAS functions. Elektrotech. Inf. 2018, 135, 328–334.

- [14] Rosenberger, P.; Holder, M.; Huch, S.; Winner, H.; Fleck, T.; Zofka, M.R.; Zöllner, J.M.; D’hondt, T.; Wassermann, B. Benchmarking and Functional Decomposition of Automotive Lidar Sensor Models. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 632–639.

- [15] IPG Automotive GmbH. CarMaker 10.0.2. Available online: https://ipg-automotive.com/en/products-solutions/software/carmaker/ (accessed on 12 March 2022).

- [16] dSPACE GmbH. AURELION 22.1. Available online: https://www.dspace.com/en/inc/home/news/aurelion_new-version_22-1.cfm (accessed on 12 March 2022).

- [17] Roriz, R.; Cabral, J.; Gomes, T. Automotive LiDAR Technology: A Survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6282–6297.

- [18] Fersch, T.; Buhmann, A.; Weigel, R. The influence of rain on small aperture LiDAR sensors. In Proceedings of the 2016 German Microwave Conference (GeMiC), Bochum, Germany, 14–16 March 2016; pp. 84–87.

- [19] McManamon, P.F. Field Guide to Lidar; SPIE Press: Bellingham, WA, USA, 2015.

- [20] Ahn, N.; Höfer, A.; Herrmann, M.; Donn, C. Real-time Simulation of Physical Multi-sensor Setups. ATZelectron. Worldw. 2020, 15, 8–11.

- [21] Neuwirthová, E.; Kuusk, A.; Lhotáková, Z.; Kuusk, J.; Albrechtová, J.; Hallik, L. Leaf Age Matters in Remote Sensing: Taking Ground Truth for Spectroscopic Studies in Hemiboreal Deciduous Trees with Continuous Leaf Formation. Remote Sens. 2021, 13, 1353.

- [22] Feilhauer, M.; Häring, J. A real-time capable multi-sensor model to validate ADAS in a virtual environment. In Fahrerassistenzsysteme; Springer Vieweg: Wiesbaden, Germany, 2017; pp. 227–256.

- [23] Hanke, T.; Hirsenkorn, N.; Dehlink, B.; Rauch, A.; Rasshofer, R.; Biebl, E. Generic architecture for simulation of ADAS sensors. In Proceedings of the 16th International Radar Symposium (IRS), Dresden, Germany, 24–26 June 2015; pp. 125–130.

- [24] Stolz, M.; Nestlinger, G. Fast generic sensor models for testing highly automated vehicles in simulation. Elektrotech. Inf. 2018, 135, 365–369.

- [25] Hirsenkorn, N.; Hanke, T.; Rauch, A.; Dehlink, B.; Rasshofer, R.; Biebl, E. A non-parametric approach for modeling sensor behavior. In Proceedings of the 16th International Radar Symposium (IRS), Dresden, Germany, 24–26 June 2015; pp. 131–136.

- [26] Muckenhuber, S.; Holzer, H.; Rubsam, J.; Stettinger, G. Object-based sensor model for virtual testing of ADAS/AD functions. In Proceedings of the IEEE International Conference on Connected Vehicles and Expo (ICCVE), Graz, Austria, 4–8 November 2019; pp. 1–6.

- [27] Linnhoff, C.; Rosenberger, P.; Winner, H. Refining Object-Based Lidar Sensor Modeling—Challenging Ray Tracing as the Magic Bullet. IEEE Sens. J. 2021, 21, 24238–24245.

- [28] Zhao, J.; Li, Y.; Zhu, B.; Deng, W.; Sun, B. Method and Applications of Lidar Modeling for Virtual Testing of Intelligent Vehicles. IEEE Trans. Intell. Transp. Syst. 2021, 22, 2990–3000.

- [29] Li, Y.; Wang, Y.; Deng, W.; Li, X.; Jiang, L. LiDAR Sensor Modeling for ADAS Applications under a Virtual Driving Environment; SAE Technical Paper; SAE International: Warrendale, PA, USA, 2016.

- [30] Schaefer, A.; Luft, L.; Burgard, W. An Analytical Lidar Sensor Model Based on Ray Path Information. IEEE Robot. Autom. Lett. 2017, 2, 1405–1412.

- [31] Rosenberger, P.; Holder, M.F.; Cianciaruso, N.; Aust, P.; Tamm-Morschel, J.F.; Linnhoff, C.; Winner, H. Sequential lidar sensor system simulation: A modular approach for simulation-based safety validation of automated driving. Automot. Engine Technol. 2020, 5, 187–197.

- [32] Gschwandtner, M.; Kwitt, R.; Uhl, A.; Pree, W. Blensor: Blender Sensor Simulation Toolbox. In Proceedings of the 7th International Symposium on Visual Computing, Las Vegas, NV, USA, 26–28 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 199–208.

- [33] Goodin, C.; Kala, R.; Carrillo, A.; Liu, L.Y. Sensor modeling for the Virtual Autonomous Navigation Environment. In Proceedings of IEEE Sensors 2009, Christchurch, New Zealand, 25–28 October 2009; pp. 1588–1592.

- [34] Bechtold, S.; Höfle, B. HELIOS: A multi-purpose LIDAR simulation framework for research, planning and training of laser scanning operations with airborne, ground-based mobile and stationary platforms. ISPRS Ann. Photogramm. Remote. Sens. Spat. Inf. Sci. 2016, 3, 161–168.

- [35] Hanke, T.; Schaermann, A.; Geiger, M.; Weiler, K.; Hirsenkorn, N.; Rauch, A.; Schneider, S.A.; Biebl, E. Generation and validation of virtual point cloud data for automated driving systems. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–6.

- [36] Goodin, C.; Carruth, D.; Doude, M.; Hudson, C. Predicting the influence of rain on LIDAR in ADAS. Electronics 2019, 8, 89.

- [37] Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

- [38] Vector. DYNA4 Sensor Simulation: Environment Perception for ADAS and AD. 2022. Available online: https://www.vector.com/int/en/products/products-a-z/software/dyna4/sensor-simulation/ (accessed on 16 January 2022).

- [39] dSPACE. AURELION Lidar Model: Realistic Simulation of Lidar Sensors. 2022. Available online: https://www.dspace.com/en/pub/home/products/sw/experimentandvisualization/aurelion_sensor-realistic_sim/aurelion_lidar.cfm#175_60627 (accessed on 16 January 2022).

- [40] Roth, E.; Dirndorfer, T.; Neumann-Cosel, K.V.; Fischer, M.O.; Ganslmeier, T.; Kern, A.; Knoll, A. Analysis and Validation of Perception Sensor Models in an Integrated Vehicle and Environment Simulation. In Proceedings of the 22nd International Technical Conference on the Enhanced Safety of Vehicles (ESV), Washington, DC, USA, 13–16 June 2011.

- [41] Gomes, C.; Thule, C.; Broman, D.; Larsen, P.G.; Vangheluwe, H. Co-simulation: State of the art. arXiv 2017, arXiv:1702.00686.

- [42] Blochwitz, T.; Otter, M.; Arnold, M.; Bausch, C.; Clauß, C.; Elmqvist, H.; Junghanns, A.; Mauss, J.; Monteiro, M.; Neidhold, T.; et al. The Functional Mockup Interface for Tool independent Exchange of Simulation Models. In Proceedings of the 8th International Modelica Conference 2011, Dresden, Germany, 20–22 March 2011; pp. 173–184.

- [43] Van Driesten, C.; Schaller, T. Overall approach to standardize AD sensor interfaces: Simulation and real vehicle. In Fahrerassistenzsysteme 2018; Springer Vieweg: Wiesbaden, Germany, 2019; pp. 47–55.

- [44] ASAM e.V. ASAM OSI Sensor Model Packaging Specification 2022. Available online: https://opensimulationinterface.github.io/osi-documentation/#_osi_sensor_model_packaging (accessed on 7 June 2021).

- [45] ASAM e.V. ASAM Open Simulation Interface (OSI) 2022. Available online: https://opensimulationinterface.github.io/open-simulation-interface/index.html (accessed on 30 June 2022).

- [46] IPG CarMaker. Reference Manual Version 9.0.1; IPG Automotive GmbH: Karlsruhe, Germany, 2021.

- [47] Fink, M.; Schardt, M.; Baier, V.; Wang, K.; Jakobi, M.; Koch, A.W. Full-Waveform Modeling for Time-of-Flight Measurements based on Arrival Time of Photons. arXiv 2022, arXiv:2208.03426.

- [48] Blickfeld Scan Pattern. Available online: https://docs.blickfeld.com/cube/latest/scan_pattern.html (accessed on 7 July 2022).

- [49] Petit, F. Myths about LiDAR Sensor Debunked. Available online: https://www.blickfeld.com/de/blog/mit-den-lidar-mythen-aufgeraeumt-teil-1/ (accessed on 5 July 2022).

- [50] Fersch, T.; Weigel, R.; Koelpin, A. Challenges in miniaturized automotive long-range lidar system design. In Proceedings of the Three-Dimensional Imaging, Visualization, and Display, Orlando, FL, USA, 10 May 2017; SPIE: Bellingham, WA, USA, 2017; pp. 160–171.

- [51] National Renewable Energy Laboratory. Reference Air Mass 1.5 Spectra: ASTM G-173. Available online: https://www.nrel.gov/grid/solar-resource/spectra-am1.5.html (accessed on 26 February 2022).

- [52] French, A.; Taylor, E. An Introduction to Quantum Physics; Norton: New York, NY, USA, 1978.

- [53] Fox, A.M. Quantum Optics: An Introduction; Oxford Master Series in Physics Atomic, Optical, and Laser Physics; Oxford University Press: New York, NY, USA, 2007; ISBN 978-0-19-856673-1.

- [54] Pasquinelli, K.; Lussana, R.; Tisa, S.; Villa, F.; Zappa, F. Single-Photon Detectors Modeling and Selection Criteria for High-Background LiDAR. IEEE Sens. J. 2020, 20, 7021–7032.

- [55] Bretz, T.; Hebbeker, T.; Kemp, J. Extending the dynamic range of SiPMs by understanding their non-linear behavior. arXiv 2020, arXiv:2010.14886.

- [56] Swamidass, P.M. (Ed.) Mean Absolute Percentage Error (MAPE). In Encyclopedia of Production and Manufacturing Management; Springer: Boston, MA, USA, 2000; p. 462.

- [57] Lang, S.; Murrow, G. The Distance Formula. In Geometry; Springer: New York, NY, USA, 1988; pp. 110–122.

| Authors | Model Type | Input of Model | Output of Model | Covered Effects | Validation Approach |
|---|---|---|---|---|---|
| Hanke et al. [23] | Ideal/low-fidelity | Object list | Object list | FoV and object occlusion | N/A |
| Stolz & Nestlinger [24] | Ideal/low-fidelity | Object list | Object list | FoV and object occlusion | N/A |
| Muckenhuber et al. [26] | Phenomenological/low-fidelity | Object list | Object list | FoV, object class definition, occlusion, and probability of false-positive and false-negative detections | Simulation results |
| Linnhoff et al. [27] | Phenomenological/low-fidelity | Object list | Object list | Partial occlusion of objects, limited angular view, and decrease in the effective range due to atmospheric attenuation | Comparison of simulation results with a ray tracing model at object level |
| Hirsenkorn et al. [25] | Phenomenological/low-fidelity | Object list | Object list | Ranging errors, latency, false positives, and occlusion | Simulation results |
| Zhao et al. [28] | Phenomenological/low-fidelity | Object list | Object list or point clouds | Occlusion, FoV, and beam divergence | Simulation results |
| Li et al. [29] | Physical/medium-fidelity | Object list | Object list or point clouds | Occlusion, FoV, and beam divergence | Simulation results |
| Rosenberger et al. [31] | Physical/medium-fidelity | Ray-casting | Point clouds & object list | Beam divergence, SNR, detection threshold, and material surface properties | Qualitative comparison with real and reference measurements at the object list level for one dynamic scenario |
| Gschwandtner et al. [32] | Physical/medium-fidelity | Ray-casting | Point clouds | Sensor noise, physical properties of materials, and FSPL | Simulation results |
| Goodin et al. [33] | Physical/medium-fidelity | Ray-casting | Point clouds | Beam divergence and a Gaussian beam profile | Simulation results |
| Bechtold & Höfle [34] | Physical/medium-fidelity | Ray-casting | Point clouds | Beam divergence, atmospheric attenuation, scanner efficiency, and material surface properties | Simulation results |
| Hanke et al. [35] | Physical/medium-fidelity | Ray-tracing | Point clouds | Beam divergence, material surface properties, detection threshold, noise effects, and atmospheric attenuation | Qualitative comparison of synthetic and real data at the point cloud level for one dynamic scenario |
| Li et al. [29] | Physical/medium-fidelity | Ray-tracing | Point clouds | Beam divergence and power loss due to rain, fog, snow, and haze | Simulation results for one static and one dynamic scenario |
| Zhao et al. [28] | Physical/medium-fidelity | Ray-tracing | Point clouds | False alarms due to backscattering from water droplets | Qualitative comparison with measurement |
| CARLA [37] | Physical/medium-fidelity | Ray-casting | Point clouds | Signal attenuation, noise, and drop-off in the number of points due to external perturbations | N/A |
| CarMaker [20] | Physical/medium-fidelity | Ray-tracing | Point clouds | Noise and the drop-off in intensity and number of points due to atmospheric attenuation | N/A |
| DYNA4 [38] | Physical/medium-fidelity | Ray-casting | Point clouds | Physical effects, material surface reflectivity, and ray angle of incidence | N/A |
| VTD [40] | Physical/medium-fidelity | Ray-tracing | Point clouds | Material properties | N/A |
| AURELION [39] | Physical/medium-fidelity | Ray-tracing | Point clouds | Material surface reflectivity, sensor noise, atmospheric attenuation, and fast motion scan effect | N/A |
| Haider et al. (proposed model) | Physical/high-fidelity | Ray-tracing | Time domain & point clouds | Material surface reflectivity, beam divergence, FSPL, daylight, daylight filter, internal reflection of the detector, saturation of the detector from bright targets, detector shot noise and dark count rate, and detection threshold | Qualitative comparison of simulation and real measurement at the time domain and point cloud levels |

| Parameter | MAPE |
|---|---|
| Number of received points | 8.5% |
| Mean intensity | 9.3% |
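The percentages in the table above are consistent with a mean absolute percentage error between real and simulated values, MAPE = (100 % / n) · Σ |(A_i - F_i) / A_i|. A minimal sketch of that formula follows, assuming the real measurement A_i serves as the reference and the simulation output F_i as the compared value, with one pair per measurement or target; the text does not state how the samples were grouped, and the function name `mape` is illustrative only.

```python
from typing import Sequence


def mape(reference: Sequence[float], simulated: Sequence[float]) -> float:
    """Mean absolute percentage error in percent.

    reference: real-sensor values A_i (assumed to be the ground truth).
    simulated: model outputs F_i, paired index by index with reference.
    """
    if len(reference) != len(simulated) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in reference):
        # The relative error |(A - F) / A| is undefined for A = 0.
        raise ValueError("MAPE is undefined for zero reference values")
    total = sum(abs((a - f) / a) for a, f in zip(reference, simulated))
    return 100.0 * total / len(reference)
```

For example, hypothetical point counts of 100 and 200 from the real sensor against 90 and 220 from the model give a 10 % relative error each, and hence a MAPE of 10 %. Because MAPE divides by the reference value, it weights errors on weakly reflecting or distant targets (small A_i) more heavily.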

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Haider, A.; Pigniczki, M.; Köhler, M.H.; Fink, M.; Schardt, M.; Cichy, Y.; Zeh, T.; Haas, L.; Poguntke, T.; Jakobi, M.; et al. Development of High-Fidelity Automotive LiDAR Sensor Model with Standardized Interfaces. Sensors 2022, 22, 7556. https://doi.org/10.3390/s22197556

Haider A, Pigniczki M, Köhler MH, Fink M, Schardt M, Cichy Y, Zeh T, Haas L, Poguntke T, Jakobi M, et al. Development of High-Fidelity Automotive LiDAR Sensor Model with Standardized Interfaces. Sensors. 2022; 22(19):7556. https://doi.org/10.3390/s22197556

Chicago/Turabian Style: Haider, Arsalan, Marcell Pigniczki, Michael H. Köhler, Maximilian Fink, Michael Schardt, Yannik Cichy, Thomas Zeh, Lukas Haas, Tim Poguntke, Martin Jakobi, et al. 2022. "Development of High-Fidelity Automotive LiDAR Sensor Model with Standardized Interfaces" Sensors 22, no. 19: 7556. https://doi.org/10.3390/s22197556