1. Introduction

Cells in a living organism sometimes grow abnormally and form irregular masses that may impair the function of nearby healthy cells. Human brain cells can also become tumorous for several reasons, such as mutations or unrestrained cell division, disrupting the brain’s normal functionality and damaging healthy brain cells [1,2]. Brain tumors are considered among the most life-threatening diseases and claim thousands of lives annually. Early diagnosis holds the key to effective treatment and patient management. A radiologist normally identifies brain tumors manually from brain magnetic resonance imaging (MRI) scans [3,4]. This manual process is error-prone and time-consuming, even for expert radiologists, and the different types, shapes, and sizes of tumors make it even more challenging from a diagnostic point of view [5]. To overcome the challenges of accurate brain tumor detection (BTD) and identification, a reliable and accurate automated system [6] is essential to assist radiologists. This study focuses specifically on the accurate classification of MR images into tumorous and normal classes.

Radiologists utilize various medical imaging modalities to analyze brain images for tumor detection [7]. Owing to its non-invasive nature, MRI has become one of the most frequently used techniques for detecting brain tumors, and automated BTD from MR images has been studied extensively. Conventional machine learning (ML) approaches for detecting brain tumors from MRI consist of preprocessing, feature extraction, feature selection, and classification [8]. Since feature extraction and selection necessitate prior knowledge of the problem domain, they form the most critical stage of an effective automated BTD system [9]. However, these traditional ML approaches are time-consuming, are typically applied to limited data, and require tailored feature extraction techniques. In contrast, modern deep learning (DL) models perform better in image classification and detection. The automatic feature extraction in the deep layers of DL models enables them to compute reliable, discriminative feature maps from a large amount of labeled data; thus, no handcrafted feature extraction technique is required. For this reason, several studies have employed DL models to detect and classify brain tumors.

Several limitations are associated with existing automated approaches for BTD, including limited performance and the need to manually identify tumor regions before the ML model can classify an area as normal or affected. DL techniques, on the other hand, extract features automatically without manual intervention; however, the DL models proposed for BTD in the literature require large memory and high computation power. Furthermore, performance should be assessed with evaluation metrics other than accuracy alone, such as precision, recall, specificity, and F-score.
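
To make the metrics named above concrete, the following minimal sketch computes them from binary predictions. It is an illustration only, not the evaluation code used in this study; the function name `binary_metrics` and the label convention (1 = tumor, 0 = normal) are assumptions introduced here.

```python
def binary_metrics(y_true, y_pred):
    """Compute common binary-classification metrics.

    Assumed (hypothetical) label convention: 1 = tumor, 0 = normal.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # tumors found
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # normals kept
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed tumors

    def safe_div(num, den):
        # Return 0.0 instead of raising when a class is absent.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)        # also called sensitivity
    specificity = safe_div(tn, tn + fp)
    accuracy = safe_div(tp + tn, len(pairs))
    f_score = safe_div(2 * precision * recall, precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f_score": f_score,
    }
```

Reporting recall and specificity alongside accuracy matters most when the two classes are imbalanced, because a model can then reach high accuracy while missing many tumors.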

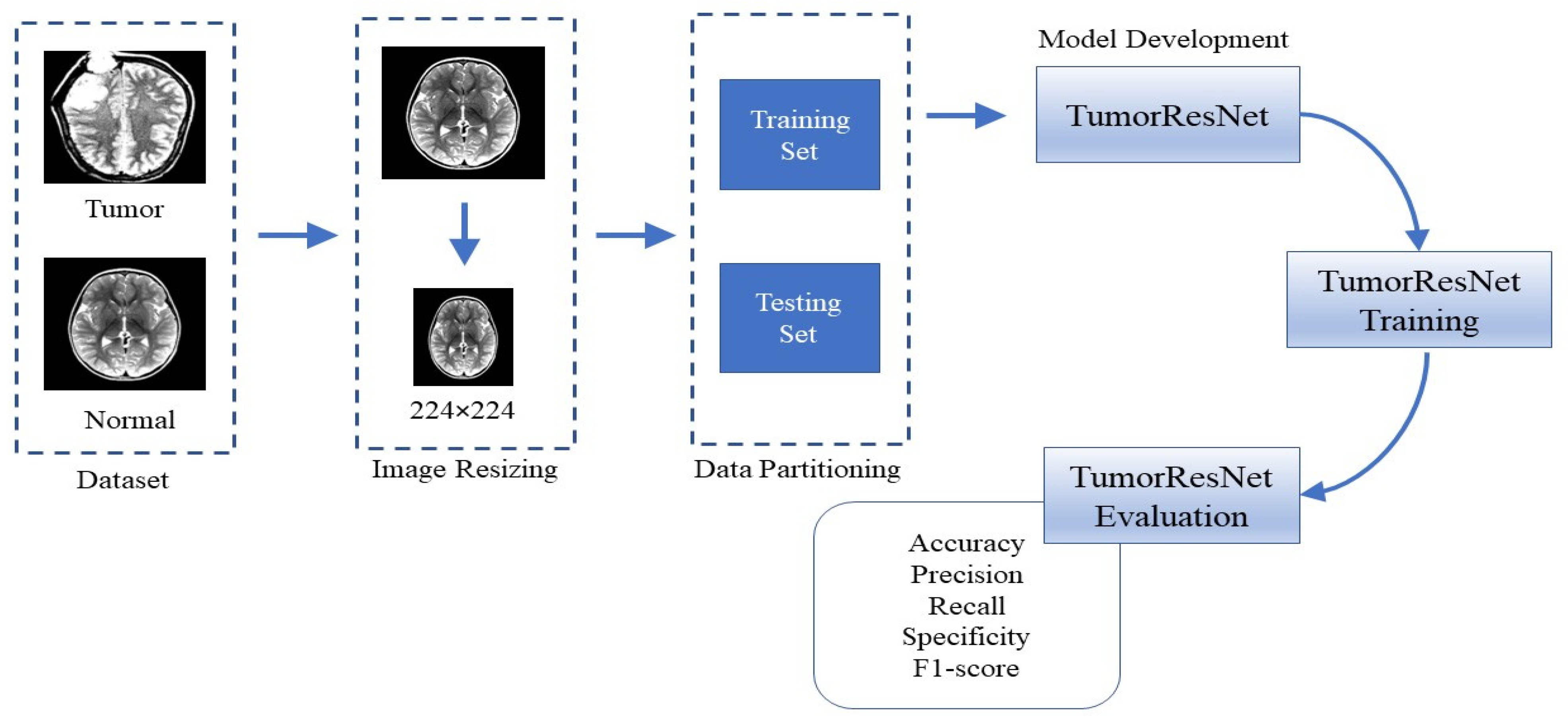

To cope with these limitations, in this paper we propose a novel DL-based model, TumorResNet, for BTD. The proposed model comprises several layers, such as convolution, leaky rectified linear unit (LReLU), and batch normalization (BN) layers. In contrast to earlier approaches [10,11], which need prior feature extraction or segmentation of tumors from the MRI scans, the proposed methodology does not include feature extraction, feature selection, or segmentation in the pre-processing stage. The proposed model employs filter-based feature extraction, which can be useful in achieving high detection performance. The proposed model is capable of classifying images into different classes. The proposed framework uses convolutional layers and the LReLU activation function to extract high-level features from the MR images. The main contributions of this study are:

We designed and implemented a fully automatic end-to-end TumorResNet DL framework for BTD.

The proposed method is robust to variations in intensity, size, shape, angle, and location of brain tumors in the images.

Detailed experiments are performed on a standard Kaggle dataset with two classes (normal/tumor) to prove the superiority of our framework over the contemporary approaches for BTD.

Moreover, we have also performed cross-validation over the “Brain MRI Images for BTD” dataset to show the applicability of the proposed method in real-world scenarios.

The remaining sections of the paper are arranged as follows:

Section 2 reviews the related work.

Section 3 explains the proposed methodology.

Section 4 describes the details of the experiments conducted for performance evaluation.

Section 5 discusses our approach.

Section 6 concludes our work.

2. Related Work

Brain tumor detection has recently received considerable attention, and different techniques have been proposed for BTD in the literature. These techniques include traditional ML approaches [8] and DL-based approaches [7,9]. This section reviews current methods for detecting brain tumors; an overview of existing approaches for BTD is presented in Table 1.

Typical processes in conventional ML approaches for classification include preprocessing, feature extraction and selection, dimension reduction, and classification [12,13]. The quality of the extracted features typically depends on the practitioner’s expertise in the relevant field, which makes conventional ML techniques difficult for a novice to apply. Classification accuracy depends on the extracted features, so feature extraction is a fundamental stage in classical ML. There are two different forms of feature extraction.

Table 1. Overview of existing approaches for BTD.


| Author | Method | Dataset Images | Advantages | Limitations |
|---|---|---|---|---|
| Woldeyohannes et al. [14] | Two-dimensional discrete wavelet transform (2D-DWT) for feature extraction and SVM for classification. | 160 normal and 240 tumorous MRI | Satisfactory results on a small dataset | Tested on an imbalanced dataset |
| Selvaraj et al. [15] | Statistical features (mean and variance), features from gray-level co-occurrence matrices (entropy and contrast), and Least Squares SVM. | 833 tumorous and 267 normal MRI | Both linear and non-linear kernels are used | Tested on an imbalanced dataset |
| Srilatha et al. [16] | LBP for feature extraction and SVM for classification. | 58 normal and 100 tumorous MRI | Computationally efficient | Results are reported for a small dataset |
| Mishra et al. [17] | Graph Attention AutoEncoder-CNN | 510 tumorous and 461 normal | Good generalization ability | Computationally complex |
| Rai et al. [18] | A novel less-layered and less complex U-Net CNN | 155 tumorous and 98 normal | The model is less complex and fast | Very low accuracy on uncropped images |
| Neeraja et al. [12] | CNN | 155 tumorous and 98 normal | The model is lightweight and efficient | Low classification accuracy |
| Cinar et al. [19] | ResNet50 | 155 tumorous and 98 normal | The model is efficient with good generalization ability | Low classification accuracy |
| Kiraz et al. [20] | Weighted KNN | 300 tumorous and 300 normal | The model performs well on the combined images of two datasets | Performance is highly influenced by the location and size of brain tumors |

First, there are low-level (global) features, such as texture and intensity features; first-order statistics, such as skewness, mean, and standard deviation; and second-order statistics, such as wavelet transform, shape, and Gabor features. However, traditional ML feature extraction techniques have some limitations. Firstly, they focus only on either low-level or high-level features. Secondly, they rely on handcrafted features, which require domain-specific experience and knowledge and must be extracted manually, which can decrease the effectiveness of the BTD system. Because handcrafted features demand strong domain information (i.e., the location or position of the tumor in an MR image), extracting them is not an easy task and is prone to human error. The location and position of the tumor region in the MR image, together with its margin, texture, shape, and size, carry the most important and distinctive information about brain tumors. This highlights the urgent need for robust automated BTD that incorporates both high-level and low-level features without requiring handcrafted features. DL-based approaches address these problems through automatic feature extraction, which is more effective and robust for classification and detection purposes [21,22].
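
As an illustration of what a handcrafted feature looks like in practice, the sketch below computes the first-order statistics mentioned above (mean, standard deviation, and skewness) from pixel intensities. It is a simplified example rather than a method from any of the cited works; the function name `first_order_stats` and the representation of an image as a 2-D list of grayscale values are assumptions made here.

```python
import math


def first_order_stats(image):
    """Return mean, standard deviation, and skewness of pixel intensities.

    `image` is assumed to be a non-empty 2-D list of grayscale values
    (a hypothetical stand-in for an MR slice).
    """
    pixels = [float(value) for row in image for value in row]
    n = len(pixels)
    mean = sum(pixels) / n
    # Population variance (divide by n, not n - 1).
    variance = sum((v - mean) ** 2 for v in pixels) / n
    std = math.sqrt(variance)
    # Skewness is the third standardized moment; a constant image
    # has no spread, so its skewness is reported as 0.
    if std == 0:
        skewness = 0.0
    else:
        skewness = sum((v - mean) ** 3 for v in pixels) / (n * std ** 3)
    return {"mean": mean, "std": std, "skewness": skewness}
```

Features like these summarize the whole image in a few numbers, which is why they depend heavily on expert choices about which statistics are informative for a given task.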

DL-based approaches provide fully automated end-to-end systems for brain tumor classification and detection. DL models use convolutional and pooling layers to learn and extract features from images. In [12], the authors used a novel convolutional neural network (CNN) model comprising four convolutional and two fully connected layers. A dataset consisting of two types of images, i.e., tumorous and normal MRI images, was used to assess the effectiveness of the proposed CNN, and data augmentation was used to increase the size of the training dataset. In [23], the authors used a CNN to extract hidden features from MRI images; kernel extreme learning machines (KELM) were then used for classification based on these features. A dataset consisting of three types of brain tumor MRI images, i.e., meningioma, glioma, and pituitary, was used to assess the effectiveness of the CNN and KELM ensemble. In [24], the authors introduced a CNN-based automatic solution for BTD and grouping using MRI images. In [19], the ResNet50 DL model was employed for BTD. The last five layers of the ResNet50 architecture were removed, and eight new layers were added. Furthermore, the performance of the ResNet50, AlexNet, InceptionV3, DenseNet201, and GoogLeNet models was compared to find the model with the highest performance for BTD. In [25], the authors trained Faster R-CNN from scratch using MRI brain tumor images. Faster R-CNN combines the pre-trained AlexNet DL framework and a region proposal network (RPN). The AlexNet model was taken as the base model for brain tumor MR image classification, and the RPN took the AlexNet convolutional feature map as input. Fifty brain MRI images from a dataset were used to evaluate the framework. In [26], the authors used a fine-tuned EfficientNet-B0 CNN as the base network to detect and classify brain tumor images effectively. Image enhancement and data augmentation methods were utilized to improve the quality of the MRI scans and increase the number of training samples. In [27], the authors proposed a new segmentation approach (Adaptive Fuzzy Deformable Fusion (AFDF)-based segmentation) and a new classification approach (Optimized CNN with Ensemble Classification) for brain tumor classification. In [28], the authors employed numerous pre-trained deep CNNs to obtain significant features from the MRI scans of three freely accessible datasets and ML algorithms to classify them. The outcomes demonstrate that the SVM with a radial basis function kernel outperforms the other ML classifiers. The authors of [29] used a Spectral Data Augmentation-based Deep Autoencoder, whereas the authors of [30] used a CNN model to classify brain tumor MRIs into normal and tumorous.

A review of prior research reveals several issues with current automated techniques for detecting brain tumors. Some methods rely on manually designated tumor regions, which prevents them from being fully automated. DL approaches are gaining attention because of their automatic feature extraction capabilities; however, they require large memory and high computation power. Moreover, DL models normally perform worse on small datasets, which are very common in medical imaging. Furthermore, existing brain tumor detection approaches often fail to achieve high detection and classification performance. Additionally, brain tumors are difficult to identify and detect because of the variability in their size, shape, intensity, and location. To address these challenges, we propose an effective end-to-end DL model for BTD. Our proposed DL-based model improves the performance of BTD and classification by automatically extracting both low-level and high-level features.

5. Discussion

In this study, we proposed a DL-based model, TumorResNet, that identifies brain tumors from MRI more accurately (99.33% accuracy) than competing models. As shown in Figure 5, the model’s training and testing accuracy increase after each epoch while its training and testing loss rapidly decrease. Training the proposed TumorResNet model for BTD took 540 min and 41 s; however, this time depends on the number of epochs and iterations per epoch. The proposed model is compared with hybrid methods (DL + SVM) and with current state-of-the-art models found in the literature. To analyze further the performance and generalizability of the proposed TumorResNet framework, we validated the system on another common, freely available Kaggle dataset, “TCD” [42]. The proposed framework performs well and outperforms the state-of-the-art and hybrid methods. Previous research on BTD utilized imbalanced datasets [14,15,16,17]. To address this problem, we used the same number of brain MRI scans from healthy and tumorous conditions.

Our approach performs well because the proposed TumorResNet model uses the LReLU activation function rather than the ReLU activation function, which addresses the dying ReLU problem. When the dying ReLU problem occurs, the affected units of a DL network remain dormant and stop learning. The LReLU activation permits a small (non-zero) gradient when the unit is inactive, so the unit keeps learning instead of stalling. As a result, the LReLU activation function enhances the feature extraction capacity of the proposed TumorResNet model, improving its tumor detection performance. The skip connections employed in TumorResNet mitigate the vanishing gradient and degradation problems. They allow the network to bypass a layer that would otherwise hurt the architecture’s performance and provide an alternative shortcut path for the gradient to flow through. A skip connection adds the output of a preceding layer to that of a succeeding layer; thus, learning does not degrade from the initial layers to the final layer. Moreover, these outcomes are attributable to the fact that our proposed approach can properly extract the most distinct, robust, and in-depth features to represent the brain tumor MRI image for precise and trustworthy classification. The first convolution layers extract low-level features, such as color and edges, whereas deeper layers are responsible for extracting high-level features, such as an abnormality in the MR images.
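
The two mechanisms discussed above can be sketched in a few lines of plain Python. This is a didactic illustration on scalars and lists, not the TumorResNet implementation; the negative slope `alpha=0.01` and all function names are assumptions introduced here, since the slope value used by the model is not stated in this section.

```python
def relu(x):
    """Standard ReLU: zero output for non-positive inputs."""
    return x if x > 0 else 0.0


def relu_grad(x):
    """ReLU gradient is exactly zero for inactive units (x <= 0)."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x, alpha=0.01):
    """LReLU: keeps a small slope `alpha` (assumed value) for x <= 0."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x, alpha=0.01):
    """LReLU gradient stays non-zero even when the unit is inactive."""
    return 1.0 if x > 0 else alpha


def residual_block(x, transform):
    """Skip connection: return F(x) + x elementwise.

    `transform` is any function mapping a list to a list of equal
    length; it stands in for the convolution/BN/LReLU stack.
    """
    fx = transform(x)
    return [f + xi for f, xi in zip(fx, x)]
```

For an inactive unit such as `x = -2.0`, `relu_grad` returns zero, so no weight update reaches that unit, whereas `leaky_relu_grad` returns `alpha`. In `residual_block`, if `transform` outputs all zeros the block reduces to the identity, which is the sense in which a skip connection lets the signal bypass an unhelpful layer.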

Manual detection of brain tumors takes a lot of time and effort. Additionally, the noise and varying contrast of MRI scans reduce the clarity of the images, which makes it challenging for clinicians to examine the MRI directly. This study offers an automated BTD method that aids in the early diagnosis of brain tumors, which has a substantial impact on improving treatment options and patient survival. The proposed method offers a reliable and effective means to identify brain tumors from MRI, assisting clinicians in making decisions quickly and precisely.

Furthermore, an experiment was designed to compare the BTD performance of the TumorResNet model with other state-of-the-art methods [29,30] for detecting brain tumors from MRI images. In this experiment, we compared our work with approaches that used the same dataset (Brain_Tumor_Detection_MRI). However, this is not a direct comparison because of differences in the data preprocessing, training and validation procedures, and processing power employed in their methodologies. Nayak et al. [29] used a deep autoencoder and spectral data augmentation to identify brain tumors. In the first step, a morphological cropping procedure was used to downsize the raw brain images and reduce their noise. The data-space problem was then addressed with feature reduction using the discrete wavelet transform (DWT). The framework consists of seven hidden layers, which are used for encoding and decoding images. The encoder has three hidden layers that apply Dense, BN, and ReLU operations. The authors used the BN operation to reduce generalization errors, provide regularization, and speed up the learning process. Finally, a dense layer was used to classify brain tumor MRIs into normal and tumorous [29]. Their approach achieved an accuracy of 97% and an AUC ROC score of 0.9946 (Table 10). Lamrani et al. [30] proposed a CNN to identify the presence of a brain tumor. The major goal of the authors was to use a CNN as a machine learning tool for BTD. The CNN model comprised different layers, i.e., convolution layers, max-pooling layers, a flatten layer, and dense layers [30]. Their approach achieved an accuracy of 96% and an AUC ROC score of 0.96 (Table 10). This comparative analysis demonstrates the effectiveness of the proposed framework for BTD from MRI scans: the proposed approach achieved the highest overall accuracy of 99.33% and an AUC of 0.9997. It is significant to note that, because of the end-to-end learning architecture used in the proposed TumorResNet method, there are no isolated processes for feature extraction, selection, or segmentation.
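
For readers unfamiliar with the AUC ROC score used in this comparison, the sketch below shows one standard way to compute it: as the fraction of (tumorous, normal) pairs in which the tumorous image receives the higher score, with ties counted as half. This is an illustration under assumed names (`roc_auc`, labels 1 = tumor and 0 = normal), not the evaluation code behind Table 10.

```python
def roc_auc(y_true, scores):
    """Area under the ROC curve via pairwise ranking.

    Equivalent to the probability that a randomly chosen positive
    sample is scored above a randomly chosen negative one.
    Assumed label convention: 1 = tumor, 0 = normal.
    """
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one sample of each class")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive correctly ranked higher
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))
```

The pairwise loop is quadratic in the number of samples, which is acceptable for a small test set; a sort-based computation would be preferable for large ones.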

Although the proposed approach produced encouraging results, we identified several shortcomings and offer some suggestions for further study. The proposed method cannot classify the different types of brain tumors, including meningiomas, pituitary tumors, and gliomas. It is also unknown how well the proposed TumorResNet approach recognizes brain tumors when other imaging modalities, such as computed tomography (CT), are employed. In the proposed approach, we consistently divided the image data into a training set (80%) and a test set (20%); alternative splits could lead to different results. Although the proposed strategy performed well on two publicly accessible datasets, this study also has the limitation that its results have not been confirmed in actual clinical studies. This limitation also applies to the vast majority of the models examined in this study.
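
One common way to examine sensitivity to the choice of split is k-fold cross-validation, in which every sample is used for testing exactly once. The sketch below generates the index splits; with `k=5`, each fold holds out roughly 20% of the data, mirroring the 80/20 proportion above. It is a hypothetical illustration (the function name `k_fold_indices` and the seed are assumptions), not the protocol followed in this study.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold validation.

    Indices are shuffled once with a fixed seed so that the folds
    are reproducible.
    """
    if k < 2 or k > n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i
                 for idx in fold]
        yield train, test
```

Training and evaluating the model once per fold and reporting the mean and spread of the resulting scores would indicate how much the headline accuracy depends on a particular split.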

In the future, we will apply the proposed methodology to a larger dataset to further demonstrate how well the TumorResNet model works. Since we have compared the proposed model with hybrid approaches (DL + SVM), we will also compare its results with other transfer learning-based approaches that use a fully connected (FC) layer instead of an SVM for classification. We are also interested in assessing the performance of the proposed model in classifying MRI tumor images into more fine-grained classes, including meningiomas, pituitary tumors, and gliomas, by considering alternative research datasets. We further plan to evaluate the generalizability of the proposed TumorResNet model on more tumor datasets or other medical datasets containing CT scans, MRI, or chest radiographs so that it can be used in practice to detect various diseases, including tuberculosis, breast cancer, and lung opacity. In addition, we want to verify the findings of the proposed approach in genuine clinical contexts, which will allow us to compare the effectiveness of our framework directly with experimental methods. The use of extra layers or other regularization methods to handle a small image collection with a CNN model is another future possibility.

6. Conclusions

In medical image processing, BTD is one of the most significant, laborious, and time-consuming tasks, since manual (human-assisted) classification can lead to inaccurate prediction and analysis. This work presented the end-to-end TumorResNet DL framework for the reliable, accurate, and automated detection of brain tumors. We verified the robustness of the presented approach using publicly accessible datasets. A real-time dataset with various tumor locations, sizes, shapes, and image intensities was used for the experimental study. Experimental results show that the proposed model outperforms existing BTD methods, achieving an accuracy of 99.33%. Our proposed method achieved good accuracy for BTD with less pre-processing (no separate feature extraction or feature selection) than other techniques. In the future, we intend to reduce further the system complexity, memory requirements, and computation time of the model. The same method may also be used to detect and study various disorders in other regions of the body (liver, kidney, lungs, etc.) and to classify brain tumors by type, such as benign or malignant. Additionally, to generalize the proposed approach to other important medical problems [44] beyond brain MRI, we aim to assess the performance of the TumorResNet model by training and validating it on the identification of COVID-19 [45] from chest radiograph images [34], pest detection [46], other popular brain tumor types [47], heart disease prediction [48,49], and mask detection and removal [50,51].