A Two-Mode Underwater Smart Sensor Object for Precision Aquaculture Based on AIoT Technology

Abstract

1. Introduction

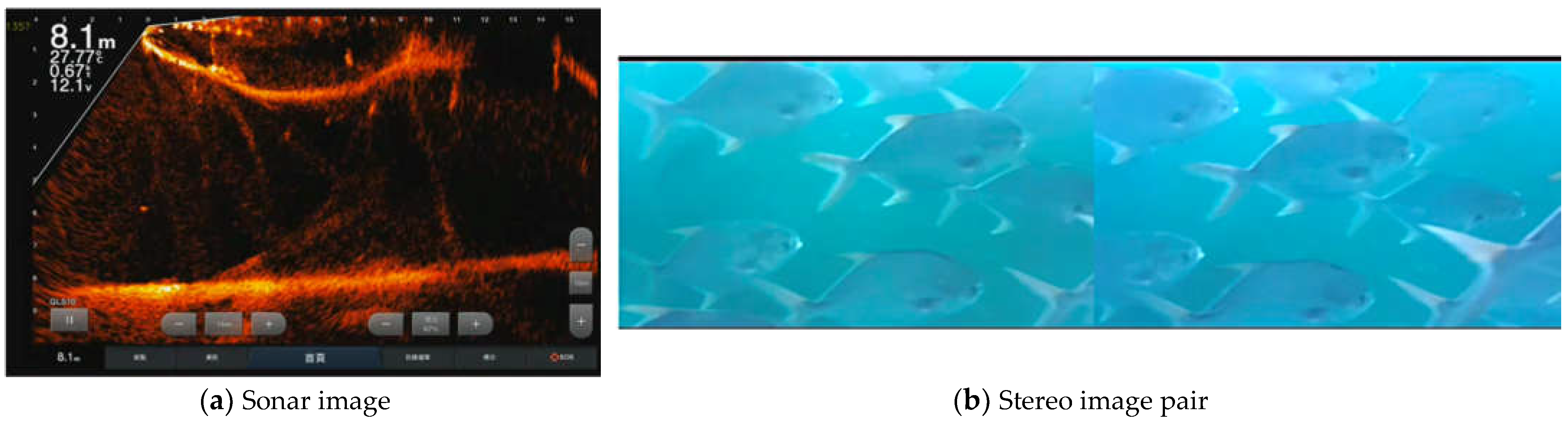

- We propose an AIoT system that fuses sonar and stereo camera data, supports automatic data collection from aquaculture farms, and performs artificial intelligence functions such as fish type detection, fish counting, and fish length estimation. To our knowledge, combining a low-cost sonar and stereo camera system, tested in various aquaculture environments, with different AI monitoring functions is novel.

- We designed a methodology for fusing the sonar and stereo camera systems. Because deploying IoT can be expensive, we limited the implementation cost by employing low-cost sensors that do not impose high additional expenses on aquaculture farmers.

- Using the sonar camera system, we developed a mechanism to estimate fish length and weight. Additional plug-in AI functions can also be deployed in the cloud to meet emerging decision-making requirements in aquaculture management, based on the collected big data sets. Agile development supports the design of learnable digital agents that work toward the goal of precision aquaculture.

2. Related Works

- Fish schools swim in three-dimensional space;

- In sonar images, fish close to the sonar system often appear incomplete, and fish farther from the sonar system appear blurrier;

- In sonar images, fish often overlap, and differences in fish location in the direction perpendicular to the sonar beam are indistinguishable [32];

- Annotators often need to examine successive sonar images to identify fish, because fish are recognized by changes in the pattern and strength of echoes.

3. Materials and Methods

3.1. Devices Used and Experimental Environments

3.2. Sonar and Stereo Camera Fusion

3.3. Sonar and Stereo Camera Fusion for Fish Metrics Estimation

3.3.1. Estimation of Fish Standard Length and Weight Using Sonar Image

- Apply Mask R-CNN to identify fish instances in each frame of the input sonar video. The standard length of an identified fish instance is estimated by the distance between the two farthest points on this instance.

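- Apply the EM algorithm [57] to learn a GMM for the distribution of the length of the identified fish instances. The GMM for the distribution of the length $x$ can be expressed in the standard mixture form:

  $$p(x) = \sum_{k=1}^{c} \pi_k \, \mathcal{N}\left(x \mid \mu_k, \sigma_k^2\right),$$

  where $c$ is the number of Gaussian components and $\pi_k$, $\mu_k$, and $\sigma_k^2$ are the weight, mean, and variance of the $k$-th component.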

- Select the Gaussian component with the largest component weight as the component that represents single fish instances. Then, output the statistics of the fish length in this component.

- Apply K-nearest neighbor regression, trained on a set of manually measured fish lengths and weights, to estimate the weight from the fish length of the selected component. This paper sets the parameter K of the K-nearest neighbor regression to 5.
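The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`farthest_point_distance`, `fit_gmm_1d`, `knn_regress`), the quantile-based initialisation, the iteration count, and every number in the demo (synthetic lengths and a hypothetical cubic length-weight training set) are invented for the example. The Mask R-CNN stage is omitted, so the mask points are taken as given. Only K = 5 comes from the text.

```python
import numpy as np

def farthest_point_distance(points):
    """Largest pairwise Euclidean distance among the points of one instance."""
    pts = np.asarray(points, dtype=float)
    diff = pts[:, None, :] - pts[None, :, :]
    return float(np.sqrt((diff ** 2).sum(axis=-1)).max())

def fit_gmm_1d(x, n_components, n_iter=200):
    """Plain EM for a 1-D Gaussian mixture; returns (weights, means, variances)."""
    x = np.asarray(x, dtype=float)
    # Assumed initialisation: spread the means over the data quantiles.
    means = np.quantile(x, (np.arange(n_components) + 0.5) / n_components)
    variances = np.full(n_components, x.var() + 1e-6)
    mix_weights = np.full(n_components, 1.0 / n_components)
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample.
        dens = np.exp(-0.5 * (x[:, None] - means) ** 2 / variances)
        dens /= np.sqrt(2.0 * np.pi * variances)
        resp = mix_weights * dens
        resp /= resp.sum(axis=1, keepdims=True) + 1e-300
        # M-step: re-estimate weights, means and variances.
        nk = resp.sum(axis=0) + 1e-12
        mix_weights = nk / x.size
        means = (resp * x[:, None]).sum(axis=0) / nk
        variances = (resp * (x[:, None] - means) ** 2).sum(axis=0) / nk + 1e-6
    return mix_weights, means, variances

def knn_regress(train_x, train_y, query, k=5):
    """Mean target value of the k training samples closest to the query."""
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y, dtype=float)
    nearest = np.argsort(np.abs(train_x - query))[:k]
    return float(train_y[nearest].mean())

# Synthetic demo data (hypothetical): most detections are single fish around
# 20 cm, the rest are merged or overlapping instances that look longer.
rng = np.random.default_rng(0)
lengths = np.concatenate([rng.normal(20.0, 1.0, 700), rng.normal(35.0, 2.0, 300)])

mix_weights, means, variances = fit_gmm_1d(lengths, n_components=2)
k_star = int(np.argmax(mix_weights))          # component with the largest weight
single_fish_length = float(means[k_star])

# Hypothetical manually measured training set (length in cm, weight in g).
train_length = np.linspace(15.0, 30.0, 31)
train_weight = 0.027 * train_length ** 3
estimated_weight = knn_regress(train_length, train_weight, single_fish_length, k=5)

print(f"length ~ {single_fish_length:.2f} cm, weight ~ {estimated_weight:.1f} g")
```

Selecting the dominant component discards detections whose apparent length is inflated by overlap, which is the role the text assigns to the GMM step. The distance here is in whatever units the mask points use, so a pixel-to-centimetre conversion would still be needed for real sonar frames.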

3.3.2. Estimation of the Quantity of Fish in an Off-Shore Net Cage Using Sonar Image

3.3.3. CNN for Detecting the Fish-Gathering Frame

3.3.4. Semantic Segmentation Network for Segmenting Fish Regions

3.4. Object Detection for Fish Type Identification and Two-Mode Fish Counting

4. Experimental Results

4.1. Sonar and Stereo Camera Fusion Results

4.2. Estimation of Fish Standard Length and Weight Using Sonar Images

4.3. Estimation of Fish Quantity in a Net Cage Using Sonar Images

4.4. Object Detection for Fish Type Identification and Two-Mode Fish Count

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIoT | Artificial Intelligence-based Internet of Things |
| CNNs | Convolutional Neural Networks |
| EM | Expectation Maximization |
| GMM | Gaussian Mixture Model |
| IoT | Internet of Things |
| K-NN | K-Nearest Neighbors |
| ReLU | Rectified Linear Unit |
| RGB | Red, Green, Blue |
| YOLOv4 | You Only Look Once, version 4 |

References

- Food and Agriculture Organization of the United Nations. The State of World Fisheries and Aquaculture; FAO: Rome, Italy, 2020.

- O’Donncha, F.; Grant, J. Precision Aquaculture. IEEE Internet Things Mag. 2019, 2, 26–30.

- O’Donncha, F.; Stockwell, C.; Planellas, S.; Micallef, G.; Palmes, P.; Webb, C.; Filgueira, R.; Grant, J. Data Driven Insight Into Fish Behaviour and Their Use for Precision Aquaculture. Front. Anim. Sci. 2021, 2, 695054.

- Antonucci, F.; Costa, C. Precision aquaculture: A short review on engineering innovations. Aquac. Int. 2019, 28, 41–57.

- Gupta, S.; Gupta, A.; Hasija, Y. Transforming IoT in aquaculture: A cloud solution in AI. In Edge and IoT-based Smart Agriculture A Volume in Intelligent Data-Centric Systems; Academic Press: Cambridge, MA, USA, 2022; pp. 517–531.

- Mustapha, U.F.; Alhassan, A.-W.; Jiang, D.-N.; Li, G.-L. Sustainable aquaculture development: A review on the roles of cloud computing, internet of things and artificial intelligence (CIA). Rev. Aquac. 2021, 3, 2076–2091.

- Petritoli, E.; Leccese, F. Albacore: A Sub Drone for Shallow Waters A Preliminary Study. In Proceedings of the MetroSea 2020–TC19 International Workshop on Metrology for the Sea, Naples, Italy, 5–7 October 2020.

- Acar, U.; Kane, F.; Vlacheas, P.; Foteinos, V.; Demestichas, P.; Yuceturk, G.; Drigkopoulou, I.; Vargün, A. Designing An IoT Cloud Solution for Aquaculture. In Proceedings of the 2019 Global IoT Summit (GIoTS), Aarhus, Denmark, 17–21 June 2019.

- Chang, C.-C.; Wang, Y.-P.; Cheng, S.-C. Fish Segmentation in Sonar Images by Mask R-CNN on Feature Maps of Conditional Random Fields. Sensors 2021, 21, 7625.

- Ubina, N.A.; Cheng, S.-C.; Chang, C.-C.; Cai, S.-Y.; Lan, H.-Y.; Lu, H.-Y. Intelligent Underwater Stereo Camera Design for Fish Metric Estimation Using Reliable Object Matching. IEEE Access 2022, 10, 74605–74619.

- Cook, D.; Middlemiss, K.; Jaksons, P.; Davison, W.; Jerrett, A. Validation of fish length estimations from a high frequency multi-beam sonar (ARIS) and its utilisation as a field-based measurement technique. Fish. Res. 2019, 218, 56–98.

- Hightower, J.; Magowan, K.; Brown, L.; Fox, D. Reliability of Fish Size Estimates Obtained From Multibeam Imaging Sonar. J. Fish Wildl. Manag. 2013, 4, 86–96.

- Puig-Pons, V.; Muñoz-Benavent, P.; Espinosa, V.; Andreu-García, G.; Valiente-González, J.; Estruch, V.; Ordóñez, P.; Pérez-Arjona, I.; Atienza, V.; Mèlich, B.; et al. Automatic Bluefin Tuna (Thunnus thynnus) biomass estimation during transfers using acoustic and computer vision techniques. Aquac. Eng. 2019, 85, 22–31.

- Ferreira, F.; Machado, D.; Ferri, G.; Dugelay, S.; Potter, J. Underwater optical and acoustic imaging: A time for fusion? A brief overview of the state-of-the-art. In Proceedings of the OCEANS 2016 MTS/IEEE, Monterey, CA, USA, 19–23 September 2016.

- Servos, J.; Smart, M.; Waslander, S.L. Underwater stereo SLAM with refraction correction. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013.

- Føre, M.; Frank, K.; Norton, T.; Svendsen, E.; Alfredsen, J.; Dempster, T.; Eguiraun, H.; Watson, W.; Stahl, A.; Sunde, L.; et al. Precision fish farming: A new framework to improve production in aquaculture. Biosyst. Eng. 2018, 173, 176–193.

- Hughes, J.B.; Hightower, J.E. Combining split-beam and dual-frequency identification sonars to estimate abundance of anadromous fishes in the Roanoke River, North Carolina. N. Am. J. Fish. Manag. 2015, 35, 229–240.

- Jing, D.; Han, J.; Wang, X.; Wang, G.; Tong, J.; Shen, W.; Zhang, J. A method to estimate the abundance of fish based on dual-frequency identification sonar (DIDSON) imaging. Fish. Sci. 2017, 35, 229–240.

- Martignac, F.; Daroux, A.; Bagliniere, J.-L.; Ombredane, D.; Guillard, J. The use of acoustic cameras in shallow waters: New hydroacoustic tools for monitoring migratory fish population. A review of DIDSON technology. Fish Fish. 2015, 16, 486–510.

- Baumann, J.R.; Oakley, N.C.; McRae, B.J. Evaluating the effectiveness of artificial fish habitat designs in turbid reservoirs using sonar imagery. N. Am. J. Fish. Manag. 2016, 36, 1437–1444.

- Shahrestani, S.; Bi, H.; Lyubchich, V.; Boswell, K.M. Detecting a nearshore fish parade using the adaptive resolution imaging sonar (ARIS): An automated procedure for data analysis. Fish. Res. 2017, 191, 190–199.

- Jing, D.; Han, J.; Wang, G.; Wang, X.; Wu, J.; Chen, G. Dense multiple-target tracking based on dual frequency identification sonar (DIDSON) image. In Proceedings of the OCEANS 2016, Shanghai, China, 10–13 April 2016.

- Wolff, L.M.; Badri-Hoeher, S. Imaging sonar-based fish detection in shallow waters. In Proceedings of the 2014 Oceans, St. John’s, NL, Canada, 14–19 September 2014.

- Handegard, N.O. An overview of underwater acoustics applied to observe fish behaviour at the institute of marine research. In Proceedings of the 2013 MTS/IEEE OCEANS, Bergen, Norway, 23–26 September 2013.

- Llorens, S.; Pérez-Arjona, I.; Soliveres, E.; Espinosa, V. Detection and target strength measurements of uneaten feed pellets with a single beam echosounder. Aquac. Eng. 2017, 78, 216–220.

- Estrada, J.; Pulido-Calvo, I.; Castro-Gutiérrez, J.; Peregrín, A.; López, S.; Gómez-Bravo, F.; Garrocho-Cruz, A.; De La Rosa, I. Fish abundance estimation with imaging sonar in semi-intensive aquaculture ponds. Aquac. Eng. 2022, 97, 102235.

- Burwen, D.; Fleischman, S.; Miller, J. Accuracy and Precision of Salmon Length Estimates Taken from DIDSON Sonar Images. Trans. Am. Fish. Soc. 2010, 139, 1306–1314.

- Lagarde, R.; Peyre, J.; Amilhat, E.; Mercader, M.; Prellwitz, F.; Gael, S.; Elisabeth, F. In situ evaluation of European eel counts and length estimates accuracy from an acoustic camera (ARIS). Knowl. Manag. Aquat. Ecosyst. 2020, 421, 44.

- Sthapit, P.; Kim, M.; Kang, D.; Kim, K. Development of Scientific Fishery Biomass Estimator: System Design and Prototyping. Sensors 2020, 20, 6095.

- Valdenegro-Toro, M. End-to-end object detection and recognition in forward-looking sonar images with convolutional neural networks. In Proceedings of the 2016 IEEE/OES Autonomous Underwater Vehicles (AUV), Tokyo, Japan, 6–9 November 2016.

- Liu, L.; Lu, H.; Cao, Z.; Xiao, Y. Counting fish in sonar images. In Proceedings of the 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018.

- Christ, R.D.; Wernli, R.L. Chapter 15-Sonar. In The ROV Manual; Butterworth-Heinemann: Oxford, UK, 2014; pp. 387–424.

- Rosen, S.; Jørgensen, T.; Hammersland-White, D.; Holst, J.; Grant, J. DeepVision: A stereo camera system provides highly accurate counts and lengths of fish passing inside a trawl. Can. J. Fish. Aquat. Sci. 2013, 70, 1456–1467.

- Shortis, M.; Ravanbakskh, M.; Shaifat, F.; Harvey, E.; Mian, A.; Seager, J.; Culverhouse, P.; Cline, D.; Edgington, D. A review of techniques for the identification and measurement of fish in underwater stereo-video image sequences. In Proceedings of the Videometrics, Range Imaging, and Applications XII; and Automated Visual Inspection, Munich, Germany, 14–16 May 2013.

- Huang, T.-W.; Hwang, J.-N.; Romain, S.; Wallace, F. Fish Tracking and Segmentation From Stereo Videos on the Wild Sea Surface for Electronic Monitoring of Rail Fishing. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3146–3158.

- Vale, R.; Ueda, E.; Takimoto, R.; Martins, T. Fish Volume Monitoring Using Stereo Vision for Fish Farms. IFAC-PapersOnLine 2020, 53, 15824–15828.

- Williams, K.; Rooper, C.; Towler, R. Use of stereo camera systems for assessment of rockfish abundance in untrawlable areas and for recording pollock behavior during midwater trawls. Fish. Bull.-Natl. Ocean. Atmos. Adm. 2010, 108, 352–365.

- Torisawa, S.; Kadota, M.; Komeyama, K.; Suzuki, K.; Takagi, T. A digital stereo-video camera system for three-dimensional monitoring of free-swimming Pacific bluefin tuna, Thunnus orientalis, cultured in a net cage. Aquat. Living Resour. 2011, 24, 107–112.

- Cheng, R.; Zhang, C.; Xu, Q.; Liu, G.; Song, Y.; Yuan, X.; Sun, J. Underwater Fish Body Length Estimation Based on Binocular Image Processing. Information 2020, 11, 476.

- Voskakis, D.; Makris, A.; Papandroulakis, N. Deep learning based fish length estimation. An application for the Mediterranean aquaculture. In Proceedings of the OCEANS 2021, San Diego, CA, USA, 20–23 September 2021.

- Shi, C.; Wang, Q.; He, X.; Xiaoshuan, Z.; Li, D. An automatic method of fish length estimation using underwater stereo system based on LabVIEW. Comput. Electron. Agric. 2020, 173, 105419.

- Garner, S.B.; Olsen, A.M.; Caillouet, R.; Campbell, M.D.; Patterson, W.F. Estimating reef fish size distributions with a mini remotely operated vehicle-integrated stereo camera system. PLoS ONE 2021, 16, e0247985.

- Kadambi, A.; Bhandari, A.; Raskar, R. 3D Depth Cameras in Vision: Benefits and Limitations of the Hardware. In Computer Vision and Pattern Recognition; Springer International Publishing: Cham, Switzerland, 2014; pp. 1–26.

- Harvey, E.; Shortis, M.; Stadler, M. A Comparison of the Accuracy and Precision of Measurements from Single and Stereo-Video Systems. Mar. Technol. Soc. J. 2002, 36, 38–49.

- Bertels, M.; Jutzi, B.; Ulrich, M. Automatic Real-Time Pose Estimation of Machinery from Images. Sensors 2022, 22, 2627.

- Boldt, J.; Williams, K.; Rooper, C.; Towler, R.; Gauthier, S. Development of stereo camera methodologies to improve pelagic fish biomass estimates and inform ecosystem management in marine waters. Fish. Res. 2017, 198, 66–77.

- Berrio, J.S.; Shan, M.; Worrall, S.; Nebot, E. Camera-LIDAR Integration: Probabilistic Sensor Fusion for Semantic Mapping. IEEE Trans. Intell. Transp. Syst. 2022, 7, 7637–7652.

- John, V.; Long, Q.; Liu, Z.; Mita, S. Automatic calibration and registration of lidar and stereo camera without calibration objects. In Proceedings of the 2015 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Yokohama, Japan, 5–7 November 2015.

- Roche, V.D.-S.J.; Kondoz, A. A Multi-modal Perception-Driven Self Evolving Autonomous Ground Vehicle. IEEE Trans. Cybern. 2021, 1–11.

- Zhong, Y.; Chen, Y.; Wang, C.; Wang, Q.; Yang, J. Research on Target Tracking for Robotic Fish Based on Low-Cost Scarce Sensing Information Fusion. IEEE Robot. Autom. Lett. 2022, 7, 6044–6051.

- Dov, D.; Talmon, R.; Cohen, I. Multimodal Kernel Method for Activity Detection of Sound Sources. IEEE/ACM Trans. Audio Speech Lang. Processing 2017, 25, 1322–1334.

- Mirzaei, G.; Jamali, M.M.; Ross, J.; Gorsevski, P.V.; Bingman, V.P. Data Fusion of Acoustics, Infrared, and Marine Radar for Avian Study. IEEE Sens. J. 2015, 15, 6625–6632.

- Zhou, X.; Yu, C.; Yuan, X.; Luo, C. A Matching Algorithm for Underwater Acoustic and Optical Images Based on Image Attribute Transfer and Local Features. Sensors 2021, 21, 7043.

- Andrei, C.-O. 3D Affine Coordinate Transformations. Master’s Thesis, School of Architecture and the Built Environment Royal Institute of Technology (KTH), Stockholm, Sweden, 2006.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Kim, B.; Joe, H.; Yu, S.-C. High-precision Underwater 3D Mapping Using Imaging Sonar for Navigation of Autonomous Underwater Vehicle. Int. J. Control. Autom. Syst. 2021, 19, 3199–3208.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. 1977, 39, 1–38.

- Gkalelis, N.; Mezaris, V.; Kompatsiaris, I. Mixture subclass discriminant analysis. IEEE Signal Processing Lett. 2011, 18, 319–322.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Munich, Germany, 5–9 October 2015.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

- Li, D.; Miao, Z.; Peng, F.; Wang, L.; Hao, Y.; Wang, Z.; Chen, T.; Li, H.; Zheng, Y. Automatic counting methods in aquaculture: A review. J. World Aquac. Soc. 2020, 52, 269–283.

| Environment | True Positive Rate |
|---|---|
| A | 85% |
| B | 90% |
| C | 75% |

| Environment | Length, manual (cm) | Length w/o GMM (cm) | Rel. error | Length w/ GMM (cm) | Rel. error | Weight, manual (g) | Weight w/o GMM (g) | Rel. error | Weight w/ GMM (g) | Rel. error | N | NG | c |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A (month A) | 21.98 | 8.82 | 0.14 | 19.29 | 0.12 | 286.37 | 236.45 | 0.17 | 220.32 | 0.23 | 4,456 | 2,020 | 4 |
| A (month B) | 21.98 † | 27.13 | 0.24 | 23.27 | 0.06 | 286.37 † | 337.39 | 0.18 | 315.49 | 0.10 | 1,911 | 1,319 | 2 |
| A (month C) | 21.98 † | 23.77 | 0.08 | 22.31 | 0.01 | 286.37 † | 315.44 | 0.10 | 297.75 | 0.04 | 210 | 168 | 2 |
| A (month D) | 21.98 † | 25.16 | 0.14 | 22.63 | 0.03 | 286.37 † | 335.13 | 0.17 | 305.94 | 0.07 | 33 | 21 | 2 |
| A (month E) | 21.98 † | 24.38 | 0.11 | 22.21 | 0.01 | 286.37 † | 316.57 | 0.11 | 296.78 | 0.04 | 2,413 | 1,879 | 2 |
| B (month A) | 18.01 † | 20.18 | 0.12 | 18.90 | 0.05 | 180.12 † | 210.93 | 0.17 | 201.03 | 0.12 | 1,201 | 1,034 | 2 |
| B (month B) | 18.01 † | 20.30 | 0.13 | 19.26 | 0.07 | 180.12 † | 218.01 | 0.21 | 210.78 | 0.17 | 3,279 | 1,448 | 5 |
| B (month C) | 18.01 † | 21.16 | 0.18 | 21.16 | 0.18 | 180.12 † | 228.60 | 0.27 | 228.60 | 0.27 | 1,228 | 1,228 | 1 |
| C (month A) | 15.46 | 19.78 | 0.28 | 17.98 | 0.16 | 171.66 | 272.13 | 0.59 | 249.82 | 0.46 | 12,101 | 9,781 | 2 |
| C (month B) | 15.50 | 19.79 | 0.28 | 17.51 | 0.13 | 173.90 | 262.86 | 0.51 | 236.27 | 0.36 | 14,488 | 11,165 | 2 |
| C (month C) | 16.13 | 16.13 | 0.22 | 17.58 | 0.09 | 189.36 | 262.84 | 0.39 | 235.98 | 0.25 | 10,121 | 7,814 | 2 |
| C (month D) | 16.33 | 16.33 | 0.20 | 18.03 | 0.10 | 211.43 | 290.57 | 0.37 | 269.56 | 0.27 | 8,480 | 7,013 | 2 |

| Actual \ Predicted | Gathering | Dispersing |
|---|---|---|
| Gathering | 58 | 0 |
| Dispersing | 3 | 113 |
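The confusion matrix above can be condensed into the usual summary metrics by simple arithmetic. The sketch below reads rows as actual classes and columns as predicted classes, as the table header suggests, and treats "gathering" as the positive class. That choice and the helper name `summarize` are assumptions made for illustration; only the four counts come from the table.

```python
# Counts copied from the confusion matrix: keys are (actual, predicted).
confusion = {
    ("gathering", "gathering"): 58,
    ("gathering", "dispersing"): 0,
    ("dispersing", "gathering"): 3,
    ("dispersing", "dispersing"): 113,
}

def summarize(confusion, positive="gathering"):
    """Accuracy, precision and recall for the chosen positive class."""
    total = sum(confusion.values())
    correct = sum(n for (actual, pred), n in confusion.items() if actual == pred)
    true_pos = confusion[(positive, positive)]
    predicted_pos = sum(n for (_, pred), n in confusion.items() if pred == positive)
    actual_pos = sum(n for (actual, _), n in confusion.items() if actual == positive)
    return {
        "accuracy": correct / total,            # (58 + 113) / 174
        "precision": true_pos / predicted_pos,  # 58 / (58 + 3)
        "recall": true_pos / actual_pos,        # 58 / (58 + 0)
    }

metrics = summarize(confusion)
print({name: round(value, 3) for name, value in metrics.items()})
# → {'accuracy': 0.983, 'precision': 0.951, 'recall': 1.0}
```

Under this reading, every actual gathering frame is detected, and the only errors are three dispersing frames labelled as gathering.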

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, C.-C.; Ubina, N.A.; Cheng, S.-C.; Lan, H.-Y.; Chen, K.-C.; Huang, C.-C. A Two-Mode Underwater Smart Sensor Object for Precision Aquaculture Based on AIoT Technology. Sensors 2022, 22, 7603. https://doi.org/10.3390/s22197603
